linux源码解读(二十五):mmap原理和实现方式

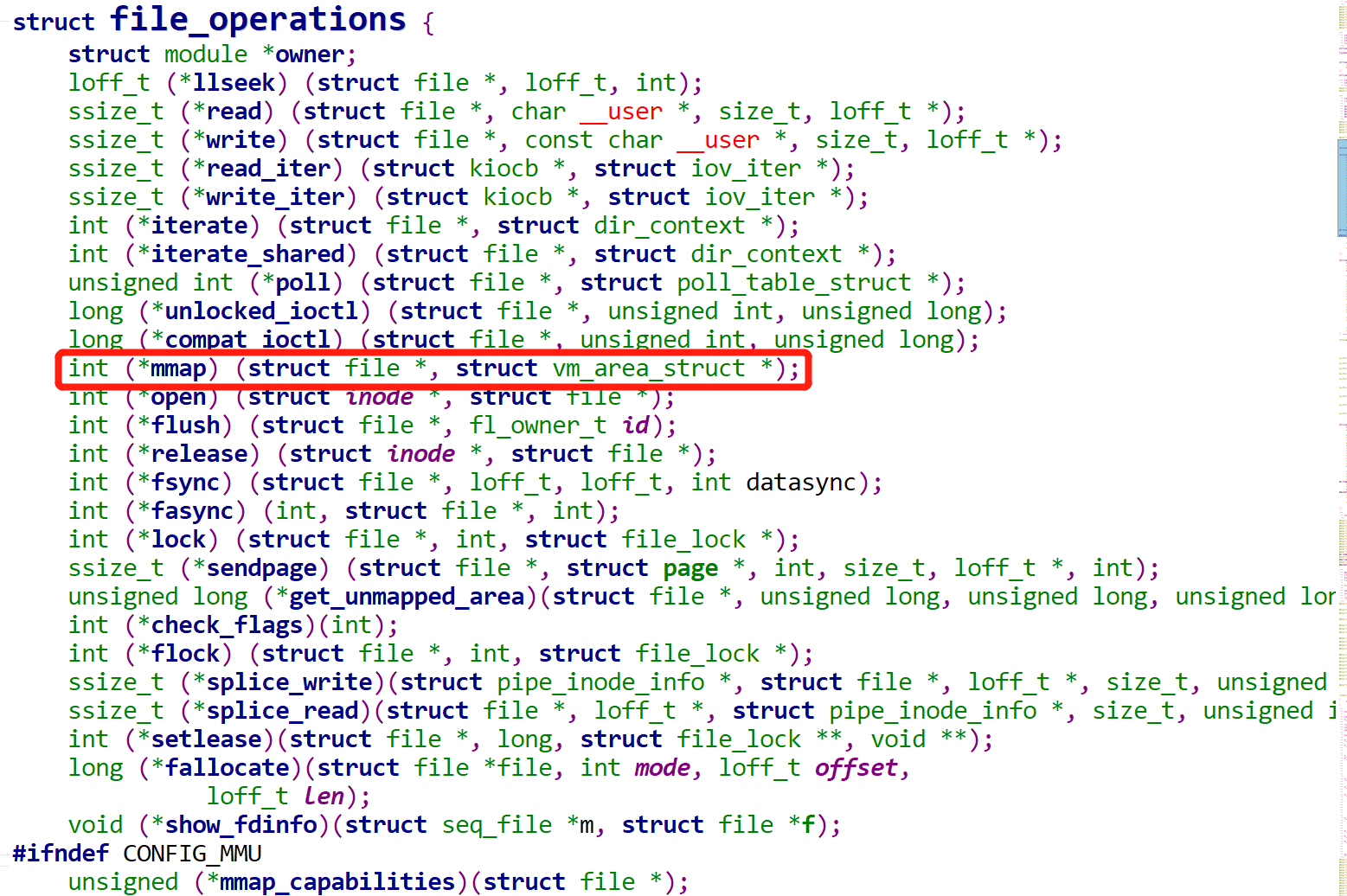

众所周知,linux的理念是万物皆文件,自然少不了对文件的各种操作,常见的诸如open、read、write等,都是大家耳熟能详的操作。除了这些常规操作外,还有一个不常规的操作:mmap,其在file_operations结构体中的定义如下: 这个函数的作用是什么了?

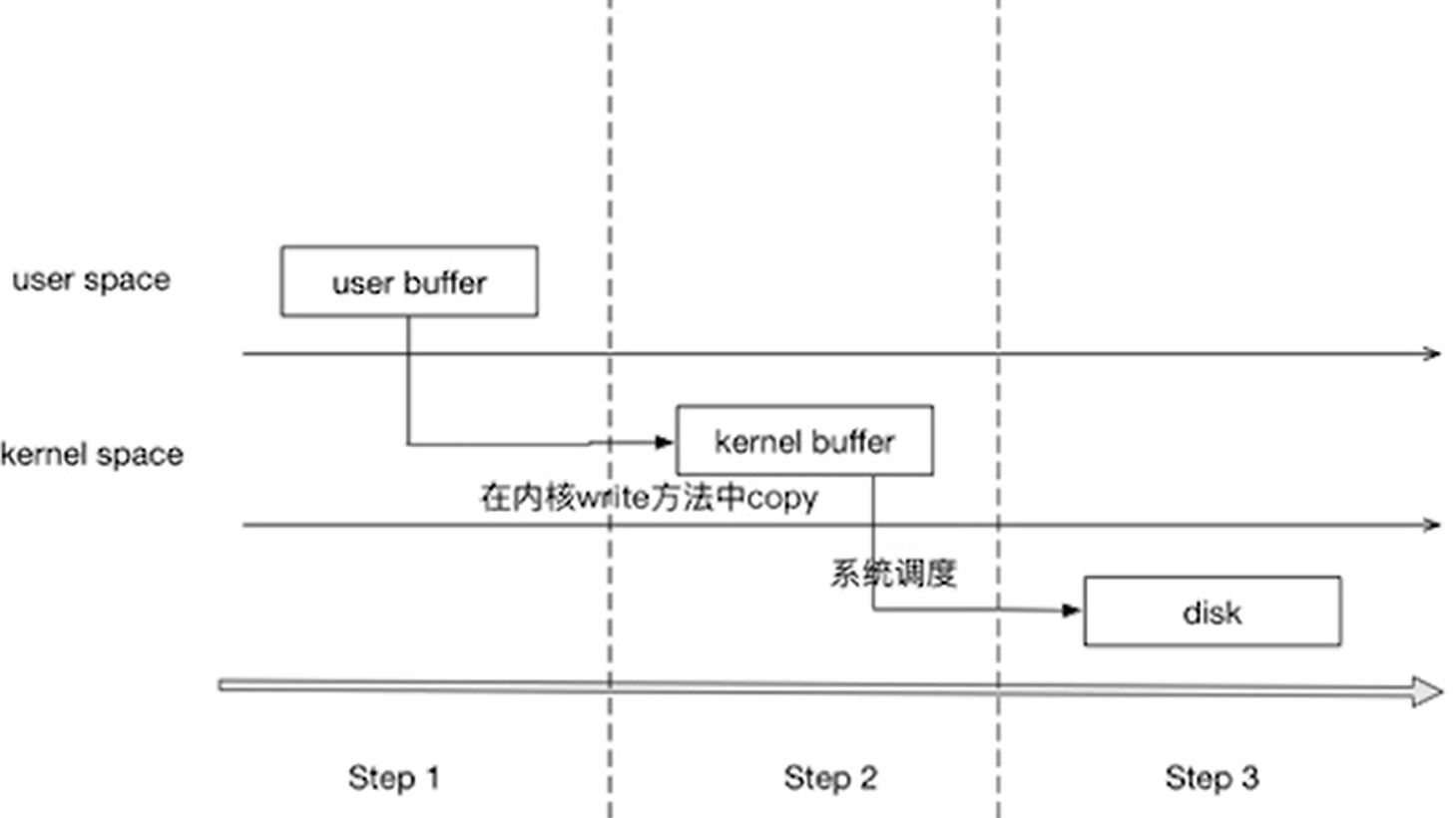

1、对于读写文件,传统经典的api都是这样的:先open文件,拿到文件的fd;再调用read或write读写文件。由于文件存放在磁盘,3环的app是没有权限直接操作磁盘的,所以需要通过系统调用进入操作系统的内核,再通过事先安装好的驱动读写磁盘数据。这样一来,磁盘的数据会分别存放在内核空间和用户空间,也就是同一份数据会在内存内部放在两个不同的地方,而且也需要拷贝2次,整个过程是“又费柴油又费马达”;流程示例如下:

这样做既然浪费内存空间,也浪费拷贝的时间,该怎么优化了?

2、上述做法的结症在于同一份数据拷贝2次,那么能不能只拷贝1次了?答案是可以的,mmap就是这么干的!

(1)先看看mmap的用例,直观了解一下是怎么使用的,如下:

#include<stdlib.h> #include<sys/mman.h> #include<fcntl.h> int main(void) { int *p; int fd = open("hello", O_RDWR); if(fd < 0) { perror("open hello"); exit(1); } p = mmap(NULL,6, PROT_WRITE, MAP_SHARED, fd, 0); if(p == MAP_FAILED) { perror("mmap"); //程序进里面了,证明mmap失败 exit(1); } close(fd); p[0] = 0x30313233; munmap(p, 6); return 0; }

用例是不是很简单了?还是先调用open函数得到文件的fd,再调用mmap建立文件在内存的映射,这时得到了文件在内存映射的地址p,最后通过p指针读写文件数据!整个逻辑非常简单,是个码农都能看懂!这么简单方便、效率还高(只复制一次)的mmap又是怎么实现的了?

(2)先说一下mmap的原理:mmap只复制1次的原理也简单,就是在进程的虚拟内存开辟一块空间,映射到内核的物理内存,并建立和文件的关联,再把文件内容读到这块内存;后续3环的app读写文件都不走磁盘了,而是直接读写这块建立好映射的内存!等到进程退出或出意外奔溃,操作系统把映射内存的数据重新写回磁盘的文件!

mmap的原理也不复杂,具体是到代码层面是怎么做的了?

(3)从上面的demo可以看出,3环应用层直接调用的是mmap函数,但很明显这个功能因为涉及到磁盘读写,肯定是需要操作系统支持得,所以mmap肯定需要通过系统调用进入内核执行代码。操作系统提供的系统调用函数是do_mmap,在mm\mmap.c文件中,代码如下:

/* * The caller must hold down_write(¤t->mm->mmap_sem). 根据用户传入的参数做了一系列的检查,然后根据参数初始化vm_area_struct的标志vm_flags、 vma->vm_file = get_file(file)建立文件与vma的映射 */ unsigned long do_mmap(struct file *file, unsigned long addr, unsigned long len, unsigned long prot, unsigned long flags, vm_flags_t vm_flags, unsigned long pgoff, unsigned long *populate) { struct mm_struct *mm = current->mm;//当前进程的虚拟内存描述符 int pkey = 0; *populate = 0; if (!len) return -EINVAL; /* * Does the application expect PROT_READ to imply PROT_EXEC? * * (the exception is when the underlying filesystem is noexec * mounted, in which case we dont add PROT_EXEC.) */ if ((prot & PROT_READ) && (current->personality & READ_IMPLIES_EXEC)) if (!(file && path_noexec(&file->f_path))) prot |= PROT_EXEC; /* 假如没有设置MAP_FIXED标志,且addr小于mmap_min_addr, 因为可以修改addr, 所以就需要将addr设为mmap_min_addr的页对齐后的地址 */ if (!(flags & MAP_FIXED)) addr = round_hint_to_min(addr); /* Careful about overflows.. 检查长度,防止溢出??*/ /* 进行Page大小的对齐,因为内存映射大小必须页对齐 */ len = PAGE_ALIGN(len); if (!len) return -ENOMEM; /* offset overflow? */ if ((pgoff + (len >> PAGE_SHIFT)) < pgoff) return -EOVERFLOW; /* Too many mappings? */ /* 判断该进程的地址空间的虚拟区间数量是否超过了限制 */ if (mm->map_count > sysctl_max_map_count) return -ENOMEM; /* Obtain the address to map to. we verify (or select) it and ensure * that it represents a valid section of the address space. 从当前进程的用户空间获取一个未被映射区间的起始地址:这里就涉及到红黑树了 */ addr = get_unmapped_area(file, addr, len, pgoff, flags); if (offset_in_page(addr))/* 检查addr是否有效 */ return addr; if (prot == PROT_EXEC) { pkey = execute_only_pkey(mm); if (pkey < 0) pkey = 0; } /* Do simple checking here so the lower-level routines won't have * to. we assume access permissions have been handled by the open * of the memory object, so we don't do any here. */ vm_flags |= calc_vm_prot_bits(prot, pkey) | calc_vm_flag_bits(flags) | mm->def_flags | VM_MAYREAD | VM_MAYWRITE | VM_MAYEXEC; /* 假如flags设置MAP_LOCKED,即类似于mlock()将申请的地址空间锁定在内存中, 检查是否可以进行lock*/ if (flags & MAP_LOCKED) if (!can_do_mlock()) return -EPERM; if (mlock_future_check(mm, vm_flags, len)) return -EAGAIN; if (file) { struct inode *inode = file_inode(file); /*根据标志指定的map种类,把为文件设置的访问权考虑进去。 如果所请求的内存映射是共享可写的,就要检查要映射的文件是为写入而打开的,而不 是以追加模式打开的,还要检查文件上没有上强制锁。 对于任何种类的内存映射,都要检查文件是否为读操作而打开的。 */ switch (flags & MAP_TYPE) { case MAP_SHARED: if ((prot&PROT_WRITE) && !(file->f_mode&FMODE_WRITE)) return -EACCES; /* * Make sure we don't allow writing to an append-only * file.. */ if (IS_APPEND(inode) && (file->f_mode & FMODE_WRITE)) return -EACCES; /* * Make sure there are no mandatory locks on the file. */ if (locks_verify_locked(file)) return -EAGAIN; vm_flags |= VM_SHARED | VM_MAYSHARE; if (!(file->f_mode & FMODE_WRITE)) vm_flags &= ~(VM_MAYWRITE | VM_SHARED); /* fall through */ case MAP_PRIVATE: if (!(file->f_mode & FMODE_READ)) return -EACCES; if (path_noexec(&file->f_path)) { if (vm_flags & VM_EXEC) return -EPERM; vm_flags &= ~VM_MAYEXEC; } if (!file->f_op->mmap) return -ENODEV; if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP)) return -EINVAL; break; default: return -EINVAL; } } else { switch (flags & MAP_TYPE) { case MAP_SHARED: if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP)) return -EINVAL; /* * Ignore pgoff. */ pgoff = 0; vm_flags |= VM_SHARED | VM_MAYSHARE; break; case MAP_PRIVATE: /* * Set pgoff according to addr for anon_vma. */ pgoff = addr >> PAGE_SHIFT; break; default: return -EINVAL; } } /* * Set 'VM_NORESERVE' if we should not account for the * memory use of this mapping. */ if (flags & MAP_NORESERVE) { /* We honor MAP_NORESERVE if allowed to overcommit */ if (sysctl_overcommit_memory != OVERCOMMIT_NEVER) vm_flags |= VM_NORESERVE; /* hugetlb applies strict overcommit unless MAP_NORESERVE */ if (file && is_file_hugepages(file)) vm_flags |= VM_NORESERVE; } /*创建和初始化虚拟内存区域,并加入红黑树管理*/ addr = mmap_region(file, addr, len, vm_flags, pgoff); if (!IS_ERR_VALUE(addr) && ((vm_flags & VM_LOCKED) || /* 假如没有设置MAP_POPULATE标志位内核并不在调用mmap()时就为进程分配物理内存空间, 而是直到下次真正访问地址空间时发现数据不存在于物理内存空间时才触发Page Fault ,将缺失的 Page 换入内存空间 */ (flags & (MAP_POPULATE | MAP_NONBLOCK)) == MAP_POPULATE)) *populate = len; return addr; }

代码有很多,但是核心功能其实并不复杂:找到空闲的虚拟内存地址,并根据不同的文件打开方式设置不同的vm标志位flag!在函数末尾处调用了mmap_region函数,核心功能是创建和初始化虚拟内存区域,并加入红黑树节点进行管理,代码如下:

/*创建和初始化虚拟内存区域,并加入红黑树节点进行管理*/ unsigned long mmap_region(struct file *file, unsigned long addr, unsigned long len, vm_flags_t vm_flags, unsigned long pgoff) { struct mm_struct *mm = current->mm; struct vm_area_struct *vma, *prev; int error; struct rb_node **rb_link, *rb_parent; unsigned long charged = 0; /* Check against address space limit. 申请的虚拟内存空间是否超过了限制 */ if (!may_expand_vm(mm, vm_flags, len >> PAGE_SHIFT)) { unsigned long nr_pages; /* * MAP_FIXED may remove pages of mappings that intersects with * requested mapping. Account for the pages it would unmap. */ nr_pages = count_vma_pages_range(mm, addr, addr + len); if (!may_expand_vm(mm, vm_flags, (len >> PAGE_SHIFT) - nr_pages)) return -ENOMEM; } /* Clear old maps 检查[addr, addr+len)的区间是否存在映射空间,假如存在重合的映射空间需要munmap*/ while (find_vma_links(mm, addr, addr + len, &prev, &rb_link, &rb_parent)) { if (do_munmap(mm, addr, len)) return -ENOMEM; } /* * Private writable mapping: check memory availability */ if (accountable_mapping(file, vm_flags)) { charged = len >> PAGE_SHIFT; if (security_vm_enough_memory_mm(mm, charged)) return -ENOMEM; vm_flags |= VM_ACCOUNT; } /* * Can we just expand an old mapping? 检查是否可以合并[addr, addr+len)区间内的虚拟地址空间vma */ vma = vma_merge(mm, prev, addr, addr + len, vm_flags, NULL, file, pgoff, NULL, NULL_VM_UFFD_CTX); if (vma)/* 假如合并成功,即使用合并后的vma, 并跳转至out */ goto out; /* * Determine the object being mapped and call the appropriate * specific mapper. the address has already been validated, but * not unmapped, but the maps are removed from the list. 如果不能和已有的虚拟内存区域合并,通过 Memory Descriptor 来申请一个 vma */ vma = kmem_cache_zalloc(vm_area_cachep, GFP_KERNEL); if (!vma) { error = -ENOMEM; goto unacct_error; } vma->vm_mm = mm; vma->vm_start = addr; vma->vm_end = addr + len; vma->vm_flags = vm_flags; vma->vm_page_prot = vm_get_page_prot(vm_flags); vma->vm_pgoff = pgoff; INIT_LIST_HEAD(&vma->anon_vma_chain);//vma通过链表连接,这里初始化链表头 /* 假如指定了文件映射 */ if (file) { /* 映射的文件不允许写入,调用 deny_write_accsess(file) 排斥常规的文件操作 */ if (vm_flags & VM_DENYWRITE) { error = deny_write_access(file); if (error) goto free_vma; } if (vm_flags & VM_SHARED) {/* 映射的文件允许其他进程可见, 标记文件为可写 */ error = mapping_map_writable(file->f_mapping); if (error) goto allow_write_and_free_vma; } /* ->mmap() can change vma->vm_file, but must guarantee that * vma_link() below can deny write-access if VM_DENYWRITE is set * and map writably if VM_SHARED is set. This usually means the * new file must not have been exposed to user-space, yet. */ vma->vm_file = get_file(file);/* 递增 File 的引用次数,返回 File 赋给 vma */ error = file->f_op->mmap(file, vma); /* 调用文件系统指定的 mmap 函数*/ if (error) goto unmap_and_free_vma; /* Can addr have changed?? * * Answer: Yes, several device drivers can do it in their * f_op->mmap method. -DaveM * Bug: If addr is changed, prev, rb_link, rb_parent should * be updated for vma_link() */ WARN_ON_ONCE(addr != vma->vm_start); addr = vma->vm_start; vm_flags = vma->vm_flags; /* 假如标志为 VM_SHARED,但没有指定映射文件,需要调用 shmem_zero_setup() shmem_zero_setup() 实际映射的文件是 dev/zero */ } else if (vm_flags & VM_SHARED) { error = shmem_zero_setup(vma); if (error) goto free_vma; } /*新分配的vma加入红黑树*/ vma_link(mm, vma, prev, rb_link, rb_parent); /* Once vma denies write, undo our temporary denial count */ if (file) { if (vm_flags & VM_SHARED) mapping_unmap_writable(file->f_mapping); if (vm_flags & VM_DENYWRITE) allow_write_access(file); } file = vma->vm_file; out: perf_event_mmap(vma); /* 更新进程的虚拟地址空间 mm */ vm_stat_account(mm, vm_flags, len >> PAGE_SHIFT); if (vm_flags & VM_LOCKED) { if (!((vm_flags & VM_SPECIAL) || is_vm_hugetlb_page(vma) || vma == get_gate_vma(current->mm))) mm->locked_vm += (len >> PAGE_SHIFT); else vma->vm_flags &= VM_LOCKED_CLEAR_MASK; } if (file) uprobe_mmap(vma); /* * New (or expanded) vma always get soft dirty status. * Otherwise user-space soft-dirty page tracker won't * be able to distinguish situation when vma area unmapped, * then new mapped in-place (which must be aimed as * a completely new data area). */ vma->vm_flags |= VM_SOFTDIRTY; vma_set_page_prot(vma); return addr; unmap_and_free_vma: vma->vm_file = NULL; fput(file); /* Undo any partial mapping done by a device driver. */ unmap_region(mm, vma, prev, vma->vm_start, vma->vm_end); charged = 0; if (vm_flags & VM_SHARED) mapping_unmap_writable(file->f_mapping); allow_write_and_free_vma: if (vm_flags & VM_DENYWRITE) allow_write_access(file); free_vma: kmem_cache_free(vm_area_cachep, vma); unacct_error: if (charged) vm_unacct_memory(charged); return error; }

以上两个函数的核心功能是查找、分配、初始化空闲的vma,并加入链表和红黑树管理,同时设置vma的各种flags属性,便于后续管理!那么问题来了:数据最终都是要存放在物理内存的,截至目前所有的操作都是虚拟内存,这些vma都是在哪和物理内存建立映射的了?关键的函数是remap_pfn_range,在mm/memory.c文件中;

/** * remap_pfn_range - remap kernel memory to userspace 将内核空间的内存映射到用户空间,或者说是 将用户空间的一个vma虚拟内存区映射到以page开始的一段连续物理页面上 * @vma: user vma to map to:需要映射(或者说挂载关联)物理地址的vma * @addr: target user address to start at:用户空间地址的起始位置 * @pfn: physical address of kernel memory:内核的物理地址空间 * @size: size of map area * @prot: page protection flags for this mapping:内存页面的属性 * * Note: this is only safe if the mm semaphore is held when called. */ int remap_pfn_range(struct vm_area_struct *vma, unsigned long addr, unsigned long pfn, unsigned long size, pgprot_t prot) { pgd_t *pgd; unsigned long next; /*需要映射的虚拟地址尾部:注意要页对齐,因为cpu硬件是以页为单位管理内存的*/ unsigned long end = addr + PAGE_ALIGN(size); struct mm_struct *mm = vma->vm_mm; unsigned long remap_pfn = pfn; int err; /* * Physically remapped pages are special. Tell the * rest of the world about it: * VM_IO tells people not to look at these pages * (accesses can have side effects). * VM_PFNMAP tells the core MM that the base pages are just * raw PFN mappings, and do not have a "struct page" associated * with them. * VM_DONTEXPAND * Disable vma merging and expanding with mremap(). * VM_DONTDUMP * Omit vma from core dump, even when VM_IO turned off. * * There's a horrible special case to handle copy-on-write * behaviour that some programs depend on. We mark the "original" * un-COW'ed pages by matching them up with "vma->vm_pgoff". * See vm_normal_page() for details. */ if (is_cow_mapping(vma->vm_flags)) { if (addr != vma->vm_start || end != vma->vm_end) return -EINVAL; vma->vm_pgoff = pfn; } err = track_pfn_remap(vma, &prot, remap_pfn, addr, PAGE_ALIGN(size)); if (err) return -EINVAL; /*改变虚拟地址的标志*/ vma->vm_flags |= VM_IO | VM_PFNMAP | VM_DONTEXPAND | VM_DONTDUMP; BUG_ON(addr >= end); pfn -= addr >> PAGE_SHIFT; /* /* To find an entry in a generic PGD。宏定义展开后如下: #define pgd_index(address) (((address) >> PGDIR_SHIFT) & (PTRS_PER_PGD-1)) #define pgd_offset(mm, address) ((mm)->pgd+pgd_index(address)) 查找addr第1级页目录项中对应的页表项的地址 */ pgd = pgd_offset(mm, addr); /*刷新TLB缓存;这个缓存和CPU的L1、L2、L3的缓存思想一致, 既然进行地址转换需要的内存IO次数多,且耗时, 那么干脆就在CPU里把页表尽可能地cache起来不就行了么, 所以就有了TLB(Translation Lookaside Buffer), 专门用于改进虚拟地址到物理地址转换速度的缓存。 其访问速度非常快,和寄存器相当,比L1访问还快。*/ flush_cache_range(vma, addr, end); do { /* 计算下一个将要被映射的虚拟地址,如果addr到end可以被一个pgd映射的话,那么返回end的值 */ next = pgd_addr_end(addr, end); /*完成虚拟内存和物理内存映射,本质就是填写完CR3指向的页表; 过程就是逐级完成:1级是pgd,上面已经完成;2级是pud,3级是pmd,4级是pte */ err = remap_pud_range(mm, pgd, addr, next, pfn + (addr >> PAGE_SHIFT), prot); if (err) break; } while (pgd++, addr = next, addr != end); if (err) untrack_pfn(vma, remap_pfn, PAGE_ALIGN(size)); return err; }

最核心的就是remap_pud_range方法了,从这个方法开始,逐级构造页表的各个映射转换!阅读代码前,可以先熟悉一下4级页表转换原理如下:

代码如下:3个方法的结构类似,层层深入,直到最后一级pte!pte内部调用set_pte_at方法最终完成物理地址和虚拟地址的映射!

/* * maps a range of physical memory into the requested pages. the old * mappings are removed. any references to nonexistent pages results * in null mappings (currently treated as "copy-on-access") */ static int remap_pte_range(struct mm_struct *mm, pmd_t *pmd, unsigned long addr, unsigned long end, unsigned long pfn, pgprot_t prot) { pte_t *pte; spinlock_t *ptl; pte = pte_alloc_map_lock(mm, pmd, addr, &ptl); if (!pte) return -ENOMEM; arch_enter_lazy_mmu_mode(); do { BUG_ON(!pte_none(*pte)); /*这是映射的最后一级:把物理地址的值填写到pte表项*/ set_pte_at(mm, addr, pte, pte_mkspecial(pfn_pte(pfn, prot))); pfn++; } while (pte++, addr += PAGE_SIZE, addr != end); arch_leave_lazy_mmu_mode(); pte_unmap_unlock(pte - 1, ptl); return 0; } static inline int remap_pmd_range(struct mm_struct *mm, pud_t *pud, unsigned long addr, unsigned long end, unsigned long pfn, pgprot_t prot) { pmd_t *pmd; unsigned long next; pfn -= addr >> PAGE_SHIFT; pmd = pmd_alloc(mm, pud, addr); if (!pmd) return -ENOMEM; VM_BUG_ON(pmd_trans_huge(*pmd)); do { next = pmd_addr_end(addr, end); if (remap_pte_range(mm, pmd, addr, next, pfn + (addr >> PAGE_SHIFT), prot)) return -ENOMEM; } while (pmd++, addr = next, addr != end); return 0; } static inline int remap_pud_range(struct mm_struct *mm, pgd_t *pgd, unsigned long addr, unsigned long end, unsigned long pfn, pgprot_t prot) { pud_t *pud; unsigned long next; pfn -= addr >> PAGE_SHIFT; /*返回pgd值*/ pud = pud_alloc(mm, pgd, addr); if (!pud) return -ENOMEM; do { next = pud_addr_end(addr, end); if (remap_pmd_range(mm, pud, addr, next, pfn + (addr >> PAGE_SHIFT), prot)) return -ENOMEM; } while (pud++, addr = next, addr != end); return 0; }

注意事项&总结事项:

1、脱壳的时候如果遇到mmap就要注意了:有可能是要加载壳文件了!

2、页对齐的代码:也可以借鉴用来做其他数字的对齐,把PAGE_SIZE改成其他数字就好

#define PAGE_MASK (~(PAGE_SIZE-1)) #define PAGE_ALIGN(x) ((x + PAGE_SIZE - 1) & PAGE_MASK)

3、核心原理:只分配1块物理内存,把进程的虚拟地址映射到这块物理内存,达到读写一次到位的目的!

参考:

1、https://mp.weixin.qq.com/s/y4LT5rtLZXXSvk66w3tVcQ 三种实现mmap的方式

2、https://www.bilibili.com/video/BV1XK411A7q2 linux mmap机制

3、https://www.bilibili.com/video/BV1mk4y1C76p mmap机制

4、https://www.leviathan.vip/2019/01/13/mmap%E6%BA%90%E7%A0%81%E5%88%86%E6%9E%90/ mmap源码分析

5、https://www.cnblogs.com/pengdonglin137/p/8150981.html remap_pfn_range源码分析

6、https://zhuanlan.zhihu.com/p/79607142 TLB缓存

浙公网安备 33010602011771号

浙公网安备 33010602011771号