【CoreImage】


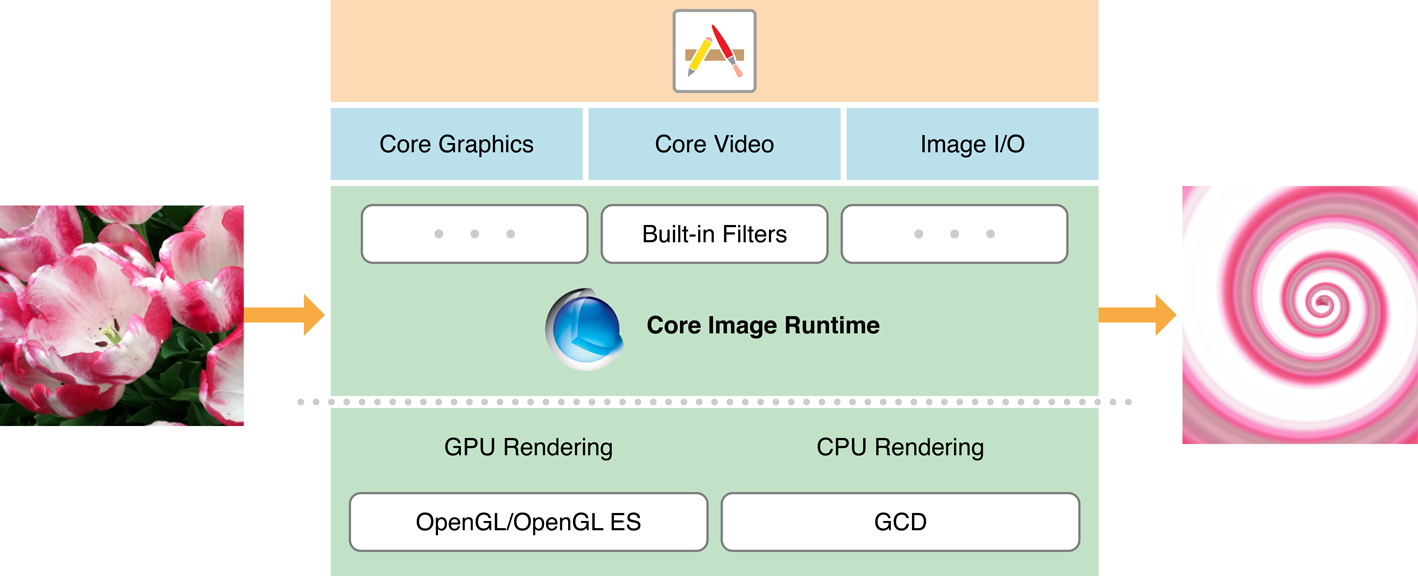

CIContext is the object through which Core Image draws the results produced by a filter. A Core Image context can be based on the CPU or the GPU.

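    // 1. Create a context; nil options select the defaults.
    CIContext *context = [CIContext contextWithOptions:nil];
    // 2. Load the source image (myURL is an NSURL supplied by the caller).
    CIImage *image = [CIImage imageWithContentsOfURL:myURL];
    // 3. Create the filter and set its input parameters.
    CIFilter *filter = [CIFilter filterWithName:@"CISepiaTone"];
    [filter setValue:image forKey:kCIInputImageKey];
    [filter setValue:@0.8f forKey:kCIInputIntensityKey];
    // 4. Fetch the output image; nothing is rendered yet.
    CIImage *result = [filter valueForKey:kCIOutputImageKey];
    CGRect extent = [result extent];
    // 5. Render into a CGImage; the caller owns it and must call CGImageRelease.
    CGImageRef cgImage = [context createCGImage:result fromRect:extent];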

The contextWithOptions: method is only available on iOS.

The list of built-in filters can change, so Core Image provides methods that let you query the system for the available filters.

Most filters have one or more input parameters that let you control how processing is done. Each input parameter has an attribute class that specifies its data type, such as NSNumber. An input parameter can optionally have other attributes, such as its default value, the allowable minimum and maximum values, the display name for the parameter, and any other attributes that are described in CIFilter Class Reference.
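The attribute lookup described above can be sketched as follows. This is a minimal illustration, not code from the original notes: the function name LogIntensityAttributes is hypothetical, and CISepiaTone is used only because it appears in the earlier example. Some attribute keys may be absent for a given parameter, in which case NSLog prints (null).

```objective-c
#import <Foundation/Foundation.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: logs the attributes of CISepiaTone's intensity input.
static void LogIntensityAttributes(void) {
    CIFilter *sepia = [CIFilter filterWithName:@"CISepiaTone"];

    // The attributes dictionary is keyed by parameter name; each value is
    // itself a dictionary describing that parameter.
    NSDictionary *intensity = sepia.attributes[kCIInputIntensityKey];

    NSLog(@"class: %@", intensity[kCIAttributeClass]);
    NSLog(@"default: %@", intensity[kCIAttributeDefault]);
    NSLog(@"min: %@ max: %@", intensity[kCIAttributeMin], intensity[kCIAttributeMax]);
    NSLog(@"display name: %@", intensity[kCIAttributeDisplayName]);
}
```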

If your app supports real-time image processing, you should create a CIContext object from an EAGL context rather than using contextWithOptions: and specifying the GPU.
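Creating a context from an EAGL context might look like the sketch below. The function name MakeRealTimeContext is hypothetical, and turning off color management by passing NSNull for the working color space is an assumption made here for speed, not something these notes require. Note that the OpenGL ES entry points are marked deprecated in newer iOS SDKs.

```objective-c
#import <OpenGLES/EAGL.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: returns a GPU-backed context for real-time rendering,
// or nil if the OpenGL ES 2 context cannot be created.
static CIContext *MakeRealTimeContext(void) {
    EAGLContext *eaglContext =
        [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
    if (eaglContext == nil) {
        return nil;
    }

    // Assumption: skip color management to reduce per-frame work.
    NSDictionary *options = @{ kCIContextWorkingColorSpace : [NSNull null] };
    return [CIContext contextWithEAGLContext:eaglContext options:options];
}
```

Create the context once and reuse it for every frame; building a new context per frame is expensive.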

Core Image does not perform any image processing until you call a method that actually renders the image.

【Detecting Faces in an Image】

Core Image can analyze and find human faces in an image. It performs face detection, not recognition. Face detection is the identification of rectangles that contain human face features, whereas face recognition is the identification of specific human faces (John, Mary, and so on). After Core Image detects a face, it can provide information about face features, such as eye and mouth positions. It can also track the position of an identified face in a video.

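    // 1. Create a context for the detector to use.
    CIContext *context = [CIContext contextWithOptions:nil];
    // 2. Ask for high accuracy (slower than CIDetectorAccuracyLow).
    NSDictionary *opts = @{ CIDetectorAccuracy : CIDetectorAccuracyHigh };
    // 3. Create a face detector.
    CIDetector *detector = [CIDetector detectorOfType:CIDetectorTypeFace
                                              context:context
                                              options:opts];
    // 4. Pass the image orientation so faces are found in rotated images.
    //    The CFStringRef key needs a bridged cast under ARC; fall back to 1
    //    (upright) when the image carries no orientation metadata.
    NSNumber *orientation =
        myImage.properties[(__bridge NSString *)kCGImagePropertyOrientation] ?: @1;
    opts = @{ CIDetectorImageOrientation : orientation };
    // 5. Run detection.
    NSArray *features = [detector featuresInImage:myImage options:opts];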

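In the Core Image release these notes describe, the only type of detector you can create is one for human faces (later SDKs added CIDetectorTypeRectangle, CIDetectorTypeQRCode, and CIDetectorTypeText). Core Image returns an array of CIFeature objects, each of which represents a face in the image.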

Face features include:

- left and right eye positions
- mouth position
- tracking ID and tracking frame count, which Core Image uses to follow a face in a video segment

    // Examining face feature bounds
    for (CIFaceFeature *f in features) {
        // NSStringFromCGRect comes from UIKit; on macOS use NSStringFromRect.
        NSLog(@"%@", NSStringFromCGRect(f.bounds));
        if (f.hasLeftEyePosition)
            NSLog(@"Left eye %g %g", f.leftEyePosition.x, f.leftEyePosition.y);
        if (f.hasRightEyePosition)
            NSLog(@"Right eye %g %g", f.rightEyePosition.x, f.rightEyePosition.y);
        if (f.hasMouthPosition)
            NSLog(@"Mouth %g %g", f.mouthPosition.x, f.mouthPosition.y);
    }
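The tracking ID and tracking frame count are only populated when the detector is created with tracking enabled. The sketch below shows one way to read them across video frames; the helper names MakeTrackingDetector and LogTrackedFaces are hypothetical, and choosing CIDetectorAccuracyLow is an assumption made here to favor speed on video.

```objective-c
#import <Foundation/Foundation.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: a face detector that keeps IDs stable across frames.
static CIDetector *MakeTrackingDetector(CIContext *context) {
    NSDictionary *opts = @{ CIDetectorAccuracy : CIDetectorAccuracyLow,
                            CIDetectorTracking : @YES };
    return [CIDetector detectorOfType:CIDetectorTypeFace
                              context:context
                              options:opts];
}

// Hypothetical helper: call once per frame with the same detector instance.
static void LogTrackedFaces(CIDetector *detector, CIImage *frame) {
    for (CIFeature *feature in [detector featuresInImage:frame]) {
        if (![feature isKindOfClass:[CIFaceFeature class]]) {
            continue;
        }
        CIFaceFeature *face = (CIFaceFeature *)feature;
        if (face.hasTrackingID) {
            NSLog(@"Tracking ID %d", face.trackingID);
        }
        if (face.hasTrackingFrameCount) {
            NSLog(@"Frames tracked %d", face.trackingFrameCount);
        }
    }
}
```

Reusing one detector for the whole video matters: the tracking state lives in the detector, so a fresh detector per frame would not carry IDs forward.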

【Auto Enhancement】

The auto enhancement feature of Core Image analyzes an image for its histogram, face region contents, and metadata properties. It then returns an array of CIFilter objects whose input parameters are already set to values that will improve the analyzed image.

The auto enhancement API has only two methods: autoAdjustmentFilters and autoAdjustmentFiltersWithOptions:. In most cases, you’ll want to use the method that provides an options dictionary.

You can set these options:

- The image orientation, which is important for the CIRedEyeCorrection and CIFaceBalance filters, so that Core Image can find faces accurately.
- Whether to apply only red eye correction. (Set kCIImageAutoAdjustEnhance to false.)
- Whether to apply all filters except red eye correction. (Set kCIImageAutoAdjustRedEye to false.)

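    // Getting auto enhancement filters and applying them to an image
    // The CFStringRef key needs a bridged cast under ARC; fall back to 1
    // (upright) when the image carries no orientation metadata.
    NSNumber *orientation =
        myImage.properties[(__bridge NSString *)kCGImagePropertyOrientation] ?: @1;
    NSDictionary *options = @{ CIDetectorImageOrientation : orientation };
    NSArray *adjustments = [myImage autoAdjustmentFiltersWithOptions:options];
    // Chain the filters: each one takes the previous filter's output.
    for (CIFilter *filter in adjustments) {
        [filter setValue:myImage forKey:kCIInputImageKey];
        myImage = filter.outputImage;
    }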
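The option keys listed above are passed in the same dictionary. As a minimal sketch, the following applies every enhancement except red eye correction; the function name EnhanceWithoutRedEye is hypothetical.

```objective-c
#import <Foundation/Foundation.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: auto-enhances an image but skips red eye correction.
static CIImage *EnhanceWithoutRedEye(CIImage *input) {
    // Setting kCIImageAutoAdjustRedEye to NO leaves out CIRedEyeCorrection.
    NSDictionary *options = @{ kCIImageAutoAdjustRedEye : @NO };

    CIImage *output = input;
    for (CIFilter *filter in [input autoAdjustmentFiltersWithOptions:options]) {
        [filter setValue:output forKey:kCIInputImageKey];
        output = filter.outputImage;
    }
    return output;
}
```

Swapping the key for kCIImageAutoAdjustEnhance gives the opposite behavior, leaving only red eye correction.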

Use the filterNamesInCategory: and filterNamesInCategories: methods to discover exactly which filters are available.

Using the two methods above, you can query the available filters by category.
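A short sketch of both query methods follows. The function name LogFiltersByCategory and the particular categories chosen are illustrative assumptions; the result depends on the OS version.

```objective-c
#import <Foundation/Foundation.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: lists filters by a single category and by several.
static void LogFiltersByCategory(void) {
    // Every filter that ships with the system.
    NSArray<NSString *> *builtIn =
        [CIFilter filterNamesInCategory:kCICategoryBuiltIn];
    NSLog(@"%lu built-in filters", (unsigned long)builtIn.count);

    // Only filters that belong to all of the listed categories.
    NSArray<NSString *> *stillColorEffects =
        [CIFilter filterNamesInCategories:@[ kCICategoryColorEffect,
                                             kCICategoryStillImage ]];
    for (NSString *name in stillColorEffects) {
        NSLog(@"%@", name);
    }
}
```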

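Finally, I came across an article online claiming that the filters in the open-source GPUImage library are more efficient than those in Core Image.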
