Implementing a Logistic Regression Deep Learning Model with PyTorch

import torch
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from torch import nn

# Read the data (the CSV file has no header row)
data = pd.read_csv(r'C:\Users\22789\Desktop\学校课程学习\路飞python\1.基础部分(第1-7章)参考代码和数据集\第4章\credit-a.csv', header=None)

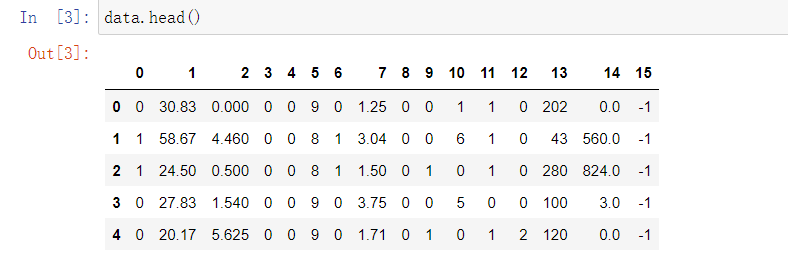

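# As Figure 1 shows, the first 15 columns are the features and the last column is the label
# Next, preprocess the data: separate the features from the labels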

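X = data.iloc[:, :-1]  # features: every column except the last
Y = data.iloc[:, -1].replace(-1, 0)  # labels: last column, with -1 mapped to 0 so the targets are 0/1
X = torch.from_numpy(X.values).type(torch.float32)  # convert to a float32 tensor
Y = torch.from_numpy(Y.values.reshape(-1, 1)).type(torch.float32)  # shape (N, 1) to match the model output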
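You can check the preprocessing without the original CSV. The sketch below runs the same steps on a tiny made-up table. That table is hypothetical: it has 3 feature columns instead of 15, and its values are invented purely for illustration. The steps are: read with `header=None`, split features and labels with `iloc`, map `-1` to `0`, and convert to float32 tensors.

```python
import io

import pandas as pd
import torch

# Hypothetical 4-row CSV: 3 feature columns plus a label column in {-1, 1}
csv_text = """0.5,1.0,2.0,-1
1.5,0.0,3.0,1
2.5,1.0,1.0,1
0.0,0.0,0.5,-1
"""
data = pd.read_csv(io.StringIO(csv_text), header=None)  # columns are named 0..3

X = data.iloc[:, :-1]                # every column except the last
Y = data.iloc[:, -1].replace(-1, 0)  # last column, -1 mapped to 0

X = torch.from_numpy(X.values).type(torch.float32)
Y = torch.from_numpy(Y.values.reshape(-1, 1)).type(torch.float32)

print(X.shape, Y.shape)      # torch.Size([4, 3]) torch.Size([4, 1])
print(Y.flatten().tolist())  # [0.0, 1.0, 1.0, 0.0]
```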

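model = nn.Sequential(
    nn.Linear(15, 1),  # 15 input features, 1 output
    nn.Sigmoid()       # squashes the output into a probability in (0, 1)
)  # nn.Sequential chains the layers in order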
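`nn.Linear(15, 1)` followed by `nn.Sigmoid()` is exactly logistic regression: it computes sigmoid(x·Wᵀ + b). The following sketch checks this by recomputing the output by hand from the layer's own `weight` and `bias`. The random inputs are illustrative only.

```python
import torch
from torch import nn

torch.manual_seed(0)  # reproducible random weights and inputs

model = nn.Sequential(
    nn.Linear(15, 1),
    nn.Sigmoid(),
)

x = torch.randn(8, 15)  # 8 made-up samples with 15 features each
linear = model[0]       # the nn.Linear layer

# Manual logistic regression: sigmoid(x @ W^T + b)
manual = torch.sigmoid(x @ linear.weight.T + linear.bias)
out = model(x)

print(out.shape)                    # torch.Size([8, 1])
print(torch.allclose(out, manual))  # True
```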

# Initialize the loss function and the optimizer
loss_fn = nn.BCELoss()  # binary cross-entropy loss
opt = torch.optim.Adam(model.parameters(), lr=0.002)  # Adam optimizer
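With its default `reduction='mean'`, `nn.BCELoss` averages -[y·log(p) + (1 - y)·log(1 - p)] over the batch. Here p is the predicted probability and y is the 0/1 label. The sketch below checks the built-in loss against this formula on a few hand-picked probabilities.

```python
import torch
from torch import nn

p = torch.tensor([[0.9], [0.2], [0.6]])  # predicted probabilities (made up)
y = torch.tensor([[1.0], [0.0], [0.0]])  # true labels

loss_fn = nn.BCELoss()  # default reduction='mean'
builtin = loss_fn(p, y)

# Binary cross-entropy by hand, averaged over the batch
manual = -(y * torch.log(p) + (1 - y) * torch.log(1 - p)).mean()

print(builtin.item(), manual.item())
```

PyTorch also provides `nn.BCEWithLogitsLoss`, which fuses the sigmoid and the cross-entropy into one numerically more stable operation. Using it would mean removing `nn.Sigmoid()` from the model.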

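batches = 16  # batch size
number_batch = len(X) // batches  # 653 // 16 = 40 full batches; the last 13 rows are never used
epoches = 1888  # number of training epochs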
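Integer division drops the remainder. With 653 rows and a batch size of 16, the loop visits 40 full batches (640 rows), and the last 13 rows are never used. The sketch below works through that arithmetic using the article's numbers.

```python
n_samples = 653  # number of rows in credit-a.csv, as used in the article
batches = 16     # batch size

number_batch = n_samples // batches  # full batches per epoch
covered = number_batch * batches     # rows actually visited
leftover = n_samples - covered       # rows the loop never reaches

# Slice boundaries of the first and last batch
first = (0, batches)
last = ((number_batch - 1) * batches, number_batch * batches)

print(number_batch, covered, leftover)  # 40 640 13
print(first, last)                      # (0, 16) (624, 640)
```

Using ceiling division (`-(-n_samples // batches)`, i.e. 41 batches) would include the leftover rows as a smaller final batch. Tensor slicing past the end is safe, so `X[640:656]` simply returns the 13 remaining rows.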

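for epoch in range(epoches):
    for batch in range(number_batch):
        start = batch * batches
        end = start + batches
        x = X[start:end]  # current batch of features
        y = Y[start:end]  # current batch of labels
        y_pred = model(x)  # forward pass
        loss = loss_fn(y_pred, y)
        opt.zero_grad()  # clear gradients from the previous step
        loss.backward()  # backpropagate
        opt.step()       # update the parameters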
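The manual slicing loop can also be written with `torch.utils.data.TensorDataset` and `DataLoader`. These handle batching, including the final partial batch, and can optionally shuffle the data each epoch. This is a swapped-in alternative, not the article's method. The sketch uses random synthetic data as a stand-in for credit-a.csv and far fewer epochs than the article.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic stand-in for credit-a.csv: 653 rows, 15 features, 0/1 labels
X = torch.randn(653, 15)
true_w = torch.randn(15, 1)
Y = (X @ true_w > 0).float()  # linearly separable labels, shape (653, 1)

loader = DataLoader(TensorDataset(X, Y), batch_size=16, shuffle=True)

model = nn.Sequential(nn.Linear(15, 1), nn.Sigmoid())
loss_fn = nn.BCELoss()
opt = torch.optim.Adam(model.parameters(), lr=0.002)

for epoch in range(100):  # fewer epochs than the article, for a quick demo
    for x, y in loader:   # 41 batches per epoch; the last one holds 13 rows
        y_pred = model(x)
        loss = loss_fn(y_pred, y)
        opt.zero_grad()
        loss.backward()
        opt.step()

with torch.no_grad():
    acc = ((model(X) > 0.5).float() == Y).float().mean().item()
print(f"training accuracy: {acc:.3f}")
```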

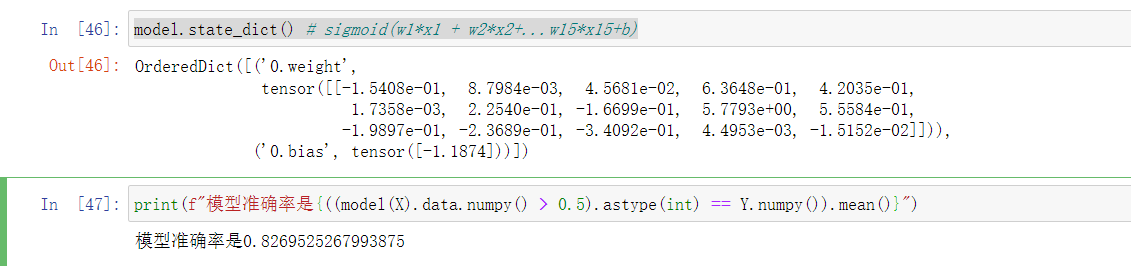

model.state_dict()  # learned parameters of sigmoid(w1*x1 + w2*x2 + ... + w15*x15 + b)
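`state_dict()` returns the model's parameters keyed by name. In this `nn.Sequential`, the `nn.Linear` layer sits at index 0, so its entries are `'0.weight'` (shape 1×15, holding w1 to w15) and `'0.bias'` (holding b). `nn.Sigmoid` has no parameters. The sketch below lists the keys and pulls the numbers out as plain Python values.

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(15, 1), nn.Sigmoid())

sd = model.state_dict()
for name, tensor in sd.items():
    print(name, tuple(tensor.shape))
# 0.weight (1, 15)
# 0.bias (1,)

# The weights w1..w15 and bias b as plain Python numbers
w = sd["0.weight"].flatten().tolist()
b = sd["0.bias"].item()
print(len(w), b)
```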

# Accuracy on the training data: predict class 1 when the output exceeds 0.5
print(f"Model accuracy: {((model(X).data.numpy() > 0.5).astype(int) == Y.numpy()).mean()}")
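The accuracy line thresholds each probability at 0.5, compares the resulting 0/1 predictions with the labels, and averages the matches. Because it runs on the same data used for training, the result is training accuracy, not accuracy on unseen data. The sketch below does the same computation on hand-picked values and wraps evaluation in `torch.no_grad()` instead of using `.data`.

```python
import torch

# Made-up model outputs (probabilities) and true labels, both of shape (N, 1)
probs = torch.tensor([[0.91], [0.30], [0.55], [0.48], [0.05]])
labels = torch.tensor([[1.0], [0.0], [0.0], [1.0], [0.0]])

with torch.no_grad():  # no gradient tracking needed for evaluation
    preds = (probs > 0.5).float()  # class 1 if p > 0.5, else 0
    accuracy = (preds == labels).float().mean().item()

print(preds.flatten().tolist())  # [1.0, 0.0, 1.0, 0.0, 0.0]
print(round(accuracy, 4))        # 0.6
```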

Training and prediction results:

This article is from cnblogs (博客园). Author: TCcjx. When reposting, please credit the original link: https://www.cnblogs.com/tccjx/articles/16473687.html

浙公网安备 33010602011771号