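III. Kubernetes (Part 1)

I. Deploying CoreDNS and tracing the DNS resolution flow

1. Installing and deploying CoreDNS

1.1 Upload the pre-downloaded Kubernetes archives and extract them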

root@k8s-master1:/usr/local/src# ll

total 468448

drwxr-xr-x 2 root root 4096 Jan 15 07:43 ./

drwxr-xr-x 10 root root 4096 Aug 24 08:42 ../

-rw-r--r-- 1 root root 29011907 Jan 1 05:54 kubernetes-v1.22.5-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 118916964 Jan 1 05:54 kubernetes-v1.22.5-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 331170423 Jan 1 05:54 kubernetes-v1.22.5-server-linux-amd64.tar.gz

-rw-r--r-- 1 root root 565584 Jan 1 05:54 kubernetes-v1.22.5.tar.gz

root@k8s-master1:/usr/local/src# tar xf kubernetes-v1.22.5-client-linux-amd64.tar.gz

root@k8s-master1:/usr/local/src# tar xf kubernetes-v1.22.5-node-linux-amd64.tar.gz

root@k8s-master1:/usr/local/src# tar xf kubernetes-v1.22.5-server-linux-amd64.tar.gz

root@k8s-master1:/usr/local/src# tar xf kubernetes-v1.22.5.tar.gz

root@k8s-master1:/usr/local/src# ll

total 468452

drwxr-xr-x 3 root root 4096 Jan 15 07:47 ./

drwxr-xr-x 10 root root 4096 Aug 24 08:42 ../

drwxr-xr-x 10 root root 4096 Dec 16 08:54 kubernetes/

-rw-r--r-- 1 root root 29011907 Jan 1 05:54 kubernetes-v1.22.5-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 118916964 Jan 1 05:54 kubernetes-v1.22.5-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 331170423 Jan 1 05:54 kubernetes-v1.22.5-server-linux-amd64.tar.gz

-rw-r--r-- 1 root root 565584 Jan 1 05:54 kubernetes-v1.22.5.tar.gz

1.2 Copy coredns.yaml.base to root's home directory and edit it

root@k8s-master1:/usr/local/src# cd kubernetes/

root@k8s-master1:/usr/local/src/kubernetes# ll

total 35232

drwxr-xr-x 10 root root 4096 Dec 16 08:54 ./

drwxr-xr-x 3 root root 4096 Jan 15 07:47 ../

drwxr-xr-x 2 root root 4096 Dec 16 08:50 addons/

drwxr-xr-x 3 root root 4096 Dec 16 08:54 client/

drwxr-xr-x 9 root root 4096 Dec 16 08:54 cluster/

drwxr-xr-x 2 root root 4096 Dec 16 08:54 docs/

drwxr-xr-x 3 root root 4096 Dec 16 08:54 hack/

-rw-r--r-- 1 root root 36027025 Dec 16 08:50 kubernetes-src.tar.gz

drwxr-xr-x 4 root root 4096 Dec 16 08:54 LICENSES/

drwxr-xr-x 3 root root 4096 Dec 16 08:48 node/

-rw-r--r-- 1 root root 3387 Dec 16 08:54 README.md

drwxr-xr-x 3 root root 4096 Dec 16 08:54 server/

-rw-r--r-- 1 root root 8 Dec 16 08:54 version

root@k8s-master1:/usr/local/src/kubernetes# cd cluster/addons/dns

root@k8s-master1:/usr/local/src/kubernetes/cluster/addons/dns# ll

total 24

drwxr-xr-x 5 root root 4096 Dec 16 08:54 ./

drwxr-xr-x 20 root root 4096 Dec 16 08:54 ../

drwxr-xr-x 2 root root 4096 Dec 16 08:54 coredns/

drwxr-xr-x 2 root root 4096 Dec 16 08:54 kube-dns/

drwxr-xr-x 2 root root 4096 Dec 16 08:54 nodelocaldns/

-rw-r--r-- 1 root root 129 Dec 16 08:54 OWNERS

root@k8s-master1:/usr/local/src/kubernetes/cluster/addons/dns# cd coredns/

root@k8s-master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# ll

total 44

drwxr-xr-x 2 root root 4096 Dec 16 08:54 ./

drwxr-xr-x 5 root root 4096 Dec 16 08:54 ../

-rw-r--r-- 1 root root 4966 Dec 16 08:54 coredns.yaml.base

-rw-r--r-- 1 root root 5016 Dec 16 08:54 coredns.yaml.in

-rw-r--r-- 1 root root 5018 Dec 16 08:54 coredns.yaml.sed

-rw-r--r-- 1 root root 1075 Dec 16 08:54 Makefile

-rw-r--r-- 1 root root 344 Dec 16 08:54 transforms2salt.sed

-rw-r--r-- 1 root root 287 Dec 16 08:54 transforms2sed.sed

root@k8s-master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cp coredns.yaml.base /root/coredns.yaml

root@k8s-master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cd ~

root@k8s-master1:~# ll

total 96

drwx------ 7 root root 4096 Jan 15 07:50 ./

drwxr-xr-x 21 root root 4096 Dec 27 13:15 ../

drwxr-xr-x 3 root root 4096 Jan 8 08:28 .ansible/

-rw------- 1 root root 2584 Jan 15 07:11 .bash_history

-rw-r--r-- 1 root root 3190 Jan 8 08:36 .bashrc

drwx------ 2 root root 4096 Dec 27 14:34 .cache/

-rw-r--r-- 1 root root 4966 Jan 15 07:50 coredns.yaml

-rwxr-xr-x 1 root root 15351 Jan 8 07:15 ezdown*

drwxr-xr-x 3 root root 4096 Jan 8 09:41 .kube/

-rw-r--r-- 1 root root 161 Dec 5 2019 .profile

drwxr-xr-x 3 root root 4096 Dec 27 13:33 snap/

drwx------ 2 root root 4096 Jan 8 07:04 .ssh/

-rw------- 1 root root 21437 Jan 15 07:14 .viminfo

-rw-r--r-- 1 root root 218 Jan 8 07:19 .wget-hsts

-rw------- 1 root root 117 Jan 15 07:12 .Xauthority

root@k8s-master1:~# vim coredns.yaml

## Edit lines 70, 135, 139, and 205; each pair below shows the original line followed by its replacement

70 kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa

70 kubernetes cluster.local in-addr.arpa ip6.arpa

135 image: k8s.gcr.io/coredns/coredns:v1.8.0

135 image: coredns/coredns:1.8.6

139 memory: __DNS__MEMORY__LIMIT__

139 memory: 256Mi

205 clusterIP: __DNS__SERVER__

205 clusterIP: 10.100.0.2

## The value for __DNS__DOMAIN__ can be looked up in the cluster's hosts file

root@k8s-master1:~# cat /etc/kubeasz/clusters/k8s-cluster1/hosts

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"
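The four edits above are plain placeholder substitutions, so they can also be expressed by placeholder name rather than by line number. A minimal Python sketch, assuming the values used in this walkthrough; the function names and the four-line template excerpt are illustrative and not part of any Kubernetes tooling:

```python
import re

# Placeholder (or original value) -> replacement, matching the edits above.
SUBSTITUTIONS = {
    "__DNS__DOMAIN__": "cluster.local",
    "__DNS__MEMORY__LIMIT__": "256Mi",
    "__DNS__SERVER__": "10.100.0.2",
    "k8s.gcr.io/coredns/coredns:v1.8.0": "coredns/coredns:1.8.6",
}


def render_manifest(template, substitutions):
    """Return the template text with every key replaced by its value."""
    rendered = template
    for old, new in substitutions.items():
        rendered = rendered.replace(old, new)
    return rendered


def leftover_placeholders(text):
    """List any __NAME__ style tokens that are still present in the text."""
    return sorted(set(re.findall(r"__[A-Z]+(?:__[A-Z]+)*__", text)))


# Hypothetical excerpt standing in for the contents of coredns.yaml.base.
TEMPLATE_EXCERPT = """\
kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa
image: k8s.gcr.io/coredns/coredns:v1.8.0
memory: __DNS__MEMORY__LIMIT__
clusterIP: __DNS__SERVER__
"""

if __name__ == "__main__":
    rendered = render_manifest(TEMPLATE_EXCERPT, SUBSTITUTIONS)
    print(rendered)
    print("unreplaced placeholders:", leftover_placeholders(rendered))
```

Checking for leftover placeholders before running kubectl apply catches a missed substitution early, which line-number based editing does not.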


1.3 Install CoreDNS

root@k8s-master1:~# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (108m ago) 6d18h

default net-test2 1/1 Running 1 (108m ago) 6d18h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (62m ago) 6d18h

kube-system calico-node-2vt85 1/1 Running 0 6d18h

kube-system calico-node-6dcbp 1/1 Running 1 (6d18h ago) 6d18h

kube-system calico-node-trnbj 1/1 Running 1 (108m ago) 6d18h

kube-system calico-node-vmm5c 1/1 Running 1 (108m ago) 6d18h

kube-system coredns-69d84cdc49-qpxgr 0/1 Running 0 32s

1.4 Fix CoreDNS failing to become ready

root@k8s-master1:~# kubectl logs coredns-69d84cdc49-qpxgr -n kube-system

E0115 08:17:03.463381 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

[INFO] plugin/ready: Still waiting on: "kubernetes"

[INFO] plugin/ready: Still waiting on: "kubernetes"

root@k8s-master1:~# vim coredns.yaml

## Add the following after line 35

36 - apiGroups:

37   - discovery.k8s.io

38   resources:

39   - endpointslices

40   verbs:

41   - list

42   - watch
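Why this rule fixes the "forbidden" error in the log can be illustrated with a simplified model of RBAC rule matching. This is only a sketch: it ignores resourceNames and non-resource URLs, the helper names are hypothetical, and ORIGINAL_RULES is an assumed, abbreviated stand-in for the rules already present in the ClusterRole.

```python
def rule_allows(rule, api_group, resource, verb):
    """True if a single RBAC rule covers the (group, resource, verb) triple."""
    def matches(values, wanted):
        return "*" in values or wanted in values

    return (
        matches(rule["apiGroups"], api_group)
        and matches(rule["resources"], resource)
        and matches(rule["verbs"], verb)
    )


def is_allowed(rules, api_group, resource, verb):
    """RBAC is additive: a request is allowed if any rule allows it."""
    return any(rule_allows(rule, api_group, resource, verb) for rule in rules)


# Assumed, abbreviated rules that only cover the core ("") API group.
ORIGINAL_RULES = [
    {
        "apiGroups": [""],
        "resources": ["endpoints", "services", "pods", "namespaces"],
        "verbs": ["list", "watch"],
    },
]

# The rule added to coredns.yaml above.
ADDED_RULE = {
    "apiGroups": ["discovery.k8s.io"],
    "resources": ["endpointslices"],
    "verbs": ["list", "watch"],
}

if __name__ == "__main__":
    request = ("discovery.k8s.io", "endpointslices", "list")
    print("before:", is_allowed(ORIGINAL_RULES, *request))
    print("after: ", is_allowed(ORIGINAL_RULES + [ADDED_RULE], *request))
```

Because no existing rule names the discovery.k8s.io group, the list request is denied until the new rule is added.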

root@k8s-master1:~# kubectl apply -f coredns.yaml

serviceaccount/coredns unchanged

clusterrole.rbac.authorization.k8s.io/system:coredns configured

clusterrolebinding.rbac.authorization.k8s.io/system:coredns unchanged

configmap/coredns unchanged

deployment.apps/coredns unchanged

service/kube-dns unchanged

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (118m ago) 6d18h

default net-test2 1/1 Running 1 (118m ago) 6d18h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (72m ago) 6d18h

kube-system calico-node-2vt85 1/1 Running 0 6d18h

kube-system calico-node-6dcbp 1/1 Running 1 (6d18h ago) 6d18h

kube-system calico-node-trnbj 1/1 Running 1 (118m ago) 6d18h

kube-system calico-node-vmm5c 1/1 Running 1 (118m ago) 6d18h

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 10m

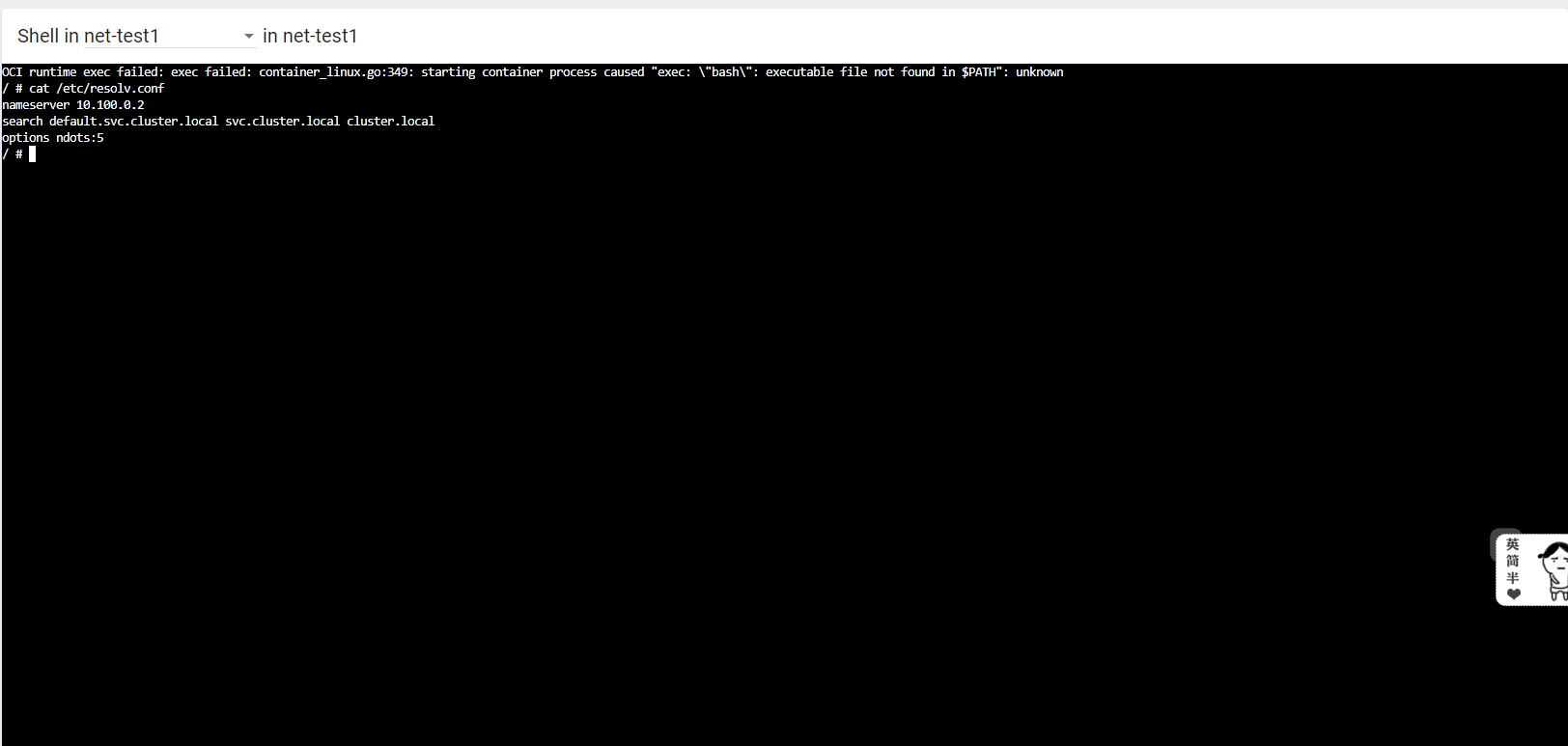

1.5 Enter a container to verify CoreDNS

root@k8s-master1:~# kubectl exec -it net-test1 -- sh

/ # cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

/ # exit

root@k8s-master1:~# kubectl exec -it net-test1 -- sh

/ # ping www.baidu.com

PING www.baidu.com (14.215.177.38): 56 data bytes

64 bytes from 14.215.177.38: seq=0 ttl=127 time=12.625 ms

64 bytes from 14.215.177.38: seq=1 ttl=127 time=10.688 ms

^C

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 10.688/11.656/12.625 ms

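2. CoreDNS name resolution flow

Since Kubernetes 1.11, CoreDNS has been available as a replacement for kube-dns for cluster DNS resolution.

CoreDNS supports the following protocols: DNS, DNS over TLS, DNS over HTTP/2, and DNS over gRPC.

In Kubernetes, a Pod first resolves the domain name of a Service; traffic sent to the resolved address is then load-balanced across the Pods behind that Service. Without DNS resolution, a Pod cannot look up the Service that fronts a given application by name.

Kubernetes offers two ways to discover services:

- through environment variables

- through internal domain names

How a name is resolved depends on the /etc/resolv.conf file inside the container.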

/ # cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

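ndots:5: if the queried name contains fewer than 5 dots, the resolver treats it as a relative name and first tries it with each domain from the search list appended, querying the name as given only if none of those resolve. If the name contains 5 or more dots, it is queried as an absolute name first. A name that ends with a dot is always treated as absolute.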
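The ordering can be made concrete with a small Python sketch. It is a simplified model of glibc-style resolver behavior, not the resolver's actual code: a real resolver stops at the first candidate that resolves, and other libc implementations may differ in detail. The function name is hypothetical; the search list is the one from the resolv.conf shown above.

```python
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_names(name, search=SEARCH, ndots=5):
    """Return the fully qualified names to try, in order, for `name`."""
    if name.endswith("."):
        # A trailing dot marks the name as absolute: no search list is used.
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = name + "."
    if name.count(".") >= ndots:
        # Enough dots: try the name as given first, then the search list.
        return [absolute] + expanded
    # Too few dots: try the search list first, the name as given last.
    return expanded + [absolute]


if __name__ == "__main__":
    for query in ("kubernetes", "www.baidu.com", "www.baidu.com."):
        print(query)
        for candidate in candidate_names(query):
            print("   ", candidate)
```

With this resolv.conf, an external name such as www.baidu.com (two dots) is first tried against all three search domains, so appending a trailing dot is one way to avoid those extra queries.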

The fully qualified domain name of a Service has the form service-name.namespace.svc.cluster.local, where cluster.local is this cluster's DNS domain.

/ # nslookup kubernetes.default.svc.cluster.local

Server: 10.100.0.2

Address: 10.100.0.2:53

Name: kubernetes.default.svc.cluster.local

Address: 10.100.0.1

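DNS policies (dnsPolicy):

- None: for custom DNS setups; it must be used together with dnsConfig;

- Default: the Pod inherits the name resolution configuration of the node it runs on, by default the node's /etc/resolv.conf; the kubelet flag --resolv-conf selects a different file. Despite its name, this is not the default policy;

- ClusterFirst: the Pod uses the DNS service configured in the cluster (kube-dns or CoreDNS) for name resolution. This is the policy applied when dnsPolicy is not set;

- ClusterFirstWithHostNet: for Pods started in host network mode (hostNetwork: true). In host network mode, all containers in the Pod otherwise use the node's /etc/resolv.conf for DNS queries; to keep using the Kubernetes DNS service in that mode, set dnsPolicy to ClusterFirstWithHostNet.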
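The interaction between dnsPolicy and host networking can be summarized as a small decision function. This is a sketch of the documented behavior rather than kubelet code; the function name and the returned labels are hypothetical.

```python
def dns_source(dns_policy="ClusterFirst", host_network=False):
    """Return where a Pod's DNS configuration comes from."""
    if dns_policy == "None":
        return "pod dnsConfig"
    if dns_policy == "Default":
        return "node resolv.conf"
    if dns_policy == "ClusterFirstWithHostNet":
        return "cluster DNS"
    if dns_policy == "ClusterFirst":
        # On the host network, ClusterFirst falls back to the node's resolver.
        return "node resolv.conf" if host_network else "cluster DNS"
    raise ValueError(f"unknown dnsPolicy: {dns_policy}")


if __name__ == "__main__":
    print(dns_source())                                              # ordinary Pod
    print(dns_source("ClusterFirst", host_network=True))             # host network Pod
    print(dns_source("ClusterFirstWithHostNet", host_network=True))  # host network, cluster DNS kept
```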

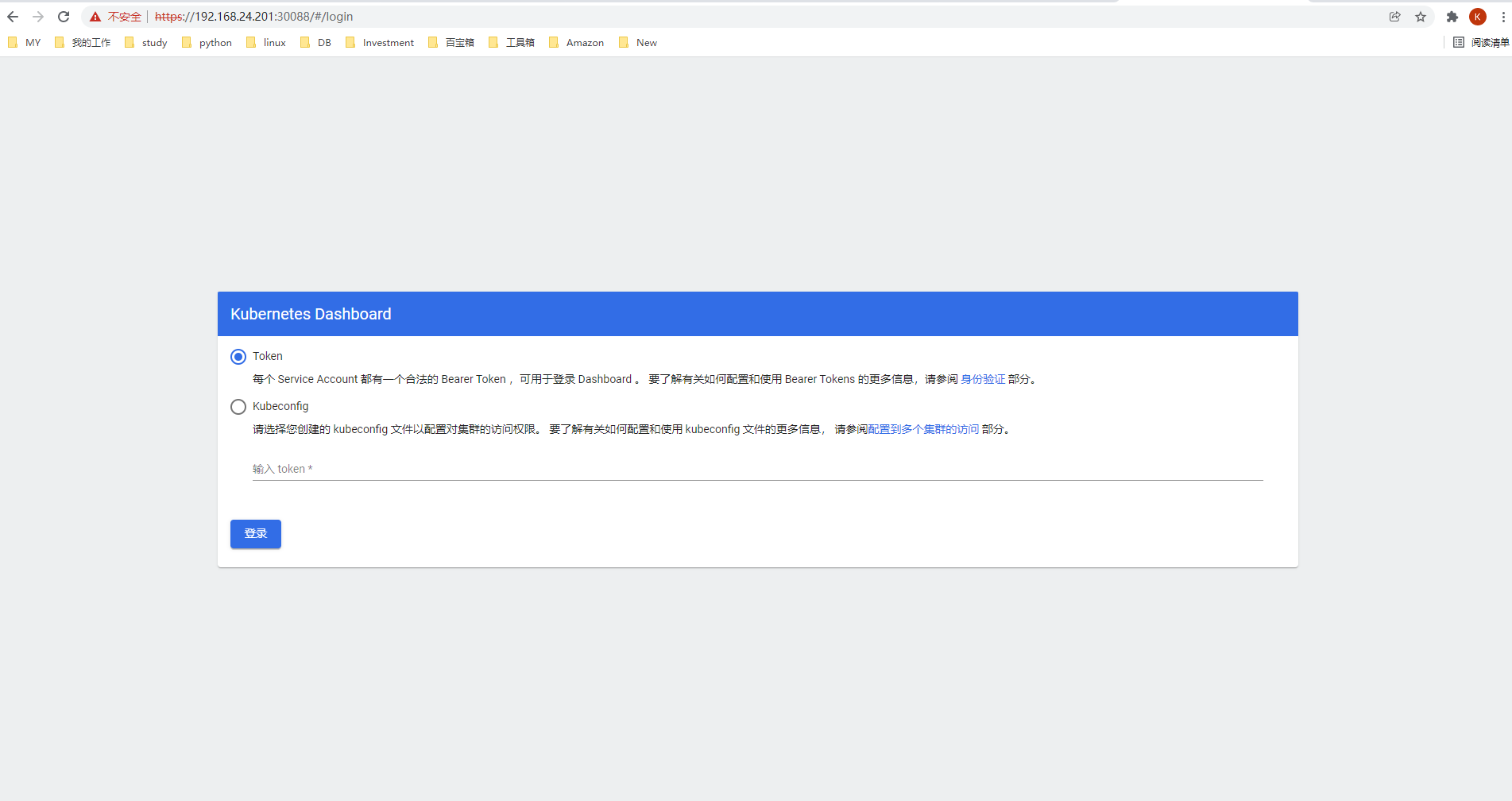

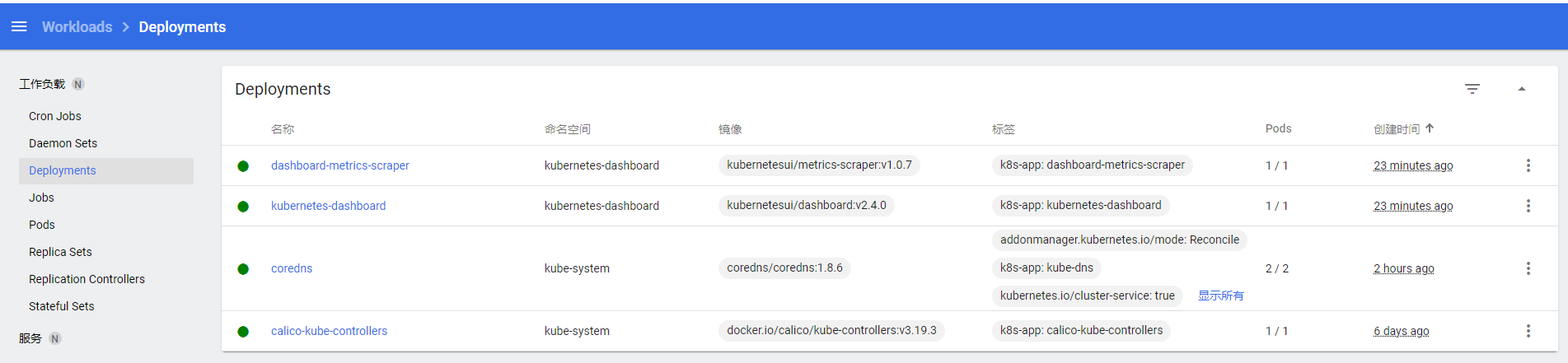

II. Using the dashboard

1. Deploying the official Dashboard

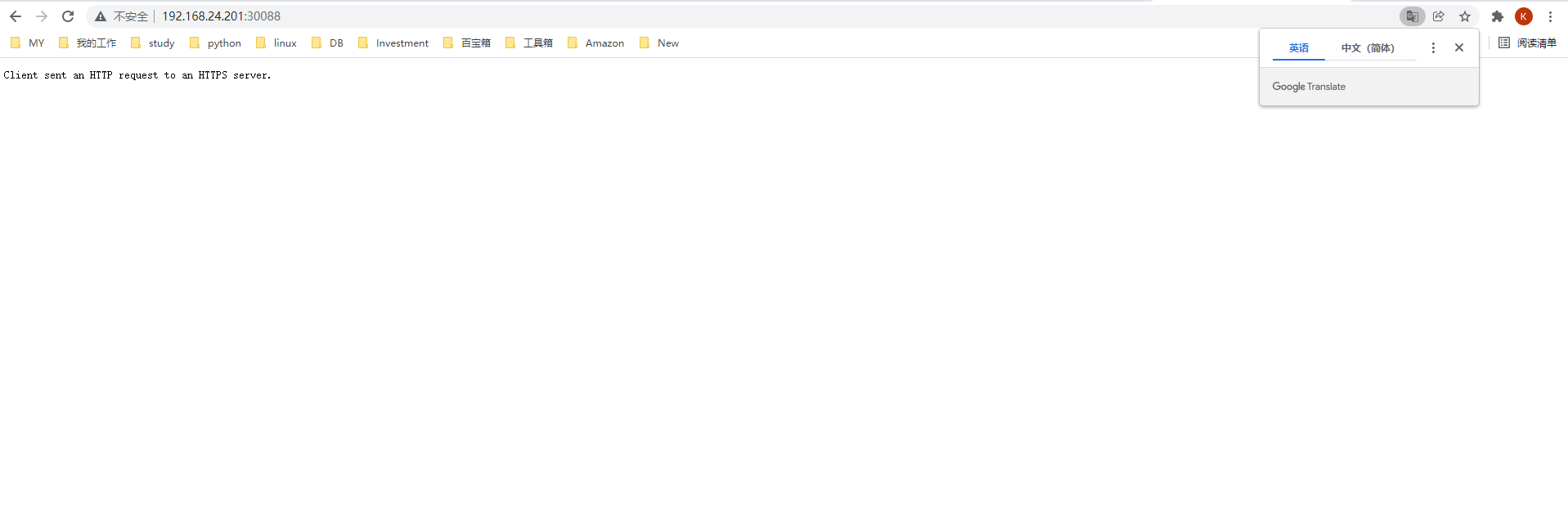

1.1 Download the official Dashboard manifest from GitHub and deploy it

root@k8s-master1:/usr/local/src# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

root@k8s-master1:/usr/local/src# mv recommended.yaml /root/dashboard-v2.4.0.yaml

root@k8s-master1:~# docker pull kubernetesui/dashboard:v2.4.0

root@k8s-master1:~# docker pull kubernetesui/metrics-scraper:v1.0.7

root@k8s-master1:~# vim dashboard-v2.4.0.yaml

## Add and modify the following (type: NodePort and nodePort: 30088 are the additions)

39 spec:

40   type: NodePort

41   ports:

42     - port: 443

43       targetPort: 8443

44       nodePort: 30088

45   selector:

46     k8s-app: kubernetes-dashboard

root@k8s-master1:~# kubectl apply -f dashboard-v2.4.0.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (4h10m ago) 6d20h

default net-test2 1/1 Running 1 (4h10m ago) 6d20h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (3h23m ago) 6d20h

kube-system calico-node-2vt85 1/1 Running 0 6d20h

kube-system calico-node-6dcbp 1/1 Running 2 (3h24m ago) 6d20h

kube-system calico-node-trnbj 1/1 Running 1 (4h10m ago) 6d20h

kube-system calico-node-vmm5c 1/1 Running 1 (4h10m ago) 6d20h

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 141m

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 78s

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 78s

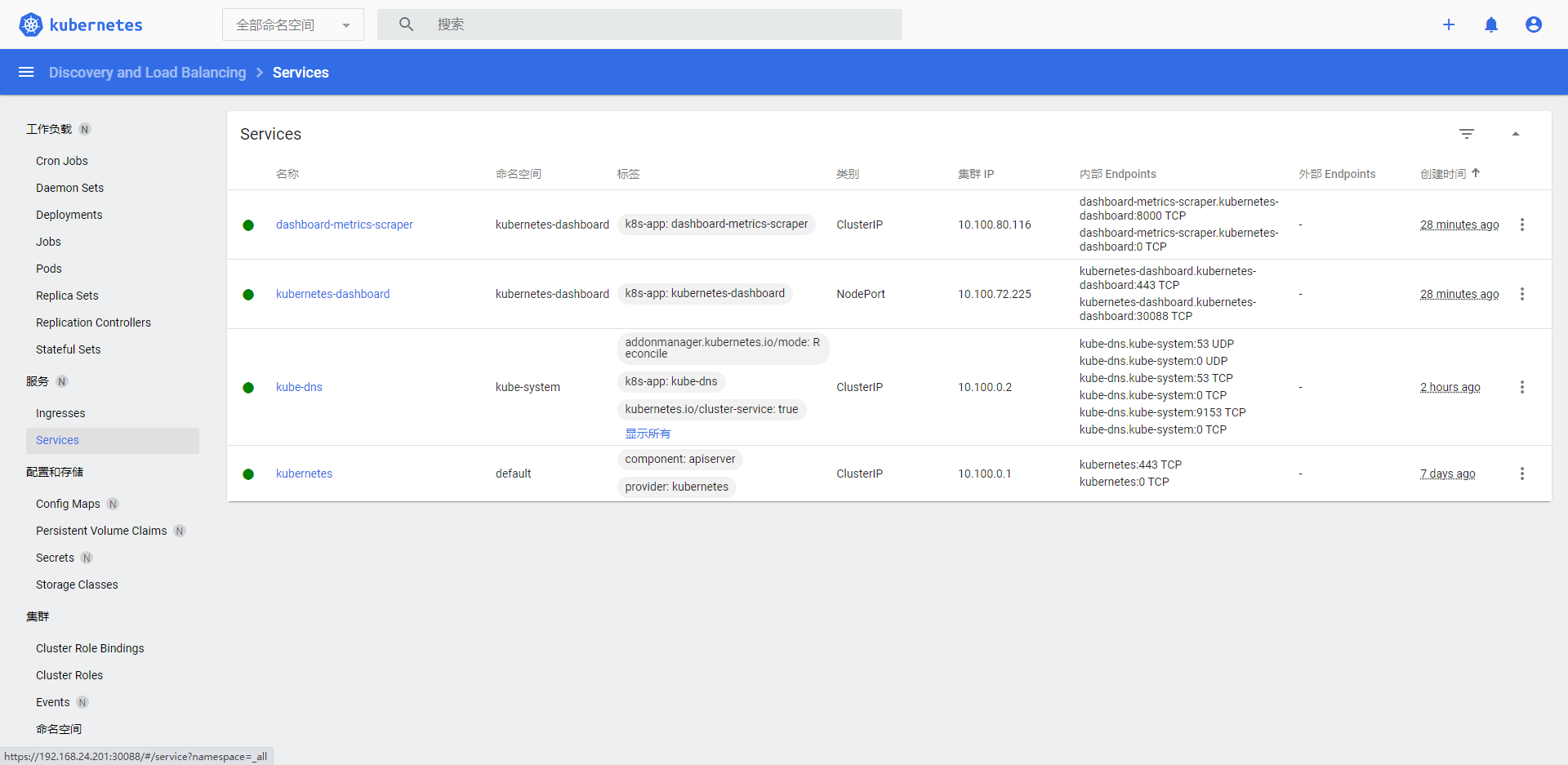

root@k8s-master1:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 7d

kube-system kube-dns ClusterIP 10.100.0.2 <none> 53/UDP,53/TCP,9153/TCP 142m

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.100.80.116 <none> 8000/TCP 105s

kubernetes-dashboard kubernetes-dashboard NodePort 10.100.72.225 <none> 443:30088/TCP 105s

1.2 Create a user and obtain its token for login

root@k8s-master1:~# vim admin-user.yaml

root@k8s-master1:~# cat admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kubernetes-dashboard

root@k8s-master1:~# kubectl apply -f admin-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

root@k8s-master1:~# kubectl get secrets -A | grep admin

kubernetes-dashboard admin-user-token-s4l95 kubernetes.io/service-account-token 3 27s

root@k8s-master1:~# kubectl describe secrets admin-user-token-s4l95 -n kubernetes-dashboard

Name: admin-user-token-s4l95

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 91ce9439-2228-47eb-af95-83acd0159ecf

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1302 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImpBeVdmWnl0b09MUllDRzVfaDRJdURobnhEM3BmWVZKSXJwcWY2WlQ5TFUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXM0bDk1Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5MWNlOTQzOS0yMjI4LTQ3ZWItYWY5NS04M2FjZDAxNTllY2YiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.JoCLzvIyQxI2hXRkYfxeUtwxDCaKciwZ2ZK4k-_3gR_9PCVk4OFCYz1dZ_qIoNYb9Rx_-vuipigADLZdFc2PrUuRdrL3TThyqfseg9uNSbdK_QHFgvEJ_PdyEAZgojwV5gmxw8pohNqjSeNVitgGfZ4lX4p54JjlrYkW5duve12foOxQBC68XsR3oAPDyCyngwYuDy9xzJAW_b-qFNf68ps0Pzz5vCi83HJI8a-culhjpyiyJ-qGBWORXSZ23dSsvnvMLkguoJiGiRoogC5sD8yQFcPUU8C3AiGPK57jal84Rl_FCxTZWbIEfrVuGLYDzk7SSdutEH9Z7I6KV4gmDA
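The token is a JSON Web Token: three base64url segments (header, claims, signature) joined by dots. Its claims can be inspected without any cluster access, which is useful for checking which service account a token belongs to. A minimal Python sketch; it decodes but does not verify the signature, the helper names are hypothetical, and the sample token is built in the code with a placeholder signature rather than copied from the cluster:

```python
import base64
import json


def decode_jwt_claims(token):
    """Decode (without verifying) the claims segment of a JWT."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    # base64url segments in a JWT omit padding; restore it before decoding.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def _b64url(obj):
    """Encode a dict the way JWT segments are encoded (helper for the demo)."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Illustrative token; the signature is a placeholder and not valid.
SAMPLE_CLAIMS = {
    "iss": "kubernetes/serviceaccount",
    "sub": "system:serviceaccount:kubernetes-dashboard:admin-user",
}
SAMPLE_TOKEN = ".".join(
    [_b64url({"alg": "RS256"}), _b64url(SAMPLE_CLAIMS), "placeholder-signature"]
)

if __name__ == "__main__":
    print(json.dumps(decode_jwt_claims(SAMPLE_TOKEN), indent=2))
```

Since anyone holding the token can read its claims and use it against the API server, a token bound to cluster-admin should be treated like a password.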

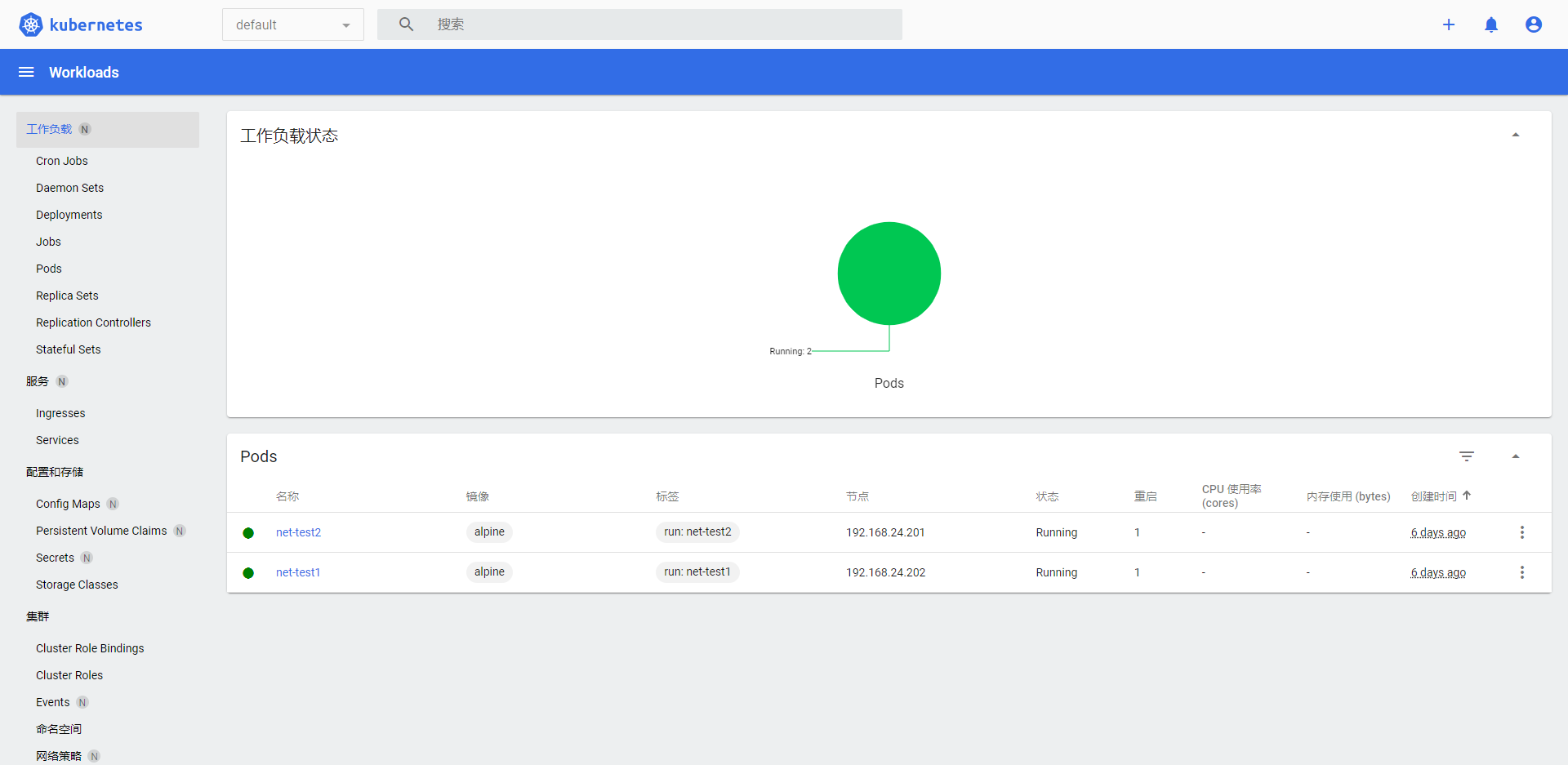

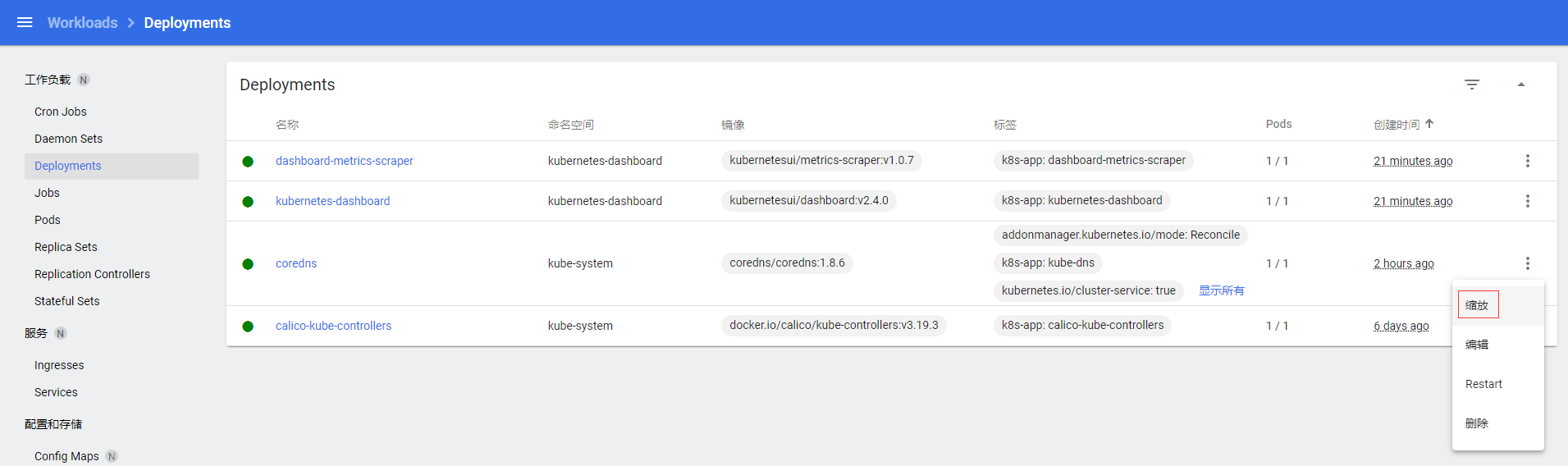

1.3 Scale Pods from the Dashboard

root@k8s-master1:~# kubectl get pod -A |grep coredns

kube-system coredns-69d84cdc49-7nk59 1/1 Running 0 47s

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 163m

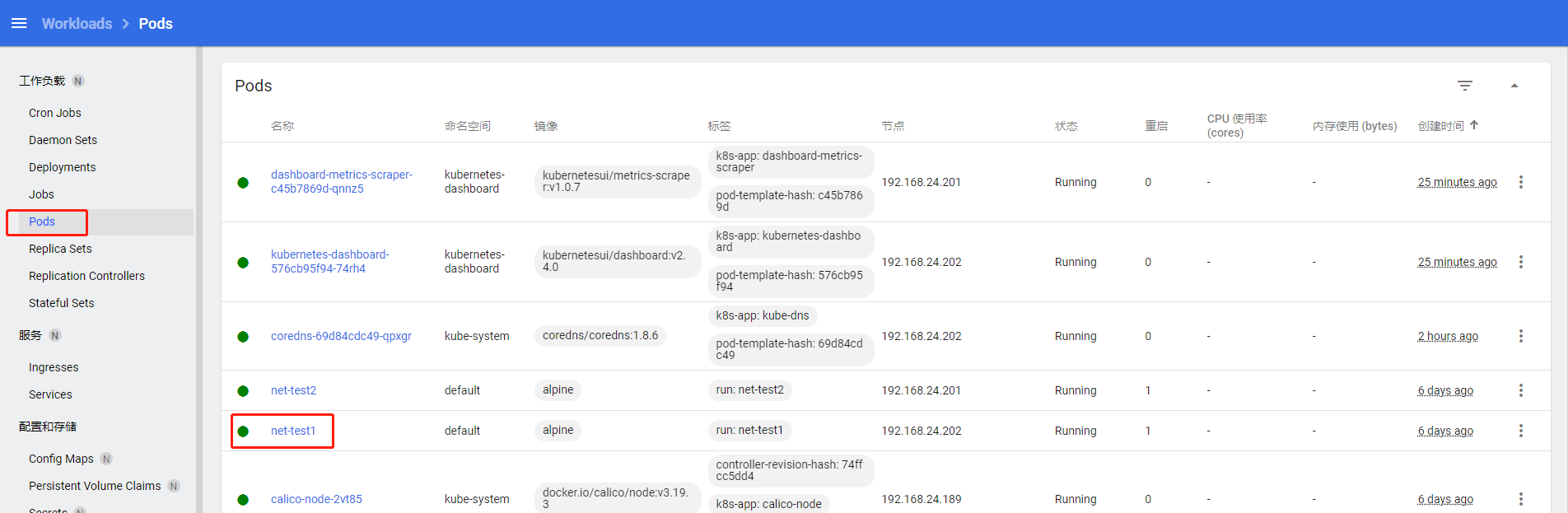

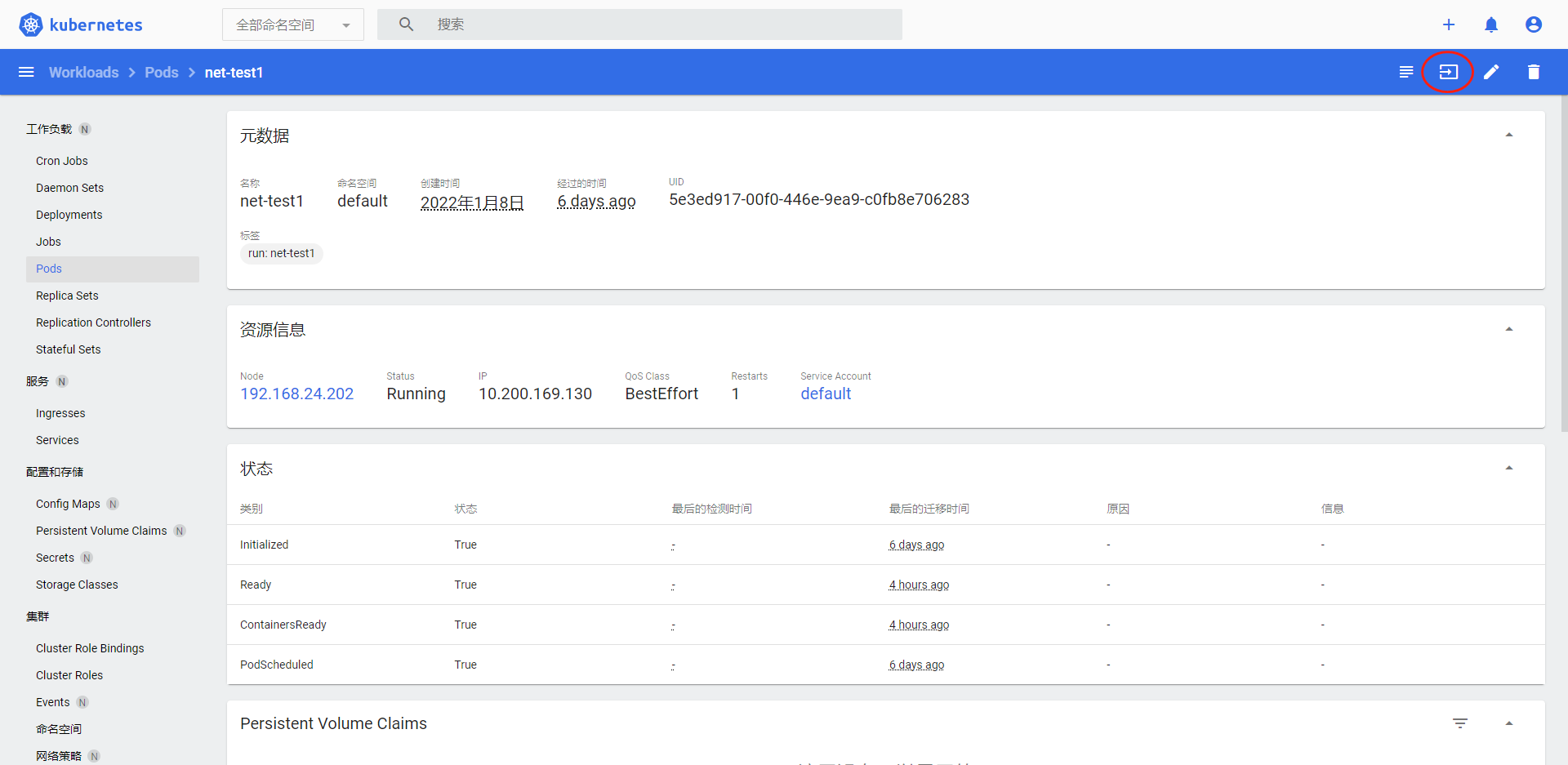

1.4 Open a shell in a Pod from the Dashboard

1.5 View Service information in the Dashboard

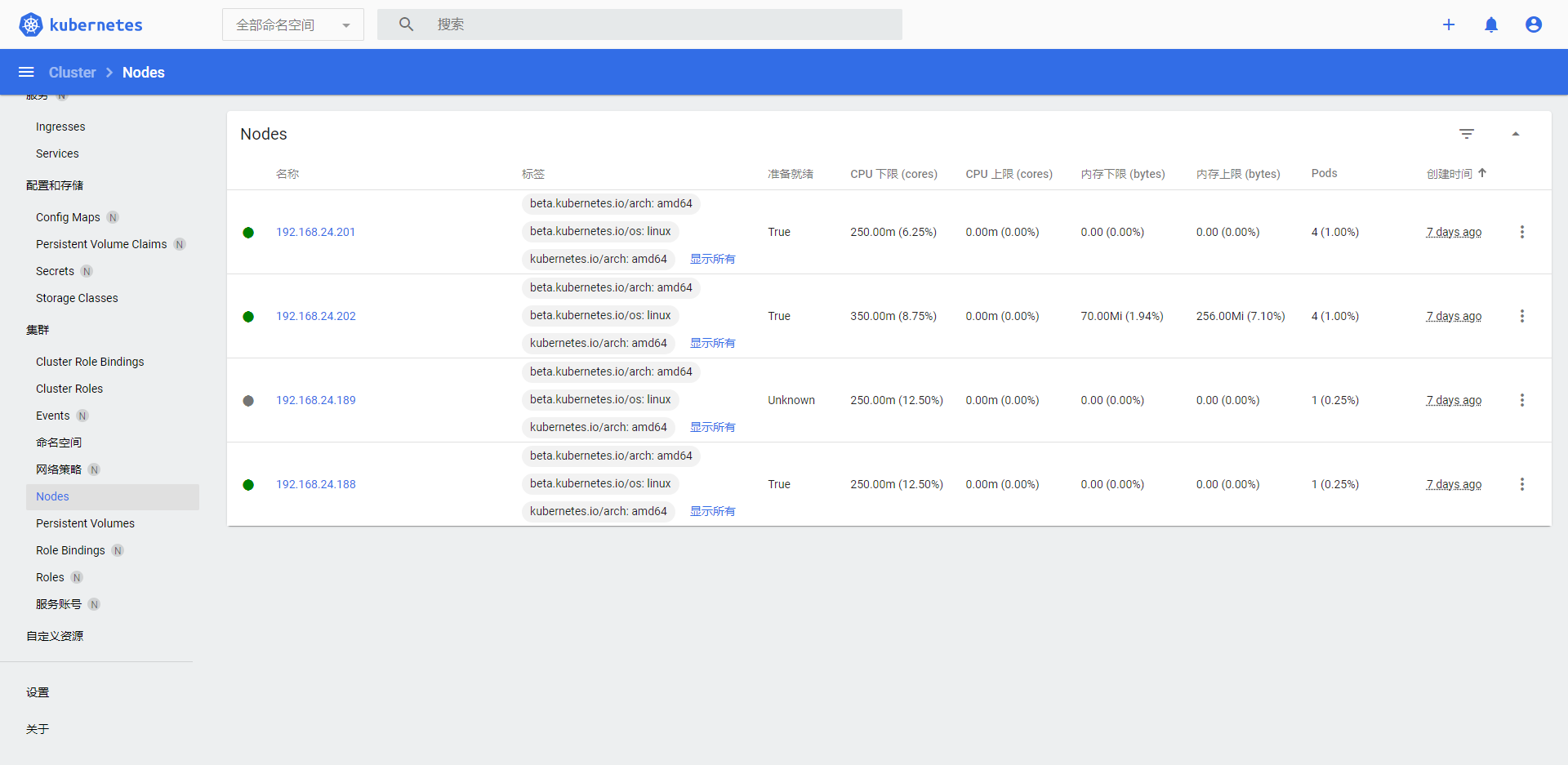

1.6 View Node information in the Dashboard

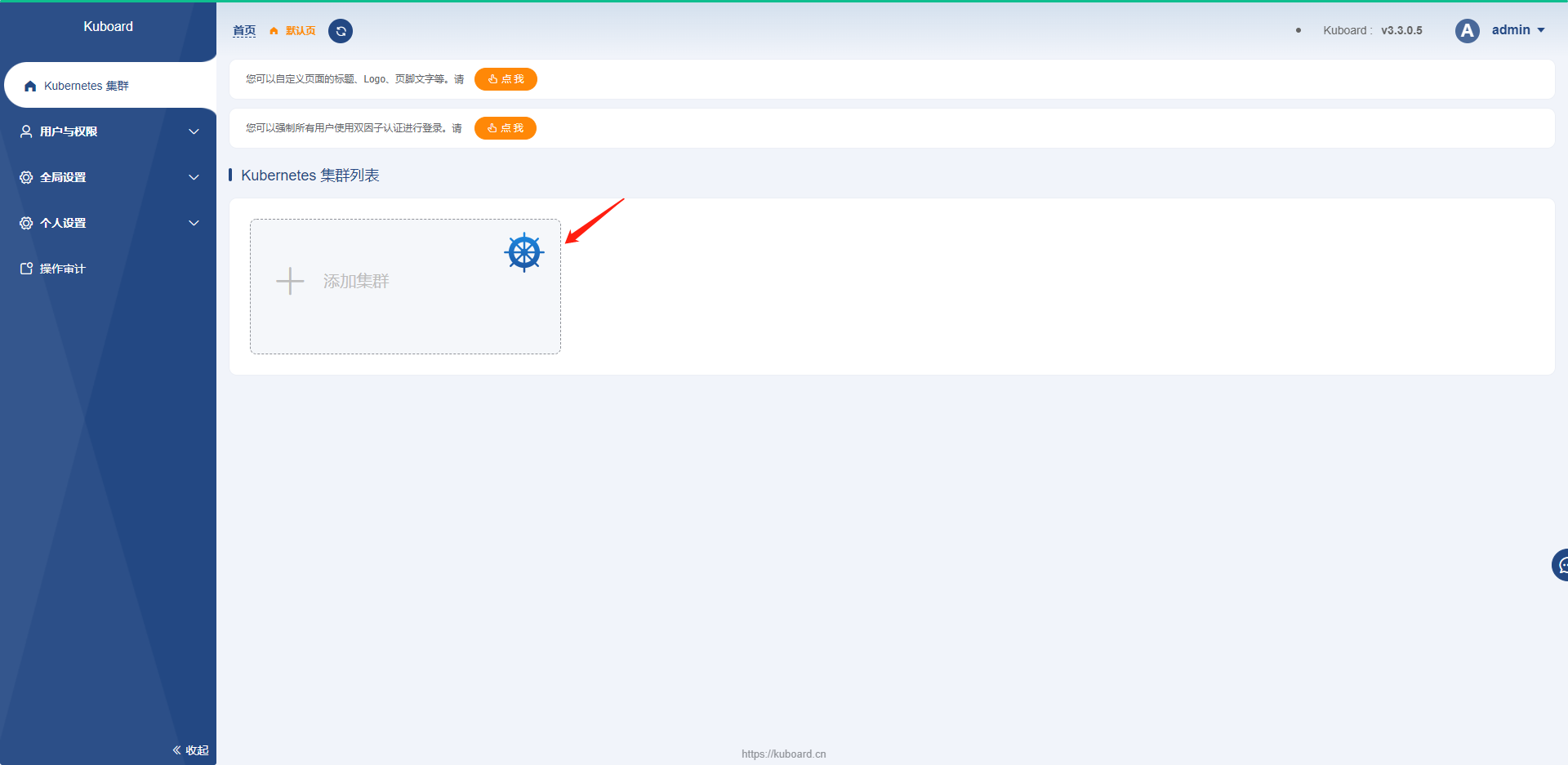

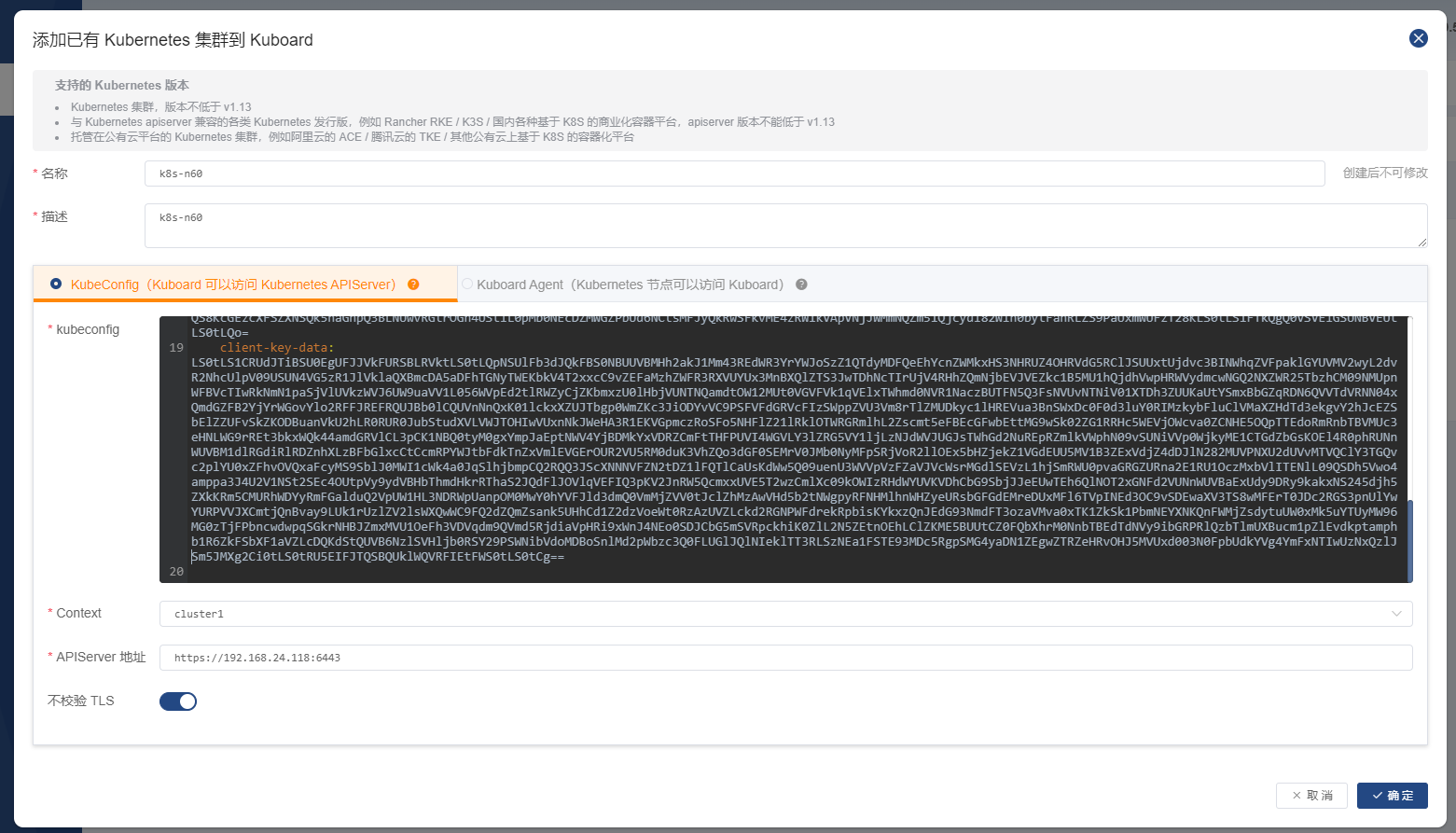

2. Deploying the third-party Kuboard

2.1 Deploy by following the installation guide on the official website

root@docker-server1:~# docker run -d \

> --restart=unless-stopped \

> --name=kuboard \

> -p 80:80/tcp \

> -p 10081:10081/tcp \

> -e KUBOARD_ENDPOINT="http://192.168.24.135:80" \

> -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \

> -v /root/kuboard-data:/data \

> swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3

2.2 Log in and configure the system. Default account: admin, password: Kuboard123

III. Getting fluent with kubectl commands

1. get

1.1 List Pods or Nodes

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (5h59m ago) 6d22h

default net-test2 1/1 Running 1 (5h59m ago) 6d22h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (5h12m ago) 6d22h

kube-system calico-node-2vt85 1/1 Running 0 6d22h

kube-system calico-node-6dcbp 1/1 Running 2 (5h13m ago) 6d22h

kube-system calico-node-trnbj 1/1 Running 1 (5h59m ago) 6d22h

kube-system calico-node-vmm5c 1/1 Running 1 (5h59m ago) 6d22h

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 4h10m

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 110m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 110m

root@k8s-master1:~# kubectl get node -A

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d2h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d2h v1.22.2

192.168.24.201 Ready node 7d2h v1.22.2

192.168.24.202 Ready node 7d2h v1.22.2

1.2 Show extended information for Pods or Nodes

root@k8s-master1:~# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 1 (6h5m ago) 6d22h 10.200.169.130 192.168.24.202 <none> <none>

default net-test2 1/1 Running 1 (6h5m ago) 6d22h 10.200.36.66 192.168.24.201 <none> <none>

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (5h18m ago) 6d22h 192.168.24.201 192.168.24.201 <none> <none>

kube-system calico-node-2vt85 1/1 Running 0 6d22h 192.168.24.189 192.168.24.189 <none> <none>

kube-system calico-node-6dcbp 1/1 Running 2 (5h19m ago) 6d22h 192.168.24.188 192.168.24.188 <none> <none>

kube-system calico-node-trnbj 1/1 Running 1 (6h5m ago) 6d22h 192.168.24.201 192.168.24.201 <none> <none>

kube-system calico-node-vmm5c 1/1 Running 1 (6h5m ago) 6d22h 192.168.24.202 192.168.24.202 <none> <none>

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 4h17m 10.200.169.131 192.168.24.202 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 116m 10.200.36.67 192.168.24.201 <none> <none>

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 116m 10.200.169.132 192.168.24.202 <none> <none>

root@k8s-master1:~# kubectl get node -A -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

192.168.24.188 Ready,SchedulingDisabled master 7d2h v1.22.2 192.168.24.188 <none> Ubuntu 20.04.3 LTS 5.4.0-94-generic docker://19.3.15

192.168.24.189 NotReady,SchedulingDisabled master 7d2h v1.22.2 192.168.24.189 <none> Ubuntu 20.04.3 LTS 5.4.0-91-generic docker://19.3.15

192.168.24.201 Ready node 7d2h v1.22.2 192.168.24.201 <none> Ubuntu 20.04.3 LTS 5.4.0-91-generic docker://19.3.15

192.168.24.202 Ready node 7d2h v1.22.2 192.168.24.202 <none> Ubuntu 20.04.3 LTS 5.4.0-91-generic docker://19.3.15

1.3 List all resources (in the current namespace)

root@k8s-master1:~# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/net-test1 1/1 Running 1 (6h6m ago) 6d22h

pod/net-test2 1/1 Running 1 (6h6m ago) 6d22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 7d2h

1.4 Show the labels of Pods or Nodes

root@k8s-master1:~# kubectl get pod -A --show-labels

NAMESPACE NAME READY STATUS RESTARTS AGE LABELS

default net-test1 1/1 Running 1 (6h8m ago) 6d22h run=net-test1

default net-test2 1/1 Running 1 (6h8m ago) 6d22h run=net-test2

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (5h22m ago) 6d22h k8s-app=calico-kube-controllers,pod-template-hash=864c65f46c

kube-system calico-node-2vt85 1/1 Running 0 6d22h controller-revision-hash=74ffcc5dd4,k8s-app=calico-node,pod-template-generation=1

kube-system calico-node-6dcbp 1/1 Running 2 (5h23m ago) 6d22h controller-revision-hash=74ffcc5dd4,k8s-app=calico-node,pod-template-generation=1

kube-system calico-node-trnbj 1/1 Running 1 (6h8m ago) 6d22h controller-revision-hash=74ffcc5dd4,k8s-app=calico-node,pod-template-generation=1

kube-system calico-node-vmm5c 1/1 Running 1 (6h8m ago) 6d22h controller-revision-hash=74ffcc5dd4,k8s-app=calico-node,pod-template-generation=1

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 4h20m k8s-app=kube-dns,pod-template-hash=69d84cdc49

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 120m k8s-app=dashboard-metrics-scraper,pod-template-hash=c45b7869d

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 120m k8s-app=kubernetes-dashboard,pod-template-hash=576cb95f94

root@k8s-master1:~# kubectl get node -A --show-labels

NAME STATUS ROLES AGE VERSION LABELS

192.168.24.188 Ready,SchedulingDisabled master 7d2h v1.22.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=192.168.24.188,kubernetes.io/os=linux,kubernetes.io/role=master

192.168.24.189 NotReady,SchedulingDisabled master 7d2h v1.22.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=192.168.24.189,kubernetes.io/os=linux,kubernetes.io/role=master

192.168.24.201 Ready node 7d2h v1.22.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=192.168.24.201,kubernetes.io/os=linux,kubernetes.io/role=node

192.168.24.202 Ready node 7d2h v1.22.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=192.168.24.202,kubernetes.io/os=linux,kubernetes.io/role=node

1.5 Show Service information

root@k8s-master1:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 7d2h

kube-system kube-dns ClusterIP 10.100.0.2 <none> 53/UDP,53/TCP,9153/TCP 4h22m

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.100.80.116 <none> 8000/TCP 121m

kubernetes-dashboard kubernetes-dashboard NodePort 10.100.72.225 <none> 443:30088/TCP 121m

1.6 List namespaces

root@k8s-master1:~# kubectl get ns

NAME STATUS AGE

default Active 7d2h

kube-node-lease Active 7d2h

kube-public Active 7d2h

kube-system Active 7d2h

kubernetes-dashboard Active 124m

2. describe

2.1 Show detailed information about a specific resource

root@k8s-master1:~# kubectl describe pod net-test1

Name: net-test1

Namespace: default

Priority: 0

Node: 192.168.24.202/192.168.24.202

Start Time: Sat, 08 Jan 2022 13:42:52 +0000

Labels: run=net-test1

Annotations: <none>

Status: Running

IP: 10.200.169.130

IPs:

IP: 10.200.169.130

Containers:

net-test1:

Container ID: docker://0300a850a7f99f5a313929b2b8bad30f3618f455b6bfa3a828b440f35cbfae94

Image: alpine

Image ID: docker-pullable://alpine@sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

Port: <none>

Host Port: <none>

Args:

sleep

500000

State: Running

Started: Sat, 15 Jan 2022 06:56:57 +0000

Last State: Terminated

Reason: Error

Exit Code: 255

Started: Sat, 08 Jan 2022 13:43:11 +0000

Finished: Sat, 15 Jan 2022 06:25:42 +0000

Ready: True

Restart Count: 1

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-clk47 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-clk47:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

3. logs

3.1 Print only the last 10 log lines of the dashboard Pod

root@k8s-master1:~# kubectl logs --tail=10 kubernetes-dashboard-576cb95f94-74rh4 -n kubernetes-dashboard

2022/01/15 11:06:05 [2022-01-15T11:06:05Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 10.200.36.64:19043:

2022/01/15 11:06:05 Getting list of namespaces

2022/01/15 11:06:06 [2022-01-15T11:06:06Z] Outcoming response to 10.200.36.64:19043 with 200 status code

2022/01/15 11:06:08 [2022-01-15T11:06:08Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 10.200.36.64:19043:

2022/01/15 11:06:08 [2022-01-15T11:06:08Z] Outcoming response to 10.200.36.64:19043 with 200 status code

2022/01/15 11:06:10 [2022-01-15T11:06:10Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 10.200.36.64:19043:

2022/01/15 11:06:10 [2022-01-15T11:06:10Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 10.200.36.64:19043:

2022/01/15 11:06:10 Getting list of namespaces

2022/01/15 11:06:10 [2022-01-15T11:06:10Z] Outcoming response to 10.200.36.64:19043 with 200 status code

2022/01/15 11:06:10 [2022-01-15T11:06:10Z] Outcoming response to 10.200.36.64:19043 with 200 status code

4. create

4.1 Create resources directly; once they exist, create cannot be run again, even after the YAML file has changed

root@k8s-master1:~# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

root@k8s-master1:~# vim coredns.yaml

142 image: coredns/coredns:1.8.6

## Change the image version

142 image: coredns/coredns:1.8.5

root@k8s-master1:~# kubectl create -f coredns.yaml

Error from server (AlreadyExists): error when creating "coredns.yaml": serviceaccounts "coredns" already exists

Error from server (AlreadyExists): error when creating "coredns.yaml": clusterroles.rbac.authorization.k8s.io "system:coredns" already exists

Error from server (AlreadyExists): error when creating "coredns.yaml": clusterrolebindings.rbac.authorization.k8s.io "system:coredns" already exists

Error from server (AlreadyExists): error when creating "coredns.yaml": configmaps "coredns" already exists

Error from server (AlreadyExists): error when creating "coredns.yaml": deployments.apps "coredns" already exists

Error from server (Invalid): error when creating "coredns.yaml": Service "kube-dns" is invalid: spec.clusterIPs: Invalid value: []string{"10.100.0.2"}: failed to allocate IP 10.100.0.2: provided IP is already allocated

4.2 Pass --save-config to create so that later changes can be rolled out with apply

root@k8s-master1:~# kubectl create -f coredns.yaml --save-config

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

root@k8s-master1:~# vim coredns.yaml

142 image: coredns/coredns:1.8.5

## Change the image version

142 image: coredns/coredns:1.8.6

root@k8s-master1:~# kubectl apply -f coredns.yaml

serviceaccount/coredns unchanged

clusterrole.rbac.authorization.k8s.io/system:coredns unchanged

clusterrolebinding.rbac.authorization.k8s.io/system:coredns unchanged

configmap/coredns unchanged

deployment.apps/coredns configured

service/kube-dns unchanged
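The difference between the two commands seen above can be modeled with a toy in-memory store. This is only an illustration of the observable behavior (created, unchanged, configured, AlreadyExists); it is not how kubectl or the API server is implemented, real apply merges changes rather than replacing the object, and every name in the sketch is hypothetical.

```python
class AlreadyExists(Exception):
    """Raised when create is called for an object that is already stored."""


class ToyCluster:
    def __init__(self):
        self.objects = {}

    def create(self, name, spec):
        """Imperative create: fails if the object already exists."""
        if name in self.objects:
            raise AlreadyExists(name)
        self.objects[name] = dict(spec)
        return "created"

    def apply(self, name, spec):
        """Declarative apply: create, update, or leave the object alone."""
        if name not in self.objects:
            self.objects[name] = dict(spec)
            return "created"
        if self.objects[name] == spec:
            return "unchanged"
        self.objects[name] = dict(spec)
        return "configured"


if __name__ == "__main__":
    cluster = ToyCluster()
    print(cluster.create("deployment/coredns", {"image": "coredns/coredns:1.8.5"}))
    print(cluster.apply("deployment/coredns", {"image": "coredns/coredns:1.8.5"}))
    print(cluster.apply("deployment/coredns", {"image": "coredns/coredns:1.8.6"}))
```

Because apply is safe to repeat, it suits manifests that are edited and reapplied over time, whereas create is a one-shot operation.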

5. apply

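5.1 Like create, apply creates resources, and it also updates them when the file changes; it is the more commonly used of the two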

root@k8s-master1:~# kubectl apply -f dashboard-v2.4.0.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

5. explain

5.1 Show the documentation for a resource

root@k8s-master1:~# kubectl explain rs

KIND: ReplicaSet

VERSION: apps/v1

DESCRIPTION:

ReplicaSet ensures that a specified number of pod replicas are running at

any given time.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <Object>

If the Labels of a ReplicaSet are empty, they are defaulted to be the same

as the Pod(s) that the ReplicaSet manages. Standard object's metadata. More

info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Spec defines the specification of the desired behavior of the ReplicaSet.

More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

status <Object>

Status is the most recently observed status of the ReplicaSet. This data

may be out of date by some window of time. Populated by the system.

Read-only. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

6. delete

6.1 Delete a Pod

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (7h54m ago) 7d

default net-test2 1/1 Running 1 (7h54m ago) 7d

default net-test3 0/1 ContainerCreating 0 9s

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (7h8m ago) 7d

kube-system calico-node-2vt85 1/1 Running 0 7d

kube-system calico-node-6dcbp 1/1 Running 2 (7h9m ago) 7d

kube-system calico-node-trnbj 1/1 Running 1 (7h54m ago) 7d

kube-system calico-node-vmm5c 1/1 Running 1 (7h54m ago) 7d

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 6h6m

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 3h46m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 3h46m

root@k8s-master1:~# kubectl delete pod net-test3

pod "net-test3" deleted

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (7h59m ago) 7d

default net-test2 1/1 Running 1 (7h59m ago) 7d

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (7h12m ago) 7d

kube-system calico-node-2vt85 1/1 Running 0 7d

kube-system calico-node-6dcbp 1/1 Running 2 (7h13m ago) 7d

kube-system calico-node-trnbj 1/1 Running 1 (7h59m ago) 7d

kube-system calico-node-vmm5c 1/1 Running 1 (7h59m ago) 7d

kube-system coredns-69d84cdc49-qpxgr 1/1 Running 0 6h11m

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 3h50m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 3h50m

6.2 Delete the resources defined in a YAML file

root@k8s-master1:~# kubectl delete -f coredns.yaml

serviceaccount "coredns" deleted

clusterrole.rbac.authorization.k8s.io "system:coredns" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:coredns" deleted

configmap "coredns" deleted

deployment.apps "coredns" deleted

service "kube-dns" deleted

7. exec

7.1 Enter a container, similar to docker exec

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 1 (8h ago) 7d

default net-test2 1/1 Running 1 (8h ago) 7d

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 2 (7h14m ago) 7d

kube-system calico-node-2vt85 1/1 Running 0 7d

kube-system calico-node-6dcbp 1/1 Running 2 (7h15m ago) 7d

kube-system calico-node-trnbj 1/1 Running 1 (8h ago) 7d

kube-system calico-node-vmm5c 1/1 Running 1 (8h ago) 7d

kube-system coredns-69d84cdc49-n6rgv 1/1 Running 0 30s

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 0 3h52m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 0 3h52m

root@k8s-master1:~# kubectl exec -it net-test1 -- sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 06:02:9A:36:1D:0E

inet addr:10.200.169.130 Bcast:10.200.169.130 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:19 errors:0 dropped:0 overruns:0 frame:0

TX packets:14 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2561 (2.5 KiB) TX bytes:1172 (1.1 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #

8. drain

8.1 Drain a Node: the node is marked unschedulable so that no new Pods are scheduled onto it, and the Pods already running on it are evicted

root@k8s-master1:~# kubectl drain 192.168.24.202

node/192.168.24.202 cordoned

DEPRECATED WARNING: Aborting the drain command in a list of nodes will be deprecated in v1.23.

The new behavior will make the drain command go through all nodes even if one or more nodes failed during the drain.

For now, users can try such experience via: --ignore-errors

error: unable to drain node "192.168.24.202", aborting command...

There are pending nodes to be drained:

192.168.24.202

cannot delete Pods not managed by ReplicationController, ReplicaSet, Job, DaemonSet or StatefulSet (use --force to override): default/net-test1

cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): kube-system/calico-node-vmm5c

cannot delete Pods with local storage (use --delete-emptydir-data to override): kubernetes-dashboard/kubernetes-dashboard-576cb95f94-74rh4

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d5h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d5h v1.22.2

192.168.24.201 Ready node 7d5h v1.22.2

192.168.24.202 Ready,SchedulingDisabled node 7d5h v1.22.2

## To make the node schedulable again, use uncordon

root@k8s-master1:~# kubectl uncordon 192.168.24.202

node/192.168.24.202 uncordoned

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d5h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d5h v1.22.2

192.168.24.201 Ready node 7d5h v1.22.2

192.168.24.202 Ready node 7d5h v1.22.2
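The drain above was aborted for three reasons, and each error line names its own override flag. Note that the node was still cordoned, which is why `uncordon` was needed afterwards. The sketch below assembles a drain command from those flags; `drain_cmd` is a hypothetical helper name and it only prints the command.

```shell
#!/bin/bash
# drain_cmd NODE [force]
# Hypothetical helper: builds a drain command from the override flags that
# appear in the error output above and prints it for review.
drain_cmd() {
  local node="$1" force="${2:-}"
  local cmd="kubectl drain $node --ignore-daemonsets --delete-emptydir-data"
  # --force also deletes bare Pods such as default/net-test1.
  # No controller manages them, so nothing recreates them afterwards.
  if [ "$force" = "force" ]; then
    cmd="$cmd --force"
  fi
  printf '%s\n' "$cmd"
}

drain_cmd 192.168.24.202 force
```

Leaving out `force` keeps unmanaged Pods safe, at the cost of the drain stopping on them as it did above.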

IV. Using the etcd client; backing up and restoring data

1. Back up etcd data

root@k8s-etcd1:~# mkdir /data/etcd/ -p

root@k8s-etcd1:~# etcdctl snapshot save /data/etcd/etcd-2022-1-16.bak

{"level":"info","ts":1642303409.427254,"caller":"snapshot/v3_snapshot.go:68","msg":"created temporary db file","path":"/data/etcd/etcd-2022-1-16.bak.part"}

{"level":"info","ts":1642303409.4497788,"logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1642303409.4498763,"caller":"snapshot/v3_snapshot.go:76","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":1642303409.5112865,"logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":1642303409.5836961,"caller":"snapshot/v3_snapshot.go:91","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"3.5 MB","took":"now"}

{"level":"info","ts":1642303409.5838625,"caller":"snapshot/v3_snapshot.go:100","msg":"saved","path":"/data/etcd/etcd-2022-1-16.bak"}

Snapshot saved at /data/etcd/etcd-2022-1-16.bak

root@k8s-etcd1:~# ll /data/etcd/ -h

total 3.4M

drwxr-xr-x 2 root root 4.0K Jan 16 03:23 ./

drwxr-xr-x 4 root root 4.0K Jan 16 03:23 ../

-rw------- 1 root root 3.4M Jan 16 03:23 etcd-2022-1-16.bak

2. Restore etcd data

root@k8s-etcd1:~# mkdir /opt/etcd-dir/ -p

root@k8s-etcd1:~# etcdctl snapshot restore /data/etcd/etcd-2022-1-16.bak --data-dir=/opt/etcd-dir/

Deprecated: Use `etcdutl snapshot restore` instead.

2022-01-16T03:27:02Z info snapshot/v3_snapshot.go:251 restoring snapshot {"path": "/data/etcd/etcd-2022-1-16.bak", "wal-dir": "/opt/etcd-dir/member/wal", "data-dir": "/opt/etcd-dir/", "snap-dir": "/opt/etcd-dir/member/snap", "stack": "go.etcd.io/etcd/etcdutl/v3/snapshot.(*v3Manager).Restore\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdutl/snapshot/v3_snapshot.go:257\ngo.etcd.io/etcd/etcdutl/v3/etcdutl.SnapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdutl/etcdutl/snapshot_command.go:147\ngo.etcd.io/etcd/etcdctl/v3/ctlv3/command.snapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdctl/ctlv3/command/snapshot_command.go:128\ngithub.com/spf13/cobra.(*Command).execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:856\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:960\ngithub.com/spf13/cobra.(*Command).Execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:897\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.Start\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdctl/ctlv3/ctl.go:107\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.MustStart\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdctl/ctlv3/ctl.go:111\nmain.main\n\t/tmp/etcd-release-3.5.1/etcd/release/etcd/etcdctl/main.go:59\nruntime.main\n\t/home/remote/sbatsche/.gvm/gos/go1.16.3/src/runtime/proc.go:225"}

2022-01-16T03:27:02Z info membership/store.go:141 Trimming membership information from the backend...

2022-01-16T03:27:02Z info membership/cluster.go:421 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"]}

2022-01-16T03:27:02Z info snapshot/v3_snapshot.go:272 restored snapshot {"path": "/data/etcd/etcd-2022-1-16.bak", "wal-dir": "/opt/etcd-dir/member/wal", "data-dir": "/opt/etcd-dir/", "snap-dir": "/opt/etcd-dir/member/snap"}

root@k8s-etcd1:~# ll /opt/etcd-dir/

total 12

drwxr-xr-x 3 root root 4096 Jan 16 03:27 ./

drwxr-xr-x 4 root root 4096 Jan 16 03:26 ../

drwx------ 4 root root 4096 Jan 16 03:27 member/
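The log above marks `etcdctl snapshot restore` as deprecated in favour of `etcdutl snapshot restore`, and shows the restored membership defaulting to a single peer at `http://localhost:2380`. When the restored directory is meant for one member of a multi-node cluster, the restore usually also needs the member name and peer URLs. The following is a sketch only: the member names `etcd1`/`etcd2`, the token `etcd-cluster-0` and the `https` peer URLs on port 2380 are assumptions, not values taken from this cluster.

```shell
#!/bin/bash
# restore_cmd NAME IP SNAPSHOT DATA_DIR INITIAL_CLUSTER
# Hypothetical helper: prints an `etcdutl snapshot restore` command for one
# member. Member name, cluster token and peer URLs are assumptions.
restore_cmd() {
  local name="$1" ip="$2" snapshot="$3" data_dir="$4" cluster="$5"
  printf 'etcdutl snapshot restore %s --data-dir=%s --name=%s --initial-cluster=%s --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://%s:2380\n' \
    "$snapshot" "$data_dir" "$name" "$cluster" "$ip"
}

CLUSTER="etcd1=https://192.168.24.190:2380,etcd2=https://192.168.24.191:2380"
restore_cmd etcd1 192.168.24.190 /data/etcd/etcd-2022-1-16.bak /opt/etcd-dir/ "$CLUSTER"
```

Each member would be restored from the same snapshot file with its own name and IP.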

3. Automate etcd backups with a script

root@k8s-etcd1:~# vim etcd-bak.sh

root@k8s-etcd1:~# cat etcd-bak.sh

#!/bin/bash

source /etc/profile

DATE=`date +%Y-%m-%d_%H-%M-%S`

ETCDCTL_API=3 /usr/bin/etcdctl snapshot save /data/etcd/etcd-snapshot-${DATE}.db

root@k8s-etcd1:~# bash etcd-bak.sh

{"level":"info","ts":1642303834.9888933,"caller":"snapshot/v3_snapshot.go:68","msg":"created temporary db file","path":"/data/etcd/etcd-snapshot-2022-01-16_03-30-34.db.part"}

{"level":"info","ts":1642303834.991853,"logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1642303834.991902,"caller":"snapshot/v3_snapshot.go:76","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":1642303835.0324948,"logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":1642303835.0389755,"caller":"snapshot/v3_snapshot.go:91","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"3.5 MB","took":"now"}

{"level":"info","ts":1642303835.039144,"caller":"snapshot/v3_snapshot.go:100","msg":"saved","path":"/data/etcd/etcd-snapshot-2022-01-16_03-30-34.db"}

Snapshot saved at /data/etcd/etcd-snapshot-2022-01-16_03-30-34.db

root@k8s-etcd1:~# ll /data/etcd/ -h

total 3.4M

drwxr-xr-x 2 root root 4.0K Jan 16 03:30 ./

drwxr-xr-x 4 root root 4.0K Jan 16 03:23 ../

-rw------- 1 root root 3.4M Jan 16 03:30 etcd-snapshot-2022-01-16_03-30-34.db
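Because the script writes a new timestamped file on every run, the backup directory grows without bound. A minimal retention sketch follows. The helper name `prune_snapshots`, the keep count and the cron schedule are assumptions added for illustration.

```shell
#!/bin/bash
# prune_snapshots DIR KEEP
# Hypothetical helper: deletes all but the newest KEEP snapshot files.
# It relies on the sortable timestamp embedded in the file names, so a
# reverse lexical sort lists the newest file first.
prune_snapshots() {
  local dir="$1" keep="$2"
  ls -1 "$dir"/etcd-snapshot-*.db 2>/dev/null |
    sort -r |
    tail -n +"$((keep + 1))" |
    while IFS= read -r old; do
      rm -f -- "$old"
    done
}

# Example: keep the three newest snapshots in the directory used above.
# prune_snapshots /data/etcd 3
#
# Example crontab entry (assumed schedule: every day at 02:00):
# 0 2 * * * /bin/bash /root/etcd-bak.sh
```

Calling `prune_snapshots` at the end of `etcd-bak.sh` would keep backup and clean-up in one scheduled job.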

4. Back up and restore the etcd cluster with ezctl

4.1 Back up the etcd cluster with ezctl

root@k8s-master1:~# cd /etc/kubeasz/

root@k8s-master1:/etc/kubeasz# ll

total 124

drwxrwxr-x 12 root root 4096 Jan 8 07:49 ./

drwxr-xr-x 105 root root 4096 Jan 15 10:18 ../

-rw-rw-r-- 1 root root 20304 Jan 5 12:19 ansible.cfg

drwxr-xr-x 3 root root 4096 Jan 8 07:26 bin/

drwxr-xr-x 3 root root 4096 Jan 8 07:49 clusters/

drwxrwxr-x 8 root root 4096 Jan 5 12:28 docs/

drwxr-xr-x 2 root root 4096 Jan 8 07:43 down/

drwxrwxr-x 2 root root 4096 Jan 5 12:28 example/

-rwxrwxr-x 1 root root 24716 Jan 5 12:19 ezctl*

-rwxrwxr-x 1 root root 15350 Jan 5 12:19 ezdown*

-rw-rw-r-- 1 root root 301 Jan 5 12:19 .gitignore

drwxrwxr-x 10 root root 4096 Jan 5 12:28 manifests/

drwxrwxr-x 2 root root 4096 Jan 5 12:28 pics/

drwxrwxr-x 2 root root 4096 Jan 8 08:31 playbooks/

-rw-rw-r-- 1 root root 6137 Jan 5 12:19 README.md

drwxrwxr-x 22 root root 4096 Jan 5 12:28 roles/

drwxrwxr-x 2 root root 4096 Jan 5 12:28 tools/

root@k8s-master1:/etc/kubeasz# ll clusters/

total 12

drwxr-xr-x 3 root root 4096 Jan 8 07:49 ./

drwxrwxr-x 12 root root 4096 Jan 8 07:49 ../

drwxr-xr-x 5 root root 4096 Jan 8 08:32 k8s-cluster1/

root@k8s-master1:/etc/kubeasz# ./ezctl backup k8s-cluster1

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/94.backup.yml

2022-01-16 04:00:31 INFO cluster:k8s-cluster1 backup begins in 5s, press any key to abort:

PLAY [localhost] ************************************************************************************************

TASK [Gathering Facts] ******************************************************************************************

ok: [localhost]

TASK [set NODE_IPS of the etcd cluster] *************************************************************************

ok: [localhost]

TASK [get etcd cluster status] **********************************************************************************

changed: [localhost]

TASK [debug] ****************************************************************************************************

ok: [localhost] => {

"ETCD_CLUSTER_STATUS": {

"changed": true,

"cmd": "for ip in 192.168.24.190 192.168.24.191 ;do ETCDCTL_API=3 /etc/kubeasz/bin/etcdctl --endpoints=https://\"$ip\":2379 --cacert=/etc/kubeasz/clusters/k8s-cluster1/ssl/ca.pem --cert=/etc/kubeasz/clusters/k8s-cluster1/ssl/etcd.pem --key=/etc/kubeasz/clusters/k8s-cluster1/ssl/etcd-key.pem endpoint health; done",

"delta": "0:00:00.809782",

"end": "2022-01-16 04:00:45.973653",

"failed": false,

"rc": 0,

"start": "2022-01-16 04:00:45.163871",

"stderr": "",

"stderr_lines": [],

"stdout": "https://192.168.24.190:2379 is healthy: successfully committed proposal: took = 125.125314ms\nhttps://192.168.24.191:2379 is healthy: successfully committed proposal: took = 33.908457ms",

"stdout_lines": [

"https://192.168.24.190:2379 is healthy: successfully committed proposal: took = 125.125314ms",

"https://192.168.24.191:2379 is healthy: successfully committed proposal: took = 33.908457ms"

]

}

}

TASK [get a running ectd node] **********************************************************************************

changed: [localhost]

TASK [debug] ****************************************************************************************************

ok: [localhost] => {

"RUNNING_NODE.stdout": "192.168.24.190"

}

TASK [get current time] *****************************************************************************************

changed: [localhost]

TASK [make a backup on the etcd node] ***************************************************************************

changed: [localhost -> 192.168.24.190]

TASK [fetch the backup data] ************************************************************************************

changed: [localhost -> 192.168.24.190]

TASK [update the latest backup] *********************************************************************************

changed: [localhost]

PLAY RECAP ******************************************************************************************************

localhost : ok=10 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

root@k8s-master1:/etc/kubeasz# ll clusters/k8s-cluster1/backup/

total 6928

drwxr-xr-x 2 root root 4096 Jan 16 04:00 ./

drwxr-xr-x 5 root root 4096 Jan 8 08:32 ../

-rw------- 1 root root 3538976 Jan 16 04:00 snapshot_202201160400.db

-rw------- 1 root root 3538976 Jan 16 04:00 snapshot.db
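The listing shows two files of identical size, and the playbook's last task is named "update the latest backup". That suggests `snapshot.db` is a copy of the newest timestamped snapshot. A small sketch to confirm this before relying on it for a restore; `latest_backup_in_sync` is a hypothetical helper name.

```shell
#!/bin/bash
# latest_backup_in_sync DIR
# Hypothetical helper: succeeds when snapshot.db is byte-identical to the
# newest snapshot_<timestamp>.db file in DIR.
latest_backup_in_sync() {
  local dir="$1" newest
  # The timestamp in the name sorts lexically, so the last entry is the newest.
  newest="$(ls -1 "$dir"/snapshot_*.db 2>/dev/null | sort | tail -n 1)"
  [ -n "$newest" ] && cmp -s "$newest" "$dir/snapshot.db"
}

# Example:
# latest_backup_in_sync /etc/kubeasz/clusters/k8s-cluster1/backup && echo "in sync"
```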

4.2 Restore the etcd cluster with ezctl

root@k8s-master1:/etc/kubeasz# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (61m ago) 7d14h

default net-test2 1/1 Running 2 (61m ago) 7d14h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 3 (61m ago) 7d14h

kube-system calico-node-2vt85 1/1 Running 0 7d14h

kube-system calico-node-6dcbp 1/1 Running 3 (13h ago) 7d14h

kube-system calico-node-trnbj 1/1 Running 2 (61m ago) 7d14h

kube-system calico-node-vmm5c 1/1 Running 2 (61m ago) 7d14h

kube-system coredns-69d84cdc49-cvxhz 1/1 Running 1 (61m ago) 13h

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 1 (61m ago) 17h

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 1 (61m ago) 17h

root@k8s-master1:/etc/kubeasz# kubectl delete pod net-test2

pod "net-test2" deleted

root@k8s-master1:/etc/kubeasz# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (62m ago) 7d14h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 3 (62m ago) 7d14h

kube-system calico-node-2vt85 1/1 Running 0 7d14h

kube-system calico-node-6dcbp 1/1 Running 3 (13h ago) 7d14h

kube-system calico-node-trnbj 1/1 Running 2 (62m ago) 7d14h

kube-system calico-node-vmm5c 1/1 Running 2 (62m ago) 7d14h

kube-system coredns-69d84cdc49-cvxhz 1/1 Running 1 (62m ago) 13h

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 1 (62m ago) 17h

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 1 (62m ago) 17h

root@k8s-master1:/etc/kubeasz# ./ezctl restore k8s-cluster1

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/95.restore.yml

2022-01-16 04:08:15 INFO cluster:k8s-cluster1 restore begins in 5s, press any key to abort:

PLAY [kube_master] **********************************************************************************************

TASK [Gathering Facts] ******************************************************************************************

ok: [192.168.24.188]

[WARNING]: Unhandled error in Python interpreter discovery for host 192.168.24.189: Failed to connect to the

host via ssh: ssh: connect to host 192.168.24.189 port 22: No route to host

fatal: [192.168.24.189]: UNREACHABLE! => {"changed": false, "msg": "Data could not be sent to remote host \"192.168.24.189\". Make sure this host can be reached over ssh: ssh: connect to host 192.168.24.189 port 22: No route to host\r\n", "unreachable": true}

TASK [stopping kube_master services] ****************************************************************************

changed: [192.168.24.188] => (item=kube-apiserver)

changed: [192.168.24.188] => (item=kube-controller-manager)

changed: [192.168.24.188] => (item=kube-scheduler)

PLAY [kube_master,kube_node] ************************************************************************************

TASK [Gathering Facts] ******************************************************************************************

ok: [192.168.24.201]

ok: [192.168.24.202]

TASK [stopping kube_node services] ******************************************************************************

changed: [192.168.24.202] => (item=kubelet)

changed: [192.168.24.201] => (item=kubelet)

changed: [192.168.24.188] => (item=kubelet)

changed: [192.168.24.202] => (item=kube-proxy)

changed: [192.168.24.201] => (item=kube-proxy)

changed: [192.168.24.188] => (item=kube-proxy)

PLAY [etcd] *****************************************************************************************************

TASK [Gathering Facts] ******************************************************************************************

ok: [192.168.24.190]

ok: [192.168.24.191]

TASK [cluster-restore : 停止ectd 服务] ******************************************************************************

changed: [192.168.24.191]

changed: [192.168.24.190]

TASK [cluster-restore : 清除etcd 数据目录] ****************************************************************************

changed: [192.168.24.191]

changed: [192.168.24.190]

TASK [cluster-restore : 生成备份目录] *********************************************************************************

ok: [192.168.24.190]

changed: [192.168.24.191]

TASK [cluster-restore : 准备指定的备份etcd 数据] *************************************************************************

changed: [192.168.24.190]

changed: [192.168.24.191]

TASK [cluster-restore : 清理上次备份恢复数据] *****************************************************************************

ok: [192.168.24.190]

ok: [192.168.24.191]

TASK [cluster-restore : etcd 数据恢复] ******************************************************************************

changed: [192.168.24.191]

changed: [192.168.24.190]

TASK [cluster-restore : 恢复数据至etcd 数据目录] *************************************************************************

changed: [192.168.24.190]

changed: [192.168.24.191]

TASK [cluster-restore : 重启etcd 服务] ******************************************************************************

changed: [192.168.24.191]

changed: [192.168.24.190]

TASK [cluster-restore : 以轮询的方式等待服务同步完成] *************************************************************************

changed: [192.168.24.190]

changed: [192.168.24.191]

PLAY [kube_master] **********************************************************************************************

TASK [starting kube_master services] ****************************************************************************

changed: [192.168.24.188] => (item=kube-apiserver)

changed: [192.168.24.188] => (item=kube-controller-manager)

changed: [192.168.24.188] => (item=kube-scheduler)

PLAY [kube_master,kube_node] ************************************************************************************

TASK [starting kube_node services] ******************************************************************************

changed: [192.168.24.201] => (item=kubelet)

changed: [192.168.24.202] => (item=kubelet)

changed: [192.168.24.188] => (item=kubelet)

changed: [192.168.24.201] => (item=kube-proxy)

changed: [192.168.24.202] => (item=kube-proxy)

changed: [192.168.24.188] => (item=kube-proxy)

PLAY RECAP ******************************************************************************************************

192.168.24.188 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.24.189 : ok=0 changed=0 unreachable=1 failed=0 skipped=0 rescued=0 ignored=0

192.168.24.190 : ok=10 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.24.191 : ok=10 changed=8 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.24.201 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.24.202 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

root@k8s-master1:/etc/kubeasz# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (67m ago) 7d14h

default net-test2 1/1 Running 0 7d14h

kube-system calico-kube-controllers-864c65f46c-7gcvt 1/1 Running 4 (108s ago) 7d14h

kube-system calico-node-2vt85 1/1 Running 0 7d14h

kube-system calico-node-6dcbp 1/1 Running 3 (13h ago) 7d14h

kube-system calico-node-trnbj 1/1 Running 2 (67m ago) 7d14h

kube-system calico-node-vmm5c 1/1 Running 2 (67m ago) 7d14h

kube-system coredns-69d84cdc49-cvxhz 1/1 Running 1 (67m ago) 13h

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-qnnz5 1/1 Running 1 (67m ago) 17h

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 2 (114s ago) 17h

V. Kubernetes cluster maintenance

1. Adding and removing a master

1.1 Add a master

root@k8s-master1:/etc/kubeasz# ./ezctl add-master k8s-cluster1 192.168.24.201

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d19h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d19h v1.22.2

192.168.24.201 Ready master 7d19h v1.22.2

192.168.24.202 Ready node 7d19h v1.22.2

root@k8s-node2:~# cat /etc/kube-lb/conf/kube-lb.conf

user root;

worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {

worker_connections 3000;

}

stream {

upstream backend {

server 192.168.24.201:6443 max_fails=2 fail_timeout=3s;

server 192.168.24.188:6443 max_fails=2 fail_timeout=3s;

server 192.168.24.189:6443 max_fails=2 fail_timeout=3s;

}

server {

listen 127.0.0.1:6443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

1.2 Remove a master

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d20h v1.22.2

192.168.24.201 Ready master 7d20h v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

root@k8s-master1:/etc/kubeasz# ./ezctl del-master k8s-cluster1 192.168.24.201

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d20h v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

2. Adding and removing a node

2.1 Add a node

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d19h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d19h v1.22.2

192.168.24.201 Ready master 7d19h v1.22.2

192.168.24.202 Ready node 7d19h v1.22.2

root@k8s-master1:/etc/kubeasz# ssh-copy-id 192.168.24.203

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.24.203's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.24.203'"

and check to make sure that only the key(s) you wanted were added.

root@k8s-master1:/etc/kubeasz# ./ezctl add-node k8s-cluster1 192.168.24.203

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d20h v1.22.2

192.168.24.201 Ready master 7d19h v1.22.2

192.168.24.202 Ready node 7d19h v1.22.2

192.168.24.203 Ready node 4m34s v1.22.2

2.2 Remove a node

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d20h v1.22.2

192.168.24.201 Ready master 7d19h v1.22.2

192.168.24.202 Ready node 7d19h v1.22.2

192.168.24.203 Ready node 4m34s v1.22.2

root@k8s-master1:/etc/kubeasz# ./ezctl del-node k8s-cluster1 192.168.24.203

root@k8s-master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 NotReady,SchedulingDisabled master 7d20h v1.22.2

192.168.24.201 Ready master 7d20h v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

3. Upgrade the masters

## Download the package for the target version in advance

root@k8s-master1:/usr/local/src/kubernetes/server/bin# ll

total 1095348

drwxr-xr-x 2 root root 4096 Dec 16 08:50 ./

drwxr-xr-x 3 root root 4096 Dec 16 08:54 ../

-rwxr-xr-x 1 root root 53006336 Dec 16 08:50 apiextensions-apiserver*

-rwxr-xr-x 1 root root 45854720 Dec 16 08:50 kubeadm*

-rwxr-xr-x 1 root root 50827264 Dec 16 08:50 kube-aggregator*

-rwxr-xr-x 1 root root 124809216 Dec 16 08:50 kube-apiserver*

-rw-r--r-- 1 root root 8 Dec 16 08:48 kube-apiserver.docker_tag

-rw------- 1 root root 129590272 Dec 16 08:48 kube-apiserver.tar

-rwxr-xr-x 1 root root 118497280 Dec 16 08:50 kube-controller-manager*

-rw-r--r-- 1 root root 8 Dec 16 08:48 kube-controller-manager.docker_tag

-rw------- 1 root root 123278848 Dec 16 08:48 kube-controller-manager.tar

-rwxr-xr-x 1 root root 46936064 Dec 16 08:50 kubectl*

-rwxr-xr-x 1 root root 53911000 Dec 16 08:50 kubectl-convert*

-rwxr-xr-x 1 root root 121213080 Dec 16 08:50 kubelet*

-rwxr-xr-x 1 root root 43479040 Dec 16 08:50 kube-proxy*

-rw-r--r-- 1 root root 8 Dec 16 08:48 kube-proxy.docker_tag

-rw------- 1 root root 105480704 Dec 16 08:48 kube-proxy.tar

-rwxr-xr-x 1 root root 49168384 Dec 16 08:50 kube-scheduler*

-rw-r--r-- 1 root root 8 Dec 16 08:48 kube-scheduler.docker_tag

-rw------- 1 root root 53949952 Dec 16 08:48 kube-scheduler.tar

-rwxr-xr-x 1 root root 1593344 Dec 16 08:50 mounter*

root@k8s-master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.201 Ready node 8m51s v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

## On the haproxy load balancer, take master2 out of rotation

root@k8s-ha1:~# vim /etc/haproxy/haproxy.cfg

listen k8s-6443

bind 192.168.24.118:6443

mode tcp

server 192.168.24.188 192.168.24.188:6443 check inter 2s fall 3 rise 3

# server 192.168.24.189 192.168.24.189:6443 check inter 2s fall 3 rise 3

root@k8s-ha1:~# systemctl reload haproxy.service

root@k8s-ha1:~# systemctl restart haproxy.service

## On the worker nodes, remove master2 from the kube-lb upstream

root@k8s-node1:~# vim /etc/kube-lb/conf/kube-lb.conf

root@k8s-node1:~# cat /etc/kube-lb/conf/kube-lb.conf

user root;

worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {

worker_connections 3000;

}

stream {

upstream backend {

server 192.168.24.188:6443 max_fails=2 fail_timeout=3s;

# server 192.168.24.189:6443 max_fails=2 fail_timeout=3s;

}

server {

listen 127.0.0.1:6443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

root@k8s-node1:~# systemctl restart kube-lb.service

root@k8s-node2:~# vim /etc/kube-lb/conf/kube-lb.conf

root@k8s-node2:~# cat /etc/kube-lb/conf/kube-lb.conf

user root;

worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {

worker_connections 3000;

}

stream {

upstream backend {

server 192.168.24.188:6443 max_fails=2 fail_timeout=3s;

# server 192.168.24.189:6443 max_fails=2 fail_timeout=3s;

}

server {

listen 127.0.0.1:6443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

root@k8s-node2:~# systemctl restart kube-lb.service

## Stop the services on master2

root@k8s-master2:~# systemctl stop kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler

## Copy the upgraded binaries from master1 to master2

root@k8s-master1:/usr/local/src/kubernetes/server/bin# scp kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler 192.168.24.189:/usr/bin

kube-apiserver 100% 119MB 20.5MB/s 00:05

kube-controller-manager 100% 113MB 14.0MB/s 00:08

kubelet 100% 116MB 14.0MB/s 00:08

kube-proxy 100% 41MB 23.6MB/s 00:01

kube-scheduler 100% 47MB 9.6MB/s 00:04

## Start the services on master2 and check whether the version upgrade succeeded

root@k8s-master2:~# systemctl start kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler

root@k8s-master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.2

192.168.24.189 Ready,SchedulingDisabled master 7d20h v1.22.5

192.168.24.201 Ready node 28m v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

## Replace the binaries in /etc/kubeasz/bin

root@k8s-master1:/usr/local/src/kubernetes/server/bin# cp -f kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler /etc/kubeasz/bin/
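The master2 upgrade above comes down to three steps: stop the services, copy the new binaries, start the services. The sketch below prints those steps for any master so they can be reviewed before running. `upgrade_master_plan` is a hypothetical helper; it assumes root SSH access from master1 and that it is run from the directory holding the new binaries.

```shell
#!/bin/bash
# Binaries (and the services of the same name) replaced on a master above.
MASTER_BINS="kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler"

# upgrade_master_plan HOST
# Hypothetical helper: prints the stop / copy / start commands for one master.
upgrade_master_plan() {
  local host="$1"
  # Stop first: overwriting a binary that is still running typically fails.
  printf 'ssh %s systemctl stop %s\n' "$host" "$MASTER_BINS"
  printf 'scp %s %s:/usr/bin\n' "$MASTER_BINS" "$host"
  printf 'ssh %s systemctl start %s\n' "$host" "$MASTER_BINS"
}

upgrade_master_plan 192.168.24.189
```

Upgrading one master at a time, with the load balancers pointed away from it as shown earlier, keeps the API server reachable throughout.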

4. Upgrade the nodes

root@k8s-master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.5

192.168.24.189 Ready,SchedulingDisabled master 7d20h v1.22.5

192.168.24.201 Ready node 37m v1.22.2

192.168.24.202 Ready node 7d20h v1.22.2

## Stop the services on the node

root@k8s-node1:~# systemctl stop kubelet kube-proxy.service

## Copy the binaries from master1 to the node

root@k8s-master1:/usr/local/src/kubernetes/server/bin# scp kubelet kube-proxy kubectl 192.168.24.201:/usr/bin

kubelet 100% 116MB 54.7MB/s 00:02

kube-proxy 100% 41MB 60.4MB/s 00:00

kubectl 100% 45MB 34.0MB/s 00:01

## Restart the node services and verify that the upgrade succeeded

root@k8s-node1:~# systemctl start kubelet kube-proxy.service

root@k8s-master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.5

192.168.24.189 Ready,SchedulingDisabled master 7d20h v1.22.5

192.168.24.201 Ready node 41m v1.22.5

192.168.24.202 Ready node 7d20h v1.22.2
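After this step one node (192.168.24.202) is still on the old version. With more nodes it helps to list the ones that remain. A small sketch that filters `kubectl get node` output by the VERSION column follows; `nodes_not_at` is a hypothetical helper name, and the sample input is the table shown above.

```shell
#!/bin/bash
# nodes_not_at VERSION
# Hypothetical helper: reads `kubectl get node` output on stdin and prints the
# names of nodes whose last column (VERSION) differs from VERSION.
nodes_not_at() {
  # NR > 1 skips the header row; NF skips empty lines.
  awk -v want="$1" 'NR > 1 && NF && $NF != want { print $1 }'
}

# Sample input copied from the transcript above.
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION' \
  '192.168.24.188 Ready,SchedulingDisabled master 7d20h v1.22.5' \
  '192.168.24.189 Ready,SchedulingDisabled master 7d20h v1.22.5' \
  '192.168.24.201 Ready node 41m v1.22.5' \
  '192.168.24.202 Ready node 7d20h v1.22.2' |
  nodes_not_at v1.22.5
```

Against a live cluster the same filter would be used as `kubectl get node | nodes_not_at v1.22.5`.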

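VI. YAML file basics

1. Characteristics of YAML files

- Case-sensitive

- Tabs are not allowed for indentation; only spaces may be used

- The number of spaces used for indentation does not matter, as long as elements at the same level are left-aligned

- "#" starts a comment; everything from that character to the end of the line is ignored by the parser

- Better suited to configuration files than JSON

- In Kubernetes, use kubectl explain to look up the fields and format of a resource's YAML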
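The tab rule in the list above is a common cause of manifests that fail to parse. A minimal sketch of a pre-apply check follows; `has_tab_indent` is a hypothetical helper name that uses only `grep` and `printf`.

```shell
#!/bin/bash
# has_tab_indent FILE
# Hypothetical helper: succeeds if any line in FILE has a tab character in
# its leading indentation (optionally preceded by spaces).
has_tab_indent() {
  grep -q "^ *$(printf '\t')" "$1"
}

# Example:
# has_tab_indent nginx.yaml && echo "tabs found, replace them with spaces"
#
# Field documentation can be browsed with, for example:
# kubectl explain deployment.spec.template.spec.containers
```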

2. Create a namespace from YAML

root@k8s-master1:/opt/yaml# vim n60-namespace.yaml

root@k8s-master1:/opt/yaml# cat n60-namespace.yaml

apiVersion: v1

kind: Namespace

metadata:

  name: n60

root@k8s-master1:/opt/yaml# kubectl get ns

NAME STATUS AGE

default Active 7d21h

kube-node-lease Active 7d21h

kube-public Active 7d21h

kube-system Active 7d21h

kubernetes-dashboard Active 20h

kuboard Active 16h

root@k8s-master1:/opt/yaml# kubectl apply -f n60-namespace.yaml

namespace/n60 created

root@k8s-master1:/opt/yaml# kubectl get ns

NAME STATUS AGE

default Active 7d21h

kube-node-lease Active 7d21h

kube-public Active 7d21h

kube-system Active 7d21h

kubernetes-dashboard Active 20h

kuboard Active 16h

n60 Active 3s

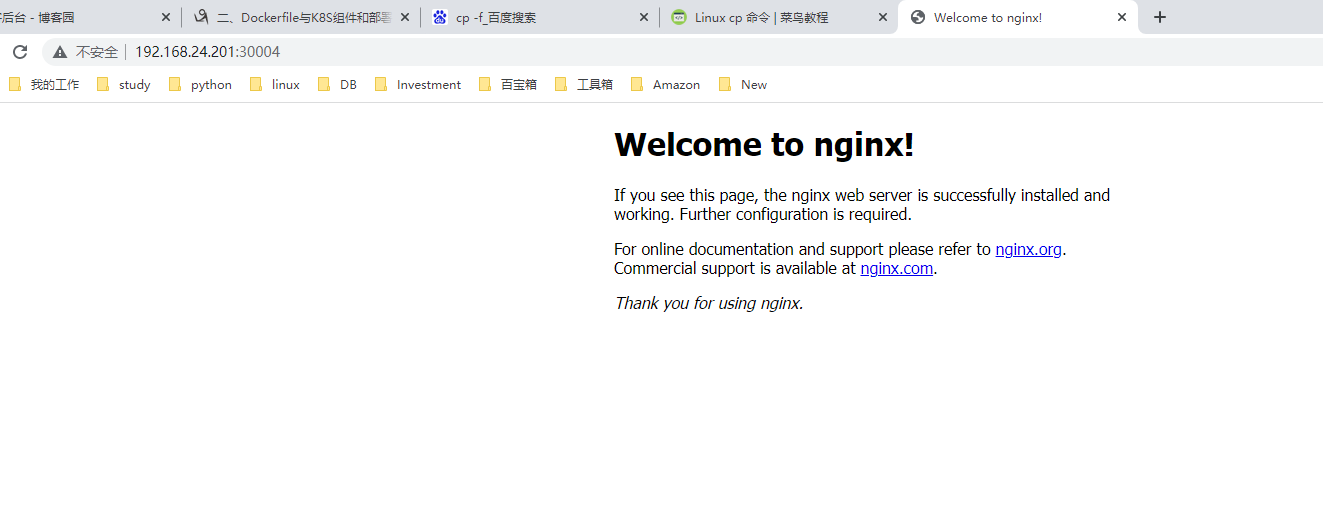

3. Create nginx from YAML

root@k8s-master1:/data# vim nginx.yaml

root@k8s-master1:/data# cat nginx.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: n60-nginx-deployment-label

  name: n60-nginx-deployment

  namespace: n60

spec:

  replicas: 1

  selector:

    matchLabels:

      app: n60-nginx-selector

  template:

    metadata:

      labels:

        app: n60-nginx-selector

    spec:

      containers:

      - name: n60-nginx-container

        image: nginx:1.16.1

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 80

          protocol: TCP

          name: http

        - containerPort: 443

          protocol: TCP

          name: https

        env:

        - name: "password"

          value: "123456"

        - name: "age"

          value: "18"

#        resources:

#          limits:

#            cpu: 2

#            memory: 2Gi

#          requests:

#            cpu: 500m

#            memory: 1Gi

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: n60-nginx-service-label

  name: n60-nginx-service

  namespace: n60

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 80

    nodePort: 30004

  - name: https

    port: 443

    protocol: TCP

    targetPort: 443

    nodePort: 30443

  selector:

    app: n60-nginx-selector

root@k8s-master1:/data# kubectl apply -f nginx.yaml

deployment.apps/n60-nginx-deployment created

service/n60-nginx-service created

root@k8s-master1:/data# kubectl describe pod n60-nginx-deployment-6b85b6d695-sl4xx -n n60

Name: n60-nginx-deployment-6b85b6d695-sl4xx

Namespace: n60

Priority: 0

Node: 192.168.24.201/192.168.24.201

Start Time: Sun, 16 Jan 2022 07:10:41 +0000

Labels: app=n60-nginx-selector

pod-template-hash=6b85b6d695

Annotations: <none>

Status: Running

IP: 10.200.36.65

IPs:

IP: 10.200.36.65

Controlled By: ReplicaSet/n60-nginx-deployment-6b85b6d695

Containers:

n60-nginx-container:

Container ID: docker://d691704b53eda6f2fdfb0acb36c5965deb8b4b4872ad036c1fdde825a6946f9b

Image: nginx:1.16.1

Image ID: docker-pullable://nginx@sha256:d20aa6d1cae56fd17cd458f4807e0de462caf2336f0b70b5eeb69fcaaf30dd9c

Ports: 80/TCP, 443/TCP

Host Ports: 0/TCP, 0/TCP

State: Running

Started: Sun, 16 Jan 2022 07:11:16 +0000

Ready: True

Restart Count: 0

Environment:

password: 123456

age: 18

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mv58s (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-mv58s:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 95s default-scheduler Successfully assigned n60/n60-nginx-deployment-6b85b6d695-sl4xx to 192.168.24.201

Normal Pulling 91s kubelet Pulling image "nginx:1.16.1"

Normal Pulled 62s kubelet Successfully pulled image "nginx:1.16.1" in 28.472502199s

Normal Created 61s kubelet Created container n60-nginx-container

Normal Started 60s kubelet Started container n60-nginx-container

root@k8s-master1:/data# kubectl get pod -n n60

NAME READY STATUS RESTARTS AGE

n60-nginx-deployment-6b85b6d695-sl4xx 1/1 Running 0 101s

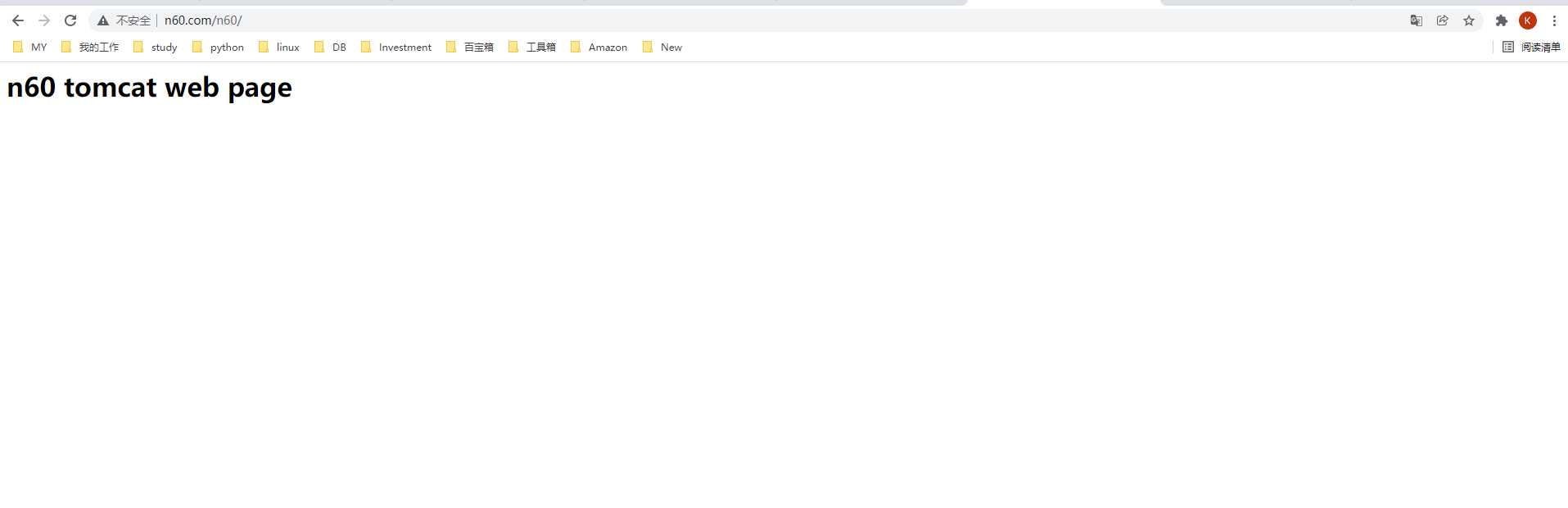
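The Service above pins `nodePort` to 30004 and 30443, so nginx should be reachable on those ports of any node IP. Fixed node ports must fall inside the API server's NodePort range. The sketch below checks a port against the range 30000-32767, which is the kube-apiserver default and an assumption here; this cluster may be configured with a different range.

```shell
#!/bin/bash
# in_nodeport_range PORT [MIN] [MAX]
# Hypothetical helper: succeeds if PORT lies within the NodePort range.
# 30000-32767 is the kube-apiserver default; pass MIN and MAX to override.
in_nodeport_range() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

for p in 30004 30443; do
  in_nodeport_range "$p" && echo "nodePort $p is within the default range"
done
```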

VII. Create nginx and Tomcat from YAML files and separate static and dynamic content

Images are served by nginx

JSP pages are served by Tomcat

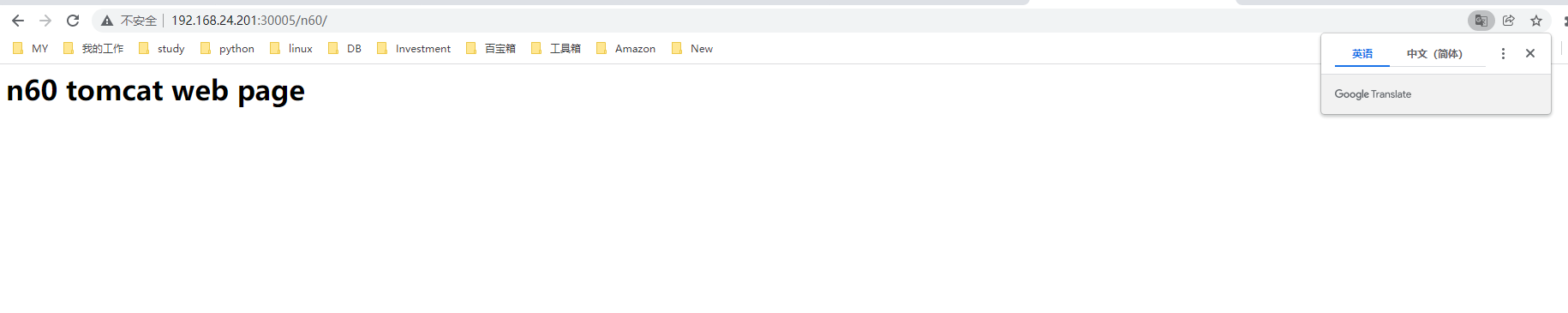

1. Install Tomcat from a YAML file; for nginx, follow the steps in part 6 (YAML basics) above

root@k8s-master1:/data# vim tomcat.yaml

root@k8s-master1:/data# cat tomcat.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: n60-tomcat-app1-deployment-label

  name: n60-tomcat-app1-deployment

  namespace: n60

spec:

  replicas: 1

  selector:

    matchLabels:

      app: n60-tomcat-app1-selector

  template:

    metadata:

      labels:

        app: n60-tomcat-app1-selector

    spec:

      containers:

      - name: n60-tomcat-app1-container

        image: tomcat:7.0.109-jdk8-openjdk

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 8080

          protocol: TCP

          name: http

        env:

        - name: "password"

          value: "123456"

        - name: "age"

          value: "18"

#        resources:

#          limits:

#            cpu: 2

#            memory: 2Gi

#          requests:

#            cpu: 500m

#            memory: 1Gi

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: n60-tomcat-app1-service-label

  name: n60-tomcat-app1-service

  namespace: n60

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 8080

    nodePort: 30005

  selector:

    app: n60-tomcat-app1-selector

root@k8s-master1:/data# kubectl apply -f tomcat.yaml

deployment.apps/n60-tomcat-app1-deployment created

service/n60-tomcat-app1-service created
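The Service in tomcat.yaml pins `nodePort: 30005`. Unless the API server was started with a different `--service-node-port-range`, Kubernetes only accepts NodePorts between 30000 and 32767, so a quick range check before `kubectl apply` can save a rejected manifest. The sketch below assumes that default range; `in_nodeport_range` is a hypothetical helper name, not part of kubectl.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when PORT lies in [MIN, MAX].
# MIN and MAX default to the stock kube-apiserver range (an assumption;
# check --service-node-port-range on your own cluster).
in_nodeport_range() {
  port=$1
  min=${2:-30000}
  max=${3:-32767}
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

# 30005 is the nodePort requested by n60-tomcat-app1-service.
if in_nodeport_range 30005; then
  echo "nodePort 30005 is within the default range"
else
  echo "nodePort 30005 is outside the default range"
fi
```

A port such as 8080 fails the check, which mirrors the validation error the API server would return for it.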

root@k8s-master1:/data# kubectl get pod -n n60

NAME READY STATUS RESTARTS AGE

n60-nginx-deployment-6b85b6d695-sl4xx 1/1 Running 0 10m

n60-tomcat-app1-deployment-6bc58c677c-vqlkl 1/1 Running 0 93s

root@k8s-master1:/data# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (4h21m ago) 7d17h

kube-system calico-kube-controllers-864c65f46c-gtmns 1/1 Running 0 91m

kube-system calico-node-5crpg 1/1 Running 0 86m

kube-system calico-node-jsd5k 1/1 Running 0 86m

kube-system calico-node-pxndq 1/1 Running 0 86m

kube-system calico-node-vmm5c 1/1 Running 2 (4h21m ago) 7d17h

kube-system coredns-69d84cdc49-cvxhz 1/1 Running 1 (4h21m ago) 16h

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-449mt 1/1 Running 0 91m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 2 (3h16m ago) 20h

n60 n60-nginx-deployment-6b85b6d695-sl4xx 1/1 Running 0 14m

n60 n60-tomcat-app1-deployment-6bc58c677c-vqlkl 1/1 Running 0 6m7s

root@k8s-master1:/data# kubectl exec -it n60-tomcat-app1-deployment-6bc58c677c-vqlkl -n n60 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@n60-tomcat-app1-deployment-6bc58c677c-vqlkl:/usr/local/tomcat# cd webapps

root@n60-tomcat-app1-deployment-6bc58c677c-vqlkl:/usr/local/tomcat/webapps# ls

root@n60-tomcat-app1-deployment-6bc58c677c-vqlkl:/usr/local/tomcat/webapps# mkdir n60

root@n60-tomcat-app1-deployment-6bc58c677c-vqlkl:/usr/local/tomcat/webapps# ls

n60

root@n60-tomcat-app1-deployment-6bc58c677c-vqlkl:/usr/local/tomcat/webapps# echo "<h1>n60 tomcat web page</h1>" >> n60/index.jsp
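The deprecation warning printed by `kubectl exec` above goes away once the container command is separated from kubectl's own flags with `--`. The same page can also be created without an interactive shell. The following is a minimal sketch, assuming the pod name and paths from the transcript; `run` is a hypothetical dry-run helper that only prints the command unless `DRY_RUN=0` is set, and the page is written with `>` (overwrite) rather than `>>` so that repeated runs give the same result.

```shell
#!/bin/sh
# Values copied from the transcript; adjust them to your own pod.
NS=n60
POD=n60-tomcat-app1-deployment-6bc58c677c-vqlkl
DIR=/usr/local/tomcat/webapps/n60
PAGE='<h1>n60 tomcat web page</h1>'

# Hypothetical helper: print the command by default, execute it when DRY_RUN=0.
# The printed form loses the original quoting and is meant for inspection only.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# "--" ends kubectl's own flags; everything after it runs inside the container.
run kubectl exec -n "$NS" "$POD" -- \
  sh -c "mkdir -p $DIR && echo '$PAGE' > $DIR/index.jsp"
```

Files created this way live in the container's writable layer, so they are lost when the pod is recreated.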

2. Configure nginx

root@k8s-master1:/data# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (4h28m ago) 7d17h

kube-system calico-kube-controllers-864c65f46c-gtmns 1/1 Running 0 98m

kube-system calico-node-5crpg 1/1 Running 0 93m

kube-system calico-node-jsd5k 1/1 Running 0 93m

kube-system calico-node-pxndq 1/1 Running 0 93m

kube-system calico-node-vmm5c 1/1 Running 2 (4h28m ago) 7d17h

kube-system coredns-69d84cdc49-cvxhz 1/1 Running 1 (4h28m ago) 16h

kubernetes-dashboard dashboard-metrics-scraper-c45b7869d-449mt 1/1 Running 0 98m

kubernetes-dashboard kubernetes-dashboard-576cb95f94-74rh4 1/1 Running 2 (3h23m ago) 20h

n60 n60-nginx-deployment-6b85b6d695-sl4xx 1/1 Running 0 21m

n60 n60-tomcat-app1-deployment-6bc58c677c-vqlkl 1/1 Running 0 13m

root@k8s-master1:/data# kubectl exec -it n60-nginx-deployment-6b85b6d695-sl4xx -n n60 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@n60-nginx-deployment-6b85b6d695-sl4xx:/# cd /usr/share/nginx/html/

root@n60-nginx-deployment-6b85b6d695-sl4xx:/usr/share/nginx/html# echo "nginx web page" > index.html

root@k8s-ha1:~# vim /etc/haproxy/haproxy.cfg

## Append the following at the end of the file

listen n60-web1
  bind 192.168.24.118:80
  server 192.168.24.201 192.168.24.201:30004 check inter 2s fall 3 rise 3
  server 192.168.24.202 192.168.24.202:30004 check inter 2s fall 3 rise 3

root@k8s-ha1:~# systemctl reload haproxy.service

root@k8s-ha1:~# systemctl restart haproxy.service
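When more worker nodes join the cluster, the `listen` section needs one `server` line per node. As a rough sketch, the block can be generated from a list of node IPs instead of being edited by hand; `gen_listen` is a hypothetical helper, and the health-check options are copied from the configuration used in this post.

```shell
#!/bin/sh
# Hypothetical helper: print an HAProxy "listen" section.
# Usage: gen_listen NAME VIP NODEPORT NODE_IP...
gen_listen() {
  name=$1
  vip=$2
  nodeport=$3
  shift 3
  printf 'listen %s\n' "$name"
  printf '  bind %s:80\n' "$vip"
  # One backend line per node, named after the node's IP address.
  for ip in "$@"; do
    printf '  server %s %s:%s check inter 2s fall 3 rise 3\n' "$ip" "$ip" "$nodeport"
  done
}

# Reproduces the section added to haproxy.cfg in this post.
gen_listen n60-web1 192.168.24.118 30004 192.168.24.201 192.168.24.202
```

Before reloading, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file for syntax errors. A reload is normally enough to pick up the change, so the restart that follows it in the transcript is optional.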

## Add an image inside the nginx container

root@n60-nginx-deployment-6b85b6d695-sl4xx:/usr/share/nginx/html# mkdir images

root@n60-nginx-deployment-6b85b6d695-sl4xx:/usr/share/nginx/html# cd images/

root@n60-nginx-deployment-6b85b6d695-sl4xx:/usr/share/nginx/html/images# ls

00.jpg

root@n60-nginx-deployment-6b85b6d695-sl4xx:/usr/share/nginx# cd /etc/nginx/conf.d/

root@n60-nginx-deployment-6b85b6d695-sl4xx:/etc/nginx/conf.d# ls

default.conf

root@n60-nginx-deployment-6b85b6d695-sl4xx:/etc/nginx/conf.d# vim default.conf

root@n60-nginx-deployment-6b85b6d695-sl4xx:/etc/nginx/conf.d# cat default.conf

server {
    listen 80;
    server_name localhost;

    #charset koi8-r;
    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    location /n60 {
        proxy_pass http://n60-tomcat-app1-service:80;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root html;
    #    fastcgi_pass 127.0.0.1:9000;
    #    fastcgi_index index.php;
    #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
    #    include fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}
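The `proxy_pass` target in this configuration is the bare Service name `n60-tomcat-app1-service`. That works because the nginx pod runs in the same namespace (n60) and the search list in the pod's `/etc/resolv.conf` expands the short name to the Service's full DNS name. The sketch below builds that full name; `svc_fqdn` is a hypothetical helper, and the default cluster domain `cluster.local` is an assumption that may not match this cluster.

```shell
#!/bin/sh
# Hypothetical helper: build the fully qualified DNS name of a Service.
# Usage: svc_fqdn SERVICE NAMESPACE [CLUSTER_DOMAIN]
# CLUSTER_DOMAIN defaults to "cluster.local" (an assumption; check the
# search line of /etc/resolv.conf inside a pod for the real value).
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# prints: n60-tomcat-app1-service.n60.svc.cluster.local
svc_fqdn n60-tomcat-app1-service n60
```

A pod in a different namespace would need at least `n60-tomcat-app1-service.n60` in its `proxy_pass` line, since its own search list starts with its own namespace.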

root@n60-nginx-deployment-6b85b6d695-sl4xx:/etc/nginx/conf.d# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@n60-nginx-deployment-6b85b6d695-sl4xx:/etc/nginx/conf.d# nginx -s reload

2022/01/16 08:19:17 [notice] 2969#2969: signal process started
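With the reload done, the static/dynamic split is decided entirely by the `location` blocks in default.conf. The following sketch models that decision in plain shell so the routing can be reasoned about without a cluster. It is a simplified model, not nginx's real matching algorithm: it ignores query strings and URI normalization, and `route` is a hypothetical function name.

```shell
#!/bin/sh
# Simplified model of default.conf: which backend answers a request path?
route() {
  case "$1" in
    # location = /50x.html (exact match)
    /50x.html) echo "nginx: static error page" ;;
    # location /n60 (prefix match, proxied to the Tomcat Service)
    /n60*)     echo "tomcat: n60-tomcat-app1-service:80" ;;
    # location / (everything else, served from the nginx document root)
    *)         echo "nginx: /usr/share/nginx/html" ;;
  esac
}

for path in /index.html /images/00.jpg /n60/index.jsp /n60abc; do
  printf '%-18s -> %s\n' "$path" "$(route "$path")"
done
```

Because `location /n60` is a prefix match, a path such as `/n60abc` is also proxied to Tomcat; writing `location /n60/` would narrow the match to the application directory. Assuming NodePort 30004 in the HAProxy section belongs to the nginx Service from Section VI, requesting `/images/00.jpg` and `/n60/index.jsp` through 192.168.24.118 should return the image from nginx and the JSP page from Tomcat respectively.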
