2. Federation mode configuration: scale-out and load balancing

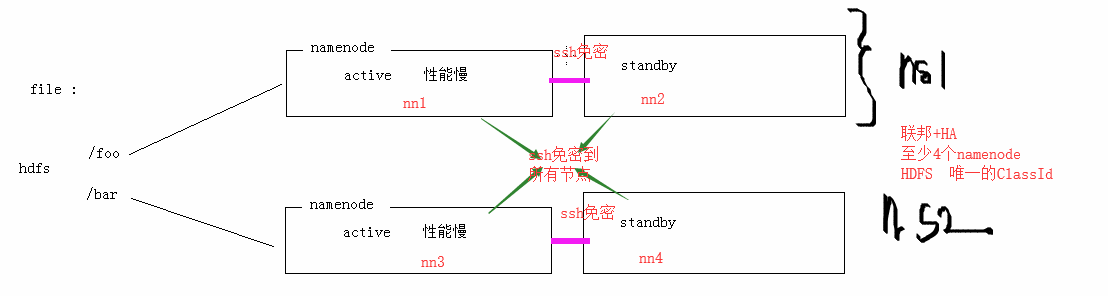

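Architecture diagram: two clusters, with the goal of scaling out

HA federation mode addresses the performance bottleneck of plain HA mode (mainly the NameNode and ResourceManager). The HA cluster is split into two or more clusters that are connected through Federation, which gives the deployment the ability to scale horizontally. In theory, data growth can then be absorbed by adding more compute nodes. The federation configuration is the original HA configuration with a few adjustments; the steps below cover the HDFS side only.

Configuration procedure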

federation

cp -r local/ federation

1. Plan the cluster

ns1:nn1(s101) + nn2(s102)

ns2:nn3(s103) + nn4(s104)
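The plan maps directly onto the per-NameNode address keys that appear in hdfs-site.xml. As a rough sketch, the function below prints the keys this plan implies. The function name `plan_properties` is made up for illustration, and the ports 8020 (RPC) and 50070 (HTTP) are simply the values used in the configuration in this section.

```shell
# Illustrative only: expand the plan "nameservice:namenode-id:host"
# into the address properties that hdfs-site.xml has to define.
plan_properties() {
  local entry ns rest nn host
  for entry in ns1:nn1:s101 ns1:nn2:s102 ns2:nn3:s103 ns2:nn4:s104; do
    ns=${entry%%:*}      # text before the first colon, e.g. ns1
    rest=${entry#*:}     # remainder, e.g. nn1:s101
    nn=${rest%%:*}       # NameNode id, e.g. nn1
    host=${rest#*:}      # host name, e.g. s101
    echo "dfs.namenode.rpc-address.$ns.$nn=$host:8020"
    echo "dfs.namenode.http-address.$ns.$nn=$host:50070"
  done
}
```

Running `plan_properties` and comparing its output with the `<name>`/`<value>` pairs in the file is a quick way to catch a mistyped host name.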

2. Preparation

[nn1 ~ nn4] Each NameNode host must be able to SSH to all nodes (key-based login, no password prompt).
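The sshfence fencing method configured in this section relies on key-based SSH, so it is worth checking the logins before going further. The following is a minimal sketch, not part of the original notes: the function name `check_passwordless_ssh` and the `HOSTS` variable are assumptions, and the host list is taken from the plan above.

```shell
# Hosts from the cluster plan; adjust to your environment.
HOSTS="s101 s102 s103 s104"

# Returns 0 only if every host accepts a login without prompting.
check_passwordless_ssh() {
  local host failed=0
  for host in $HOSTS; do
    # BatchMode=yes makes ssh fail instead of asking for a password.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "ok   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Call `check_passwordless_ssh` on each of the four NameNode hosts; any `FAIL` line points at a host whose authorized_keys still needs the caller's public key.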

3. Stop the entire cluster

4. Configuration files

4.1) hdfs-site.xml for s101 and s102

[hadoop/federation/hdfs-site.xml]

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.nameservices</name>

<value>ns1,ns2</value>

</property>

<!-- **************ns1********************* -->

<property>

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>s101:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>s102:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>s101:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>s102:50070</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- **************ns2********************* -->

<property>

<name>dfs.ha.namenodes.ns2</name>

<value>nn3,nn4</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2.nn3</name>

<value>s103:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2.nn4</name>

<value>s104:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ns2.nn3</name>

<value>s103:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ns2.nn4</name>

<value>s104:50070</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!--***********************************************-->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://s102:8485;s103:8485;s104:8485/ns1</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/centos/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

4.2) hdfs-site.xml for s103 and s104 (same as 4.1 except that dfs.namenode.shared.edits.dir ends in /ns2)

[hadoop/federation/hdfs-site.xml]

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.nameservices</name>

<value>ns1,ns2</value>

</property>

<!-- **************ns1********************* -->

<property>

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>s101:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>s102:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>s101:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>s102:50070</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- **************ns2********************* -->

<property>

<name>dfs.ha.namenodes.ns2</name>

<value>nn3,nn4</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2.nn3</name>

<value>s103:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2.nn4</name>

<value>s104:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ns2.nn3</name>

<value>s103:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ns2.nn4</name>

<value>s104:50070</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!--***********************************************-->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://s102:8485;s103:8485;s104:8485/ns2</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/centos/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>
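Since the two hdfs-site.xml files differ only in the journal ID at the end of the qjournal URI, the ns2 variant can be derived from the ns1 one instead of being maintained by hand. The following is a sketch under that assumption; the function name `make_ns2_config` and its arguments are illustrative.

```shell
# Rewrites the journal ID at the end of the qjournal URI (ns1 -> ns2).
# Only the shared.edits.dir value is touched; keys such as
# dfs.ha.namenodes.ns1 are left alone.
# Usage: make_ns2_config <ns1 hdfs-site.xml> <output file>
make_ns2_config() {
  sed 's#\(<value>qjournal://[^<]*\)/ns1</value>#\1/ns2</value>#' "$1" > "$2"
}
```

The generated file would then be copied to s103 and s104 as their hdfs-site.xml.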

4.3) core-site.xml for s101 ~ s104

[hadoop/federation/core-site.xml]

<?xml version="1.0"?>

<configuration xmlns:xi="http://www.w3.org/2001/XInclude">

<xi:include href="mountTable.xml" />

<property>

<name>fs.defaultFS</name>

<value>viewfs://ClusterX</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/centos/hadoop/federation/journalnode</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/centos/hadoop/federation</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>s102:2181,s103:2181,s104:2181</value>

</property>

</configuration>

4.4) mountTable.xml, the mount table file

[hadoop/federation/mountTable.xml]

<configuration>

<property>

<name>fs.viewfs.mounttable.ClusterX.homedir</name>

<value>/home</value>

</property>

<property>

<name>fs.viewfs.mounttable.ClusterX.link./home</name>

<value>hdfs://ns1/home</value>

</property>

<property>

<name>fs.viewfs.mounttable.ClusterX.link./tmp</name>

<value>hdfs://ns2/tmp</value>

</property>

<property>

<name>fs.viewfs.mounttable.ClusterX.link./projects/foo</name>

<value>hdfs://ns1/projects/foo</value>

</property>

<property>

<name>fs.viewfs.mounttable.ClusterX.link./projects/bar</name>

<value>hdfs://ns2/projects/bar</value>

</property>

</configuration>
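The mount table tells the client which nameservice serves each path under viewfs://ClusterX. As an illustration of that lookup (this is not how Hadoop implements it), the sketch below resolves a path against the four links above. The names `MOUNTS` and `resolve_viewfs` are made up for this example, and it assumes no fallback link is configured, so a path outside every mount point is rejected.

```shell
# Mount links copied from mountTable.xml: "<viewfs path> <target URI>".
MOUNTS="/home hdfs://ns1/home
/tmp hdfs://ns2/tmp
/projects/foo hdfs://ns1/projects/foo
/projects/bar hdfs://ns2/projects/bar"

# Prints the target URI for a viewfs path, or fails if no link covers it.
resolve_viewfs() {
  local path="$1" link target
  while read -r link target; do
    case "$path" in
      # Match the mount point itself or anything below it.
      "$link"|"$link"/*)
        echo "$target${path#"$link"}"
        return 0 ;;
    esac
  done <<EOF
$MOUNTS
EOF
  echo "no mount point for $path" >&2
  return 1
}
```

For example, `resolve_viewfs /home/data` prints `hdfs://ns1/home/data`, which is why the test directory created at the end of this section lands on ns1.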

5. Operations

5.1) Delete the logs and the local temporary directory on all nodes

$>xcall.sh rm -rf /soft/hadoop/logs/*

$>xcall.sh rm -rf /home/centos/hadoop/federation/*
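xcall.sh is the author's own helper script and is not shown in these notes. As a hypothetical stand-in, assuming it simply runs one command on every node over SSH, it could look like the sketch below. The function name `xcall` and the `XCALL_HOSTS` variable are assumptions.

```shell
# Hypothetical stand-in for xcall.sh: run one command on every host in turn.
XCALL_HOSTS="s101 s102 s103 s104"

xcall() {
  local host
  for host in $XCALL_HOSTS; do
    echo "========== $host =========="
    # "$*" joins the arguments into a single remote command line;
    # -n keeps ssh from reading the caller's standard input.
    ssh -n "$host" "$*"
  done
}
```

With a helper like this, an unquoted `*` is expanded by the local shell before the command is sent, so quoting the argument (for example `xcall 'rm -rf /soft/hadoop/logs/*'`) lets each remote host expand the glob against its own files.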

5.2) Repoint the hadoop symlink on all nodes

$>xcall.sh ln -sfT /soft/hadoop/etc/federation /soft/hadoop/etc/hadoop

5.3) Format and initialize the ns1 cluster

a) Start the JournalNode cluster

Log in to s102 ~ s104 and start the journalnode process on each.

$>hadoop-daemon.sh start journalnode

b) Format the nn1 node

[s101]

$>hdfs namenode -format

c) Copy the metadata from s101 to s102.

[s101]

$>scp -r

d) Run the bootstrap on s102

# Start the NameNode on s101

$>hadoop-daemon.sh start namenode

# Run the bootstrap on s102; do not re-format (answer N)

$>hdfs namenode -bootstrapStandby

e) On s102, initialize the shared edit log in the JournalNode cluster (answer N)

$>hdfs namenode -initializeSharedEdits

f) On s102, format the ZKFC state in ZooKeeper (answer Y).

$>hdfs zkfc -formatZK

g) Start the namenode and zkfc processes on s101 and s102.

[s101]

$>hadoop-daemon.sh start zkfc

[s102]

$>hadoop-daemon.sh start namenode

$>hadoop-daemon.sh start zkfc

h) Test the web UI
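Besides opening http://s101:50070 and http://s102:50070 in a browser, the HA state can be read from the NameNode's JMX endpoint. The sketch below assumes the NameNodeStatus bean reports a `"State"` field with the value `active` or `standby`; the function names `parse_nn_state` and `nn_state` are made up for illustration.

```shell
# Prints the HA state from NameNodeStatus JMX JSON read on stdin.
parse_nn_state() {
  sed -n 's/.*"State"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Queries one NameNode web UI address, e.g. nn_state s101:50070
nn_state() {
  curl -s "http://$1/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus" | parse_nn_state
}
```

After step g), `nn_state s101:50070` and `nn_state s102:50070` should report one active and one standby NameNode. `hdfs haadmin -ns ns1 -getServiceState nn1` is an alternative that does not depend on the JSON layout.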

5.4) Format and initialize the ns2 cluster

a) Format nn3. Be sure to pass -clusterId with the same value as ns1.

[s103]

$>hdfs namenode -format -clusterId CID-e16c5e2f-c0a5-4e51-b789-008e36b7289a
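The CID value in the command above comes from the author's cluster; yours will differ. It is recorded in the VERSION file that formatting nn1 produced. The following sketch reads it, assuming dfs.namenode.name.dir is left at its default under hadoop.tmp.dir, which would put the file at /home/centos/hadoop/federation/dfs/name/current/VERSION on s101. The function name `read_cluster_id` is illustrative.

```shell
# Prints the clusterID recorded in a NameNode VERSION file.
# Usage: read_cluster_id <path to VERSION>
read_cluster_id() {
  # VERSION is a key=value properties file; keep only the clusterID value.
  sed -n 's/^clusterID=//p' "$1"
}
```

Run it on s101 against that VERSION file and pass the printed value to `-clusterId` when formatting nn3 on s103.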

b) Copy the metadata from s103 to s104.

$>scp -r /home/centos/hadoop/federation centos@s104:/home/centos/hadoop/

c) Bootstrap on s104

# Start the namenode on s103

$>hadoop-daemon.sh start namenode

# Run the bootstrap on s104

$>hdfs namenode -bootstrapStandby

d) Initialize the edit log on s104

$>hdfs namenode -initializeSharedEdits

e) On s104, format the ZKFC state in ZooKeeper (answer Y).

$>hdfs zkfc -formatZK

f) Start the namenode and zkfc processes on s103 and s104.

[s103]

$>hadoop-daemon.sh start zkfc

[s104]

$>hadoop-daemon.sh start namenode

$>hadoop-daemon.sh start zkfc

5.5) Stop the cluster

$>stop-dfs.sh

5.6) Restart the dfs cluster

$>start-dfs.sh

5.7) Create a directory

# Note the -p option

$>hdfs dfs -mkdir -p /home/data

# Upload a file, then check the web UI

$>hdfs dfs -put 1.txt /home/data
