Text Data Mining Assignment, Experiment 1: Scraping Data

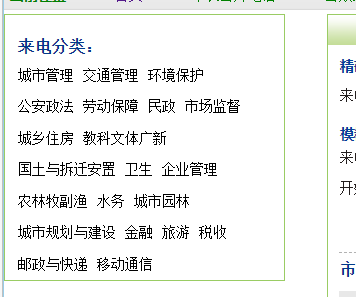

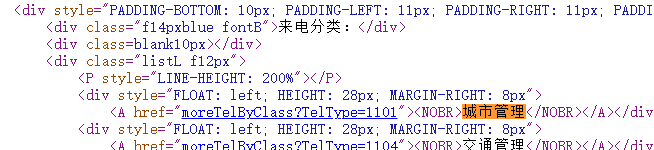

# 1. Locate the call-category section
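
The listing URL `http://12345.chengdu.gov.cn/moreTelByClass?TelType=1101` selects the call category through its `TelType` query parameter. Below is a minimal sketch of building that URL from a parameter dictionary with the standard library. `category_url` is a hypothetical helper. Whether other `TelType` values select other call categories is an assumption you should verify on the site.

```python
from urllib.parse import urlencode, urljoin

BASE = "http://12345.chengdu.gov.cn/"


def category_url(tel_type: str) -> str:
    """Build the listing URL for one call category (hypothetical helper)."""
    # urlencode turns {"TelType": "1101"} into "TelType=1101"
    return urljoin(BASE, "moreTelByClass") + "?" + urlencode({"TelType": tel_type})


print(category_url("1101"))
```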

# 2. Extract the sub-page link addresses (child_href1)

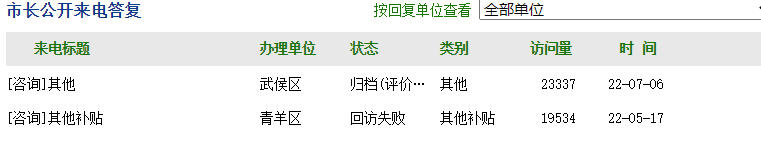
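
The scraped `href` values have to be turned into absolute URLs. Plain concatenation such as `domain + href` only works when the href is relative and does not start with `/`. `urllib.parse.urljoin` instead resolves each reference against the base URL, the way a browser does. The sketch below uses hypothetical href values, because the site's actual link format is not shown here.

```python
from urllib.parse import urljoin

domain = "http://12345.chengdu.gov.cn/"

# Hypothetical href forms: root-relative, relative, absolute
hrefs = ["/moreTelByClass?TelType=1101", "detail?id=1", "http://example.com/page"]

naive = [domain + h for h in hrefs]           # plain string concatenation
joined = [urljoin(domain, h) for h in hrefs]  # resolve each href against domain

for a, b in zip(naive, joined):
    print(a, "->", b)
```

With a root-relative href, concatenation produces a double slash (`...cn//moreTelByClass...`), and with an absolute href it produces a broken URL. `urljoin` handles all three forms.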

# 3. Extract the desired data from the sub-pages

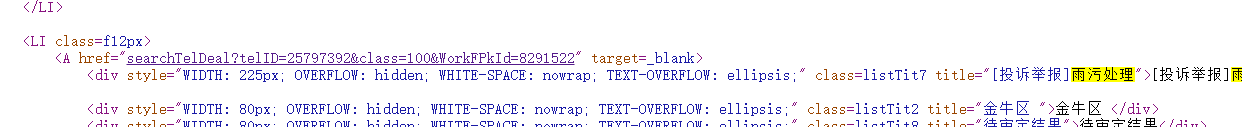
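
Each field in this step is read with an XPath such as `./a/div[1]/text()` followed by `[0]`. That indexing raises `IndexError` as soon as a cell is empty or missing. Below is a hedged sketch of a tolerant helper, run against invented markup that mimics one list row with six `div` cells. `first_text` is a hypothetical name. Mapping the six cells to title, handling department, status, type, page views and date follows the variable names in the code and is an assumption about the page.

```python
from lxml import etree

# Hypothetical markup for one list row; the real page may differ
row_html = """
<ul>
  <li class="f12px"><a href="/detail?id=1">
    <div>Title A</div><div>Dept A</div><div>Done</div>
    <div>Complaint</div><div>12</div><div>2022-10-01</div>
  </a></li>
</ul>
"""


def first_text(node, xpath, default=""):
    """Return the first text match of `xpath`, stripped, or `default` if none."""
    matches = node.xpath(xpath)
    return matches[0].strip() if matches else default


html1 = etree.HTML(row_html)
row = html1.xpath("//li")[0]
fields = ["tel_title", "handle_depart", "status", "type_", "page_views", "tel_date"]
record = {name: first_text(row, f"./a/div[{i}]/text()") for i, name in enumerate(fields, start=1)}
missing = first_text(row, "./a/div[7]/text()")  # no 7th cell: "" instead of IndexError
print(record)
```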

# 4. Locate the detailed call records and enter the second-level sub-pages

# 5. Extract the second-level sub-page link addresses (child_href2)
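
The call rows are picked with `li[@class="f12px"]`. An exact `@class` comparison only matches when the attribute is exactly `f12px`, so a row that carries extra class tokens is silently skipped. The sketch below shows the more robust token test on hypothetical markup (the `f12px odd` row and the `/detail?id=` links are invented for illustration). It also reads each row's title and link together, so the list fields and the detail link always belong to the same call.

```python
from urllib.parse import urljoin

from lxml import etree

domain1 = "http://12345.chengdu.gov.cn/"

# Hypothetical list markup: a header row plus two call rows
list_html = """
<ul>
  <li class="title"><div>Title</div></li>
  <li class="f12px"><a href="/detail?id=1"><div>Call 1</div></a></li>
  <li class="f12px odd"><a href="/detail?id=2"><div>Call 2</div></a></li>
</ul>
"""

html1 = etree.HTML(list_html)

# Exact attribute match: misses rows whose class has extra tokens
exact = html1.xpath('//ul/li[@class="f12px"]')

# Token match: treats @class as a space-separated list, like the CSS selector ".f12px"
token = html1.xpath('//ul/li[contains(concat(" ", normalize-space(@class), " "), " f12px ")]')

# Read each row's title and link together so they stay on the same record
pairs = [
    (row.xpath("string(./a/div[1])"), urljoin(domain1, row.xpath("./a/@href")[0]))
    for row in token
]
print(len(exact), len(token))
print(pairs)
```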

# 6. Extract the desired data from the second-level sub-pages (call details)
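
XPaths copied from browser DevTools often contain `tbody`, because browsers insert that element when the served table lacks one. lxml parses the HTML as served and does not add it, so a path like `.../table/tbody/tr[6]/td[2]` can match nothing. Separately, `text()` returns one string per text node, so a reply broken up by `<br>` comes back as several strings. The sketch below shows both effects on hypothetical detail-page markup (element layout and contents are invented).

```python
from lxml import etree

# Hypothetical detail-page markup as served: no <tbody>, reply split by <br>
detail_html = """
<div id="container1"><div></div><div>
  <table>
    <tr><td>Handling department</td><td>Dept A</td></tr>
  </table>
</div></div>
<div id="DOverDesc">First line<br>Second line</div>
"""

html2 = etree.HTML(detail_html)

# DevTools-style path with tbody: lxml never created that element
with_tbody = html2.xpath('//*[@id="container1"]/div[2]/table/tbody/tr[1]/td[2]/text()')
# Descendant step "//tr" works whether or not the served HTML has a tbody
any_depth = html2.xpath('//*[@id="container1"]/div[2]/table//tr[1]/td[2]/text()')

# text() yields one string per text node; join them to keep the whole reply
parts = html2.xpath('//*[@id="DOverDesc"]/text()')
handle_result = "\n".join(p.strip() for p in parts if p.strip())

print(with_tbody, any_depth, parts)
```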

The code is as follows:

```python
# 1. Locate the call-category section
# 2. Extract the sub-page link addresses child_href1
# 3. Extract the desired data from the sub-pages
# 4. Locate the detailed call records and enter the second-level sub-pages
# 5. Extract the second-level sub-page link addresses child_href2
# 6. Extract the desired data from the second-level sub-pages (call details)
# 7. Save the results to a JSON file

import json
import time
from urllib.parse import urljoin

import requests
from lxml import etree

# 1. Locate the call-category section
url = "http://12345.chengdu.gov.cn/moreTelByClass?TelType=1101"
domain = "http://12345.chengdu.gov.cn/"

header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36 Edg/106.0.1370.52"
}

# One session for all requests; it is closed once at the end
session = requests.Session()
session.headers.update(header)


def get_html(page_url):
    """GET a page, decode it as UTF-8 and parse it into an lxml tree."""
    resp = session.get(page_url, timeout=10)
    resp.encoding = "utf-8"
    time.sleep(1)  # pause after every request to go easy on the server
    return etree.HTML(resp.text)


def first_text(node, xpath):
    """Return the first text match of xpath (stripped), or "" if there is none."""
    matches = node.xpath(xpath)
    return matches[0].strip() if matches else ""


html = get_html(url)

# Sub-page 1 (list page) columns
tel_titles, handle_departs, statuss, types, page_viewss, tel_dates = [], [], [], [], [], []
# Sub-page 2 (detail page) columns; every list gets one entry per call, in the same order
tel_conts, proc_departss, handle_results = [], [], []

# 2. Extract the sub-page link addresses child_href1
child_href1_list = []
for div in html.xpath('//*[@id="container1"]/div/div[1]/div[1]/div[3]/div'):
    child_href1_list.append(urljoin(domain, div.xpath("./a/@href")[0]))

# 3. Extract the desired data from each sub-page
for href1 in child_href1_list:
    html1 = get_html(href1)

    # 4. Locate every call row (li.f12px) on this sub-page
    rows = html1.xpath('//*[@id="container1"]/div/div[2]/div[1]/ul/li[@class="f12px"]')
    for row in rows:
        links = row.xpath("./a/@href")
        if not links:  # skip rows without a link so all columns stay aligned
            continue

        # List-row fields (assumed layout: ./a/div[1] to ./a/div[6])
        tel_titles.append(first_text(row, "./a/div[1]/text()"))
        handle_departs.append(first_text(row, "./a/div[2]/text()"))
        statuss.append(first_text(row, "./a/div[3]/text()"))
        types.append(first_text(row, "./a/div[4]/text()"))
        page_viewss.append(first_text(row, "./a/div[5]/text()"))
        tel_dates.append(first_text(row, "./a/div[6]/text()"))

        # 5. Extract the second-level sub-page link address child_href2
        child_href2 = urljoin(domain, links[0])

        # 6. Extract the desired data from the second-level sub-page (call details)
        html2 = get_html(child_href2)
        tel_conts.append(first_text(html2, '//*[@id="FmContent"]/text()'))
        # "//tr" instead of "/tbody/tr": lxml does not insert the <tbody> that DevTools shows
        proc_departss.append(first_text(html2, '//*[@id="container1"]/div[2]/table//tr[6]/td[2]/text()'))
        # The result may be split into several text nodes (e.g. by <br>), so keep them all
        handle_results.append([t.strip() for t in html2.xpath('//*[@id="DOverDesc"]/text()') if t.strip()])

session.close()

# Collect the columns into one dict
dicts = {
    "tel_title": tel_titles,
    "handle_depart": handle_departs,
    "status": statuss,
    "type_": types,
    "page_views": page_viewss,
    "tel_date": tel_dates,
    "tel_cont": tel_conts,
    "proc_departs": proc_departss,
    "handle_result": handle_results,
}

# 7. Save the data as JSON: one object per line, appended on every run
try:
    with open("exp_data-2020083156.json", "a", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese text readable instead of \uXXXX escapes
        f.write(json.dumps(dicts, ensure_ascii=False) + "\n")
except OSError as e:  # the with-block closes the file, so no finally/close() is needed
    print(e)

print("over!")
```
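
Because the output file is opened with mode `"a"`, each run appends one more JSON object on its own line. After several runs, `exp_data-2020083156.json` therefore holds JSON Lines rather than a single JSON document, and `json.load` on the whole file fails as soon as it has two lines. The sketch below reads such a file back line by line. It uses a temporary file and made-up records in place of real scraped data.

```python
import json
import os
import tempfile

# Made-up stand-ins for the scraped column dict of two separate runs
runs = [
    {"tel_title": ["标题一"], "tel_date": ["2022-10-01"]},
    {"tel_title": ["标题二"], "tel_date": ["2022-10-02"]},
]

path = os.path.join(tempfile.mkdtemp(), "exp_data.json")

# Writing: mode "a" appends one JSON object per line on every run
for dicts in runs:
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(dicts, ensure_ascii=False) + "\n")

# Reading: the file is not one JSON document, so parse it line by line
with open(path, encoding="utf-8") as f:
    loaded = [json.loads(line) for line in f if line.strip()]

print(len(loaded), loaded[1]["tel_title"])
```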


This article is from cnblogs (博客园); author: slowlydance2me. Please cite the original link when reposting: https://www.cnblogs.com/slowlydance2me/p/16837776.html

Zhejiang Public Security Network Filing (浙公网安备) No. 33010602011771
