Data Collection: Assignment 3

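Assignment 1

Requirements

Choose a website and crawl all of its images, for example China Weather (http://www.weather.com.cn). Implement the crawler twice: once single-threaded and once multithreaded.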

Single-threaded code

```python
import os
import urllib.parse
import urllib.request

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit


def imageSpider(start_url):
    try:
        urls = []  # image URLs already handled, used to skip duplicates
        req = urllib.request.Request(start_url, headers=headers)
        data = urllib.request.urlopen(req).read()
        # Decode the page bytes, trying UTF-8 first and then GBK
        dammit = UnicodeDammit(data, ["utf-8", "gbk"])
        data = dammit.unicode_markup
        soup = BeautifulSoup(data, "lxml")
        images = soup.select("img")
        for image in images:
            try:
                src = image["src"]
                # Resolve relative src values against the page URL
                url = urllib.parse.urljoin(start_url, src)
                if url not in urls:
                    urls.append(url)
                    print(url)
                    download(url)
            except Exception as err:
                print(err)
    except Exception as err:
        print(err)


def download(url):
    global count
    try:
        count = count + 1
        # Take the extension from the URL path so query strings are ignored;
        # fall back to .png when there is none (the original instead forced
        # .png for image no. 39 only)
        ext = os.path.splitext(urllib.parse.urlparse(url).path)[1] or ".png"
        req = urllib.request.Request(url, headers=headers)
        data = urllib.request.urlopen(req, timeout=100).read()
        os.makedirs("images", exist_ok=True)
        with open(os.path.join("images", str(count) + ext), "wb") as fobj:
            fobj.write(data)
        print("downloaded " + str(count) + ext)
    except Exception as err:
        print(err)


start_url = "http://www.weather.com.cn/weather/101280601.shtml"
headers = {
    "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"
}
count = 0
imageSpider(start_url)
```
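Both crawlers rely on `urljoin` to turn each `<img>` tag's `src` into a URL that can be downloaded. The short demonstration below uses made-up `src` values, not values taken from the real page. It shows how four common forms resolve against the start URL:

```python
from urllib.parse import urljoin

page = "http://www.weather.com.cn/weather/101280601.shtml"

# Hypothetical src values, one for each form a crawler commonly meets
srcs = {
    "relative": "images/a.png",                      # relative to the page's directory
    "root_relative": "/m2/i/logo.png",               # relative to the site root
    "protocol_relative": "//img.example.com/b.jpg",  # reuses the page's scheme
    "absolute": "https://img.example.com/c.gif",     # already absolute, kept as is
}

resolved = {kind: urljoin(page, src) for kind, src in srcs.items()}
for kind, url in resolved.items():
    print(f"{kind:18} {url}")
```

Because of the last two cases, images hosted on other domains are downloaded too. To keep only same-site images, you would need an extra check on `urlparse(url).netloc`.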

Screenshot: single-threaded run results

Multithreaded code

```python
import os
import threading
import urllib.parse
import urllib.request

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit


def imageSpider(start_url):
    global threads
    global count
    try:
        urls = []  # image URLs already handled, used to skip duplicates
        req = urllib.request.Request(start_url, headers=headers)
        data = urllib.request.urlopen(req).read()
        # Decode the page bytes, trying UTF-8 first and then GBK
        dammit = UnicodeDammit(data, ["utf-8", "gbk"])
        data = dammit.unicode_markup
        soup = BeautifulSoup(data, "lxml")
        images = soup.select("img")
        for image in images:
            try:
                src = image["src"]
                url = urllib.parse.urljoin(start_url, src)
                if url not in urls:
                    urls.append(url)  # missing in the original, so duplicates were downloaded again
                    print(url)
                    count = count + 1
                    # One download thread per image; the file number is passed as an
                    # argument so the threads never touch the shared counter
                    T = threading.Thread(target=download, args=(url, count))
                    T.daemon = False  # setDaemon() is deprecated since Python 3.10
                    T.start()
                    threads.append(T)
            except Exception as err:
                print(err)
    except Exception as err:
        print(err)


def download(url, count):
    try:
        # Extension from the URL path; fall back to .png when there is none
        ext = os.path.splitext(urllib.parse.urlparse(url).path)[1] or ".png"
        req = urllib.request.Request(url, headers=headers)
        data = urllib.request.urlopen(req, timeout=100).read()
        with open(os.path.join("images", str(count) + ext), "wb") as fobj:
            fobj.write(data)
        print("downloaded " + str(count) + ext)
    except Exception as err:
        print(err)


start_url = "http://www.weather.com.cn/weather/101280601.shtml"
headers = {
    "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"
}
count = 0
threads = []
os.makedirs("images", exist_ok=True)  # create the output folder once, before any thread writes
imageSpider(start_url)
for t in threads:
    t.join()  # wait for every download thread to finish
print("The End")
```
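The multithreaded version starts one thread per image, so a page with many images opens that many connections at once. A common alternative is a bounded thread pool from the standard `concurrent.futures` module. The sketch below assumes a `download(url, count)` function like the one above.

- `crawl_with_pool` and `fake_download` are hypothetical names.
- `fake_download` does no network I/O, so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_download(url, count):
    """Hypothetical stand-in for download(url, count): returns what it would save."""
    return f"{count}:{url}"


def crawl_with_pool(urls, download, max_workers=8):
    """Run download(url, count) for each unique URL on at most max_workers threads."""
    unique = list(dict.fromkeys(urls))  # drop duplicates, keep first-seen order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # File numbers are assigned here in the main thread, so no shared counter is needed
        futures = [pool.submit(download, url, i) for i, url in enumerate(unique, start=1)]
        # result() blocks until each task is done, much like join() on a thread
        return [f.result() for f in futures]


results = crawl_with_pool(
    ["http://a/1.png", "http://a/2.jpg", "http://a/1.png"], fake_download, max_workers=2
)
print(results)
```

In the crawler, `crawl_with_pool(image_urls, download)` would take the place of the thread list and the final `join()` loop. `max_workers` caps how many images download at the same time.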

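Screenshot: multithreaded run results

Reflections

By reproducing the code from the textbook, I learned how to crawl data using multiple threads.

Assignment 2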

Requirements

Reproduce Assignment 1 using the Scrapy framework.

Approach

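1. Write the item class, defining its fields to match the information being scraped.

2. Write the spider. It is essentially the same as in Assignment 1; the main addition is the `yield` keyword, which hands each item over to Scrapy.

3. Configure the settings file.

4. Write the item pipeline class, which processes the data passed on by the spider and outputs it as needed.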
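Step 3 matters because Scrapy only calls a pipeline that is listed in `ITEM_PIPELINES`. Below is a minimal `settings.py` fragment. It assumes the project package is `getpicture`, as the spider's `from getpicture.items import PictureItem` import suggests. The priority value and the `USER_AGENT` line are illustrative choices, not the author's actual configuration.

```python
# settings.py (fragment): assumed values for the getpicture project, not the author's file
BOT_NAME = "getpicture"

# Same User-Agent string the urllib versions send
USER_AGENT = "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"

# Register the pipeline; lower numbers run first (values are conventionally 0-1000)
ITEM_PIPELINES = {
    "getpicture.pipelines.GetpicturePipeline": 300,
}
```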

Single-threaded code

- Item class (items.py)

```python
import scrapy


class PictureItem(scrapy.Item):
    # Absolute URL of one image found on the page
    url = scrapy.Field()
```

- Spider (MySpider)

```python
import scrapy
from bs4 import UnicodeDammit

from getpicture.items import PictureItem


class MySpider(scrapy.Spider):
    name = "mySpider"

    def start_requests(self):
        url = "http://www.weather.com.cn/weather/101280601.shtml"
        yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):
        try:
            # Decode the raw body, trying UTF-8 first and then GBK
            dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            selector = scrapy.Selector(text=data)
            srcs = selector.xpath("//img/@src")
            for src in srcs:
                # Resolve relative src values so the pipeline receives downloadable URLs
                url = response.urljoin(src.extract())
                print(url)
                item = PictureItem()
                item["url"] = url
                yield item  # hand the item over to the pipeline
        except Exception as err:
            print(err)
```

- Item pipeline class (pipelines.py)

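```python
import os
import urllib.request


class GetpicturePipeline:
    count = 0     # number of images saved so far
    urllist = []  # URLs already downloaded, used to skip duplicates

    def process_item(self, item, spider):
        try:
            os.makedirs("images", exist_ok=True)
            if item["url"] not in GetpicturePipeline.urllist:
                # Record the URL and advance the counter only for new images
                GetpicturePipeline.urllist.append(item["url"])
                GetpicturePipeline.count += 1
                data = urllib.request.urlopen(item["url"]).read()
                path = os.path.join("images", str(GetpicturePipeline.count) + ".jpg")
                with open(path, "wb") as f:
                    f.write(data)
        except Exception as err:
            print(err)
        return item
```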

Screenshot: single-threaded run results

Multithreaded code

```python
import os
import threading
import urllib.parse
import urllib.request

import scrapy
from bs4 import UnicodeDammit


class MySpider(scrapy.Spider):
    name = "mySpider"
    start_urls = ["http://www.weather.com.cn/weather/101280601.shtml"]
    count = 0
    threads = []
    headers = {
        "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"
    }

    def parse(self, response):
        try:
            dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            selector = scrapy.Selector(text=data)
            srcs = selector.xpath("//img/@src")
            for src in srcs:
                url = response.urljoin(src.extract())  # make relative src values absolute
                print(url)
                self.count = self.count + 1
                # Download each image on its own thread
                T = threading.Thread(target=self.download, args=(url, self.count))
                T.daemon = False
                T.start()
                self.threads.append(T)
        except Exception as err:
            print(err)

    def closed(self, reason):
        # Called by Scrapy when the spider finishes: wait for all download threads.
        # The original join loop sat in the class body, so it ran once at class
        # definition time, while the thread list was still empty.
        for t in self.threads:
            t.join()

    def download(self, url, count):
        try:
            ext = os.path.splitext(urllib.parse.urlparse(url).path)[1]
            os.makedirs("images", exist_ok=True)
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req, timeout=100).read()
            with open(os.path.join("images", str(count) + ext), "wb") as fobj:
                fobj.write(data)
            print("downloaded " + str(count) + ext)
        except Exception as err:
            print(err)
```
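Scrapy already downloads asynchronously on a single thread, using Twisted. The number of requests in flight is therefore normally tuned in `settings.py` rather than by starting threads.

The hedged fragment below uses illustrative values, not the author's configuration; Scrapy's documented defaults are 16, 8 and 0. These settings only govern requests that go through Scrapy itself. The `urllib` downloads in the threaded spider above bypass them, so the images would have to be fetched as Scrapy requests for the settings to apply.

```python
# settings.py (fragment): illustrative values, not the author's configuration
CONCURRENT_REQUESTS = 32             # total requests in flight (default 16)
CONCURRENT_REQUESTS_PER_DOMAIN = 16  # cap per domain (default 8)
DOWNLOAD_DELAY = 0                   # seconds to wait between pages from the same site
```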

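Screenshot: multithreaded run results

Reflections

1. I became familiar with XPath; in practice it feels more flexible and convenient to use.

2. I gained a deeper understanding of the Scrapy framework and how it works internally, and became comfortable using it.

Assignment 3

Requirements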

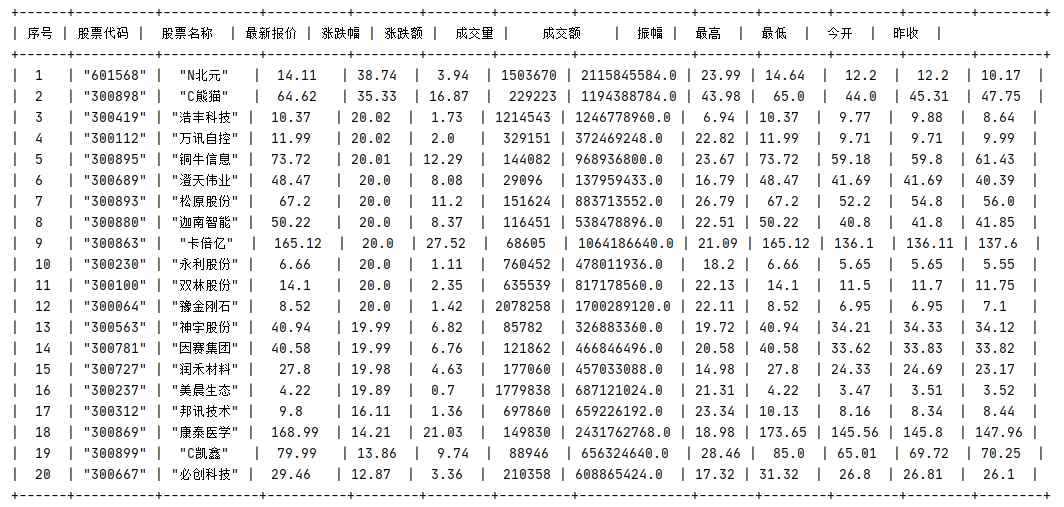

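Use the Scrapy framework to crawl stock information.

Candidate websites

Eastmoney: https://www.eastmoney.com/

Sina Finance stocks: http://finance.sina.com.cn/stock/

Approach

I had already written code to scrape stock data, so it only needed small changes. The main work was setting up and using the Scrapy framework.

Code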

- Item class (items.py)

```python
import scrapy


class GetstockItem(scrapy.Item):
    index = scrapy.Field()        # row number
    code = scrapy.Field()         # stock code
    name = scrapy.Field()         # stock name
    latestPrice = scrapy.Field()  # latest price
    upDownRange = scrapy.Field()  # change (%)
    upDownPrice = scrapy.Field()  # change (amount)
    turnover = scrapy.Field()     # volume
    turnoverNum = scrapy.Field()  # turnover (amount)
    amplitude = scrapy.Field()    # amplitude
    highest = scrapy.Field()      # day high
    lowest = scrapy.Field()       # day low
    today = scrapy.Field()        # today's open
    yesterday = scrapy.Field()    # previous close
```

- Spider (MySpider)

```python
import re

import scrapy

from getstock.items import GetstockItem


class MySpider(scrapy.Spider):
    name = "mySpider"

    def start_requests(self):
        url = 'http://19.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112403324490377009397_1602209502288&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f12,f14,f2,f3,f4,f5,f6,f7,f15,f16,f17,f18&_=1602209502289'
        yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):
        try:
            r = response.body.decode()
            # Pull the contents of the "diff" array out of the JSONP text
            pat = r'"diff":\[(.*?)\]'
            data = re.compile(pat, re.S).findall(r)
            # One string per stock: split between records, then drop the outer braces
            datas = data[0].split("},{")
            datas[0] = datas[0].replace("{", "")
            datas[-1] = datas[-1].replace("}", "")
            for i in range(len(datas)):
                # Each record reads "f2":...,"f3":...; keep the value after each ":"
                # and strip the quotes around string values such as code and name
                values = [kv.split(":")[1].strip('"') for kv in datas[i].split(",")]
                item = GetstockItem()
                item["index"] = i + 1
                item["code"] = values[6]
                item["name"] = values[7]
                item["latestPrice"] = values[0]
                item["upDownRange"] = values[1]
                item["upDownPrice"] = values[2]
                item["turnover"] = values[3]
                item["turnoverNum"] = values[4]
                item["amplitude"] = values[5]
                item["highest"] = values[8]
                item["lowest"] = values[9]
                item["today"] = values[10]
                item["yesterday"] = values[11]
                yield item
        except Exception as err:
            print(err)
```

- Item pipeline class (pipelines.py)

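```python
import prettytable as pt


class GetstockPipeline:
    # Columns: No., code, name, latest price, change %, change, volume, turnover,
    # amplitude, high, low, open, previous close
    tb = pt.PrettyTable(["序号", "股票代码", "股票名称", "最新报价", "涨跌幅", "涨跌额", "成交量", "成交额", "振幅", "最高", "最低", "今开", "昨收"])

    def process_item(self, item, spider):
        # One row per stock, in the same order as the column headers
        self.tb.add_row(
            [item["index"], item["code"], item["name"], item["latestPrice"], item["upDownRange"], item["upDownPrice"],
             item["turnover"], item["turnoverNum"], item["amplitude"], item["highest"], item["lowest"], item["today"],
             item["yesterday"]])
        return item

    def close_spider(self, spider):
        # Print the table once, after every item has been added
        # (the original printed it from the spider on every loop iteration)
        print(self.tb)
```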
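The spider's `parse` method cuts the response apart with a regular expression and `split`. This depends on the exact field order and breaks if a value ever contains a comma. The API returns JSONP: JSON wrapped in the callback named by the `cb` query parameter. An alternative is therefore to strip the wrapper and use the standard `json` module. The sketch below makes three assumptions:

- The payload has the shape `{"data": {"diff": [ {...}, ... ]}}` implied by the original regex, with `diff` a list as requested by `np=1`.
- The field codes map to the item fields in the order the original `split` indices use.
- The sample string is made up for illustration, not real market data.

```python
import json
import re

# API field code -> item field, matching the positions the original split() relies on
FIELD_MAP = {
    "f2": "latestPrice", "f3": "upDownRange", "f4": "upDownPrice",
    "f5": "turnover", "f6": "turnoverNum", "f7": "amplitude",
    "f12": "code", "f14": "name", "f15": "highest",
    "f16": "lowest", "f17": "today", "f18": "yesterday",
}


def parse_jsonp(text):
    """Strip a callback(...) wrapper if present and return one dict per stock."""
    match = re.match(r"[^(]*\((.*)\)\s*;?\s*$", text, re.S)
    payload = json.loads(match.group(1) if match else text)
    rows = []
    for i, record in enumerate(payload["data"]["diff"], start=1):
        row = {"index": i}
        for code, field in FIELD_MAP.items():
            row[field] = record.get(code)  # None if the API omitted the field
        rows.append(row)
    return rows


# Made-up response in the assumed shape; not real market data
sample = ('jQuery123_456({"rc":0,"data":{"total":1,"diff":[{"f2":10.5,"f3":1.2,'
          '"f4":0.12,"f5":1000,"f6":10500.0,"f7":2.0,"f12":"000001","f14":"Sample",'
          '"f15":10.6,"f16":10.3,"f17":10.4,"f18":10.38}]}});')
rows = parse_jsonp(sample)
print(rows[0]["code"], rows[0]["name"], rows[0]["latestPrice"])
```

Inside `parse`, the same function could be called on `response.text`, copying each returned dict into a `GetstockItem`.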

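Screenshot: run results

Reflections

1. I became more familiar with using the Scrapy framework.

2. I tried the alignment method a senior student shared, but for some reason the columns still did not line up well, so I ended up using prettytable. I plan to try again later to get the output perfectly aligned.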
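Misalignment like this usually comes from Chinese characters occupying two columns in a terminal, while `str.format` counts them as one. prettytable measures display width itself (it relies on the `wcwidth` package), which is likely why it lined up. The sketch below is an independent approach using only the standard `unicodedata` module; it is not necessarily the method mentioned above. It pads each field by display width instead of character count:

```python
import unicodedata


def display_width(text):
    """Terminal columns a string occupies: wide (W) and fullwidth (F) characters count as 2."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in text)


def pad(text, width):
    """Left-align text in a field `width` display columns wide."""
    return text + " " * max(0, width - display_width(text))


# Sample strings mixing Chinese and ASCII characters
for label, name in [("row1", "平安银行"), ("row2", "万科A"), ("row3", "Sample")]:
    print(pad(label, 8) + pad(name, 10) + "|")
```

The `|` at the end of each printed line should land in the same column in a terminal that renders CJK characters at double width.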

浙公网安备 33010602011771号
