Spark job deployment modes

A Spark job can be deployed in one of two modes, client or cluster, selected with the --deploy-mode option:

spark-submit .. --deploy-mode client | cluster
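The two modes differ in more than the flag. With --deploy-mode cluster the driver starts on a worker node, so the application jar must be readable from every node. An hdfs:// path satisfies this, which is why the jar is uploaded to HDFS below. In client mode, a local jar on the submitting host is enough. The following is a minimal sketch of a hypothetical helper, build_submit_cmd, which is not part of Spark. It only prints the command for each mode and does not run anything. It reuses the class, master URL and paths from this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): prints the spark-submit command
# for the given deploy mode without executing it.
build_submit_cmd() {
  local mode="$1"
  local class="com.share.scala.mr.TaggenCluster"
  local master="spark://s101:7077"
  local input="/user/centos/temptags.txt"
  local jar
  case "$mode" in
    # client: the driver runs on this host, so a local jar is enough
    client)  jar="myspark.jar" ;;
    # cluster: the driver runs on a worker, so the jar must be on HDFS
    cluster) jar="hdfs://mycluster/user/centos/data/myspark.jar" ;;
    *) echo "usage: build_submit_cmd client|cluster" >&2; return 1 ;;
  esac
  echo "spark-submit --class $class --master $master --deploy-mode $mode $jar $input"
}
```

Client mode is the default, so the printed client command behaves like one that omits --deploy-mode.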

【Upload the jar to HDFS】

[centos@s101 ~]$ hdfs dfs -put myspark.jar data

【Client】

This is the default mode. The driver runs on the client host, i.e. the machine where spark-submit is invoked.

spark-submit --class com.share.scala.mr.TaggenCluster --master spark://s101:7077 myspark.jar /user/centos/temptags.txt

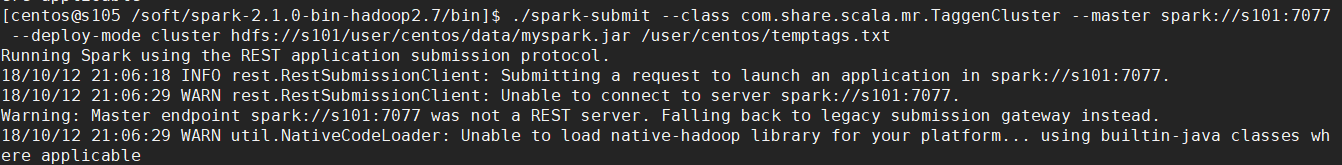

【Cluster】

The driver runs on one of the Worker nodes. The client only submits the job.

spark-submit --class com.share.scala.mr.TaggenCluster --master spark://s101:7077 --deploy-mode cluster hdfs://mycluster/user/centos/data/myspark.jar /user/centos/temptags.txt

[centos@s101 ~]$ xcall.sh jps
==================== s101 jps ===================
2981 Master
2568 NameNode
2889 DFSZKFailoverController
3915 Jps
==================== s102 jps ===================
2961 CoarseGrainedExecutorBackend
2450 Worker
2325 JournalNode
2246 DataNode
2187 QuorumPeerMain
3005 Jps
==================== s103 jps ===================
2457 Worker
2331 JournalNode
2188 QuorumPeerMain
3292 CoarseGrainedExecutorBackend
2253 DataNode
3310 Jps
==================== s104 jps ===================
2193 QuorumPeerMain
2981 DriverWrapper
3094 Jps
2455 Worker
2328 JournalNode
2252 DataNode
3038 CoarseGrainedExecutorBackend

The DriverWrapper process on s104 is the driver. In cluster mode it was launched on a Worker node, not on s101, where spark-submit was run.
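Instead of scanning the jps listing by eye, a small filter can report which host's section contains DriverWrapper. This is a minimal sketch that assumes the xcall.sh output format shown above. xcall.sh is the author's own cluster script, and find_driver_host is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `xcall.sh jps` output on stdin and prints the
# host whose section lists a DriverWrapper process (the cluster-mode driver).
find_driver_host() {
  awk '
    # Section header such as "==================== s104 jps ===================":
    # remember the host name (second field).
    /^=+ .* jps =+$/ { host = $2; next }
    # Process line "<pid> <name>": report the current host for the driver.
    $2 == "DriverWrapper" { print host }
  '
}
```

Usage: `xcall.sh jps | find_driver_host`. For the listing above, this should print s104.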

[centos@s105 /soft/spark-2.1.0-bin-hadoop2.7/bin]$ ./spark-submit --class com.share.scala.mr.TaggenCluster --master spark://s101:7077 --deploy-mode cluster hdfs://s101/user/centos/data/myspark.jar /user/centos/temptags.txt

浙公网安备 33010602011771号