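Spring Cloud Alibaba microservices + Seata in practice

Nacos + Seata (high-availability Seata deployment with Docker and Rancher)

Note: these are my notes on integrating Seata.

1) Overall setup

- Parent POM dependencies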

<properties>

<!--Project build environment-->

<java.version>1.8</java.version>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<maven.compiler.encoding>UTF-8</maven.compiler.encoding>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<!--Dependency version management-->

<fastjson.version>1.2.73</fastjson.version>

<commons-lang3.version>3.11</commons-lang3.version>

<spring-boot.version>2.2.10.RELEASE</spring-boot.version>

<spring-cloud.version>Hoxton.SR8</spring-cloud.version>

<spring-cloud-alibaba.version>2.2.3.RELEASE</spring-cloud-alibaba.version>

<mysql-connector.version>8.0.16</mysql-connector.version>

<druid-spring-boot-starter.version>1.2.4</druid-spring-boot-starter.version>

<mybatis-plus-boot-starter.version>3.4.1</mybatis-plus-boot-starter.version>

</properties>

<dependencyManagement>

<dependencies>

<!-- SpringBoot -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring-boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- SpringCloud -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring-cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- SpringCloud Alibaba -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring-cloud-alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- MySQL JDBC driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>${mysql-connector.version}</version>

</dependency>

<!-- MyBatis-Plus (MyBatis enhancement) Spring Boot starter -->

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>${mybatis-plus-boot-starter.version}</version>

</dependency>

<!-- Alibaba Druid connection pool -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>${druid-spring-boot-starter.version}</version>

</dependency>

<!-- Alibaba FastJson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>${fastjson.version}</version>

</dependency>

<!-- Apache Commons Lang: utilities for basic data types -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>${commons-lang3.version}</version>

</dependency>

<!--Lombok-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.22</version>

<scope>provided</scope>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

- Child module dependencies

<dependencies>

<!-- nacos -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!-- seata-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<!-- Exclude the starter's own seata-all because of version compatibility issues -->

<exclusions>

<exclusion>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

</exclusion>

</exclusions>

</dependency>

<!--Use the seata-all version that matches our Seata server instead of the starter's default-->

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>1.3.0</version>

</dependency>

<!--openfeign-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

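<!-- no explicit version: let spring-cloud-dependencies (Hoxton.SR8) manage it; 3.1.0 belongs to the Spring Cloud 2021.0 release train -->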

</dependency>

<!--Web starter-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.4.3.2</version>

</dependency>

<!--alibaba druid-->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

</dependency>

<!--mysql-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.25</version>

</dependency>

<!--jdbc-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

<!-- no explicit version: let spring-boot-dependencies (2.2.10.RELEASE) manage it instead of mixing in 2.6.1 -->

</dependency>

<!--lombok-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<!--seata_account service-->

<dependency>

<groupId>org.example</groupId>

<artifactId>seata_account</artifactId>

<version>1.0-SNAPSHOT</version>

<scope>compile</scope>

</dependency>

<!--seata_storage service-->

<dependency>

<groupId>org.example</groupId>

<artifactId>seata_storage</artifactId>

<version>1.0-SNAPSHOT</version>

<scope>compile</scope>

</dependency>

</dependencies>

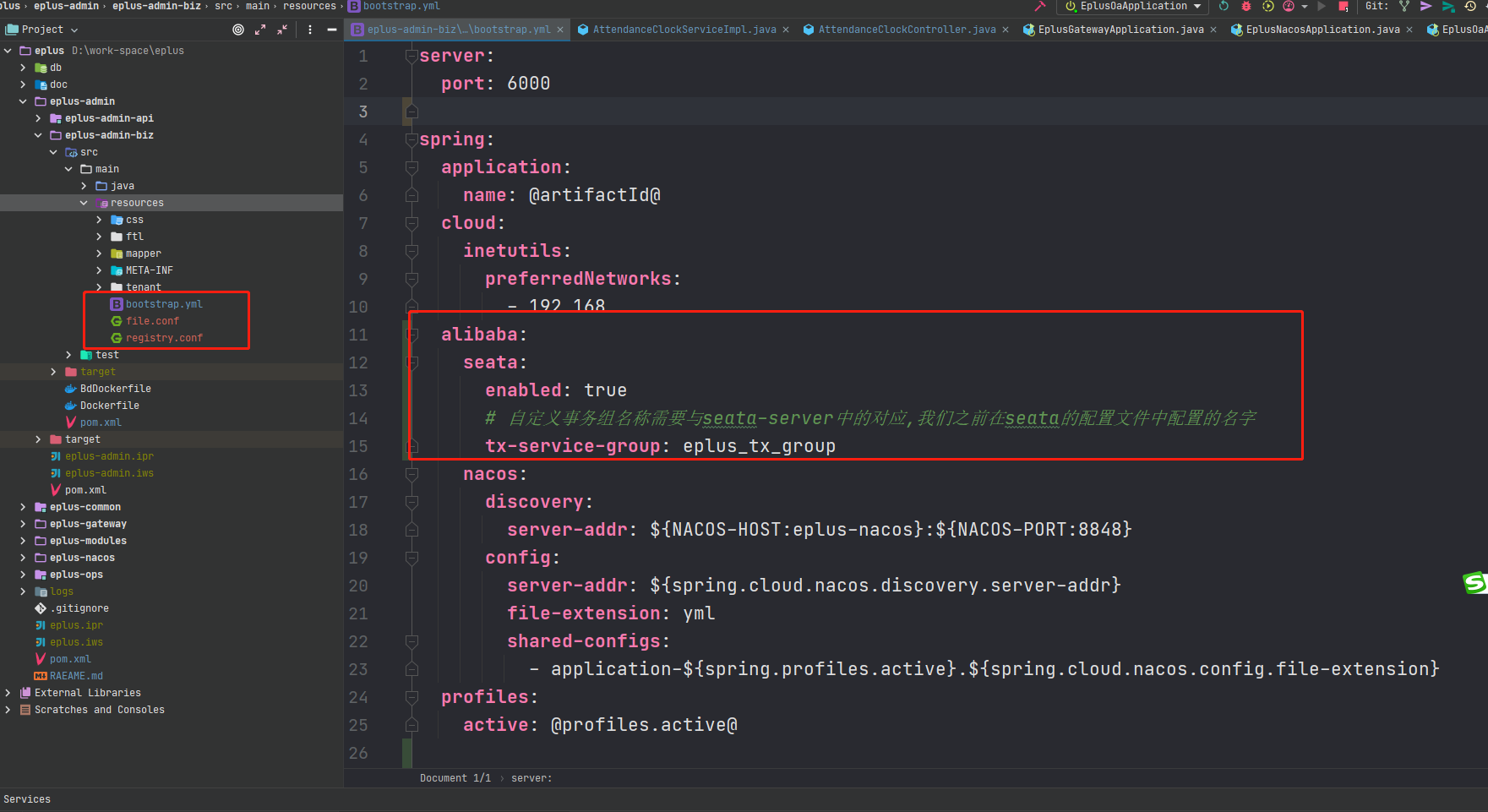

Microservice configuration files

Note: if you run into problem 1 (see Common problems), add file.conf and registry.conf to the microservice.

- bootstrap.yml

server:
  port: 7630
spring:
  application:
    name: account
  cloud:
    alibaba:
      seata:
        # custom transaction group name; must match the group configured for seata-server
        tx-service-group: order_tx_group
    nacos:
      discovery:
        server-addr: localhost:8860
        # namespace: ffee2bbe-5ba5-4e6e-a822-6a00abe11769
  datasource:
    # data source implementation
    type: com.alibaba.druid.pool.DruidDataSource
    # MySQL driver class
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://localhost:3306/seata_account?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%2B8
    username: root
    password: 123
logging:
  level:
    io:
      seata: info
mybatis:
  mapperLocations: classpath*:mapper/*.xml

- file.conf

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

#thread factory for netty

thread-factory {

boss-thread-prefix = "NettyBoss"

worker-thread-prefix = "NettyServerNIOWorker"

server-executor-thread-prefix = "NettyServerBizHandler"

share-boss-worker = false

client-selector-thread-prefix = "NettyClientSelector"

client-selector-thread-size = 1

client-worker-thread-prefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

boss-thread-size = 1

#auto default pin or 8

worker-thread-size = 8

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

#vgroup->rgroup

vgroupMapping.order_tx_group = "default"

#only support single node

default.grouplist = "127.0.0.1:8091"

#degrade current not support

enableDegrade = false

#disable

disable = false

#unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent

max.commit.retry.timeout = "-1"

max.rollback.retry.timeout = "-1"

}

client {

async.commit.buffer.limit = 10000

lock {

retry.internal = 10

retry.times = 30

}

report.retry.count = 5

}

## transaction log store, only used in seata-server

store {

## store mode: file、db、redis

mode = "db"

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.

datasource = "druid"

## mysql/oracle/postgresql/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.cj.jdbc.Driver"

url = "jdbc:mysql://172.16.1.240:33067/eplus_seata?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8"

user = "root"

password = "xxx"

minConn = 5

maxConn = 30

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

maxWait = 5000

}

}
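The `service` block resolves the TC address in two steps. First, the client's transaction group (`order_tx_group`) is mapped by `vgroupMapping` to a cluster name (`default`). Then `<cluster>.grouplist` gives that cluster's `ip:port` list. The sketch below imitates this lookup over a plain map of the flattened keys. `TcAddressLookup` is a hypothetical class, not Seata's API. Splitting several addresses on `;` is our assumption about the list format.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper (not Seata's API) imitating the file-registry lookup:
// tx-service-group -> cluster name -> "ip:port" endpoints.
class TcAddressLookup {

    static List<InetSocketAddress> lookup(Map<String, String> config, String txServiceGroup) {
        // Step 1: service.vgroupMapping.<group> names the TC cluster, e.g. "default".
        String cluster = config.get("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no service.vgroupMapping." + txServiceGroup);
        }
        // Step 2: service.<cluster>.grouplist lists the TC addresses (assumed ';'-separated).
        String grouplist = config.get("service." + cluster + ".grouplist");
        if (grouplist == null || grouplist.trim().isEmpty()) {
            throw new IllegalStateException("service." + cluster + ".grouplist is required");
        }
        List<InetSocketAddress> endpoints = new ArrayList<>();
        for (String endpoint : grouplist.split(";")) {
            String[] ipAndPort = endpoint.trim().split(":");
            if (ipAndPort.length != 2) {
                throw new IllegalArgumentException("endpoint format should like ip:port");
            }
            // createUnresolved skips DNS; only host and port matter here.
            endpoints.add(InetSocketAddress.createUnresolved(ipAndPort[0], Integer.parseInt(ipAndPort[1])));
        }
        return endpoints;
    }

    public static void main(String[] args) {
        Map<String, String> config = new HashMap<>();
        config.put("service.vgroupMapping.order_tx_group", "default");
        config.put("service.default.grouplist", "127.0.0.1:8091");
        System.out.println(lookup(config, "order_tx_group"));
    }
}
```

If the group name in `bootstrap.yml` and the `vgroupMapping` key differ, the lookup fails at step 1. A grouplist entry that is not in `ip:port` form fails at step 2, and the sketch reports the same message as item 1 under Common problems.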

- registry.conf

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "127.0.0.1:8860"

group = "SEATA_GROUP"

namespace = ""

cluster = "default"

username = "nacos"

password = "nacos"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "nacos"

nacos {

serverAddr = "127.0.0.1:8860"

namespace = ""

group = "SEATA_GROUP"

username = "nacos"

password = "nacos"

}

}

- undo_log table (create it in each business database that takes part in AT-mode transactions)

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

seata

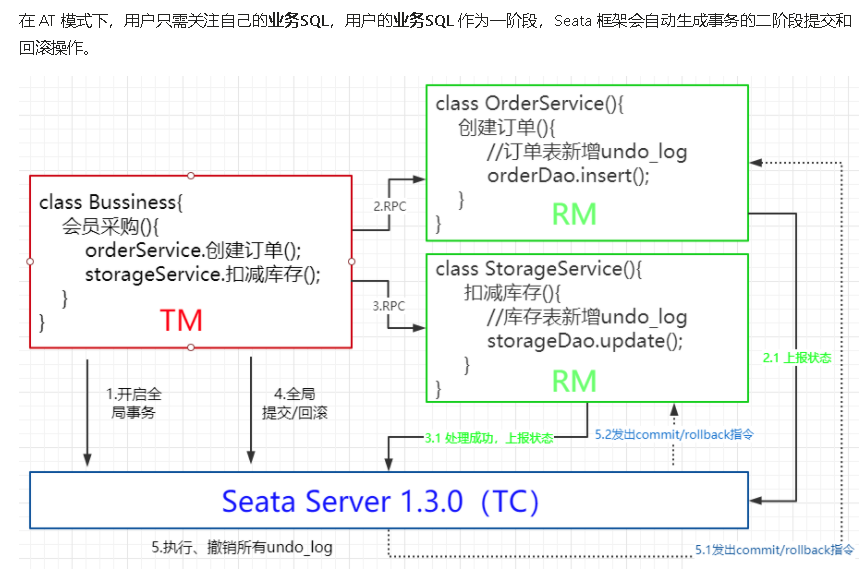

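AT mode

TC (Transaction Coordinator): maintains the state of global and branch transactions and drives the global commit or rollback.

TM (Transaction Manager): defines the scope of a global transaction. It begins the global transaction and commits or rolls it back.

RM (Resource Manager): manages the resources used by branch transactions. It talks to the TC to register branch transactions and report their status, and it drives branch commit or rollback.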
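To make the division of labour concrete, here is a toy in-memory model of the three roles. The TM opens a global transaction and decides its outcome, each RM registers a branch, and the TC drives commit or rollback on every registered branch. All class and method names are hypothetical. Real Seata does this over the network and persists the state in `global_table` and `branch_table`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// Toy, in-memory model of the three AT-mode roles; every name is hypothetical.
class SeataRolesDemo {

    enum Status { BEGIN, COMMITTED, ROLLED_BACK }

    /** RM: owns one resource (e.g. one business database) and runs branch commit/rollback. */
    static class ResourceManager {
        final String resourceId;
        final List<String> events = new ArrayList<>();

        ResourceManager(String resourceId) { this.resourceId = resourceId; }

        void branchCommit(long branchId) { events.add("commit " + branchId); }

        void branchRollback(long branchId) { events.add("rollback " + branchId); }
    }

    /** TC: keeps global and branch state and drives phase two on every branch. */
    static class TransactionCoordinator {
        final Map<String, Status> globalStatus = new LinkedHashMap<>();
        final Map<String, List<Long>> branchesByXid = new LinkedHashMap<>();
        final Map<Long, ResourceManager> rmByBranch = new LinkedHashMap<>();
        private long nextBranchId = 1;

        String begin() {
            String xid = UUID.randomUUID().toString(); // the global transaction ID (XID)
            globalStatus.put(xid, Status.BEGIN);
            branchesByXid.put(xid, new ArrayList<>());
            return xid;
        }

        long registerBranch(String xid, ResourceManager rm) {
            long branchId = nextBranchId++;
            branchesByXid.get(xid).add(branchId);
            rmByBranch.put(branchId, rm);
            return branchId;
        }

        Status end(String xid, boolean commit) {
            for (long branchId : branchesByXid.get(xid)) {
                ResourceManager rm = rmByBranch.get(branchId);
                if (commit) {
                    rm.branchCommit(branchId);
                } else {
                    rm.branchRollback(branchId);
                }
            }
            Status status = commit ? Status.COMMITTED : Status.ROLLED_BACK;
            globalStatus.put(xid, status);
            return status;
        }
    }

    /** TM: opens the global transaction around business code and decides commit or rollback. */
    static Status runGlobal(TransactionCoordinator tc, List<ResourceManager> rms, boolean businessOk) {
        String xid = tc.begin();
        for (ResourceManager rm : rms) {
            tc.registerBranch(xid, rm); // phase one: each RM registers its branch with the TC
        }
        return tc.end(xid, businessOk);
    }
}
```

Calling `runGlobal` with `businessOk = false` makes the TC call `branchRollback` on every branch that registered. In miniature, this is what happens when a `@GlobalTransactional` method throws.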

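1) Download Seata (see "Seata download center" under Related links)

Version compatibility (Nacos and Seata versions): https://github.com/alibaba/spring-cloud-alibaba/wiki/版本说明

2) Edit the files

- file.conf

Here the db store mode is used.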

## transaction log store, only used in seata-server

service {

#transaction service group mapping

#name of the transaction group used in this test

vgroupMapping.order_tx_group = "default"

#only support when registry.type=file, please don't set multiple addresses

#address and port of the default group; several addresses may be listed

default.grouplist = "127.0.0.1:8091"

#disable seata

#disableGlobalTransaction = false means global transactions are enabled

disableGlobalTransaction = false

}

store {

## store mode: file、db、redis

mode = "db"

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.

datasource = "druid"

## mysql/oracle/postgresql/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.cj.jdbc.Driver"

url = "jdbc:mysql://172.16.1.240:33067/eplus_seata?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8"

user = "root"

password = "xxxx"

minConn = 5

maxConn = 30

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

maxWait = 5000

}

}

- registry.conf

Note: I use Nacos here. The other registry and config types were removed for brevity, but you can keep them.

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "127.0.0.1:8860"

group = "SEATA_GROUP"

namespace = ""

cluster = "default"

username = "nacos"

password = "nacos"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "nacos"

nacos {

serverAddr = "127.0.0.1:8860"

namespace = ""

group = "SEATA_GROUP"

username = "nacos"

password = "nacos"

}

}

- config.txt

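Note: only the essential entries are kept here. The others were removed because they are mostly defaults; you can keep them and adjust them as needed.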

service.vgroupMapping.order_tx_group=default

service.default.grouplist=127.0.0.1:8091

service.enableDegrade=false

service.disableGlobalTransaction=false

store.mode=db

store.db.datasource=druid

store.db.dbType=mysql

store.db.driverClassName=com.mysql.cj.jdbc.Driver

store.db.url=jdbc:mysql://172.16.1.240:33067/eplus_seata?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8

store.db.user=root

store.db.password=xxx

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

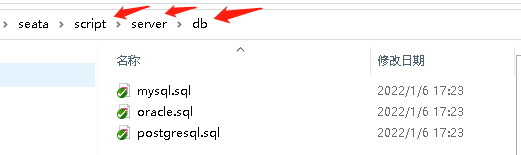

- seata-server数据库

去github下载seata,找到(如图:)目录下的sql文件,选择对应的

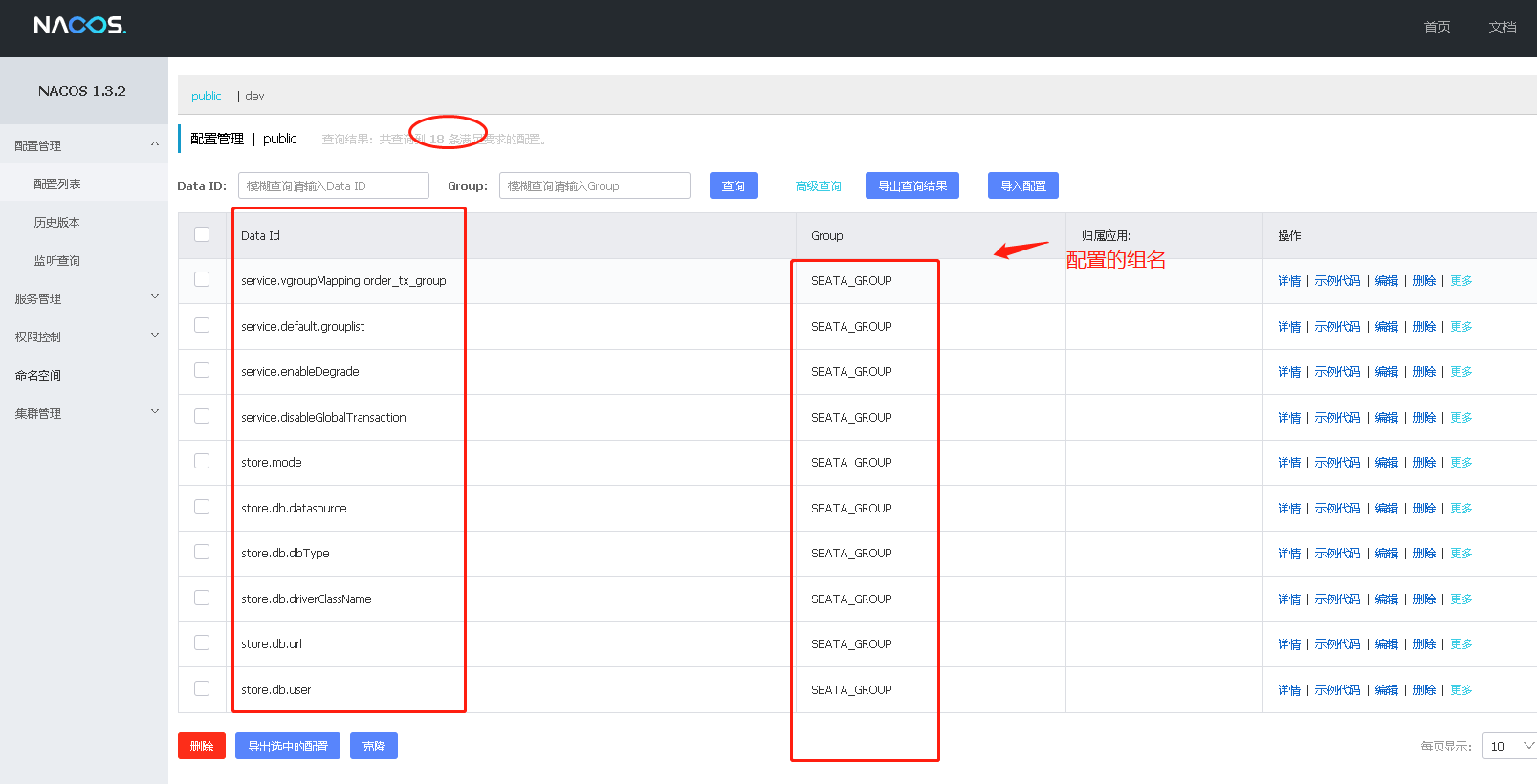

- 向nacos中注册配置并查看

打开git bash或linux类命令行,执行sh脚本(注意脚本是否有执行的权限)

nacos-config.sh -h localhost -p 8860 -g SEATA_GROUP -t f46bbdaa-f11e-414f-9530-e6a18cbf91f6 -u nacos -w nacos

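Parameters:

-h localhost -p 8860 (Nacos host and port)

-g SEATA_GROUP (group name)

-t f46bbdaa-f11e-414f-9530-e6a18cbf91f6 (namespace ID; without -t the public namespace is used)

-u nacos -w nacos (Nacos username and password)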
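As we understand `nacos-config.sh`, it reads `config.txt` line by line. Every `key=value` pair becomes a separate Nacos configuration item whose dataId is the key, in the group given by `-g` and the namespace given by `-t`. The sketch below reproduces that mapping without touching the network. `ConfigTxtToNacos` is hypothetical. Skipping `#` comment lines and URL-encoding the values are our own choices. The endpoint is assumed to be the Nacos open API `POST /nacos/v1/cs/configs`.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the config.txt -> Nacos mapping; it only builds strings.
class ConfigTxtToNacos {

    /** Splits each line at the FIRST '=' only, because values such as JDBC URLs contain '='. */
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> items = new LinkedHashMap<>();
        for (String raw : lines) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue; // skip blank lines and comments
            }
            int eq = line.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("not a key=value line: " + line);
            }
            items.put(line.substring(0, eq), line.substring(eq + 1));
        }
        return items;
    }

    /** Builds the publish URL for one item; namespaceId == null means the public namespace. */
    static String publishUrl(String host, int port, String group, String namespaceId,
                             String dataId, String content) {
        StringBuilder url = new StringBuilder("http://").append(host).append(':').append(port)
                .append("/nacos/v1/cs/configs")
                .append("?dataId=").append(encode(dataId))
                .append("&group=").append(encode(group))
                .append("&content=").append(encode(content));
        if (namespaceId != null) {
            url.append("&tenant=").append(encode(namespaceId)); // -t namespace ID
        }
        return url.toString();
    }

    private static String encode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Each line is split at the first `=` only, because values such as `store.db.url` contain `=` and `&` themselves. This is also why the sketch encodes the value before putting it into a query string.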

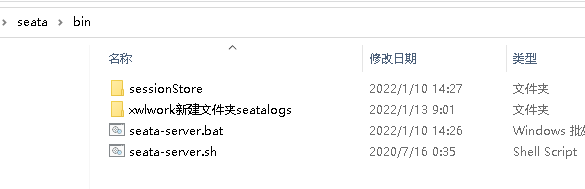

- 启动并查看

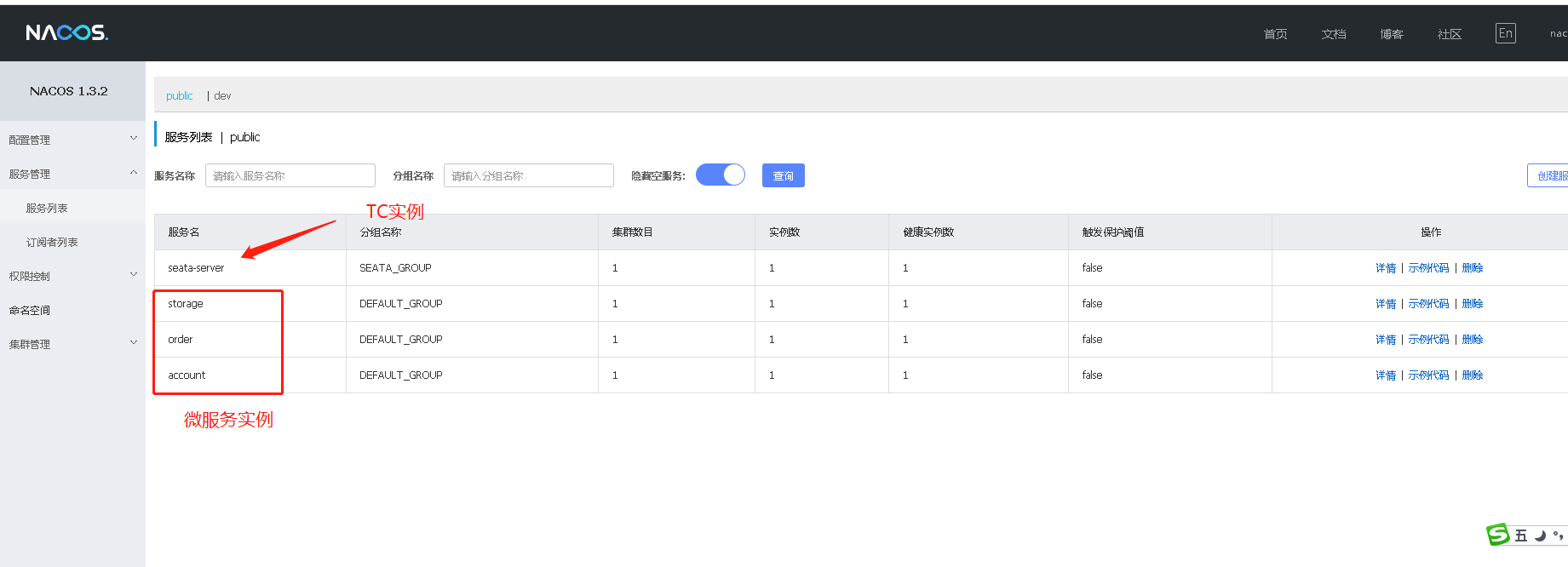

运行效果

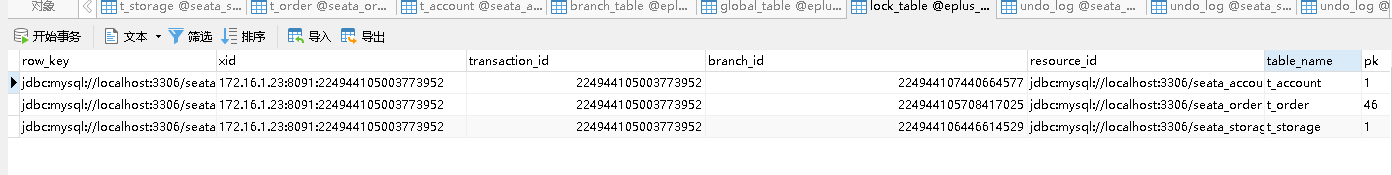

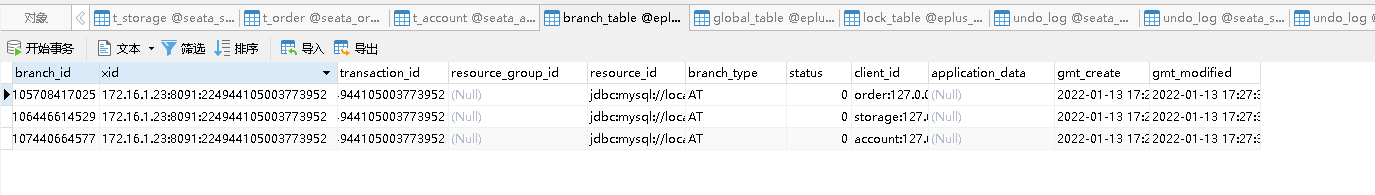

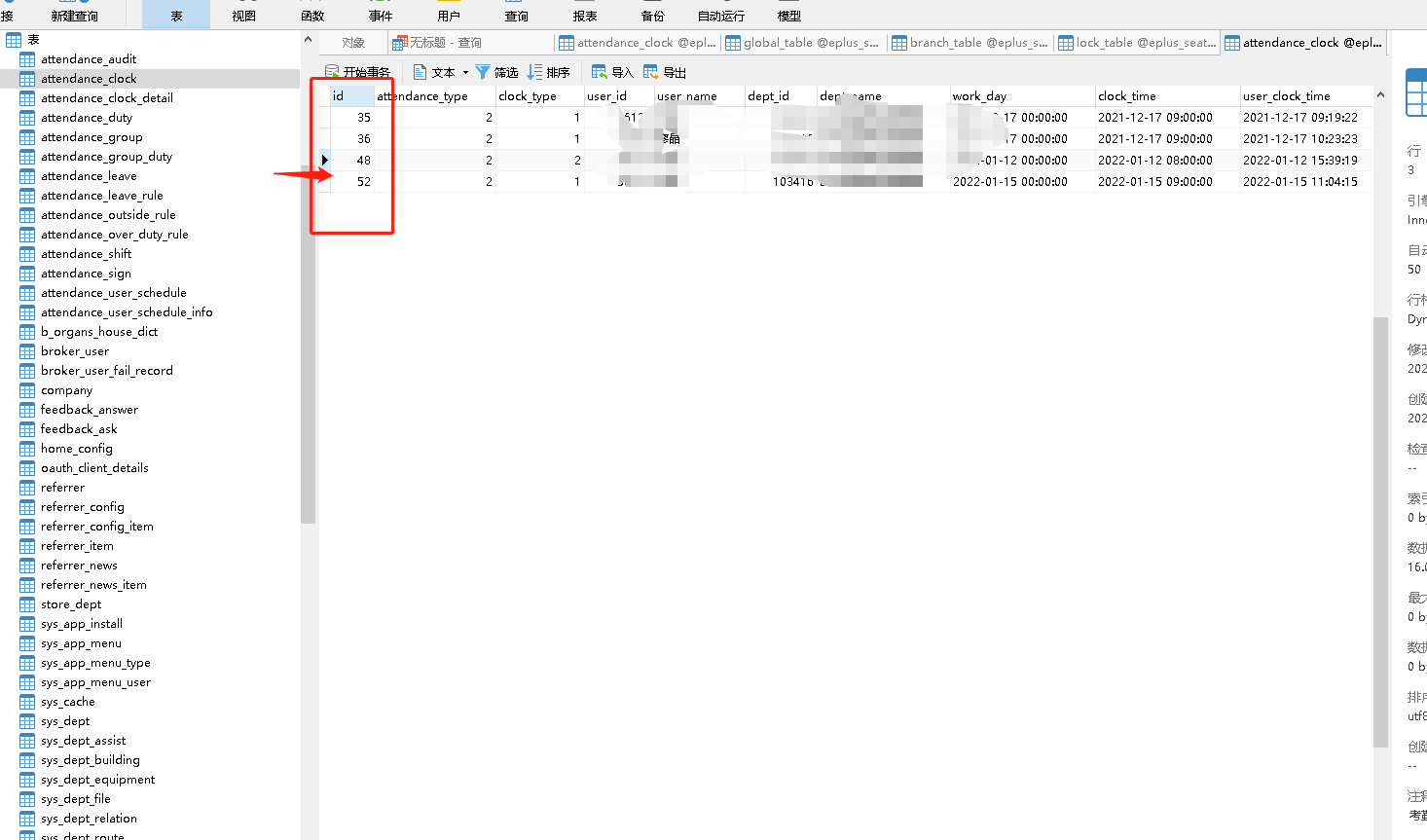

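Once the Seata server is set up (usually with the db store, as here), its database holds three tables: global_table, branch_table and lock_table. They record global transactions, branch transactions and global locks respectively.

global_table: every time a global transaction starts, its ID (XID) is recorded in this table.

branch_table: records the ID of each branch transaction, the database it operates on, and related information.

lock_table: holds the global locks that branches request.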
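`lock_table` is what gives AT mode its write isolation. Before a branch commits locally, the RM asks the TC for a global lock on each row it changed. Another global transaction that wants the same row has to wait and retry until the first one ends. The toy below models only that ownership rule. `GlobalLockTable` and its key format are hypothetical. The client-side retry interval and count correspond to the `client.lock` settings in the client `file.conf`.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory stand-in for lock_table: one entry per locked row, owned by an XID.
// Hypothetical names and key format; the real TC also stores branch IDs and persists rows.
class GlobalLockTable {
    private final Map<String, String> ownerByRowKey = new HashMap<>();

    private static String rowKey(String resourceId, String table, String pk) {
        return resourceId + "^" + table + "^" + pk;
    }

    /** True if xid now holds the row lock (newly or already); false if another XID holds it. */
    synchronized boolean acquire(String xid, String resourceId, String table, String pk) {
        String owner = ownerByRowKey.putIfAbsent(rowKey(resourceId, table, pk), xid);
        return owner == null || owner.equals(xid);
    }

    /** Phase two finished for this XID: drop every row lock it owns. */
    synchronized void releaseAll(String xid) {
        ownerByRowKey.values().removeIf(xid::equals);
    }
}
```

A `false` from `acquire` is the point where a real client would sleep for the retry interval and try again, up to the retry count, before failing the branch.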

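What happens in the Spring Cloud Alibaba project (the hands-on example):

Phase one:

1) A global transaction is started and its ID is recorded in global_table. Each branch transaction gets an ID in branch_table when it registers.

2) In each local transaction: query the before image -> run the business SQL -> query the after image -> insert the undo log.

Before image: the data as it was before the business SQL runs.

After image: the data after the business SQL runs. It is used during rollback to check whether another transaction has modified the data.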

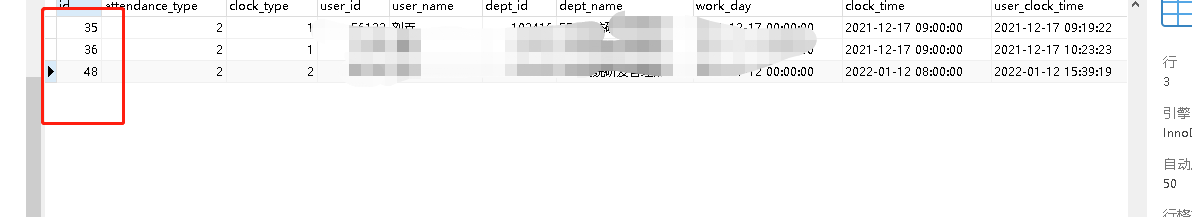

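Insert the undo log: the information from before and after the SQL runs is written to the database's undo_log table (as a JSON string) for use by a phase-two rollback.

3) Local commit: the business changes and the undo_log row are committed together in one local transaction.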
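Phase one can be sketched on an in-memory table. The sketch records the before image, applies the change, records the after image, and then makes the change and its undo log visible together. `AtPhaseOne` is a hypothetical toy for a single-row `UPDATE`. The real RM parses the SQL, reads the images with queries, and writes `undo_log` inside the same JDBC transaction.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of AT phase one for "UPDATE ... SET stock = stock - n WHERE id = pk". Hypothetical names.
class AtPhaseOne {

    /** One undo_log row: owning global/branch transaction plus before and after images. */
    static final class UndoLog {
        final String xid;
        final long branchId;
        final long pk;
        final int beforeImage;
        final int afterImage;

        UndoLog(String xid, long branchId, long pk, int beforeImage, int afterImage) {
            this.xid = xid;
            this.branchId = branchId;
            this.pk = pk;
            this.beforeImage = beforeImage;
            this.afterImage = afterImage;
        }
    }

    final Map<Long, Integer> stockTable = new HashMap<>(); // committed rows: pk -> stock
    final List<UndoLog> undoLogTable = new ArrayList<>();  // committed undo_log rows

    void deduct(String xid, long branchId, long pk, int n) {
        Map<Long, Integer> working = new HashMap<>(stockTable); // uncommitted local changes
        int before = working.get(pk);                          // 1. before image
        working.put(pk, before - n);                           // 2. business SQL
        int after = working.get(pk);                           // 3. after image
        UndoLog undo = new UndoLog(xid, branchId, pk, before, after); // 4. undo log row
        // 5. local commit: the data change and the undo_log row become visible together
        stockTable.putAll(working);
        undoLogTable.add(undo);
    }
}
```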

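Phase two:

1) Rollback: if any local transaction in phase one fails (an error, a timeout, etc.), a global rollback is performed.

The matching undo_log record is looked up by XID and branch ID, and the current data is compared with the after image. If they differ, another transaction has modified the data in the meantime. If they match, the rollback statement derived from the undo log is executed, and the local transaction is then committed.

2) Commit: when every local transaction in phase one has succeeded, the TM reports success to the TC (Transaction Coordinator), which commits the global transaction.

Each branch then deletes its corresponding undo_log records.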
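Phase two can be sketched the same way. A commit only deletes the branch's undo logs. A rollback first checks that each row still equals its after image, and only then restores the before image. `AtPhaseTwo` is hypothetical, and it simply reports `DIRTY` when the check fails. As far as we know, in Seata the branch rollback fails in that case and the dirty data has to be handled manually.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of AT phase two on an in-memory table. Hypothetical names.
class AtPhaseTwo {

    static final class UndoLog {
        final String xid;
        final long branchId;
        final long pk;
        final int beforeImage;
        final int afterImage;

        UndoLog(String xid, long branchId, long pk, int beforeImage, int afterImage) {
            this.xid = xid;
            this.branchId = branchId;
            this.pk = pk;
            this.beforeImage = beforeImage;
            this.afterImage = afterImage;
        }
    }

    enum Outcome { COMMITTED, ROLLED_BACK, DIRTY }

    final Map<Long, Integer> table = new HashMap<>(); // pk -> current committed value
    final List<UndoLog> undoLogs = new ArrayList<>();

    private static boolean belongsTo(UndoLog u, String xid, long branchId) {
        return u.xid.equals(xid) && u.branchId == branchId;
    }

    /** Global commit: data is already committed locally, so only the undo logs are removed. */
    Outcome commit(String xid, long branchId) {
        undoLogs.removeIf(u -> belongsTo(u, xid, branchId));
        return Outcome.COMMITTED;
    }

    /** Global rollback: verify against the after image, then restore the before image. */
    Outcome rollback(String xid, long branchId) {
        for (UndoLog u : undoLogs) {
            if (belongsTo(u, xid, branchId) && table.get(u.pk) != u.afterImage) {
                return Outcome.DIRTY; // changed outside this global transaction: do not overwrite
            }
        }
        for (UndoLog u : undoLogs) {
            if (belongsTo(u, xid, branchId)) {
                table.put(u.pk, u.beforeImage);
            }
        }
        undoLogs.removeIf(u -> belongsTo(u, xid, branchId));
        return Outcome.ROLLED_BACK;
    }
}
```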

Seata parameters and concurrency issues

https://www.csdn.net/tags/NtTakg4sMTY5NzMtYmxvZwO0O0OO0O0O.html

Common problems

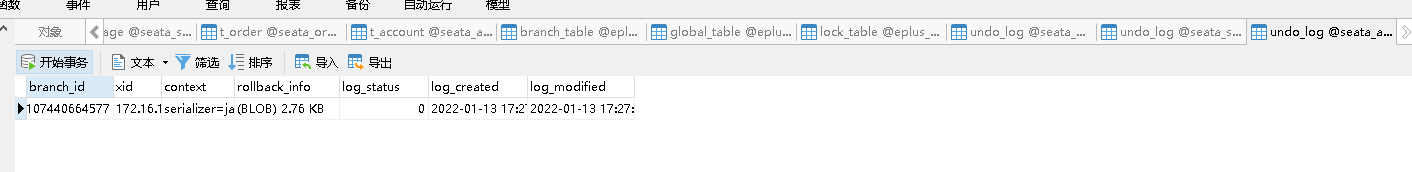

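1. Error: endpoint format should like ip:port

Fix (option 2 is recommended):

Option 1: add file.conf and registry.conf to every microservice that registers global transactions. Their content is similar to the server's, and the .yml configuration is shown in the figure below.

Client file.conf: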

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

#thread factory for netty

thread-factory {

boss-thread-prefix = "NettyBoss"

worker-thread-prefix = "NettyServerNIOWorker"

server-executor-thread-prefix = "NettyServerBizHandler"

share-boss-worker = false

client-selector-thread-prefix = "NettyClientSelector"

client-selector-thread-size = 1

client-worker-thread-prefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

boss-thread-size = 1

#auto default pin or 8

worker-thread-size = 8

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

#vgroup->rgroup

vgroupMapping.eplus_tx_group = "default"

#only support single node

default.grouplist = "127.0.0.1:8091"

#degrade current not support

enableDegrade = false

#disable

disable = false

#unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent

max.commit.retry.timeout = "-1"

max.rollback.retry.timeout = "-1"

}

client {

async.commit.buffer.limit = 10000

lock {

retry.internal = 10

retry.times = 30

}

report.retry.count = 5

}

## transaction log store, only used in seata-server

store {

## store mode: file、db、redis

mode = "db"

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.

datasource = "druid"

## mysql/oracle/postgresql/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.cj.jdbc.Driver"

url = "jdbc:mysql://172.16.1.240:33067/eplus_seata?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8"

user = "root"

password = "xxxx"

minConn = 5

maxConn = 30

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

maxWait = 5000

}

}

Client registry.conf:

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "127.0.0.1:8848"

group = "SEATA_GROUP"

namespace = ""

cluster = "default"

username = "nacos"

password = "nacos"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "nacos"

nacos {

serverAddr = "127.0.0.1:8848"

namespace = ""

group = "SEATA_GROUP"

username = "nacos"

password = "nacos"

}

}

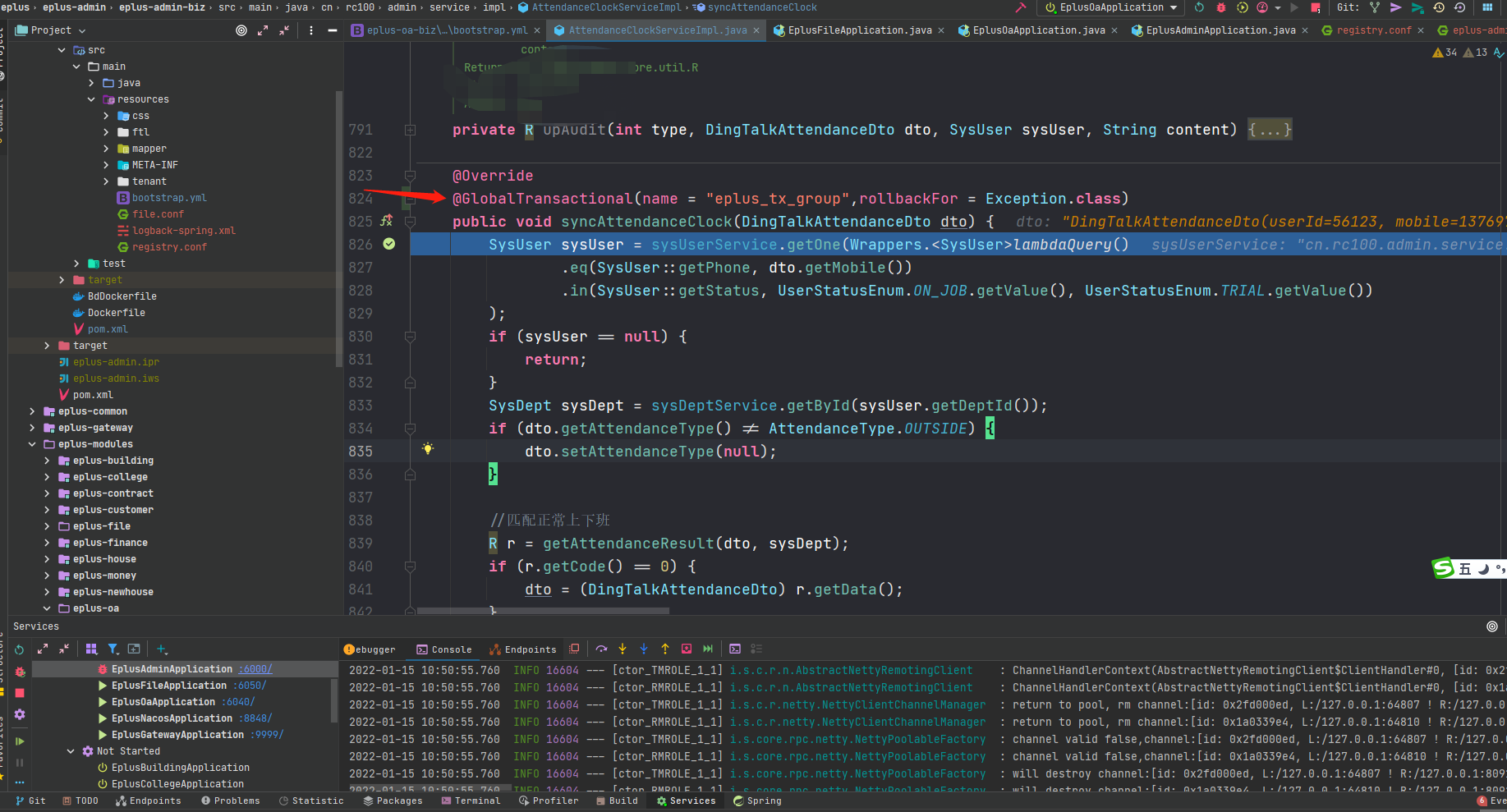

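Option 2: configure everything in the .yml file, as shown in the figure.

2. The custom transaction group name must match the one configured for seata-server:

tx-service-group: order_tx_group

3. Not thread-safe under concurrency: dirty reads and writes occur, and product stock can become negative

1. First, we adapt the official sample into a product-ordering method: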

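@GlobalTransactional
public void purchase(String userId, String commodityCode, int orderCount) {
    try {
        Product product = productService.getById(commodityCode);
        // check-then-act: another request can pass this check before we deduct
        if (product.getStock() - orderCount >= 0) {
            // build the Orders entity, deduct stock, etc. (omitted)
            ordersService.save(orders);
        }
    } catch (Exception e) {
        // rethrow (keeping the cause) so @GlobalTransactional triggers a global rollback
        throw new RuntimeException(e);
    }
}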

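This is not thread-safe. Two requests can read the same stock and both pass the check, which leads to dirty reads and writes, so under concurrency the product stock can become negative.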
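The race can be reproduced deterministically with plain threads. A `CyclicBarrier` makes both buyers pass the stock check before either one deducts, so one unit of stock is sold twice. The second method moves check and deduct under one `ReentrantLock`, which is the fix applied in the next step. `StockRaceDemo` is hypothetical. The `AtomicInteger` stands in for the product row, and the check uses `>= 0` so that the last unit can be sold. A JVM lock only protects a single service instance; with several instances you need a distributed lock.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo of the check-then-act race in purchase() and the lock-based fix.
class StockRaceDemo {

    /** Unsafe: both buyers pass the check before either one deducts. */
    static int unsafePurchase(int initialStock, int orderCount) {
        AtomicInteger stock = new AtomicInteger(initialStock);
        CyclicBarrier bothChecked = new CyclicBarrier(2);
        Runnable buyer = () -> {
            boolean enough = stock.get() - orderCount >= 0; // check
            try {
                bothChecked.await(); // force the bad interleaving
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            } catch (BrokenBarrierException e) {
                return;
            }
            if (enough) {
                stock.addAndGet(-orderCount); // act on a check that is now stale
            }
        };
        runTwoBuyers(buyer);
        return stock.get();
    }

    /** Locked: check and deduct happen under one lock, so only one buyer succeeds. */
    static int lockedPurchase(int initialStock, int orderCount) {
        AtomicInteger stock = new AtomicInteger(initialStock);
        ReentrantLock lock = new ReentrantLock();
        Runnable buyer = () -> {
            lock.lock();
            try {
                if (stock.get() - orderCount >= 0) {
                    stock.addAndGet(-orderCount);
                }
            } finally {
                lock.unlock();
            }
        };
        runTwoBuyers(buyer);
        return stock.get();
    }

    private static void runTwoBuyers(Runnable buyer) {
        Thread a = new Thread(buyer);
        Thread b = new Thread(buyer);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

With one unit in stock, `unsafePurchase(1, 1)` ends at -1 (oversold), while `lockedPurchase(1, 1)` ends at 0.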

2. Add a lock to make the code thread-safe:

@GlobalTransactional
public void purchase(String userId, String commodityCode, int orderCount) {
    // lock: e.g. a ReentrantLock field; note it is taken AFTER the global transaction has begun
    lock.lock();
    try {
        Product product = productService.getById(commodityCode);
        if (product.getStock() - orderCount >= 0) {
            // build the Orders entity, deduct stock, etc. (omitted)
            ordersService.save(orders);
        }
    } catch (Exception e) {
        throw new RuntimeException(e);
    } finally {
        lock.unlock();
    }
}

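At this point the code works. Load testing, however, showed frequent transaction timeout exceptions.

After these exceptions, rollback timeouts and similar errors appear as well.

Analysis

1. The official documentation mostly describes the annotation style and says little about the API. First, trace the code above:

service call -> annotation detected -> transaction created -> wait for lock -> lock acquired -> business logic

The transaction is created first. Under heavy concurrency, a request that keeps waiting for the lock is already using up its global transaction's timeout, which causes the timeout exceptions described above.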
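A little arithmetic shows why the order matters. Assume `n` requests arrive together, the lock serves them one at a time, and each holds it for `workMs`. If the global transaction starts before the lock, the k-th request's transaction also includes `k * workMs` of waiting. If it starts after the lock, it only covers `workMs`. `TxOrderingDemo` is hypothetical, the numbers below are made up for illustration, and the model ignores network and TC latency.

```java
// Back-of-the-envelope model of "transaction before lock" vs "lock before transaction".
class TxOrderingDemo {

    /** Counts how many of n serialized requests exceed timeoutMs for the given ordering. */
    static int timedOut(int n, long workMs, long timeoutMs, boolean txBeginsBeforeLock) {
        int count = 0;
        for (int k = 0; k < n; k++) {
            long waitMs = k * workMs; // the k-th request waits for k earlier lock holders
            // tx -> lock: the transaction clock already runs while waiting for the lock.
            // lock -> tx: the clock starts only once the lock is held.
            long txDurationMs = txBeginsBeforeLock ? waitMs + workMs : workMs;
            if (txDurationMs > timeoutMs) {
                count++;
            }
        }
        return count;
    }
}
```

With 10 requests, 100 ms of work each and a 500 ms timeout, `timedOut(10, 100, 500, true)` returns 5 and `timedOut(10, 100, 500, false)` returns 0.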

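Solution

1. Look at the flow again: service call -> annotation detected -> transaction created -> wait for lock -> lock acquired -> business logic

2. See the problem? If waiting for and acquiring the lock happen before the transaction is created, the problem goes away:

user request -> wait for lock -> lock acquired -> service call -> annotation detected -> transaction created -> business logic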

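@GetMapping(value = "purchase")
public Object purchase() {
    // take the lock BEFORE the @GlobalTransactional service method starts a global transaction
    lock.lock();
    try {
        demoService.purchase("1", "2", 3); // placeholder userId, commodityCode, orderCount
        return "success";                  // placeholder response
    } finally {
        lock.unlock();
    }
}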

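As in the code above, the lock is taken before the service is called. The lock inside the service can then be removed, because it has moved to the controller endpoint.

3. What if the lock is a distributed lock rather than a local one, or it has to stay inside the service?

No problem: Seata also provides an API for creating transactions programmatically.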

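import io.seata.core.exception.TransactionException;
import io.seata.tm.api.GlobalTransaction;
import io.seata.tm.api.GlobalTransactionContext;

public void xxx(String userId, String commodityCode, int orderCount) throws TransactionException {
    lock.lock(); // take the (local or distributed) lock first
    try {
        Product product = xxxService.getById(commodityCode);
        if (product.getStock() - orderCount >= 0) {
            // the global transaction is created only after the lock is held
            GlobalTransaction tx = GlobalTransactionContext.getCurrentOrCreate();
            tx.begin(300000, "test-group"); // timeout in ms, transaction name
            try {
                // business code
            } catch (Exception e) {
                tx.rollback(); // undo every branch registered so far
                throw new RuntimeException(e); // surface the failure instead of swallowing it
            }
            tx.commit(); // all branches succeeded: global commit
        }
    } finally {
        lock.unlock();
    }
}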

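With the code above, commit and rollback go through the API. This guarantees that the transaction is created only after the lock has been acquired.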

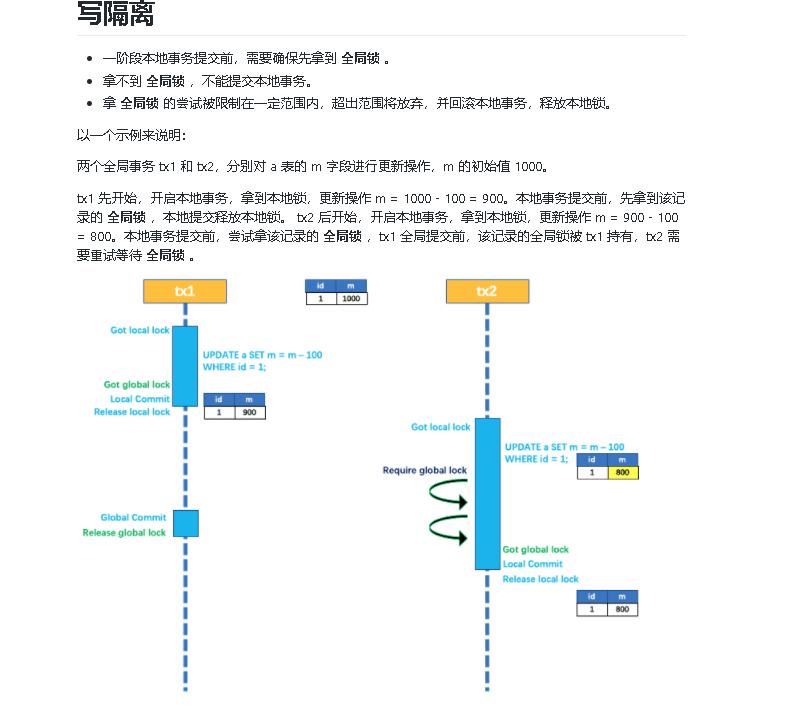

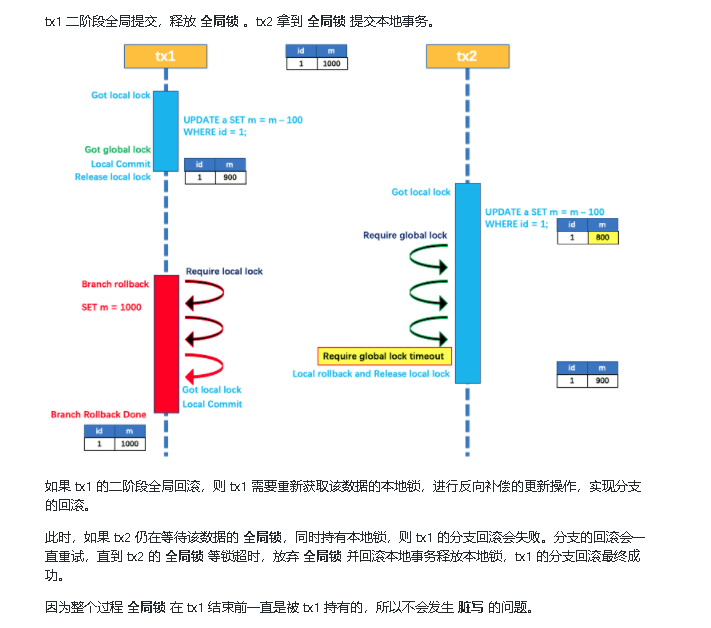

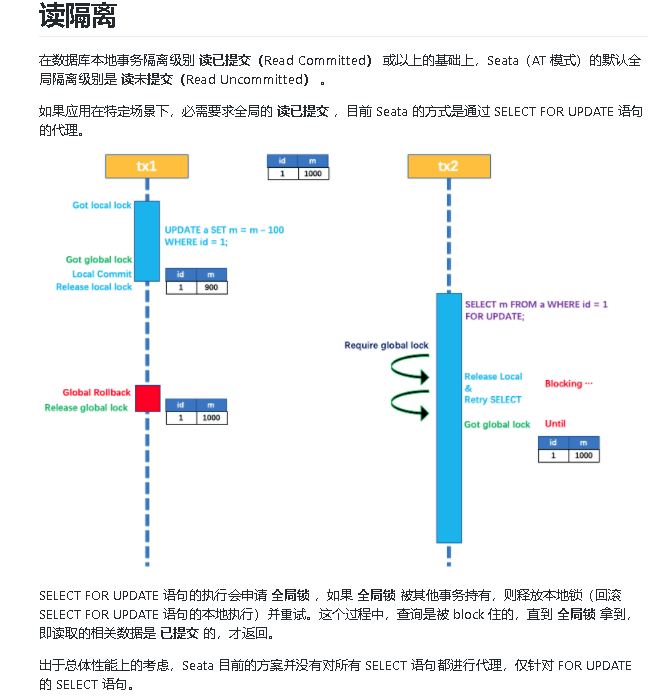

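Seata write isolation and read isolation

Single-node Seata deployment with Docker on Linux

- Start (resources directory mounted from the host):

docker run --name seata -d -p 8091:8091 -v /home/work/seata/resources:/seata-server/resources seataio/seata-server:1.3.0

- Copy the files out of the container (35116 is the container ID prefix):

docker cp 35116:/seata-server/resources /home/work/seata

- Check the mounts:

docker inspect seata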

Clustered Seata deployment with Docker on Linux

- Start two instances (resources directories mounted from the host):

docker run -d --restart always --name seata-server01 -p 8091:8091 -v /home/work/seata/resources:/seata-server/resources -e SEATA_IP=172.16.1.241 -e SEATA_PORT=8091 seataio/seata-server:1.3.0

docker run -d --restart always --name seata-server02 -p 8092:8091 -v /home/work/seata/resources2:/seata-server/resources -e SEATA_IP=172.16.1.241 -e SEATA_PORT=8092 seataio/seata-server:1.3.0

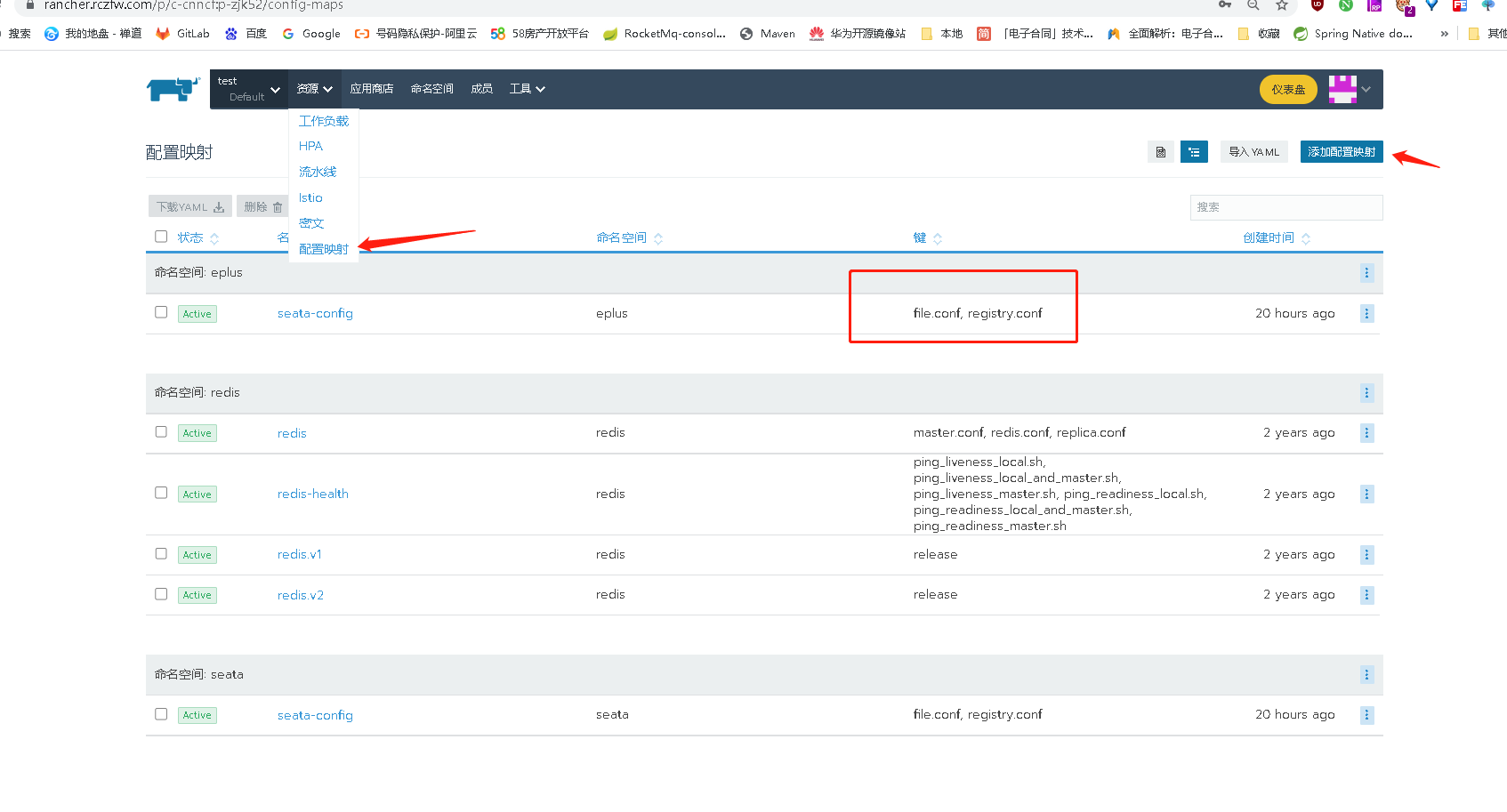

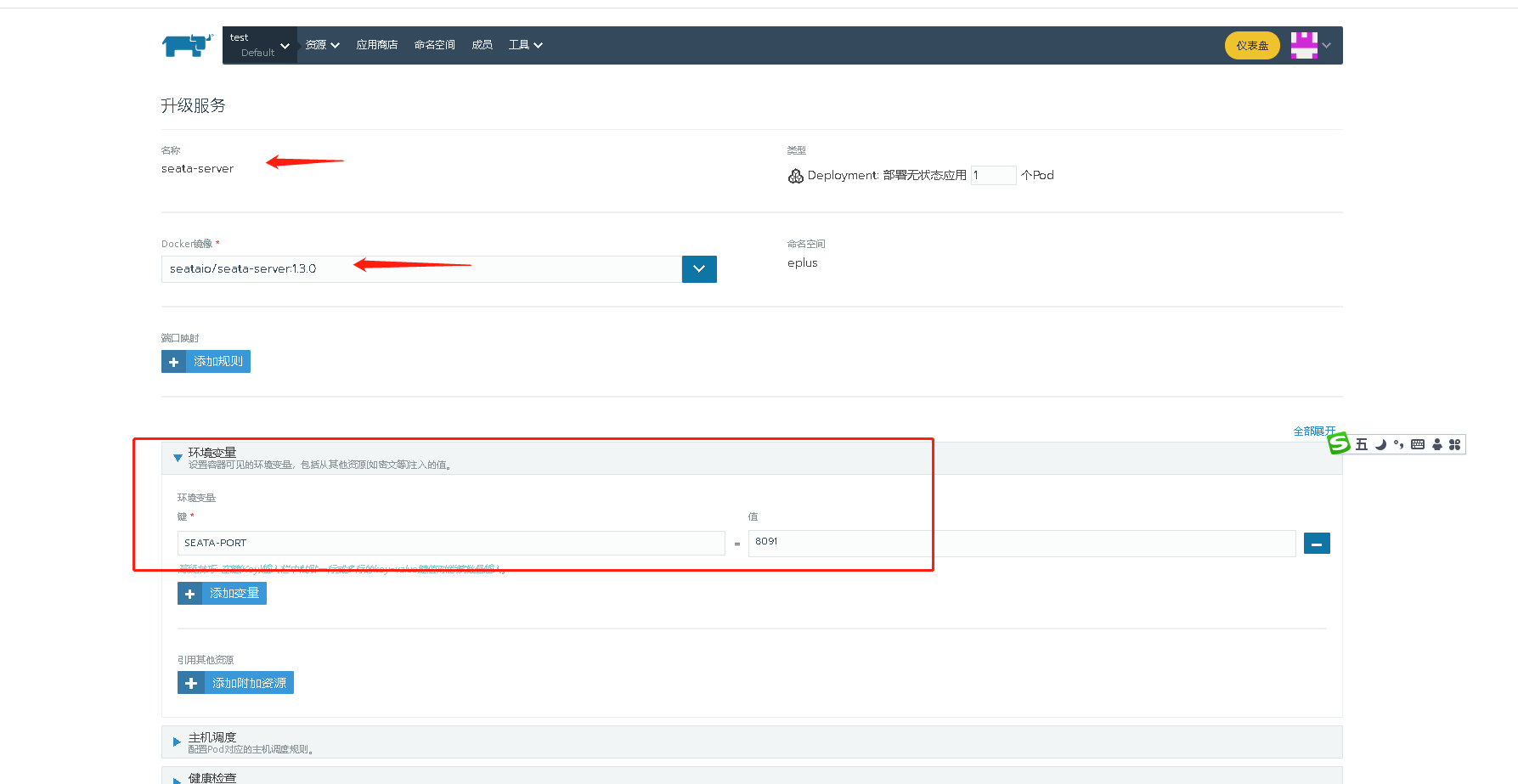

Seata: Rancher deployment

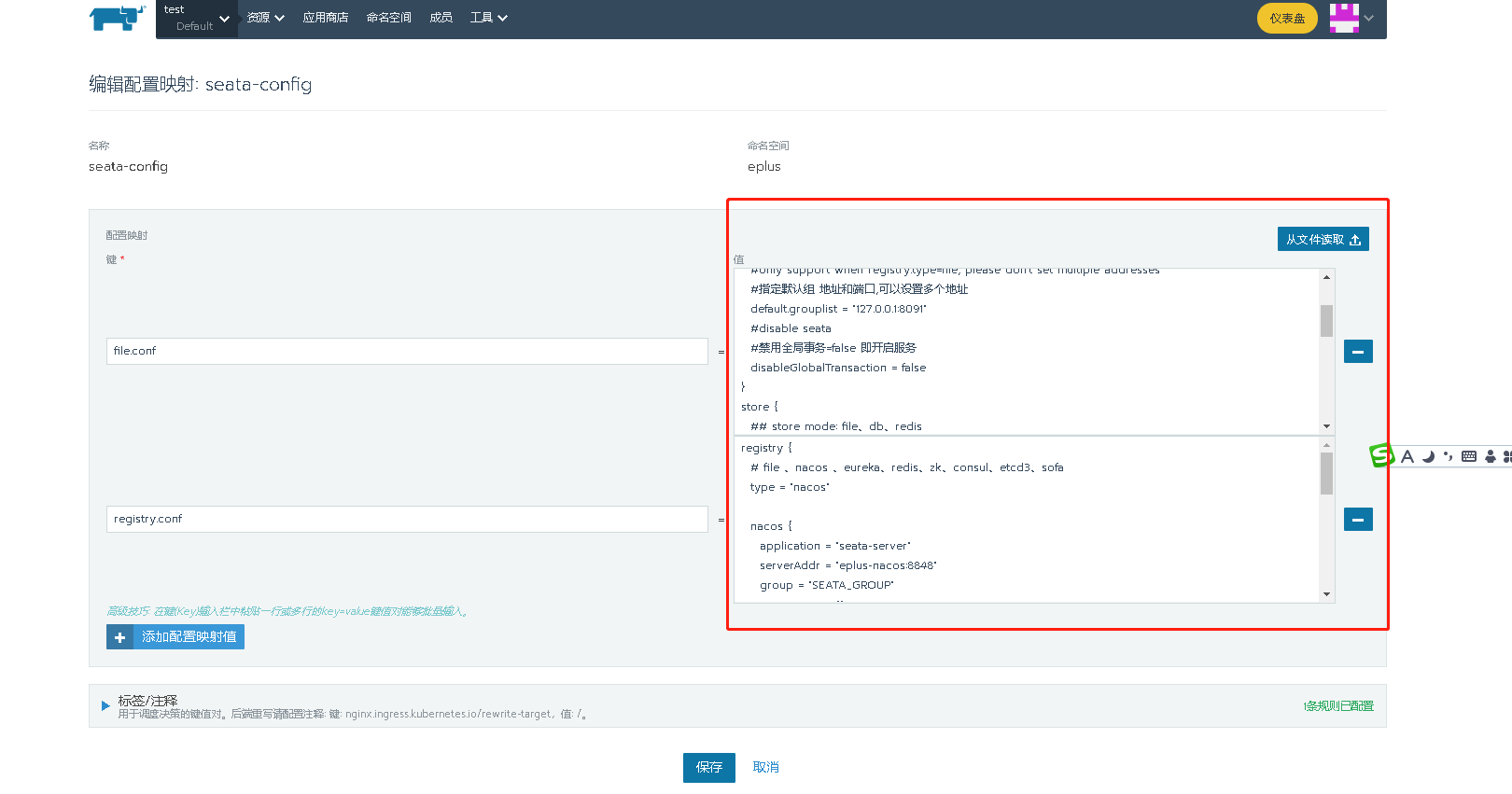

Create the configuration

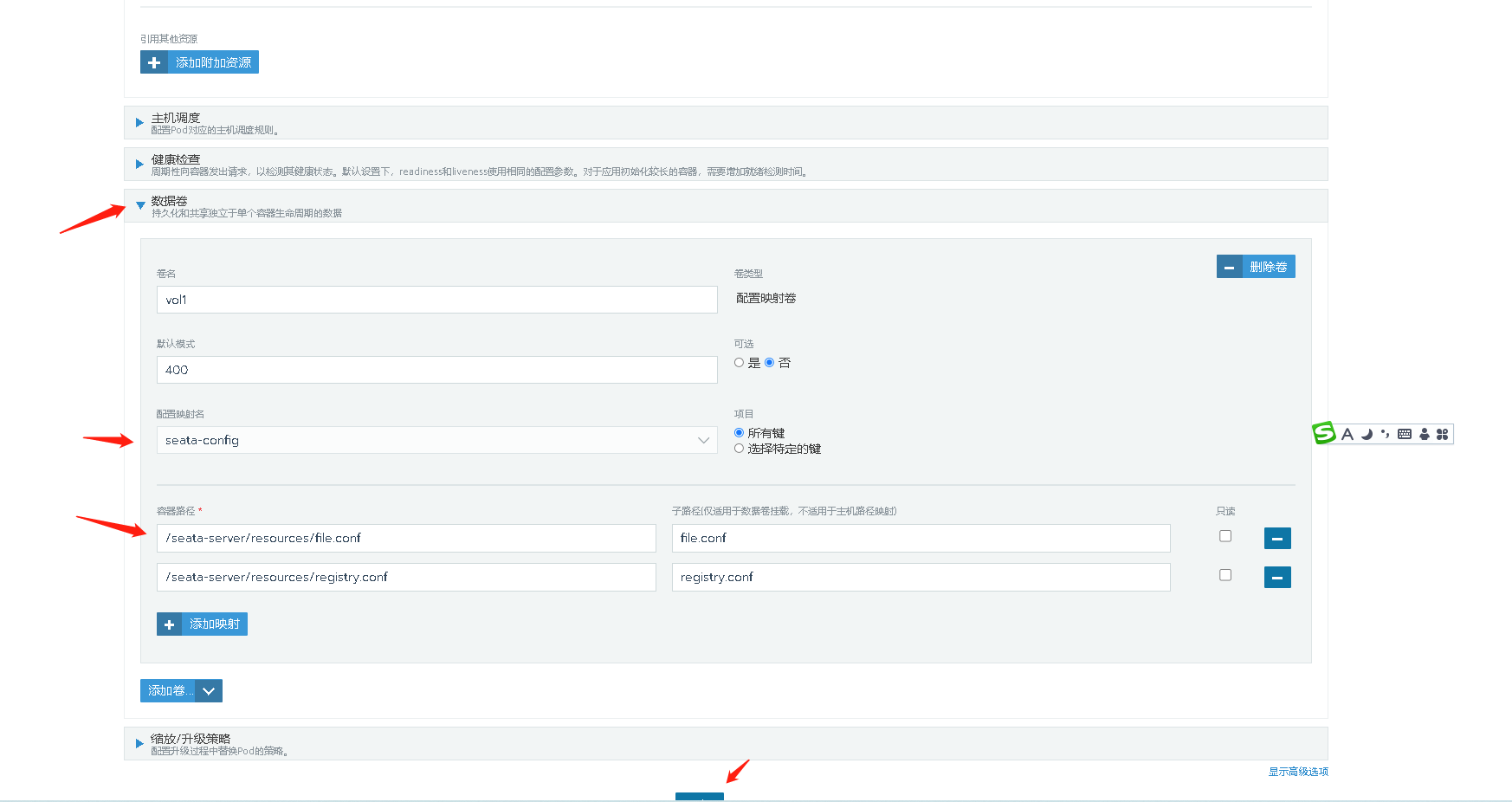

Create the seata-server service and mount the configuration

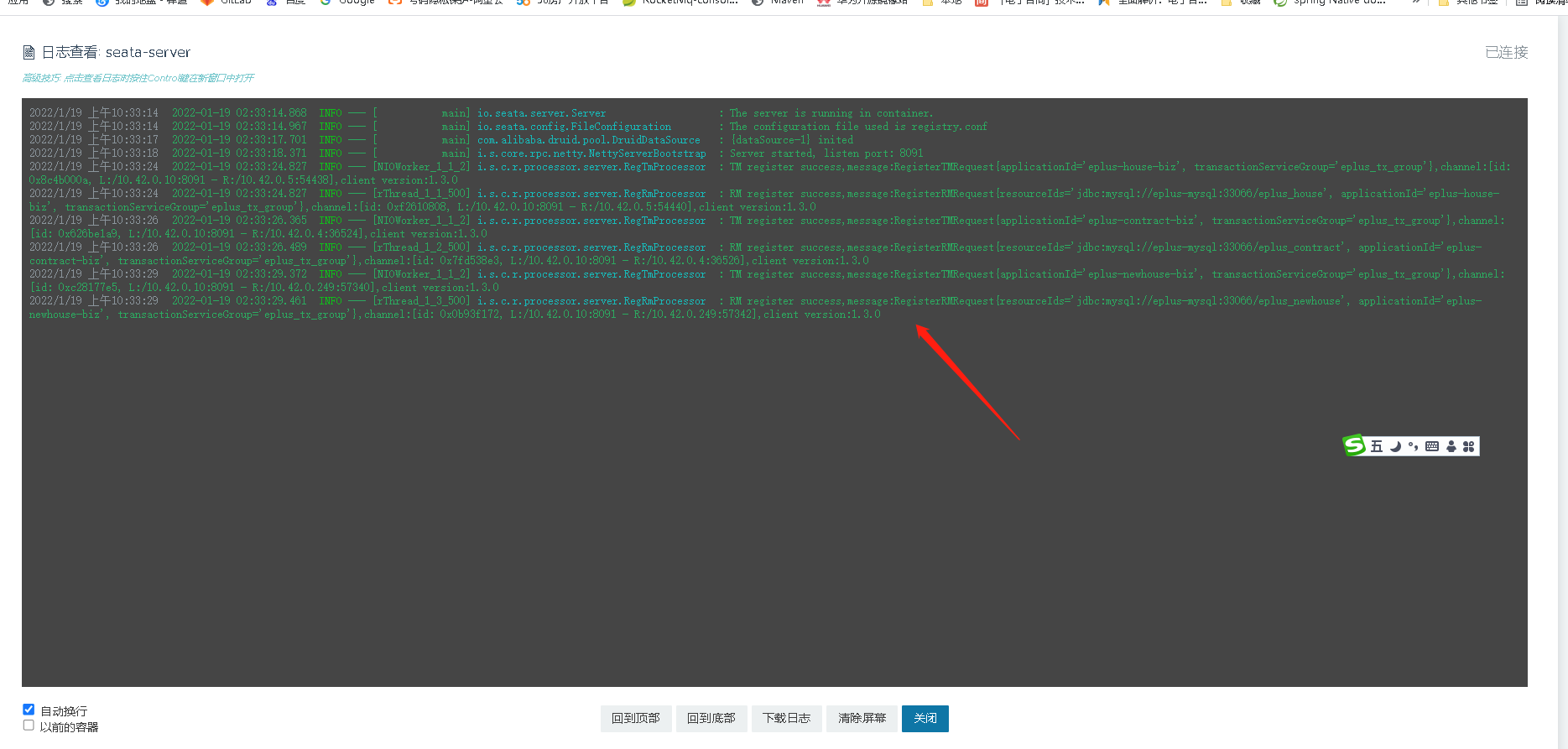

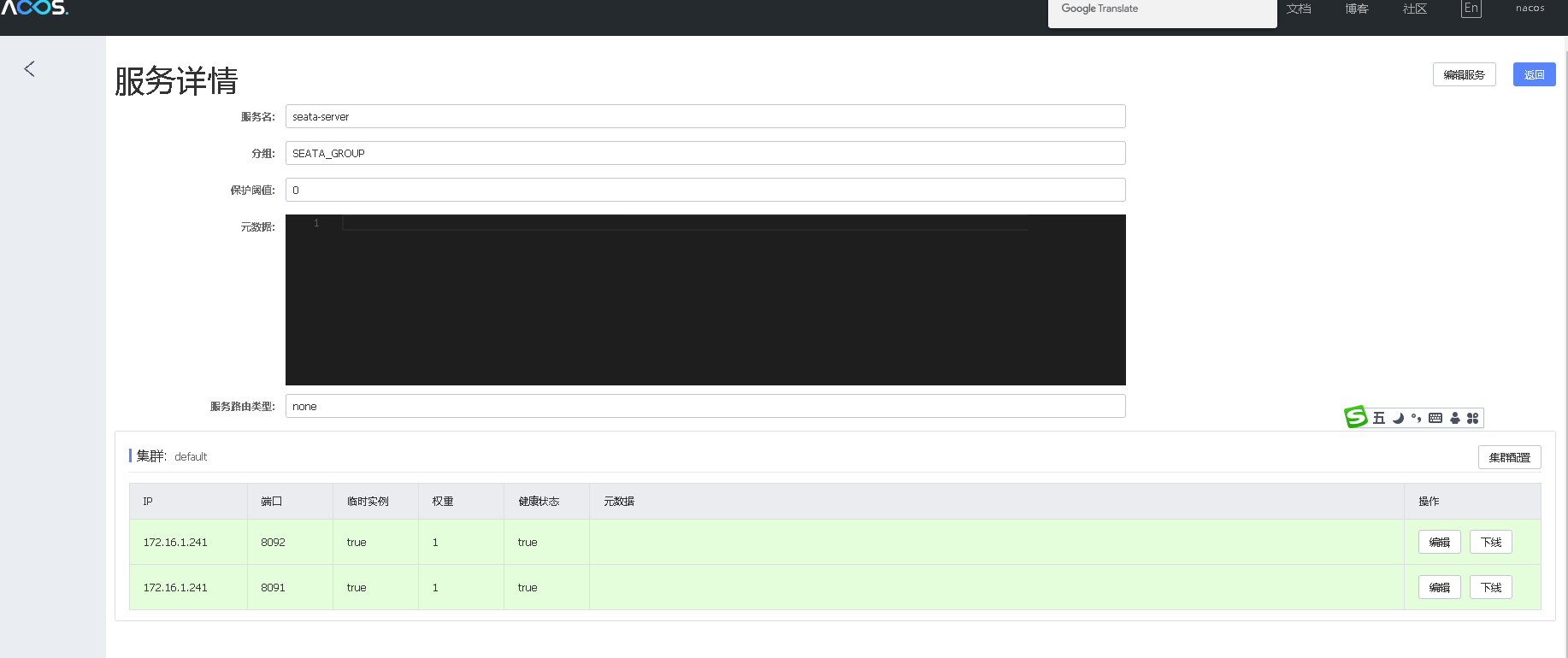

RM and TM registration of the services

Related links:

Quick integration of Seata with Spring Cloud

https://github.com/seata/seata-samples/blob/master/doc/quick-integration-with-spring-cloud.md

Seata download center

Version compatibility notes

Detailed example 1

https://www.it235.com/高级框架/SpringCloudAlibaba/seata.html#使用nacos作为seata的配置中心

Detailed hands-on example 2

Explanation of the run results: https://www.csdn.net/tags/MtTaEgysMTkzOTEwLWJsb2cO0O0O.html

Seata parameters and dirty reads/writes under concurrency

https://www.csdn.net/tags/NtTakg4sMTY5NzMtYmxvZwO0O0OO0O0O.html

Seata official website

Seata transaction groups explained

https://blog.csdn.net/weixin_39800144/article/details/100740420

Seata single-node deployment with Docker on Linux

Seata cluster deployment with Docker on Linux

https://www.csdn.net/tags/NtjaQg0sMjczOTItYmxvZwO0O0OO0O0O.html

This article is from cnblogs; author: 随风笔记. Please credit the original link when reposting: https://www.cnblogs.com/sfbj/p/15823677.html

浙公网安备 33010602011771号
