Day 5: Kubernetes Components and Binary Installation

1. Functions of the Kubernetes components

1.1 Introduction to cloud native

(1) Definition of cloud native

Cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and observable. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal toil.

The Cloud Native Computing Foundation seeks to drive adoption of this paradigm by fostering and sustaining an ecosystem of open source, vendor-neutral projects. We democratize state-of-the-art patterns to make these innovations accessible for everyone.

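Official link: https://github.com/cncf/toc/blob/main/DEFINITION.md

(2) Cloud native technology stack

Containers: container runtime technology, with Docker as the best-known example.

Service mesh: the Service Mesh layer that handles communication between services.

Microservices: in a microservice architecture, a project is made up of multiple small, loosely coupled services that can be deployed independently.

Immutable infrastructure: the base environment an application needs in order to run. "Immutable" means that once a server (or an artifact such as an image) has been deployed, it is never modified in place.

Declarative API: you describe the desired running state of the application and the system decides how to create that environment. For example, when you declare a pod, k8s creates it and keeps the declared replicas running.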
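To make the declarative idea concrete, here is a minimal sketch that writes a Deployment manifest stating only the desired state (three replicas). The name demo-nginx, the image nginx:1.21, and the helper write_manifest are illustrative assumptions, not part of the original notes.

```shell
#!/usr/bin/env bash
# Sketch: describe the desired state in a manifest instead of scripting each step.
# "demo-nginx" and "nginx:1.21" are illustrative assumptions.

write_manifest() {
  # $1: output file, $2: desired number of replicas
  cat > "$1" <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-nginx
spec:
  replicas: $2
  selector:
    matchLabels:
      app: demo-nginx
  template:
    metadata:
      labels:
        app: demo-nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.21
EOF
}

manifest="$(mktemp)"
write_manifest "$manifest" 3
grep 'replicas' "$manifest"

# On a machine with cluster access, the desired state would be submitted with:
#   kubectl apply -f "$manifest"
```

The manifest never says how to start or restart containers. After kubectl apply, the controllers keep the number of running replicas equal to the declared value.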

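(3) The twelve-factor app

- Codebase: one codebase, many deploys (a single repository under version control that can be deployed many times).

- Dependencies: explicitly declare and isolate dependencies.

- Config: store config in the environment.

- Backing services: treat backing services as attached resources.

- Build, release, run: build or package the program, and strictly separate the build and run stages.

- Processes: run the app as one or more stateless processes.

- Port binding: export services via port binding.

- Concurrency: scale out via the process model.

- Disposability: start fast, shut down gracefully, and stay as robust as possible.

- Dev/prod parity: keep development, staging, and production as similar as possible.

- Logs: collect, store, and display the output streams of all running processes and backing services in time order.

- Admin processes: one-off admin tasks (data backups and so on) should run in the same environment as the regular long-running processes.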

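(4) Cloud native landscape

Official link: https://landscape.cncf.io/

(5) Cloud native project maturity levels

SANDBOX: newly accepted projects, still in the evaluation and development stage;

INCUBATING: used by technologists and innovators, features still being completed;

GRADUATED: ready for use in live/production environments;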

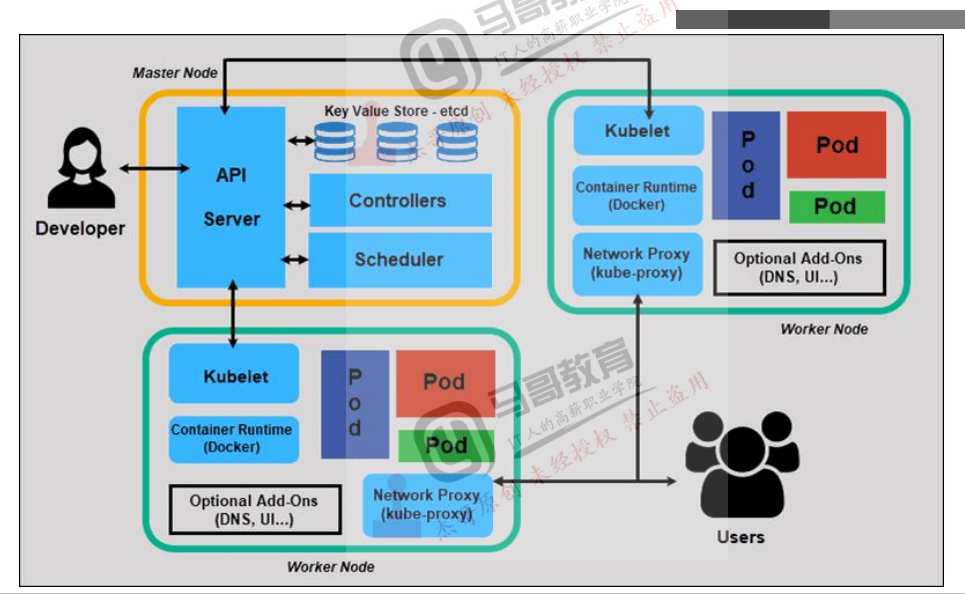

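1.2 Kubernetes components

Kubernetes grew out of Borg, Google's internal large-scale cluster management system, which schedules and manages many of Google's core services. Borg's goal is to free users from worrying about resource management so they can focus on their core business, while maximizing resource utilization across multiple data centers.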

1.2.1 kube-apiserver

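The Kubernetes API server exposes HTTP REST interfaces for creating, reading, updating, deleting, and watching every kind of k8s resource object, including pods, services, and replicationcontrollers. The API server serves REST operations and provides the front end to the cluster's shared state, through which all other components interact.

- RESTful API: a network interface in the REST style. REST describes a form of interaction between client and server over the network.

- REST: a software architecture style, or a set of conventions. It stresses that HTTP should be resource-centric, and it standardizes URI style and the use of the HTTP request methods (GET/PUT/POST/DELETE/HEAD/OPTIONS), each with its own semantics.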

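(1) Kubernetes API server overview

The secure port defaults to 6443 and can be changed with the "--secure-port" startup flag.

The default bind address is a non-localhost network interface; set it with the "--bind-address" startup flag.

This port receives HTTPS requests from clients, the dashboard, and other external sources.

Authentication is based on token files, client certificates, or HTTP Basic.

Authorization is policy-based.

(2) Access authentication

Authenticate identity (token) --> verify permissions --> validate the request --> execute the operation --> return the result.

(Identity can be proven with a certificate, a token, or a username and password.)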

(3) Testing the Kubernetes API

In the commands below, "..." stands for the same --cacert and -H options used in the first curl command.

# kubectl get secrets -A | grep admin

# kubectl describe secrets admin-user-token-z487q

# curl --cacert /etc/kubernetes/ssl/ca.pem -H "Authorization: Bearer <long token string>" https://172.0.0.1:6443

# curl ... https://172.0.0.1:6443/ (returns the list of all APIs)

# curl ... https://172.0.0.1:6443/apis (API groups)

# curl ... https://172.0.0.1:6443/api/v1 (API with a specific version)

# curl ... https://172.0.0.1:6443/version (API version information)

# curl ... https://172.0.0.1:6443/healthz/etcd (health check against etcd)

# curl ... https://172.0.0.1:6443/apis/autoscaling/v1 (details of one API)

# curl ... https://172.0.0.1:6443/metrics (metrics data)
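The repeated curl options above can be wrapped in a small helper. This is a sketch only: api_url and api_get are hypothetical helper names, and APISERVER, CA_FILE, and TOKEN are placeholders to be set for your own cluster.

```shell
#!/usr/bin/env bash
# Sketch: wrap the repeated curl options in one helper.
# APISERVER, CA_FILE and TOKEN are placeholders; set them for your cluster.
APISERVER="${APISERVER:-https://127.0.0.1:6443}"
CA_FILE="${CA_FILE:-/etc/kubernetes/ssl/ca.pem}"
TOKEN="${TOKEN:-}"

api_url() {
  # Join the base URL and a path with exactly one slash between them.
  printf '%s/%s\n' "${APISERVER%/}" "${1#/}"
}

api_get() {
  # Same options as the manual curl test: CA bundle plus bearer token.
  curl --silent --show-error --cacert "$CA_FILE" \
       -H "Authorization: Bearer ${TOKEN}" "$(api_url "$1")"
}

# Print the URLs that would be queried; call api_get instead to hit the server.
for path in / /apis /api/v1 /version /healthz/etcd /metrics; do
  api_url "$path"
done
```

With a valid token exported as TOKEN, `api_get /version` would perform the same request as the corresponding manual curl command.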

1.2.2 kube-scheduler

The Kubernetes scheduler is a control-plane process that assigns Pods to nodes.

1.2.3 kube-controller-manager

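kube-controller-manager: the Controller Manager bundles several sub-controllers (replication controller, node controller, namespace controller, service account controller, and others). As the cluster's internal management and control center, it manages Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount), and resource quotas (ResourceQuota). When a Node goes down unexpectedly, the Controller Manager detects it promptly and runs an automated repair flow, so that the pod replicas in the cluster stay in their expected working state.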

1.2.4 kube-proxy

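kube-proxy: the Kubernetes network proxy runs on each node. It reflects the Services defined in the Kubernetes API on that node and can do simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding, across a set of backends. Users must create a Service through the apiserver API to configure the proxy. In short, kube-proxy implements access to Kubernetes Services by maintaining network rules on the host and forwarding connections.

kube-proxy runs on every node, watches the API server for changes to Service objects, and manages iptables or IPVS rules to forward traffic accordingly.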

1.2.5 kubelet

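kubelet is the agent that runs on every worker node and watches the pods assigned to that node. Its functions are:

- Report the node's status to the master;

- Accept instructions and create the docker containers for each Pod;

- Prepare the data volumes the pod needs;

- Return the pod's running status;

- Run container health checks on the node.

(It is responsible for the Pod/container lifecycle, that is, creating and deleting pods.)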

1.2.6 kubectl

kubectl is the command-line client for managing a kubernetes cluster.

Common kubectl commands:

# kubectl get services --all-namespaces -o wide

# kubectl get pods --all-namespaces -o wide

# kubectl get nodes --all-namespaces -o wide

# kubectl get deployment --all-namespaces

# kubectl get deployment -n magedu -o wide (change the output format)

# kubectl describe pods magedu-tomcat-appy-deployment -n magedu (show the details of a resource)

# kubectl create -f tomcat-app1.yaml

# kubectl apply -f tomcat-app1.yaml

# kubectl delete -f tomcat-app1.yaml

# kubectl create -f tomcat-app1.yaml --save-config --record

# kubectl apply -f tomcat-app1.yaml --record (recommended)

# kubectl exec -it magedu-tomcat-app1-deployment-aaabbb-ddd -n magedu -- bash

# kubectl logs magedu-tomcat-app1-deployment-aaabbb-ddd -n magedu

# kubectl delete pods magedu-tomcat-app1-deployment-aaabbb-ddd -n magedu

1.2.7 etcd

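etcd, developed by CoreOS, is the key-value data store that Kubernetes uses by default to hold all cluster data. etcd supports distributed clustering. In production, etcd data needs a regular backup mechanism. (etcd data is usually only a few tens of MB, and it must be backed up. Taking the backup on a single etcd member is enough.)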
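A regular backup could be scripted along these lines. This is a sketch under stated assumptions: BACKUP_DIR, KEEP, and the helper names are made up for illustration, and only the etcdctl subcommand `snapshot save` is taken from etcdctl itself. The backup call is left commented out so the script has no side effects until you enable it on an etcd member.

```shell
#!/usr/bin/env bash
# Sketch of a periodic etcd backup job. BACKUP_DIR and KEEP are assumptions.
BACKUP_DIR="${BACKUP_DIR:-/data/etcd-backup}"
KEEP="${KEEP:-7}"   # number of snapshots to retain

snapshot_path() {
  # Timestamped file name so that snapshots sort chronologically.
  printf '%s/etcd-snapshot-%s.db\n' "$BACKUP_DIR" "$(date +%Y%m%d-%H%M%S)"
}

prune_old() {
  # Delete all but the newest $KEEP snapshots (names sort by time).
  ls -1 "$BACKUP_DIR"/etcd-snapshot-*.db 2>/dev/null | sort -r |
    tail -n +"$((KEEP + 1))" | while read -r f; do rm -f "$f"; done
}

backup_etcd() {
  # Take one snapshot through the local client endpoint, then prune.
  mkdir -p "$BACKUP_DIR"
  ETCDCTL_API=3 etcdctl snapshot save "$(snapshot_path)" && prune_old
}

# Uncomment on one etcd member (for example from cron):
# backup_etcd
```

Keeping a fixed number of timestamped snapshots bounds disk usage while still leaving several restore points.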

1.2.8 DNS

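DNS provides name resolution for the whole cluster, which is how services reach each other.

coredns is the mainstream choice today; kube-dns was still in transition around 1.18; sky-dns was used in early versions.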

1.2.9 Dashboard

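Dashboard is the web-based kubernetes user interface. You can use it to get an overview of the applications running in the cluster, to create or modify Kubernetes resources (such as Deployment, Job, and DaemonSet), and to scale a Deployment, start a rolling upgrade, restart a Pod, or create a new application with a wizard.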

1.2.10 Container Runtime(Docker)

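Container Runtime is a component on kubernetes worker nodes and runs on every node. Common runtimes include runc, lxc, Docker, containerd, and cri-o; each was built for different situations and implements different features. Because Docker is not part of the Kubernetes project itself, upstream k8s plans to move to containerd. At the time of writing, Docker is still the more common choice in production.

Reference: https://zhuanlan.zhihu.com/p/338036211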

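2. Pod scheduling flow in Kubernetes

2.1 kube-scheduler overview

(1) Using its scheduling algorithm, the scheduler picks the most suitable Node from the list of available Nodes for each Pod in the pending list, and the result is written to etcd.

(2) The kubelet on that node learns of the Pod binding produced by the kubernetes Scheduler by watching the API Server, fetches the corresponding Pod manifest, pulls the image, and starts the container.

2.2 Policies

LeastRequestedPriority: prefer the candidate node with the lowest resource consumption (CPU + memory).

CalculateNodeLabelPriority: prefer nodes that carry the specified Label.

BalancedResourceAllocation: prefer the candidate node whose usage is most balanced across resource types.

2.3 Creating a pod

Create Pod --> filter out nodes with insufficient resources --> score the remaining nodes --> select a node.

(1) First exclude the nodes that do not meet the requirements;

(2) then pick the best-matching node from those that remain.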
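The two phases (filter, then score) can be illustrated with a toy model. This is not the real kube-scheduler code: the node table, its numbers, and the pick_node helper are invented for illustration, and the score is only in the spirit of LeastRequestedPriority.

```shell
#!/usr/bin/env bash
# Toy model of "filter, then score"; not the real kube-scheduler implementation.
# Columns: node  cpu_capacity(m)  cpu_requested(m)  mem_capacity(Mi)  mem_requested(Mi)
NODES='node1 4000 3800 8192 2048
node2 4000 1000 8192 4096
node3 4000 2000 8192 1024'

pick_node() {
  # $1: pod CPU request in millicores, $2: pod memory request in Mi
  printf '%s\n' "$NODES" | awk -v cpu="$1" -v mem="$2" '
    # Filter: skip nodes whose free CPU or memory cannot hold the pod.
    ($2 - $3) < cpu + 0 || ($4 - $5) < mem + 0 { next }
    {
      # Score: average free fraction after placement (higher is better).
      score = ((($2 - $3 - cpu) / $2) + (($4 - $5 - mem) / $4)) / 2
      if (best == "" || score > max) { best = $1; max = score }
    }
    END { if (best != "") print best; else exit 1 }'
}

pick_node 500 512
```

For a pod requesting 500m CPU and 512Mi memory, node1 is filtered out (only 200m CPU free), and node3 scores higher than node2 because more of its memory remains free after placement.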

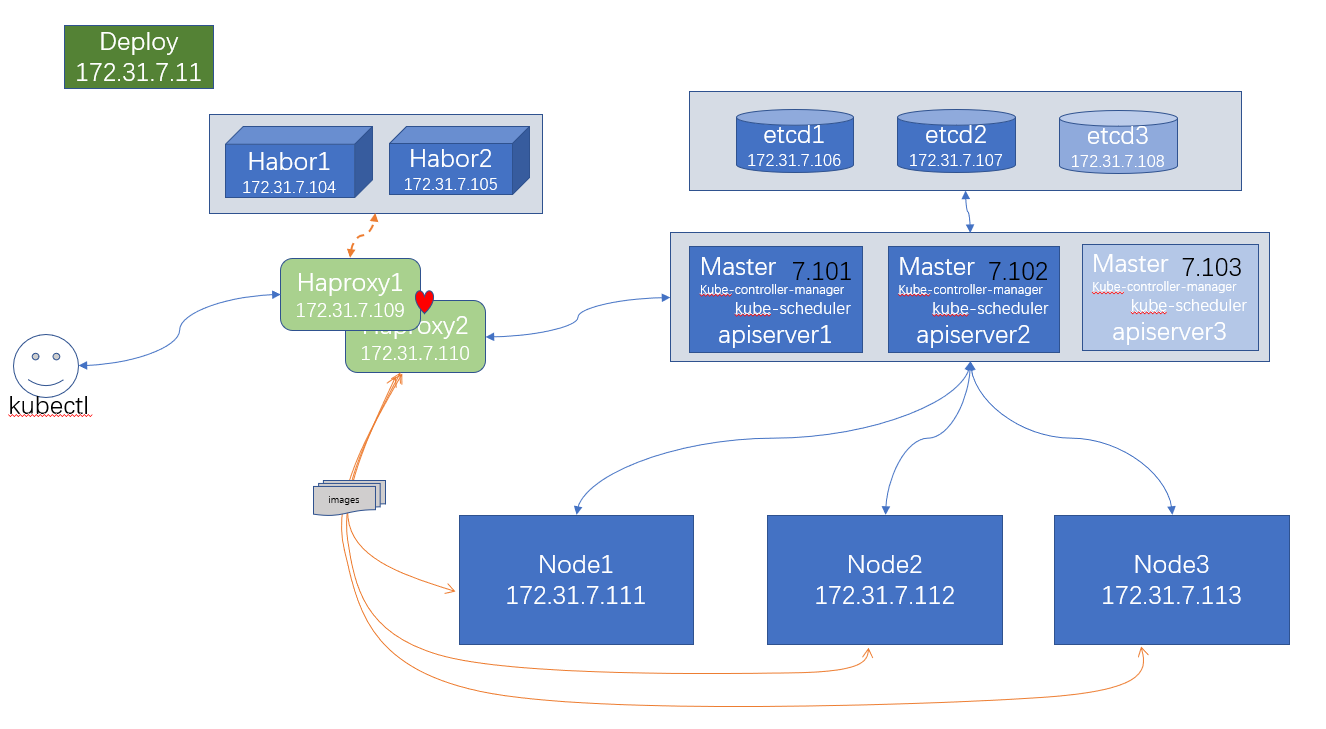

3. Deploying a Kubernetes cluster from binaries

Test node information:

This installation follows https://github.com/easzlab/kubeasz.

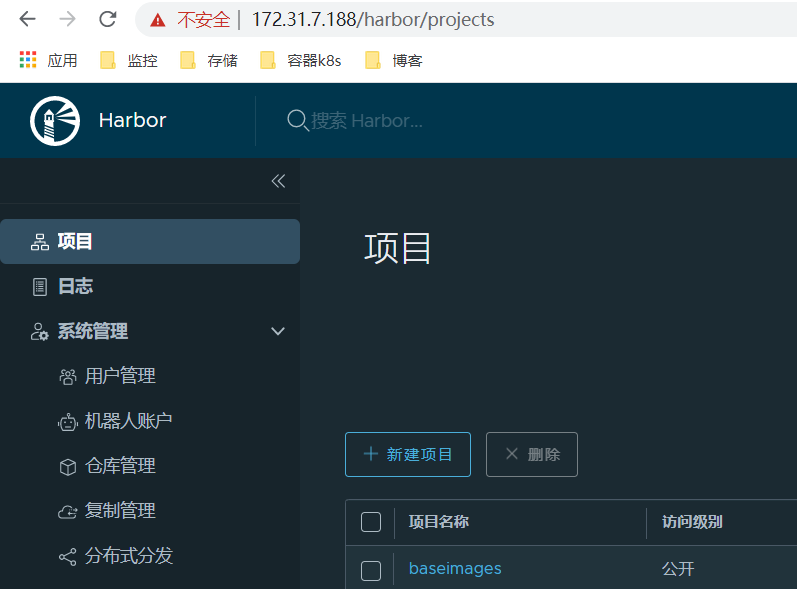

3.1 Install Harbor and configure a replication policy

Installing k8s pulls images from registries hosted abroad, and some of them may be unreachable. Deploying a local Harbor is recommended to reduce that traffic.

(1) Install docker and docker-compose.

root@harbor01:~# apt-get install apt-transport-https ca-certificates curl gnupg lsb-release (let apt use https)

root@harbor01:~# curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add - (add the docker GPG key)

root@harbor01:~# add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable" (add the docker stable repository)

root@harbor01:~# apt-get update

root@harbor01:~# apt install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal

root@harbor01:~# apt install python3-pip

root@harbor01:~# pip3 install docker-compose

(2) Download and install Harbor.

root@k8s-harbor1:~# mkdir -pv /apps/harbor/certs

root@k8s-harbor1:/apps/harbor/certs# ls (upload the domain certificate here; this setup has its own public domain and a free certificate from a cloud vendor; the commands for issuing a self-signed certificate are given at the end of this section)

harbor.s209.com.crt harbor.s209.com.key

root@harbor01:~# cd /usr/local/src/

root@harbor01:/usr/local/src# wget https://github.com/goharbor/harbor/releases/download/v2.3.2/harbor-offline-installer-v2.3.2.tgz

root@harbor01:/usr/local/src# tar zxvf harbor-offline-installer-v2.3.2.tgz

root@harbor01:/usr/local/src# ln -sv /usr/local/src/harbor /usr/local/

'/usr/local/harbor' -> '/usr/local/src/harbor'

root@harbor01:/usr/local/src# cd /usr/local/harbor

root@harbor01:/usr/local/harbor# cp harbor.yml.tmpl harbor.yml (set hostname, the https certificate paths, and the web login password)

root@k8s-harbor1:/usr/local/harbor# grep -v "#" harbor.yml|grep -v "^$"

hostname: harbor.s209.com
http:
  port: 80
https:
  port: 443
  certificate: /apps/harbor/certs/harbor.s209.com.crt
  private_key: /apps/harbor/certs/harbor.s209.com.key
harbor_admin_password: 123456
database:
  password: root123
  max_idle_conns: 100
  max_open_conns: 900
data_volume: /data

root@harbor01:/usr/local/harbor# ./install.sh --with-trivy (install Harbor)

root@k8s-harbor1:/usr/local/harbor# cat /usr/lib/systemd/system/harbor.service (start Harbor at boot)

[Unit]
Description=Harbor
After=docker.service systemd-networkd.service systemd-resolved.service
Requires=docker.service
Documentation=http://github.com/vmware/harbor

[Service]
Type=simple
Restart=on-failure
RestartSec=5
ExecStart=/usr/local/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up
ExecStop=/usr/local/bin/docker-compose -f /usr/local/harbor/docker-compose.yml down

[Install]
WantedBy=multi-user.target

root@k8s-harbor1:/usr/local/harbor# systemctl enable harbor.service

Client verification: log in to Harbor from a browser and create a project.

root@deploy:~# echo "172.31.7.104 harbor.s209.com" >> /etc/hosts

root@deploy:~# vi /etc/docker/daemon.json (add your own image registry)

root@deploy:~# systemctl restart docker.service

root@deploy:~# docker login harbor.s209.com

Issuing a self-signed certificate valid for about 20 years (7120 days). The later steps use this self-signed certificate, because the free certificate from the cloud vendor is valid for only 1 year.

(1) Issue the self-signed certificate.

root@k8s-harbor1:/apps/harbor/certs# openssl genrsa -out harbor-ca.key

root@k8s-harbor1:/apps/harbor/certs# openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.s209.com" -days 7120 -out harbor-ca.crt

(2) Use the self-signed certificate.

root@k8s-harbor1:/usr/local/harbor# grep -v "#" harbor.yml|grep -v "^$"

hostname: harbor.s209.com
http:
  port: 80
https:
  port: 443
  certificate: /apps/harbor/certs/harbor-ca.crt
  private_key: /apps/harbor/certs/harbor-ca.key
harbor_admin_password: 123456

(3) Restart Harbor so that it picks up the self-signed certificate.

root@k8s-harbor1:/usr/local/harbor# docker-compose stop

root@k8s-harbor1:/usr/local/harbor# ./prepare

root@k8s-harbor1:/usr/local/harbor# docker-compose start

(4) A browser on the client now shows the self-signed certificate. For the docker client on Linux to access https://harbor.s209.com, the self-signed crt file must be copied to the client.

root@deploy:~# mkdir /etc/docker/certs.d/harbor.s209.com -p

root@k8s-harbor1:/usr/local/harbor# scp /apps/harbor/certs/harbor-ca.crt 172.31.7.10:/etc/docker/certs.d/harbor.s209.com

Add the registry harbor.s209.com to /etc/docker/daemon.json and restart docker.

root@deploy:~# echo "172.31.7.104 harbor.s209.com" >> /etc/hosts

root@deploy:~# docker login harbor.s209.com

3.2 Install haproxy and configure keepalived

haproxy provides load balancing: it forwards requests to the apiserver and to Harbor.

keepalived provides automatic VIP failover.

3.2.1 Install HA node 1

(1) Install keepalived and haproxy.

root@k8s-ha1:~# apt install keepalived haproxy

root@k8s-ha1:~# ls /etc/keepalived/

root@k8s-ha1:~#

(2) Edit the keepalived configuration file and set virtual_router_id, priority 100, auth_pass, and virtual_ipaddress as needed. Several VIPs are defined here for later use, for example separate VIPs for Harbor and the apiserver.

root@k8s-ha1:~# find /usr/ -name '*.vrrp'

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp

root@k8s-ha1:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@k8s-ha1:~#

root@k8s-ha1:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.31.7.188 dev eth0 label eth0:0

172.31.7.189 dev eth0 label eth0:1

172.31.7.190 dev eth0 label eth0:2

172.31.7.191 dev eth0 label eth0:3

}

}

root@k8s-ha1:~#

(3) Start keepalived and check the IP addresses on the network interface.

root@k8s-ha1:~# systemctl start keepalived

root@k8s-ha1:~# systemctl enable keepalived

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived

root@k8s-ha1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:09:ce:e8 brd ff:ff:ff:ff:ff:ff

inet 172.31.7.109/21 brd 172.31.7.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.31.7.188/32 scope global eth0:0

valid_lft forever preferred_lft forever

inet 172.31.7.189/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet 172.31.7.190/32 scope global eth0:2

valid_lft forever preferred_lft forever

inet 172.31.7.191/32 scope global eth0:3

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe09:cee8/64 scope link

valid_lft forever preferred_lft forever

root@k8s-ha1:~#

(4) Edit the haproxy configuration file and add load-balancing listeners for Harbor and the k8s apiserver.

root@k8s-ha1:~# cat /etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen harbor-443

bind 172.31.7.188:443

mode tcp

balance source

server harbor1 172.31.7.104:443 check inter 3s fall 3 rise 5

server harbor2 172.31.7.105:443 check inter 3s fall 3 rise 5

listen k8s-6443

bind 172.31.7.189:6443

mode tcp

server k8s1 172.31.7.101:6443 check inter 3s fall 3 rise 5

server k8s2 172.31.7.102:6443 check inter 3s fall 3 rise 5

server k8s3 172.31.7.103:6443 check inter 3s fall 3 rise 5

root@k8s-ha1:~#

(5) Start haproxy, check the listening ports, and enable it at boot.

root@k8s-ha1:~# systemctl start haproxy

root@k8s-ha1:~# systemctl enable haproxy

Synchronizing state of haproxy.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable haproxy

root@k8s-ha1:~# ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 490 172.31.7.189:6443 0.0.0.0:*

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 490 172.31.7.188:443 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

root@k8s-ha1:~#

(6) From a client, verify access to the Harbor VIP at https://172.31.7.188/ and to the apiserver VIP.

(7) Set a kernel parameter in sysctl.conf that allows binding to an IP not present on the local host (that is, the VIP).

root@k8s-ha1:~# sysctl net.ipv4.ip_nonlocal_bind (check the current value)

net.ipv4.ip_nonlocal_bind = 0

root@k8s-ha1:~# echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

root@k8s-ha1:~# sysctl -p

net.ipv4.ip_nonlocal_bind = 1
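Appending with echo adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent alternative follows; ensure_sysctl is a hypothetical helper written for this example, and the demo runs against a temporary file rather than /etc/sysctl.conf.

```shell
#!/usr/bin/env bash
# Sketch: set a sysctl key in a config file without appending duplicates.
# ensure_sysctl is a hypothetical helper, not part of any package.
ensure_sysctl() (
  # $1: config file, $2: key, $3: value (runs in a subshell, so variables stay local)
  file="$1"; key="$2"; value="$3"
  touch "$file"
  tmp="$(mktemp)"
  # Drop any existing line for this key, then append the wanted setting.
  awk -F'=' -v k="$key" '{ name = $1; gsub(/[[:space:]]/, "", name) } name != k' "$file" > "$tmp"
  echo "${key} = ${value}" >> "$tmp"
  cat "$tmp" > "$file" && rm -f "$tmp"
)

demo="$(mktemp)"
ensure_sysctl "$demo" net.ipv4.ip_nonlocal_bind 1
ensure_sysctl "$demo" net.ipv4.ip_nonlocal_bind 1   # second run leaves a single line
cat "$demo"
```

On a real node the call would be `ensure_sysctl /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1` followed by `sysctl -p`.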

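3.2.2 Install HA node 2

(1) Set the kernel parameter that allows binding to a non-local IP, that is, the VIP provided by keepalived.

root@k8s-ha2:~# echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

root@k8s-ha2:~# sysctl -p

(2) Install haproxy and keepalived.

root@k8s-ha2:~# apt install keepalived haproxy

(3) Copy the configuration files from ha1 to ha2, then edit keepalived.conf on ha2 (change state and set priority to 80).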

root@k8s-ha1:~# scp /etc/haproxy/haproxy.cfg 172.31.7.110:/etc/haproxy/

root@k8s-ha1:~# scp /etc/keepalived/keepalived.conf 172.31.7.110:/etc/keepalived/

root@k8s-ha2:~# cat /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

(4) Start haproxy and keepalived, and enable both at boot.

root@k8s-ha2:~# systemctl start keepalived.service

root@k8s-ha2:~# systemctl enable keepalived.service

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived

root@k8s-ha2:~#

root@k8s-ha2:~# systemctl start haproxy

root@k8s-ha2:~# systemctl enable haproxy

Synchronizing state of haproxy.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable haproxy

root@k8s-ha2:~#

(5) Check the haproxy listening ports and confirm that it is listening on 172.31.7.188:443 and 172.31.7.189:6443.

root@k8s-ha2:~# ip a | grep 172

inet 172.31.7.110/21 brd 172.31.7.255 scope global eth0

root@k8s-ha2:~# ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 490 172.31.7.189:6443 0.0.0.0:*

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 490 172.31.7.188:443 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

Full keepalived.conf on ha2, for reference:

root@k8s-ha2:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.31.7.188 dev eth0 label eth0:0

172.31.7.189 dev eth0 label eth0:1

172.31.7.190 dev eth0 label eth0:2

172.31.7.191 dev eth0 label eth0:3

}

}

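3.2.3 Verify keepalived VIP failover

(1) Run poweroff on ha1. The VIPs move to the network interface on ha2, and Harbor at https://172.31.7.188/harbor/users remains reachable.

(2) Power ha1 back on. The VIPs move back to ha1's interface automatically, and Harbor at https://172.31.7.188/harbor/users remains reachable.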
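During the failover test it helps to check on each HA node whether it currently holds a VIP. The following is a sketch: holds_vip is a hypothetical helper that reads the one-line-per-address output of `ip -o -4 addr show` on stdin, and the sample lines are modelled on the ha1 output shown earlier.

```shell
#!/usr/bin/env bash
# Sketch: report whether this node currently holds a given VIP.
# holds_vip is a hypothetical helper; it reads `ip -o -4 addr show` output on stdin.
holds_vip() {
  # $1: VIP address, compared against the address part of field 4 ("addr/prefix").
  awk -v vip="$1" '{ split($4, a, "/"); if (a[1] == vip) found = 1 }
                   END { exit !found }'
}

# Sample lines in the format printed by `ip -o -4 addr show`.
sample='2: eth0    inet 172.31.7.109/21 brd 172.31.7.255 scope global eth0
2: eth0    inet 172.31.7.188/32 scope global eth0:0'

if printf '%s\n' "$sample" | holds_vip 172.31.7.188; then
  echo "VIP 172.31.7.188 is on this node"
fi
```

On a live node the check would be `ip -o -4 addr show | holds_vip 172.31.7.188`, run on ha1 and ha2 before and after the poweroff.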

3.2.4 Point the kubectl client at the HA VIP

root@deploy:~# vi /root/.kube/config

server: https://172.31.7.189:6443

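3.3 Install ansible on the deploy node and set up passwordless SSH

(1) Install ansible.

root@deploy:~# apt install python3-pip -y

root@deploy:~# pip3 install ansible

(2) From the ansible host, set up passwordless login to all other nodes, including the deploy node itself.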

root@deploy:~# ssh-keygen

root@deploy:~# apt install sshpass

root@deploy:~# cat scp-key.sh

#!/bin/bash

IP="

172.31.7.10

172.31.7.101

172.31.7.102

172.31.7.103

172.31.7.104

172.31.7.105

172.31.7.106

172.31.7.107

172.31.7.108

172.31.7.109

172.31.7.110

172.31.7.111

172.31.7.112

172.31.7.113

"

for node in ${IP};do

sshpass -p www.s209.com ssh-copy-id -o StrictHostKeyChecking=no ${node}

if [ $? -eq 0 ];then

echo "${node}: key copied"

else

echo "${node}: key copy failed"

fi

done

root@deploy:~# bash scp-key.sh

3.4 Orchestrate the k8s installation from the deploy node

Add-ons covered: network plugin, coredns, dashboard.

root@deploy:/etc/kubeasz# ./ezctl help setup

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

root@deploy:/etc/kubeasz# ls playbooks/

01.prepare.yml 04.kube-master.yml 07.cluster-addon.yml 21.addetcd.yml 31.deletcd.yml 90.setup.yml 93.upgrade.yml 99.clean.yml

02.etcd.yml 05.kube-node.yml 10.ex-lb.yml 22.addnode.yml 32.delnode.yml 91.start.yml 94.backup.yml

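3.4.0 Download the kubeasz scripts and configure them

Download the kubeasz files and pin the docker version.

Use "ezctl new" to create the k8s cluster definition; this generates the hosts and config files, which you then adjust as needed.

(1) Download the easzlab install script and the installation files.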

root@deploy:~# export release=3.1.0

root@deploy:~# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@deploy:~# cp ezdown bak.ezdown

root@deploy:~# vi ezdown

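DOCKER_VER=19.03.15 # docker version to install

K8S_BIN_VER=v1.21.0 # k8s version; the script pulls the matching easzlab/kubeasz-k8s-bin:v1.21.0 image from hub.docker.com, where you can look up the available versions

BASE="/etc/kubeasz" # where the configuration files and images are downloaded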

option: -{DdekSz}

-C stop&clean all local containers

-D download all into "$BASE"

-P download system packages for offline installing

-R download Registry(harbor) offline installer

-S start kubeasz in a container

-d <ver> set docker-ce version, default "$DOCKER_VER"

-e <ver> set kubeasz-ext-bin version, default "$EXT_BIN_VER"

-k <ver> set kubeasz-k8s-bin version, default "$K8S_BIN_VER"

-m <str> set docker registry mirrors, default "CN"(used in Mainland,China)

-p <ver> set kubeasz-sys-pkg version, default "$SYS_PKG_VER"

-z <ver> set kubeasz version, default "$KUBEASZ_VER"

root@deploy:~# chmod +x ezdown

root@deploy:~# bash ./ezdown -D (downloads all files, by default into /etc/kubeasz)

root@deploy:~# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ls

README.md ansible.cfg bin docs down example ezctl ezdown manifests pics playbooks roles tools

root@deploy:/etc/kubeasz# ./ezctl new k8s-s209-01

2021-09-12 16:36:36 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-s209-01

2021-09-12 16:36:36 DEBUG set version of common plugins

2021-09-12 16:36:36 DEBUG cluster k8s-s209-01: files successfully created.

2021-09-12 16:36:36 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-s209-01/hosts'

2021-09-12 16:36:36 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-s209-01/config.yml'

root@deploy:/etc/kubeasz#

root@deploy:/etc/kubeasz# tree clusters/

clusters/

└── k8s-s209-01

├── config.yml

└── hosts

(2) Edit the hosts configuration file

root@deploy:/etc/kubeasz# vi clusters/k8s-s209-01/hosts (set the IPs of your own cluster nodes)

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

172.31.7.106

172.31.7.107

172.31.7.108

# master node(s)

[kube_master]

172.31.7.101

172.31.7.102

# work node(s)

[kube_node]

172.31.7.111

172.31.7.112

# [optional] loadbalance for accessing k8s from outside (load balancers)

[ex_lb]

172.31.7.109 LB_ROLE=backup EX_APISERVER_VIP=172.31.7.188 EX_APISERVER_PORT=8443

172.31.7.110 LB_ROLE=master EX_APISERVER_VIP=172.31.7.188 EX_APISERVER_PORT=8443

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-65000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="s209.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

:%s/192.168.1/172.31.7/g (vi command that replaces the template's 192.168.1 prefix with 172.31.7 throughout the file)
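The hosts file requires that SERVICE_CIDR and CLUSTER_CIDR do not overlap with the node network. A quick check could look like the sketch below; the helper names are made up for this example, and the node network is taken from the 172.31.7.109/21 address in the earlier "ip a" output.

```shell
#!/usr/bin/env bash
# Sketch: check that two IPv4 CIDR blocks do not overlap.
# Helper names are hypothetical; octets are assumed to have no leading zeros.
ip_to_int() (
  # Convert dotted-quad $1 to an integer (subshell keeps the IFS change local).
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

cidr_range() (
  # Print "first last" addresses of CIDR block $1 as integers.
  ip="${1%/*}"; bits="${1#*/}"
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  base=$(( $(ip_to_int "$ip") & $mask ))
  echo "$base $(( $base | (~$mask & 0xFFFFFFFF) ))"
)

cidrs_overlap() (
  # Two ranges overlap when each one starts before the other ends.
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

for cidr in 10.100.0.0/16 10.200.0.0/16; do
  if cidrs_overlap "$cidr" 172.31.7.109/21; then
    echo "$cidr overlaps the node network"
  else
    echo "$cidr is clear of the node network"
  fi
done
```

Both CIDRs from the hosts file are reported as clear, since 172.31.7.109/21 covers only 172.31.0.0 through 172.31.7.255.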

(3) Pull the image locally and push it to the self-hosted Harbor registry. The configuration file in step (4) refers to this image.

root@deploy:~# docker pull easzlab/pause-amd64:3.4.1

root@deploy:~# docker tag easzlab/pause-amd64:3.4.1 harbor.s209.com/baseimages/pause-amd64:3.4.1

root@deploy:~# docker push harbor.s209.com/baseimages/pause-amd64:3.4.1

(4) Edit config.yml in the cluster directory

root@deploy:/etc/kubeasz# vi clusters/k8s-s209-01/config.yml

# [containerd] base container image

SANDBOX_IMAGE: "harbor.s209.com/baseimages/pause-amd64:3.4.1"

# default: the CA expires in 100 years (876000h); certs issued by the CA expire in 50 years (438000h)

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# maximum number of pods per node

MAX_PODS: 300

# [docker] trusted HTTP registries

INSECURE_REG: '["127.0.0.1/8","172.31.7.104"]'

# ------------------------------------------- calico

# [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the restrictions

CALICO_IPV4POOL_IPIP: "Always"

# coredns automatic install

dns_install: "no"

corednsVer: "1.8.0"

ENABLE_LOCAL_DNS_CACHE: false

# metric server automatic install

metricsserver_install: "no"

# dashboard automatic install

dashboard_install: "no"

# ingress automatic install

ingress_install: "no"

# prometheus automatic install

prom_install: "no"

3.4.1 Run ./ezctl setup k8s-s209-01 01 (prepare)

root@deploy:/etc/kubeasz# vi playbooks/01.prepare.yml (remove the two entries below so that only the kube_master, kube_node, and etcd nodes are initialized)

- ex_lb

- chrony

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 01

ok: [172.31.7.111] k8s-node1

ok: [172.31.7.112] k8s-node2

ok: [172.31.7.106] k8s-etcd1

ok: [172.31.7.102] k8s-master2

ok: [172.31.7.101] k8s-master1

ok: [172.31.7.108] k8s-etcd3

ok: [172.31.7.107] k8s-etcd2

(k8s-master3 and k8s-node3 are kept for the later cluster scale-out exercise)

The ansible role that is invoked:

root@deploy:/etc/kubeasz# tree roles/prepare/

roles/prepare/

├── files

│ └── sctp.conf

├── tasks

│ ├── centos.yml

│ ├── common.yml

│ ├── main.yml

│ ├── offline.yml

│ └── ubuntu.yml

└── templates

├── 10-k8s-modules.conf.j2

├── 30-k8s-ulimits.conf.j2

├── 95-k8s-journald.conf.j2

└── 95-k8s-sysctl.conf.j2

3 directories, 10 files

root@deploy:/etc/kubeasz# ls roles/

calico cilium cluster-addon containerd docker ex-lb harbor kube-master kube-ovn os-harden

chrony clean cluster-restore deploy etcd flannel kube-lb kube-node kube-router prepare

root@deploy:/etc/kubeasz# ls roles/deploy/tasks/

add-custom-kubectl-kubeconfig.yml create-kube-proxy-kubeconfig.yml create-kubectl-kubeconfig.yml

create-kube-controller-manager-kubeconfig.yml create-kube-scheduler-kubeconfig.yml main.yml

3.4.2 Run ./ezctl setup k8s-s209-01 02 (install etcd)

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 02

...omitted...

PLAY RECAP **********************************************************************************************************************************************

172.31.7.106 : ok=10 changed=8 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

172.31.7.107 : ok=10 changed=8 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

172.31.7.108 : ok=10 changed=9 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

(1) On an etcd node, look at the generated systemd unit that starts etcd at boot.

root@k8s-etcd1:~# cat /etc/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \
  --name=etcd-172.31.7.106 \
  --cert-file=/etc/kubernetes/ssl/etcd.pem \
  --key-file=/etc/kubernetes/ssl/etcd-key.pem \
  --peer-cert-file=/etc/kubernetes/ssl/etcd.pem \
  --peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --initial-advertise-peer-urls=https://172.31.7.106:2380 \
  --listen-peer-urls=https://172.31.7.106:2380 \
  --listen-client-urls=https://172.31.7.106:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://172.31.7.106:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=etcd-172.31.7.106=https://172.31.7.106:2380,etcd-172.31.7.107=https://172.31.7.107:2380,etcd-172.31.7.108=https://172.31.7.108:2380 \
  --initial-cluster-state=new \
  --data-dir=/var/lib/etcd \
  --wal-dir= \
  --snapshot-count=50000 \
  --auto-compaction-retention=1 \
  --auto-compaction-mode=periodic \
  --max-request-bytes=10485760 \
  --quota-backend-bytes=8589934592
Restart=always
RestartSec=15
LimitNOFILE=65536
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target

(2) Verify the current state of all etcd members.

root@k8s-etcd1:~# export NODE_IPS="172.31.7.106 172.31.7.107 172.31.7.108"

root@k8s-etcd1:~# for ip in ${NODE_IPS};do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health;done

https://172.31.7.106:2379 is healthy: successfully committed proposal: took = 11.633001ms

https://172.31.7.107:2379 is healthy: successfully committed proposal: took = 9.330344ms

https://172.31.7.108:2379 is healthy: successfully committed proposal: took = 10.720901ms

root@k8s-etcd1:~#
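The health check above can be turned into a pass/fail gate for scripts. This is a sketch: all_healthy is a hypothetical helper that counts "is healthy" lines on stdin, and the sample text is the output shown above.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the expected number of endpoints report healthy.
# all_healthy is a hypothetical helper; it reads `etcdctl endpoint health` output on stdin.
all_healthy() {
  # $1: expected number of healthy members
  awk -v want="$1" '/ is healthy:/ { ok++ }
                    END { exit (ok + 0 == want + 0) ? 0 : 1 }'
}

sample='https://172.31.7.106:2379 is healthy: successfully committed proposal: took = 11.633001ms
https://172.31.7.107:2379 is healthy: successfully committed proposal: took = 9.330344ms
https://172.31.7.108:2379 is healthy: successfully committed proposal: took = 10.720901ms'

if printf '%s\n' "$sample" | all_healthy 3; then
  echo "all 3 etcd members healthy"
else
  echo "etcd cluster degraded"
fi
```

In practice the for loop over NODE_IPS would be piped into the helper, with `2>&1` added so that the health lines are captured whichever stream etcdctl writes them to.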

3.4.3 Run ./ezctl setup k8s-s209-01 03 (install docker)

(1) Copy the Harbor self-signed certificate to the kube-master and kube-node nodes. (These nodes only need to pull images from Harbor.)

root@deploy:~# cat scp_key_ca.sh

#!/bin/bash

IP="

172.31.7.101

172.31.7.102

172.31.7.103

172.31.7.105

172.31.7.111

172.31.7.112

172.31.7.113

"

for node in ${IP};do

sshpass -p www.s209.com ssh-copy-id -o StrictHostKeyChecking=no ${node}

if [ $? -eq 0 ];then

echo "${node}: key copied, preparing the environment..."

ssh ${node} "mkdir /etc/docker/certs.d/harbor.s209.com -p"

echo "Harbor certificate directory created"

scp /etc/docker/certs.d/harbor.s209.com/harbor-ca.crt ${node}:/etc/docker/certs.d/harbor.s209.com/harbor-ca.crt

echo "Harbor certificate copied"

ssh ${node} "echo '172.31.7.104 harbor.s209.com' >> /etc/hosts"

echo "hosts file entry added"

else

echo "${node}: key copy failed"

fi

done

root@deploy:~# bash scp_key_ca.sh

(2) Look at the ansible playbook used during initialization

root@deploy:/etc/kubeasz# more playbooks/03.runtime.yml

# to install a container runtime

- hosts:

- kube_master

- kube_node

roles:

- { role: docker, when: "CONTAINER_RUNTIME == 'docker'" }

root@deploy:/etc/kubeasz# more roles/docker/templates/daemon.json.j2

"insecure-registries": {{ INSECURE_REG }},

(3) Run step 03 to install docker on kube-master1/2 and node1/2

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 03

...omitted...

PLAY RECAP **********************************************************************************************************************************************

172.31.7.101 : ok=18 changed=13 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0

172.31.7.102 : ok=15 changed=12 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0

172.31.7.111 : ok=15 changed=12 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0

172.31.7.112 : ok=15 changed=12 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0

(4) On a node, verify that pulling an image from the self-hosted Harbor registry works

root@k8s-node1:~# docker pull harbor.s209.com/baseimages/pause-amd64:3.4.1

root@k8s-node1:~# docker images

root@k8s-node1:~# docker rmi harbor.s209.com/baseimages/pause-amd64:3.4.1

3.4.4 Run ./ezctl setup k8s-s209-01 04 (install the kube-master nodes)

root@deploy:/etc/kubeasz# ln -sv /usr/bin/docker /usr/local/bin/

'/usr/local/bin/docker' -> '/usr/bin/docker'

(1) Look at the ansible playbook that is invoked.

root@deploy:~# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# cat playbooks/04.kube-master.yml

# to set up 'kube_master' nodes

- hosts: kube_master

roles:

- kube-lb

- kube-master

- kube-node

tasks:

- name: Making master nodes SchedulingDisabled

shell: "{{ bin_dir }}/kubectl cordon {{ inventory_hostname }} " # mark the master node as unschedulable

when: "inventory_hostname not in groups['kube_node']"

ignore_errors: true

- name: Setting master role name

shell: "{{ bin_dir }}/kubectl label node {{ inventory_hostname }} kubernetes.io/role=master --overwrite"

ignore_errors: true

root@deploy:/etc/kubeasz#

root@deploy:/etc/kubeasz# more roles/kube-master/tasks/main.yml

(2) Run step 04 to install the master nodes.

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 04

...omitted...

PLAY RECAP **********************************************************************************************************************************************

172.31.7.101 : ok=58 changed=42 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

172.31.7.102 : ok=54 changed=39 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

(3) Test kubectl

root@deploy:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

172.31.7.101 Ready,SchedulingDisabled master 7m39s v1.21.0

172.31.7.102 Ready,SchedulingDisabled master 7m41s v1.21.0

root@deploy:/etc/kubeasz# head /root/.kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR1RENDQXFDZ0F3SUJBZ0lVV0Maaac2tGMGd3WElQcUpVSS9pQ1lkLzArdXpBR009Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://172.31.7.101:6443

name: cluster1

contexts:

- context:

cluster: cluster1

user: admin

3.4.5 Run ./ezctl setup k8s-s209-01 05 (install the kube-node nodes, including kube-proxy)

(1) Look at the ansible role; it can be adjusted to your needs.

root@deploy:/etc/kubeasz# vi roles/kube-node/tasks/main.yml

root@deploy:/etc/kubeasz# ls roles/kube-node/templates/

cni-default.conf.j2 kube-proxy-config.yaml.j2 kubelet-config.yaml.j2 kubelet.service.j2

haproxy.service.j2 kube-proxy.service.j2 kubelet-csr.json.j2

root@deploy:/etc/kubeasz# vi roles/kube-node/templates/kube-proxy-config.yaml.j2 初始化安装前可以指定scheduler算法,根据安装需求设置;不修改也可以

比如设置

mode: "{{ PROXY_MODE }}"

ipvs:

scheduler: wrr 默认是rr

(2) Run step 05 to install the nodes:

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 05

... (output omitted) ...

PLAY RECAP **********************************************************************************************************************************************

172.31.7.111 : ok=37 changed=34 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

172.31.7.112 : ok=35 changed=33 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

(3) Check the nodes with kubectl:

root@deploy:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

172.31.7.101 Ready,SchedulingDisabled master 17m v1.21.0

172.31.7.102 Ready,SchedulingDisabled master 17m v1.21.0

172.31.7.111 Ready node 107s v1.21.0

172.31.7.112 Ready node 107s v1.21.0
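After adding nodes, the STATUS column is what matters: every node should start with `Ready` (masters additionally show `SchedulingDisabled`). As a small sketch, the filter below lists any node that is not ready; `not_ready_nodes` is a hypothetical helper that only parses the text layout shown in this walkthrough.

```shell
#!/bin/sh
# not_ready_nodes: read "kubectl get node" output on stdin and print the
# name of every node whose STATUS does not begin with "Ready".
# The header line (NR == 1) is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}
```

It could be used as `kubectl get node | not_ready_nodes`; empty output means all nodes report Ready.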

3.4.6 ./ezctl setup k8s-s209-01 06: install the network plugin

(1) Review the Ansible playbook and switch the images to the self-hosted Harbor registry:

root@deploy:/etc/kubeasz# cat playbooks/06.network.yml

# to install network plugin, only one can be choosen

- hosts:

  - kube_master

  - kube_node

  roles:

  - { role: calico, when: "CLUSTER_NETWORK == 'calico'" }

  - { role: cilium, when: "CLUSTER_NETWORK == 'cilium'" }

  - { role: flannel, when: "CLUSTER_NETWORK == 'flannel'" }

  - { role: kube-router, when: "CLUSTER_NETWORK == 'kube-router'" }

  - { role: kube-ovn, when: "CLUSTER_NETWORK == 'kube-ovn'" }

root@deploy:/etc/kubeasz# ls roles/calico/templates/

calico-csr.json.j2 calico-v3.15.yaml.j2 calico-v3.3.yaml.j2 calico-v3.4.yaml.j2 calico-v3.8.yaml.j2 calicoctl.cfg.j2

root@deploy:/etc/kubeasz# vi roles/calico/templates/calico-v3.15.yaml.j2

image: calico/kube-controllers:v3.15.3 ==> harbor.s209.com/baseimages/calico-kube-controllers:v3.15.3

image: calico/cni:v3.15.3 ==> harbor.s209.com/baseimages/calico-cni:v3.15.3

image: calico/pod2daemon-flexvol:v3.15.3 ==> harbor.s209.com/baseimages/calico-pod2daemon-flexvol:v3.15.3

image: calico/node:v3.15.3 ==> harbor.s209.com/baseimages/calico-node:v3.15.3
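The four image changes above follow one pattern: `calico/<name>:<tag>` becomes `<registry>/calico-<name>:<tag>`. A minimal sketch of doing the rewrite with sed instead of editing by hand follows; `rewrite_calico_images` is a hypothetical helper, and the default registry is simply the one used in this walkthrough.

```shell
#!/bin/sh
# rewrite_calico_images: filter stdin to stdout, rewriting lines such as
#   image: calico/cni:v3.15.3
# into
#   image: <registry>/calico-cni:v3.15.3
# $1 is the target registry/project (assumed default: harbor.s209.com/baseimages).
rewrite_calico_images() {
    registry="${1:-harbor.s209.com/baseimages}"
    sed -E "s#(image:[[:space:]]*)calico/([^:[:space:]]+):#\1${registry}/calico-\2:#"
}
```

For instance, `rewrite_calico_images < roles/calico/templates/calico-v3.15.yaml.j2 > /tmp/calico-v3.15.yaml.j2` writes a modified copy that can be compared with `diff` before it replaces the template. The images still have to be pushed to the registry under those names beforehand.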

(2) Run step 06 to install the network plugin:

root@deploy:/etc/kubeasz# ./ezctl setup k8s-s209-01 06

... (output omitted) ...

PLAY RECAP **********************************************************************************************************************************************

172.31.7.101 : ok=17 changed=15 unreachable=0 failed=0 skipped=51 rescued=0 ignored=0

172.31.7.102 : ok=12 changed=10 unreachable=0 failed=0 skipped=40 rescued=0 ignored=0

172.31.7.111 : ok=12 changed=10 unreachable=0 failed=0 skipped=40 rescued=0 ignored=0

172.31.7.112 : ok=12 changed=11 unreachable=0 failed=0 skipped=40 rescued=0 ignored=0

(3) Check the routing table on a node:

root@k8s-node1:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.31.0.2 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.31.0.0 0.0.0.0 255.255.248.0 U 0 0 0 eth0

root@k8s-node1:~# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+----------+-------------+

| 172.31.7.112 | node-to-node mesh | up | 15:44:25 | Established |

| 172.31.7.101 | node-to-node mesh | up | 15:44:39 | Established |

| 172.31.7.102 | node-to-node mesh | up | 15:44:47 | Established |

+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

root@k8s-node1:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.31.0.2 0.0.0.0 UG 0 0 0 eth0

10.200.72.0 0.0.0.0 255.255.255.192 U 0 0 0 *

10.200.135.64 172.31.7.102 255.255.255.192 UG 0 0 0 tunl0

10.200.198.192 172.31.7.101 255.255.255.192 UG 0 0 0 tunl0

10.200.255.192 172.31.7.112 255.255.255.192 UG 0 0 0 tunl0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.31.0.0 0.0.0.0 255.255.248.0 U 0 0 0 eth0
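In the routing table that includes the Calico routes, each remote node's pod block (mask 255.255.255.192) is reached through the `tunl0` device with that node's address as the gateway, while the local block 10.200.72.0 has no gateway. The sketch below pulls out only the tunnel routes so they can be compared against the peers listed by `calicoctl node status`; `tunnel_routes` is a hypothetical helper name.

```shell
#!/bin/sh
# tunnel_routes: read "route -n" output on stdin and print
# "<pod-block> via <node-ip>" for every route whose interface is tunl0.
tunnel_routes() {
    awk '$NF == "tunl0" { print $1 " via " $2 }'
}
```

It could be run on a node as `route -n | tunnel_routes`; in this cluster one line per remote node would be expected.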

(4) Create containers in the cluster to test network connectivity and check DNS:

Create the test containers:

root@deploy:/etc/kubeasz# kubectl run net-test1 --image=harbor.s209.com/baseimages/alpine:latest sleep 300000

pod/net-test1 created

root@deploy:/etc/kubeasz# kubectl run net-test2 --image=harbor.s209.com/baseimages/alpine:latest sleep 300000

pod/net-test2 created

root@deploy:/etc/kubeasz# kubectl run net-test3 --image=harbor.s209.com/baseimages/alpine:latest sleep 300000

pod/net-test3 created

root@deploy:/etc/kubeasz#

Check which nodes the pods were scheduled on:

root@deploy:/etc/kubeasz# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 0 35s

default net-test2 1/1 Running 0 24s

default net-test3 1/1 Running 0 19s

kube-system calico-kube-controllers-f578df54b-2htdd 1/1 Running 0 15m

kube-system calico-node-2pk7x 1/1 Running 0 15m

kube-system calico-node-hgbnn 1/1 Running 0 15m

kube-system calico-node-m6gwd 1/1 Running 0 15m

kube-system calico-node-zvtdv 1/1 Running 0 15m

root@deploy:/etc/kubeasz# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 58s 10.200.72.6 172.31.7.111 <none> <none>

default net-test2 1/1 Running 0 47s 10.200.255.196 172.31.7.112 <none> <none>

default net-test3 1/1 Running 0 42s 10.200.72.7 172.31.7.111 <none> <none>

kube-system calico-kube-controllers-f578df54b-2htdd 1/1 Running 0 15m 172.31.7.112 172.31.7.112 <none> <none>

kube-system calico-node-2pk7x 1/1 Running 0 15m 172.31.7.102 172.31.7.102 <none> <none>

kube-system calico-node-hgbnn 1/1 Running 0 15m 172.31.7.111 172.31.7.111 <none> <none>

kube-system calico-node-m6gwd 1/1 Running 0 15m 172.31.7.101 172.31.7.101 <none> <none>

kube-system calico-node-zvtdv 1/1 Running 0 15m 172.31.7.112 172.31.7.112 <none> <none>

Test network connectivity between pods/containers:

root@deploy:/etc/kubeasz# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 1e:8b:58:24:52:55 brd ff:ff:ff:ff:ff:ff

inet 10.200.72.6/32 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.200.255.196

PING 10.200.255.196 (10.200.255.196): 56 data bytes

64 bytes from 10.200.255.196: seq=0 ttl=62 time=0.641 ms

^C

--- 10.200.255.196 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.641/0.641/0.641 ms

/ # ping 10.200.72.7

PING 10.200.72.7 (10.200.72.7): 56 data bytes

64 bytes from 10.200.72.7: seq=0 ttl=63 time=0.172 ms

^C

--- 10.200.72.7 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.172/0.172/0.172 ms

/ # ping 223.5.5.5

PING 223.5.5.5 (223.5.5.5): 56 data bytes

64 bytes from 223.5.5.5: seq=0 ttl=127 time=16.761 ms

^C

--- 223.5.5.5 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 16.761/16.761/16.761 ms

/ #

Test domain name resolution. It fails at this point because no DNS service (CoreDNS) has been deployed yet:

/ # cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.s209.local svc.s209.local s209.local

options ndots:5

/ # ping www.baidu.com

ping: bad address 'www.baidu.com'

3.4.7 Install CoreDNS

(1) Download the Kubernetes release packages; CoreDNS is installed from a YAML file contained in them:

https://github.com/kubernetes/kubernetes/releases

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md

https://dl.k8s.io/v1.21.4/kubernetes.tar.gz

https://dl.k8s.io/v1.21.4/kubernetes-client-linux-amd64.tar.gz

https://dl.k8s.io/v1.21.4/kubernetes-server-linux-amd64.tar.gz

https://dl.k8s.io/v1.21.4/kubernetes-node-linux-amd64.tar.gz

(2) Prepare the CoreDNS image in advance and push it to the local Harbor (pulling it directly from Google's registry may fail):

root@deploy:~# docker load -i coredns-image-v1.8.3.tar.gz

root@deploy:~# docker tag k8s.gcr.io/coredns/coredns:v1.8.3 harbor.s209.com/baseimages/coredns:v1.8.3

root@deploy:~# docker push harbor.s209.com/baseimages/coredns:v1.8.3

(3) Extract the packages to get the coredns.yaml file and modify it (the YAML file and the image version must match):

root@deploy:/usr/local/src# ls

kubernetes-client-linux-amd64.tar.gz kubernetes-node-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz kubernetes.tar.gz

root@deploy:/usr/local/src# tar zxvf kubernetes.tar.gz

root@deploy:/usr/local/src# tar zxvf kubernetes-server-linux-amd64.tar.gz

root@deploy:/usr/local/src# tar zxvf kubernetes-node-linux-amd64.tar.gz

root@deploy:/usr/local/src# tar zxvf kubernetes-client-linux-amd64.tar.gz

root@deploy:/usr/local/src# ls kubernetes/cluster/addons/

OWNERS cluster-loadbalancing dns-horizontal-autoscaler kube-proxy node-problem-detector

README.md dashboard fluentd-elasticsearch metadata-agent rbac

addon-manager device-plugins fluentd-gcp metadata-proxy storage-class

calico-policy-controller dns ip-masq-agent metrics-server volumesnapshots

root@deploy:/usr/local/src# ls kubernetes/cluster/addons/dns

OWNERS coredns kube-dns nodelocaldns

root@deploy:/usr/local/src# ls kubernetes/cluster/addons/dns/coredns/

Makefile coredns.yaml.base coredns.yaml.in coredns.yaml.sed transforms2salt.sed transforms2sed.sed

root@deploy:/usr/local/src#

root@deploy:/usr/local/src# cp kubernetes/cluster/addons/dns/coredns/coredns.yaml.base /root/

root@deploy:/usr/local/src# cd

root@deploy:~# mv coredns.yaml.base coredns-s209.yaml

root@deploy:~# vi coredns-s209.yaml

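kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa { ==> kubernetes s209.local in-addr.arpa ip6.arpa { (use your own cluster domain)

forward . /etc/resolv.conf { ==> adjust to your needs, for example forward to an internal company DNS server or a public DNS server: forward . 223.5.5.5 {

image: k8s.gcr.io/coredns/coredns:v1.8.0 ==> change to the image in the local Harbor (the CoreDNS image must be downloaded in advance)

memory: __DNS__MEMORY__LIMIT__ ==> memory: 256Mi (production environments are typically given about 4G; 256 MB is used here for testing)

clusterIP: __DNS__SERVER__ ==> clusterIP: 10.100.0.2

Sample coredns-s209.yaml file: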
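Three of these edits only fill in the `__DNS__DOMAIN__`, `__DNS__MEMORY__LIMIT__`, and `__DNS__SERVER__` placeholders, so they can be scripted. This is a rough sketch assuming the placeholder names shown above; `render_coredns` is a hypothetical helper, and the `image:` and `forward` lines still need to be edited separately.

```shell
#!/bin/sh
# render_coredns: fill in the three placeholders of coredns.yaml.base.
#   $1 = cluster DNS domain   (e.g. s209.local)
#   $2 = DNS service clusterIP (e.g. 10.100.0.2)
#   $3 = memory limit          (e.g. 256Mi)
# Reads the template on stdin and writes the result to stdout.
render_coredns() {
    domain="$1"
    server="$2"
    memory="$3"
    sed -e "s/__DNS__DOMAIN__/${domain}/g" \
        -e "s/__DNS__SERVER__/${server}/g" \
        -e "s/__DNS__MEMORY__LIMIT__/${memory}/g"
}
```

With the values used in this walkthrough, that would be `render_coredns s209.local 10.100.0.2 256Mi < coredns.yaml.base > coredns-s209.yaml`. The clusterIP has to match the `nameserver` that pods receive in /etc/resolv.conf.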

apiVersion: v1

kind: ServiceAccount

metadata:

  name: coredns

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

rules:

- apiGroups:

  - ""

  resources:

  - endpoints

  - services

  - pods

  - namespaces

  verbs:

  - list

  - watch

- apiGroups:

  - discovery.k8s.io

  resources:

  - endpointslices

  verbs:

  - list

  - watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  annotations:

    rbac.authorization.kubernetes.io/autoupdate: "true"

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:coredns

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: system:coredns

subjects:

- kind: ServiceAccount

  name: coredns

  namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: coredns

  namespace: kube-system

data:

  Corefile: |

    .:53 {

        errors

        health {

            lameduck 5s

        }

        bind 0.0.0.0

        ready

        kubernetes s209.local in-addr.arpa ip6.arpa {

            fallthrough in-addr.arpa ip6.arpa

        }

        prometheus :9153

        forward . /etc/resolv.conf {

            max_concurrent 1000

        }

        cache 30

        loop

        reload

        loadbalance

    }

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: coredns

  namespace: kube-system

  labels:

    k8s-app: kube-dns

    kubernetes.io/name: "CoreDNS"

spec:

  # replicas: not specified here:

  # 1. Default is 1.

  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

  strategy:

    type: RollingUpdate

    rollingUpdate:

      maxUnavailable: 1

  selector:

    matchLabels:

      k8s-app: kube-dns

  template:

    metadata:

      labels:

        k8s-app: kube-dns

    spec:

      priorityClassName: system-cluster-critical

      serviceAccountName: coredns

      tolerations:

        - key: "CriticalAddonsOnly"

          operator: "Exists"

      nodeSelector:

        kubernetes.io/os: linux

      affinity:

        podAntiAffinity:

          preferredDuringSchedulingIgnoredDuringExecution:

          - weight: 100

            podAffinityTerm:

              labelSelector:

                matchExpressions:

                  - key: k8s-app

                    operator: In

                    values: ["kube-dns"]

              topologyKey: kubernetes.io/hostname

      containers:

      - name: coredns

        image: harbor.s209.com/baseimages/coredns:v1.8.3

        imagePullPolicy: IfNotPresent

        resources:

          limits:

            memory: 170Mi

          requests:

            cpu: 100m

            memory: 70Mi

        args: [ "-conf", "/etc/coredns/Corefile" ]

        volumeMounts:

        - name: config-volume

          mountPath: /etc/coredns

          readOnly: true

        ports:

        - containerPort: 53

          name: dns

          protocol: UDP

        - containerPort: 53

          name: dns-tcp

          protocol: TCP

        - containerPort: 9153

          name: metrics

          protocol: TCP

        securityContext:

          allowPrivilegeEscalation: false

          capabilities:

            add:

            - NET_BIND_SERVICE

            drop:

            - all

          readOnlyRootFilesystem: true

        livenessProbe:

          httpGet:

            path: /health

            port: 8080

            scheme: HTTP

          initialDelaySeconds: 60

          timeoutSeconds: 5

          successThreshold: 1

          failureThreshold: 5

        readinessProbe:

          httpGet:

            path: /ready

            port: 8181

            scheme: HTTP

      dnsPolicy: Default

      volumes:

        - name: config-volume

          configMap:

            name: coredns

            items:

            - key: Corefile

              path: Corefile

---

apiVersion: v1

kind: Service

metadata:

  name: kube-dns

  namespace: kube-system

  annotations:

    prometheus.io/port: "9153"

    prometheus.io/scrape: "true"

  labels:

    k8s-app: kube-dns

    kubernetes.io/cluster-service: "true"

    kubernetes.io/name: "CoreDNS"

spec:

  type: NodePort

  selector:

    k8s-app: kube-dns

  clusterIP: 10.100.0.2

  ports:

  - name: dns

    port: 53

    protocol: UDP

  - name: dns-tcp

    port: 53

    protocol: TCP

  - name: metrics

    port: 9153

    protocol: TCP

    targetPort: 9153

    nodePort: 30009

(4) Create the CoreDNS pod from the YAML file:

root@deploy:~# kubectl apply -f coredns-s209.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

root@deploy:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 0 8h

default net-test2 1/1 Running 0 8h

default net-test3 1/1 Running 0 8h

kube-system calico-kube-controllers-f578df54b-2htdd 1/1 Running 1 42h

kube-system calico-node-2pk7x 1/1 Running 1 42h

kube-system calico-node-hgbnn 1/1 Running 1 42h

kube-system calico-node-m6gwd 1/1 Running 1 42h

kube-system calico-node-zvtdv 1/1 Running 1 42h

kube-system coredns-f8b7989c7-l7fvq 1/1 Running 0 87s

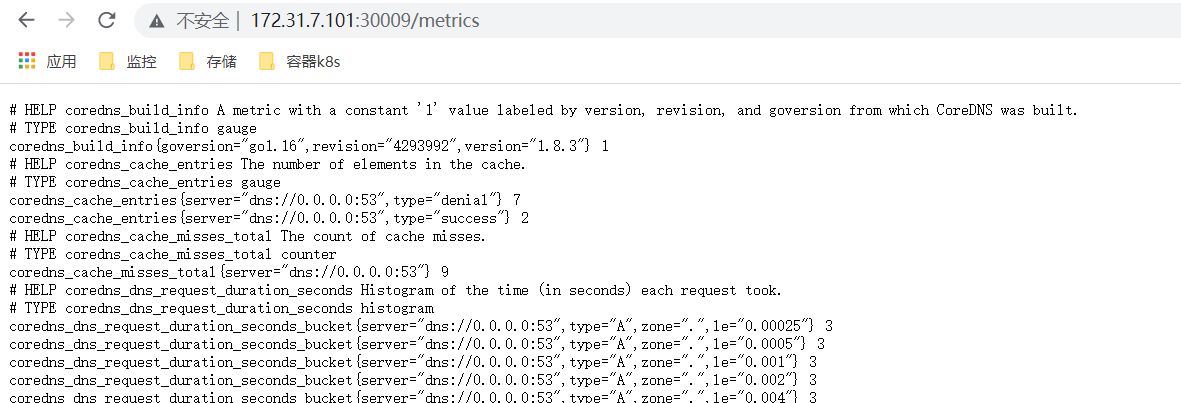

(5) Verify domain name resolution inside a pod, and check the metrics exposed on the master and node nodes:

root@deploy:~# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.s209.local svc.s209.local s209.local

options ndots:5

/ # ping www.mi.com

PING www.mi.com (1.180.19.171): 56 data bytes

64 bytes from 1.180.19.171: seq=0 ttl=127 time=19.555 ms

64 bytes from 1.180.19.171: seq=1 ttl=127 time=17.979 ms

^C

--- www.mi.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 17.979/18.767/19.555 ms

/ #

Open http://ip:30009/metrics on a node in a browser to view the metrics.
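The pod's /etc/resolv.conf combines a search list with `options ndots:5`, which affects how a short or external name is looked up. The sketch below is a simplified model of the rule described in resolv.conf(5): a name with fewer dots than `ndots` is tried with each search domain first and as-is last. `candidates` is a hypothetical helper, and real resolver libraries differ in detail (the musl resolver in Alpine, for example, is not identical to glibc).

```shell
#!/bin/sh
# candidates NAME NDOTS SEARCH_DOMAIN...
# Print, in order, the fully qualified names a resolver would try under
# the simplified resolv.conf(5) rule. Illustrative model only.
candidates() {
    name="$1"
    ndots="$2"
    shift 2
    case "$name" in
        *.) printf '%s\n' "$name"; return 0 ;;  # trailing dot: already absolute
    esac
    # Count the dots in the name.
    dots=$(printf '%s' "$name" | tr -cd '.' | wc -c | tr -d ' ')
    # Enough dots: the name itself is tried first.
    if [ "$dots" -ge "$ndots" ]; then
        printf '%s.\n' "$name"
    fi
    for domain in "$@"; do
        printf '%s.%s.\n' "$name" "$domain"
    done
    # Too few dots: the name itself is tried last.
    if [ "$dots" -lt "$ndots" ]; then
        printf '%s.\n' "$name"
    fi
}
```

Running `candidates www.mi.com 5 default.svc.s209.local svc.s209.local s209.local` lists three cluster-suffixed names before `www.mi.com.` itself, which is why external lookups from pods can generate several queries against CoreDNS.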

3.4.8 Install the Kubernetes dashboard

https://github.com/kubernetes/dashboard/releases

Images required:

kubernetesui/dashboard:v2.3.1

kubernetesui/metrics-scraper:v1.0.6

Installation reference:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

(1) Pull the images and push them to the local Harbor:

root@deploy:~# docker pull kubernetesui/dashboard:v2.3.1

root@deploy:~# docker tag kubernetesui/dashboard:v2.3.1 harbor.s209.com/baseimages/dashboard:v2.3.1

root@deploy:~# docker push harbor.s209.com/baseimages/dashboard:v2.3.1

root@deploy:~# docker pull kubernetesui/metrics-scraper:v1.0.6

root@deploy:~# docker tag kubernetesui/metrics-scraper:v1.0.6 harbor.s209.com/baseimages/metrics-scraper:v1.0.6

root@deploy:~# docker push harbor.s209.com/baseimages/metrics-scraper:v1.0.6
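Each image goes through the same pull, tag, and push sequence, and the order matters: an image can only be tagged after it has been pulled. As a sketch, the helper below prints the commands in the right order for any number of images so they can be reviewed before running; `mirror_cmds` is a hypothetical name, and it deliberately does not call docker itself.

```shell
#!/bin/sh
# mirror_cmds REGISTRY IMAGE...
# Print the docker pull/tag/push commands needed to copy each IMAGE into
# REGISTRY, keeping only the last path component of the image name
# (kubernetesui/dashboard:v2.3.1 -> REGISTRY/dashboard:v2.3.1).
mirror_cmds() {
    registry="$1"
    shift
    for image in "$@"; do
        target="${registry}/${image##*/}"
        printf 'docker pull %s\n' "$image"
        printf 'docker tag %s %s\n' "$image" "$target"
        printf 'docker push %s\n' "$target"
    done
}
```

For the two dashboard images this would be `mirror_cmds harbor.s209.com/baseimages kubernetesui/dashboard:v2.3.1 kubernetesui/metrics-scraper:v1.0.6`; piping the reviewed output to `sh` executes it.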

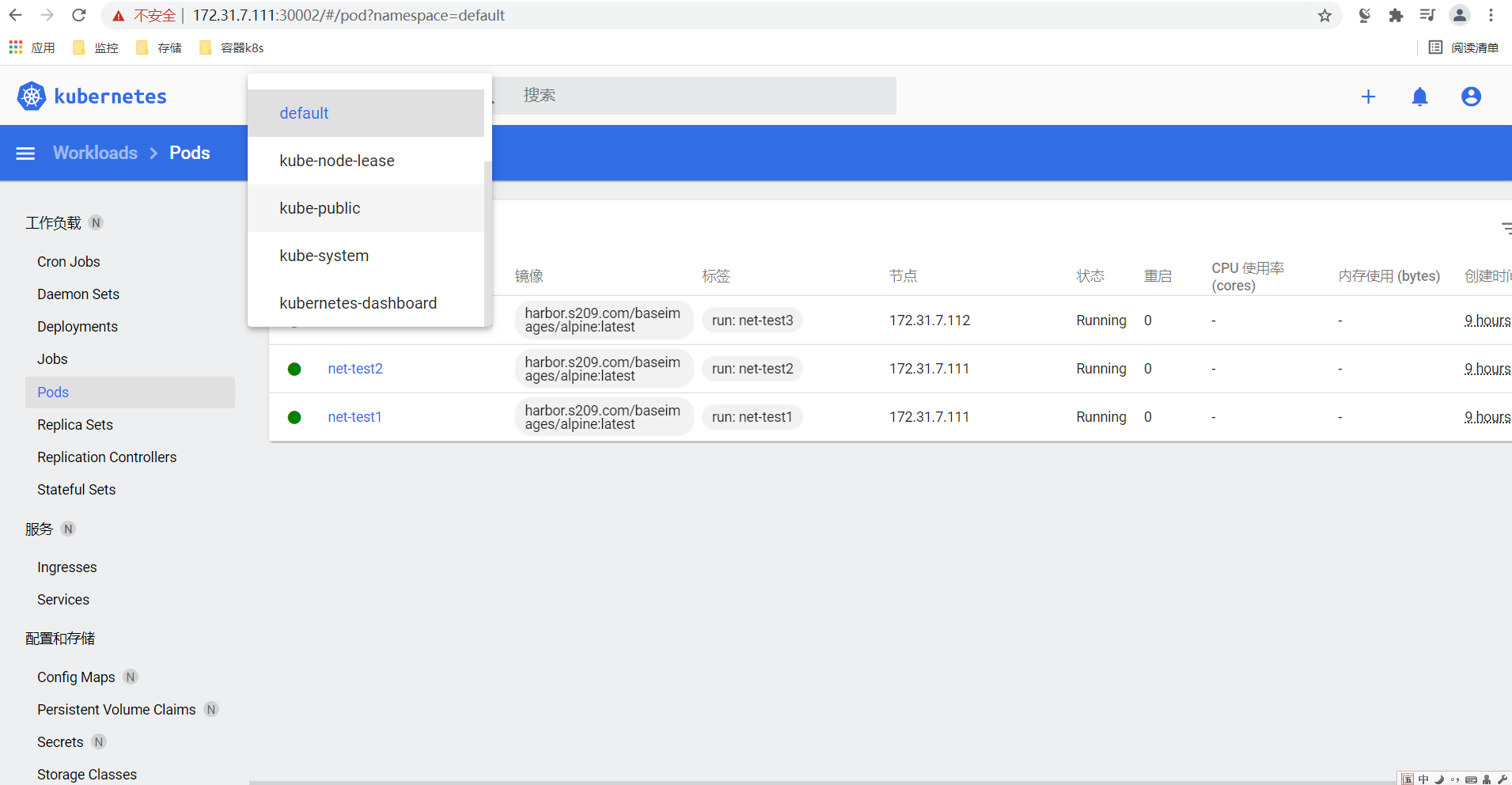

(2) Download and modify the dashboard YAML file (add a NodePort to expose it externally, and pull the images from the local Harbor):

root@deploy:~# kubectl apply -f dashboard-v2.3.1.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

root@deploy:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 8h

net-test2 1/1 Running 0 8h

net-test3 1/1 Running 0 8h

root@deploy:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 0 8h

default net-test2 1/1 Running 0 8h

default net-test3 1/1 Running 0 8h

kube-system calico-kube-controllers-f578df54b-2htdd 1/1 Running 1 43h

kube-system calico-node-2pk7x 1/1 Running 1 43h

kube-system calico-node-hgbnn 1/1 Running 1 43h

kube-system calico-node-m6gwd 1/1 Running 1 43h

kube-system calico-node-zvtdv 1/1 Running 1 43h

kube-system coredns-f8b7989c7-l7fvq 1/1 Running 0 46m

kubernetes-dashboard dashboard-metrics-scraper-7d89477dc9-x87h6 1/1 Running 0 16s

kubernetes-dashboard kubernetes-dashboard-86d567f8bf-69fbq 1/1 Running 0 16s

root@deploy:~# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 8h 10.200.72.12 172.31.7.111 <none> <none>

default net-test2 1/1 Running 0 8h 10.200.72.13 172.31.7.111 <none> <none>

default net-test3 1/1 Running 0 8h 10.200.255.199 172.31.7.112 <none> <none>

kube-system calico-kube-controllers-f578df54b-2htdd 1/1 Running 1 43h 172.31.7.112 172.31.7.112 <none> <none>

kube-system calico-node-2pk7x 1/1 Running 1 43h 172.31.7.102 172.31.7.102 <none> <none>

kube-system calico-node-hgbnn 1/1 Running 1 43h 172.31.7.111 172.31.7.111 <none> <none>

kube-system calico-node-m6gwd 1/1 Running 1 43h 172.31.7.101 172.31.7.101 <none> <none>

kube-system calico-node-zvtdv 1/1 Running 1 43h 172.31.7.112 172.31.7.112 <none> <none>

kube-system coredns-f8b7989c7-l7fvq 1/1 Running 0 47m 10.200.72.14 172.31.7.111 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-7d89477dc9-x87h6 1/1 Running 0 97s 10.200.72.15 172.31.7.111 <none> <none>

kubernetes-dashboard kubernetes-dashboard-86d567f8bf-69fbq 1/1 Running 0 97s 10.200.255.200 172.31.7.112 <none> <none>

Sample dashboard-v2.3.1.yaml file (the Service is changed to type NodePort so that container port 8443 is exposed externally):

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

#     http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

  name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30002

  selector:

    k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-certs

  namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-csrf

  namespace: kubernetes-dashboard

type: Opaque

data:

  csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-key-holder

  namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-settings

  namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

rules:

  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.

  - apiGroups: [""]

    resources: ["secrets"]

    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

    verbs: ["get", "update", "delete"]

    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

  - apiGroups: [""]

    resources: ["configmaps"]

    resourceNames: ["kubernetes-dashboard-settings"]

    verbs: ["get", "update"]

    # Allow Dashboard to get metrics.

  - apiGroups: [""]

    resources: ["services"]

    resourceNames: ["heapster", "dashboard-metrics-scraper"]

    verbs: ["proxy"]

  - apiGroups: [""]

    resources: ["services/proxy"]

    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

    verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

rules:

  # Allow Metrics Scraper to get metrics from the Metrics server

  - apiGroups: ["metrics.k8s.io"]

    resources: ["pods", "nodes"]

    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: Role

  name: kubernetes-dashboard

subjects:

  - kind: ServiceAccount

    name: kubernetes-dashboard

    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: kubernetes-dashboard

subjects:

  - kind: ServiceAccount

    name: kubernetes-dashboard

    namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  replicas: 1

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      k8s-app: kubernetes-dashboard

  template:

    metadata:

      labels:

        k8s-app: kubernetes-dashboard

    spec:

      containers:

        - name: kubernetes-dashboard

          image: harbor.s209.com/baseimages/dashboard:v2.3.1

          imagePullPolicy: Always

          ports:

            - containerPort: 8443

              protocol: TCP

          args:

            - --auto-generate-certificates

            - --namespace=kubernetes-dashboard

            # Uncomment the following line to manually specify Kubernetes API server Host

            # If not specified, Dashboard will attempt to auto discover the API server and connect

            # to it. Uncomment only if the default does not work.

            # - --apiserver-host=http://my-address:port

          volumeMounts:

            - name: kubernetes-dashboard-certs

              mountPath: /certs

              # Create on-disk volume to store exec logs

            - mountPath: /tmp

              name: tmp-volume

          livenessProbe:

            httpGet:

              scheme: HTTPS

              path: /

              port: 8443

            initialDelaySeconds: 30

            timeoutSeconds: 30

          securityContext:

            allowPrivilegeEscalation: false

            readOnlyRootFilesystem: true

            runAsUser: 1001

            runAsGroup: 2001

      volumes:

        - name: kubernetes-dashboard-certs

          secret:

            secretName: kubernetes-dashboard-certs

        - name: tmp-volume

          emptyDir: {}

      serviceAccountName: kubernetes-dashboard

      nodeSelector:

        "kubernetes.io/os": linux

      # Comment the following tolerations if Dashboard must not be deployed on master

      tolerations:

        - key: node-role.kubernetes.io/master

          effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: dashboard-metrics-scraper

  name: dashboard-metrics-scraper

  namespace: kubernetes-dashboard

spec:

  ports:

    - port: 8000

      targetPort: 8000

  selector:

    k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

  labels:

    k8s-app: dashboard-metrics-scraper

  name: dashboard-metrics-scraper

  namespace: kubernetes-dashboard

spec:

  replicas: 1

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      k8s-app: dashboard-metrics-scraper

  template:

    metadata:

      labels:

        k8s-app: dashboard-metrics-scraper

      annotations:

        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

    spec:

      containers:

        - name: dashboard-metrics-scraper

          image: harbor.s209.com/baseimages/metrics-scraper:v1.0.6

          ports:

            - containerPort: 8000

              protocol: TCP

          livenessProbe:

            httpGet:

              scheme: HTTP

              path: /

              port: 8000

            initialDelaySeconds: 30

            timeoutSeconds: 30

          volumeMounts:

          - mountPath: /tmp

            name: tmp-volume

          securityContext:

            allowPrivilegeEscalation: false

            readOnlyRootFilesystem: true

            runAsUser: 1001

            runAsGroup: 2001

      serviceAccountName: kubernetes-dashboard

      nodeSelector:

        "kubernetes.io/os": linux

      # Comment the following tolerations if Dashboard must not be deployed on master

      tolerations:

        - key: node-role.kubernetes.io/master

          effect: NoSchedule

      volumes:

        - name: tmp-volume

          emptyDir: {}

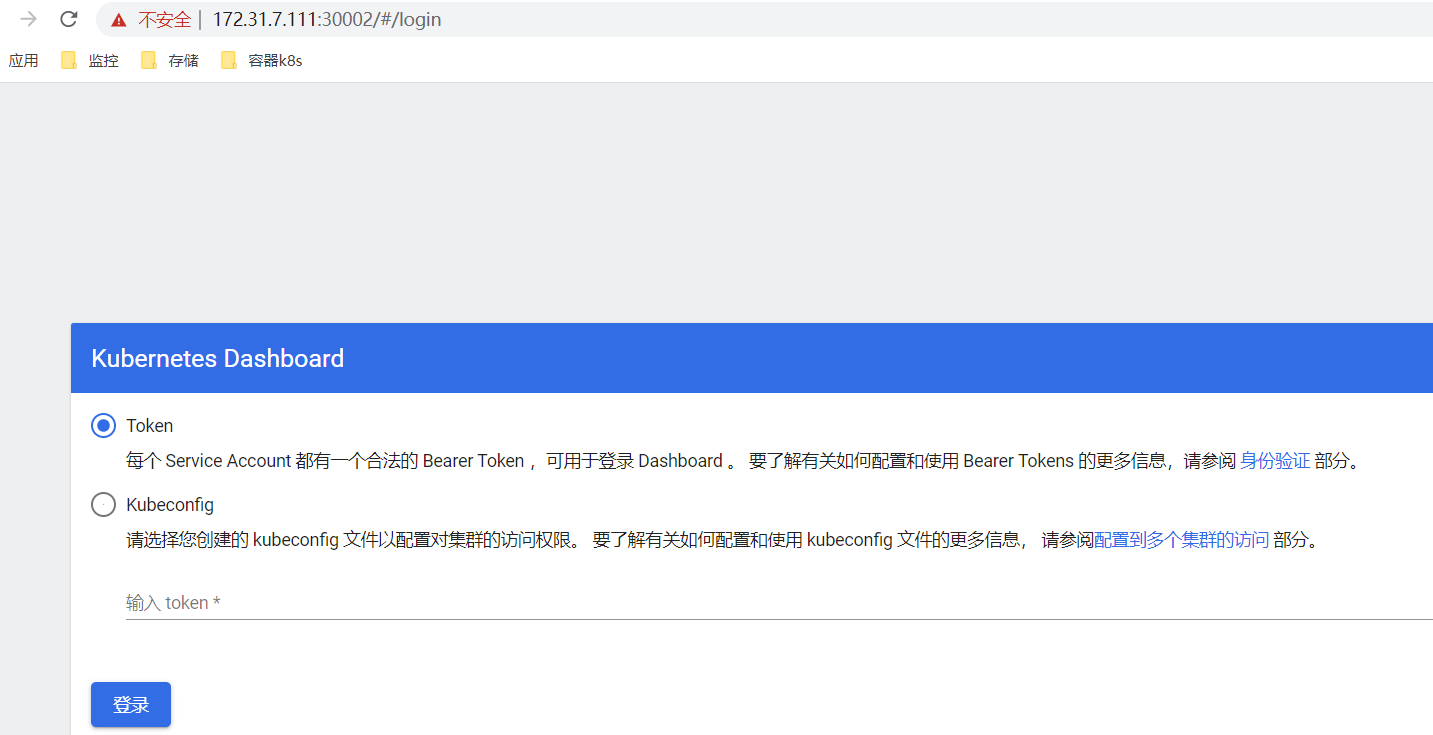

(3) Test in a browser against port 30002 on a master or node IP (it must be accessed as https://ip:30002):

Accessing it over plain http prompts you to use https;

Access it over https and log in to verify;

(4) Create a dashboard user from a YAML file and log in with its token:

Sample YAML file for creating the user:

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kubernetes-dashboard

Create the user and get the token:

root@deploy:~# kubectl apply -f admin-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

root@deploy:~# kubectl get secrets -A | grep admin    # find the namespace and the secret name of the account

root@deploy:~# kubectl describe secrets admin-user-token-5b7dk -n kubernetes-dashboard    # get the account's token, then use it to log in through the browser

Log in through the browser to verify;
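The `kubectl describe secrets` output contains several fields, and only the value after `token:` is pasted into the dashboard login page. A small sketch for extracting just that value follows; `extract_token` is a hypothetical helper that assumes the usual `token: <value>` line in the describe output.

```shell
#!/bin/sh
# extract_token: read "kubectl describe secrets <name>" output on stdin
# and print only the value of the first "token:" field.
extract_token() {
    awk '$1 == "token:" { print $2; exit }'
}
```

With the secret name from this walkthrough, that would be `kubectl describe secrets admin-user-token-5b7dk -n kubernetes-dashboard | extract_token`. The secret name suffix is generated, so it will differ in another cluster.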

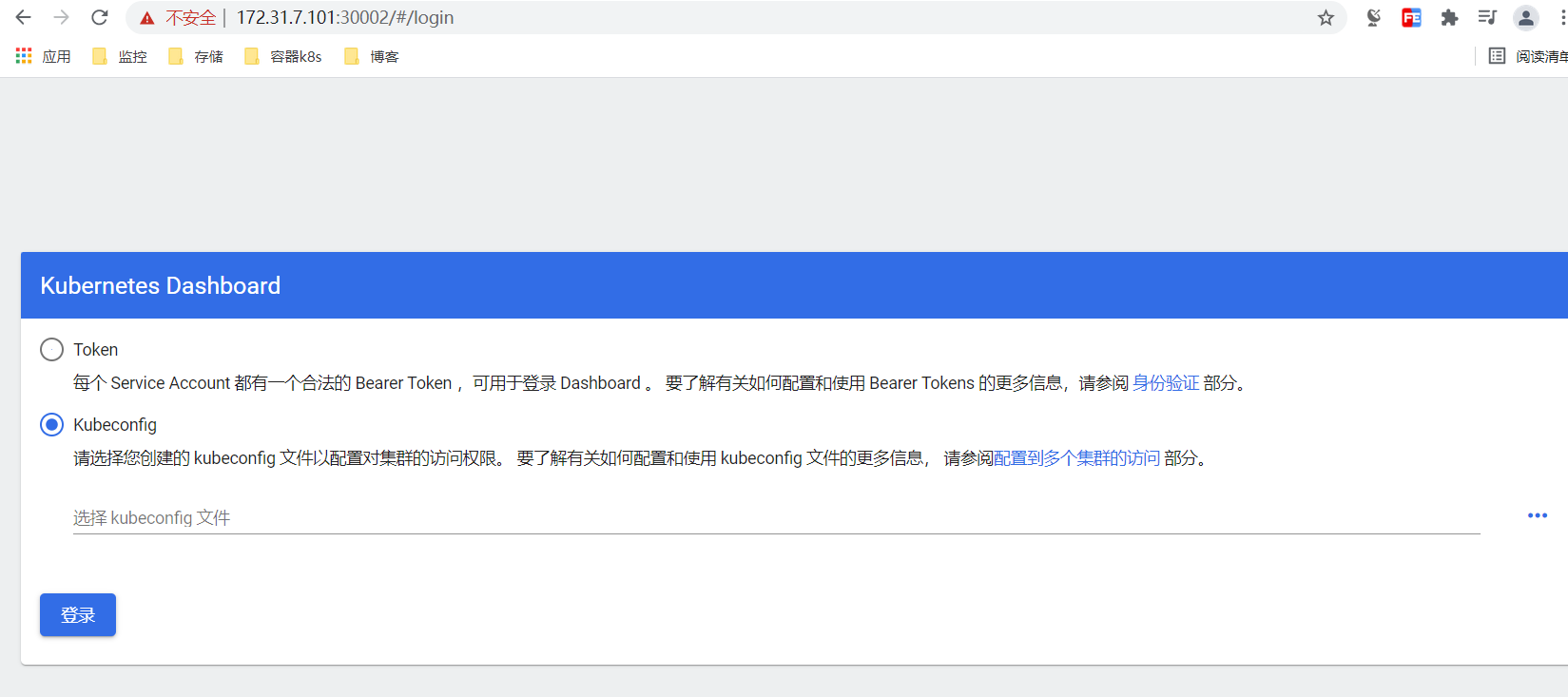

(5) Configure dashboard login with a kubeconfig file:

root@deploy:/opt# kubectl get secrets -A | grep admin

kubernetes-dashboard admin-user-token-5b7dk kubernetes.io/service-account-token 3 13d

root@deploy:/opt# kubectl describe secrets admin-user-token-5b7dk -n kubernetes-dashboard

... (token information is shown here) ...

root@deploy:~# cp /root/.kube/config /opt/kubeconfig

root@deploy:~# vi /opt/kubeconfig

......

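    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkdsQjd2QnRU

Append the token as a new line at the end of the file; mind the indentation, which is 4 spaces.

Then download the kubeconfig file to the client machine. When logging in at https://172.31.7.101:30002 in a browser, choose the kubeconfig option and select this file.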
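The copy-and-append step can also be scripted. This is a minimal sketch under the same assumption as the manual edit: the user entry is the last block of the kubeconfig file, so a line indented by 4 spaces appended at the end lands inside it. `add_token` is a hypothetical helper name.

```shell
#!/bin/sh
# add_token SRC DST TOKEN
# Copy kubeconfig SRC to DST and append "    token: TOKEN" (4-space indent)
# as the last line. SRC is left unchanged. Assumes SRC ends with a newline
# and that its final block is the user entry.
add_token() {
    src="$1"
    dst="$2"
    token="$3"
    cp "$src" "$dst" && printf '    token: %s\n' "$token" >> "$dst"
}
```

For example, `add_token /root/.kube/config /opt/kubeconfig "$TOKEN"`, where `$TOKEN` holds the value taken from the `kubectl describe secrets` output. The resulting file grants cluster-admin access, so it should be handled as carefully as the original kubeconfig.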

3.4.9 ./ezctl destroy k8s-s209-01: delete the cluster

Proceed with caution!
