Day 4: Using Docker, Dockerfile, and Harbor

1. Introduction to Docker

1.1 In technical terms, Docker is an application container.

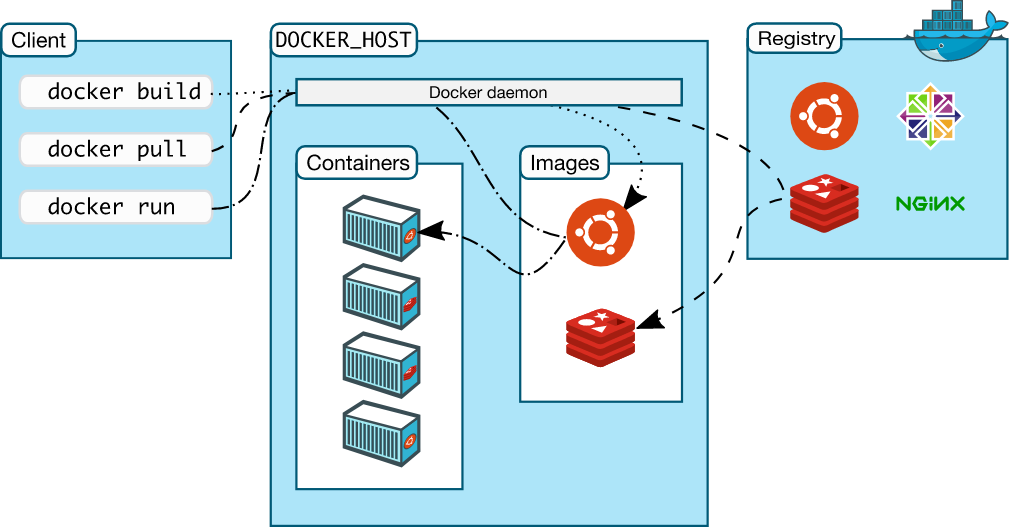

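1.2 Docker components: Docker host (Host), Docker server (Server), Docker client (Client), Docker registry (Registry), Docker images (Images), and Docker containers (Container).

1.3 Docker advantages: high resource utilization (one physical machine can run hundreds of containers), lower overhead, and faster startup.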

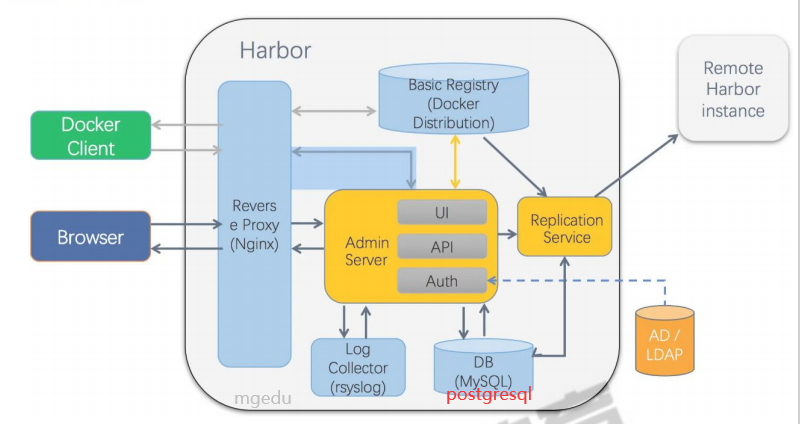

1.4 Docker architecture: see the diagram in the official documentation (https://docs.docker.com/get-started/overview/#docker-architecture).

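2. Linux Namespaces

Namespaces are a low-level Linux concept implemented in the kernel; several types of namespaces are built into it. All Docker containers run under the same Docker main process and share the host kernel, and each container runs in the host's user space. Every container needs an isolated runtime space similar to that of a virtual machine, but container technology provides the runtime environment for a given service within a process. It also protects the host kernel from interference by other processes, covering the filesystem, network, process space, and so on. Isolation between container runtime spaces is currently achieved mainly through the following techniques: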

| Namespace | Function | System call flag | Kernel version |
| --- | --- | --- | --- |
| MNT Namespace (mount) | Isolates mount points and filesystems | CLONE_NEWNS | Linux 2.4.19 |
| IPC Namespace (Inter-Process Communication) | Isolates inter-process communication | CLONE_NEWIPC | Linux 2.6.19 |
| UTS Namespace (UNIX Timesharing System) | Isolates the hostname | CLONE_NEWUTS | Linux 2.6.19 |
| PID Namespace (Process Identification) | Isolates processes | CLONE_NEWPID | Linux 2.6.24 |
| Net Namespace (network) | Isolates the network | CLONE_NEWNET | Linux 2.6.29 |
| User Namespace (user) | Isolates users | CLONE_NEWUSER | Linux 3.8 |
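The namespaces in the table can be inspected from a shell, because the kernel exposes one symlink per namespace type under /proc/<pid>/ns. The following is a minimal sketch, assuming a Linux host with /proc mounted; the helper name `ns_id` is hypothetical and not part of Docker.

```shell
#!/usr/bin/env bash
# Print the namespace identifier of a process for one namespace type.
# Usage: ns_id TYPE [PID]   (TYPE is one of mnt, ipc, uts, pid, net, user)
ns_id() {
    local ns_type="$1" pid="${2:-self}"
    # Each entry under /proc/<pid>/ns is a symlink such as "uts:[4026531838]".
    readlink "/proc/${pid}/ns/${ns_type}"
}

# Show the six namespace types from the table for the current shell.
for t in mnt ipc uts pid net user; do
    printf '%-5s %s\n' "$t" "$(ns_id "$t")"
done
```

Two processes that share a namespace report the same identifier. Passing a container's host PID (for example from `docker inspect -f '{{.State.Pid}}' <container>`) as the second argument should therefore yield identifiers that differ from the host's for the namespaces Docker isolates.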

2.1 MNT Namespace

Each container needs its own root filesystem and its own user space, so that services can start inside the container and use its runtime environment. A container cannot access host resources; the host uses chroot to confine the container to a designated directory.

root@docker01:~# docker run -it --rm centos:7.9.2009 bash

[root@783655fccf1e /]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

[root@783655fccf1e /]# ls /    # each container has its own root filesystem

anaconda-post.log dev home lib64 mnt proc run srv tmp var

bin etc lib media opt root sbin sys usr

[root@783655fccf1e /]#

Host:

root@docker01:~# ls /

bin cdrom dev home lib32 libx32 mnt proc run snap swap.img tmp var

boot data etc lib lib64 media opt root sbin srv sys usr

2.2 IPC Namespace

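Processes inside one container can communicate with each other and access each other's data (shared memory, caches, and so on), but they cannot access the data of other containers.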

2.3 UTS Namespace

The UTS namespace (UNIX Timesharing System, which covers the name, version, and underlying architecture of the running kernel) is used for system identification. It contains the hostname and the domain name, so each container has its own hostname, independent of the host and of the other containers on it.

root@docker01:~# docker run -it --rm centos:7.9.2009 bash

[root@783655fccf1e /]# uname -a    # the kernel version shown here is the host's

Linux 783655fccf1e 5.4.0-81-generic #91-Ubuntu SMP Thu Jul 15 19:09:17 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

[root@783655fccf1e /]# hostname

783655fccf1e

[root@783655fccf1e /]# cat /etc/issue

\S

Kernel \r on an \m

[root@783655fccf1e /]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

[root@783655fccf1e /]#

Host:

root@docker01:~# uname -a

Linux docker01.s209.local 5.4.0-81-generic #91-Ubuntu SMP Thu Jul 15 19:09:17 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

root@docker01:~# cat /etc/issue

Ubuntu 20.04.3 LTS \n \l

root@docker01:~#

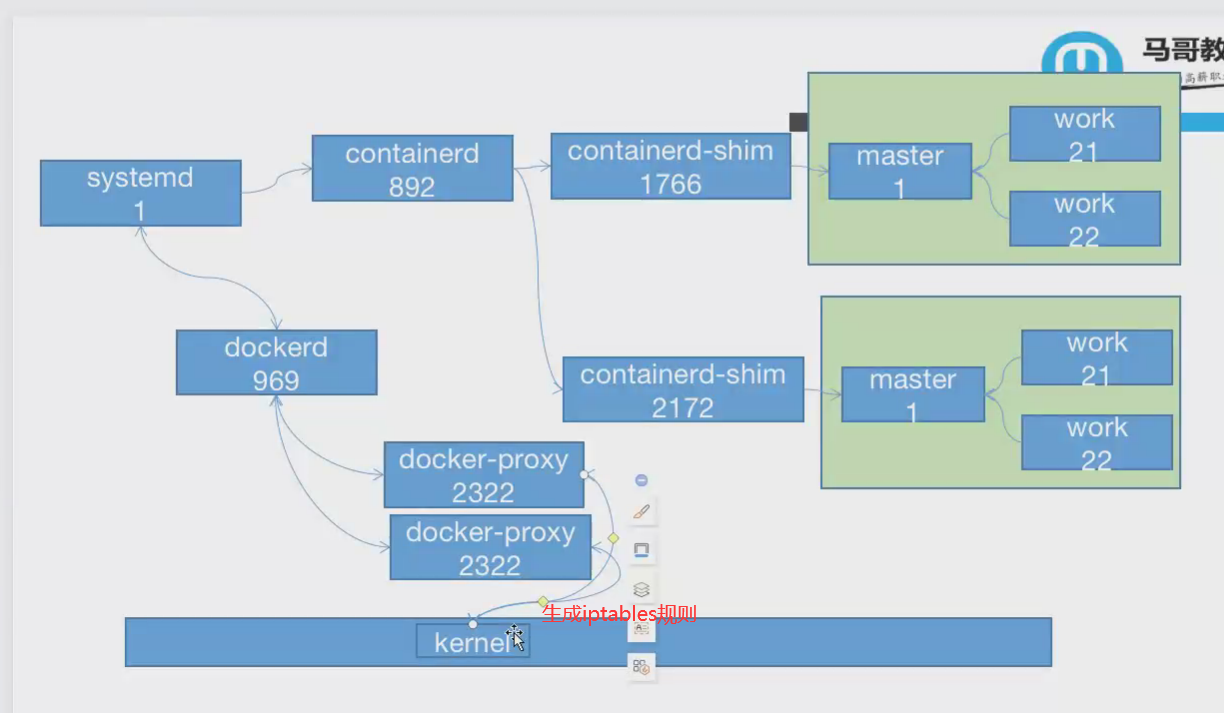

2.4 PID Namespace

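On Linux, the process with PID 1 (init/systemd) is the parent of all other processes. Each container likewise needs a parent process to manage its child processes. The processes of different containers are isolated through the PID namespace, which handles matters such as duplicate PID numbers across containers and the creation and reaping of child processes by a container's main process.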

root@docker01:~# pstree -p 1

systemd(1)─┬─VGAuthService(706)

├─accounts-daemon(758)─┬─{accounts-daemon}(795)

│ └─{accounts-daemon}(816)

├─agetty(797)

├─atd(782)

├─containerd(784)─┬─containerd-shim(1667)─┬─bash(1692)

│ │ ├─{containerd-shim}(1668)

│ │ ├─{containerd-shim}(1669)

│ │ ├─{containerd-shim}(1670)

│ │ ├─{containerd-shim}(1671)

│ │ ├─{containerd-shim}(1672)

│ │ ├─{containerd-shim}(1674)

│ │ ├─{containerd-shim}(1714)

│ │ └─{containerd-shim}(1845)

│ ├─containerd-shim(1774)─┬─bash(1798)

│ │ ├─{containerd-shim}(1775)

│ │ ├─{containerd-shim}(1776)

│ │ ├─{containerd-shim}(1777)

│ │ ├─{containerd-shim}(1778)

│ │ ├─{containerd-shim}(1779)

│ │ ├─{containerd-shim}(1781)

│ │ ├─{containerd-shim}(1822)

│ │ └─{containerd-shim}(1860)

│ ├─{containerd}(834)

│ ├─{containerd}(835)

│ ├─{containerd}(836)

│ ├─{containerd}(837)

│ ├─{containerd}(866)

│ ├─{containerd}(867)

│ ├─{containerd}(868)

│ ├─{containerd}(1562)

│ └─{containerd}(1772)

├─cron(762)

├─dbus-daemon(763)

├─dockerd(878)─┬─{dockerd}(921)

│ ├─{dockerd}(922)

│ ├─{dockerd}(923)

│ ├─{dockerd}(926)

│ ├─{dockerd}(929)

│ ├─{dockerd}(933)

│ ├─{dockerd}(934)

│ ├─{dockerd}(1590)

│ └─{dockerd}(1604)

├─multipathd(656)─┬─{multipathd}(657)

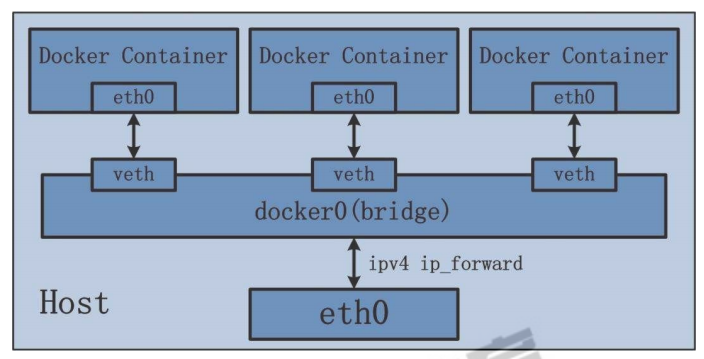

2.5 Net Namespace

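Like a virtual machine, each container has its own network interface, listening ports, and TCP/IP stack. Docker uses a network namespace and creates a vethX interface so that the container gets its own IP address on a bridge, usually docker0. docker0 is a Linux virtual bridge; a bridge is a data link layer (layer 2) device in the OSI model that segments the network by MAC address and forwards data between segments.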

root@docker01:~# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242ab7baad8 no veth83adacd

veth9baccd4

vethee01cf9

root@docker01:~# ip a |grep mtu

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

7: vethee01cf9@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

9: veth9baccd4@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

13: veth83adacd@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

root@docker01:~#

root@docker01:~# iptables -nvL

root@docker01:~# iptables -t nat -nvL

Logical network diagram:

2.6 User Namespace

Different containers may contain the same user and group names, or the same UIDs and GIDs. How are the user spaces of the containers kept apart?

User namespaces allow the same user names, UIDs, and GIDs to be created in different containers on a host while limiting each user's scope to its own container. Containers A and B can have accounts with the same name and ID, but each account is valid only inside its own container and cannot access another container's filesystem; the two are isolated and do not affect each other.

root@docker01:~# docker exec -it 9fea1012de93 bash

root@9fea1012de93:/# id    # every container has the superuser root and other system accounts, with the same IDs as in other containers

uid=0(root) gid=0(root) groups=0(root)

root@9fea1012de93:/# cat /etc/passwd

root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

sys:x:3:3:sys:/dev:/usr/sbin/nologin

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/usr/sbin/nologin

man:x:6:12:man:/var/cache/man:/usr/sbin/nologin

lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin

mail:x:8:8:mail:/var/mail:/usr/sbin/nologin

news:x:9:9:news:/var/spool/news:/usr/sbin/nologin

uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin

proxy:x:13:13:proxy:/bin:/usr/sbin/nologin

www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin

backup:x:34:34:backup:/var/backups:/usr/sbin/nologin

list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin

irc:x:39:39:ircd:/var/run/ircd:/usr/sbin/nologin

gnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin

nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin

_apt:x:100:65534::/nonexistent:/usr/sbin/nologin

nginx:x:101:101:nginx user,,,:/nonexistent:/bin/false

root@9fea1012de93:/#

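3. Verifying Docker cgroups

3.1 Linux control groups

If a container has no resource limits, the host lets it use an unlimited amount of memory. A bug may cause a program to keep requesting memory until the host runs out. To avoid this, the host needs to limit the resources allocated to containers, such as CPU and memory. Linux cgroups (Linux Control Groups) mainly cap the resources a group of processes can use, including CPU, memory, disk, and network bandwidth. They can also set process priorities and suspend and resume processes.

Cgroups are enabled in the kernel by default.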

Verify cgroup support in the kernel configuration:

root@docker01:~# cat /boot/config-5.4.0-81-generic |grep CGROUP

Memory controller options within cgroups:

root@docker01:~# cat /boot/config-5.4.0-81-generic |grep MEMCG

List the cgroup hierarchies on the system:

root@docker01:~# ls /sys/fs/cgroup/

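By default a container has no resource limits and can use as much of a given resource as the host kernel scheduler allows. Docker provides ways to limit how much memory or CPU a container can use, set through runtime flags of the docker run command.

Many of these features require support from the host kernel. Use docker info to check; if a feature is disabled in the kernel, a warning may appear at the end of the output, for example: WARNING: No swap limit support.

In production, avoid overcommitting memory and reserve some for the host system. CPU can be overcommitted at 1:4 or 1:8. Disk is best not overcommitted, or at most 1:1.2.

Common flags: -m limits memory, --cpus limits CPU.

The test image used here is lorel/docker-stress-ng. Its help output lists the available stressors:

root@docker01:~# docker run -it --rm -m 128m --name container1 lorel/docker-stress-ng --help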

stress-ng, version 0.03.11

Usage: stress-ng [OPTION [ARG]]

--h, --help show help

--affinity N start N workers that rapidly change CPU affinity

--affinity-ops N stop when N affinity bogo operations completed

--affinity-rand change affinity randomly rather than sequentially

--aio N start N workers that issue async I/O requests

--aio-ops N stop when N bogo async I/O requests completed

--aio-requests N number of async I/O requests per worker

-a N, --all N start N workers of each stress test

-b N, --backoff N wait of N microseconds before work starts

-B N, --bigheap N start N workers that grow the heap using calloc()

--bigheap-ops N stop when N bogo bigheap operations completed

--bigheap-growth N grow heap by N bytes per iteration

--brk N start N workers performing rapid brk calls

--brk-ops N stop when N brk bogo operations completed

--brk-notouch don't touch (page in) new data segment page

--bsearch start N workers that exercise a binary search

--bsearch-ops stop when N binary search bogo operations completed

--bsearch-size number of 32 bit integers to bsearch

-C N, --cache N start N CPU cache thrashing workers

--cache-ops N stop when N cache bogo operations completed (x86 only)

--cache-flush flush cache after every memory write (x86 only)

--cache-fence serialize stores

--class name specify a class of stressors, use with --sequential

--chmod N start N workers thrashing chmod file mode bits

--chmod-ops N stop chmod workers after N bogo operations

-c N, --cpu N start N workers spinning on sqrt(rand())

--cpu-ops N stop when N cpu bogo operations completed

-l P, --cpu-load P load CPU by P %%, 0=sleep, 100=full load (see -c)

--cpu-method m specify stress cpu method m, default is all

-D N, --dentry N start N dentry thrashing processes

--dentry-ops N stop when N dentry bogo operations completed

--dentry-order O specify dentry unlink order (reverse, forward, stride)

--dentries N create N dentries per iteration

--dir N start N directory thrashing processes

--dir-ops N stop when N directory bogo operations completed

-n, --dry-run do not run

--dup N start N workers exercising dup/close

--dup-ops N stop when N dup/close bogo operations completed

--epoll N start N workers doing epoll handled socket activity

--epoll-ops N stop when N epoll bogo operations completed

--epoll-port P use socket ports P upwards

--epoll-domain D specify socket domain, default is unix

--eventfd N start N workers stressing eventfd read/writes

--eventfd-ops N stop eventfd workers after N bogo operations

--fault N start N workers producing page faults

--fault-ops N stop when N page fault bogo operations completed

--fifo N start N workers exercising fifo I/O

--fifo-ops N stop when N fifo bogo operations completed

--fifo-readers N number of fifo reader processes to start

--flock N start N workers locking a single file

--flock-ops N stop when N flock bogo operations completed

-f N, --fork N start N workers spinning on fork() and exit()

--fork-ops N stop when N fork bogo operations completed

--fork-max P create P processes per iteration, default is 1

--fstat N start N workers exercising fstat on files

--fstat-ops N stop when N fstat bogo operations completed

--fstat-dir path fstat files in the specified directory

--futex N start N workers exercising a fast mutex

--futex-ops N stop when N fast mutex bogo operations completed

--get N start N workers exercising the get*() system calls

--get-ops N stop when N get bogo operations completed

-d N, --hdd N start N workers spinning on write()/unlink()

--hdd-ops N stop when N hdd bogo operations completed

--hdd-bytes N write N bytes per hdd worker (default is 1GB)

--hdd-direct minimize cache effects of the I/O

--hdd-dsync equivalent to a write followed by fdatasync

--hdd-noatime do not update the file last access time

--hdd-sync equivalent to a write followed by fsync

--hdd-write-size N set the default write size to N bytes

--hsearch start N workers that exercise a hash table search

--hsearch-ops stop when N hash search bogo operations completed

--hsearch-size number of integers to insert into hash table

--inotify N start N workers exercising inotify events

--inotify-ops N stop inotify workers after N bogo operations

-i N, --io N start N workers spinning on sync()

--io-ops N stop when N io bogo operations completed

--ionice-class C specify ionice class (idle, besteffort, realtime)

--ionice-level L specify ionice level (0 max, 7 min)

-k, --keep-name keep stress process names to be 'stress-ng'

--kill N start N workers killing with SIGUSR1

--kill-ops N stop when N kill bogo operations completed

--lease N start N workers holding and breaking a lease

--lease-ops N stop when N lease bogo operations completed

--lease-breakers N number of lease breaking processes to start

--link N start N workers creating hard links

--link-ops N stop when N link bogo operations completed

--lsearch start N workers that exercise a linear search

--lsearch-ops stop when N linear search bogo operations completed

--lsearch-size number of 32 bit integers to lsearch

-M, --metrics print pseudo metrics of activity

--metrics-brief enable metrics and only show non-zero results

--memcpy N start N workers performing memory copies

--memcpy-ops N stop when N memcpy bogo operations completed

--mmap N start N workers stressing mmap and munmap

--mmap-ops N stop when N mmap bogo operations completed

--mmap-async using asynchronous msyncs for file based mmap

--mmap-bytes N mmap and munmap N bytes for each stress iteration

--mmap-file mmap onto a file using synchronous msyncs

--mmap-mprotect enable mmap mprotect stressing

--msg N start N workers passing messages using System V messages

--msg-ops N stop msg workers after N bogo messages completed

--mq N start N workers passing messages using POSIX messages

--mq-ops N stop mq workers after N bogo messages completed

--mq-size N specify the size of the POSIX message queue

--nice N start N workers that randomly re-adjust nice levels

--nice-ops N stop when N nice bogo operations completed

--no-madvise don't use random madvise options for each mmap

--null N start N workers writing to /dev/null

--null-ops N stop when N /dev/null bogo write operations completed

-o, --open N start N workers exercising open/close

--open-ops N stop when N open/close bogo operations completed

-p N, --pipe N start N workers exercising pipe I/O

--pipe-ops N stop when N pipe I/O bogo operations completed

-P N, --poll N start N workers exercising zero timeout polling

--poll-ops N stop when N poll bogo operations completed

--procfs N start N workers reading portions of /proc

--procfs-ops N stop procfs workers after N bogo read operations

--pthread N start N workers that create multiple threads

--pthread-ops N stop pthread workers after N bogo threads created

--pthread-max P create P threads at a time by each worker

-Q, --qsort N start N workers exercising qsort on 32 bit random integers

--qsort-ops N stop when N qsort bogo operations completed

--qsort-size N number of 32 bit integers to sort

-q, --quiet quiet output

-r, --random N start N random workers

--rdrand N start N workers exercising rdrand instruction (x86 only)

--rdrand-ops N stop when N rdrand bogo operations completed

-R, --rename N start N workers exercising file renames

--rename-ops N stop when N rename bogo operations completed

--sched type set scheduler type

--sched-prio N set scheduler priority level N

--seek N start N workers performing random seek r/w IO

--seek-ops N stop when N seek bogo operations completed

--seek-size N length of file to do random I/O upon

--sem N start N workers doing semaphore operations

--sem-ops N stop when N semaphore bogo operations completed

--sem-procs N number of processes to start per worker

--sendfile N start N workers exercising sendfile

--sendfile-ops N stop after N bogo sendfile operations

--sendfile-size N size of data to be sent with sendfile

--sequential N run all stressors one by one, invoking N of them

--sigfd N start N workers reading signals via signalfd reads

--sigfd-ops N stop when N bogo signalfd reads completed

--sigfpe N start N workers generating floating point math faults

--sigfpe-ops N stop when N bogo floating point math faults completed

--sigsegv N start N workers generating segmentation faults

--sigsegv-ops N stop when N bogo segmentation faults completed

-S N, --sock N start N workers doing socket activity

--sock-ops N stop when N socket bogo operations completed

--sock-port P use socket ports P to P + number of workers - 1

--sock-domain D specify socket domain, default is ipv4

--stack N start N workers generating stack overflows

--stack-ops N stop when N bogo stack overflows completed

-s N, --switch N start N workers doing rapid context switches

--switch-ops N stop when N context switch bogo operations completed

--symlink N start N workers creating symbolic links

--symlink-ops N stop when N symbolic link bogo operations completed

--sysinfo N start N workers reading system information

--sysinfo-ops N stop when sysinfo bogo operations completed

-t N, --timeout N timeout after N seconds

-T N, --timer N start N workers producing timer events

--timer-ops N stop when N timer bogo events completed

--timer-freq F run timer(s) at F Hz, range 1000 to 1000000000

--tsearch start N workers that exercise a tree search

--tsearch-ops stop when N tree search bogo operations completed

--tsearch-size number of 32 bit integers to tsearch

--times show run time summary at end of the run

-u N, --urandom N start N workers reading /dev/urandom

--urandom-ops N stop when N urandom bogo read operations completed

--utime N start N workers updating file timestamps

--utime-ops N stop after N utime bogo operations completed

--utime-fsync force utime meta data sync to the file system

-v, --verbose verbose output

--verify verify results (not available on all tests)

-V, --version show version

-m N, --vm N start N workers spinning on anonymous mmap

--vm-bytes N allocate N bytes per vm worker (default 256MB)

--vm-hang N sleep N seconds before freeing memory

--vm-keep redirty memory instead of reallocating

--vm-ops N stop when N vm bogo operations completed

--vm-locked lock the pages of the mapped region into memory

--vm-method m specify stress vm method m, default is all

--vm-populate populate (prefault) page tables for a mapping

--wait N start N workers waiting on child being stop/resumed

--wait-ops N stop when N bogo wait operations completed

--zero N start N workers reading /dev/zero

--zero-ops N stop when N /dev/zero bogo read operations completed

Example: stress-ng --cpu 8 --io 4 --vm 2 --vm-bytes 128M --fork 4 --timeout 10s

Note: Sizes can be suffixed with B,K,M,G and times with s,m,h,d,y

root@docker01:~#

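3.2 Container memory limits

Docker can enforce a hard memory limit, which allows the container to use no more than a given amount of memory.

Docker can also enforce a soft limit, which allows the container to use as much memory as it needs unless the kernel detects that the host is running low on memory.

-m or --memory: the maximum amount of memory the container can use. If this option is set, the minimum allowed value is 4m (4 megabytes).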

<1> Limit memory to 128M with -m 128m:

root@docker01:~# docker run -it --rm -m 128m --name container1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M

(--vm 2 --vm-bytes 256M are stress-ng options: start two workers, each allocating up to 256M of memory.)

Check the effect of the limit:

root@docker01:~# docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

ae0a79cc885d container1 90.36% 121.1MiB / 128MiB 94.60% 1.32kB / 0B 904MB / 22kB 5

Check the limit recorded for the container:

root@docker01:~# cat /sys/fs/cgroup/memory/docker/ae0a79cc885d98b4f433eeb00c6a7e1e018dbfe356d5497064f7c6b23d5bc3ee/memory.limit_in_bytes

134217728

Convert the unit:

root@docker01:~# bc

134217728/1024/1024

128

<2> Raise the memory limit to 256M at runtime:

root@docker01:~# bc

256*1024*1024

268435456

quit

root@docker01:~# echo "268435456" > /sys/fs/cgroup/memory/docker/ae0a79cc885d98b4f433eeb00c6a7e1e018dbfe356d5497064f7c6b23d5bc3ee/memory.limit_in_bytes

root@docker01:~#

Check the effect:

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

ae0a79cc885d container1 92.68% 253.3MiB / 256MiB 98.95% 1.46kB / 0B 4.59GB / 22kB 5
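The unit conversions done interactively with bc above can also be scripted. This is a minimal sketch; the function names `mib_to_bytes` and `bytes_to_mib` are hypothetical helpers, not Docker commands.

```shell
#!/usr/bin/env bash
# Convert a size in MiB to bytes, the unit stored in memory.limit_in_bytes.
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# Convert a byte count read from memory.limit_in_bytes back to MiB.
bytes_to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}

mib_to_bytes 256         # prints 268435456
bytes_to_mib 134217728   # prints 128
```

Writing to the cgroup file directly works on the cgroup v1 layout shown here; on cgroup v2 hosts the limit file is named memory.max and the directory layout differs. `docker update --memory 256m --memory-swap 256m container1` is the Docker-level way to change the limit of a running container.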

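3.3 Container CPU limits

A host has only a few CPU cores, yet it can run hundreds or thousands of processes at the same time to handle different tasks. Processes can share a core because CPU is a compressible resource: one core can run many processes through scheduling, but only one process runs on a core at any given instant. The Linux kernel schedules processes with CFS (Completely Fair Scheduler).

CPU time slices

- Real-time priority: 0-99

- Non-real-time priority (nice): -20 to 19, corresponding to process priorities 100-139

CPU-intensive workloads: the lower the priority the better. Compute-intensive tasks perform large amounts of computation and consume CPU, for example calculating pi, processing data, or decoding HD video; they depend entirely on CPU power. (Load balancing, HAProxy)

I/O-intensive workloads: set the priority value somewhat higher. Tasks involving network or disk I/O are I/O-intensive; they consume little CPU and spend most of their time waiting for I/O. (nginx and Java mainly consume network and disk I/O; databases are I/O-intensive.)

--cpus: specifies how much CPU a container can use. For example, if the host has two CPUs and --cpus="1.5" is set, the container can use at most 1.5 CPUs. (On a 4-core host the usage may be spread across all four cores, as long as the total is 1.5 cores.) This is equivalent to setting --cpu-period="100000" (CPU scheduling period) and --cpu-quota="150000" (CPU scheduling quota). --cpus is available in Docker 1.13 and later and is intended to replace --cpu-period and --cpu-quota, which simplifies configuration. The value cannot exceed the host's total number of cores (the count the operating system sees after hyper-threading).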

<1> With only one CPU core on the test machine, assigning two CPUs to a container fails:

root@docker01:~# docker run -it --rm --cpus 2 --name container2 lorel/docker-stress-ng --cpu 4 --vm 4

docker: Error response from daemon: Range of CPUs is from 0.01 to 1.00, as there are only 1 CPUs available.

See 'docker run --help'.

root@docker01:~# grep processor /proc/cpuinfo

processor : 0

root@docker01:~#

<2> Test again after adding CPUs to the test machine:

root@docker01:~# grep processor /proc/cpuinfo

processor : 0

processor : 1

processor : 2

processor : 3

root@docker01:~# docker run -it --rm --cpus 2 --name container2 lorel/docker-stress-ng --cpu 4 --vm 4

stress-ng: info: [1] defaulting to a 86400 second run per stressor

stress-ng: info: [1] dispatching hogs: 4 cpu, 4 vm

Check the effect of the limit:

root@docker01:~# docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

1ca0d3322ec7 container2 200.02% 1.013GiB / 1.913GiB 52.94% 1.18kB / 0B 774kB / 0B

Check the limit on the host where the container runs:

root@docker01:~# cat /sys/fs/cgroup/cpu,cpuacct/docker/1ca0d3322ec7c571b77248d82203f4c9edb53b1f0ace547c8f57061be0f850aa/cpu.cfs_quota_us

200000

root@docker01:~#

The value is 200000. Each CPU core is converted to a percentage in units of 1000, so 2 cores is 200000/1000 = 200%, 4 cores is 400000/1000 = 400%, and so on. Equivalently, the quota divided by the default period of 100000 gives the number of CPUs: 200000/100000 = 2.
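The relationship between --cpus, the CFS period, and the value in cpu.cfs_quota_us can be worked out with a short script. This is a sketch under the assumption that the default period of 100000 microseconds is in use; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Translate a --cpus value into the CFS quota (microseconds per period).
# Usage: cpus_to_quota CPUS [PERIOD_US]   (period defaults to 100000)
cpus_to_quota() {
    awk -v cpus="$1" -v period="${2:-100000}" \
        'BEGIN { printf "%.0f\n", cpus * period }'
}

# Translate a quota into the maximum CPU percentage for that period.
quota_to_percent() {
    awk -v quota="$1" -v period="${2:-100000}" \
        'BEGIN { printf "%.0f\n", quota * 100 / period }'
}

cpus_to_quota 1.5         # prints 150000
cpus_to_quota 2           # prints 200000
quota_to_percent 200000   # prints 200
```

awk is used because shell arithmetic handles integers only, while --cpus accepts fractional values such as 1.5.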

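4. Building nginx, Tomcat, and application images from Dockerfiles

4.1 Container specifications

Besides Docker, container technologies include CoreOS's rkt and Alibaba's Pouch. To keep the container ecosystem standardized and sustainable, the Linux Foundation, Docker, Microsoft, Red Hat, Google, IBM, and other companies jointly founded the Open Container Initiative (OCI) in June 2015 to define open, standard container specifications. OCI has so far published two specifications, the runtime spec and the image format spec. As long as containers developed by different vendors comply with both, portability and interoperability are preserved.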

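(1) Container runtime (runtime spec)

The runtime is where containers actually run, so to run different containers it has to work closely with the operating system kernel and provide each container with its runtime environment.

The runtime spec mainly defines the following items, stored as JSON in /run/docker/runtime-runc/moby/<container ID>/state.json; this file is updated in real time as the container's state changes:

- Version: the specific version of the OCI standard.

- Container ID: usually a hash. Container IDs can be extracted from all state.json files to operate on containers in bulk (stop, remove, and so on). The file is deleted when the container stops and generated automatically when it starts.

- PID: the host PID of the first process run in the container, that is, the host process that is set as the container's daemon process.

- Container file directory: the directory holding the container's rootfs and related configuration. An external program only needs to read state.json to locate this directory on the host.

- Container creation: creating the filesystem, namespaces, cgroups, user permissions, and other items.

- Container process startup: running the container's start process. The file is /run/containerd/io.containerd.runtime.v1.linux/moby/<container ID>/config.json.

- Container lifecycle: the container process can be stopped by an external program. The runtime spec defines how signals sent to the container are caught and how resources are reclaimed afterwards, to avoid zombie processes.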

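Three mainstream runtimes at present:

lxc: an early runtime on Linux; early versions of Docker used lxc as their runtime. (LXD is the management tool for lxc.)

runc: Docker's current default runtime. runc follows the OCI spec and is therefore compatible with lxc. (The management tool for runc is Docker Engine, which consists of the daemon and the CLI; "Docker" usually refers to Docker Engine.)

rkt: the container runtime developed by CoreOS. It also conforms to the OCI spec, so the rkt runtime can run Docker containers as well. (Its management tool is the rkt CLI.)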

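(2) Container image (image format spec)

An OCI container image mainly contains the following:

- Filesystem: a filesystem stored as layers; inside the image each layer is a layer.tar. Each layer holds the changes relative to the layer before it. The image format spec defines which files a layer should store and how additions, modifications, and deletions are represented.

- manifest file: describes which layers exist, the tag, and the name of the config file.

- config file: a JSON file named by its hash. It holds the image platform and the information the runtime needs to run the container, such as environment variables, working directory, and command arguments.

- index file: optional. It points to the manifest files of different platforms, which lets one image be used across platforms; each platform has its own manifest, and the index ties them together.

- Parent image: the metadata of most layers contains a parent field that points to the image's parent image.

Fields:

ID: image ID; every layer has an ID

tag: maps a user-specified, descriptive name to an image ID

os: the operating system type

architecture: the CPU architecture

author: author information

created: the image creation date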

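4.2 Container definition tools

Container definition tools let users define a container's attributes and contents so that containers can be saved, shared, and rebuilt.

Docker image: the template for Docker containers; the runtime creates containers from a Docker image.

Dockerfile: a text file containing a series of instructions, from which a Docker image is built.

ACI (App Container Image): similar to a Docker image; the image format of the rkt container developed by CoreOS.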

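4.3 Orchestration tools

A container orchestration engine provides unified management, dynamic scaling, self-healing, batch execution, and similar functions. Orchestration typically covers container management, scheduling, cluster definition, and service discovery.

- Docker Swarm: the container orchestration engine developed by Docker.

- Kubernetes: the container orchestration engine whose development is led by Google, derived from its internal project Borg; it supports both Docker and CoreOS.

- Mesos+Marathon: a general-purpose cluster resource scheduling platform. Mesos (resource allocation) and Marathon (container orchestration platform) together provide orchestration.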

4.4 Building the nginx image

(1) Write the Dockerfile:

root@docker01:~# mkdir /opt/Dockerfile

root@docker01:/opt/Dockerfile# mkdir nginx

root@docker01:/opt/Dockerfile# cd nginx

root@docker01:/opt/Dockerfile/nginx# wget http://nginx.org/download/nginx-1.18.0.tar.gz

root@docker01:/opt/Dockerfile/nginx# cat Dockerfile

#Centos nginx image

FROM centos:7.9.2009

maintainer "tdq dqemail@qq.com"

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.18.0.tar.gz /usr/local/src

RUN cd /usr/local/src/nginx-1.18.0 && ./configure --prefix=/apps/nginx && make && make install

ADD nginx.conf /apps/nginx/conf/nginx.conf

RUN mkdir -p /data/nginx/html

ADD www.tar.gz /data/nginx/html

CMD ["/apps/nginx/sbin/nginx","-g","daemon off;"]

root@docker01:/opt/Dockerfile/nginx# tree

.

├── Dockerfile

├── nginx-1.18.0.tar.gz

├── nginx.conf

├── www

│ ├── index.html

│ └── test.jpg

└── www.tar.gz

(2) Build the nginx image:

root@docker01:/opt/Dockerfile/nginx# docker build -t harbor.s209.com/n56/nginx:v3 .

(3) Start a container from the image to test it:

root@docker01:/opt/Dockerfile/nginx# docker run -p 81:80 harbor.s209.com/n56/nginx:v3

(4) Test access from a client:

ubuntu@harbor01:~$ curl http://172.31.6.20:81

<h1>nginx is working</h1>

ubuntu@harbor01:~$ curl http://172.31.6.20:81/test.jpg -I

HTTP/1.1 200 OK

(5) Dockerfile with an nginx user and EXPOSE added:

root@docker01:/opt/Dockerfile/nginx# cat Dockerfile

#Centos nginx image

FROM centos:7.9.2009

maintainer "tdq dqemail@qq.com"

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.18.0.tar.gz /usr/local/src

RUN cd /usr/local/src/nginx-1.18.0 && ./configure --prefix=/apps/nginx && make && make install

ADD nginx.conf /apps/nginx/conf/nginx.conf

RUN useradd nginx -s /sbin/nologin

RUN ln -sv /apps/nginx/sbin/nginx /usr/sbin/nginx

RUN mkdir -p /data/nginx/html

ADD www.tar.gz /data/nginx/html

EXPOSE 80 443

CMD ["/apps/nginx/sbin/nginx","-g","daemon off;"]

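(6) Alternatively, create a container from centos:7.9.2009, enter it, and compile and install nginx there. Then run docker commit -m "test-nginx-v1" c9bea3273963 on the host to commit the container as an image, and start a container from that image to verify it.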

4.5 Building the Tomcat image

(1) Create the directories for Tomcat and related applications

root@docker01:/opt/Dockerfile# mkdir /opt/Dockerfile/{web/{nginx,tomcat,jdk,apache},system/{centos,ubuntu,redhat}} -pv

mkdir: created directory '/opt/Dockerfile/web'

mkdir: created directory '/opt/Dockerfile/web/nginx'

mkdir: created directory '/opt/Dockerfile/web/tomcat'

mkdir: created directory '/opt/Dockerfile/web/jdk'

mkdir: created directory '/opt/Dockerfile/web/apache'

mkdir: created directory '/opt/Dockerfile/system'

mkdir: created directory '/opt/Dockerfile/system/centos'

mkdir: created directory '/opt/Dockerfile/system/ubuntu'

mkdir: created directory '/opt/Dockerfile/system/redhat'

root@docker01:/opt/Dockerfile# tree

.

├── system

│ ├── centos

│ ├── redhat

│ └── ubuntu

└── web

├── apache

├── jdk

├── nginx

└── tomcat

11 directories, 6 files

root@docker01:/opt/Dockerfile#

(2) Build a custom CentOS base image

<1> Write the Dockerfile:

root@docker01:/opt/dockerfile/system/centos# cat Dockerfile

# Centos Base Image

#

FROM centos:latest

MAINTAINER tdq dqemail@qq.com

RUN rpm -ivh https://mirrors.yun-idc.com/epel/epel-release-latest-8.noarch.rpm

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

RUN groupadd www -g 2021 && useradd www -u 2021 -g www

root@docker01:/opt/dockerfile/system/centos#

<2> Build the image through a script:

root@docker01:/opt/dockerfile/system/centos# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.s209.com/n56/centos-base:${TAG} .

root@docker01:/opt/dockerfile/system/centos#

<3> Build the image:

root@docker01:/opt/dockerfile/system/centos# bash build-command.sh v1
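build-command.sh passes $1 straight into the tag, so running it without an argument asks docker build for the invalid reference `centos-base:`. A stricter variant could look like the sketch below. The function names `image_ref` and `build_image` are hypothetical; the repository path is the one used in the script above.

```shell
#!/usr/bin/env bash
# Repository path taken from build-command.sh above.
REPO="harbor.s209.com/n56/centos-base"

# Compose the full image reference and refuse an empty tag.
image_ref() {
    local tag="$1"
    if [ -z "$tag" ]; then
        echo "usage: build-command.sh TAG" >&2
        return 1
    fi
    echo "${REPO}:${tag}"
}

# Build the image in the current directory under the composed reference.
build_image() {
    local ref
    ref="$(image_ref "${1:-}")" || return 1
    docker build -t "$ref" .
}

# Example: build_image v1
image_ref v1   # prints harbor.s209.com/n56/centos-base:v1
```

Keeping the reference logic in its own function means it can be checked without a Docker daemon, and the same pattern applies to the jdk-base, tomcat-base, and tomcat-web scripts by changing REPO.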

(3) Build the JDK image

<1> Download the JDK package (jdk-8u202 is used here as an example):

root@docker01:/opt/dockerfile/web/jdk# wget https://mirrors.huaweicloud.com/java/jdk/8u202-b08/jdk-8u202-linux-x64.tar.gz

<2> Write the profile file (added to the image as /etc/profile):

root@docker01:/opt/dockerfile/web/jdk# cat profile

export JAVA_HOME=/usr/local/src/jdk1.8.0_202

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

<2> Run docker build through a script:

root@docker01:/opt/dockerfile/web/jdk# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.s209.com/n56/jdk-base:${TAG} .

<2> Write the Dockerfile (make sure the file name is correct):

root@docker01:/opt/dockerfile/web/jdk# cat Dockerfile

# JDK Base Image

FROM harbor.s209.com/n56/centos-base:v1

MAINTAINER tdq "dqemail@qq.com"

ADD jdk-8u202-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_202 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

RUN rm -rf /etc/localtime && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

<3> Files needed to build the JDK image:

root@docker01:/opt/dockerfile/web/jdk# tree

.

├── Dockerfile

├── build-command.sh

├── jdk-8u202-linux-x64.tar.gz

└── profile

0 directories, 4 files

root@docker01:/opt/dockerfile/web/jdk#

<4> Build the image:

root@docker01:/opt/dockerfile/web/jdk# bash build-command.sh v8.202

<5> Start a container from the image to verify it:

root@docker01:/opt/dockerfile/web/jdk# docker run -it --rm harbor.s209.com/n56/jdk-base:v8.202 bash

[root@f7ee4fd422cb /]# java -version

java version "1.8.0_202"

Java(TM) SE Runtime Environment (build 1.8.0_202-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

[root@f7ee4fd422cb /]# date

Sun Sep 5 20:24:35 CST 2021

(4) Build the Tomcat 8 base image from the JDK image

<1> Download the apache-tomcat package:

root@docker01:/opt/dockerfile/web/jdk# cd /opt/dockerfile/web/tomcat/

root@docker01:/opt/dockerfile/web/tomcat# wget https://mirrors.huaweicloud.com/apache/tomcat/tomcat-8/v8.5.45/bin/apache-tomcat-8.5.45.tar.gz

<2> Write the Dockerfile:

root@docker01:/opt/dockerfile/web/tomcat# cat Dockerfile

# Tomcat Base Image

FROM harbor.s209.com/n56/jdk-base:v8.202

#env

ENV TZ "Asia/Shanghai"

ENV LANG en_US.UTF-8

ENV TERM xterm

ENV TOMCAT_MAJOR_VERSION 8

ENV TOMCAT_MINOR_VERSION 8.5.45

ENV CATALINA_HOME /apps/tomcat

ENV APP_DIR ${CATALINA_HOME}/webapps

#tomcat

RUN mkdir /apps

ADD apache-tomcat-8.5.45.tar.gz /apps

RUN ln -sv /apps/apache-tomcat-8.5.45 /apps/tomcat

root@docker01:/opt/dockerfile/web/tomcat#

<3> Build the image:

root@docker01:/opt/dockerfile/web/tomcat# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.s209.com/n56/tomcat-base:${TAG} .

root@docker01:/opt/dockerfile/web/tomcat#

root@docker01:/opt/dockerfile/web/tomcat# bash build-command.sh v8.5.45

<4> Start a container from the image to test and verify it:

root@docker01:/opt/dockerfile/web/tomcat# docker run -it --rm harbor.s209.com/n56/tomcat-base:v8.5.45 bash

[root@178aa3607ea8 /]# ls /apps/tomcat/

bin conf lib logs README.md RUNNING.txt webapps

BUILDING.txt CONTRIBUTING.md LICENSE NOTICE RELEASE-NOTES temp work

[root@178aa3607ea8 /]# exit

<5> Files used to build the Tomcat image:

root@docker01:/opt/dockerfile/web/tomcat# tree

.

├── Dockerfile

├── apache-tomcat-8.5.45.tar.gz

└── build-command.sh

0 directories, 3 files

root@docker01:/opt/dockerfile/web/tomcat#

(5) Build Tomcat application images for different services on top of the custom Tomcat base image

<1> Create the tomcat-app1 directory used to build the image:

root@docker01:/opt/dockerfile/web/tomcat# mkdir tomcat-app1

root@docker01:/opt/dockerfile/web/tomcat# cd tomcat-app1

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1#

<2> Write run_tomcat.sh:

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# cat run_tomcat.sh

#!/bin/bash

echo "1.1.1.1 abc.test.com" >> /etc/hosts

echo "nameserver 223.5.5.5" >> /etc/resolv.conf

su - www -c "/apps/tomcat/bin/catalina.sh start"

su - www -c "tail -f /etc/hosts"

<3> Create an HTML test page:

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# mkdir myapp

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# echo "Tomcat Web Page" > myapp/index.html

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# cat myapp/index.html

Tomcat Web Page

<4> Write the Dockerfile:

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# cat Dockerfile

#Tomcat Web Image

FROM harbor.s209.com/n56/tomcat-base:v8.5.45

ADD run_tomcat.sh /apps/tomcat/bin/

ADD myapp/* /apps/tomcat/webapps/myapp/

RUN chown www.www /apps/ -R && chmod +x /apps/tomcat/bin/run_tomcat.sh

EXPOSE 8080 8009

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

<5> Build the image by running docker build through a script:

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.s209.com/n56/tomcat-web:${TAG} .

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1#

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# bash build-command.sh app1

<6> Start a container from the generated tomcat-app1 image to verify it;

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# docker run -it -d -p 8080:8080 harbor.s209.com/n56/tomcat-web:app1

<7> Access http://172.31.6.20:8080/myapp/ from a client to test;

ubuntu@harbor01:~$ curl http://172.31.6.20:8080/myapp/

Tomcat Web Page

ubuntu@harbor01:~$

<8> Files used when building the tomcat-app1 application image;

root@docker01:/opt/dockerfile/web/tomcat/tomcat-app1# tree

.

├── Dockerfile

├── build-command.sh

├── myapp

│ └── index.html

└── run_tomcat.sh

1 directory, 4 files

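5. Docker Data Persistence

Docker images are layered, and the image layers are read-only. A container started from an image adds one readable and writable filesystem layer on top, and all data written by the user is stored in that layer;

To keep data written in a container permanently, the data must be stored in a specified directory on the host. Docker currently offers two ways to do this:

The first is the data volume. A data volume is similar to a mounted disk; it is actually a directory or a file on the host that can be mounted directly into a container.

The second is the data volume container. The host directory is mounted into a dedicated data volume container, and other containers then read and write the host data through it. This lets data be shared among multiple docker containers: container B can access the contents of container A, and so can container C. A container running in the background is created first to act as the Server that provides the volume; the volume provides data storage to other containers, and the containers that use it act as clients.

The -v option maps a host directory into the container. The mount is read-write by default; ro marks the directory as read-only inside the container.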
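To make the default concrete, the sketch below reports the access mode implied by a -v value of the form host_path:container_path[:ro|rw]. The helper mount_mode is hypothetical and only inspects the string; it does not call docker, and it ignores other mount options that may follow the second colon:

```shell
#!/bin/sh
# Hypothetical helper: print the access mode of a -v spec written as
# host_path:container_path[:ro|rw]. Without a third field the mount is rw.
mount_mode() {
    case "$1" in
        *:*:ro) echo ro ;;
        *:*:rw) echo rw ;;
        *:*)    echo rw ;;   # no mode given: read-write by default
        *)      return 1 ;;  # not a host:container spec
    esac
}

mount_mode "/data/testapp/:/apps/tomcat/webapps/testapp"      # prints rw
mount_mode "/data/testapp/:/apps/tomcat/webapps/testapp:ro"   # prints ro
```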

root@docker01:~# docker inspect 95fcd7e59377

...omitted...

"Data": {

"LowerDir": "/data/docker/overlay2/50a718cc6179eac2c7a84a3698306107ab8e11a237dbfe4fb50d1280e5613e81-init/diff:/data/docker/overlay2/1b2c41ad09e16088646a15aa57875cb6b738ab382f1ab36e824ac4868ad7e02b/diff:/data/docker/overlay2/e0d48a0fce0a6dfe4152c71000e02b73ef0f1713a2b3dc622ee831b87d5fb235/diff:/data/docker/overlay2/735d27abb299828d42cd61b3a6df43fa2d8f3b7d2aaee7ef4612480c63ba5156/diff:/data/docker/overlay2/ab2fdbb45e2b25e6f326e328444cf25ab3cd92c3dc3de0435d3630a3e9965be5/diff:/data/docker/overlay2/e9d3d22453efab36303f3bc316e3f3cfde43f848203343227568da8b47209ea0/diff:/data/docker/overlay2/f383e4b7b001d3858a0d1d9a9c1f38a667d2ef8c29155f266a11104429671f09/diff",

"MergedDir": "/data/docker/overlay2/50a718cc6179eac2c7a84a3698306107ab8e11a237dbfe4fb50d1280e5613e81/merged",

"UpperDir": "/data/docker/overlay2/50a718cc6179eac2c7a84a3698306107ab8e11a237dbfe4fb50d1280e5613e81/diff",

"WorkDir": "/data/docker/overlay2/50a718cc6179eac2c7a84a3698306107ab8e11a237dbfe4fb50d1280e5613e81/work"

},

"Name": "overlay2"

...omitted...

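- Lower Dir: the image layers (the image itself, read-only);

- Upper Dir: the container's upper layer (read-write);

- Merged Dir: the container's filesystem; a union filesystem (Union FS) merges lowerdir and upperdir and presents the result to the container;

- Work Dir: a working directory on the host, used internally by overlay2;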
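The LowerDir value in the docker inspect output above is a single colon-separated string with one path per read-only layer. The sketch below splits such a string into one layer per line. The value of lower is a shortened, hypothetical stand-in for the real paths, and the helper names are assumptions:

```shell
#!/bin/sh
# Hypothetical, shortened LowerDir value (real entries are full overlay2 paths).
# On a Docker host the real value can be read with:
#   docker inspect -f '{{.GraphDriver.Data.LowerDir}}' <container>
lower="/data/docker/overlay2/aaa-init/diff:/data/docker/overlay2/bbb/diff:/data/docker/overlay2/ccc/diff"

# Print one read-only layer per line; overlayfs lists the topmost layer first.
list_layers() {
    printf '%s\n' "$1" | tr ':' '\n'
}

# Count the layers.
count_layers() {
    list_layers "$1" | wc -l | tr -d ' '
}

list_layers "$lower"
echo "layers: $(count_layers "$lower")"
```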

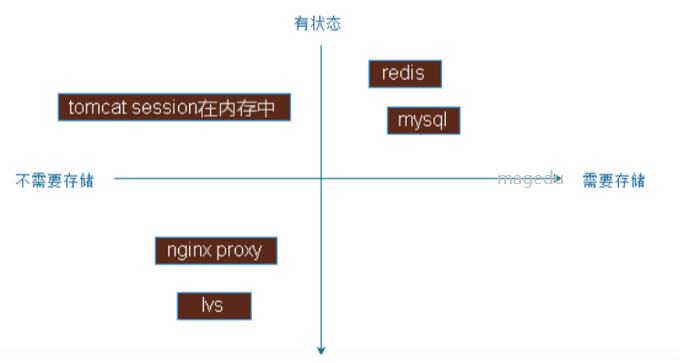

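In a production environment, storage must be planned for each type of service and each type of data storage requirement, so that the services remain scalable and stable and the data stays safe.

As shown in the figure:

The left side is stateless HTTP request data, and the right side is stateful;

The lower layer holds the services that need no storage, and the upper layer holds the services that do.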

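Features and usage of data volumes:

- A data volume is a directory or file on the host, and it can be shared by multiple containers.

- Changes made to a data volume on the host are visible immediately in every container that mounts it.

- Data in a data volume persists even after the containers that use it are deleted.

- Data written inside a container does not affect the image itself.

5.1 Mounting a single directory with -v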

<1> Stop and delete all containers;

root@docker01:~# docker stop `docker ps -a -q`

root@docker01:~# docker rm `docker ps -a -q`

<2> Create a test directory and a web test page on the host;

root@docker01:~# mkdir /data/testapp -p

root@docker01:~# echo "Apple13 is coming" > /data/testapp/index.html

root@docker01:~# cat /data/testapp/index.html

Apple13 is coming

root@docker01:~#

<3> Start the containers and verify the data

Note: -v maps a host directory into the container. The ro on web2 makes the directory read-only inside that container; the default is read-write.

root@docker01:~# docker run -d --name web1 -v /data/testapp/:/apps/tomcat/webapps/testapp -p 8080:8080 harbor.s209.com/n56/tomcat-web:app1

b6dbb1ebe31f1dd9f5c8711dfdfdf8f9bc1b7608022a88d1df490e760b64cfd9

root@docker01:~#

root@docker01:~# docker run -d --name web2 -v /data/testapp/:/apps/tomcat/webapps/testapp:ro -p 8081:8080 harbor.s209.com/n56/tomcat-web:app1

3e245aae437fe1bd0b151e7fff9318c21a4a2c31cb350f12a25dfd7cd31523f7

ubuntu@harbor01:~$ curl http://172.31.6.20:8080/testapp/

Apple13 is coming

ubuntu@harbor01:~$ curl http://172.31.6.20:8081/testapp/

Apple13 is coming

<4> Enter the containers and test writing data

root@docker01:~# docker exec -it web1 bash

[root@b6dbb1ebe31f /]# cat /apps/tomcat/webapps/testapp/index.html

Apple13 is coming

[root@b6dbb1ebe31f /]# echo web1 >> /apps/tomcat/webapps/testapp/index.html #web1 writes to the host directory successfully;

[root@b6dbb1ebe31f /]# cat /apps/tomcat/webapps/testapp/index.html

Apple13 is coming

web1

[root@b6dbb1ebe31f /]# exit

exit

root@docker01:~# docker exec -it web2 bash

[root@3e245aae437f /]# echo web2 >> /apps/tomcat/webapps/testapp/index.html #web2 fails to write to the host directory because the mount is read-only;

bash: /apps/tomcat/webapps/testapp/index.html: Read-only file system

[root@3e245aae437f /]#

5.2 Mounting a file

File mounts suit files whose contents rarely change, such as the nginx or tomcat configuration files;

root@docker01:~# docker run -d --name web2 -v /data/testapp/catalina.sh:/apps/tomcat/bin/catalina.sh:ro -p 8081:8080 harbor.s209.com/n56/tomcat-web:app1

1f0218e8fe66ef21ae58d9b603a6845bdf1bdaada5dcc44d1df597c1e676652f

root@docker01:~#

root@docker01:~# docker exec -it web2 bash

[root@1f0218e8fe66 /]# head -3 /apps/tomcat/bin/catalina.sh

#!/bin/sh

# tdq test dqemail@qq.com

[root@1f0218e8fe66 /]# echo "#apple tdq" >> /apps/tomcat/bin/catalina.sh #test writing

bash: /apps/tomcat/bin/catalina.sh: Read-only file system

[root@1f0218e8fe66 /]#

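5.3 Mounting several directories at once

The mounted paths can be located under different directories; (the command is for reference only: here catalina.sh is owned by root inside the container, which affects tomcat startup)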

root@docker01:~# docker run -d --name web2 -v /data/testapp/catalina.sh:/apps/tomcat/bin/catalina.sh:ro -v /data/magedu/:/apps/tomcat/webapps/testapp -p 8081:8080 harbor.s209.com/n56/tomcat-web:app1

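5.4 Data volume containers

A data volume container lets data be shared among multiple docker containers: container B can access the contents of container A, and so can container C. A container running in the background is created first to act as the Server that provides the volume; the volume provides data storage to other containers, and the containers that use it act as clients.

A data volume container can serve as a shared file source for other containers, similar to an NFS share. In production you can start one secured instance that mounts a local directory and have the other containers mount that container's directories, which keeps the data consistent across containers. (Container B can also be created as a copy that mounts the same data volumes as container A.)

Even if the Server container that provides the volume is deleted, Client containers that are already running can still use the mounted volume, because each container accesses the data through its own mount. New volume client containers cannot be created in that state, but once the Server container is created again, Clients can be created normally. This approach can be used for shared data directories in production, because containers that are already running keep their mounts even if the data volume container is deleted.

(1) Start a volume container Server:

root@docker01:~# ls /opt/dockerfile/nginx/www

index.html test.jpg

root@docker01:~# docker run -d --name volume-server1 -v /opt/dockerfile/nginx/www/:/usr/share/nginx/html/ -v /etc/hosts:/mnt/hosts:ro nginx:1.20

3b1d7dbe133e534759e2f782adf5087fa7a5bee0b00cc1298fb2aee339ba92ac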

(2) Start two Client containers;

root@docker01:~# docker run -d --name web1 -p 8001:80 --volumes-from volume-server1 nginx:1.20

root@docker01:~# docker run -d --name web2 -p 8002:80 --volumes-from volume-server1 nginx:1.20
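The two client commands differ only in the container name and the host port. As a rough sketch, a small dry-run helper can generate them for any number of clients. The helper client_cmd and the port scheme 8000 + N are assumptions made for illustration; the helper prints the commands and does not run docker:

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (not run) the docker run command for
# client number N that reuses the volumes of a volume container.
client_cmd() {
    server="$1"
    n="$2"
    echo "docker run -d --name web${n} -p $((8000 + n)):80 --volumes-from ${server} nginx:1.20"
}

for i in 1 2; do
    client_cmd volume-server1 "$i"
done
```

Piping the output to sh on a Docker host would start the containers; printing first makes it easy to review the commands.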

(3) Enter each container and test reading and writing;

<1> Test container web1;

root@docker01:~# docker exec -it web1 bash

root@c5e1a0b0c852:/# cat /usr/share/nginx/html/index.html

<h1>nginx is working</h1>

root@c5e1a0b0c852:/# echo "web1" >> /usr/share/nginx/html/index.html

root@c5e1a0b0c852:/# cat /mnt/hosts

127.0.0.1 localhost

127.0.1.1 temp01

root@c5e1a0b0c852:/# echo "1.2.3.4 web1" >>/mnt/hosts

bash: /mnt/hosts: Read-only file system

<2> Test container web2;

root@docker01:~# docker exec -it web2 bash

root@64f58f003396:/# echo "web2" >> /usr/share/nginx/html/index.html

root@64f58f003396:/# echo "2.2.2.2 web2" >> /mnt/hosts

bash: /mnt/hosts: Read-only file system

root@64f58f003396:/#

(4) Test access and verify the data on the host;

root@docker01:~# cat /opt/dockerfile/nginx/www/index.html

<h1>nginx is working</h1>

web1

web2

root@docker01:~#

ubuntu@harbor01:~$ curl 172.31.6.20:8001

<h1>nginx is working</h1>

web1

web2

ubuntu@harbor01:~$ curl 172.31.6.20:8002

<h1>nginx is working</h1>

web1

web2

ubuntu@harbor01:~$

(5) Stop the volume container Server and test whether a new container can be started;

root@docker01:~# docker stop volume-server1

volume-server1

root@docker01:~# docker ps

root@docker01:~# docker run -d --name web3 -p 8003:80 --volumes-from volume-server1 nginx:1.20

ubuntu@harbor01:~$ curl 172.31.6.20:8003

<h1>nginx is working</h1>

web1

web2

(6) Delete the source volume container Server and test creating a container;

root@docker01:~# docker rm volume-server1

volume-server1

root@docker01:~# docker ps -a

root@docker01:~# docker run -d --name web4 -p 8004:80 --volumes-from volume-server1 nginx:1.20

docker: Error response from daemon: No such container: volume-server1.

See 'docker run --help'.

(7) Create a container from the data volumes of container web1;

root@docker01:~# docker run -d --name web5 -p 8005:80 --volumes-from web1 nginx:1.20

root@docker01:~# docker exec -it web5 bash

root@f2bb7b03ea01:/# echo web5 >> /usr/share/nginx/html/index.html

root@f2bb7b03ea01:/# echo "5.5.5.5 web5" >>/mnt/hosts

bash: /mnt/hosts: Read-only file system

root@f2bb7b03ea01:/#

ubuntu@harbor01:~$ curl 172.31.6.20:8005

<h1>nginx is working</h1>

web1

web2

web5

(8) Recreate the volume container Server;

root@docker01:~# docker run -d --name volume-server1 -v /opt/dockerfile/nginx/www/:/usr/share/nginx/html/ -v /etc/hosts:/mnt/hosts:ro nginx:1.20

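6. A Highly Available Harbor Registry Behind a Reverse Proxy (LVS or HAProxy)

Harbor is an enterprise-grade Registry server for storing and distributing Docker images, open-sourced by VMware. It extends the open-source Docker Distribution by adding features that enterprises need, such as security, identity, and management. As an enterprise private Registry server, Harbor offers better performance and security, and it improves the efficiency of transferring images between the Registry and the build and runtime environments. Harbor supports replicating image resources across multiple Registry nodes, with all images kept in the private Registry, so that data and intellectual property stay under control inside the company network. Harbor also provides advanced security features such as user management, access control, activity auditing, and image security scanning.

VMware's official open-source service list: https://vmware.github.io/harbor/cn

Official harbor GitHub repository: https://github.com/vmware/harbor

Official harbor website: https://goharbor.io/

- nginx: harbor's reverse proxy, which proxies services such as registry, ui, and token. It forwards the various requests from the harbor web UI and docker clients to the backend services.

- harbor-adminserver: harbor's system management interface; it can modify the system configuration and retrieve system information.

- harbor-db: stores project metadata, users, rules, replication policies, and other information.

- harbor-jobservice: mainly used for synchronization between image registries.

- harbor-log: collects harbor's log information.

- harbor-ui: a user interface module used to manage the registry.

- registry: the service that stores docker images and provides pull/push.

- redis: stores cached information.

- webhook: when the state of an image in the registry changes, records update logs, triggers replication, and so on.

- token service: issues tokens when a docker client performs pull/push.

6.1 Installing harbor

6.1.1 Downloading and installing harbor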

(1) Download harbor;

root@harbor01:~# cd /usr/local/src/

root@harbor01:/usr/local/src# wget https://github.com/goharbor/harbor/releases/download/v2.3.2/harbor-offline-installer-v2.3.2.tgz

(2) Install docker and docker-compose

root@harbor01:~# apt install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal

root@harbor01:~# apt install python3-pip

root@harbor01:~# pip3 install docker-compose

(3) Extract the installer, edit the configuration, and install;

root@harbor01:/usr/local/src# tar zxvf harbor-offline-installer-v2.3.2.tgz

root@harbor01:/usr/local/src# ln -sv /usr/local/src/harbor /usr/local/

'/usr/local/harbor' -> '/usr/local/src/harbor'

root@harbor01:/usr/local/src# cd /usr/local/harbor

root@harbor01:/usr/local/harbor# cp harbor.yml.tmpl harbor.yml #set hostname, comment out https, set the web login password

root@harbor01:/usr/local/harbor# ./install.sh --with-trivy #enables the security scanning module; if it was forgotten at install time, it can be enabled with ./prepare --with-trivy (run docker-compose down first)

...omitted...

✔ ----Harbor has been installed and started successfully.----

(4) Error reference:

root@harbor01:/usr/local/harbor# ./install.sh --with-trivy

[Step 0]: checking if docker is installed ...

Note: docker version: 19.03.15

[Step 1]: checking docker-compose is installed ...

✖ Need to install docker-compose(1.18.0+) by yourself first and run this script again.
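The installer error above names 1.18.0 as the minimum docker-compose version. A minimal sketch of checking a version string against that minimum before running install.sh follows. The helper version_ge is hypothetical, the version 1.29.2 is only an example value, and the comparison relies on sort -V from GNU coreutils:

```shell
#!/bin/sh
# Hypothetical helper: succeed when version $1 is greater than or equal to $2.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# 1.18.0 is the minimum named in the installer's error message;
# 1.29.2 stands in for whatever version is actually installed.
if version_ge "1.29.2" "1.18.0"; then
    echo "docker-compose is new enough"
fi
```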

6.1.2 Starting harbor at boot

root@harbor01:/usr/local/harbor# cat /usr/lib/systemd/system/harbor.service

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

ExecStart=/usr/local/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up

ExecStop=/usr/local/bin/docker-compose -f /usr/local/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

root@harbor01:/usr/local/harbor#

root@harbor01:/usr/local/harbor# systemctl enable harbor.service

Created symlink /etc/systemd/system/multi-user.target.wants/harbor.service → /lib/systemd/system/harbor.service.

6.1.3 Containers created and images downloaded by harbor

root@harbor01:/usr/local/harbor# docker ps

CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES

a9645d8ad572  goharbor/nginx-photon:v2.3.2  "nginx -g 'daemon of…"  2 minutes ago  Up 2 minutes (healthy)  0.0.0.0:80->8080/tcp  nginx

371cd33464bc  goharbor/harbor-jobservice:v2.3.2  "/harbor/entrypoint.…"  2 minutes ago  Up 2 minutes (healthy)  harbor-jobservice

b602450c1162  goharbor/harbor-core:v2.3.2  "/harbor/entrypoint.…"  2 minutes ago  Up 2 minutes (healthy)  harbor-core

c761d6563d67  goharbor/trivy-adapter-photon:v2.3.2  "/home/scanner/entry…"  2 minutes ago  Up 2 minutes (healthy)  trivy-adapter

1944503c8c08  goharbor/harbor-registryctl:v2.3.2  "/home/harbor/start.…"  2 minutes ago  Up 2 minutes (healthy)  registryctl

1c3348444083  goharbor/registry-photon:v2.3.2  "/home/harbor/entryp…"  2 minutes ago  Up 2 minutes (healthy)  registry

2fefcd2a3ee6  goharbor/redis-photon:v2.3.2  "redis-server /etc/r…"  2 minutes ago  Up 2 minutes (healthy)  redis

6d76af94265f  goharbor/harbor-portal:v2.3.2  "nginx -g 'daemon of…"  2 minutes ago  Up 2 minutes (healthy)  harbor-portal

43da6a48cc14  goharbor/harbor-db:v2.3.2  "/docker-entrypoint.…"  2 minutes ago  Up 2 minutes (healthy)  harbor-db

f7bd0c552100  goharbor/harbor-log:v2.3.2  "/bin/sh -c /usr/loc…"  2 minutes ago  Up 2 minutes (healthy)  127.0.0.1:1514->10514/tcp  harbor-log

root@harbor01:/usr/local/harbor# docker images

REPOSITORY  TAG  IMAGE ID  CREATED  SIZE

goharbor/harbor-exporter  v2.3.2  37f41f861e77  2 weeks ago  81.1MB

goharbor/chartmuseum-photon  v2.3.2  ad5cd42a4977  2 weeks ago  179MB

goharbor/redis-photon  v2.3.2  812c6f5c260b  2 weeks ago  155MB

goharbor/trivy-adapter-photon  v2.3.2  3f07adb2e138  2 weeks ago  130MB

goharbor/notary-server-photon  v2.3.2  49aadd974d6d  2 weeks ago  110MB

goharbor/notary-signer-photon  v2.3.2  6051589deaf9  2 weeks ago  108MB

goharbor/harbor-registryctl  v2.3.2  0e551984a22c  2 weeks ago  133MB

goharbor/registry-photon  v2.3.2  193d952b4f10  2 weeks ago  81.9MB

goharbor/nginx-photon  v2.3.2  83bd32904c30  2 weeks ago  45MB

goharbor/harbor-log  v2.3.2  dae52c0f300e  2 weeks ago  159MB

goharbor/harbor-jobservice  v2.3.2  5841788c17a4  2 weeks ago  211MB

goharbor/harbor-core  v2.3.2  cf6ad69c2dd4  2 weeks ago  193MB

goharbor/harbor-portal  v2.3.2  4f68bc2f0a41  2 weeks ago  58.2MB

goharbor/harbor-db  v2.3.2  3cc534e09148  2 weeks ago  227MB

goharbor/prepare  v2.3.2  c2bd99a13157  2 weeks ago  252MB

root@harbor01:/usr/local/harbor#

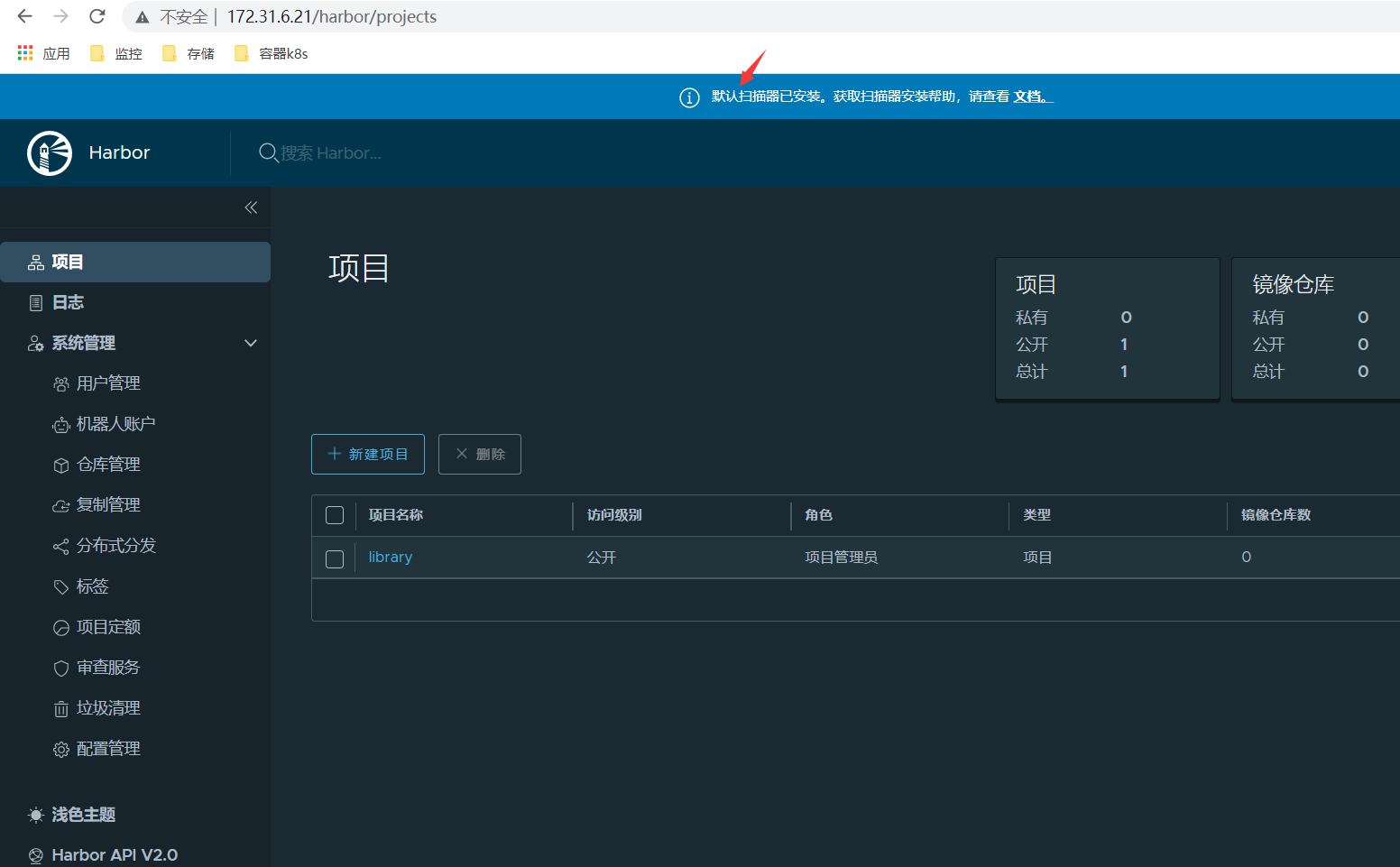

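6.1.4 Verify the harbor login page from a client browser and create a project

6.2 Pushing and pulling images with Harbor

<1> Stop the containers used by harbor;

root@harbor01:~# cd /usr/local/harbor #first change to the directory that contains docker-compose.yml;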

root@harbor01:/usr/local/harbor# docker-compose down

Stopping nginx ... done

Stopping harbor-jobservice ... done

Stopping harbor-core ... done

Stopping redis ... done

Stopping harbor-portal ... done

Stopping harbor-db ... done

Stopping harbor-log ... done

Removing nginx ... done

Removing harbor-jobservice ... done

Removing harbor-core ... done

Removing trivy-adapter ... done

Removing registryctl ... done

Removing registry ... done

Removing redis ... done

Removing harbor-portal ... done

Removing harbor-db ... done

Removing harbor-log ... done

Removing network harbor_harbor

root@harbor01:/usr/local/harbor#

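<2> Start the containers used by harbor and run them in the background (docker-compose start only starts containers that already exist; after down has removed them, recreate them with docker-compose up -d);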

root@harbor01:/usr/local/harbor# docker-compose start

Starting log ... done

Starting registry ... done

Starting registryctl ... done

Starting postgresql ... done

Starting portal ... done

Starting redis ... done

Starting core ... done

Starting jobservice ... done

Starting proxy ... done

Starting trivy-adapter ... done

root@harbor01:/usr/local/harbor#

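<3> Open the IP of the harbor machine in a browser, log in to harbor, and create a project; the project can be set to public for testing;

<4> On the client machine, set up the hosts file so that images are pushed by domain name;

root@docker01:~# echo "172.31.6.21 harbor.s209.com" >>/etc/hosts

root@docker01:~# cat /etc/docker/daemon.json #add the insecure (http) harbor registry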

{

"registry-mirrors": ["https://8otyy3fq.mirror.aliyuncs.com"],

"storage-driver": "overlay2",

"data-root": "/data/docker",

"insecure-registries": ["harbor.s209.com"]

}

root@docker01:~# systemctl restart docker #restart the docker service so that the newly added harbor registry takes effect;

root@docker01:~# docker info | tail

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries: #verify that the added harbor registry is in effect

harbor.s209.com

127.0.0.0/8

Registry Mirrors:

https://8otyy3fq.mirror.aliyuncs.com/

Live Restore Enabled: false

root@docker01:~# docker login harbor.s209.com #log in to the newly added harbor registry

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@docker01:~# cat /root/.docker/config.json

{

"auths": {

"harbor.s209.com": {

"auth": "YWRtaW46MTIzNDU2"

}

},

"HttpHeaders": {

"User-Agent": "Docker-Client/19.03.15 (linux)"

}

}

root@docker01:~#
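The warning printed by docker login is worth taking literally: the auth value in config.json is only the base64 encoding of username:password, not an encrypted form. A small sketch that decodes the value shown above (the helper name decode_auth is hypothetical):

```shell
#!/bin/sh
# Decode the "auth" value from ~/.docker/config.json.
# The value is base64("username:password"), so it is encoded, not encrypted.
decode_auth() {
    printf '%s' "$1" | base64 --decode
}

decode_auth "YWRtaW46MTIzNDU2"   # the value shown in the config.json above
echo
```

Anyone who can read the file can recover the credentials this way, which is why the warning suggests configuring a credential helper.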

<5> Push the image to harbor01;

root@docker01:~# docker push harbor.s209.com/n56/tomcat-web:app1

The push refers to repository [harbor.s209.com/n56/tomcat-web]

5983239af13e: Pushed

...

631a99e13e8b: Pushing [=================================================> ] 396.5MB/402.5MB

159aca821972: Pushed

6def8b631e52: Pushing [==============================> ] 215MB/350.4MB

1baff6e29dcd: Pushed

2653d992f4ef: Pushing [=====================================> ] 157.6MB/209.3MB

When the push completes, the following is printed:

app1: digest: sha256:86780109cbeb7d902ba55bd010b81e0834d72fbd40dfb343d03500bbaf231b93 size: 3245

<6> On another client machine, configure the harbor registry and pull the image to test;

root@ceph-client1-ubuntu:~# #install docker

apt update

apt -y install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

add-apt-repository "deb [arch=amd64] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

apt update

apt -y install docker-ce=5:19.03.5~3-0~ubuntu-bionic docker-ce-cli=5:19.03.5~3-0~ubuntu-bionic

root@ceph-client1-ubuntu:~#

root@ceph-client1-ubuntu:~# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://8otyy3fq.mirror.aliyuncs.com"],

"storage-driver": "overlay2",

"insecure-registries": ["harbor.s209.com"]

}

root@ceph-client1-ubuntu:~# systemctl restart docker

root@ceph-client1-ubuntu:~# docker info | tail

root@ceph-client1-ubuntu:~# echo "172.31.6.21 harbor.s209.com" >>/etc/hosts

root@ceph-client1-ubuntu:~# docker pull harbor.s209.com/n56/tomcat-web:app1

app1: Pulling from n56/tomcat-web

7a0437f04f83: Pull complete

...

2644ad46c8e4: Pull complete

Digest: sha256:86780109cbeb7d902ba55bd010b81e0834d72fbd40dfb343d03500bbaf231b93

Status: Downloaded newer image for harbor.s209.com/n56/tomcat-web:app1

harbor.s209.com/n56/tomcat-web:app1

root@ceph-client1-ubuntu:~# docker run -d -p 81:8080 harbor.s209.com/n56/tomcat-web:app1

5aa6c64859684e4cb045ea19a163844284a8a203eae1468a2e8137b8e49b4455

root@ceph-client1-ubuntu:~#

root@ceph-client1-ubuntu:~# hostname -I

172.31.6.160

Access the container created from the image:

ubuntu@harbor01:~$ curl http://172.31.6.160:81/myapp/

Tomcat Web Page

ubuntu@harbor01:~$

6.3 Configuring two Harbor machines for two-way replication

(1) Install harbor on the Harbor2 machine;

<1> Copy the harbor installer to the harbor2 machine;

root@harbor01:~# scp /usr/local/src/harbor-offline-installer-v2.3.2.tgz ubuntu@172.31.6.22:

root@harbor01:~# scp /usr/local/harbor/harbor.yml ubuntu@172.31.6.22:

<2> Install docker and docker-compose

root@harbor02:~# apt install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal

root@harbor02:~# apt install python3-pip

root@harbor02:~# pip3 install docker-compose

<3> Install harbor;

ubuntu@harbor02:~$ ls

harbor-offline-installer-v2.3.2.tgz harbor.yml

ubuntu@harbor02:~$ sudo -i

root@harbor02:~# mv /home/ubuntu/harbor-offline-installer-v2.3.2.tgz /usr/local/src/

root@harbor02:~# cd /usr/local/src/

root@harbor02:/usr/local/src# tar zxvf harbor-offline-installer-v2.3.2.tgz

root@harbor02:/usr/local/src# ln -sv /usr/local/src/harbor /usr/local/

'/usr/local/harbor' -> '/usr/local/src/harbor'

root@harbor02:/usr/local/src# mv /home/ubuntu/harbor.yml /usr/local/harbor/ #the hostname in this configuration file must be changed

root@harbor02:/usr/local/src# cd /usr/local/harbor

root@harbor02:/usr/local/harbor# ./install.sh --with-trivy

...omitted...

[Step 5]: starting Harbor ...

Creating network "harbor_harbor" with the default driver

Creating harbor-log ... done

Creating harbor-portal ... done

Creating redis ... done

Creating registryctl ... done

Creating harbor-db ... done

Creating registry ... done

Creating trivy-adapter ... done

Creating harbor-core ... done

Creating nginx ... done

Creating harbor-jobservice ... done

✔ ----Harbor has been installed and started successfully.----

root@harbor02:/usr/local/harbor# ss -tln

State  Recv-Q  Send-Q  Local Address:Port  Peer Address:Port  Process

LISTEN  0  4096  127.0.0.1:1514  0.0.0.0:*

LISTEN  0  4096  127.0.0.53%lo:53  0.0.0.0:*

LISTEN  0  128  0.0.0.0:22  0.0.0.0:*

LISTEN  0  4096  *:80  *:*

root@harbor02:/usr/local/harbor# hostname -I

172.31.6.22

<4> Operate harbor from a browser

Open http://172.31.6.22 in a web browser, log in to harbor, create a project, and configure the replication policy;

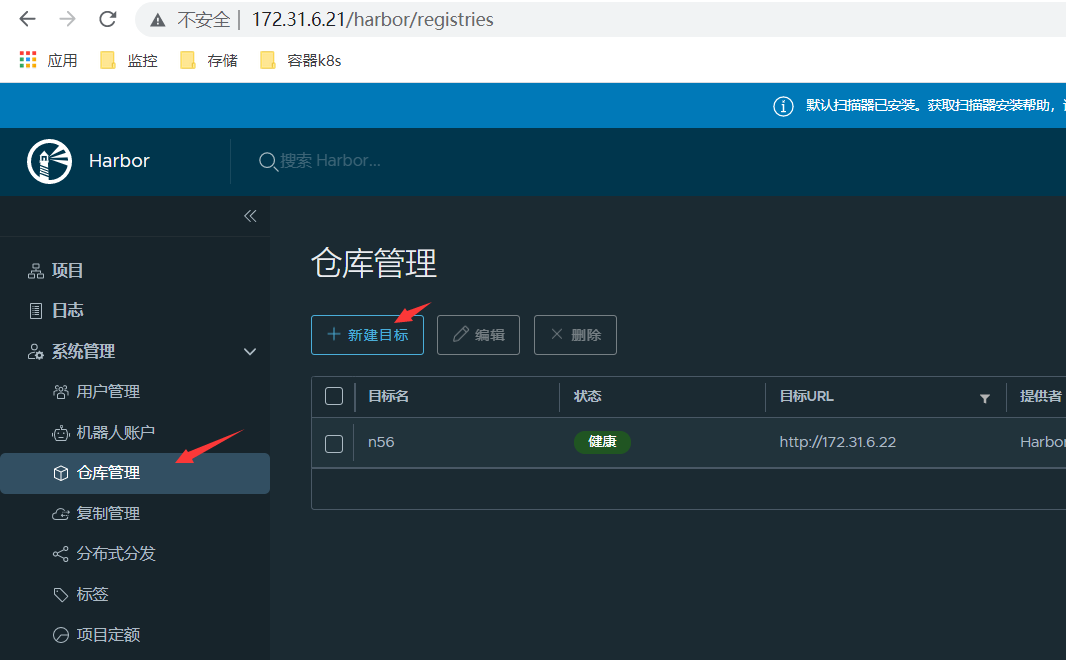

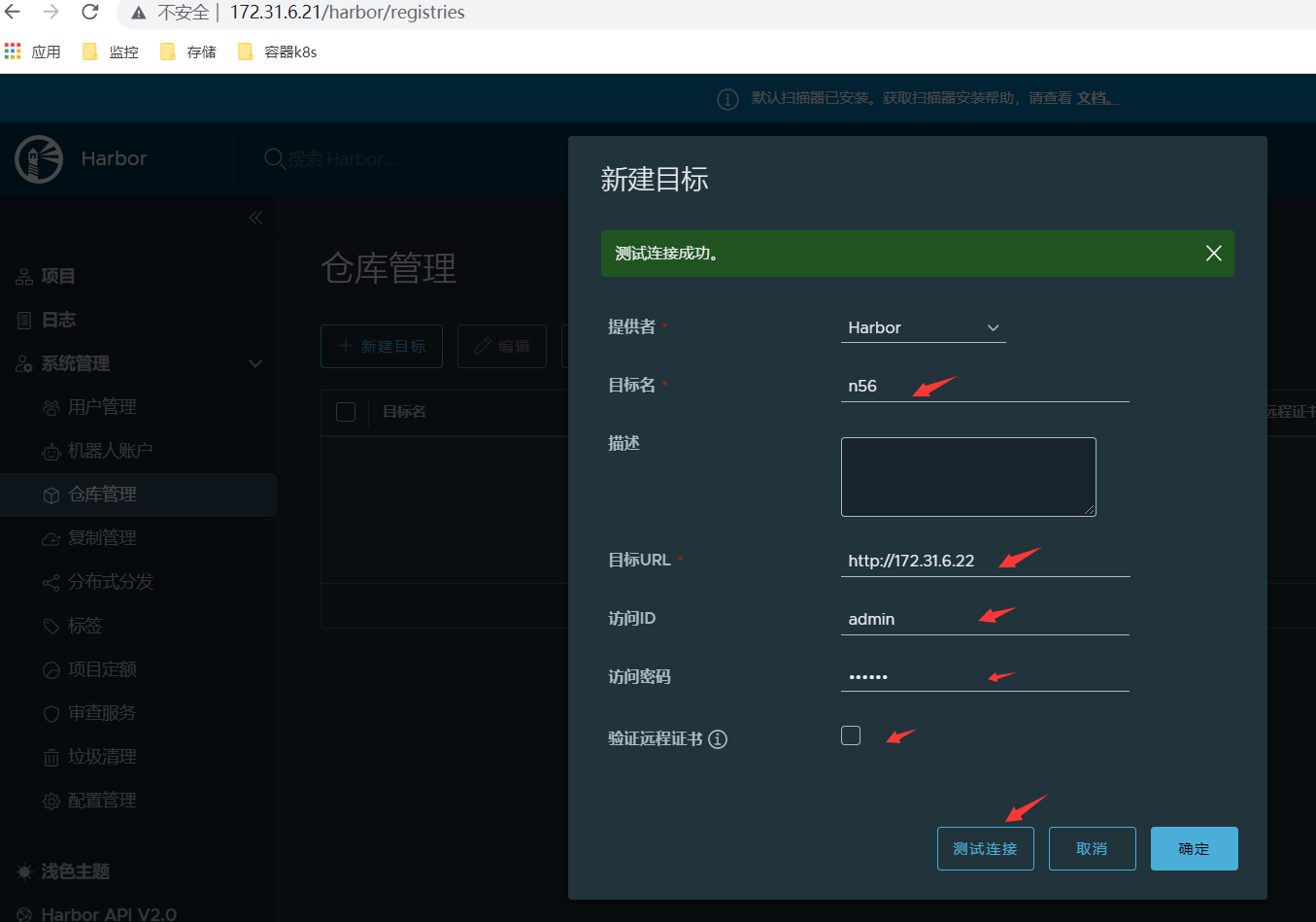

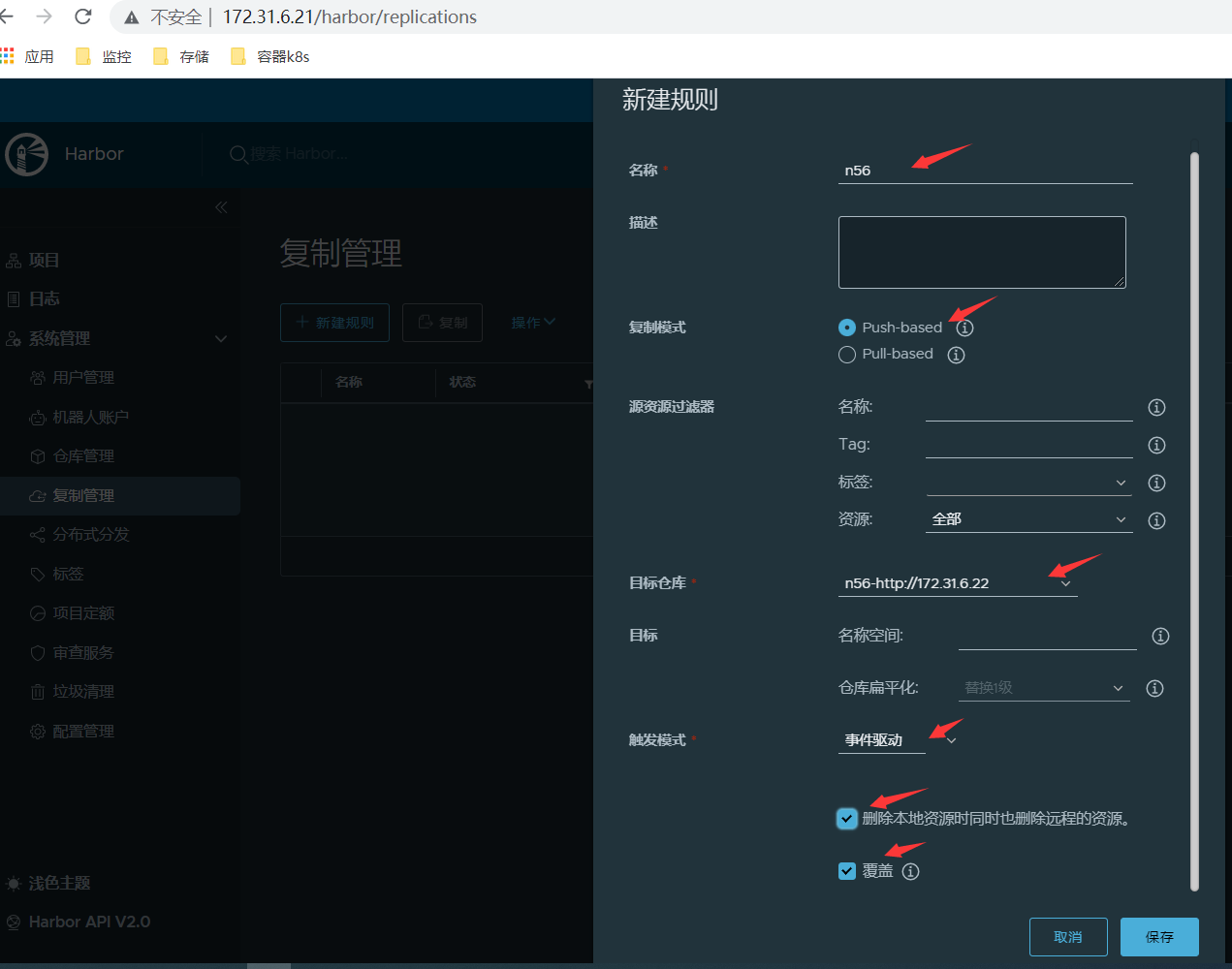

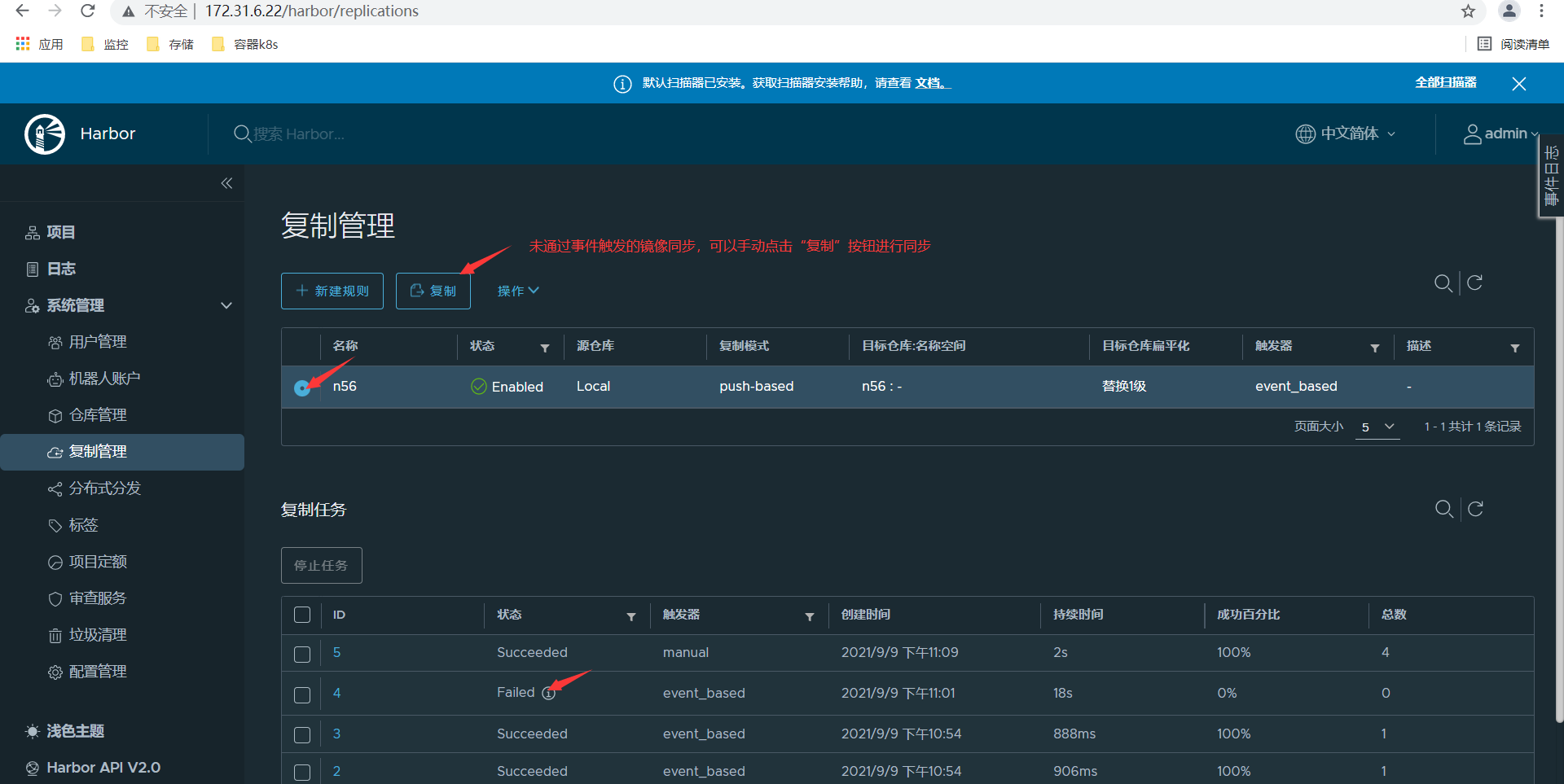

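(2) Configure the replication policy in the web front end of each of the two Harbor machines;

It consists mainly of the following two steps (configure each of the two harbor machines):

<1> Registries - New Endpoint (仓库管理-新建目标);

<2> Replications - New Replication Rule (复制管理-新建规则);

<3> Push the image nginx:v2 to the harbor01 node again; the nginx image then also appears on the harbor02 node;

root@docker01:~# docker push harbor.s209.com/n56/nginx:v2

6.4 Putting HAProxy in front of Harbor for a highly available registry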

<1> Install haproxy and set up its configuration file;

root@harbor-fe:~# apt install haproxy

root@harbor-fe:~# tail /etc/haproxy/haproxy.cfg

listen harbor-80

  bind 172.31.6.29:80

  mode tcp

  balance source

  server harbor01 172.31.6.21:80 check inter 3s fall 3 rise 5

  server harbor02 172.31.6.22:80 check inter 3s fall 3 rise 5

root@harbor-fe:~# systemctl restart haproxy

root@harbor-fe:~# ss -tln

State  Recv-Q  Send-Q  Local Address:Port  Peer Address:Port  Process

LISTEN  0  2000  172.31.6.29:80  0.0.0.0:*

LISTEN  0  4096  127.0.0.53%lo:53  0.0.0.0:*

LISTEN  0  128  0.0.0.0:22  0.0.0.0:*

LISTEN  0  128  [::]:22  [::]:*

root@harbor-fe:~# hostname -I

172.31.6.29

<2> On the client, point the hosts entry at 172.31.6.29;

root@docker01:~# tail -n 1 /etc/hosts

172.31.6.29 harbor.s209.com

root@docker01:~#

Retag the images;

root@docker01:~# docker tag lorel/docker-stress-ng:latest harbor.s209.com/n56/docker-stress-ng:latest

root@docker01:~# docker tag nginx:1.20 harbor.s209.com/n56/nginx:1.20

Push the images through the haproxy IP;

root@docker01:~# docker push harbor.s209.com/n56/docker-stress-ng:latest

root@docker01:~# docker push harbor.s209.com/n56/nginx:1.20

<3> Pull the image on another client to verify;

root@ceph-client1-ubuntu:~# docker pull harbor.s209.com/n56/nginx:1.20

<4> Shut down harbor01 and test pushing an image;

root@harbor01:~# poweroff

root@docker01:~# docker tag alpine:latest harbor.s209.com/n56/alpine:latest

root@docker01:~# docker push harbor.s209.com/n56/alpine:latest

The push succeeds;

After harbor01 is powered back on, the alpine:latest image has not been synchronized automatically. The failed replication task is visible on harbor02, and clicking the "Replicate" button manually synchronizes the images between the two harbor machines;
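The server lines in the haproxy configuration use check inter 3s fall 3 rise 5: a health check runs every 3 seconds, a server is marked down after 3 consecutive failed checks, and it is marked up again after 5 consecutive successful ones. As a rough estimate only (it ignores check timeouts and any other interval settings), the time to change state is the interval multiplied by the count. The helper state_change_secs is hypothetical:

```shell
#!/bin/sh
# Rough estimate only: seconds until HAProxy changes a server's state,
# given the check interval and the number of consecutive results needed.
state_change_secs() {
    inter="$1"
    count="$2"
    echo $((inter * count))
}

echo "marked down after about $(state_change_secs 3 3)s"   # inter 3s, fall 3
echo "marked up after about $(state_change_secs 3 5)s"     # inter 3s, rise 5
```

With these values a powered-off harbor01 keeps receiving connections for several seconds before haproxy stops sending traffic to it, which is worth keeping in mind when testing failover.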

7. Basic Docker Commands

root@docker01:~# docker info

Client:

Debug Mode: false

Server:

Containers: 4 #total number of containers on this host

Running: 3 #containers that are running

Paused: 0 #containers that are paused

Stopped: 1 #containers that are stopped

Images: 6 #number of images on this server

Server Version: 19.03.15 #server version

Storage Driver: overlay2 #storage driver in use

Backing Filesystem: xfs #backing filesystem, that is, the filesystem of the host's disk

Supports d_type: true #whether d_type is supported

Native Overlay Diff: true #whether native overlay diff is supported

Logging Driver: json-file #logging driver

Cgroup Driver: cgroupfs #cgroup driver

Plugins: #plugins

Volume: local #volume

Network: bridge host ipvlan macvlan null overlay #overlay provides cross-host communication

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog #log drivers

Swarm: inactive #whether swarm mode is active

Runtimes: runc #installed container runtimes

Default Runtime: runc #default container runtime

Init Binary: docker-init #init process for containers, that is, the process with pid 1

containerd version: e25210fe30a0a703442421b0f60afac609f950a3 #containerd version

runc version: v1.0.1-0-g4144b63 #runc version

init version: fec3683 #init version

Security Options: #security options

apparmor #security module, https://docs.docker.com/engine/security/apparmor/

seccomp #syscall filtering, https://docs.docker.com/engine/security/seccomp/

Profile: default #default profile

Kernel Version: 5.4.0-81-generic #host kernel version

Operating System: Ubuntu 20.04.3 LTS #host operating system

OSType: linux #host operating system type

Architecture: x86_64 #host architecture

CPUs: 1 #number of host CPUs

Total Memory: 1.913GiB #total host memory

Name: docker01.s209.local #host hostname

ID: PA7N:B25Q:Y2LY:AH4Y:K7XJ:DFOQ:WRXE:3F2L:BPSL:5Q67:4MTV:F67I #host ID

Docker Root Dir: /data/docker #data directory on the host

Debug Mode: false #whether debug is enabled on the client/server side

Registry: https://index.docker.io/v1/ #image registry

Labels: #other labels

Experimental: false #whether experimental features are enabled

Insecure Registries: #insecure image registries

127.0.0.0/8

Registry Mirrors: #registry mirrors

https://8otyy3fq.mirror.aliyuncs.com/

Live Restore Enabled: false #whether live restore is enabled (containers keep running when the docker daemon restarts)

WARNING:No swap limit support #system warning (swap limits are not enabled); disabling the swap partition on the host is recommended

root@docker01:~#

(1) Pull images

root@docker01:~# docker pull nginx:1.20

root@docker01:~# docker pull centos:7.8.2003

root@docker01:~# docker pull centos:7.9.2009

root@docker01:~# docker pull centos:7.2.1511

root@docker01:~# docker pull ubuntu:20.04

root@docker01:~# docker pull alpine

root@docker01:~# docker pull nginx

root@docker01:~# docker pull lorel/docker-stress-ng

(2) List images

root@docker01:~# docker images

root@docker01:~# docker image ls

(3) Delete an image

root@docker01:~# docker rmi nginx:latest

(4) Export an image

root@docker01:~# docker save nginx:1.20 -o nginx-1.20.tar.gz

root@docker01:~# docker save nginx:1.20 > nginx-1.20.tar.gz

root@docker01:~# mkdir nginx-test

root@docker01:~# mv nginx-1.20.tar.gz nginx-test/

root@docker01:~# cd nginx-test/

root@docker01:~/nginx-test# tar xvf nginx-1.20.tar.gz #extract to inspect the layers; docker save writes an uncompressed tar archive despite the .tar.gz name

root@docker01:~/nginx-test# ls

(5) Import an image (for example after copying it to another host)

root@docker01:~/nginx-test# docker rmi nginx:1.20

root@docker01:~/nginx-test# docker load -i nginx-1.20.tar.gz

Or:

root@docker01:~/nginx-test# docker load < nginx-1.20.tar.gz

(6) Change an image's name/tag

root@docker01:~/nginx-test# docker tag nginx:1.20 nginx:1.20-baseimage

root@docker01:~/nginx-test# docker tag nginx:1.20 harbor.s209.local/nginx:1.20

(7) Start a container in the background

root@docker01:~# docker run -d --name xiaoma-c1 -p 9800:80 nginx:1.20 #host port 9800, container port 80

Enter the container:

root@docker01:~# docker exec -it xiaoma-c1 bash

Or:

root@docker01:~# docker exec -it 9fea1012de93 bash

(8) Start a container that is deleted automatically on exit

root@docker01:~# docker run -it --rm -p 9801:80 nginx:1.20

root@docker01:~# docker run -it --rm alpine:latest sh

(9) Batch delete containers

root@docker01:~# docker ps -a -q

04b1ccccd692

90ab2a4e5e54

root@docker01:~# docker rm `docker ps -a -q`

04b1ccccd692

90ab2a4e5e54

(10) Batch stop running containers (graceful stop)

root@docker01:~# docker stop $(docker ps -a -q)

(11) Batch force-stop running containers

root@docker01:~# docker kill $(docker ps -a -q)

(12) Batch delete exited containers

root@docker01:~# docker rm `docker ps -aq -f status=exited`

(13) Map host port 81 to container port 80

root@docker01:~# docker run -p 81:80 --name nginx-test-port1 nginx:1.20

(14) Host IP:host port:container port

root@docker01:~# docker run -p 172.31.6.20:82:80 --name nginx-test-port2 nginx:1.20

(15) Host IP:random host port:container port

root@docker01:~# docker run -p 172.31.6.20::80 --name nginx-test-port3 nginx:1.20

(16) Host IP:host port:container port/protocol; the protocol defaults to tcp

root@docker01:~# docker run -p 172.31.6.20:83:80/udp --name nginx-test-port4 nginx:1.20

(17) Map several ports and protocols at once

root@docker01:~# docker run -p 86:80/tcp -p 443:443/tcp -p 53:53/udp --name nginx-test-port5 nginx:1.20

(18) Copy files from a container to the host, or from the host into a container

root@docker01:/opt/Dockerfile/nginx# docker cp 558d9aab5ca5:/apps/nginx/conf/nginx.conf .

root@docker01:/opt/Dockerfile/nginx# docker cp nginx.conf 558d9aab5ca5:/apps/nginx/conf/
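The -p forms used in items (13) to (17) all follow the pattern [host_ip:][host_port:]container_port[/protocol]. As an illustration, the sketch below splits such a value into its parts. The helper parse_port is hypothetical, handles IPv4 addresses only, and reports 0.0.0.0 (all interfaces) when no host IP is given:

```shell
#!/bin/sh
# Hypothetical helper: split a -p value of the form
# [host_ip:][host_port:]container_port[/protocol] into its parts.
# IPv4 only; an empty host port means Docker picks a random one.
parse_port() {
    spec="$1"
    proto="tcp"                              # tcp is the default protocol
    case "$spec" in
        */*) proto="${spec##*/}"; spec="${spec%/*}" ;;
    esac
    ctr="${spec##*:}"                        # last field: container port
    rest="${spec%:*}"                        # everything before the last colon
    case "$spec" in
        *:*:*) ip="${rest%%:*}"; host="${rest#*:}" ;;
        *:*)   ip="0.0.0.0";     host="$rest" ;;
        *)     ip="0.0.0.0";     host="" ;;
    esac
    echo "ip=${ip} host_port=${host:-random} container_port=${ctr} proto=${proto}"
}

parse_port "81:80"
parse_port "172.31.6.20:82:80"
parse_port "172.31.6.20::80"
parse_port "172.31.6.20:83:80/udp"
```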
