A Kafka message backlog incident and how it was handled

1. Background

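First thing in the morning I woke up to an alert: the Kafka backlog had reached five billion messages. I hurried to open my laptop and deal with it.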

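The job in question consumes real-time Kafka messages, processes them in Java code, and writes the results to HBase. The Java code had run for more than half a year without problems, so my first guess was that the downstream HBase was misbehaving.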

2. Handling

First, check the logs:

22/02/22 10:06:42 WARN internals.ConsumerCoordinator: Auto-commit of offsets {SMS_MSG_SIGNAL_KAFKA-1=OffsetAndMetadata{offset=6252858693, metadata=''}} failed for group kafka2hbase_imsi_86: Commit cannot be completed since the group has already rebalanced and assigned the partitions to another member. This means that the time between subsequent calls to poll() was longer than the configured max.poll.interval.ms, which typically implies that the poll loop is spending too much time message processing. You can address this either by increasing the session timeout or by reducing the maximum size of batches returned in poll() with max.poll.records.

Indeed, processing the messages returned by a single poll() was taking too long and exceeded max.poll.interval.ms, which produced the warning above.
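The warning names two consumer settings that control this timeout. As a minimal sketch of where they would be set, the snippet below builds a consumer configuration with java.util.Properties. The group id is taken from the log line; the class name and both numeric values are illustrative assumptions, not the settings this job actually used.

```java
import java.util.Properties;

public class ConsumerTuning {
    /** Builds consumer settings that shrink each batch and allow more time between polls. */
    public static Properties tunedConsumerProps() {
        Properties props = new Properties();
        // Group name as it appears in the warning above.
        props.setProperty("group.id", "kafka2hbase_imsi_86");
        // Fewer records per poll() means less work before the next poll() (illustrative value).
        props.setProperty("max.poll.records", "200");
        // Longer allowed gap between two poll() calls, in milliseconds (illustrative value).
        props.setProperty("max.poll.interval.ms", "600000");
        return props;
    }

    public static void main(String[] args) {
        tunedConsumerProps().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Tuning these values only hides the symptom here: as long as the HBase writes stay slow, each batch still takes too long, just in smaller pieces.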

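So I checked HBase ingestion and found the write rate was below 10,000 records per second, against roughly 70,000 per second normally. The blocked downstream was what caused the upstream backlog.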

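I reported this to the big data platform operations team. After their fix the HBase write rate recovered, but it was still not high enough to work through the accumulated signaling messages.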
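A rough calculation shows why the recovered write rate was not enough. The sketch below uses the two figures from this post (a backlog of five billion messages and a normal write rate of about 70,000 per second). The incoming rate of 60,000 per second is a made-up value for illustration, since the real producer rate is not given here.

```java
public class BacklogEstimate {
    /**
     * Seconds needed to clear a backlog when messages keep arriving.
     * Returns positive infinity if the consumer is not faster than the producer.
     */
    public static double secondsToDrain(long backlog, double consumePerSec, double producePerSec) {
        double netPerSec = consumePerSec - producePerSec;
        if (netPerSec <= 0) {
            return Double.POSITIVE_INFINITY;
        }
        return backlog / netPerSec;
    }

    public static void main(String[] args) {
        long backlog = 5_000_000_000L;   // from the alert
        double consumeRate = 70_000;     // normal HBase write rate from this post

        // Best case: nothing new arrives while the backlog drains.
        double bestCase = secondsToDrain(backlog, consumeRate, 0);
        // Hypothetical case: 60,000 new messages per second keep arriving.
        double withInflow = secondsToDrain(backlog, consumeRate, 60_000);

        System.out.println("best case hours:   " + bestCase / 3600);
        System.out.println("with inflow hours: " + withInflow / 3600);
    }
}
```

Even the best case comes out at roughly 20 hours, and any realistic inflow stretches it to days.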

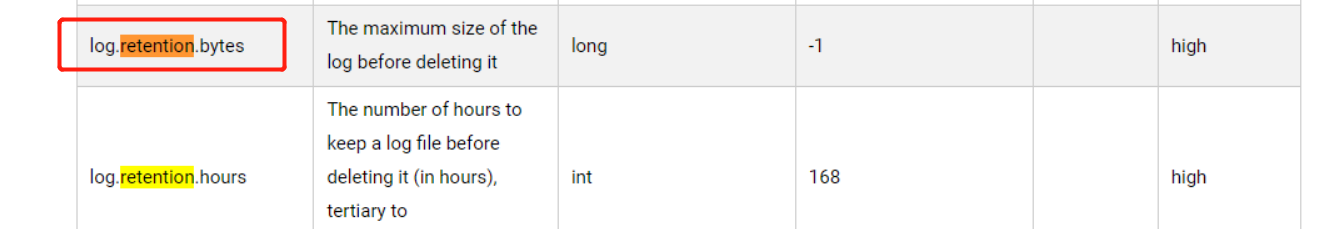

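I could not think of a quick way to speed up HBase ingestion any further, so I decided to first reset the Kafka offsets to latest and skip the backlog. In version 0.10.0, however, offsets are stored in an internal topic of the Kafka cluster itself, so they can only be changed from code. The shell tools of that version do not support it (the --reset-offsets option of kafka-consumer-groups.sh only arrived in 0.11.0).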
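A minimal sketch of such a code-based reset is shown below. It is not the code used in this incident. It assumes the kafka-clients library (0.10 or later) is on the classpath, takes the topic and group names from the log line above, and uses a hypothetical broker address, broker1:9092. The idea is to assign all partitions manually, move to the end of each one, and commit those positions for the group.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.clients.consumer.OffsetAndMetadata;
import org.apache.kafka.common.PartitionInfo;
import org.apache.kafka.common.TopicPartition;

public class ResetOffsetsToLatest {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "broker1:9092");   // hypothetical address
        props.put("group.id", "kafka2hbase_imsi_86");     // group from the log
        props.put("enable.auto.commit", "false");         // commit explicitly below
        props.put("key.deserializer",
                "org.apache.kafka.common.serialization.ByteArrayDeserializer");
        props.put("value.deserializer",
                "org.apache.kafka.common.serialization.ByteArrayDeserializer");

        String topic = "SMS_MSG_SIGNAL_KAFKA";            // topic from the log

        try (KafkaConsumer<byte[], byte[]> consumer = new KafkaConsumer<>(props)) {
            // Collect every partition of the topic and assign them manually,
            // so no group rebalance is involved.
            List<TopicPartition> partitions = new ArrayList<>();
            for (PartitionInfo info : consumer.partitionsFor(topic)) {
                partitions.add(new TopicPartition(topic, info.partition()));
            }
            consumer.assign(partitions);

            // seekToEnd is evaluated lazily; position() forces the lookup.
            consumer.seekToEnd(partitions);
            Map<TopicPartition, OffsetAndMetadata> offsets = new HashMap<>();
            for (TopicPartition tp : partitions) {
                offsets.put(tp, new OffsetAndMetadata(consumer.position(tp)));
            }

            // Store the end positions as the group's committed offsets.
            consumer.commitSync(offsets);
        }
    }
}
```

The regular consumers of the group should be stopped before running something like this. Otherwise the commit may be rejected, or the running consumers may overwrite it with their own commits.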

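Strangely, though, even with auto.offset.reset=latest set, the setting did not take effect: the consumer still resumed from the offset where it had last stopped.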

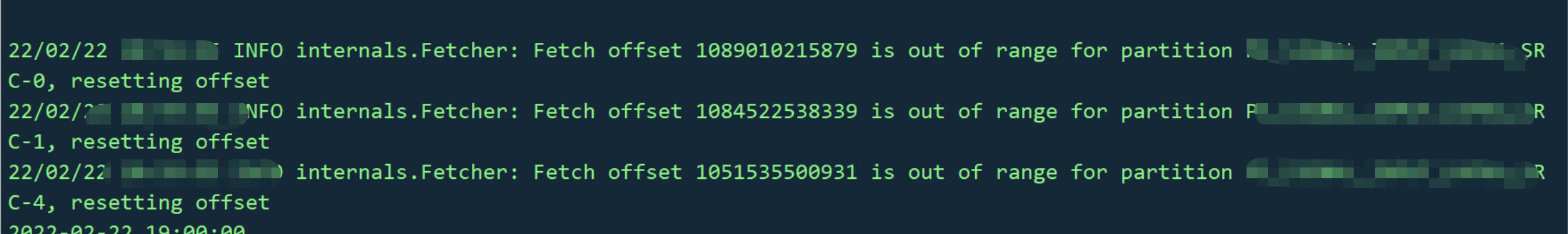

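That continued until the following happened:

The fetch offset went out of range, which triggered an automatic offset reset. After that, partitions 0, 1, and 4 were at the latest offset.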
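Both observations fit the documented meaning of auto.offset.reset: it applies only when the group has no committed offset for a partition, or when the current offset no longer exists on the broker (out of range). While a valid committed offset exists, the setting is ignored. The sketch below is a simplified model of that decision, written for illustration only. It is not Kafka's actual implementation, and the class name, method name, and sample offsets other than the committed one are hypothetical.

```java
public class StartOffsetModel {
    /**
     * Simplified model of where a consumer starts reading a partition.
     *
     * @param committed       the group's committed offset, or null if none exists
     * @param logStart        first offset still available on the broker
     * @param logEnd          offset the next produced message will get
     * @param autoOffsetReset "latest" or "earliest"
     */
    public static long startingOffset(Long committed, long logStart, long logEnd,
                                      String autoOffsetReset) {
        boolean usable = committed != null && committed >= logStart && committed <= logEnd;
        if (usable) {
            // A valid committed offset always wins; auto.offset.reset is not consulted.
            return committed;
        }
        // No committed offset, or it is out of range: fall back to the reset policy.
        if ("latest".equals(autoOffsetReset)) {
            return logEnd;
        }
        if ("earliest".equals(autoOffsetReset)) {
            return logStart;
        }
        throw new IllegalStateException(
                "no valid offset and auto.offset.reset=" + autoOffsetReset);
    }

    public static void main(String[] args) {
        long committed = 6252858693L; // committed offset from the log line above
        // Hypothetical log bounds: committed offset still in range, so it is used.
        System.out.println(startingOffset(committed, 6000000000L, 11000000000L, "latest"));
        // Hypothetical log bounds: old data already gone, so the reset policy applies.
        System.out.println(startingOffset(committed, 7000000000L, 11000000000L, "latest"));
    }
}
```

One plausible reason for the out-of-range condition, not confirmed in this post, is that retention removed the old log segments while the consumer was still far behind.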

3. References

Resetting Kafka offsets

Kafka: a summary of "Fetch offset xxx is out of range for partition xxx, resetting offset" cases
