Machine Learning 15: Classifying Handwritten Digit Images with a Convolutional Neural Network

1. Handwritten digits dataset

- `from sklearn.datasets import load_digits`

- `digits = load_digits()`

```python
from sklearn.datasets import load_digits

# 1797 grayscale 8x8 images of the digits 0-9
digits = load_digits()
```

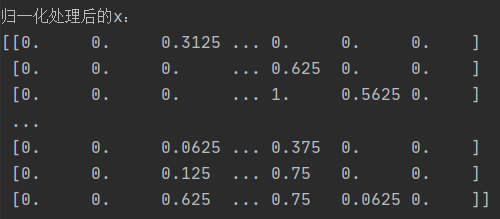

2. Image data preprocessing

- x: normalize with MinMaxScaler()

- y: one-hot encode with OneHotEncoder() or to_categorical

- Split into training and test sets

- Reshape into the image tensor structure
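For reference, `MinMaxScaler()` rescales every feature (here: every pixel position) independently with x' = (x - min) / (max - min), using that column's minimum and maximum. The NumPy sketch below writes the formula out by hand. The function name `min_max_scale` is hypothetical. Mapping constant columns to 0 mirrors how scikit-learn handles zero-range features, for example pixels that are blank in every image.

```python
import numpy as np

def min_max_scale(X):
    """Rescale each column of X to [0, 1] using (x - min) / (max - min)."""
    X = np.asarray(X, dtype=np.float64)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    # Avoid division by zero: a constant column gets range 1, so it maps to 0
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range

# Toy data: 3 samples x 3 pixels with intensities in 0..16 like the digits data
X = np.array([[0, 4, 16],
              [0, 8, 8],
              [0, 16, 0]])
print(min_max_scale(X))
```

Each column is scaled on its own, so the middle column (range 4 to 16) and the last column (range 0 to 16) both end up spanning exactly 0 to 1.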

```python
# 2. Image preprocessing
import numpy as np
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import OneHotEncoder
from sklearn.model_selection import train_test_split

# Convert x to floating point for normalization
x = digits.data.astype(np.float32)
# Turn the labels into a single column so OneHotEncoder accepts them
y = digits.target.astype(np.float32).reshape(-1, 1)

# x: MinMaxScaler() rescales each feature to a given range (0 to 1 by default)
scaler = MinMaxScaler()
x = scaler.fit_transform(x)
print("x after normalization:")
print(x)

# y: one-hot encoding with OneHotEncoder.
# .toarray() gives a plain ndarray; .todense() would return an np.matrix,
# which NumPy discourages and which breaks later indexing/argmax code.
y = OneHotEncoder().fit_transform(y).toarray()
print("Targets after one-hot encoding:")
print(y)

# Reshape into the image layout (batch, height, width, channels)
x = x.reshape(-1, 8, 8, 1)

# Train/test split, stratified on the original digit labels
X_train, X_test, y_train, y_test = train_test_split(
    x, y, test_size=0.2, random_state=0, stratify=digits.target)
print('X_train.shape, X_test.shape, y_train.shape, y_test.shape:',
      X_train.shape, X_test.shape, y_train.shape, y_test.shape)
```

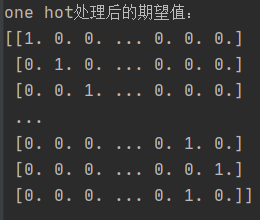

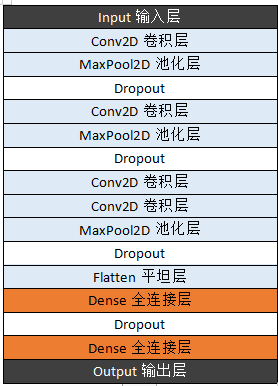

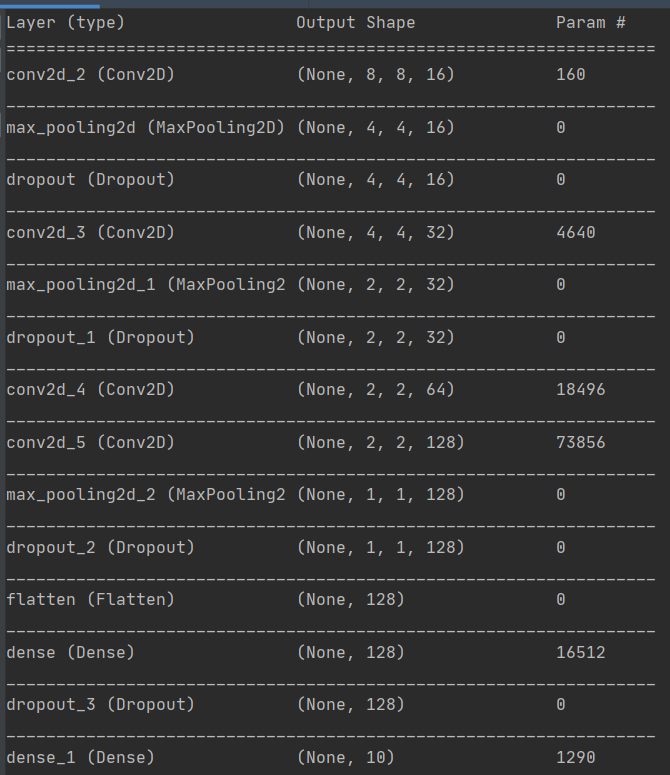
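The one-hot step and the reshape into the (batch, height, width, channels) layout can also be shown with NumPy alone. This sketch assumes integer class labels from 0 to 9, as in `digits.target`. For such labels, indexing an identity matrix with the labels gives the same column layout that `OneHotEncoder` (followed by `.toarray()`) or Keras `to_categorical` would produce, as long as every class occurs. The labels and pixel values below are made up for illustration.

```python
import numpy as np

labels = np.array([3, 0, 9, 3])            # hypothetical digit labels
num_classes = 10
# Row i of the identity matrix has a single 1 at column i
one_hot = np.eye(num_classes, dtype=np.float32)[labels]

# Two fake flattened 8x8 images (64 features each), like rows of digits.data
flat = np.arange(2 * 64, dtype=np.float32).reshape(2, 64)
# Row-major reshape: features 0-7 form the first image row, 8-15 the second, ...
images = flat.reshape(-1, 8, 8, 1)         # (batch, height, width, channels)

print(one_hot.shape, images.shape)         # (4, 10) (2, 8, 8, 1)
```

`argmax` along axis 1 undoes the encoding. The evaluation step relies on this to turn one-hot targets and softmax outputs back into digit labels.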

3. Designing the CNN architecture

- Draw the model architecture diagram and explain the design rationale.

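The design follows classic CNN architectures, adapted to the 8×8 input size. It uses 4 convolutional layers and 3 pooling layers. Each 2×2 pooling layer halves the feature map (8×8 → 4×4 → 2×2 → 1×1), so a fourth pooling layer would have nothing left to reduce. Dropout layers are inserted to reduce overfitting.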
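The output shapes and parameter counts that `model.summary()` reports can be checked by hand. This is a minimal sketch that assumes the same settings as the model code: stride-1 convolutions with `padding='same'`, and 2×2 max pooling with Keras's default stride of 2 and 'valid' padding. The Dropout layers are left out because they change neither the shape nor the parameter count. The helper names are hypothetical.

```python
def conv_params(k, c_in, c_out):
    """A k x k convolution: k*k*c_in*c_out weights plus one bias per filter."""
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """A fully connected layer: n_in*n_out weights plus n_out biases."""
    return n_in * n_out + n_out

size, channels = 8, 1      # 8x8 grayscale input
total = 0
layers = [("conv", 16), ("pool", None), ("conv", 32), ("pool", None),
          ("conv", 64), ("conv", 128), ("pool", None)]
for kind, filters in layers:
    if kind == "conv":
        # padding='same', stride 1: spatial size unchanged, channels become filters
        p = conv_params(3, channels, filters)
        channels = filters
    else:
        # 2x2 max pooling, stride 2, 'valid' padding: size halves (rounded down)
        size //= 2
        p = 0
    total += p
    print(f"{kind:5s} -> {size}x{size}x{channels}, params={p}")

flat = size * size * channels   # Flatten layer output length
total += dense_params(flat, 128) + dense_params(128, 10)
print("flatten size:", flat, "total params:", total)
```

Under these assumptions, the sketch reports a flattened size of 128 and 114,954 parameters in total. This should agree with the "Total params" line of the summary table.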

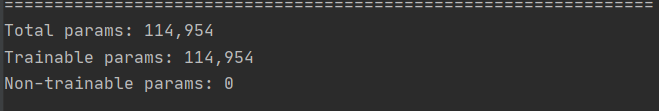

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPool2D, Dropout, Flatten, Dense

model = Sequential()
ks = (3, 3)  # 3x3 kernel for every convolution
# Shape of one input image: (8, 8, 1)
input_shape = x.shape[1:]

# Conv layer 1; padding='same' makes TensorFlow zero-pad the input so the 8x8 size is kept
model.add(Conv2D(filters=16, kernel_size=ks, padding='same',
                 input_shape=input_shape, activation='relu'))
# Pooling layer 1
model.add(MaxPool2D(pool_size=(2, 2)))
# Randomly zero out activations to reduce overfitting
model.add(Dropout(0.25))
# Conv layer 2
model.add(Conv2D(filters=32, kernel_size=ks, padding='same', activation='relu'))
# Pooling layer 2
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
# Conv layer 3
model.add(Conv2D(filters=64, kernel_size=ks, padding='same', activation='relu'))
# Conv layer 4
model.add(Conv2D(filters=128, kernel_size=ks, padding='same', activation='relu'))
# Pooling layer 3
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
# Flatten layer
model.add(Flatten())
# Fully connected layer
model.add(Dense(128, activation='relu'))
model.add(Dropout(0.25))
# Output layer: softmax over the 10 digit classes
model.add(Dense(10, activation='softmax'))
# summary() prints the table itself and returns None, so no print() is needed
model.summary()
```

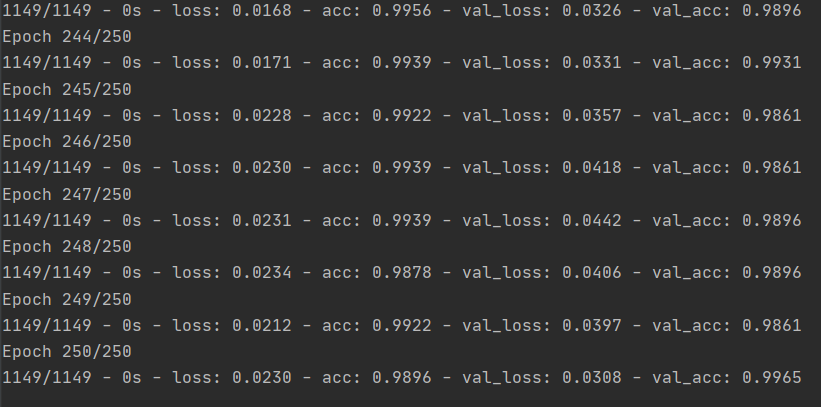
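`Dropout(0.25)` zeroes a random 25% of its inputs during training only. The Keras documentation describes it as scaling the surviving values by 1 / (1 - rate), so the expected activation stays the same. At inference time the layer passes its input through unchanged. The NumPy sketch below shows this "inverted dropout" behaviour. The function `dropout` and its `rng` argument are illustrative and are not the Keras API.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Inverted dropout: zero each value with probability `rate` during training
    and scale the survivors by 1 / (1 - rate); identity at inference time."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate        # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
x = np.ones((4, 128))                         # e.g. a batch of Dense(128) outputs
y_train_mode = dropout(x, 0.25, training=True, rng=rng)
y_infer_mode = dropout(x, 0.25, training=False, rng=rng)
print(np.unique(y_train_mode))                # only 0 and 1/0.75 appear
print(y_train_mode.mean())                    # close to 1.0 on average
```

Because the scaling happens during training, no correction is needed at prediction time. This is why `model.predict()` and `model.evaluate()` use the network as-is.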

4. Model training

```python
# Train the model
# Loss: categorical_crossentropy; optimizer: adam; metric: accuracy
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
# Hold out 20% of the training data for validation; 300 samples per batch; 150 epochs
train_history = model.fit(x=X_train, y=y_train, validation_split=0.2,
                          batch_size=300, epochs=150, verbose=2)
```
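The `categorical_crossentropy` loss compares each one-hot target with the softmax output. For one sample it is -Σ_k y_k log p_k, which reduces to -log of the probability the model assigns to the true class. Keras then averages this over the batch. The small NumPy sketch below uses made-up probabilities. Clipping with `eps` is an assumption added to avoid log(0), similar in spirit to what Keras does internally.

```python
import numpy as np

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean over samples of -sum_k y_true[k] * log(y_prob[k])."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_prob), axis=1)))

# Two samples, 3 classes for brevity (the real model has 10)
y_true = np.array([[0, 0, 1],
                   [1, 0, 0]], dtype=float)
y_prob = np.array([[0.1, 0.4, 0.5],    # true class gets 0.5 -> loss ln 2
                   [0.8, 0.1, 0.1]])   # true class gets 0.8 -> loss -ln 0.8
print(categorical_crossentropy(y_true, y_prob))   # (ln 2 - ln 0.8) / 2 ≈ 0.458
```

Only the probability of the correct class enters the loss. Pushing that probability towards 1 is exactly what drives accuracy up in the training curves.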

```python
import matplotlib.pyplot as plt

# Custom plotting helper: training vs. validation curve for one metric
def show_train_history(train_history, train, validation, title):
    plt.plot(train_history.history[train])
    plt.plot(train_history.history[validation])
    plt.title(title)
    plt.ylabel(train)
    plt.xlabel('epoch')
    plt.legend(['train', 'validation'], loc='upper left')
    plt.show()
```

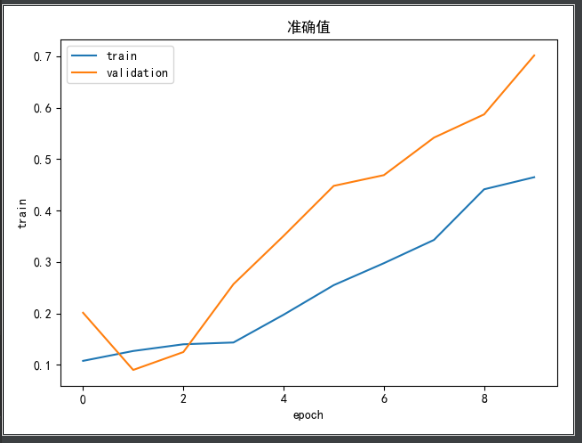

```python
show_train_history(train_history, 'accuracy', 'val_accuracy', 'Accuracy')
```

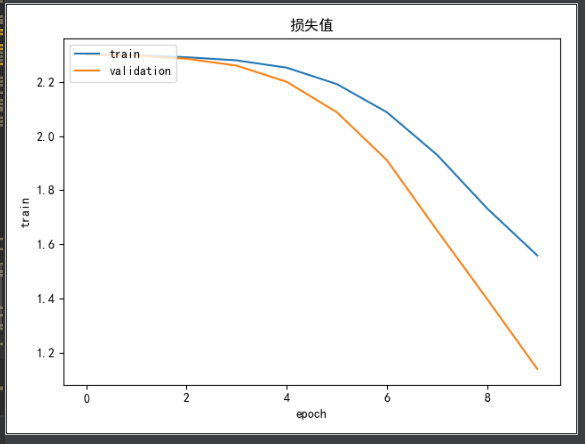

```python
show_train_history(train_history, 'loss', 'val_loss', 'Loss')
```

5. Model evaluation

- model.evaluate()

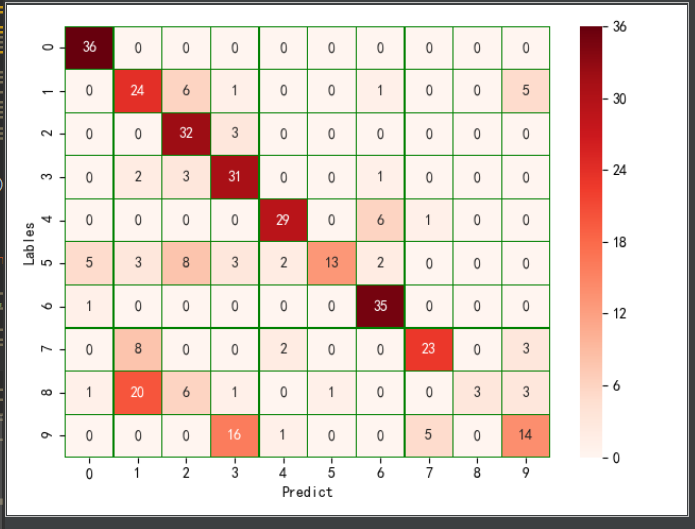

- Crosstab and confusion matrix

- pandas.crosstab

- seaborn.heatmap

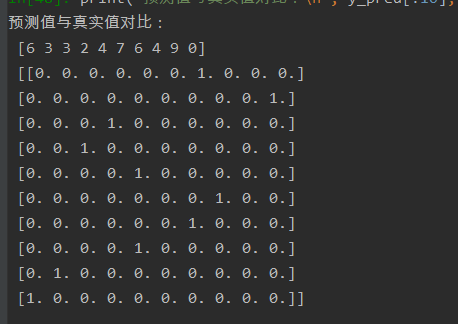

```python
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# 5. Model evaluation
# model.evaluate() returns [loss, accuracy] for the metrics given to compile()
score = model.evaluate(X_test, y_test)
print('score:', score)

# Predicted classes: predict_classes() was removed from recent TensorFlow/Keras,
# so take the argmax of the softmax probabilities instead
y_pred = np.argmax(model.predict(X_test), axis=1)
print('y_pred:', y_pred[:10])

# True classes recovered from the one-hot test labels
y_true = np.argmax(np.asarray(y_test), axis=1)

# Crosstab comparing true and predicted labels (pandas.crosstab)
print(pd.crosstab(y_true, y_pred, rownames=['true'], colnames=['predict']))

# Confusion matrix as a heatmap (seaborn.heatmap); crosstab already returns a DataFrame
df = pd.crosstab(y_true, y_pred, rownames=['Labels'], colnames=['Predict'])
sns.heatmap(df, annot=True, fmt='d', cmap="Reds", linewidths=0.2, linecolor='g')
plt.show()
```
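What `pd.crosstab` computes here is a confusion matrix. Cell (i, j) counts the test images whose true digit is i and whose predicted digit is j, so the diagonal holds the correct predictions and accuracy equals trace / total. The NumPy-only sketch below uses hypothetical softmax outputs and labels. Unlike `crosstab`, the fixed `num_classes` × `num_classes` array also keeps rows and columns for classes that never occur.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes=10):
    """cm[i, j] = number of samples with true class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)     # unbuffered add counts repeated pairs
    return cm

# Hypothetical softmax outputs for 4 test images (3 classes for brevity)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
y_pred = probs.argmax(axis=1)              # most probable class per image
y_true = np.array([0, 1, 1, 0])
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)
print("accuracy:", np.trace(cm) / cm.sum())
```

Here the third image (true class 1) lands in column 2, which is the off-diagonal cell a heatmap would highlight as a misclassification.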

浙公网安备 33010602011771号
