Kubernetes cluster high availability

Deploy CoreDNS

[root@master-1 ~]# cat coredns.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.255.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
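The kubernetes cluster.local in-addr.arpa ip6.arpa line in the Corefile makes CoreDNS answer for Service names under cluster.local and for reverse (PTR) lookups of cluster IPs. A minimal Python sketch of the names it serves, assuming the standard <service>.<namespace>.svc.cluster.local naming scheme and the kube-dns ClusterIP 10.255.0.2 from the Service above:

```python
import ipaddress

# Zone served by the kubernetes plugin in the Corefile above.
CLUSTER_DOMAIN = "cluster.local"


def service_fqdn(service: str, namespace: str, domain: str = CLUSTER_DOMAIN) -> str:
    """Return the A-record name CoreDNS answers for a ClusterIP Service."""
    return f"{service}.{namespace}.svc.{domain}"


def reverse_name(cluster_ip: str) -> str:
    """Return the PTR query name for a cluster IP (answered via the in-addr.arpa zone)."""
    return ipaddress.ip_address(cluster_ip).reverse_pointer


if __name__ == "__main__":
    print(service_fqdn("kube-dns", "kube-system"))  # kube-dns.kube-system.svc.cluster.local
    print(service_fqdn("tomcat", "default"))        # tomcat.default.svc.cluster.local
    print(reverse_name("10.255.0.2"))               # 2.0.255.10.in-addr.arpa
```

The same names can be checked from inside a Pod, for example with nslookup kube-dns.kube-system.svc.cluster.local in a busybox:1.28 container.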

Apply it:

[root@master-1 ~]# kubectl apply -f coredns.yaml

Check the Pods:

[root@master-1 ~]# kubectl get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-6949477b58-2mfdh   1/1     Running   1          11h
calico-node-tlb6d                          1/1     Running   1          11h
coredns-7bf4bd64bd-9fqkz                   1/1     Running   0          11m

Check the cluster status:

[root@master-1 ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node-1   Ready    <none>   12h   v1.20.7

Run a Tomcat test

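[root@master-1 ~]# cat tomcat.yaml
apiVersion: v1                      # Pods belong to the Kubernetes core API group, version v1
kind: Pod                           # the resource being created is a Pod
metadata:                           # metadata
  name: demo-pod                    # Pod name
  namespace: default                # namespace the Pod belongs to
  labels:
    app: myapp                      # label on the Pod
    env: dev                        # label on the Pod
spec:
  containers:                       # containers is a list of objects; several containers, each with its own name, can be defined
  - name: tomcat-pod-java           # container name
    ports:
    - containerPort: 8080
    image: tomcat:8.5-jre8-alpine   # image used by the container
    imagePullPolicy: IfNotPresent
  - name: busybox
    image: busybox:latest
    command:                        # command is a list, so each argument is written as a "-" item
    - "/bin/sh"
    - "-c"
    - "sleep 3600"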


[root@master-1 ~]# kubectl apply -f tomcat.yaml

pod/demo-pod created


[root@master-1 ~]# cat tomcat-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30080
  selector:
    app: myapp
    env: dev
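The tomcat Service finds demo-pod through equality-based label matching: every key/value pair in spec.selector must be present on the Pod, and the Pod may carry extra labels. The nodePort must also fall inside the apiserver's --service-node-port-range, which defaults to 30000-32767. This cluster's apiserver flags are not shown, so the default range below is an assumption. A minimal sketch of both checks:

```python
from typing import Mapping

# Default kube-apiserver --service-node-port-range (assumed; not shown in this cluster).
NODE_PORT_RANGE = range(30000, 32768)


def selector_matches(selector: Mapping[str, str], pod_labels: Mapping[str, str]) -> bool:
    """Equality-based selector: every selector key must exist on the Pod with the same value."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def valid_node_port(port: int) -> bool:
    """True if the port lies inside the (assumed default) NodePort range."""
    return port in NODE_PORT_RANGE


if __name__ == "__main__":
    pod_labels = {"app": "myapp", "env": "dev"}        # labels of demo-pod
    service_selector = {"app": "myapp", "env": "dev"}  # selector of Service tomcat
    print(selector_matches(service_selector, pod_labels))                 # True
    print(selector_matches({"app": "myapp", "env": "prod"}, pod_labels))  # False
    print(valid_node_port(30080))                                         # True
```

If the selector and the Pod labels drift apart, the Service still gets created but has no endpoints, so requests to port 30080 fail.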

[root@master-1 ~]# kubectl apply -f tomcat-service.yaml

service/tomcat unchanged


[root@master-1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
kubernetes   ClusterIP   10.255.0.1    <none>        443/TCP          13h
tomcat       NodePort    10.255.92.3   <none>        8080:30080/TCP   80s

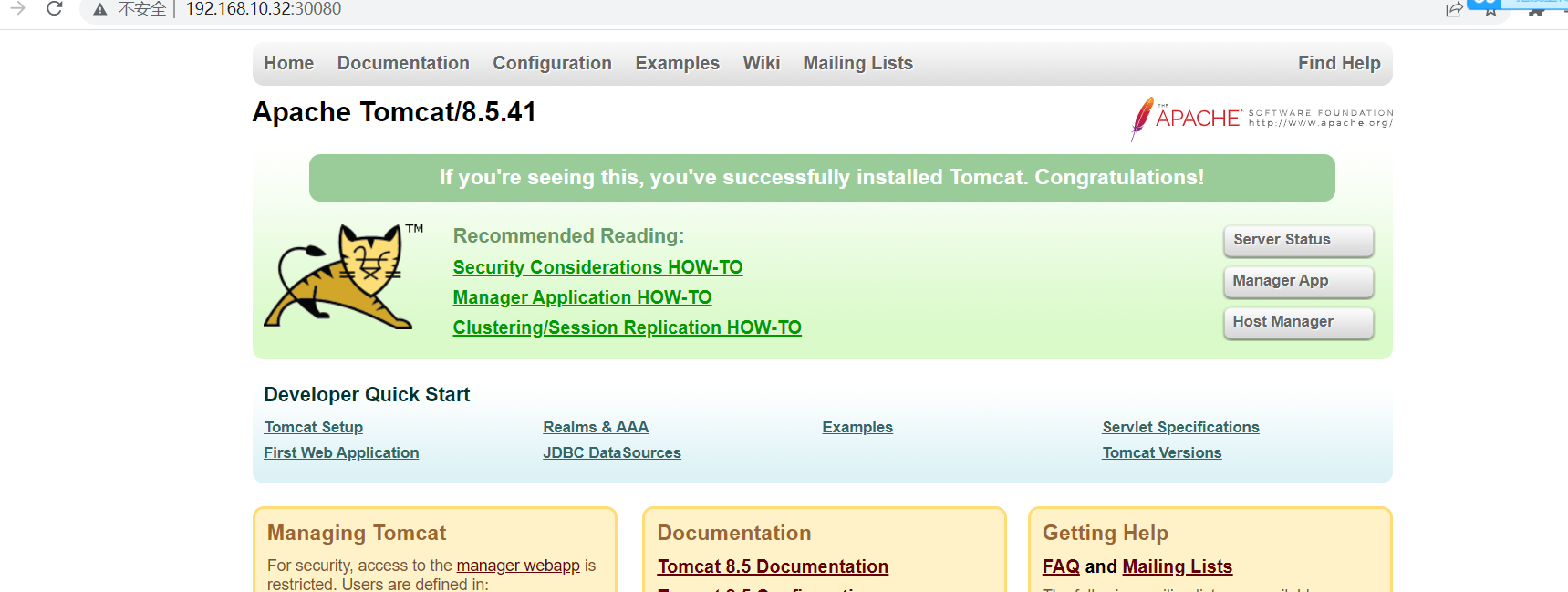

In a browser, open port 30080 on node-1's IP, http://192.168.10.32:30080/, to reach the Tomcat page.

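Test DNS from a temporary busybox Pod. The mistyped name www.baidu.co cannot be resolved, while www.baidu.com resolves and answers ping, which shows that CoreDNS forwards external queries: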

[root@master-1 ~]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh
If you don't see a command prompt, try pressing enter.
/ # ping www.baidu.co
ping: bad address 'www.baidu.co'
/ # ping www.baidu.com
PING www.baidu.com (103.235.46.40): 56 data bytes
64 bytes from 103.235.46.40: seq=0 ttl=127 time=27.786 ms
64 bytes from 103.235.46.40: seq=1 ttl=127 time=26.360 ms
^C
--- www.baidu.com ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 26.360/27.073/27.786 ms
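Before www.baidu.com reaches CoreDNS's forward plugin, the Pod's resolver first tries the Kubernetes search domains. A Pod with dnsPolicy ClusterFirst in namespace default normally gets search default.svc.cluster.local svc.cluster.local cluster.local and options ndots:5. The sketch below models the glibc-style ordering rule: names with fewer dots than ndots try the search list first, others try the absolute name first. Search domains inherited from the node are left out, and busybox's own resolver may differ in details, so treat this as an approximation:

```python
from typing import List, Sequence

# Typical resolv.conf values for a ClusterFirst Pod in namespace "default" (assumed).
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
NDOTS = 5


def query_order(name: str, search: Sequence[str] = SEARCH, ndots: int = NDOTS) -> List[str]:
    """Candidate FQDNs a glibc-style stub resolver tries, in order."""
    if name.endswith("."):
        return [name]  # already absolute: no search-list expansion
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = [f"{name}."]
    if name.count(".") >= ndots:
        return absolute + expanded
    return expanded + absolute


if __name__ == "__main__":
    for candidate in query_order("www.baidu.com"):
        print(candidate)
```

For www.baidu.com (2 dots, fewer than 5) three cluster-local names are tried and return NXDOMAIN before www.baidu.com. is sent upstream; for www.baidu.co all four candidates fail, which ends in "bad address".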

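Install keepalived + nginx to make the Kubernetes apiserver highly available

On both master nodes that will run nginx (master-1 and master-2), allow processes to bind to IP addresses that are not configured on the host, so nginx can listen on the VIP even on the node that does not currently hold it: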

echo "net.ipv4.ip_nonlocal_bind=1" >> /etc/sysctl.conf
sysctl -p

Install nginx, keepalived and nginx-mod-stream (the layer-4 stream module):

yum -y install nginx keepalived nginx-mod-stream

The nginx configuration is identical on master-1 and master-2:

[root@master-1 ~]# cat /etc/nginx/nginx.conf
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

# Layer-4 (TCP) load balancing across the Master kube-apiserver instances
stream {

    log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

    access_log  /var/log/nginx/k8s-access.log  main;

    upstream k8s-apiserver {
        server 192.168.10.29:6443;   # Master1 APISERVER IP:PORT
        server 192.168.10.30:6443;   # Master2 APISERVER IP:PORT
        server 192.168.10.31:6443;   # Master3 APISERVER IP:PORT
    }

    server {
        # nginx runs on the master nodes themselves, so it listens on the VIP;
        # port 16443 avoids clashing with the local kube-apiserver on 6443
        listen 192.168.10.28:16443;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 2048;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    server {
        listen       80 default_server;
        server_name  _;

        location / {
        }
    }
}
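The stream upstream k8s-apiserver uses nginx's default round-robin. If a connection to a server fails, nginx tries the next one, and after max_fails failures (default 1) within fail_timeout (default 10s) it stops sending connections to that server for fail_timeout. The class below is a deliberately simplified model of that behaviour: it rotates through the three apiservers and skips any server marked down, but it does not model weights or the fail_timeout recovery timer:

```python
from typing import Iterable, List, Set

UPSTREAMS = ["192.168.10.29:6443", "192.168.10.30:6443", "192.168.10.31:6443"]


class RoundRobin:
    """Simplified model of nginx round-robin over the k8s-apiserver upstream group."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.servers: List[str] = list(servers)
        self.down: Set[str] = set()  # servers currently considered unavailable
        self._next = 0

    def pick(self) -> str:
        # Try each server at most once per pick, starting where the last pick left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.down:
                return server
        raise RuntimeError("no live apiserver in upstream k8s-apiserver")


if __name__ == "__main__":
    lb = RoundRobin(UPSTREAMS)
    print([lb.pick() for _ in range(4)])  # .29, .30, .31, then .29 again
    lb.down.add("192.168.10.30:6443")    # e.g. master-2's apiserver stops answering
    print([lb.pick() for _ in range(3)])  # .30 is skipped
```

Because this is plain TCP proxying, TLS still terminates at each kube-apiserver, so the VIP presumably has to be included in the apiserver certificate's SANs for clients to accept it.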

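Start nginx on both nodes. Because of ip_nonlocal_bind, both can listen on 192.168.10.28:16443 even though only one node holds the VIP: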

[root@master-1 ~]# systemctl start nginx.service

[root@master-1 ~]# ss -lntp |grep 192.168.10.28

LISTEN 0 128 192.168.10.28:16443 *:* users:(("nginx",pid=21422,fd=7),("nginx",pid=21421,fd=7),("nginx",pid=21420,fd=7))

[root@master-2 ~]# systemctl start nginx.service


[root@master-2 ~]# ss -lntp | grep 192.168.10.28

LISTEN 0 128 192.168.10.28:16443 *:* users:(("nginx",pid=21263,fd=7),("nginx",pid=21262,fd=7),("nginx",pid=21261,fd=7))

Configure keepalived on the primary node (master-1):

vim /etc/keepalived/keepalived.conf

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from ka1@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    vrrp_mcast_group4 224.111.111.111
}

vrrp_script chk_ng {
    script "ss -lntp | grep 192.168.10.28"
    interval 2
    weight -10
    fall 2
    rise 2
}

vrrp_instance External_1 {
    state MASTER
    interface ens33
    virtual_router_id 171
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1402b1b5
    }
    virtual_ipaddress {
        192.168.10.28/24
    }
    track_script {
        chk_ng
    }
}
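The chk_ng script above pipes ss into grep. Depending on the keepalived version, check scripts may be executed directly rather than through a shell, in which case a pipeline needs a wrapper script, so check the keepalived.conf man page for your version. As a hedged alternative, the sketch below reads /proc/net/tcp itself and reports success only if a socket in LISTEN state is bound to 192.168.10.28:16443. Since ip_nonlocal_bind lets nginx bind the VIP on both nodes, this detects "nginx is listening", not "this node holds the VIP", which is what the weight-based failover relies on. The script name check_nginx.py and its path are hypothetical, and only IPv4 (/proc/net/tcp, not tcp6) is checked:

```python
#!/usr/bin/env python3
"""Hypothetical keepalived check: succeed if something LISTENs on VIP:16443."""
import socket
import struct
from typing import Tuple

VIP, PORT = "192.168.10.28", 16443
TCP_LISTEN = "0A"  # state code for LISTEN in /proc/net/tcp


def decode(hex_addr: str) -> Tuple[str, int]:
    """Decode a /proc/net/tcp address such as '1C0AA8C0:403B' into ('192.168.10.28', 16443)."""
    ip_hex, port_hex = hex_addr.split(":")
    # The kernel prints the raw 32-bit address in host byte order, so packing it back
    # in native order ('=') recovers the original network-order bytes.
    ip = socket.inet_ntoa(struct.pack("=I", int(ip_hex, 16)))
    return ip, int(port_hex, 16)


def is_listening(proc_tcp_text: str, ip: str = VIP, port: int = PORT) -> bool:
    """True if a LISTEN socket bound to ip:port appears in /proc/net/tcp content."""
    for line in proc_tcp_text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) > 3 and fields[3] == TCP_LISTEN and decode(fields[1]) == (ip, port):
            return True
    return False


def main() -> int:
    """Exit status for keepalived: 0 while nginx listens on the VIP, 1 otherwise."""
    with open("/proc/net/tcp") as f:
        return 0 if is_listening(f.read()) else 1
```

To run it as a check script, end the file with if __name__ == "__main__": raise SystemExit(main()), make it executable, and point keepalived at it with something like script "/usr/bin/python3 /etc/keepalived/check_nginx.py" (hypothetical path).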

Configure keepalived on the backup node (master-2):

cat /etc/keepalived/keepalived.conf

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from ka2@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    vrrp_mcast_group4 224.111.111.111
}

vrrp_script chk_ng {
    script "ss -lntp | grep 192.168.10.28"
    interval 2
    weight -10
    fall 2
    rise 2
}

vrrp_instance External_1 {
    state BACKUP
    interface ens33
    virtual_router_id 171
    priority 95
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1402b1b5
    }
    virtual_ipaddress {
        192.168.10.28/24
    }
    track_script {
        chk_ng
    }
}
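Failover here is driven purely by priority arithmetic. With a negative weight, keepalived subtracts it from the instance's priority once the track script has failed fall times in a row (about 2 x 2 s here) and restores it after rise successes; a positive weight would instead be added while the script succeeds. The node with the highest effective priority holds the VIP, and since preemption is on by default, master-1 takes it back once its priority recovers. A small sketch of the three states (VRRP's IP-based tie-break is ignored):

```python
from typing import Dict


def effective_priority(base: int, weight: int, script_ok: bool) -> int:
    """Priority after applying a track_script weight as keepalived does."""
    if weight < 0:
        return base if script_ok else base + weight  # penalty while the check fails
    return base + weight if script_ok else base      # bonus while the check succeeds


def elect(priorities: Dict[str, int]) -> str:
    """Node with the highest effective priority becomes VRRP master (ties ignored)."""
    return max(priorities, key=priorities.get)


if __name__ == "__main__":
    scenarios = {
        "both nginx up": (True, True),
        "nginx down on master-1": (False, True),
        "nginx back on master-1": (True, True),
    }
    for label, (ok1, ok2) in scenarios.items():
        prio = {
            "master-1": effective_priority(100, -10, ok1),
            "master-2": effective_priority(95, -10, ok2),
        }
        print(label, prio, "->", elect(prio))
```

The weight has to exceed the priority gap for failover to happen: 100 - 10 = 90 drops below 95, whereas a weight of -3 would leave master-1 at 97 and the VIP would never move.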

Start keepalived, enable both services at boot, and check that the VIP is on master-1:

[root@master-1 ~]# systemctl start keepalived.service
[root@master-2 ~]# systemctl start keepalived.service
[root@master-2 ~]# systemctl enable keepalived.service
Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.
[root@master-2 ~]# systemctl enable nginx.service
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
[root@master-1 ~]# systemctl enable keepalived.service
Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.
[root@master-1 ~]# systemctl enable nginx.service
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
[root@master-1 ~]# hostname -I
192.168.10.29 192.168.10.28
[root@master-2 ~]# hostname -I
192.168.10.30

Stop nginx on the primary node and check whether the VIP fails over:

[root@master-1 ~]# systemctl stop nginx.service
[root@master-1 ~]# hostname -I
192.168.10.29
[root@master-2 ~]# hostname -I
192.168.10.30 192.168.10.28

Start nginx on the primary node again and watch the VIP:

[root@master-1 ~]# systemctl start nginx.service
[root@master-1 ~]# hostname -I      # still negotiating; repeated several times with the same output
192.168.10.29
[root@master-1 ~]# hostname -I      # the VIP has moved back
192.168.10.29 192.168.10.28
[root@master-2 ~]# hostname -I      # the VIP has left the backup node
192.168.10.30

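At this point every Worker Node component still connects directly to master-1 (192.168.10.29:6443). Unless they are switched to the VIP behind the load balancer, the Master remains a single point of failure.

The next step is therefore to edit the component configuration files on every Worker Node (the nodes listed by kubectl get node), changing the apiserver endpoint from 192.168.10.29:6443 to the VIP endpoint 192.168.10.28:16443.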

[root@node-1 ~]# sed -i 's#192.168.10.29:6443#192.168.10.28:16443#' /etc/kubernetes/kubelet-bootstrap.kubeconfig

[root@node-1 ~]# sed -i 's#192.168.10.29:6443#192.168.10.28:16443#' /etc/kubernetes/kubelet.json

[root@node-1 ~]# sed -i 's#192.168.10.29:6443#192.168.10.28:16443#' /etc/kubernetes/kubelet.kubeconfig


[root@node-1 ~]# sed -i 's#192.168.10.29:6443#192.168.10.28:16443#' /etc/kubernetes/kube-proxy.yaml

[root@node-1 ~]# sed -i 's#192.168.10.29:6443#192.168.10.28:16443#' /etc/kubernetes/kube-proxy.kubeconfig
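The five sed edits above amount to a literal string replacement across a fixed set of files. A minimal Python sketch of the same step that also reports whether any file still points at the old single-master endpoint; the file list is copied from the commands above, while the function names are hypothetical:

```python
from pathlib import Path
from typing import Iterable, List

OLD, NEW = "192.168.10.29:6443", "192.168.10.28:16443"

# Same files the sed commands edit on each Worker Node.
FILES = [
    "/etc/kubernetes/kubelet-bootstrap.kubeconfig",
    "/etc/kubernetes/kubelet.json",
    "/etc/kubernetes/kubelet.kubeconfig",
    "/etc/kubernetes/kube-proxy.yaml",
    "/etc/kubernetes/kube-proxy.kubeconfig",
]


def repoint(paths: Iterable[str], old: str = OLD, new: str = NEW) -> List[str]:
    """Replace old with new in each existing file; return the files that changed."""
    changed = []
    for path in map(Path, paths):
        if not path.exists():
            continue  # a missing file is skipped rather than treated as an error
        text = path.read_text()
        if old in text:
            path.write_text(text.replace(old, new))
            changed.append(str(path))
    return changed


def still_old(paths: Iterable[str], old: str = OLD) -> List[str]:
    """Files that still reference the old single-master endpoint."""
    return [str(p) for p in map(Path, paths) if p.exists() and old in p.read_text()]


if __name__ == "__main__":
    print("changed:", repoint(FILES))
    print("still pointing at master-1:", still_old(FILES))
```

Like the sed version, this only rewrites files; kubelet and kube-proxy still have to be restarted to pick up the new endpoint.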

[root@node-1 ~]# systemctl restart kubelet kube-proxy


[root@node-1 ~]# systemctl status kubelet kube-proxy

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-08-12 09:42:56 CST; 10s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 67657 (kubelet)

Tasks: 12

Memory: 57.4M

CGroup: /system.slice/kubelet.service

└─67657 /usr/local/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig --cert-dir=/etc/kubernetes/ssl --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --config=/etc/kub...

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144370 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "calico-node-token-k7k4g" (UniqueName: "kubernetes.io/s...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144507 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "config-volume" (UniqueName: "kubernetes.io/configmap/c...1958d0692677")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144540 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "lib-modules" (UniqueName: "kubernetes.io/host-path/762...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144627 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "coredns-token-fn5th" (UniqueName: "kubernetes.io/secre...1958d0692677")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144651 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "flexvol-driver-host" (UniqueName: "kubernetes.io/host-...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144732 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "default-token-xmj6q" (UniqueName: "kubernetes.io/secre...df99ab8e6be9")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144754 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "sysfs" (UniqueName: "kubernetes.io/host-path/762c1818-...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144766 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "cni-bin-dir" (UniqueName: "kubernetes.io/host-path/762...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144780 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "cni-net-dir" (UniqueName: "kubernetes.io/host-path/762...92371e6afa06")

8月 12 09:42:58 node-1 kubelet[67657]: I0812 09:42:58.144792 67657 operation_generator.go:672] MountVolume.SetUp succeeded for volume "var-run-calico" (UniqueName: "kubernetes.io/host-path/...92371e6afa06")

● kube-proxy.service - Kubernetes Kube-Proxy Server

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-08-12 09:42:56 CST; 10s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 67658 (kube-proxy)

Tasks: 6

Memory: 18.9M

CGroup: /system.slice/kube-proxy.service

└─67658 /usr/local/bin/kube-proxy --config=/etc/kubernetes/kube-proxy.yaml --alsologtostderr=true --logtostderr=false --log-dir=/var/log/kubernetes --v=2

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692151 67658 proxier.go:1036] Not syncing ipvs rules until Services and Endpoints have been received from master

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692160 67658 shared_informer.go:247] Caches are synced for service config

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692669 67658 service.go:390] Adding new service port "default/kubernetes:https" at 10.255.0.1:443/TCP

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692685 67658 service.go:390] Adding new service port "kube-system/kube-dns:dns" at 10.255.0.2:53/UDP

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692690 67658 service.go:390] Adding new service port "kube-system/kube-dns:dns-tcp" at 10.255.0.2:53/TCP

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692694 67658 service.go:390] Adding new service port "kube-system/kube-dns:metrics" at 10.255.0.2:9153/TCP

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692699 67658 service.go:390] Adding new service port "default/tomcat" at 10.255.92.3:8080/TCP

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.692767 67658 proxier.go:1067] Stale udp service kube-system/kube-dns:dns -> 10.255.0.2

8月 12 09:42:56 node-1 kube-proxy[67658]: I0812 09:42:56.738753 67658 proxier.go:2243] Opened local port "nodePort for default/tomcat" (:30080/tcp)

8月 12 09:43:04 node-1 kube-proxy[67658]: I0812 09:43:04.257220 67658 proxier.go:1067] Stale udp service kube-system/kube-dns:dns -> 10.255.0.2

Hint: Some lines were ellipsized, use -l to show in full.

Check the cluster status:

[root@master-1 ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node-1   Ready    <none>   13h   v1.20.7
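After every Worker Node has been repointed, the check above should still show all nodes Ready. For scripting that check across more nodes, a minimal sketch that parses the default kubectl get nodes table; it assumes the plain column layout shown above and treats any STATUS other than exactly Ready (for example NotReady or Ready,SchedulingDisabled) as needing attention:

```python
from typing import List


def not_ready(get_nodes_output: str) -> List[str]:
    """Names of nodes whose STATUS column is not exactly 'Ready'."""
    rows = get_nodes_output.strip().splitlines()[1:]  # skip the NAME/STATUS header row
    bad = []
    for row in rows:
        name, status = row.split()[:2]
        if status != "Ready":
            bad.append(name)
    return bad


if __name__ == "__main__":
    sample = "NAME     STATUS   ROLES    AGE   VERSION\nnode-1   Ready    <none>   13h   v1.20.7\n"
    print(not_ready(sample))  # []
```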


浙公网安备 33010602011771号 (Zhejiang public security network filing No. 33010602011771)
