Spark and the Java API (7): Computing PageRank

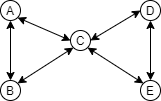

本文介紹如何基于Spark和Java来计算PageRan。我们为以下图求解PageRank:
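For reference, the graph can also be written down as text. The sketch below uses the same five input lines that the PageRank class later in this article passes to Spark, where each line is a page followed by the comma-separated pages it links to. The class name `GraphSketch` and the `parse` helper are hypothetical names chosen for illustration; they are not part of the article's project.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, for illustration only.
class GraphSketch {

    // Parses lines such as "A B,C" into a map of page -> outgoing links.
    static Map<String, List<String>> parse(List<String> lines) {
        Map<String, List<String>> graph = new LinkedHashMap<>();
        for (String line : lines) {
            // Split into the page id and the rest of the line.
            String[] parts = line.split("\\s+", 2);
            graph.put(parts[0], Arrays.asList(parts[1].split(",")));
        }
        return graph;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = parse(Arrays.asList(
                "A B,C", "B A,C", "C A,B,D,E", "D C,E", "E C,D"));
        // Print each page with the pages it links to.
        graph.forEach((page, out) -> System.out.println(page + " -> " + out));
    }
}
```

Reading the graph this way makes the out-degree of each page explicit, which is the quantity PageRank divides a page's rank by when distributing it to its neighbors.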

Create the project

Create a Maven project with the following pom.xml:

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.github.ralgond</groupId>
  <artifactId>spark-java-api</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <dependencies>
    <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core -->
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.12</artifactId>
      <version>3.1.1</version>
      <scope>provided</scope>
    </dependency>
  </dependencies>
  <build>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <version>3.0</version>
        <configuration>
          <source>1.8</source>
          <target>1.8</target>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>

Write the Java class PageRank

Create a package named com.github.ralgond.sparkjavaapi, and in it create a class named PageRank with the following content:

package com.github.ralgond.sparkjavaapi;

import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

import scala.Tuple2;

public class PageRank {

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setAppName("PageRank Application");
        JavaSparkContext sc = new JavaSparkContext(conf);

        // Each line is "<page> <comma-separated pages it links to>".
        JavaRDD<String> data1 = sc.parallelize(Arrays.asList("A B,C", "B A,C", "C A,B,D,E", "D C,E", "E C,D"));

        // Parse each line into (page, list of outgoing links).
        JavaPairRDD<String, List<String>> links = data1.mapToPair(x -> {
            String[] a = x.split("\\s+", 2);
            String[] b = a[1].split(",");
            return new Tuple2<String, List<String>>(a[0], Arrays.asList(b));
        });

        // Every page starts with a rank of 1.0.
        JavaPairRDD<String, Double> ranks = links.mapValues(x -> 1.0);

        // Run 10 iterations of the PageRank update.
        for (int i = 0; i < 10; i++) {
            // Pair each page's outgoing links with its current rank.
            JavaPairRDD<String, Tuple2<List<String>, Double>> tmp = links.join(ranks);

            // Each page splits its current rank evenly among the pages it links to.
            JavaPairRDD<String, Double> contributions = tmp.flatMapToPair(x -> {
                List<String> ls = x._2._1;
                Double rank = x._2._2;
                List<Tuple2<String, Double>> ret = new ArrayList<>();
                for (String dest : ls) {
                    ret.add(new Tuple2<String, Double>(dest, rank / ls.size()));
                }
                return ret.iterator();
            });

            // Sum the contributions per page and apply the damping factor 0.85.
            ranks = contributions.reduceByKey((x, y) -> x + y).mapValues(v -> 0.15 + 0.85 * v);
        }

        System.out.println(ranks.collect());

        sc.close();
    }
}
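To see what each iteration does without a Spark cluster, the same update can be sketched in plain Java. Each page sends `rank / outDegree` to every page it links to, and a page's new rank is `0.15 + 0.85 * (sum of received contributions)`. The class `PageRankSketch` and its methods are hypothetical names introduced here for illustration; this is a single-machine approximation of the job above under those assumptions, not a replacement for it.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical single-machine sketch of the Spark job's update rule.
class PageRankSketch {

    // Same graph as the Spark job: page -> pages it links to.
    static Map<String, List<String>> graph() {
        Map<String, List<String>> g = new LinkedHashMap<>();
        g.put("A", Arrays.asList("B", "C"));
        g.put("B", Arrays.asList("A", "C"));
        g.put("C", Arrays.asList("A", "B", "D", "E"));
        g.put("D", Arrays.asList("C", "E"));
        g.put("E", Arrays.asList("C", "D"));
        return g;
    }

    static Map<String, Double> compute(Map<String, List<String>> links, int iterations) {
        // Every page starts with a rank of 1.0.
        Map<String, Double> ranks = new LinkedHashMap<>();
        for (String page : links.keySet()) {
            ranks.put(page, 1.0);
        }

        for (int i = 0; i < iterations; i++) {
            // Sum of contributions received by each page (the reduceByKey step).
            Map<String, Double> sums = new LinkedHashMap<>();
            for (Map.Entry<String, List<String>> e : links.entrySet()) {
                Double rank = ranks.get(e.getKey());
                if (rank == null) {
                    continue; // mirrors the inner join: pages without a rank contribute nothing
                }
                double share = rank / e.getValue().size();
                for (String dest : e.getValue()) {
                    sums.merge(dest, share, Double::sum);
                }
            }
            // Apply the damping factor 0.85 (the mapValues step).
            Map<String, Double> next = new LinkedHashMap<>();
            for (Map.Entry<String, Double> e : sums.entrySet()) {
                next.put(e.getKey(), 0.15 + 0.85 * e.getValue());
            }
            ranks = next;
        }
        return ranks;
    }

    public static void main(String[] args) {
        System.out.println(compute(graph(), 10));
    }
}
```

Because every page in this graph has both inbound and outbound links, the ranks keep summing to 5.0 across iterations, and C, which has four inbound links, ends up with the highest rank. As in the Spark version, a page with no inbound links would drop out of `ranks` after the first iteration; that case does not occur in this graph.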

Build and run

Run mvn clean package to build the jar spark-java-api-0.0.1-SNAPSHOT.jar.

From the Spark installation directory, run the following command:

bin\spark-submit --class com.github.ralgond.sparkjavaapi.PageRank {..}\spark-java-api-0.0.1-SNAPSHOT.jar

The job then prints the computed ranks to the console.
