记录一次查询数据库数据写入磁盘文件的优化

项目场景:

项目上有个需求,需要把自己系统上数据库的数据写入到共享盘的txt文件给其他系统供数,这里称作 卸数,所以下文也都称之为 卸数

问题描述

刚开始做卸数功能,就是流水线下去,jdbc查询数据库->转换数据格式->存到List集合->写入磁盘

刚开始上线的时候,数据量不大,一切都是那么的美好。但是随着时间的推移,数据开始逐渐增多,由于表中存在大字段,所以即使十几万的数据写到磁盘也有好几个G,程序运行大概需要二十几分钟,线上就开始不断超时预警

如果数据量继续增长,由于是全部放到List集合中,很可能出现OOM

原因分析:

先看下第一版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

/**

* 第一版,单线程先读后写

* 数据量:50000

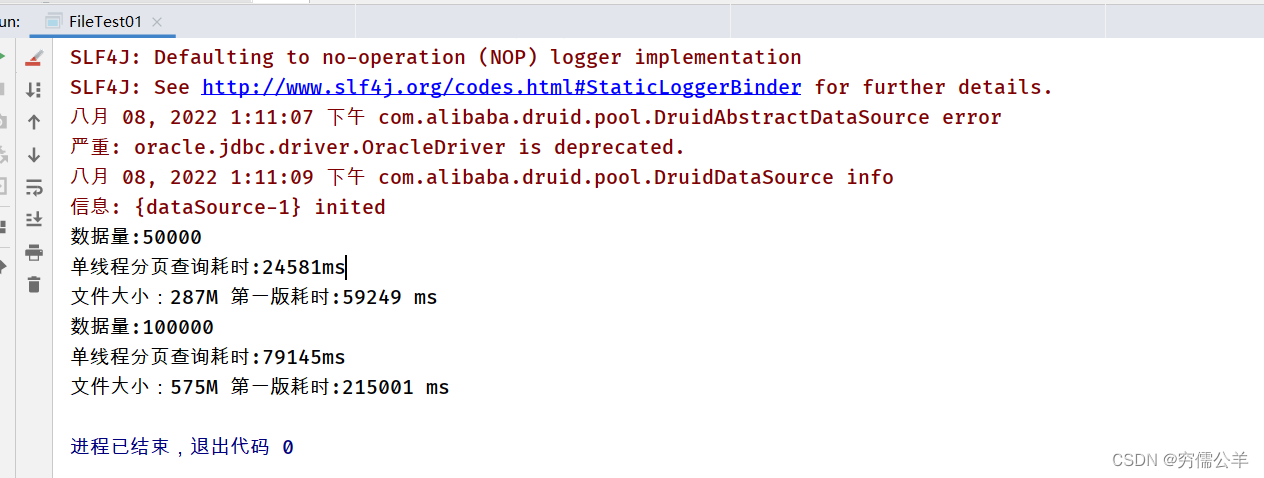

* 单线程分页查询耗时:25078ms

* 文件大小:287M 第一版耗时:62643 ms

* 数据量:100000

* 单线程分页查询耗时:81071ms

* 文件大小:575M 第一版耗时:218091 ms

*

* @throws Exception

*/

private static void first() throws Exception {

File file = new File("test.del");

file.delete();

long line = 0;

long start = System.currentTimeMillis();

getData(select());

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!data.isEmpty()) {

Map<String, Object> map = data.poll();

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第一版耗时:" + (end - start) + " ms ");

}

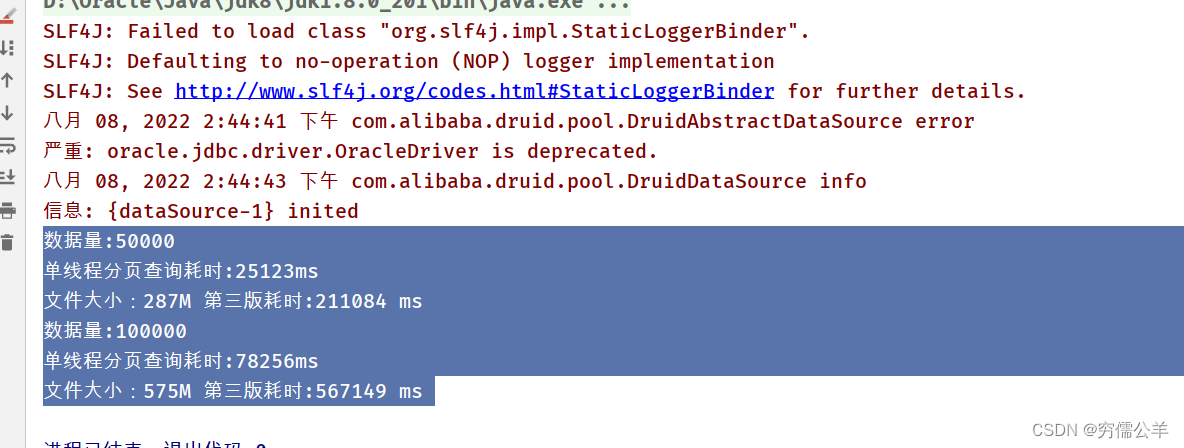

本地模拟运行情况,如下:

实际运行情况,如下:

云上5w数据用了7分钟左右,10w用了12分钟左右。首先怀疑是串行循环写入速度太慢,先把循环和写入分离为两个线程

第二版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

/**

* 第二版:读写分离

* 数据量:50000

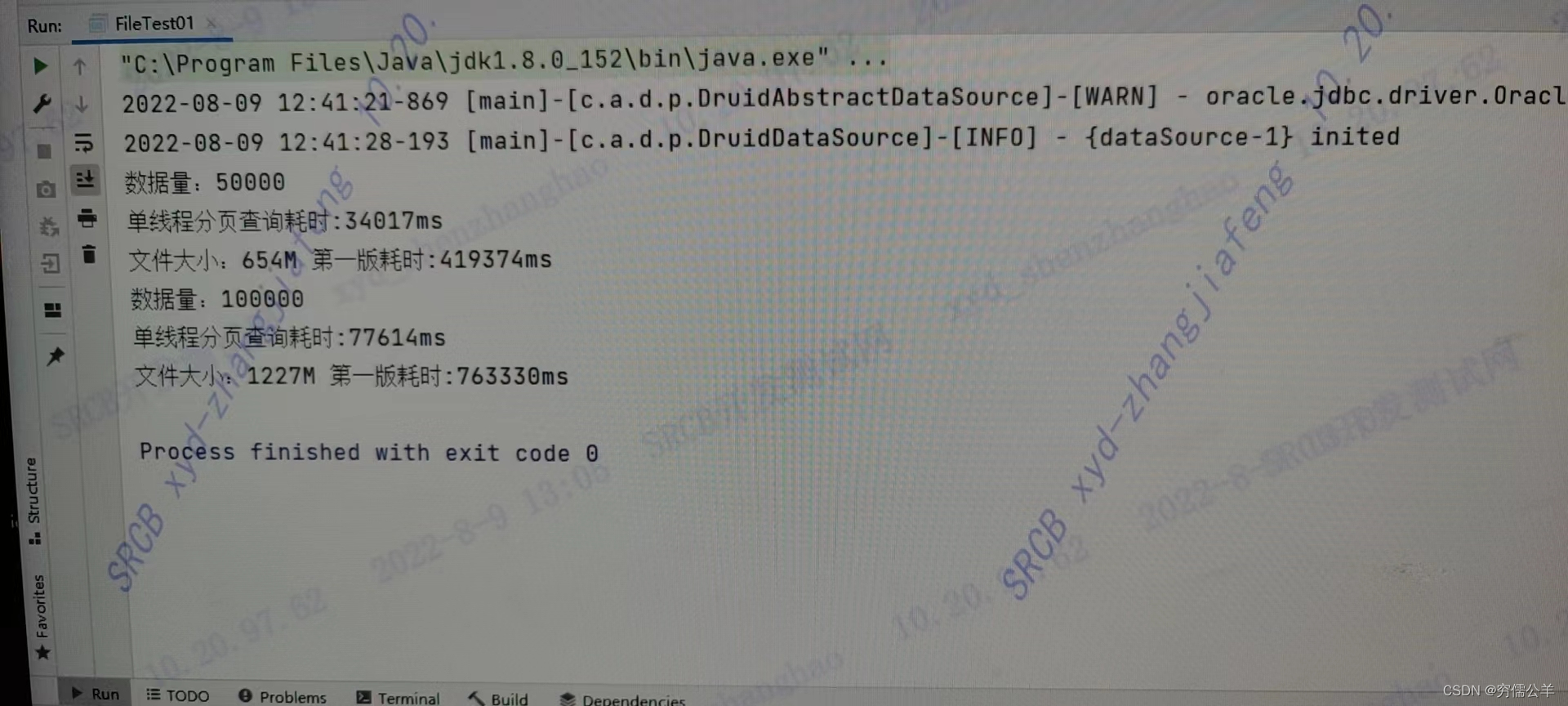

* 单线程分页查询耗时:25921ms

* 文件大小:287M 第二版耗时:63351 ms

* 数据量:100000

* 单线程分页查询耗时:78366ms

* 文件大小:575M 第二版耗时:204218 ms

*/

private static void second() throws Exception {

File file = new File("test1.del");

file.delete();

long start = System.currentTimeMillis();

getData(select());

ExecutorService executorService = Executors.newSingleThreadExecutor();

BlockingQueue<StringJoiner> queue = new LinkedBlockingQueue<>();

AtomicBoolean flag = new AtomicBoolean(false);

executorService.submit(() -> {

int line = 0;

while (!data.isEmpty()) {

Map<String, Object> map = data.poll();

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

queue.add(sj);

}

flag.set(true);

});

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!flag.get() || !queue.isEmpty()) {

StringJoiner sj = queue.poll();

if (sj != null) {

bf.write(sj.toString());

} else {

Thread.sleep(5_00);

}

}

} catch (Exception e) {

e.printStackTrace();

}

executorService.shutdown();

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第二版耗时:" + (end - start) + " ms ");

}

本地模拟运行情况,如下:

实际运行情况,如下:

发现无论是本地还是云上没有实质性的提升,云上甚至更慢了,负优化了属于是

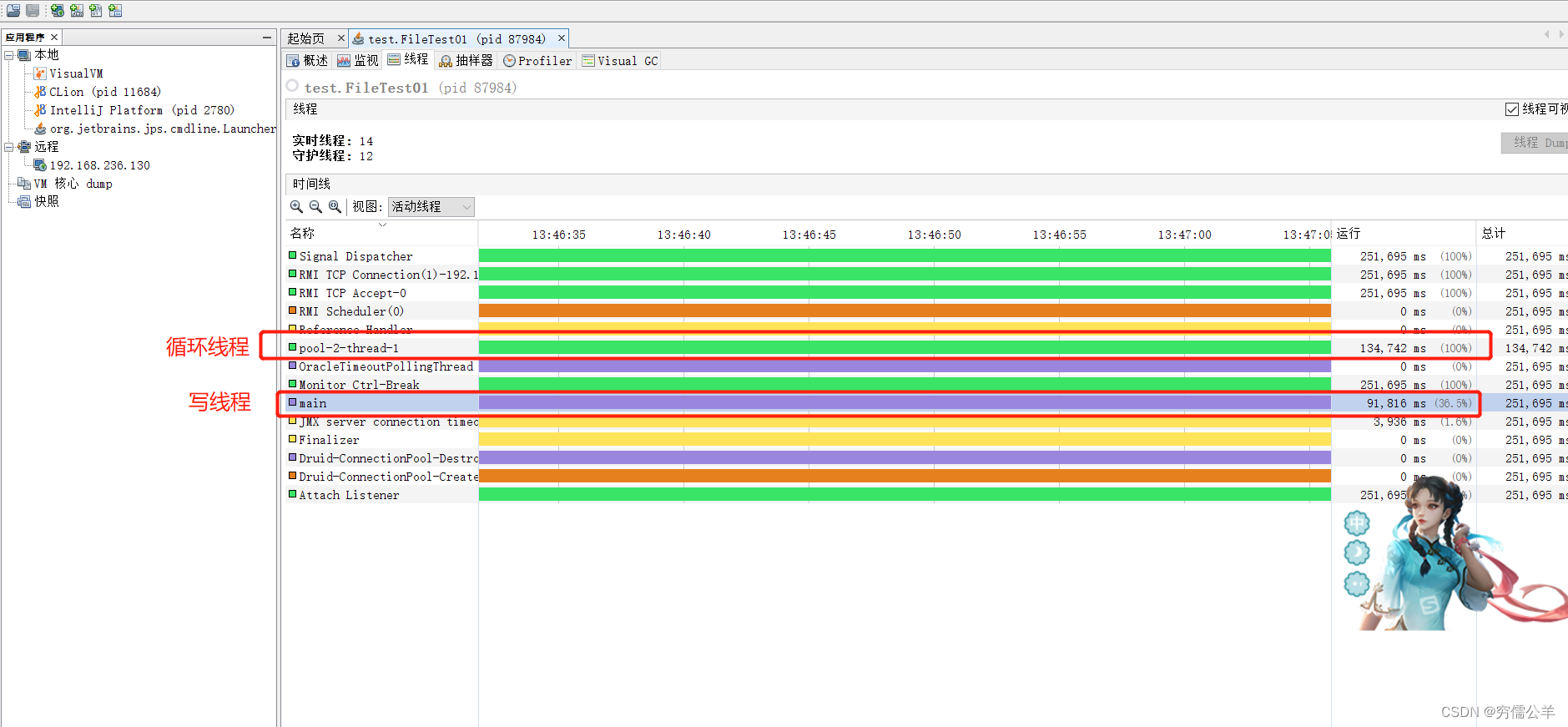

使用jvisualvm查看了下读写线程的情况,发现写线程大部分时间处于休眠状态,怀疑是循环转换大字段过慢导致的,于是把foreach循环改成并行流循环

第三版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

/**

* 第三版:并行流加快循环速度写入

* 数据量:50000

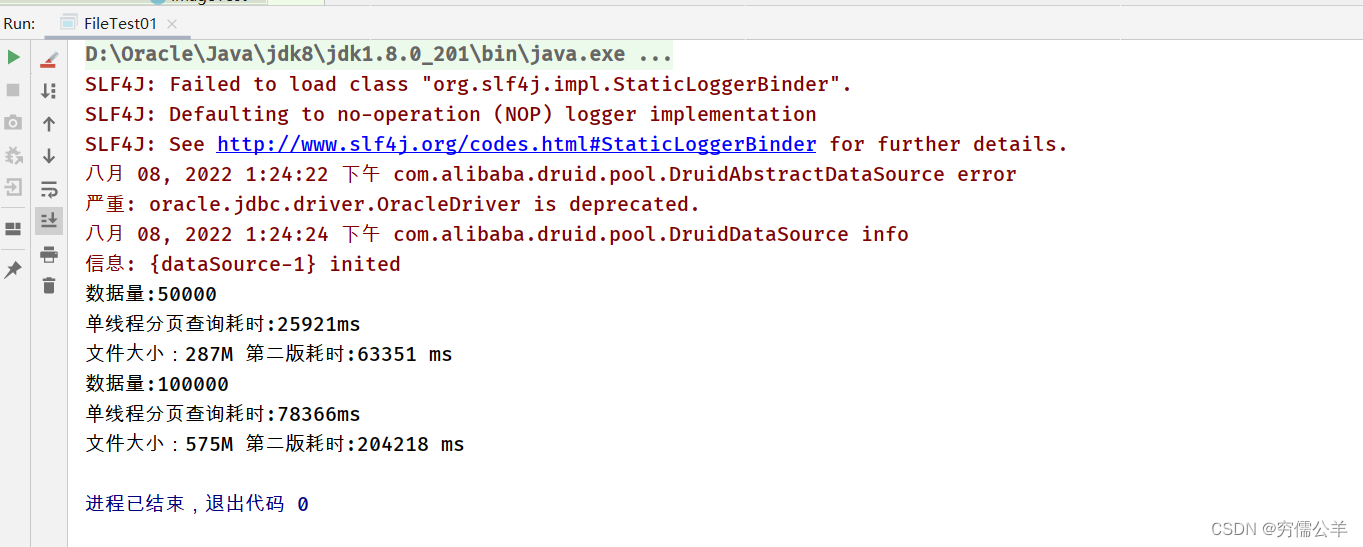

* 单线程分页查询耗时:24282ms

* 文件大小:287M 第三版耗时:165395 ms

* 数据量:100000

* 单线程分页查询耗时:78425ms

* 文件大小:575M 第三版耗时:492103 ms

*

* @throws Exception

*/

private static void third() throws Exception {

File file = new File("test2.del");

file.delete();

long start = System.currentTimeMillis();

getData(select());

ExecutorService executorService = Executors.newSingleThreadExecutor();

AtomicLong line = new AtomicLong(0);

AtomicBoolean flag = new AtomicBoolean(false);

BlockingQueue<StringJoiner> queue = new LinkedBlockingQueue<>();

executorService.submit(() -> {

data.parallelStream().forEach(map -> {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line.incrementAndGet()));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

queue.add(sj);

});

flag.set(true);

});

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!flag.get() || !queue.isEmpty()) {

StringJoiner sj = queue.poll();

if (sj != null) {

bf.write(sj.toString());

}

}

} catch (Exception e) {

e.printStackTrace();

}

executorService.shutdown();

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第三版耗时:" + (end - start) + " ms ");

data.clear();

}

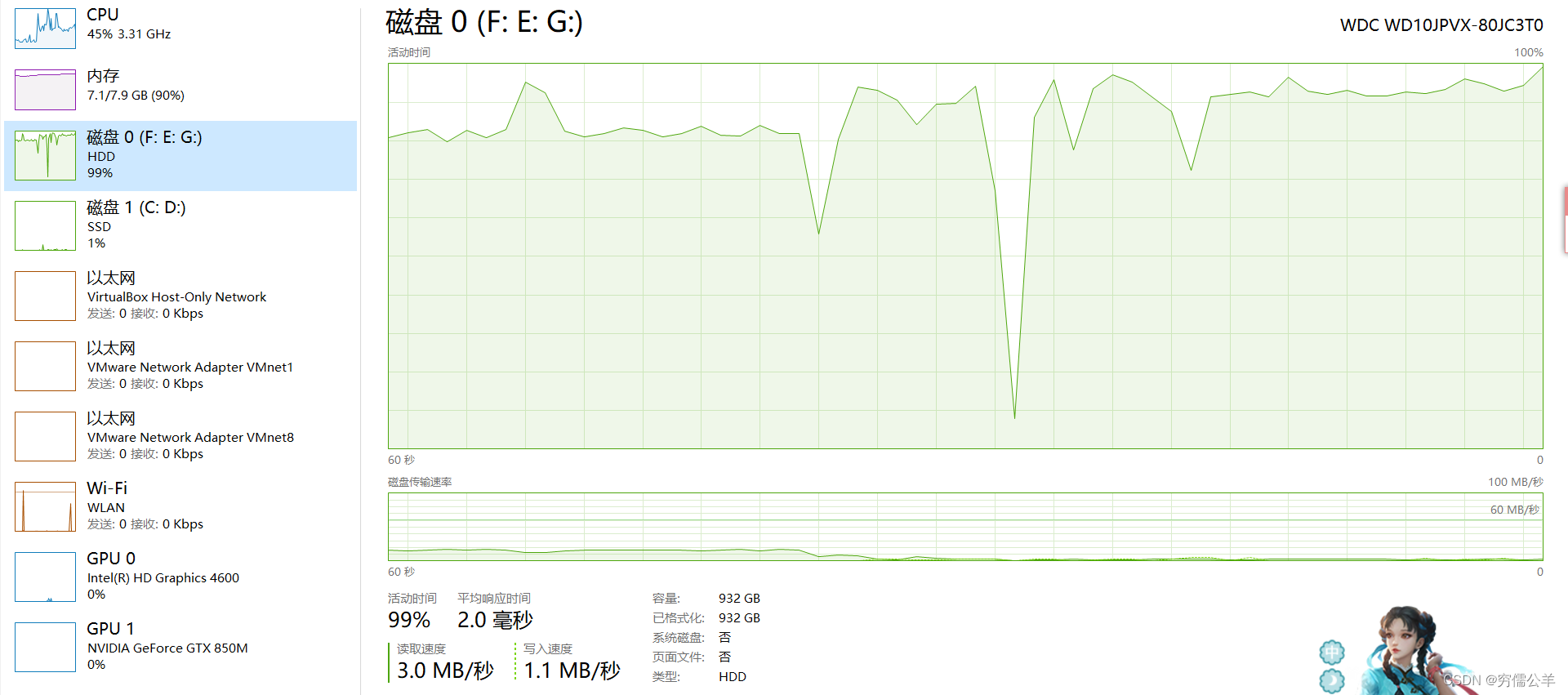

本地模拟运行情况,如下:

本地由于磁盘跑不动了,所以,还不如以前的

实际运行情况,如下:

云上明显有所好转,比之之前快了许多。但是明显还不是最优解,由于现在查询和写入是串行的,查询之后又要额外循环一遍,而且存在OOM的情况。所以可以把读写并行化,一边查询,一边写入

第四版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

private static volatile boolean isFinish = false;

private static void parallelGetData(ResultSet rs, CountDownLatch cdl, boolean isPark) throws SQLException {

try {

long start = System.currentTimeMillis();

int columnCount = rs.getMetaData().getColumnCount();

while (rs.next()) {

Map<String, Object> map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

if (rs.getObject(i) instanceof Clob) {

map.put(rs.getMetaData().getColumnName(i), clobToString((Clob) rs.getObject(i)));

} else {

map.put(rs.getMetaData().getColumnName(i), rs.getObject(i));

}

}

if (isPark) {

if (data.size() > 5_0000) {

LockSupport.park();

}

}

data.add(map);

}

long end = System.currentTimeMillis();

System.out.println("单线程分页查询耗时:" + (end - start) + "ms");

} catch (Exception e) {

e.printStackTrace();

} finally {

rs.close();

rs.getStatement().close();

if (cdl != null)

cdl.countDown();

}

}

/**

* 第四版:bio读写并行

* 数据量:50000

* 单线程分页查询耗时:32781ms

* 文件大小:287M 第四版耗时:34207 ms

* 数据量:100000

* 单线程分页查询耗时:125139ms

* 文件大小:575M 第四版耗时:125863 ms

*

* @throws Exception

*/

private static void four() {

isFinish = false;

File file = new File("test3.del");

file.delete();

ExecutorService executorService = Executors.newSingleThreadExecutor();

long start = System.currentTimeMillis();

executorService.submit(() -> {

try {

parallelGetData(select(), null, false);

} catch (SQLException e) {

e.printStackTrace();

} finally {

isFinish = true;

}

});

long line = 0;

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map == null) {

continue;

}

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

sj.add("\"" + map.get(key) + "\"");

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第四版耗时:" + (end - start) + " ms ");

}

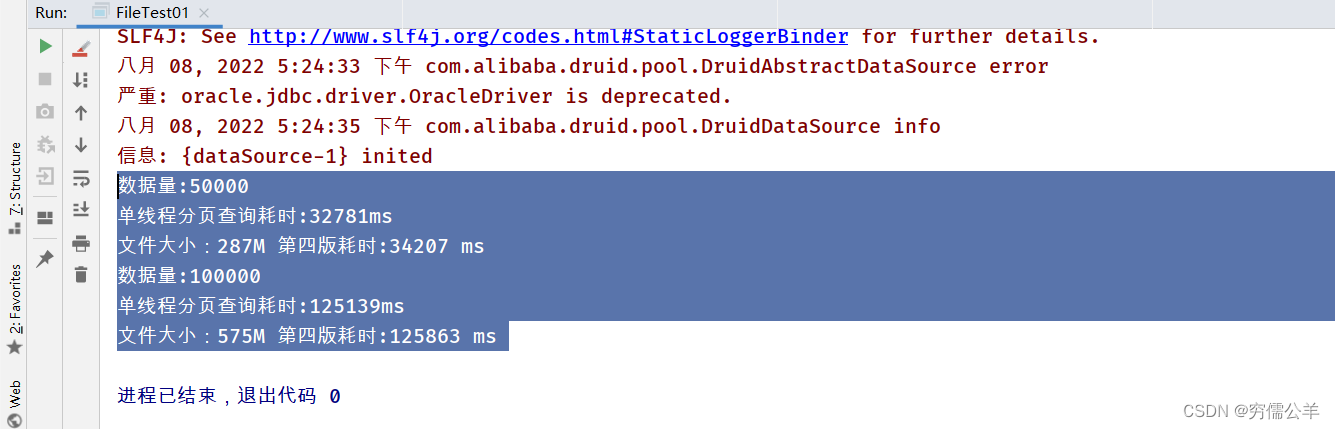

本地模拟运行情况,如下:

实际运行情况,如下:

较之第三版,又快了一丢丢。从结果上看,写不比读慢多少,主要时间还是在查询上边,所以尝试把单线程查询改成多线程查询

第五版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

private static volatile boolean isFinish = false;

/**

* 第五版:多线程读,单线程写(bio)

* 数据量:50000

* 单线程分页查询耗时:150690ms

* 单线程分页查询耗时:165977ms

* 单线程分页查询耗时:176273ms

* 单线程分页查询耗时:171153ms

* 单线程分页查询耗时:157838ms

* 文件大小:287M 第五版耗时:187235 ms

* 数据量:100000

* 单线程分页查询耗时:184424ms

* 单线程分页查询耗时:207333ms

* 单线程分页查询耗时:186972ms

* 单线程分页查询耗时:370479ms

* 单线程分页查询耗时:226568ms

* 文件大小:575M 第五版耗时:395681 ms

*/

private static void five(int threadNum, int pageSize) {

isFinish = false;

File file = new File("test5.del");

long start = System.currentTimeMillis();

read(threadNum, pageSize);

long line = 0;

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map == null) {

continue;

}

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

} finally {

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第五版耗时:" + (end - start) + " ms ");

}

private static void parallelGetData(ResultSet rs, CountDownLatch cdl, boolean isPark) throws SQLException {

try {

long start = System.currentTimeMillis();

int columnCount = rs.getMetaData().getColumnCount();

while (rs.next()) {

Map<String, Object> map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

if (rs.getObject(i) instanceof Clob) {

map.put(rs.getMetaData().getColumnName(i), clobToString((Clob) rs.getObject(i)));

} else {

map.put(rs.getMetaData().getColumnName(i), rs.getObject(i));

}

}

if (isPark) {

if (data.size() > 5_0000) {

LockSupport.park();

}

}

data.add(map);

}

long end = System.currentTimeMillis();

System.out.println("单线程分页查询耗时:" + (end - start) + "ms");

} catch (Exception e) {

e.printStackTrace();

} finally {

rs.close();

rs.getStatement().close();

if (cdl != null)

cdl.countDown();

}

}

private static void read(final int threadNum, final int pageSize) {

CountDownLatch cdl = new CountDownLatch(threadNum);

ExecutorService executorService = Executors.newFixedThreadPool(threadNum + 1);

for (int i = 0; i < threadNum; i++) {

int startPage = i * pageSize;

int endPage = (i + 1) * pageSize;

executorService.submit(() -> {

try {

parallelGetData(select(startPage, endPage), cdl, false);

} catch (SQLException e) {

e.printStackTrace();

}

});

}

executorService.submit(() -> {

try {

cdl.await();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

executorService.shutdown();

isFinish = true;

}

});

}

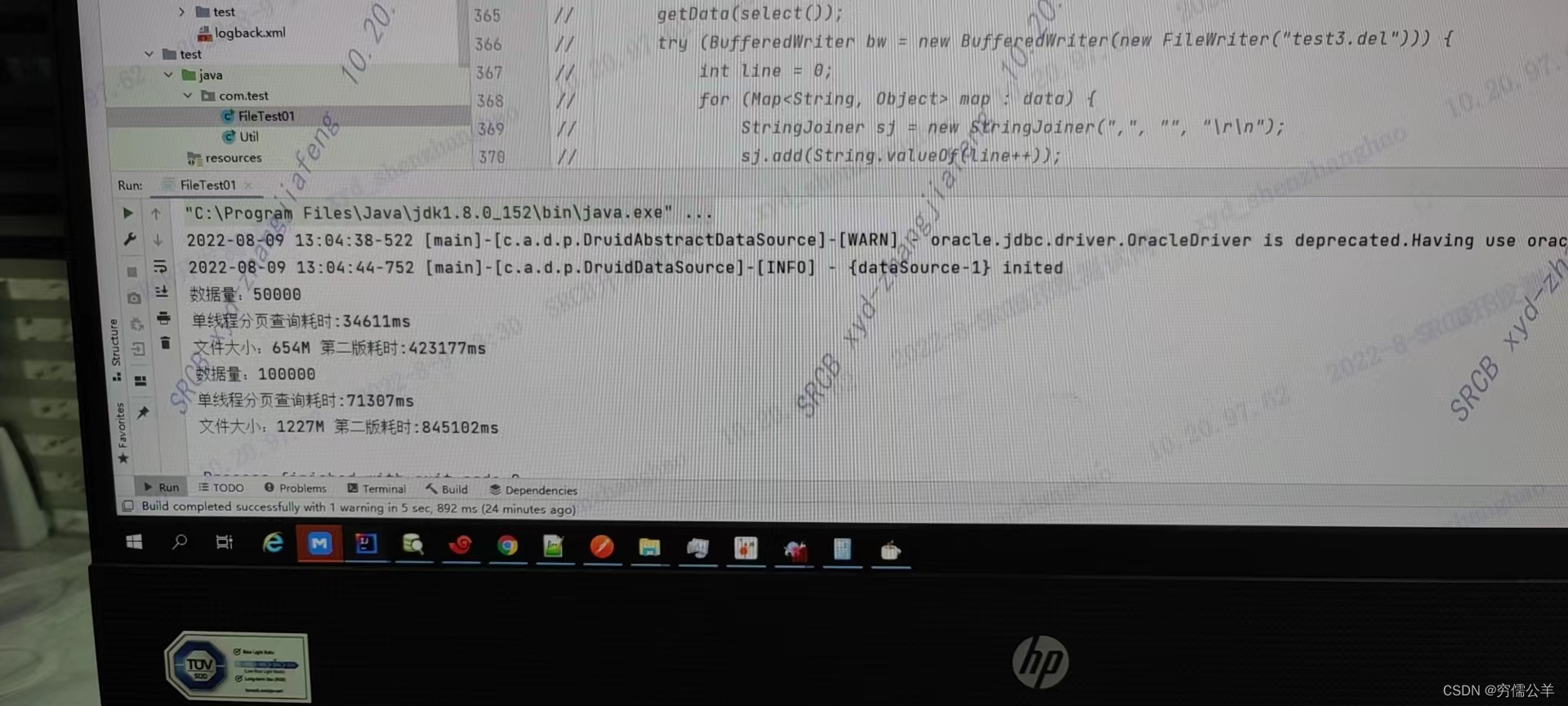

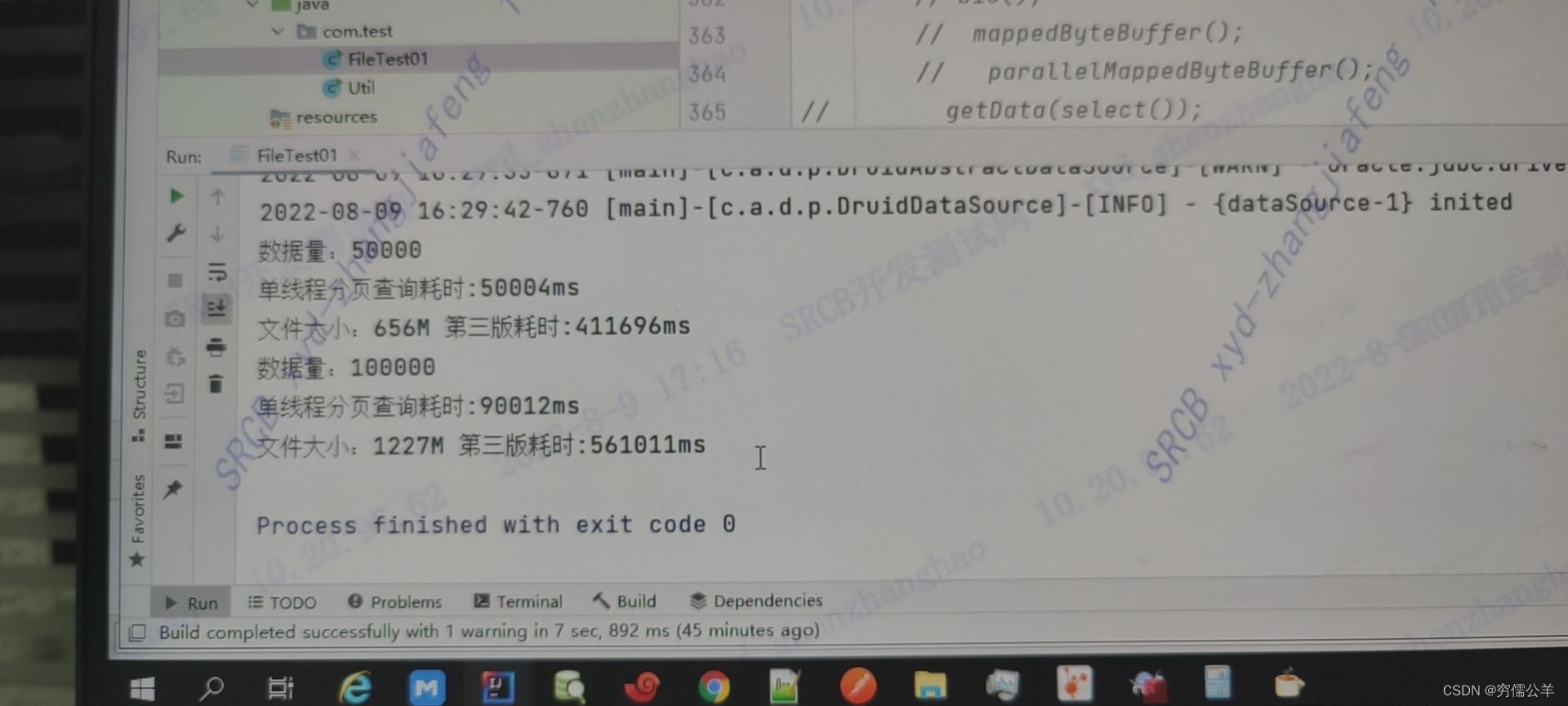

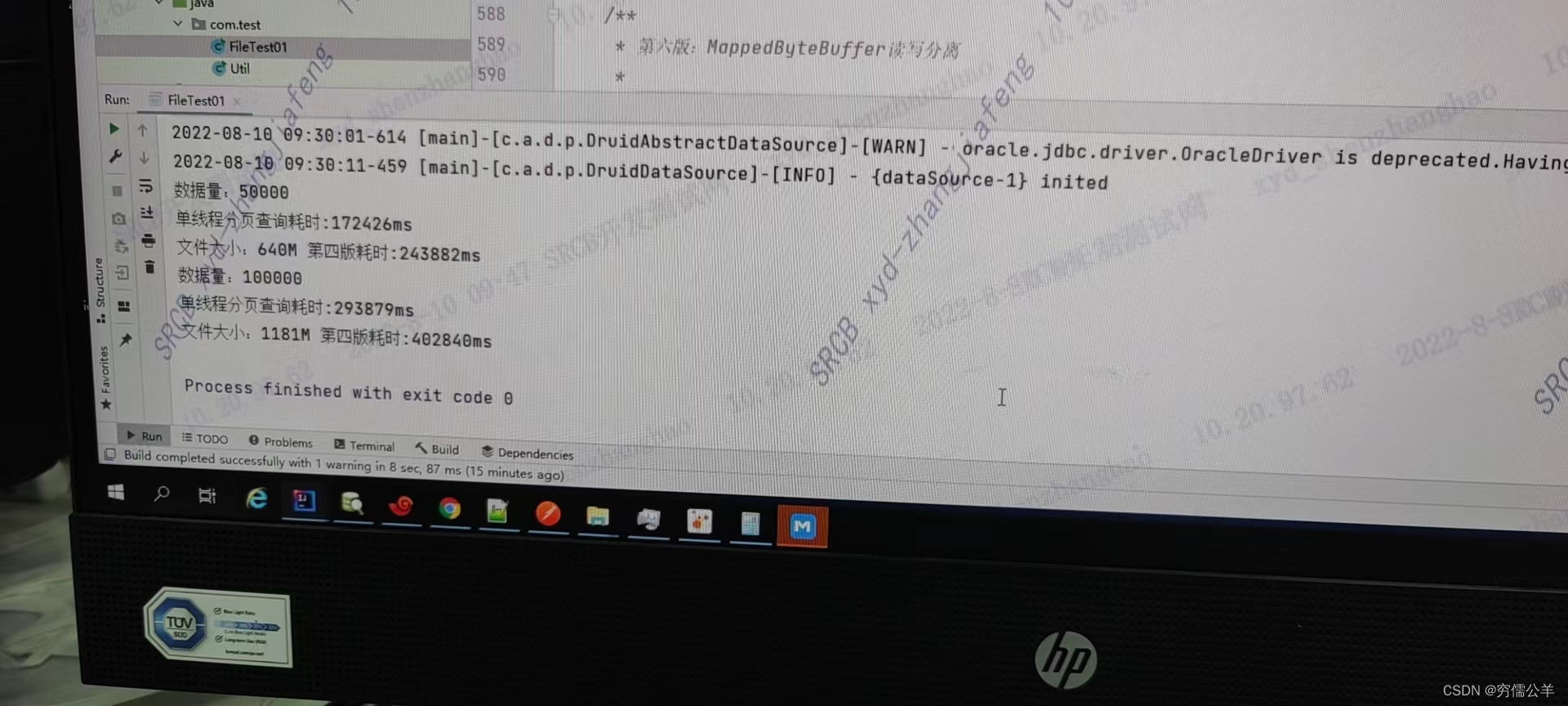

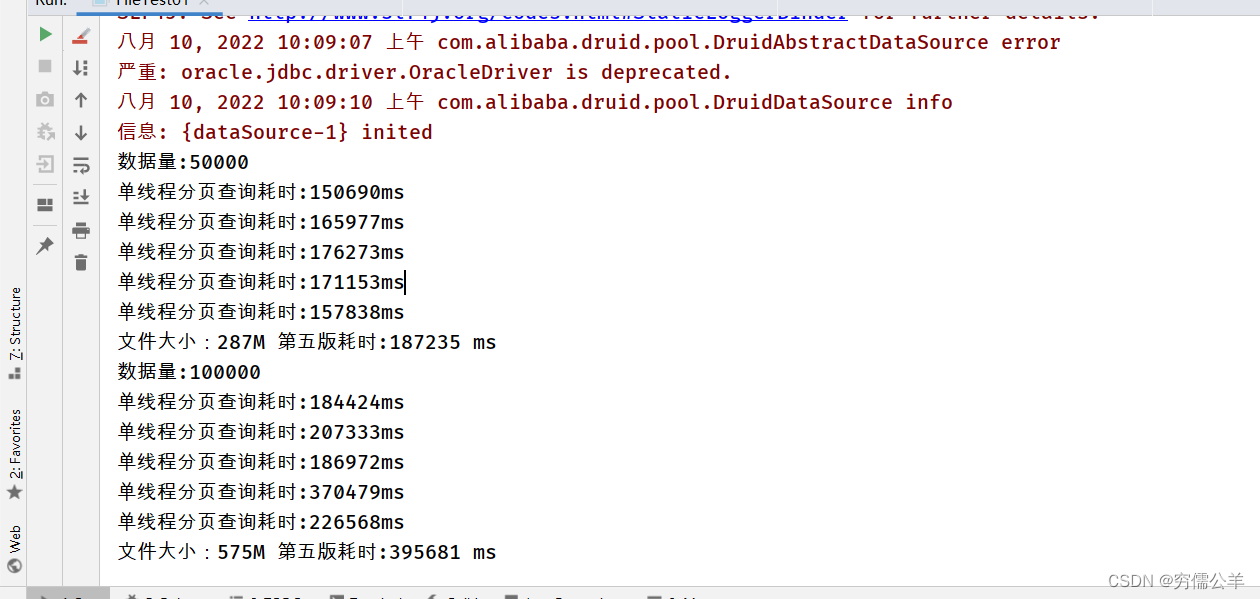

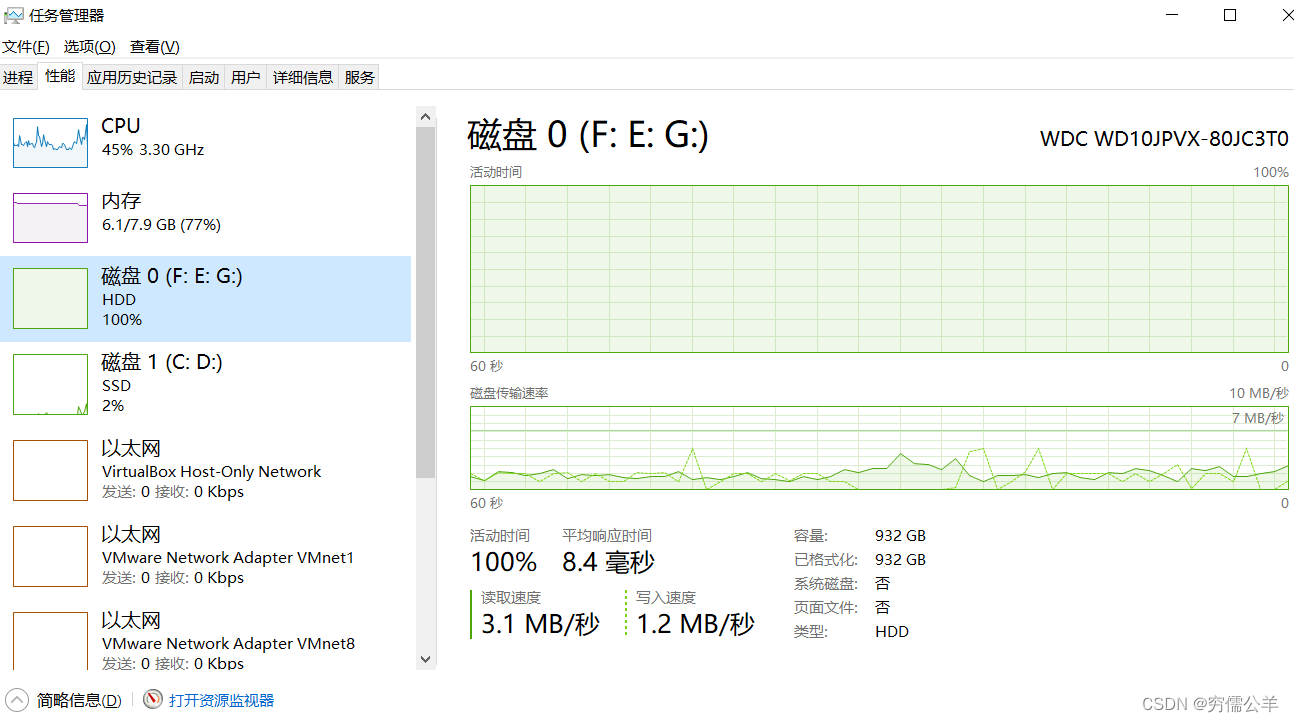

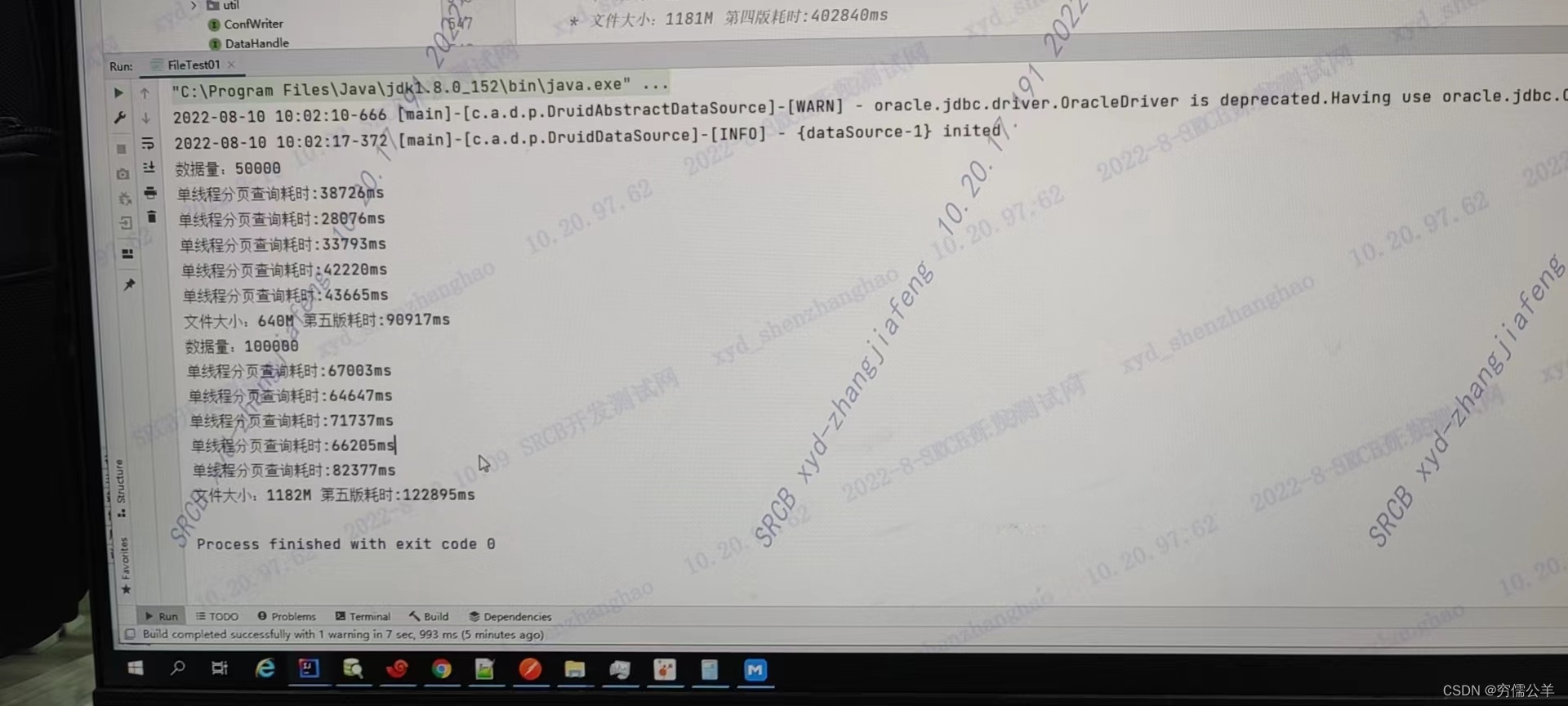

本地模拟运行情况,如下:

本地还是因为磁盘成为瓶颈,多线程读并未提高查询的速度

实际运行情况,如下:

云上可以很明显的看出差距了,5w数据只要1.5分钟左右,10w数据2分钟左右。数据量增长一倍,耗时却没有翻倍

在看结果,发现最慢的查询线程要82377ms,但是最终耗时却在122895ms,尝试用NIO提高写入速度

第六版的代码,如下:

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

private static volatile boolean isFinish = false;

private static void read(final int threadNum, final int pageSize) {

CountDownLatch cdl = new CountDownLatch(threadNum);

ExecutorService executorService = Executors.newFixedThreadPool(threadNum + 1);

for (int i = 0; i < threadNum; i++) {

int startPage = i * pageSize;

int endPage = (i + 1) * pageSize;

executorService.submit(() -> {

try {

parallelGetData(select(startPage, endPage), cdl, false);

} catch (SQLException e) {

e.printStackTrace();

}

});

}

executorService.submit(() -> {

try {

cdl.await();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

executorService.shutdown();

isFinish = true;

}

});

}

private static void parallelGetData(ResultSet rs, CountDownLatch cdl, boolean isPark) throws SQLException {

try {

long start = System.currentTimeMillis();

int columnCount = rs.getMetaData().getColumnCount();

while (rs.next()) {

Map<String, Object> map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

if (rs.getObject(i) instanceof Clob) {

map.put(rs.getMetaData().getColumnName(i), clobToString((Clob) rs.getObject(i)));

} else {

map.put(rs.getMetaData().getColumnName(i), rs.getObject(i));

}

}

if (isPark) {

if (data.size() > 5_0000) {

LockSupport.park();

}

}

data.add(map);

}

long end = System.currentTimeMillis();

System.out.println("单线程分页查询耗时:" + (end - start) + "ms");

} catch (Exception e) {

e.printStackTrace();

} finally {

rs.close();

rs.getStatement().close();

if (cdl != null)

cdl.countDown();

}

}

/**

* 第六版:多线程读,单线程写(nio)

*/

private static void six() {

isFinish = false;

File file = new File("test6.del");

long start = System.currentTimeMillis();

read(5, 3_0000);

long line = 0;

try (RandomAccessFile memoryAccessFile = new RandomAccessFile(file, "rw")) {

FileChannel fileChannel = memoryAccessFile.getChannel();

long position = 0;

MappedByteBuffer mappedByteBuffer = null;

List<byte[]> deque = new LinkedList<>();

long length = 0;

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map != null) {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

byte[] bs = sj.toString().getBytes(StandardCharsets.UTF_8);

if (length + bs.length > MAX_SIZE) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

deque.clear();

position += length;

length = 0;

}

length += bs.length;

deque.add(bs);

}

}

if (!deque.isEmpty()) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

position += length;

deque.clear();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第六版耗时:" + (end - start) + " ms ");

}

本地已经没有试的必要了,直接看云上的情况吧

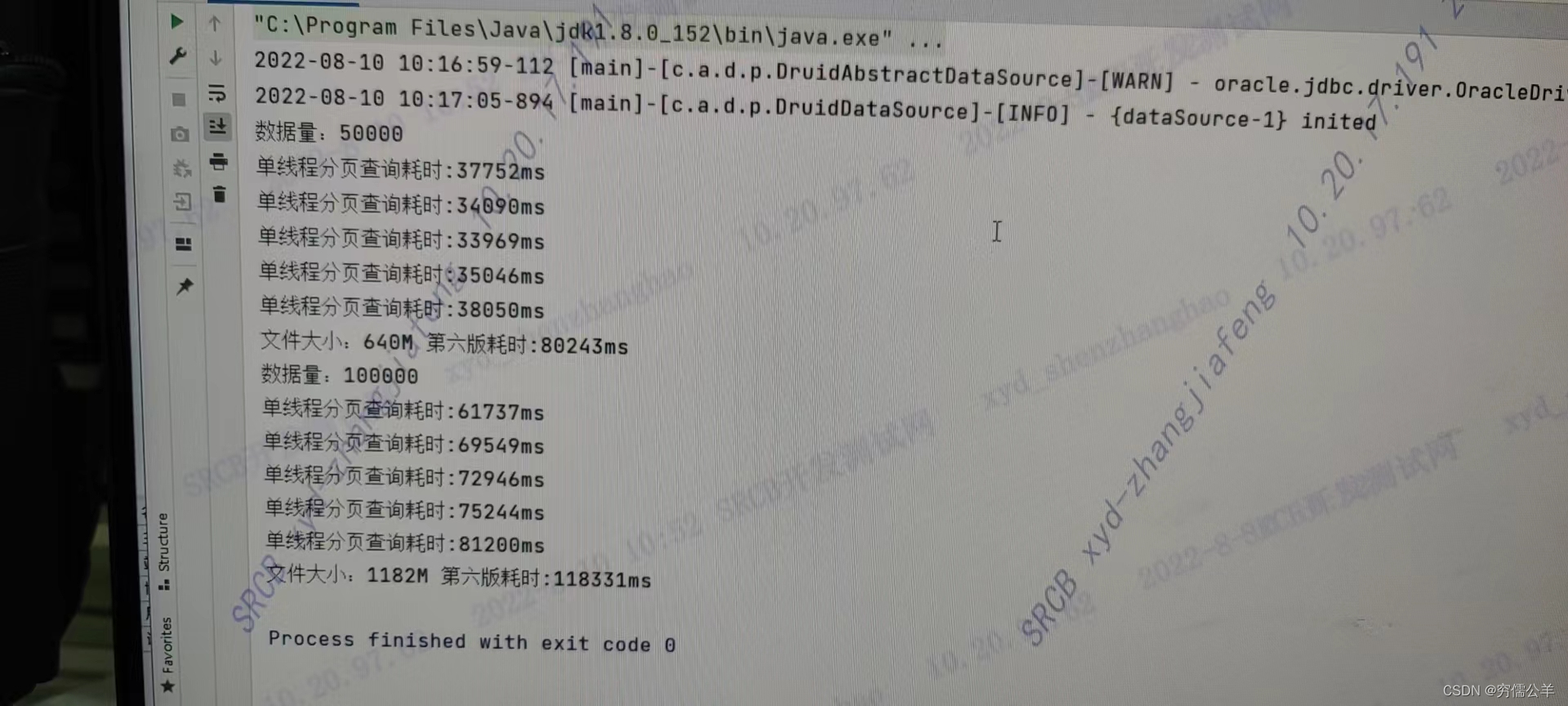

实际运行情况,如下:

貌似又快了一丢丢

解决方案:

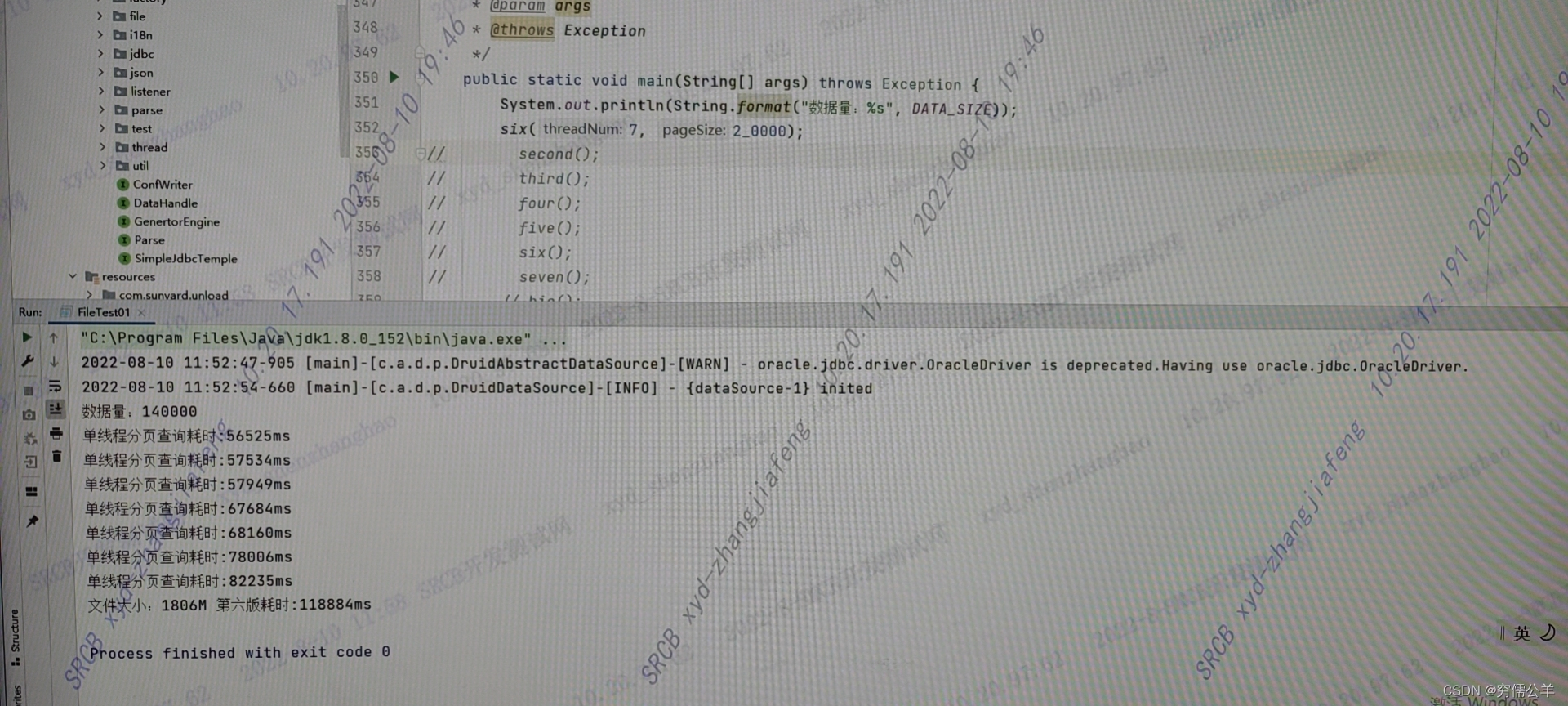

采用最终版对全量数据做个测试,大概14w左右的数据量,开7个查询线程

最终运行结果,如下:

14w数据,大约2g左右,执行时间大概在2分钟左右,如果还想再快,是不是还可以采用多线程写入?不过现在差强人意,应该足够使用了

注意:这个读写速度差不多,是因为数据库存在大字段,如果没有存在大字段,很快就查询完毕了,所以还是会有OOM的问题,出现这种情况,可以尝试多线程写入,或者是让读线程达到阈值后park一下

全部测试代码:

package test;

import com.alibaba.druid.pool.DruidDataSourceFactory;

import javax.sql.DataSource;

import java.io.*;

import java.nio.ByteBuffer;

import java.nio.MappedByteBuffer;

import java.nio.channels.FileChannel;

import java.nio.charset.StandardCharsets;

import java.sql.Clob;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.*;

import java.util.concurrent.*;

import java.util.concurrent.atomic.AtomicBoolean;

import java.util.concurrent.atomic.AtomicLong;

import java.util.concurrent.atomic.AtomicReference;

import java.util.concurrent.locks.LockSupport;

/**

* @author hqd

* @title: FileTest01

* @projectName test-001

* @description: TODO

* @date 2022-07-26

*/

public class FileTest01 {

private static final BlockingQueue<Map<String, Object>> data = new LinkedBlockingQueue<>();

private static volatile boolean isFinish = false;

private static final int MAX_SIZE = 1024 * 1024 * 8;

private static final int PARALLE_MAX_SIZE = 1024 * 1024 * 64;

private static int DATA_SIZE = 5_0000;

static DataSource ds = null;

static {

Properties pro = new Properties();

try (InputStream is = FileTest01.class.getClassLoader().getResourceAsStream("druid.properties");) {

pro.load(is);

//2.获取连接池对象

ds = DruidDataSourceFactory.createDataSource(pro);

ds.getConnection();

//3.获取连接

} catch (Exception e) {

e.printStackTrace();

}

}

private static void bio() {

isFinish = false;

new File("test.del").delete();

final AtomicReference<Thread> readThread = new AtomicReference<>();

ExecutorService executorService = Executors.newSingleThreadExecutor();

long start = System.currentTimeMillis();

executorService.submit(() -> {

try {

readThread.set(Thread.currentThread());

getData(select());

} catch (SQLException e) {

e.printStackTrace();

} finally {

isFinish = true;

}

});

long line = 0;

try (BufferedWriter bf = new BufferedWriter(new FileWriter("test.del"))) {

while (!isFinish || !data.isEmpty()) {

if (data.size() < 1_000 && readThread.get() != null) {

LockSupport.unpark(readThread.get());

}

Map<String, Object> map = data.poll();

if (map == null) {

continue;

}

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

sj.add("\"" + map.get(key) + "\"");

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

long end = System.currentTimeMillis();

System.out.println("bio耗时:" + (end - start) + "ms");

data.clear();

}

private static void channel(RandomAccessFile memoryAccessFile, ResultSet resultSet) throws SQLException, IOException {

long start = System.currentTimeMillis();

FileChannel fileChannel = memoryAccessFile.getChannel();

ByteBuffer buf = ByteBuffer.allocate(1024 * 1024 * 4);

int line = 0;

while (resultSet.next()) {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

String id = resultSet.getString(1);

String clob = clobToString(resultSet.getClob(2));

sj.add(String.valueOf(line++)).add("\"" + id + "\"").add("\"" + clob + "\"");

byte[] bs = sj.toString().getBytes(StandardCharsets.UTF_8);

if (buf.position() + bs.length > buf.capacity()) {

buf.flip();

while (buf.hasRemaining()) {

fileChannel.write(buf);

}

buf.clear();

}

buf.put(bs);

}

long end = System.currentTimeMillis();

System.out.println("fc耗时:" + (end - start) + "ms");

}

private static void parallelMappedByteBuffer() throws Exception {

new File("test2.del").delete();

long appStart = System.currentTimeMillis();

getData(select());

try (RandomAccessFile memoryAccessFile = new RandomAccessFile("test2.del", "rw")) {

FileChannel fileChannel = memoryAccessFile.getChannel();

parallelInvoke(fileChannel);

} catch (IOException e) {

e.printStackTrace();

} finally {

}

long appEnd = System.currentTimeMillis();

System.out.println("并行流耗时:" + (appEnd - appStart) + "ms");

}

private static void mappedByteBuffer() {

isFinish = false;

new File("test1.del").delete();

long appStart = System.currentTimeMillis();

ExecutorService executorService = Executors.newSingleThreadExecutor();

final AtomicReference<Thread> readThread = new AtomicReference<>();

executorService.submit(() -> {

try {

readThread.set(Thread.currentThread());

getData(select());

} catch (SQLException e) {

e.printStackTrace();

} finally {

isFinish = true;

}

});

try (RandomAccessFile memoryAccessFile = new RandomAccessFile("test1.del", "rw")) {

FileChannel fileChannel = memoryAccessFile.getChannel();

invoke(fileChannel, readThread.get());

} catch (IOException e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

long appEnd = System.currentTimeMillis();

System.out.println("读写并行耗时:" + (appEnd - appStart) + "ms");

}

private static void parallelInvoke(FileChannel fileChannel) {

final AtomicLong line = new AtomicLong(0);

AtomicBoolean flag = new AtomicBoolean(false);

BlockingQueue<StringJoiner> deque = new LinkedBlockingQueue<>();

ExecutorService executorService = Executors.newSingleThreadExecutor();

executorService.submit(() -> {

data.parallelStream().forEach(map -> {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line.getAndIncrement()));

for (String key : map.keySet()) {

sj.add("\"" + map.get(key) + "\"");

}

deque.add(sj);

});

flag.set(true);

});

MappedByteBuffer mappedByteBuffer = null;

List<byte[]> list = new LinkedList<>();

long length = 0;

long position = 0;

try {

while (!flag.get() || !deque.isEmpty()) {

StringJoiner sj = deque.poll();

if (sj == null) {

continue;

}

byte[] bytes = sj.toString().getBytes(StandardCharsets.UTF_8);

if (length + bytes.length > PARALLE_MAX_SIZE) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

mappedByteBuffer.force();

list.clear();

position += length;

length = 0;

}

length += bytes.length;

list.add(bytes);

}

if (!list.isEmpty()) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : list) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

list.clear();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

}

private static void invoke(FileChannel fileChannel, Thread readThread) {

try {

long line = 0;

long position = 0;

MappedByteBuffer mappedByteBuffer = null;

List<byte[]> deque = new LinkedList<>();

long length = 0;

while (!isFinish || !data.isEmpty()) {

if (data.size() < 1_000 && readThread != null) {

LockSupport.unpark(readThread);

}

Map<String, Object> map = data.poll();

if (map != null) {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

sj.add("\"" + map.get(key) + "\"");

}

byte[] bs = sj.toString().getBytes(StandardCharsets.UTF_8);

if (length + bs.length > MAX_SIZE) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

deque.clear();

position += length;

length = 0;

}

length += bs.length;

deque.add(bs);

}

}

if (!deque.isEmpty()) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

position += length;

deque.clear();

}

} catch (Exception e) {

e.printStackTrace();

}

}

private static void read(final int threadNum, final int pageSize) {

CountDownLatch cdl = new CountDownLatch(threadNum);

ExecutorService executorService = Executors.newFixedThreadPool(threadNum + 1);

for (int i = 0; i < threadNum; i++) {

int startPage = i * pageSize;

int endPage = (i + 1) * pageSize;

executorService.submit(() -> {

try {

parallelGetData(select(startPage, endPage), cdl, false);

} catch (SQLException e) {

e.printStackTrace();

}

});

}

executorService.submit(() -> {

try {

cdl.await();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

executorService.shutdown();

isFinish = true;

}

});

}

/**

* 30w 查询耗时:2499023ms 循环耗时:1736967ms Mapped耗时:1776848ms

*

* @param args

* @throws Exception

*/

public static void main(String[] args) throws Exception {

// long end = System.currentTimeMillis();

// System.out.println("多线程分页查询耗时:" + (end - start) + "ms");

// getData(select());

// bio();

// mappedByteBuffer();

// parallelMappedByteBuffer();

System.out.println(String.format("数据量:%s", DATA_SIZE));

five(5, 1_0000);

DATA_SIZE = 10_0000;

System.out.println(String.format("数据量:%s", DATA_SIZE));

five(5, 2_0000);

// second();

// third();

// four();

// five();

// six();

}

/**

* 第一版,单线程先读后写

* 数据量:50000

* 单线程分页查询耗时:25078ms

* 文件大小:287M 第一版耗时:62643 ms

* 数据量:100000

* 单线程分页查询耗时:81071ms

* 文件大小:575M 第一版耗时:218091 ms

*

* @throws Exception

*/

private static void first() throws Exception {

File file = new File("test.del");

file.delete();

long line = 0;

long start = System.currentTimeMillis();

getData(select());

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!data.isEmpty()) {

Map<String, Object> map = data.poll();

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第一版耗时:" + (end - start) + " ms ");

}

/**

* 第二版:读写分离

* 数据量:50000

* 单线程分页查询耗时:25921ms

* 文件大小:287M 第二版耗时:63351 ms

* 数据量:100000

* 单线程分页查询耗时:78366ms

* 文件大小:575M 第二版耗时:204218 ms

*/

private static void second() throws Exception {

File file = new File("test1.del");

file.delete();

long start = System.currentTimeMillis();

getData(select());

ExecutorService executorService = Executors.newSingleThreadExecutor();

BlockingQueue<StringJoiner> queue = new LinkedBlockingQueue<>();

AtomicBoolean flag = new AtomicBoolean(false);

executorService.submit(() -> {

int line = 0;

while (!data.isEmpty()) {

Map<String, Object> map = data.poll();

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

queue.add(sj);

}

flag.set(true);

});

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!flag.get() || !queue.isEmpty()) {

StringJoiner sj = queue.poll();

if (sj != null) {

bf.write(sj.toString());

} else {

Thread.sleep(5_00);

}

}

} catch (Exception e) {

e.printStackTrace();

}

executorService.shutdown();

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第二版耗时:" + (end - start) + " ms ");

}

/**

* 第三版:并行流加快循环速度写入

* 数据量:50000

* 单线程分页查询耗时:24282ms

* 文件大小:287M 第三版耗时:165395 ms

* 数据量:100000

* 单线程分页查询耗时:78425ms

* 文件大小:575M 第三版耗时:492103 ms

*

* @throws Exception

*/

private static void third() throws Exception {

File file = new File("test2.del");

file.delete();

long start = System.currentTimeMillis();

getData(select());

ExecutorService executorService = Executors.newSingleThreadExecutor();

AtomicLong line = new AtomicLong(0);

AtomicBoolean flag = new AtomicBoolean(false);

BlockingQueue<StringJoiner> queue = new LinkedBlockingQueue<>();

executorService.submit(() -> {

data.parallelStream().forEach(map -> {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line.incrementAndGet()));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

queue.add(sj);

});

flag.set(true);

});

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!flag.get() || !queue.isEmpty()) {

StringJoiner sj = queue.poll();

if (sj != null) {

bf.write(sj.toString());

}

}

} catch (Exception e) {

e.printStackTrace();

}

executorService.shutdown();

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第三版耗时:" + (end - start) + " ms ");

data.clear();

}

/**

* 第四版:bio读写并行

* 数据量:50000

* 单线程分页查询耗时:32781ms

* 文件大小:287M 第四版耗时:34207 ms

* 数据量:100000

* 单线程分页查询耗时:125139ms

* 文件大小:575M 第四版耗时:125863 ms

*

* @throws Exception

*/

private static void four() {

isFinish = false;

File file = new File("test3.del");

file.delete();

ExecutorService executorService = Executors.newSingleThreadExecutor();

long start = System.currentTimeMillis();

executorService.submit(() -> {

try {

parallelGetData(select(), null, false);

} catch (SQLException e) {

e.printStackTrace();

} finally {

isFinish = true;

}

});

long line = 0;

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map == null) {

continue;

}

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

sj.add("\"" + map.get(key) + "\"");

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第四版耗时:" + (end - start) + " ms ");

}

/**

* 第五版:多线程读,单线程写(bio)

* 数据量:50000

* 单线程分页查询耗时:150690ms

* 单线程分页查询耗时:165977ms

* 单线程分页查询耗时:176273ms

* 单线程分页查询耗时:171153ms

* 单线程分页查询耗时:157838ms

* 文件大小:287M 第五版耗时:187235 ms

* 数据量:100000

* 单线程分页查询耗时:184424ms

* 单线程分页查询耗时:207333ms

* 单线程分页查询耗时:186972ms

* 单线程分页查询耗时:370479ms

* 单线程分页查询耗时:226568ms

* 文件大小:575M 第五版耗时:395681 ms

*/

private static void five(int threadNum, int pageSize) {

isFinish = false;

File file = new File("test5.del");

long start = System.currentTimeMillis();

read(threadNum, pageSize);

long line = 0;

try (BufferedWriter bf = new BufferedWriter(new FileWriter(file))) {

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map == null) {

continue;

}

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

bf.write(sj.toString());

}

} catch (Exception e) {

e.printStackTrace();

} finally {

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第五版耗时:" + (end - start) + " ms ");

}

/**

* 第六版:多线程读,单线程写(nio)

*/

private static void six() {

isFinish = false;

File file = new File("test6.del");

long start = System.currentTimeMillis();

read(5, 3_0000);

long line = 0;

try (RandomAccessFile memoryAccessFile = new RandomAccessFile(file, "rw")) {

FileChannel fileChannel = memoryAccessFile.getChannel();

long position = 0;

MappedByteBuffer mappedByteBuffer = null;

List<byte[]> deque = new LinkedList<>();

long length = 0;

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map != null) {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

byte[] bs = sj.toString().getBytes(StandardCharsets.UTF_8);

if (length + bs.length > MAX_SIZE) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

deque.clear();

position += length;

length = 0;

}

length += bs.length;

deque.add(bs);

}

}

if (!deque.isEmpty()) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

position += length;

deque.clear();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第六版耗时:" + (end - start) + " ms ");

}

/**

* nio读写并行

* 数据量:50000

* 单线程分页查询耗时:32250ms

* 文件大小:287M 第五版耗时:33274 ms

* 数据量:100000

* 单线程分页查询耗时:91807ms

* 文件大小:575M 第五版耗时:92478 ms

*

* @throws Exception

*/

private static void seven() {

isFinish = false;

File file = new File("test4.del");

file.delete();

long start = System.currentTimeMillis();

ExecutorService executorService = Executors.newSingleThreadExecutor();

executorService.submit(() -> {

try {

parallelGetData(select(), null, false);

} catch (SQLException e) {

e.printStackTrace();

} finally {

isFinish = true;

}

});

try (RandomAccessFile memoryAccessFile = new RandomAccessFile(file, "rw")) {

FileChannel fileChannel = memoryAccessFile.getChannel();

long line = 0;

long position = 0;

MappedByteBuffer mappedByteBuffer = null;

List<byte[]> deque = new LinkedList<>();

long length = 0;

while (!isFinish || !data.isEmpty()) {

Map<String, Object> map = data.poll();

if (map != null) {

StringJoiner sj = new StringJoiner(",", "", "\r\n");

sj.add(String.valueOf(line++));

for (String key : map.keySet()) {

if (map.get(key) instanceof Clob) {

sj.add("\"" + clobToString((Clob) map.get(key)) + "\"");

} else {

sj.add("\"" + map.get(key) + "\"");

}

}

byte[] bs = sj.toString().getBytes(StandardCharsets.UTF_8);

if (length + bs.length > MAX_SIZE) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

deque.clear();

position += length;

length = 0;

}

length += bs.length;

deque.add(bs);

}

}

if (!deque.isEmpty()) {

mappedByteBuffer = fileChannel.map(FileChannel.MapMode.READ_WRITE, position, length);

for (byte[] b : deque) {

mappedByteBuffer.put(b);

}

mappedByteBuffer.force();

position += length;

deque.clear();

}

} catch (IOException e) {

e.printStackTrace();

} finally {

executorService.shutdown();

}

long end = System.currentTimeMillis();

System.out.println("文件大小:" + (file.length() / 1024 / 1024) + "M 第六版耗时:" + (end - start) + " ms ");

}

private static ResultSet select() throws SQLException {

return select(0, DATA_SIZE);

}

private static ResultSet select(int start, int end) throws SQLException {

PreparedStatement pstmt = ds.getConnection().prepareStatement("SELECT * FROM " +

"( SELECT A.*, ROWNUM RN FROM (SELECT empno,ename,job,mgr,hiredate,sal,comm,deptno,INFO FROM TEST_EMP ) A WHERE ROWNUM < ? ) WHERE RN >= ?");

pstmt.setInt(1, end);

pstmt.setInt(2, start);

pstmt.setFetchSize(1_000);

return pstmt.executeQuery();

}

private static void getData(ResultSet rs) throws SQLException {

getData(rs, null, false);

}

private static void getData(ResultSet rs, CountDownLatch cdl) throws SQLException {

getData(rs, cdl, false);

}

private static void parallelGetData(ResultSet rs, CountDownLatch cdl, boolean isPark) throws SQLException {

try {

long start = System.currentTimeMillis();

int columnCount = rs.getMetaData().getColumnCount();

while (rs.next()) {

Map<String, Object> map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

if (rs.getObject(i) instanceof Clob) {

map.put(rs.getMetaData().getColumnName(i), clobToString((Clob) rs.getObject(i)));

} else {

map.put(rs.getMetaData().getColumnName(i), rs.getObject(i));

}

}

if (isPark) {

if (data.size() > 5_0000) {

LockSupport.park();

}

}

data.add(map);

}

long end = System.currentTimeMillis();

System.out.println("单线程分页查询耗时:" + (end - start) + "ms");

} catch (Exception e) {

e.printStackTrace();

} finally {

rs.close();

rs.getStatement().close();

if (cdl != null)

cdl.countDown();

}

}

private static void getData(ResultSet rs, CountDownLatch cdl, boolean isPark) throws SQLException {

try {

long start = System.currentTimeMillis();

int columnCount = rs.getMetaData().getColumnCount();

while (rs.next()) {

Map<String, Object> map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

map.put(rs.getMetaData().getColumnName(i), rs.getObject(i));

}

if (isPark) {

if (data.size() > 5_0000) {

LockSupport.park();

}

}

data.add(map);

}

long end = System.currentTimeMillis();

System.out.println("单线程分页查询耗时:" + (end - start) + "ms");

} catch (Exception e) {

e.printStackTrace();

} finally {

rs.close();

rs.getStatement().close();

if (cdl != null)

cdl.countDown();

}

}

private static String clobToString(Clob clob) {

try {

Reader is = clob.getCharacterStream();// 得到流

BufferedReader br = new BufferedReader(is);

String s = br.readLine();

StringBuffer sb = new StringBuffer();

while (s != null) {// 执行循环将字符串全部取出付值给StringBuffer由StringBuffer转成STRING

sb.append(s + "\n");

s = br.readLine();

}

return sb.toString();

} catch (Exception e) {

}

return "";

}

}

连接池配置

driverClassName=oracle.jdbc.driver.OracleDriver

url=jdbc:oracle:thin:@127.0.0.1:1521/orcl

username=scott

password=scott

#初始化连接数量

initialSize=10

#最大连接数

maxActive=20

#最大等待时间

maxWait=3000

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.hqd</groupId>

<artifactId>test-001</artifactId>

<version>1.0-SNAPSHOT</version>

<dependencies>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.6</version>

</dependency>

<dependency>

<groupId>com.oracle</groupId>

<artifactId>ojdbc6</artifactId>

<version>11.2.0.3</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>utf8</encoding>

</configuration>

</plugin>

</plugins>

</build>

</project>

如果数据量继续增长,瓶颈在IO或者是网络,这时候是否就得考虑分库分表和集群了?( ̄▽ ̄)~

浙公网安备 33010602011771号

浙公网安备 33010602011771号