Collecting Iceberg lineage (Spark jobs) with spline-spark-agent

I. Background

Spark is used to operate on Iceberg (through a HiveCatalog), and the Spline Agent is used to collect the lineage of the Spark jobs.

II. Preparation

1. Download the source code: https://github.com/AbsaOSS/spline-spark-agent.git

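2. During testing I found some bugs that broke the normal execution of Spark jobs, so I modified the agent. The fully modified Agent source is available at the address below; just download and build it (make sure to select version 2.0!)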

https://gitee.com/LzMingYueShanPao/spline-spark-agent.git

3. Versions

Spark: 3.0.3

Iceberg: 0.12.1

III. Build

1. Upload the code to a Linux machine, go into the spline-spark-agent directory, and run the build command:

mvn clean install -DskipTests

2. After step 1 succeeds, go into the spline-spark-agent/bundle-3.0 directory and run the build command:

mvn clean install -DskipTests

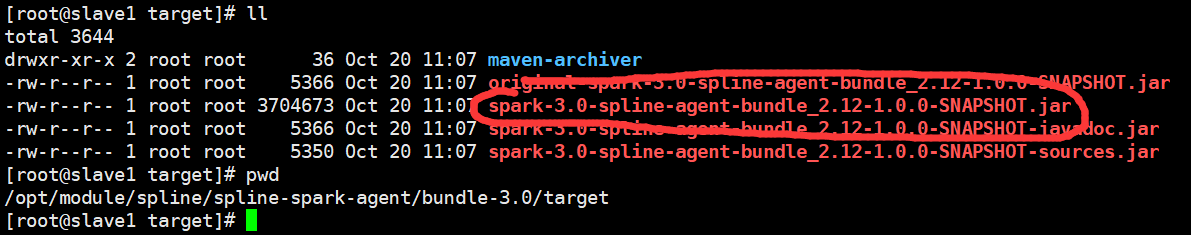

3、查看结果

4. Reference: https://github.com/AbsaOSS/spline-spark-agent

IV. Usage

1. Create the directory:

mkdir -p /opt/module/spline

2. Upload spark-3.0-spline-agent-bundle_2.12-1.0.0-SNAPSHOT.jar to /opt/module/spline

3. Send the lineage logs to Kafka

spark-shell \
  --jars /opt/module/spline/spark-3.0-spline-agent-bundle_2.12-1.0.0-SNAPSHOT.jar \
  --conf spark.sql.queryExecutionListeners=za.co.absa.spline.harvester.listener.SplineQueryExecutionListener \
  --conf spark.spline.lineageDispatcher=kafka \
  --conf spark.spline.lineageDispatcher.kafka.topic=spline_test \
  --conf spark.spline.lineageDispatcher.kafka.producer.bootstrap.servers=xxx.xxx.xxx.100:9092,xxx.xxx.xxx.101:9092,xxx.xxx.xxx.102:9092 \
  --conf spark.spline.lineageDispatcher.kafka.sasl.mechanism=SCRAM-SHA-256 \
  --conf spark.spline.lineageDispatcher.kafka.security.protocol=PLAINTEXT \
  --conf spark.spline.lineageDispatcher.kafka.sasl.jaas.config=

4. Print the lineage logs to the console

spark-shell \
  --jars /opt/module/spline/spark-3.0-spline-agent-bundle_2.12-1.0.0-SNAPSHOT.jar \
  --conf spark.sql.queryExecutionListeners=za.co.absa.spline.harvester.listener.SplineQueryExecutionListener \
  --conf spark.spline.lineageDispatcher=console

5. Collect lineage for Iceberg

Download address for iceberg-spark3-runtime-0.12.1.jar: https://search.maven.org/remotecontent?filepath=org/apache/iceberg/iceberg-spark3-runtime/0.12.1/iceberg-spark3-runtime-0.12.1.jar

bin/spark-shell --deploy-mode client \
  --jars /opt/module/iceberg/iceberg-spark3-runtime-0.12.1.jar,/opt/module/spline/spark-3.0-spline-agent-bundle_2.12-1.0.0-SNAPSHOT.jar \
  --conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions \
  --conf spark.sql.catalog.hive_prod=org.apache.iceberg.spark.SparkCatalog \
  --conf spark.sql.catalog.hive_prod.type=hive \
  --conf spark.sql.catalog.hive_prod.uri=thrift://192.168.xxx.xxx:9083 \
  --conf spark.sql.queryExecutionListeners=za.co.absa.spline.harvester.listener.SplineQueryExecutionListener \
  --conf spark.spline.lineageDispatcher=kafka \
  --conf spark.spline.lineageDispatcher.kafka.topic=spline_test \
  --conf spark.spline.lineageDispatcher.kafka.producer.bootstrap.servers=192.168.xxx.xxx:9092,192.168.xxx.xxx:9092,192.168.xxx.xxx:9092 \
  --conf spark.spline.lineageDispatcher.kafka.sasl.mechanism=SCRAM-SHA-256 \
  --conf spark.spline.lineageDispatcher.kafka.security.protocol=PLAINTEXT \
  --conf spark.spline.lineageDispatcher.kafka.sasl.jaas.config=
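If a job is started from PySpark instead of spark-shell, the same settings can presumably be passed through SparkSession.builder.config. The following is a minimal sketch under these assumptions: a pyspark version matching Spark 3.0.3 is installed, the function names and the app name are hypothetical, and the Kafka SASL settings are left out because their value is elided in the command above (they could be added to the dict the same way).

```python
from typing import Dict


def spline_iceberg_conf(metastore_uri: str, bootstrap_servers: str,
                        topic: str = "spline_test") -> Dict[str, str]:
    """Hypothetical helper: the Iceberg + Spline --conf pairs from the command above."""
    return {
        # Iceberg SQL extensions and a Hive-backed catalog named hive_prod
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        "spark.sql.catalog.hive_prod": "org.apache.iceberg.spark.SparkCatalog",
        "spark.sql.catalog.hive_prod.type": "hive",
        "spark.sql.catalog.hive_prod.uri": metastore_uri,
        # Spline listener that harvests lineage after each query execution
        "spark.sql.queryExecutionListeners":
            "za.co.absa.spline.harvester.listener.SplineQueryExecutionListener",
        # Dispatch the lineage to a Kafka topic
        "spark.spline.lineageDispatcher": "kafka",
        "spark.spline.lineageDispatcher.kafka.topic": topic,
        "spark.spline.lineageDispatcher.kafka.producer.bootstrap.servers": bootstrap_servers,
    }


def build_session(jars: str, conf: Dict[str, str]):
    """Hypothetical helper: create a SparkSession with the given jars and settings."""
    # Imported lazily so spline_iceberg_conf can be used without pyspark installed
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("spline-iceberg-demo").config("spark.jars", jars)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

For example, build_session("/opt/module/iceberg/iceberg-spark3-runtime-0.12.1.jar,/opt/module/spline/spark-3.0-spline-agent-bundle_2.12-1.0.0-SNAPSHOT.jar", spline_iceberg_conf("thrift://192.168.xxx.xxx:9083", "192.168.xxx.xxx:9092")). spark.jars and static settings such as spark.sql.queryExecutionListeners are most reliably applied when the session is created in a fresh Python process, before any SparkContext exists.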

6. Check the Kafka topic spline_test

It contains data like the following:

{
  "id": "3d2c9289-ac47-5672-babf-d4a67bde5d14",
  "name": "Spark shell",
  "operations": {
    "write": {
      "outputSource": "hdfs://192.168.xxx.xxx:8020/user/hive/warehouse/iceberg_hadoop_db.db/tbresclientfollower65",
      "append": false,
      "id": "op-0",
      "name": "OverwriteByExpression",
      "childIds": [ "op-1" ],
      "params": {
        "table": {
          "identifier": "hive_prod.iceberg_hadoop_db.tbresclientfollower65",
          "output": [ { "__attrId": "attr-8" }, { "__attrId": "attr-9" }, { "__attrId": "attr-10" }, { "__attrId": "attr-11" }, { "__attrId": "attr-12" }, { "__attrId": "attr-13" }, { "__attrId": "attr-14" }, { "__attrId": "attr-15" } ]
        },
        "isByName": false,
        "deleteExpr": { "__exprId": "expr-0" }
      },
      "extra": { "destinationType": "iceberg" }
    },
    "reads": [
      {
        "inputSources": [ "hdfs://192.168.xxx.xxx:8020/user/hive/warehouse/iceberg_hadoop_db.db/tbresclientfollower64" ],
        "id": "op-3",
        "name": "DataSourceV2Relation",
        "output": [ "attr-0", "attr-1", "attr-2", "attr-3", "attr-4", "attr-5", "attr-6", "attr-7" ],
        "params": {
          "table": { "identifier": "hive_prod.iceberg_hadoop_db.tbresclientfollower64" },
          "identifier": "iceberg_hadoop_db.tbresclientfollower64",
          "options": "org.apache.spark.sql.util.CaseInsensitiveStringMap@1f"
        },
        "extra": { "sourceType": "iceberg" }
      }
    ],
    "other": [
      {
        "id": "op-2",
        "name": "SubqueryAlias",
        "childIds": [ "op-3" ],
        "output": [ "attr-0", "attr-1", "attr-2", "attr-3", "attr-4", "attr-5", "attr-6", "attr-7" ],
        "params": { "identifier": "hive_prod.iceberg_hadoop_db.tbresclientfollower64" }
      },
      {
        "id": "op-1",
        "name": "Project",
        "childIds": [ "op-2" ],
        "output": [ "attr-0", "attr-1", "attr-2", "attr-3", "attr-4", "attr-5", "attr-6", "attr-7" ],
        "params": {
          "projectList": [ { "__attrId": "attr-0" }, { "__attrId": "attr-1" }, { "__attrId": "attr-2" }, { "__attrId": "attr-3" }, { "__attrId": "attr-4" }, { "__attrId": "attr-5" }, { "__attrId": "attr-6" }, { "__attrId": "attr-7" } ]
        }
      }
    ]
  },
  "attributes": [
    { "id": "attr-0", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_tbresclientfollower_uuid" },
    { "id": "attr-1", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "clientid" },
    { "id": "attr-2", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "id" },
    { "id": "attr-3", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "objid" },
    { "id": "attr-4", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "objname" },
    { "id": "attr-5", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "type" },
    { "id": "attr-6", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_createtime" },
    { "id": "attr-7", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_lst_upd_time" },
    { "id": "attr-8", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_tbresclientfollower_uuid" },
    { "id": "attr-9", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "clientid" },
    { "id": "attr-10", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "id" },
    { "id": "attr-11", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "objid" },
    { "id": "attr-12", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "objname" },
    { "id": "attr-13", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "type" },
    { "id": "attr-14", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_createtime" },
    { "id": "attr-15", "dataType": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "bd_lst_upd_time" }
  ],
  "expressions": {
    "constants": [
      { "id": "expr-0", "dataType": "75fe27b9-9a00-5c7d-966f-33ba32333133", "extra": { "simpleClassName": "Literal", "_typeHint": "expr.Literal" }, "value": true }
    ]
  },
  "systemInfo": { "name": "spark", "version": "3.0.3" },
  "agentInfo": { "name": "spline", "version": "1.0.0-SNAPSHOT+unknown" },
  "extraInfo": {
    "appName": "Spark shell",
    "dataTypes": [
      { "_typeHint": "dt.Simple", "id": "e63adadc-648a-56a0-9424-3289858cf0bb", "name": "string", "nullable": true },
      { "_typeHint": "dt.Simple", "id": "75fe27b9-9a00-5c7d-966f-33ba32333133", "name": "boolean", "nullable": false }
    ],
    "appId": "application_1665743023238_0122",
    "startTime": 1666249345168
  }
}
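In this plan, columns are referenced by attribute ids: operations.write.params.table.output lists the target columns (attr-8 to attr-15), the write's child Project outputs attr-0 to attr-7, the attributes array maps each id to a column name, and extraInfo.dataTypes maps each dataType id to a type name. A minimal Python sketch of resolving these references, assuming the layout of the sample above; the function names are hypothetical, and the positional pairing relies on "isByName": false, under which Spark matches the query's columns to the table's columns by position.

```python
from typing import Dict, List, Tuple


def attribute_names(plan: dict) -> Dict[str, str]:
    """Map attribute ids such as "attr-0" to column names."""
    return {attr["id"]: attr["name"] for attr in plan.get("attributes", [])}


def attribute_types(plan: dict) -> Dict[str, str]:
    """Map attribute ids to type names via extraInfo.dataTypes."""
    types = {dt["id"]: dt["name"] for dt in plan.get("extraInfo", {}).get("dataTypes", [])}
    return {attr["id"]: types.get(attr.get("dataType"), "unknown")
            for attr in plan.get("attributes", [])}


def column_mapping(plan: dict) -> List[Tuple[str, str]]:
    """Pair each column produced by the write's child with the table column it fills."""
    ops = plan["operations"]
    write = ops["write"]
    if write["params"].get("isByName"):
        # By-name writes would need matching on names instead of positions
        raise ValueError("this sketch only handles isByName == false")
    names = attribute_names(plan)
    # Index the non-write operations by id so the write's child can be looked up
    by_id = {op["id"]: op for op in ops.get("reads", []) + ops.get("other", [])}
    child = by_id[write["childIds"][0]]
    target_ids = [ref["__attrId"] for ref in write["params"]["table"]["output"]]
    # By-position write: the i-th child output column feeds the i-th table column
    return [(names[src], names[dst]) for src, dst in zip(child["output"], target_ids)]
```

Applied to the full sample, column_mapping pairs attr-0 to attr-7 with attr-8 to attr-15, so each column of tbresclientfollower64 maps to the same-named column of tbresclientfollower65. The sketch stops at the write's direct child; plans with computed columns would need a walk down childIds and through the expressions section.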

7. Parse the JSON data

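After the Spline Agent parses a Spark job, it sends the lineage information to the spline_test topic; how the data in this topic is used depends on your company's actual needs.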

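On my side, I clean the data in spline_test: a consumer reads the topic, parses each record, and extracts the upstream and downstream information of the Spark job. From that you can tell which tables the job read and which tables it wrote, and the result is then imported into the HugeGraph graph database.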
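The consume, parse and extract steps can be sketched in Python as follows. This is one possible cleaning step, not the author's actual code: it assumes each message value is execution-plan JSON like the sample above, uses KafkaConsumer from the kafka-python package, and the function names and the print-based sink are hypothetical (writing to HugeGraph is not shown). Messages without an operations.write section are skipped, and when a read or write has no catalog identifier, the storage paths (inputSources / outputSource) are used instead.

```python
import json
from typing import Iterable, Iterator, List, Tuple


def _tables(op: dict, fallback: List[str]) -> List[str]:
    """Prefer the catalog identifier in params.table; otherwise use the given paths."""
    table = op.get("params", {}).get("table")
    if isinstance(table, dict) and "identifier" in table:
        return [table["identifier"]]
    return list(fallback)


def table_lineage(plan: dict) -> List[Tuple[str, str]]:
    """Return (upstream, downstream) table edges for one Spline execution plan."""
    ops = plan.get("operations", {})
    write = ops.get("write")
    if write is None:
        return []  # no write operation, nothing to link
    target = _tables(write, [write.get("outputSource", "")])[0]
    edges = []
    for read in ops.get("reads", []):
        for source in _tables(read, read.get("inputSources", [])):
            edges.append((source, target))
    return edges


def lineage_from_messages(values: Iterable[bytes]) -> Iterator[Tuple[str, str]]:
    """Decode raw Kafka message values (JSON bytes) and yield their edges."""
    for value in values:
        yield from table_lineage(json.loads(value))


def consume(bootstrap_servers: str, topic: str = "spline_test") -> None:
    """Read the topic and print edges; assumes kafka-python is installed."""
    # Imported here so the parsing helpers above work without a Kafka client
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(topic, bootstrap_servers=bootstrap_servers,
                             auto_offset_reset="earliest")
    values = (message.value for message in consumer)
    for upstream, downstream in lineage_from_messages(values):
        # Hypothetical sink: this is where an edge would be written to HugeGraph
        print(f"{upstream} -> {downstream}")
```

For the sample message above, table_lineage returns a single edge from hive_prod.iceberg_hadoop_db.tbresclientfollower64 to hive_prod.iceberg_hadoop_db.tbresclientfollower65. Keeping the parsing separate from the consumer lets the extraction logic be tested without a running Kafka cluster.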

8. I have also written an earlier article on collecting Spark job lineage, which you may find useful as a reference.

浙公网安备 33010602011771号
