Jenkins + Kubernetes + Helm + Spring Boot in Practice


I. Introduction

(1) Overview

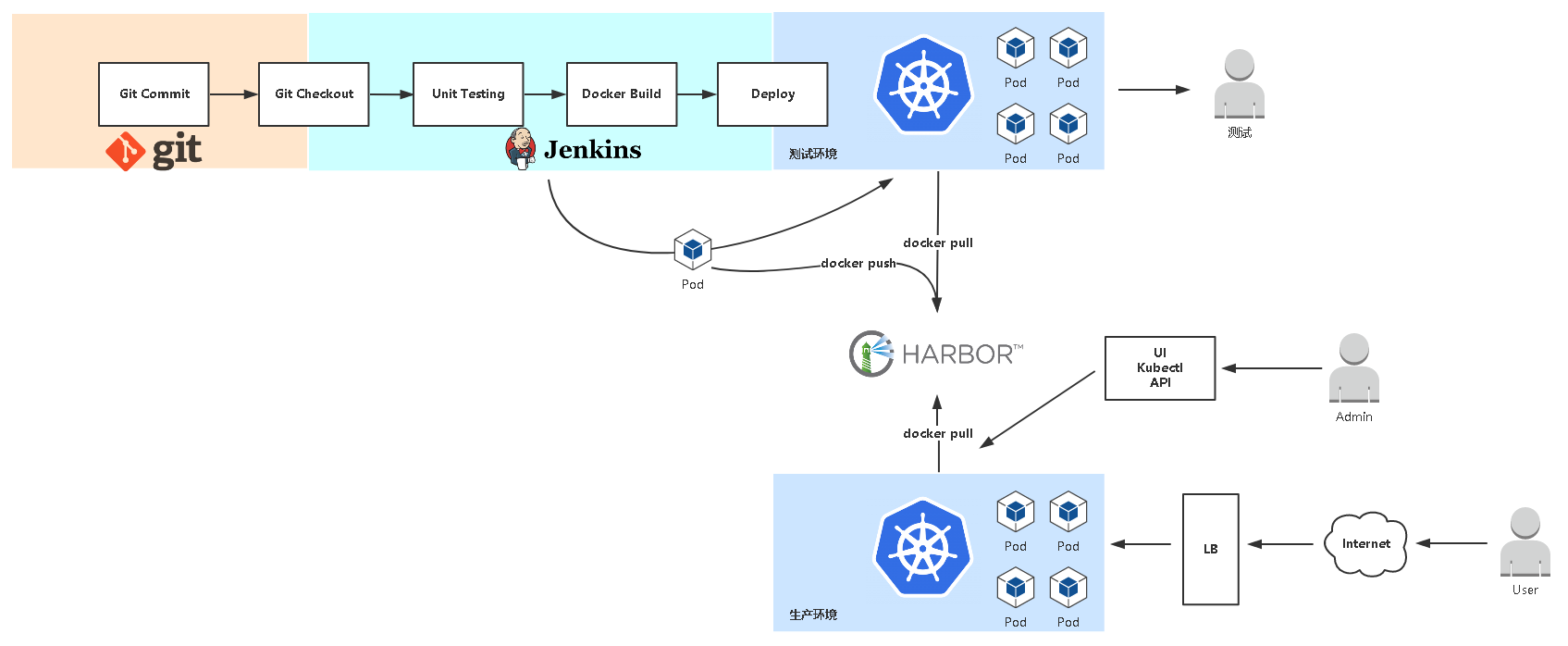

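Continuous building and releasing is an indispensable part of daily work, and most teams build their CI/CD pipelines on a Jenkins cluster. The traditional Jenkins cluster with one master and several slaves, however, has drawbacks: the master is a single point of failure, the slaves' environments drift out of sync, and resources are allocated unevenly.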

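Running the CI/CD flow on Kubernetes containers largely removes these drawbacks. Every build runs in a freshly created agent pod started from the same image, Kubernetes schedules these pods across the cluster's nodes, and the pods are removed once the build finishes.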

(2) Workflow

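- A build job is triggered manually or automatically
- Jenkins calls the Kubernetes API
- A Jenkins slave pod is created dynamically
- The slave pod runs the pipeline (fetch the Git code, compile, build the image, push the image, deploy to each Kubernetes environment)
- When the job finishes, the slave pod is destroyed automatically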
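The lifecycle above can be modeled in a few lines of Python. This is a minimal sketch that assumes a hypothetical `FakeCluster` class standing in for the Kubernetes API. `FakeCluster` and `run_build` are illustrative names, not part of Jenkins or its Kubernetes plugin. The point is that each build gets a fresh pod, and the pod is deleted in a `finally` block, so it disappears even when a stage fails.

```python
import itertools
from typing import Callable, Dict, List


class FakeCluster:
    """Hypothetical stand-in for the Kubernetes API that only tracks live pods."""

    def __init__(self) -> None:
        self.pods: Dict[str, str] = {}
        self._ids = itertools.count(1)

    def create_pod(self, image: str) -> str:
        name = f"jenkins-slave-{next(self._ids)}"
        self.pods[name] = image
        return name

    def delete_pod(self, name: str) -> None:
        self.pods.pop(name, None)


def run_build(cluster: FakeCluster, stages: List[Callable[[str], None]], image: str) -> bool:
    """One build: create a fresh agent pod, run each stage in it, always delete the pod."""
    pod = cluster.create_pod(image)  # Jenkins -> k8s API: start a slave pod
    try:
        for stage in stages:  # e.g. checkout, compile, build/push image, deploy
            stage(pod)
        return True
    except Exception:
        return False  # a failing stage fails the build...
    finally:
        cluster.delete_pod(pod)  # ...but the pod is removed either way
```

Because a new pod is created per build and never reused, no state leaks from one build to the next. That is the property the traditional long-lived slaves lack.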

II. Environment Preparation

(1) Base environment

- Kubernetes 1.18+
- Helm 3.0+
- Docker 19.03+
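A quick way to check a machine against these minimums is sketched below. It assumes you pass in the version string itself (for example `v1.18.6`, `v3.4.2` or `19.03.13`, as printed by `kubectl version`, `helm version` and `docker version`). The exact output layout of those commands varies between releases. The sketch compares only major.minor, which is what the list specifies.

```python
import re
from typing import Tuple

# Minimum versions from the list above (major, minor)
MINIMUMS = {"kubernetes": (1, 18), "helm": (3, 0), "docker": (19, 3)}


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the numeric part of strings such as 'v1.18.6' or '19.03.13'."""
    match = re.search(r"(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"no version number in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(tool: str, version_text: str) -> bool:
    """Compare only as many components as the minimum specifies."""
    minimum = MINIMUMS[tool]
    return parse_version(version_text)[: len(minimum)] >= minimum
```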

(2) PV provisioning with NFS storage

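- Server installation and configuration

```shell
# Install
yum install nfs-utils -y

# Configure: add the following line to /etc/exports
vim /etc/exports
/ifs/kubernetes *(rw,sync,no_subtree_check,no_root_squash,no_all_squash,insecure)

# Create the exported directory
mkdir -pv /ifs/kubernetes

# Start the service and enable it at boot
systemctl start nfs
systemctl enable nfs
```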
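Each `/etc/exports` line is the exported path followed by one or more `client(options)` entries. There must be no space between a client and its parenthesised options. Per exports(5), `host (rw)` is read as two entries: `host` with default options, and every host with `rw`. The parser below is a small sketch for a single non-comment line. It rejects the spaced form so the mistake is caught.

```python
import re
from typing import Dict, List, Tuple

# One client entry such as "*(rw,sync)" or "10.0.0.0/24(ro)"
CLIENT_RE = re.compile(r"^(?P<host>[^()\s]+)(?:\((?P<opts>[^)]*)\))?$")


def parse_export(line: str) -> Tuple[str, Dict[str, List[str]]]:
    """Split an /etc/exports line into the exported path and {client: [options]}."""
    path, *clients = line.split()
    parsed: Dict[str, List[str]] = {}
    for client in clients:
        match = CLIENT_RE.match(client)
        if match is None:  # e.g. "(rw)" left over from "host (rw)"
            raise ValueError(f"cannot parse client entry {client!r}")
        opts = match.group("opts")
        parsed[match.group("host")] = opts.split(",") if opts else []
    return path, parsed
```

For the line above, this yields the path `/ifs/kubernetes` and a single client `*` carrying `rw`, `sync`, `no_root_squash` and the other options.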

- Client tools

```shell
# Every node needs the nfs-utils package, which is required for NFS mounts
yum install nfs-utils -y
```

- Install the nfs-client-provisioner plugin

```shell
# Reference: https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client/deploy
# Deployment files
# .
# ├── class.yaml
# ├── deployment.yaml
# └── rbac.yaml

# Replace the NFS server address x.x.x.x
vim deployment.yaml
```

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: x.x.x.x
            - name: NFS_PATH
              value: /ifs/kubernetes
      volumes:
        - name: nfs-client-root
          nfs:
            server: x.x.x.x
            path: /ifs/kubernetes
```

```shell
# Deploy
# cd $HOME/nfs-client
kubectl apply -f .
```

- Verify

```shell
kubectl get sc managed-nfs-storage
NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  50d
```
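The important column is PROVISIONER. It must equal the `PROVISIONER_NAME` set in deployment.yaml (`fuseim.pri/ifs`). Otherwise no provisioner serves the class, and PVCs that use it stay Pending. Below is a small sketch that parses this default table output and performs that check. It assumes no cell contains spaces, so a default class shown as `managed-nfs-storage (default)` would break it. For scripting, `kubectl get sc managed-nfs-storage -o jsonpath='{.provisioner}'` is the more reliable source.

```python
from typing import Dict, List


def parse_kubectl_table(output: str) -> List[Dict[str, str]]:
    """Turn the default column output of `kubectl get` into a list of dicts."""
    lines = [line for line in output.strip().splitlines() if line.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def provisioner_matches(output: str, class_name: str, provisioner_name: str) -> bool:
    """True if the named StorageClass uses the expected provisioner."""
    for row in parse_kubectl_table(output):
        if row.get("NAME") == class_name:
            return row.get("PROVISIONER") == provisioner_name
    return False  # class not listed at all
```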

(3) Harbor image registry

- Prerequisites

  - Docker 19.03+
  - docker-compose 1.26+

- Download the installation package

```shell
# Releases: https://github.com/goharbor/harbor/releases
# Download
wget https://github.com/goharbor/harbor/releases/download/v2.1.3/harbor-offline-installer-v2.1.3.tgz
```

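- Configure

```shell
# Extract
tar -zxvf harbor-offline-installer-v2.1.3.tgz
cd harbor
# Harbor 2.x ships a template; copy it to harbor.yml before editing
cp harbor.yml.tmpl harbor.yml
vim harbor.yml
```

```yaml
hostname: harbor.test.info   # domain name or IP address
http:
  port: 80
# Comment out the https: section when serving over plain HTTP only
harbor_admin_password: Harbor12345   # admin password
data_volume:   # data directory
```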

- Start

```shell
# Generate the configuration
./prepare
# Install; --with-chartmuseum also enables the Helm chart repository
./install.sh --with-chartmuseum
# Verify
docker-compose ps
```
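Harbor is configured above for plain HTTP only. Docker, however, talks HTTPS to any registry other than localhost unless that registry is listed under `insecure-registries` in `/etc/docker/daemon.json`. That requirement applies to the Docker daemon on every machine that logs in to, pushes to or pulls from `harbor.test.info`. This includes the Kubernetes nodes, whose `docker.sock` the Jenkins slave pod mounts later. The function below is a minimal sketch of that edit on the file's contents. Reading and writing the file, and the `systemctl restart docker` that follows, are left out.

```python
import json


def add_insecure_registry(daemon_json: str, registry: str) -> str:
    """Return daemon.json content with `registry` listed under "insecure-registries".

    Existing keys are preserved and the entry is not duplicated.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    registries = config.setdefault("insecure-registries", [])
    if registry not in registries:
        registries.append(registry)
    return json.dumps(config, indent=2)
```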

III. Jenkins Preparation

(1) Master node

- Deployment files

```
jenkins/
├── deployment.yml
├── ingress.yml
├── rbac.yml
├── service-account.yml
└── service.yml
```

- service-account.yml

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
```

- rbac.yml (defines the same three objects as service-account.yml, with comments; applying both is harmless, and either file can be dropped)

```yaml
---
# Create a ServiceAccount named jenkins
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
---
# Create a Role named jenkins that allows managing Pods in the core API group
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
# Bind the jenkins Role to the jenkins ServiceAccount
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
```
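RBAC rules only grant permissions and never deny them. A request is allowed if any rule matches its API group, resource and verb. The sketch below models that matching for the Role above. It honours `*` wildcards and ignores `resourceNames` and non-resource URLs. It shows, for example, that the `jenkins` ServiceAccount can `get` a secret by name but cannot `list` secrets. Deploying into the `test` namespace later does not use this account, because the pipeline passes its own `k8s_auth` kubeconfig. On a real cluster, `kubectl auth can-i ... --as=system:serviceaccount:<namespace>:jenkins` answers the same question.

```python
from typing import List, TypedDict


class Rule(TypedDict):
    apiGroups: List[str]
    resources: List[str]
    verbs: List[str]


ALL_VERBS = ["create", "delete", "get", "list", "patch", "update", "watch"]

# The rules of the "jenkins" Role above ("" is the core API group)
JENKINS_RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["pods"], "verbs": ALL_VERBS},
    {"apiGroups": [""], "resources": ["pods/exec"], "verbs": ALL_VERBS},
    {"apiGroups": [""], "resources": ["pods/log"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["secrets"], "verbs": ["get"]},
]


def allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Additive RBAC check: allowed if ANY rule matches group, resource and verb."""

    def match(values: List[str], wanted: str) -> bool:
        return "*" in values or wanted in values

    return any(
        match(rule["apiGroups"], api_group)
        and match(rule["resources"], resource)
        and match(rule["verbs"], verb)
        for rule in rules
    )
```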

- deployment.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      name: jenkins
      labels:
        name: jenkins
    spec:
      terminationGracePeriodSeconds: 10
      serviceAccountName: jenkins
      containers:
        - name: jenkins
          image: jenkins/jenkins:lts
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
            - containerPort: 50000
          resources:
            limits:
              cpu: 1
              memory: 1Gi
            requests:
              cpu: 0.5
              memory: 500Mi
          env:
            - name: LIMITS_MEMORY
              valueFrom:
                resourceFieldRef:
                  resource: limits.memory
                  divisor: 1Mi
            - name: JAVA_OPTS
              value: -Xmx$(LIMITS_MEMORY)m -XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0 -Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85
          volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
          livenessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
          readinessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
      securityContext:
        fsGroup: 1000
      volumes:
        - name: jenkins-home
          persistentVolumeClaim:
            claimName: jenkins-home
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-home
spec:
  storageClassName: "managed-nfs-storage"
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 50Gi
```
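`LIMITS_MEMORY` is filled by the Downward API as the container's memory limit divided by `divisor` and rounded up. With `1Gi` and `1Mi`, the JVM therefore starts with `-Xmx1024m`, so the heap alone may use the whole container limit. Metaspace, thread stacks and other non-heap memory come on top of that, which can get the pod OOM-killed under load. The sketch below reproduces that arithmetic for integer quantities only. The `heap_fraction` parameter is a hypothetical knob showing one way to leave headroom. On container-aware JVMs, `-XX:MaxRAMPercentage` is another option.

```python
import re

# Suffixes accepted in Kubernetes memory quantities (binary and decimal)
SUFFIXES = {
    "Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4,
    "k": 1000, "M": 1000 ** 2, "G": 1000 ** 3, "T": 1000 ** 4, "": 1,
}


def to_bytes(quantity: str) -> int:
    """Convert an integer quantity such as '1Gi' or '500Mi' to bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity)
    if match is None or match.group(2) not in SUFFIXES:
        raise ValueError(f"unsupported quantity {quantity!r}")
    return int(match.group(1)) * SUFFIXES[match.group(2)]


def limits_memory_env(limit: str, divisor: str = "1Mi") -> int:
    """Value stored in LIMITS_MEMORY: limit / divisor, rounded up."""
    total, unit = to_bytes(limit), to_bytes(divisor)
    return (total + unit - 1) // unit


def xmx_option(limit: str, heap_fraction: float = 1.0) -> str:
    """-Xmx flag as JAVA_OPTS builds it; heap_fraction < 1 leaves non-heap headroom."""
    return f"-Xmx{int(limits_memory_env(limit) * heap_fraction)}m"
```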

- service.yml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: jenkins
spec:
  selector:
    name: jenkins
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 8080
      protocol: TCP
      nodePort: 30006
    - name: agent
      port: 50000
      protocol: TCP
```

- ingress.yml

```yaml
# extensions/v1beta1 Ingress is removed in Kubernetes 1.22+; newer clusters need networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: jenkins
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
spec:
  rules:
    - host: jenkins.test.info
      http:
        paths:
          - path: /
            backend:
              serviceName: jenkins
              servicePort: 80
```

- Deploy

```shell
kubectl apply -f .
```

- Verify

```shell
kubectl get pods
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-754b6fb4b9-5pwl9   1/1     Running   0          24h
```

(2) Plugins

- Git
- Pipeline
- Config File Provider Plugin
- Kubernetes

(3) Configuration

-

git认证

-

harbor认证

-

kubernetes认证

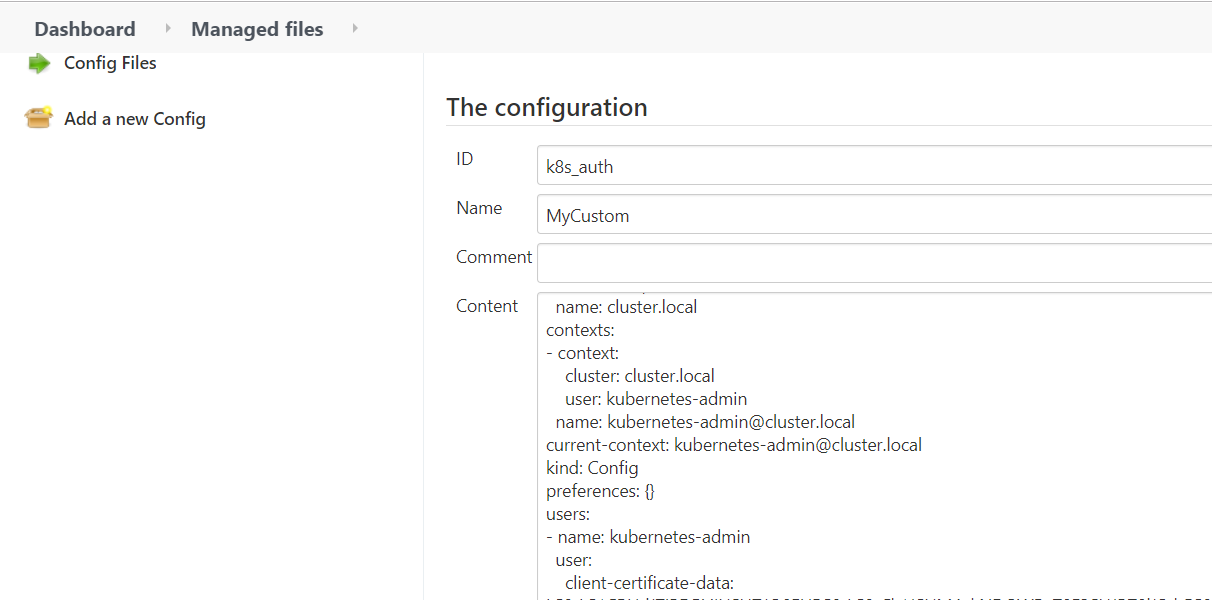

## 系统管理 => Managed files => Add a new config => custom config # ID k8s_auth # kubeconfig内容 # k8s主机可查看 cat root/.kube/config![]()

-

系统cloud配置

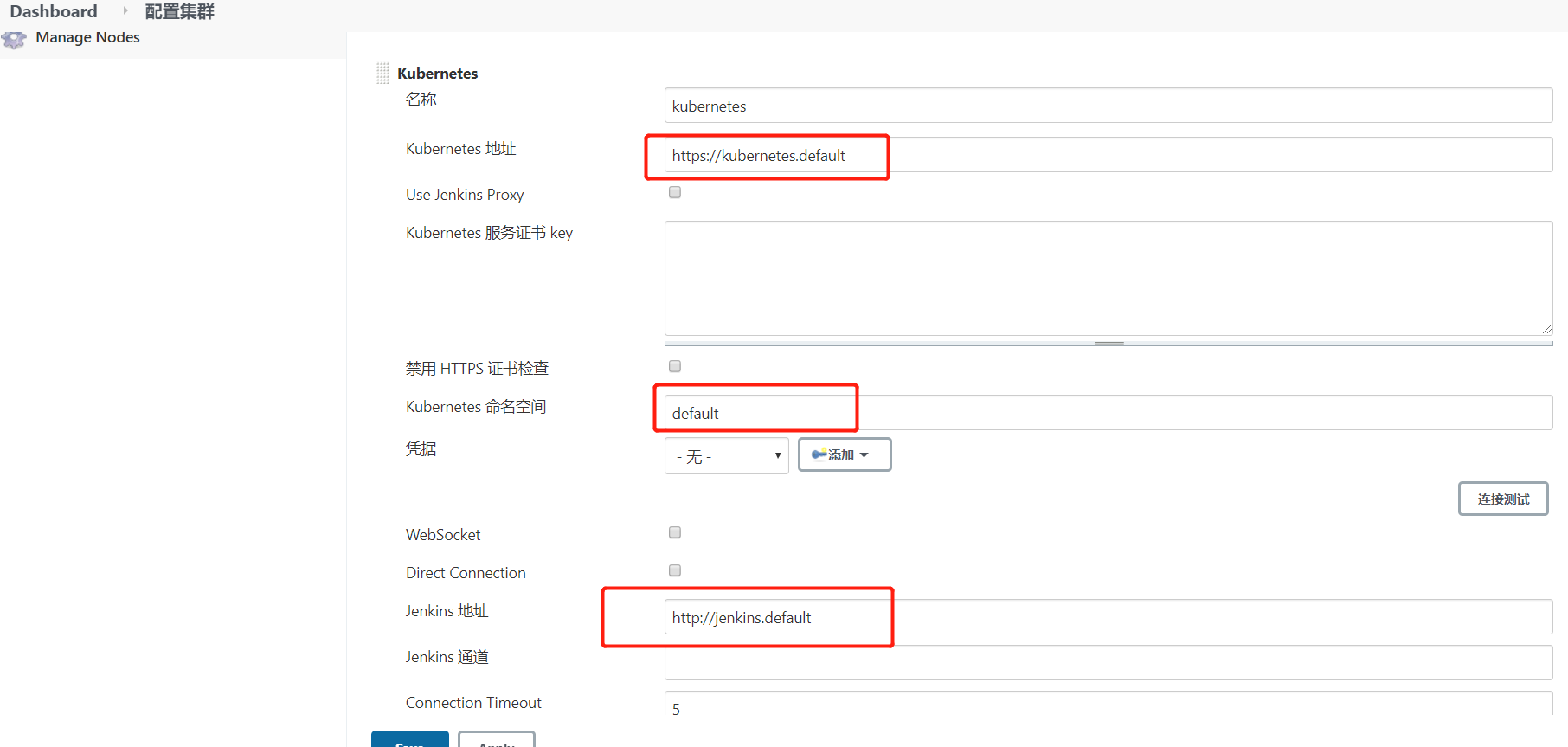

## 系统管理 => 系统配置 => Cloud => Add a new cloud => kubernetes![]()
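The Kubernetes cloud form has "Jenkins URL" and "Jenkins tunnel" fields. These hold the addresses that slave pods use to reach the master. The sketch below derives them from service.yml (port 80 forwards to 8080 for HTTP, and 50000 serves agents). It assumes Jenkins runs in the `default` namespace, since the manifests above set none, and that the cluster uses the default `cluster.local` domain. Both are parameters to adjust.

```python
from typing import Dict


def kubernetes_cloud_endpoints(namespace: str = "default",
                               service: str = "jenkins",
                               http_port: int = 80,
                               agent_port: int = 50000,
                               cluster_domain: str = "cluster.local") -> Dict[str, str]:
    """In-cluster values for the cloud's 'Jenkins URL' and 'Jenkins tunnel' fields."""
    host = f"{service}.{namespace}.svc.{cluster_domain}"  # Service DNS name
    return {
        "jenkins_url": f"http://{host}:{http_port}",  # HTTP via Service port 80
        "jenkins_tunnel": f"{host}:{agent_port}",  # JNLP agent port (no scheme)
    }
```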

(4) Preparing the jenkins-slave node

- Image preparation

```
jenkins-slave/
├── Dockerfile
├── helm
├── jenkins-slave
├── kubectl
├── settings.xml
└── slave.jar
```

```dockerfile
FROM centos:7
LABEL maintainer="qms19"
RUN yum install -y java-1.8.0-openjdk maven curl git libtool-ltdl-devel && \
    yum clean all && \
    rm -rf /var/cache/yum/* && \
    mkdir -p /usr/share/jenkins
COPY slave.jar /usr/share/jenkins/slave.jar
COPY jenkins-slave /usr/bin/jenkins-slave
COPY settings.xml /etc/maven/settings.xml
COPY helm kubectl /usr/bin/
RUN chmod +x /usr/bin/helm && chmod +x /usr/bin/jenkins-slave && chmod +x /usr/bin/kubectl
ENTRYPOINT ["jenkins-slave"]
```

```shell
# Build the image (same project as the push below and the pipeline's pod template)
docker build -t harbor.test.info/library/jenkins-slave:v1 .
```

- Push to the private Harbor registry

```shell
# Log in
docker login -u admin -p Harbor12345 harbor.test.info
# Push
docker push harbor.test.info/library/jenkins-slave:v1
```

IV. Integrating the Java Application

(1) Custom Helm chart

- Create the chart

```shell
helm create java-demo
```

- Chart structure

```
java-demo/
├── charts
├── Chart.yaml                  # basic version and configuration information
├── templates                   # templates
│   ├── deployment.yaml         # Deployment
│   ├── _helpers.tpl            # reusable template definitions
│   ├── hpa.yaml                # horizontal pod autoscaling
│   ├── ingress.yaml            # routing
│   ├── NOTES.txt               # post-install notes
│   ├── serviceaccount.yaml     # service account
│   ├── service.yaml            # Service exposure
│   └── tests                   # tests
│       └── test-connection.yaml
└── values.yaml                 # variables
```

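- Template helpers: edit `templates/_helpers.tpl`. `helm create` generated helpers named `java-demo.*`. If serviceaccount.yaml, tests/test-connection.yaml and NOTES.txt are left as generated, they still call those helpers (and `.Values.serviceAccount`), so adapt or delete them, otherwise the chart fails to render.

```
{{- define "demo.fullname" -}}
{{- .Chart.Name -}}-{{- .Release.Name -}}
{{- end -}}

{{/* Common labels */}}
{{- define "demo.labels" -}}
app: {{ template "demo.fullname" . }}
chart: "{{ .Chart.Name }}-{{ .Chart.Version }}"
release: "{{ .Release.Name }}"
{{- end -}}

{{/* Selector labels */}}
{{- define "demo.selectorLabels" -}}
app: {{ template "demo.fullname" . }}
release: "{{ .Release.Name }}"
{{- end -}}
```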
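To see which names these helpers produce, here is a Python mirror of them. It is an illustration, not how Helm evaluates templates. `demo.fullname` is simply `<chart name>-<release name>`. With `helm install demo` the resources are named `java-demo-demo`. In the pipeline, where the release name is `service_name` (`java-demo`), they become `java-demo-java-demo`. Unlike the helper generated by `helm create`, this one does not `trunc 63`. Long names could therefore exceed the 63-character limit on label values and Service names.

```python
from typing import Dict


def demo_fullname(chart_name: str, release_name: str) -> str:
    """Mirror of "demo.fullname": <chart name>-<release name>, no truncation."""
    return f"{chart_name}-{release_name}"


def demo_labels(chart_name: str, chart_version: str, release_name: str) -> Dict[str, str]:
    """Mirror of "demo.labels"; "demo.selectorLabels" is its app/release subset."""
    return {
        "app": demo_fullname(chart_name, release_name),
        "chart": f"{chart_name}-{chart_version}",
        "release": release_name,
    }
```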

- Values: edit `values.yaml`

```yaml
replicaCount: 1

image:
  repository: harbor.test.info/library/java-demo
  pullPolicy: IfNotPresent
  tag: "latest"

imagePullSecrets: []

service:
  type: ClusterIP
  port: 8080

ingress:
  enabled: true
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
  host: demo.test.info
  tls: []

resources: {}

autoscaling:
  enabled: false
  minReplicas: 1
  maxReplicas: 100
  targetCPUUtilizationPercentage: 80

nodeSelector: {}
```
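These values are only defaults. The pipeline overrides some of them at deploy time with `--set` (for example `image.tag`, `replicaCount` and `imagePullSecrets[0].name`). Below is a simplified Python model of how such overrides combine with the defaults. Nested maps are merged key by key, while lists and scalars from the override replace the default outright. Real Helm also parses numbers and booleans, supports escaping and more syntax, and all of that is omitted here. `parse_set` and `merge` are illustrative names, not Helm APIs.

```python
import copy
import re
from typing import Any, Dict, List

_SEGMENT = re.compile(r"^(?P<key>[^\[\]]+)(?:\[(?P<index>\d+)\])?$")


def parse_set(expressions: List[str]) -> Dict[str, Any]:
    """Build an override tree from `--set path=value` strings (values stay strings)."""
    overrides: Dict[str, Any] = {}
    for expression in expressions:
        path, value = expression.split("=", 1)
        node: Any = overrides
        segments = path.split(".")
        for position, segment in enumerate(segments):
            match = _SEGMENT.match(segment)
            if match is None:
                raise ValueError(f"unsupported segment {segment!r}")
            key, index = match.group("key"), match.group("index")
            last = position == len(segments) - 1
            if index is None:
                if last:
                    node[key] = value
                else:
                    node = node.setdefault(key, {})
            else:
                items = node.setdefault(key, [])
                i = int(index)
                while len(items) <= i:  # pad the list up to the requested index
                    items.append({})
                if last:
                    items[i] = value
                else:
                    node = items[i]
    return overrides


def merge(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Maps merge key by key; lists and scalars from overrides replace defaults."""
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result
```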

- Chart definition: edit `Chart.yaml`

```yaml
apiVersion: v2
name: java-demo
description: Java application example
type: application
version: 0.1.0
```

- Deployment template: edit `templates/deployment.yaml`

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  {{- if not .Values.autoscaling.enabled }}
  replicas: {{ .Values.replicaCount }}
  {{- end }}
  selector:
    matchLabels:
      {{- include "demo.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      {{- with .Values.podAnnotations }}
      annotations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      labels:
        {{- include "demo.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: {{ .Values.service.port }}
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
```

- Service template: edit `templates/service.yaml`

```yaml
apiVersion: v1
kind: Service
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    {{- include "demo.selectorLabels" . | nindent 4 }}
```

- Ingress template: edit `templates/ingress.yaml`. The rule is built from the single `ingress.host` key defined in values.yaml. Ranging over an `ingress.hosts` list instead would require that list in values.yaml. Also, inside `range` the dot is rebound, so the chart root must then be reached through `$` (for example `$.Values.service.port`).

```yaml
{{- if .Values.ingress.enabled -}}
{{- /* networking.k8s.io/v1beta1 is removed in Kubernetes 1.22+ */ -}}
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
  {{- with .Values.ingress.annotations }}
  annotations:
    {{- toYaml . | nindent 4 }}
  {{- end }}
spec:
  {{- if .Values.ingress.tls }}
  tls:
    {{- range .Values.ingress.tls }}
    - hosts:
        {{- range .hosts }}
        - {{ . | quote }}
        {{- end }}
      secretName: {{ .secretName }}
    {{- end }}
  {{- end }}
  rules:
    - host: {{ .Values.ingress.host | quote }}
      http:
        paths:
          - path: /
            backend:
              serviceName: {{ include "demo.fullname" . }}
              servicePort: {{ .Values.service.port }}
{{- end }}
```

- HPA template: edit `templates/hpa.yaml`

```yaml
{{- if .Values.autoscaling.enabled }}
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: {{ include "demo.fullname" . }}
  minReplicas: {{ .Values.autoscaling.minReplicas }}
  maxReplicas: {{ .Values.autoscaling.maxReplicas }}
  metrics:
  {{- if .Values.autoscaling.targetCPUUtilizationPercentage }}
    - type: Resource
      resource:
        name: cpu
        targetAverageUtilization: {{ .Values.autoscaling.targetCPUUtilizationPercentage }}
  {{- end }}
  {{- if .Values.autoscaling.targetMemoryUtilizationPercentage }}
    - type: Resource
      resource:
        name: memory
        targetAverageUtilization: {{ .Values.autoscaling.targetMemoryUtilizationPercentage }}
  {{- end }}
{{- end }}
```
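The HPA controller's core rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization). No scaling happens while the ratio stays within a tolerance of 1.0 (0.1 by default). The result is clamped to `minReplicas`/`maxReplicas`. Utilization is measured against the pods' resource requests. With `resources: {}` in values.yaml, the CPU target would therefore have no baseline if `autoscaling.enabled` were switched on. The function below is a sketch of that formula only and ignores stabilization windows and missing-metric handling.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 100, tolerance: float = 0.1) -> int:
    """ceil(current * currentUtilization / target), clamped to [min, max]."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:  # close enough to the target: keep as is
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

With the chart's default target of 80% CPU, two pods at 120% would be scaled to three.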

- Verify and debug

```shell
helm install demo --dry-run java-demo/
```

(2) Managing Helm charts with Harbor

- Install the push plugin

```shell
helm plugin install https://github.com/chartmuseum/helm-push
```

- Add the repository

```shell
helm repo add --username admin --password Harbor12345 myrepo http://harbor.test.info/chartrepo/library
```

- Package

```shell
helm package java-demo/   # produces java-demo-0.1.0.tgz
```

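- Push

```shell
# With helm-push v0.10.0 and later (for Helm 3.7+), the plugin command is `helm cm-push`
helm push java-demo-0.1.0.tgz --username=admin --password=Harbor12345 http://harbor.test.info/chartrepo/library
```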

(3) Pipeline in practice

- Definition

```groovy
pipeline {
    environment {
        // Harbor address
        registry = "harbor.test.info"
        // Harbor project that holds the images and the chart
        project = "library"
        // Kubernetes namespace
        Namespace = "test"
        __ROPE_GIT_URL = "http://192.168.168.11/microservice/java-demo.git"
        service_name = "java-demo"
        service_port = "8080"
        replicaCount = "1"
        BRANCH_NAME = "master"
        // Credential IDs and the image pull secret name
        image_pull_secret = "registry-pull-secret"
        harbor_registry_auth = "harbor_registry_auth"
        git_auth = "git_auth"
        k8s_auth = "k8s_auth"
    }
    agent {
        kubernetes {
            label "jenkins-slave"
            yaml """
kind: Pod
metadata:
  name: jenkins-slave
spec:
  hostAliases:
    - ip: "192.168.168.11"
      hostnames: ["harbor.test.info"]
  containers:
    - name: jnlp
      image: "${registry}/library/jenkins-slave:v1"
      imagePullPolicy: Always
      volumeMounts:
        - name: docker-cmd
          mountPath: /usr/bin/docker
        - name: docker-sock
          mountPath: /var/run/docker.sock
        - name: maven-cache
          mountPath: /root/.m2
  volumes:
    - name: docker-cmd
      hostPath:
        path: /usr/bin/docker
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
    - name: maven-cache
      hostPath:
        path: /tmp/m2
"""
        }
    }
    stages {
        stage('Git checkout') {
            steps {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: "${BRANCH_NAME}"]],
                    doGenerateSubmoduleConfigurations: false,
                    extensions: [],
                    submoduleCfg: [],
                    userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${__ROPE_GIT_URL}"]]
                ])
            }
        }
        stage('Maven package') {
            steps {
                sh """
                    mvn clean package -DskipTests -DskipDocker -U
                """
            }
        }
        stage('Docker build & push') {
            steps {
                withCredentials([usernamePassword(credentialsId: "${harbor_registry_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                    sh """
                        # Pass the password on stdin instead of the command line
                        echo "\${password}" | docker login -u "\${username}" --password-stdin ${registry}
                        image_name=${registry}/${project}/${service_name}:${BUILD_NUMBER}
                        docker build -t \${image_name} .
                        docker push \${image_name}
                        docker rmi \${image_name}
                    """
                    configFileProvider([configFile(fileId: "${k8s_auth}", targetLocation: "admin.kubeconfig")]) {
                        sh """
                            # Create the image pull secret; ignore the error if it already exists
                            kubectl create secret docker-registry ${image_pull_secret} --docker-username="\${username}" --docker-password="\${password}" --docker-server=${registry} -n ${Namespace} --kubeconfig admin.kubeconfig || true
                            # Add the private chart repository
                            helm repo add --username "\${username}" --password "\${password}" myrepo http://${registry}/chartrepo/${project}
                        """
                    }
                }
            }
        }
        stage('Helm deploy to k8s') {
            steps {
                sh """
                    common_args="-n ${Namespace} --kubeconfig admin.kubeconfig"
                    image=${registry}/${project}/${service_name}
                    tag=${BUILD_NUMBER}
                    helm_args="\${service_name} --set image.repository=\${image} --set image.tag=\${tag} --set replicaCount=${replicaCount} --set imagePullSecrets[0].name=${image_pull_secret} --set service.port=\${service_port} myrepo/java-demo"
                    # Install on the first deployment, upgrade afterwards
                    if helm history \${service_name} \${common_args} >/dev/null 2>&1; then
                        action=upgrade
                    else
                        action=install
                    fi
                    helm \${action} \${helm_args} \${common_args}
                """
            }
        }
    }
}
```
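The deploy stage boils down to two decisions. The first is the image reference, `<registry>/<project>/<service>` tagged with the Jenkins build number. The second is `install` versus `upgrade`, depending on whether `helm history` finds the release. The sketch below builds the equivalent argument list. It assumes the chart pushed earlier is referenced as `myrepo/java-demo`. `helm_command`, `image_overrides` and `as_shell` are illustrative helpers. Passing a list such as this to a process runner avoids relying on word splitting of the unquoted `${helm_args}`. Helm's own `helm upgrade --install` performs the same install-or-upgrade choice in a single command.

```python
import shlex
from typing import List


def image_overrides(registry: str, project: str, service: str, build_number: str) -> List[str]:
    """--set values for the image pushed in the Docker stage."""
    return [f"image.repository={registry}/{project}/{service}", f"image.tag={build_number}"]


def helm_command(release_exists: bool, release: str, chart: str, namespace: str,
                 kubeconfig: str, overrides: List[str]) -> List[str]:
    """Argument list equivalent to the pipeline's `helm ${action} ...` line."""
    action = "upgrade" if release_exists else "install"  # decided by `helm history`
    argv = ["helm", action, release]
    for expression in overrides:
        argv += ["--set", expression]
    argv.append(chart)
    argv += ["-n", namespace, "--kubeconfig", kubeconfig]
    return argv


def as_shell(argv: List[str]) -> str:
    """Quote the argument list for logging or copy-pasting into a shell."""
    return shlex.join(argv)
```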

浙公网安备 33010602011771号
