1. Reading the MNIST data

%pylab inline

Populating the interactive namespace from numpy and matplotlib

Professor Yann LeCun's website (http://yann.lecun.com/exdb/mnist) describes the MNIST dataset in detail.

TensorFlow provides a wrapper around the MNIST dataset.

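MNIST is a subset of the NIST dataset. It contains 60,000 training images and 10,000 test images. Each image shows a single digit from 0 to 9. Every image is \(28 \times 28\) pixels, and the digit is centered in the image.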
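Here is a quick check of these numbers. Each image has \(28 \times 28 = 784\) pixels, stored as one unsigned byte each. The sketch assumes the IDX header sizes given on LeCun's page: a 4-byte magic number plus one 4-byte size per dimension. That makes 16 bytes for the three-dimensional image files and 8 bytes for the one-dimensional label files. From this, the uncompressed size of each file follows directly; the `.gz` downloads are smaller. `idx_file_size` is a hypothetical helper.

```python
ROWS, COLS = 28, 28
PIXELS_PER_IMAGE = ROWS * COLS  # 784 pixels, one unsigned byte each

# Assumed IDX header sizes: 4-byte magic number + one 4-byte size per dimension
IMAGE_HEADER_BYTES = 4 + 3 * 4  # magic, count, rows, cols -> 16 bytes
LABEL_HEADER_BYTES = 4 + 1 * 4  # magic, count -> 8 bytes


def idx_file_size(num_items: int, bytes_per_item: int, header_bytes: int) -> int:
    """Hypothetical helper: uncompressed size of an IDX file of unsigned bytes."""
    return header_bytes + num_items * bytes_per_item


sizes = {
    "train-images-idx3-ubyte": idx_file_size(60000, PIXELS_PER_IMAGE, IMAGE_HEADER_BYTES),
    "train-labels-idx1-ubyte": idx_file_size(60000, 1, LABEL_HEADER_BYTES),
    "t10k-images-idx3-ubyte": idx_file_size(10000, PIXELS_PER_IMAGE, IMAGE_HEADER_BYTES),
    "t10k-labels-idx1-ubyte": idx_file_size(10000, 1, LABEL_HEADER_BYTES),
}
for name, size in sizes.items():
    print(f"{name}: {size} bytes")
```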

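TensorFlow provides a class for handling the MNIST data. It automatically downloads the data and converts its format, parsing the raw data packages into the format used for training and testing neural networks.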
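TensorFlow's own parsing code is not reproduced here. The sketch below shows what parsing the raw packages involves, using only the standard library. It assumes the IDX format described on LeCun's page:

- two zero bytes;
- a type code, where `0x08` means unsigned byte;
- a byte giving the number of dimensions;
- one big-endian 32-bit size per dimension;
- then the data.

`parse_idx` is a hypothetical name.

```python
import struct

# Supported IDX type codes: 0x08 = unsigned byte (the only type MNIST uses)
IDX_TYPES = {0x08: ("B", 1)}


def parse_idx(data: bytes):
    """Hypothetical minimal IDX parser: returns (shape, flat list of values)."""
    zero1, zero2, type_code, ndim = struct.unpack_from(">BBBB", data, 0)
    if zero1 != 0 or zero2 != 0:
        raise ValueError("not an IDX file: the first two magic bytes must be zero")
    if type_code not in IDX_TYPES:
        raise ValueError(f"unsupported IDX type code 0x{type_code:02x}")
    # One 4-byte big-endian unsigned integer per dimension follows the magic number
    shape = struct.unpack_from(f">{ndim}I", data, 4)
    offset = 4 + 4 * ndim
    count = 1
    for dim in shape:
        count *= dim
    fmt_char, item_size = IDX_TYPES[type_code]
    if len(data) - offset != count * item_size:
        raise ValueError("payload size does not match the header")
    values = list(struct.unpack_from(f">{count}{fmt_char}", data, offset))
    return tuple(shape), values


# A tiny fake "image file": 2 images of 2 x 3 pixels with values 0..11
fake = struct.pack(">BBBB", 0, 0, 0x08, 3) + struct.pack(">3I", 2, 2, 3) + bytes(range(12))
shape, values = parse_idx(fake)
print(shape, values)  # (2, 2, 3) [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```

For a real download, the bytes would come from `gzip.open(path, "rb").read()`. For the 47 MB training images, an array built with `numpy.frombuffer` is far more practical than a Python list.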

1. Read the dataset. On the first run, TensorFlow automatically downloads the following files to the given path:

| File | Contents |
|---|---|
| train-images-idx3-ubyte.gz | training set images (9912422 bytes) |
| train-labels-idx1-ubyte.gz | training set labels (28881 bytes) |
| t10k-images-idx3-ubyte.gz | test set images (1648877 bytes) |
| t10k-labels-idx1-ubyte.gz | test set labels (4542 bytes) |

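# TensorFlow 1.x API: the tensorflow.examples.tutorials package is not included in TensorFlow 2.x
from tensorflow.examples.tutorials.mnist import input_data

# one_hot=True turns each label into a length-10 one-hot vector
mnist = input_data.read_data_sets("../../datasets/MNIST_data/", one_hot=True)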

Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.

Extracting ../../datasets/MNIST_data/train-images-idx3-ubyte.gz

Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.

Extracting ../../datasets/MNIST_data/train-labels-idx1-ubyte.gz

Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.

Extracting ../../datasets/MNIST_data/t10k-images-idx3-ubyte.gz

Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.

Extracting ../../datasets/MNIST_data/t10k-labels-idx1-ubyte.gz
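The log above comes from a first run, where every file had to be downloaded. The "download only on the first run" behaviour boils down to checking for a cached copy before fetching. The minimal sketch below illustrates that idea. `maybe_fetch` is a hypothetical helper, and `fake_download` is a stand-in for a real network request. TensorFlow's actual download code is not shown here.

```python
import os
import tempfile


def maybe_fetch(directory: str, filename: str, fetch) -> str:
    """Hypothetical helper: call fetch(path) only if the file is not cached yet."""
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, filename)
    if not os.path.exists(path):
        fetch(path)  # e.g. a real download on the first run
    return path


calls = []


def fake_download(path: str) -> None:
    # Stand-in for a network download: record the call and write a placeholder file
    calls.append(path)
    with open(path, "wb") as f:
        f.write(b"placeholder")


with tempfile.TemporaryDirectory() as tmp:
    data_dir = os.path.join(tmp, "MNIST_data")
    first = maybe_fetch(data_dir, "train-labels-idx1-ubyte.gz", fake_download)
    second = maybe_fetch(data_dir, "train-labels-idx1-ubyte.gz", fake_download)
    print(len(calls))  # 1: the second call reuses the cached file
```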

2. The dataset is automatically split into three subsets: train, validation, and test. The following code prints the size of each subset.

print("Training data size: ", mnist.train.num_examples)

print("Validating data size: ", mnist.validation.num_examples)

print("Testing data size: ", mnist.test.num_examples)

Training data size: 55000

Validating data size: 5000

Testing data size: 10000
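The three sizes add up as \(55000 + 5000 = 60000\). In other words, the validation set is carved out of the original 60,000 training images, and the 10,000 test images are left untouched. In TensorFlow 1.x, `read_data_sets` exposes this split as a `validation_size` parameter (default 5000). The sketch assumes the first `validation_size` images are held out, which is how we understand the TensorFlow 1.x code to behave. `split_validation` is a hypothetical helper.

```python
def split_validation(items, validation_size=5000):
    """Hypothetical sketch: hold out the first `validation_size` training items."""
    if not 0 <= validation_size <= len(items):
        raise ValueError("validation_size must be between 0 and len(items)")
    return items[validation_size:], items[:validation_size]


original_train = list(range(60000))  # stand-ins for the 60,000 original training images
train, validation = split_validation(original_train)
print(len(train), len(validation))  # 55000 5000
```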

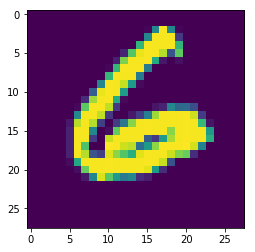

3. Inspect one example from the training set: the 1-D array built from its pixel matrix, and its digit label.

print("Example training data: ", mnist.train.images[0])

print("Example training data label: ", mnist.train.labels[0])

Example training data: [ 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.01176471 0.46274513 0.99215692 0.45882356 0.01176471

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.46274513 0.98823535 0.98823535 0.98823535

0.16862746 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.65882355 0.99215692 0.98823535 0.98823535

0.98823535 0.65882355 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.10196079 0.73333335 0.97254908 0.99215692 0.98823535

0.89019614 0.25882354 0.75294125 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.08627451 0.80392164 0.98823535 0.98823535 0.99215692

0.6156863 0.0627451 0. 0.04313726 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.01176471 0.66666669 0.99215692 0.99215692 0.99215692

0.41568631 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.66666669 0.98823535 0.98823535 0.98823535

0.41568631 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.45490199 0.99215692 0.98823535 0.91372555

0.5529412 0.02352941 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.29803923 0.97254908 0.99215692 0.98823535

0.5529412 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.1254902 0.85490203 0.98823535 0.99215692

0.6156863 0.02352941 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.01960784 0.74901962 0.99215692 0.99215692

0.90588242 0.16470589 0. 0. 0. 0.

0.05882353 0.09411766 0.46274513 0.33725491 0.34117648 0.2392157 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.47058827 0.98823535

0.98823535 0.90588242 0.16470589 0. 0. 0.06666667

0.18431373 0.43137258 0.8588236 0.98823535 0.98823535 0.98823535

0.99215692 0.92549026 0.17254902 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0.06666667 0.89411771 0.98823535 0.89019614 0.18823531 0.

0.17647059 0.39607847 0.81960791 0.98823535 0.99215692 0.98823535

0.98823535 0.98823535 0.98823535 0.99215692 0.98823535 0.67058825

0.0509804 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.09411766 0.98823535 0.98823535

0.4666667 0. 0.03529412 0.76078439 0.98823535 0.98823535

0.98823535 0.99215692 0.98823535 0.98823535 0.82352948 0.98823535

0.99215692 0.98823535 0.98823535 0.54509807 0. 0. 0.

0. 0. 0. 0. 0. 0.

0.09411766 0.98823535 0.98823535 0.17647059 0. 0.91372555

0.98823535 0.98823535 0.98823535 0.98823535 0.29411766 0.16862746

0.53725493 0.8705883 0.98823535 0.99215692 0.98823535 0.98823535

0.627451 0. 0. 0. 0. 0. 0.

0. 0. 0. 0.09411766 0.99215692 0.72156864

0. 0. 1. 0.99215692 0.92549026 0.90196085

0.36862746 0.54509807 0.83137262 0.99215692 0.99215692 0.99215692

1. 0.93725497 0.45098042 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0.09411766 0.98823535 0.89019614 0.26274511 0.18431373 0.95294124

0.82745105 0.72941178 0.63137257 0.95686281 0.99215692 0.98823535

0.98823535 0.92156869 0.80784321 0.76862752 0.12941177 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0.02745098 0.60392159 0.98823535 0.98823535

0.98823535 0.99215692 0.98823535 0.98823535 0.98823535 0.98823535

0.99215692 0.98823535 0.91372555 0.25098041 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.03529412

0.76862752 0.98823535 0.98823535 0.99215692 0.98823535 0.98823535

0.98823535 0.98823535 0.96078438 0.54509807 0.12941177 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0.06666667 0.45882356 0.94901967 0.74509805 0.53725493

0.41568631 0.45882356 0.1254902 0.08235294 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 0. ]

Example training data label: [ 0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]
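Because `one_hot=True` was passed to `read_data_sets`, each label is a length-10 vector with a single 1 at the index of the digit. The label printed above therefore encodes the digit 6. The sketch below shows the encoding and its inverse. `to_one_hot` and `from_one_hot` are hypothetical helpers. Decoding is an argmax, which is also the usual way to turn a classifier's output scores into a predicted digit.

```python
NUM_CLASSES = 10


def to_one_hot(digit: int, num_classes: int = NUM_CLASSES) -> list:
    """Encode a digit label as a one-hot vector of floats."""
    if not 0 <= digit < num_classes:
        raise ValueError(f"label must be in [0, {num_classes})")
    vector = [0.0] * num_classes
    vector[digit] = 1.0
    return vector


def from_one_hot(vector) -> int:
    """Recover the digit as the index of the largest entry (argmax)."""
    return max(range(len(vector)), key=lambda i: vector[i])


label = [0., 0., 0., 0., 0., 0., 1., 0., 0., 0.]  # the label printed above
print(from_one_hot(label))  # 6
print(to_one_hot(6) == label)  # True
```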

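The input to a neural network is a feature vector, so each image's 2-D pixel matrix is flattened into a 1-D array. This makes it easy for TensorFlow to feed the pixels to the network's input layer. Each element of the pixel matrix takes a value in \([0,1]\) that represents how dark the pixel is: \(0\) is the white background and \(1\) is the black foreground.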
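To make the flattening concrete, assume row-major order. That is NumPy's default for `reshape`, and the reshape step below relies on it. Under this assumption, pixel \((r, c)\) of a \(28 \times 28\) image lands at index \(28r + c\) of the 784-element vector.

The printed values are also consistent with raw 0 to 255 intensities divided by 255. For example, \(253/255 \approx 0.99215686\), which agrees with the printed 0.99215692 to within float32 precision. That scaling is our assumption about how the values were produced. `flatten`, `unflatten`, and `scale` are hypothetical helpers working on plain Python lists.

```python
ROWS, COLS = 28, 28


def flatten(matrix):
    """Row-major flattening: pixel (r, c) ends up at index r * COLS + c."""
    return [value for row in matrix for value in row]


def unflatten(vector, cols=COLS):
    """Inverse of flatten: cut the 1-D vector into rows of `cols` values."""
    if len(vector) % cols != 0:
        raise ValueError("vector length must be a multiple of cols")
    return [vector[i:i + cols] for i in range(0, len(vector), cols)]


def scale(raw_bytes):
    """Map raw 0-255 intensities to [0, 1] (0 = white background, 1 = black foreground)."""
    return [b / 255.0 for b in raw_bytes]


# Synthetic 28x28 image of byte intensities
image = [[(r * COLS + c) % 256 for c in range(COLS)] for r in range(ROWS)]
vector = scale(flatten(image))
print(len(vector))  # 784
print(round(253 / 255, 8))  # 0.99215686
```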

mnist.train.images[0].shape

(784,)

sqrt(784)

28.0

a = mnist.train.images[0].reshape(28, 28)  # restore the 28x28 pixel matrix from the 784-element vector

imshow(a)

<matplotlib.image.AxesImage at 0x2115388dba8>
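The reshape above recovers the \(28 \times 28\) matrix for `imshow`. As an illustration without matplotlib, the same row-major layout can be rendered as text. `render_ascii` is a hypothetical helper, exercised here only on a synthetic vector. Passing `mnist.train.images[0]` should also work, since a 1-D NumPy array supports the same `len`, slicing, and comparisons.

```python
def render_ascii(vector, cols=28, threshold=0.5):
    """Render a flattened grayscale image as text: '#' for dark (foreground) pixels."""
    rows = len(vector) // cols
    lines = []
    for r in range(rows):
        row = vector[r * cols:(r + 1) * cols]  # same row-major layout as reshape(28, 28)
        lines.append("".join("#" if v >= threshold else "." for v in row))
    return "\n".join(lines)


# Synthetic 28x28 "image": a vertical bar in columns 12-15
fake = [1.0 if 12 <= i % 28 <= 15 else 0.0 for i in range(784)]
print(render_ascii(fake))
```

In the notebook itself, `imshow(a, cmap="gray_r")` would draw 0 as white and 1 as black, matching the background/foreground convention described earlier. The default colormap does not.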

4. Use mnist.train.next_batch to implement stochastic gradient descent.

mnist.train.next_batch reads a small portion of all the training data to serve as one training batch.

batch_size = 100

xs, ys = mnist.train.next_batch(batch_size)  # draw batch_size examples from the training set

print("X shape:", xs.shape)

print("Y shape:", ys.shape)

X shape: (100, 784)

Y shape: (100, 10)
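The snippet above draws a single batch. The sketch below shows, under stated assumptions, how a stream of such batches drives mini-batch stochastic gradient descent.

`MiniBatcher` is a hypothetical, simplified stand-in for `mnist.train.next_batch`. It reshuffles the data at the start of every epoch and drops any leftover examples at the epoch boundary. TensorFlow's implementation may handle that boundary differently. The model is a toy one-variable linear regression on made-up data, not an MNIST classifier.

```python
import random


class MiniBatcher:
    """Hypothetical, simplified stand-in for mnist.train.next_batch."""

    def __init__(self, xs, ys, seed=0):
        if len(xs) != len(ys):
            raise ValueError("xs and ys must have the same length")
        self._xs, self._ys = list(xs), list(ys)
        self._rng = random.Random(seed)
        self._order = []  # shuffled indices for the current epoch
        self._pos = 0  # position of the next batch within self._order
        self.epochs_completed = 0

    def next_batch(self, batch_size):
        if not 0 < batch_size <= len(self._xs):
            raise ValueError("batch_size must be between 1 and the dataset size")
        if self._pos + batch_size > len(self._order):
            # Start a new epoch: reshuffle and drop any leftover examples of the old one
            if self._order:
                self.epochs_completed += 1
            self._order = list(range(len(self._xs)))
            self._rng.shuffle(self._order)
            self._pos = 0
        idx = self._order[self._pos:self._pos + batch_size]
        self._pos += batch_size
        return [self._xs[i] for i in idx], [self._ys[i] for i in idx]


# Toy regression problem (hypothetical data): y = 3x + 1 for x in [0, 1)
data_rng = random.Random(1)
xs = [data_rng.random() for _ in range(1000)]
ys = [3.0 * x + 1.0 for x in xs]

w, b, learning_rate = 0.0, 0.0, 0.5
batcher = MiniBatcher(xs, ys, seed=42)
for step in range(2000):
    bx, by = batcher.next_batch(100)
    # Gradient of the mean squared error, estimated on the mini-batch only
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(bx, by)) / len(bx)
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(bx, by)) / len(bx)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # close to the true parameters 3 and 1
```

Each update uses only 100 examples instead of the full dataset. This is what makes the method "stochastic": cheaper steps, at the cost of a noisier gradient estimate.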

浙公网安备 33010602011771号
