Homework day07

http://www.haha56.net/xiaohua/gushi/: scrape the text, then analyze it with a word-frequency count and a word cloud.

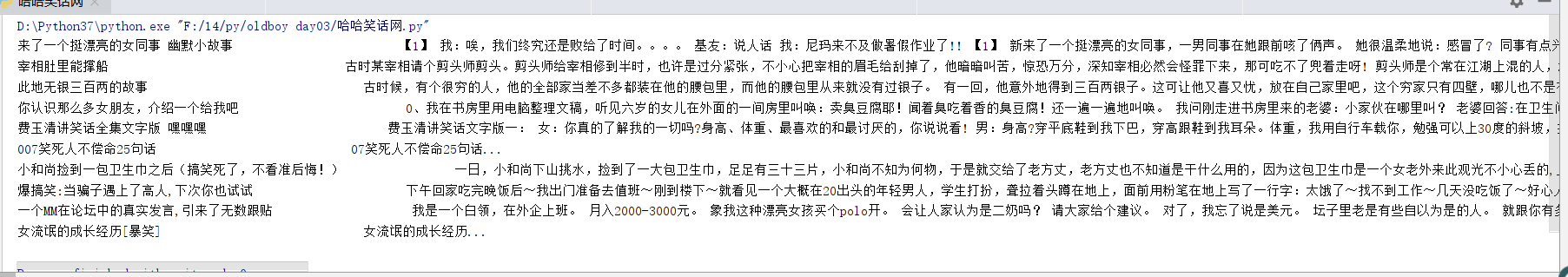

```python
# Scrape joke titles and text from haha56.net (哈哈笑话网)
import re

import requests

response = requests.get('http://www.haha56.net/xiaohua/gushi/', timeout=10)
response.raise_for_status()
response.encoding = 'gb2312'  # the page is GB-encoded; GBK (a superset) is safer if characters come out garbled
data = response.text

# re.S lets '.' match newlines, in case a preview spans several lines
content_res = re.findall('<dd class="preview">(.*?)</dd>', data, re.S)
title_res = re.findall('<dt><a href=".*?" target="_blank">(.*?)</a></dt>', data)

title_content_dic = {}
# zip pairs each title with its preview and stops at the shorter list instead of raising IndexError
for title, content in zip(title_res, content_res):
    title = re.sub(r'<[^>]+>', '', title)  # strip highlight tags such as <b> and <font color='#FF0000'>
    title = title.replace('"', "'")       # turn double quotes into single quotes
    title_content_dic[title] = content.strip()

for title, content in title_content_dic.items():
    print(f'{title:<40} {content}')
```
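The word-frequency and word-cloud steps read the scraped jokes from a local file, 哈哈笑话.txt, but the scraper only prints them. A minimal offline sketch of that missing link, assuming the same `<dt>` / `<dd class="preview">` markup: `parse_jokes` and `save_jokes` are hypothetical helper names, and the sample HTML is made up for illustration. Unlike the scraper, it also strips tags from the preview text.

```python
import re
from pathlib import Path

TITLE_RE = re.compile(r'<dt><a href=".*?" target="_blank">(.*?)</a></dt>')
CONTENT_RE = re.compile(r'<dd class="preview">(.*?)</dd>', re.S)  # previews may contain newlines
TAG_RE = re.compile(r'<[^>]+>')


def parse_jokes(html):
    """Hypothetical helper: map each cleaned title to its preview text."""
    titles = TITLE_RE.findall(html)
    contents = CONTENT_RE.findall(html)
    jokes = {}
    for title, content in zip(titles, contents):
        clean_title = TAG_RE.sub('', title).replace('"', "'")
        jokes[clean_title] = TAG_RE.sub('', content).strip()
    return jokes


def save_jokes(jokes, path):
    """Hypothetical helper: write one 'title content' line per joke, UTF-8 encoded."""
    lines = [f'{title} {content}' for title, content in jokes.items()]
    Path(path).write_text('\n'.join(lines), encoding='utf8')


# made-up sample markup in the same shape as the scraped page
sample = (
    '<dt><a href="/a/1.html" target="_blank"><b><font color=\'#FF0000\'>老师</font></b></a></dt>'
    '<dd class="preview">老师说：今天不考试。</dd>'
    '<dt><a href="/a/2.html" target="_blank">"妈妈"</a></dt>'
    '<dd class="preview">\n妈妈笑了。</dd>'
)
jokes = parse_jokes(sample)
print(jokes)  # {'老师': '老师说：今天不考试。', "'妈妈'": '妈妈笑了。'}
save_jokes(jokes, '哈哈笑话.txt')  # written to the current directory
```

The file lands in the current directory, so the `open()` paths in the later steps would need to point there.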

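```python
# Word-frequency count for the haha56.net jokes
import jieba

# 'with' closes the file automatically
with open(r'F:\14\py\oldboy day03\哈哈笑话.txt', 'r', encoding='utf8') as f:
    data = f.read()

data_jieba = jieba.lcut(data)  # segment the Chinese text into words

counts = {}
excludes = {"...", "一个", "有点"}  # filler words to leave out of the ranking

for word in data_jieba:
    if len(word) == 1:  # skip single characters and punctuation
        continue
    counts[word] = counts.get(word, 0) + 1

for word in excludes:
    counts.pop(word, None)  # pop with a default avoids KeyError when a word never occurred

items = sorted(counts.items(), key=lambda x: x[1], reverse=True)

for word, count in items[:10]:  # slicing also copes with fewer than 10 distinct words
    print("{0:<10}{1:>5}".format(word, count))
```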
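The counting loop is the classic dictionary tally; the standard library's `collections.Counter` with `most_common(n)` does the same job in fewer lines. A sketch that works on an already-segmented word list (a hand-written stand-in for `jieba.lcut` output, so it runs without jieba); `top_words` is a hypothetical name and the sample words are made up.

```python
from collections import Counter


def top_words(words, excludes=frozenset(), n=10):
    """Count words of two or more characters, skip excluded ones, return the n most common."""
    counts = Counter(w for w in words if len(w) > 1 and w not in excludes)
    # most_common returns at most n pairs; equal counts keep first-seen order
    return counts.most_common(n)


# stand-in for jieba.lcut(data): a list of already-segmented words
words = ['老师', '说', '今天', '不', '考试', '老师', '一个', '学生', '考试', '老师', '一个']
for word, count in top_words(words, excludes={'一个', '有点'}, n=3):
    print("{0:<10}{1:>5}".format(word, count))
```

Filtering the excluded words before counting gives the same ranking as deleting them afterwards, without any risk of a `KeyError`.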

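```python
# Word cloud for the haha56.net jokes
import jieba
import wordcloud
from imageio.v2 import imread  # imageio >= 2.16; on older versions use `from imageio import imread`

mask = imread(r"F:\14\py\oldboy day03\爱心.png")  # heart-shaped image used as the mask

with open(r'F:\14\py\oldboy day03\哈哈笑话.txt', 'r', encoding='utf8') as f:
    data = f.read()

# WordCloud does not segment Chinese, so join jieba's words with spaces first
text = ' '.join(jieba.lcut(data))

# a Chinese font is required; when a mask is given, width/height are ignored and the mask's shape is used
w = wordcloud.WordCloud(font_path=r'C:\Windows\Fonts\simkai.ttf', mask=mask, background_color="white")
w.generate(text)
w.to_file('outfile1.png')
```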
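WordCloud splits text with a regular expression, not a Chinese word segmenter, so unspaced Chinese passed straight to `generate` tends to come out as whole phrases rather than words. A quick standard-library check, assuming a word pattern like `\w[\w']+` (the exact default differs between wordcloud versions, so treat the pattern as an assumption); the sentence and its segmentation are hand-written for illustration.

```python
import re

# assumption: roughly the word pattern WordCloud applies when no regexp is given
WORD_PATTERN = r"\w[\w']+"

raw = "今天老师说不考试"
segmented = " ".join(["今天", "老师", "说", "不", "考试"])  # hand-written stand-in for jieba.lcut(raw)

print(re.findall(WORD_PATTERN, raw))        # ['今天老师说不考试']
print(re.findall(WORD_PATTERN, segmented))  # ['今天', '老师', '考试']
```

Joining `jieba.lcut(data)` with spaces before calling `generate` gives the cloud real words; with this pattern, single characters also drop out.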

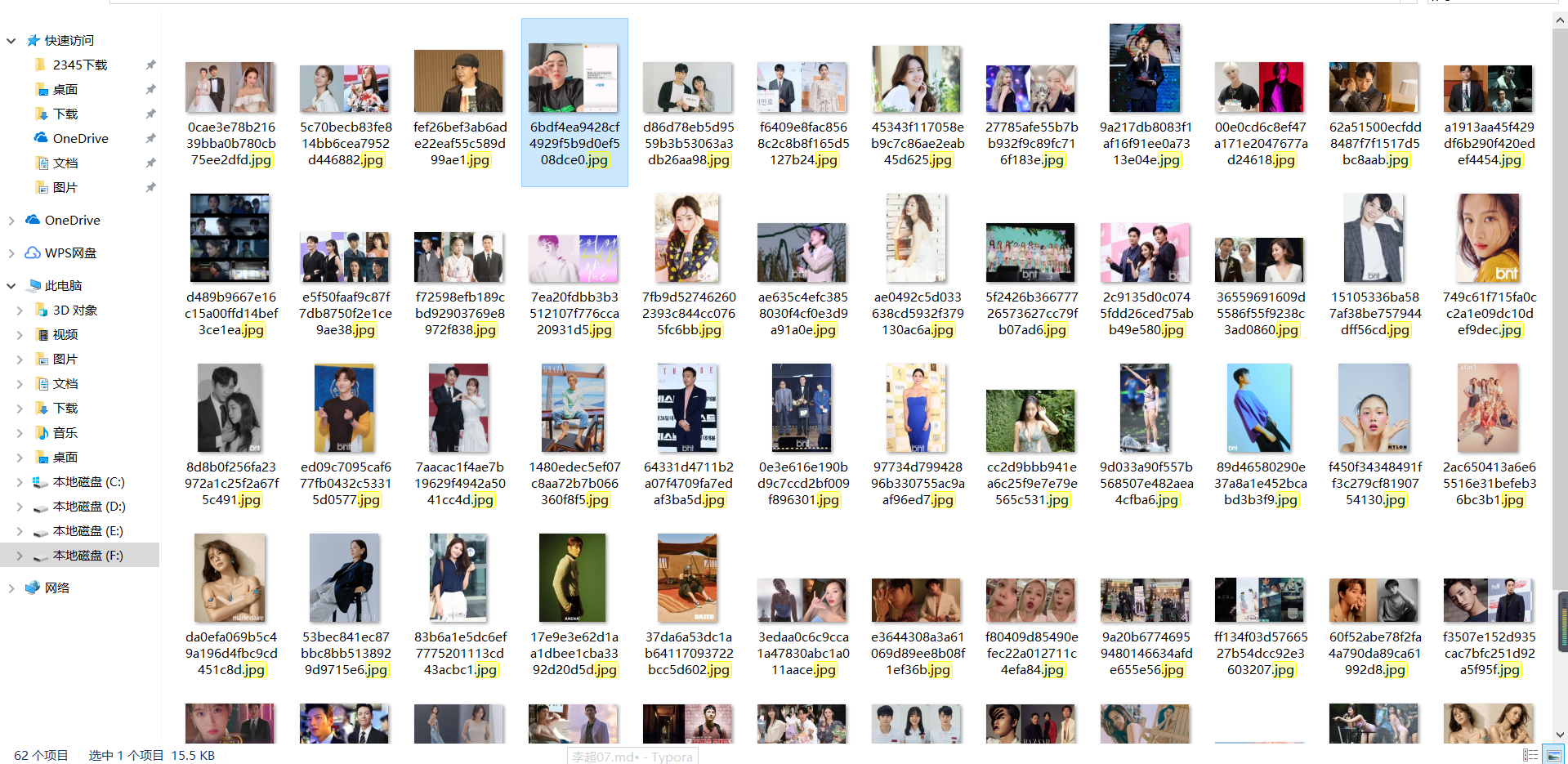

https://www.y3600.cc/: scrape the images.

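```python
# y3600.cc Korean-drama site (韩剧网): download the images on the home page
import os
import re
from urllib.parse import urljoin, urlparse

import requests

base_url = 'https://www.y3600.cc/'
response = requests.get(base_url, timeout=10)
response.raise_for_status()
data = response.text

img_url_res = re.findall('img src="(.*?)"', data)

for src in img_url_res:
    # urljoin handles protocol-relative (//...), root-relative and absolute src values
    img_url = urljoin(base_url, src)
    img_response = requests.get(img_url, timeout=10)
    if not img_response.ok:  # skip images that fail to download
        continue

    # take the file name from the URL path, ignoring any query string
    img_name = os.path.basename(urlparse(img_url).path) or 'image'
    if not os.path.splitext(img_name)[1]:
        img_name += '.jpg'  # add an extension only when the URL has none

    # 'with' closes the file, which also flushes the data to disk
    with open(img_name, 'wb') as f:
        f.write(img_response.content)
```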
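The download loop turns every `src` into a URL and a file name. Pages often repeat the same image, and different paths can share a file name, which silently overwrites earlier downloads. A minimal offline sketch using the standard library's `urllib.parse.urljoin`, assuming `src` values may be protocol-relative, root-relative or absolute; `image_targets` is a hypothetical helper and the sample HTML and hosts are made up.

```python
import os
import re
from urllib.parse import urljoin, urlparse

IMG_SRC_RE = re.compile(r'img src="(.*?)"')


def image_targets(html, base_url):
    """Hypothetical helper: map each distinct <img src> to (absolute URL, unique file name)."""
    targets = []
    used_names = set()
    # dict.fromkeys drops repeated src values while keeping page order
    for src in dict.fromkeys(IMG_SRC_RE.findall(html)):
        url = urljoin(base_url, src)  # resolves //host/x, /x and absolute URLs
        stem, ext = os.path.splitext(os.path.basename(urlparse(url).path))
        stem, ext = stem or 'image', ext or '.jpg'  # fall back when the path has no name or extension
        name, n = stem + ext, 1
        while name in used_names:  # a.jpg, a_1.jpg, a_2.jpg, ...
            name = f'{stem}_{n}{ext}'
            n += 1
        used_names.add(name)
        targets.append((url, name))
    return targets


sample = (
    '<img src="//img.example.com/pic/a.jpg">'
    '<img src="/static/a.jpg?v=2">'
    '<img src="//img.example.com/pic/a.jpg">'
    '<img src="https://cdn.example.com/c">'
)
for url, name in image_targets(sample, 'https://www.y3600.cc/'):
    print(url, name)
```

Each pair can then be fed to `requests.get(url)` and `open(name, 'wb')` in the loop.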

浙公网安备 33010602011771号