Attention Mechanisms - Attention Cues: Exercises

1. What can be the volitional cue when decoding a sequence token by token in machine translation? What are the nonvolitional cues and the sensory inputs?

To be completed.

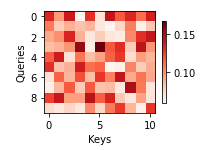

2. Randomly generate a 10 × 10 matrix and use the softmax operation to ensure each row is a valid probability distribution. Visualize the output attention weights.
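Written out, the row-wise softmax the exercise asks for normalizes each row $i$ of the score matrix over its columns. The symbols $S$ (random scores) and $A$ (attention weights) are introduced here only for illustration:

$$
A_{ij} = \frac{\exp(S_{ij})}{\sum_{k=1}^{10} \exp(S_{ik})}, \qquad \sum_{j=1}^{10} A_{ij} = 1 \quad \text{for every row } i .
$$

Because the sum in the denominator runs over the column index $k$ within a fixed row $i$, this corresponds to `softmax(..., dim=1)` on a 2-D tensor of shape (queries, keys).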

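```python
import torch
from d2l import torch as d2l  # show_heatmaps is saved in the d2l package

data = torch.rand(10, 10)  # 10x10 matrix, as the exercise asks
# Softmax along dim=1 so that each row (one query) sums to 1
data = torch.nn.functional.softmax(data, dim=1)
# show_heatmaps expects a 4-D tensor: (display rows, display cols, queries, keys)
d2l.show_heatmaps(data.reshape(1, 1, data.shape[0], data.shape[1]), xlabel='Keys', ylabel='Queries')
```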
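To see why the `dim` argument matters, here is a dependency-free sketch in plain Python rather than PyTorch. The helper names `softmax_rows` and `softmax_cols` are hypothetical; they mimic what softmax along `dim=1` and `dim=0` compute on a 10 × 10 score matrix. Only the row-wise version makes every row a probability distribution, which is what the exercise asks for; the column-wise version normalizes columns instead.

```python
import math
import random


def softmax_rows(matrix):
    """Softmax each row independently, like softmax(dim=1) on a 2-D tensor."""
    result = []
    for row in matrix:
        row_max = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(x - row_max) for x in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def softmax_cols(matrix):
    """Softmax each column independently, like softmax(dim=0) on a 2-D tensor."""
    transposed = [list(col) for col in zip(*matrix)]
    return [list(row) for row in zip(*softmax_rows(transposed))]


random.seed(0)
scores = [[random.random() for _ in range(10)] for _ in range(10)]

row_weights = softmax_rows(scores)
col_weights = softmax_cols(scores)

# dim=1: each row (one query) sums to 1 over the 10 keys.
print(all(abs(sum(row) - 1.0) < 1e-9 for row in row_weights))  # True
# dim=0: it is the columns that sum to 1 instead.
print(all(abs(sum(col) - 1.0) < 1e-9 for col in zip(*col_weights)))  # True
```

With uniform random scores in [0, 1), the row-wise weights all stay close to 0.1, so the resulting heatmap looks fairly flat; that is expected rather than a bug.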

浙公网安备 33010602011771号