Deploying a Highly Available Kubernetes 1.22.7 Cluster from Binaries

Deploying an HA k8s cluster

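I: Environment initialization

1. System plan

(To make verification easier, the nginx load balancers are deployed on the node machines here; adjust this to your own environment.)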

| Host | IP | Components |
|---|---|---|
| k8s-master01 | 192.168.74.130 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler |
| k8s-master02 | 192.168.74.131 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler |
| k8s-node1 | 192.168.74.132 | etcd, kube-proxy, docker, dns, calico, kubelet, nginx (used here for HA) |
| k8s-node2 | 192.168.74.133 | kube-proxy, docker, dns, calico, kubelet, keepalived, nginx (used here for HA) |
| k8s-node3 | 192.168.74.134 | kube-proxy, docker, dns, calico, kubelet, nginx (used here for HA) |
| VIP | 192.168.74.100 | Virtual IP used when configuring high availability below |

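1.1. Install ansible on k8s-master

(Using ansible for batch deployment is optional; decide based on your environment.)

Install the EPEL repository and ansible, and update the system:

[root@localhost ~]# yum -y update

[root@localhost ~]# yum -y install epel-release

[root@localhost ~]# yum -y install ansible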

1.2. Edit the ansible inventory file

[root@localhost ~]# vim /etc/ansible/hosts

[k8s-all]

192.168.74.130

192.168.74.131

192.168.74.132

192.168.74.133

192.168.74.134

[k8s-etcd]

192.168.74.130

192.168.74.131

192.168.74.132

[k8s-node]

192.168.74.132

192.168.74.133

192.168.74.134

[k8s-master]

192.168.74.130

192.168.74.131

1.3. Distribute the ansible host's SSH key to enable password-less login

[root@localhost ~]# ssh-keygen   (press Enter at every prompt)

[root@localhost ~]# ssh-copy-id root@192.168.74.130

[root@localhost ~]# ssh-copy-id root@192.168.74.131

[root@localhost ~]# ssh-copy-id root@192.168.74.132

[root@localhost ~]# ssh-copy-id root@192.168.74.133

[root@localhost ~]# ssh-copy-id root@192.168.74.134

1.4. Test that ansible works

[root@localhost ~]# ansible k8s-all -m ping

1.5. Edit /etc/hosts and add the following entries

[root@localhost ~]# vim /etc/hosts

192.168.74.130 k8s-master01

192.168.74.131 k8s-master02

192.168.74.132 k8s-node1

192.168.74.133 k8s-node2

192.168.74.134 k8s-node3

[root@localhost ~]# ansible k8s-all -m copy -a "src=/etc/hosts dest=/etc/hosts"   (copy it to every host)

1.6. Set the corresponding hostname on each server

[root@localhost ~]# ansible 192.168.74.130 -m shell -a 'echo "k8s-master01" > /etc/hostname'

[root@localhost ~]# ansible 192.168.74.131 -m shell -a 'echo "k8s-master02" > /etc/hostname'

[root@localhost ~]# ansible 192.168.74.132 -m shell -a 'echo "k8s-node1" > /etc/hostname'

[root@localhost ~]# ansible 192.168.74.133 -m shell -a 'echo "k8s-node2" > /etc/hostname'

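[root@localhost ~]# ansible 192.168.74.134 -m shell -a 'echo "k8s-node3" > /etc/hostname'

1.7. Disable firewalld and SELinux (setenforce 0 disables SELinux only until the next reboot; to disable it permanently, set it to disabled in its configuration file)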
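Because the same five IP/hostname pairs drive both the hosts file (1.5) and the hostnames (1.6), a small shell sketch can keep them in one place. The names HOST_PLAN, render_hosts, hostname_for and print_hostname_cmds are hypothetical. The printed commands use hostnamectl, which changes the hostname immediately instead of only rewriting /etc/hostname; this assumes a systemd-based system such as CentOS 7.

```shell
#!/usr/bin/env bash
# Host plan copied from the planning table (IP, hostname); adjust to your environment.
HOST_PLAN="192.168.74.130 k8s-master01
192.168.74.131 k8s-master02
192.168.74.132 k8s-node1
192.168.74.133 k8s-node2
192.168.74.134 k8s-node3"

# Print the lines to append to /etc/hosts.
render_hosts() {
  printf '%s\n' "$HOST_PLAN"
}

# Print the planned hostname for an IP (prints nothing if the IP is unknown).
hostname_for() {
  awk -v ip="$1" '$1 == ip { print $2 }' <<< "$HOST_PLAN"
}

# Print one ansible command per host instead of typing them by hand.
print_hostname_cmds() {
  local ip name
  while read -r ip name; do
    printf "ansible %s -m shell -a 'hostnamectl set-hostname %s'\n" "$ip" "$name"
  done <<< "$HOST_PLAN"
}
```

For example, `render_hosts >> /etc/hosts` on the ansible host, followed by `print_hostname_cmds | bash`, would cover both steps.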

[root@localhost ~]# ansible k8s-all -m shell -a 'systemctl stop firewalld'

[root@localhost ~]# ansible k8s-all -m shell -a 'systemctl disable firewalld'

[root@localhost ~]# ansible k8s-all -m shell -a 'setenforce 0'   (temporary)

[root@localhost ~]# ansible k8s-all -m shell -a "sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config"   (permanent)

1.8. Configure kernel parameters and distribute them to every server

[root@localhost ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Distribute the kernel parameter file and apply it:

[root@localhost ~]# ansible k8s-all -m copy -a "src=/etc/sysctl.d/k8s.conf dest=/etc/sysctl.d/k8s.conf "

[root@localhost ~]# ansible k8s-all -m shell -a 'modprobe br_netfilter'

[root@localhost ~]# ansible k8s-all -m shell -a 'sysctl -p /etc/sysctl.d/k8s.conf'   (load the settings so they take effect)

1.9. Synchronize time (if you have an internal NTP server, use it instead)
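Before running sysctl -p it can help to confirm that the drop-in file really sets all three keys. A minimal sketch, assuming the file layout shown in 1.8; check_k8s_sysctl is a hypothetical helper name. It strips whitespace from each line and requires an exact `key=1`, so commented-out lines do not count.

```shell
#!/usr/bin/env bash
# check_k8s_sysctl FILE: return 0 if FILE sets all three keys to 1, else 1.
check_k8s_sysctl() {
  local file="$1" key rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables; do
    # Remove blanks/tabs/CRs, then look for a line that is exactly "key=1".
    if ! awk -F= -v k="$key" '{ gsub(/[ \t\r]/, "") } $1 == k && $2 == "1" { found = 1 } END { exit !found }' "$file"; then
      echo "missing or not set to 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

On a node this would be run as `check_k8s_sysctl /etc/sysctl.d/k8s.conf`. It only checks the file; `sysctl -n net.ipv4.ip_forward` shows the value the kernel is actually using.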

[root@localhost ~]# ansible k8s-all -m yum -a "name=ntpdate state=latest"

[root@localhost ~]# ansible k8s-all -m cron -a "name='k8s cluster crontab' minute=*/30 hour=* day=* month=* weekday=* job='ntpdate time7.aliyun.com >/dev/null 2>&1'"

[root@localhost ~]# ansible k8s-all -m shell -a "ntpdate time7.aliyun.com"

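[root@localhost ~]# ansible k8s-all -m shell -a "date"   (check that the time is now correct)

1.10. Before creating the cluster, create the following directories for certificates and configuration files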

[root@localhost ~]# ansible k8s-all -m file -a 'path=/etc/kubernetes/ssl state=directory'

[root@localhost ~]# mkdir /opt/k8s/{certs,cfg,unit} -p   (created on the ansible host)

2. Upgrade the Linux kernel to 4.0 or later

(Decide whether you need this upgrade based on your environment.)
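One way to decide whether the upgrade is needed is to compare the running kernel (`uname -r`) with 4.0. This sketch assumes GNU coreutils, since it relies on `sort -V` (version sort); version_ge and needs_kernel_upgrade are hypothetical names.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed if version A >= version B (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# needs_kernel_upgrade [RELEASE]: succeed if the kernel is older than 4.0.
# Defaults to the running kernel; e.g. 3.10.0-1160.el7.x86_64 -> 3.10.0
needs_kernel_upgrade() {
  local current="${1:-$(uname -r)}"
  current="${current%%-*}"     # keep only the numeric part before the first "-"
  ! version_ge "$current" "4.0"
}
```

Usage: `needs_kernel_upgrade && echo "kernel upgrade recommended"`.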

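#2.1: Import the ELRepo public key

[root@localhost ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

#2.2: Install ELRepo

[root@localhost ~]# yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

#2.3: Install the kernel-lt (long-term support) kernel

[root@localhost ~]# yum -y --disablerepo='*' --enablerepo=elrepo-kernel install kernel-lt

#2.4: List the GRUB boot entries

[root@localhost ~]# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

#2.5: Set the default boot entry (0 is the first entry listed above, normally the newly installed kernel), then reboot the server

[root@localhost ~]# grub2-set-default 0

[root@localhost ~]# reboot

II: Preparing the cluster certificates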

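1. About the cluster certificates

Since Kubernetes 1.8, the cluster components encrypt their communication with TLS certificates, and every cluster needs its own independent CA hierarchy. Here we use cfssl, CloudFlare's widely used PKI toolkit, to generate the Certificate Authority (CA) certificate and key. The CA certificate is self-signed and is used to sign all the other TLS certificates created later.

The components use the following certificates: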

- etcd: ca.pem, etcd-key.pem, etcd.pem (the same certificate pair is used both for serving clients and for peer communication between etcd members);

- kube-apiserver: ca.pem, ca-key.pem, kube-apiserver-key.pem, kube-apiserver.pem;

- kubelet: ca.pem, ca-key.pem;

- kube-proxy: ca.pem, kube-proxy-key.pem, kube-proxy.pem;

- kubectl: ca.pem, admin-key.pem, admin.pem;

- kube-controller-manager: ca-key.pem, ca.pem, kube-controller-manager.pem, kube-controller-manager-key.pem;

- kube-scheduler: ca-key.pem, ca.pem, kube-scheduler-key.pem, kube-scheduler.pem

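2. Generating the certificates

2.1. Install cfssl

Certificates can be generated on any node; here they are generated on k8s-master01. They only need to be created once: when you add a node to the cluster later, just copy the certificates in /etc/kubernetes/ssl to the new node.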

[root@k8s-master01 ~]# mkdir -p k8s/cfssl

[root@k8s-master01 ~]# cd k8s/cfssl/

[root@k8s-master01 cfssl]# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# chmod +x cfssl_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# cp cfssl_1.6.1_linux_amd64 /usr/local/bin/cfssl

[root@k8s-master01 cfssl]# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# chmod +x cfssljson_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# cp cfssljson_1.6.1_linux_amd64 /usr/local/bin/cfssljson

[root@k8s-master01 cfssl]# wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# chmod +x cfssl-certinfo_1.6.1_linux_amd64

[root@k8s-master01 cfssl]# cp cfssl-certinfo_1.6.1_linux_amd64 /usr/local/bin/cfssl-certinfo

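2.2. Create the CA root certificate

Maintaining several CAs is too cumbersome, so this single CA signs the certificates of all the other cluster components.

The configuration file below holds the signing settings used later when signing the etcd, Kubernetes and other certificates.

[root@k8s-master01 cfssl]# mkdir -pv /opt/k8s/certs

[root@k8s-master01 cfssl]# cd /opt/k8s/certs

Write the CA configuration JSON file:

[root@k8s-master01 certs]# vim /opt/k8s/certs/ca-config.json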

{

"signing": {

"default": {

"expiry": "438000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "438000h"

}

}

}

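}

- ca-config.json: can define multiple profiles, each with its own expiry, usages and other parameters; a specific profile is chosen later when signing a certificate;

- signing: the certificate can be used to sign other certificates (the generated ca.pem has CA=TRUE);

- server auth: a client can use this CA to verify the certificate presented by a server;

- client auth: a server can use this CA to verify the certificate presented by a client;

- expiry: certificate lifetime. 438000h is 50 years (438000 / 8760 hours per year); if security matters to you, use a shorter value, for example 87600h for 10 years.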
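The cfssl expiry values used here are plain hour counts, so converting them to years is a single division. A small sketch with hypothetical helper names; it assumes 365-day years (8760 hours, leap days ignored) and only handles the plain `Nh` form used in this guide.

```shell
#!/usr/bin/env bash
# hours_to_years "438000h": whole 365-day years contained in an hour value.
hours_to_years() {
  local h="${1%h}"            # strip the trailing "h"
  echo $(( h / 8760 ))         # 24 * 365 = 8760 hours per year
}

# years_to_expiry N: hour string for N years, for ca-config.json / ca-csr.json.
years_to_expiry() {
  echo "$(( $1 * 8760 ))h"
}
```

`hours_to_years 438000h` gives 50 and `years_to_expiry 10` gives 87600h.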

Create the CA certificate signing request template:

[root@k8s-master01 ~]# vim /opt/k8s/certs/ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "438000h"

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

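}

- Generate the CA certificate, private key and certificate signing request (CSR)

This command generates the files needed to run a CA: ca-key.pem (private key) and ca.pem (certificate). It also produces ca.csr (certificate signing request), which can be used for cross-signing or re-signing.

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -initca /opt/k8s/certs/ca-csr.json | cfssljson -bare ca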

2020/07/12 23:40:00 [INFO] generating a new CA key and certificate from CSR

2020/07/12 23:40:00 [INFO] generate received request

2020/07/12 23:40:00 [INFO] received CSR

2020/07/12 23:40:00 [INFO] generating key: rsa-2048

2020/07/12 23:40:00 [INFO] encoded CSR

2020/07/12 23:40:00 [INFO] signed certificate with serial number 354020342575279199560368628329657160760292915284

2.3. Distribute the certificates

[root@k8s-master01 certs]# ansible k8s-all -m copy -a 'src=/opt/k8s/certs/ca.csr dest=/etc/kubernetes/ssl/'

[root@k8s-master01 certs]# ansible k8s-all -m copy -a 'src=/opt/k8s/certs/ca-key.pem dest=/etc/kubernetes/ssl/'

[root@k8s-master01 certs]# ansible k8s-all -m copy -a 'src=/opt/k8s/certs/ca.pem dest=/etc/kubernetes/ssl/'

III: Deploying the etcd cluster

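1. Introduction

etcd is the most important component of a k8s cluster: it stores all of the cluster's state, and if etcd goes down, the cluster goes down with it. For high availability, etcd is deployed here on three nodes (k8s-master01, k8s-master02 and k8s-node1). etcd uses the Raft algorithm to elect a leader; because Raft needs votes from a majority of members to make decisions, an etcd cluster should have an odd number of members, typically 3, 5 or 7.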
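The odd-number recommendation follows from Raft's majority rule: a cluster of n voting members needs floor(n/2)+1 votes, so it tolerates floor((n-1)/2) failed members. A shell sketch of that arithmetic (function names are illustrative):

```shell
#!/usr/bin/env bash
# Votes needed for a Raft decision in a cluster of $1 voting members.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
# Number of members that can fail while a quorum still remains.
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 4 5 7; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

With 4 members the quorum is 3 and still only one failure is tolerated, the same as with 3 members, which is why an even member count adds cost without adding fault tolerance.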

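Official releases: https://github.com/coreos/etcd/releases

2. Installing the etcd cluster

2.1. Download the etcd binaries

etcd is distributed as prebuilt binaries: extract the archive and copy them to the target directory.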

[root@k8s-master01 ~]# cd k8s/

[root@k8s-master01 k8s]#wget https://mirrors.huaweicloud.com/etcd/v3.4.10/etcd-v3.4.10-linux-amd64.tar.gz

[root@k8s-master01 k8s]# tar -xf etcd-v3.4.10-linux-amd64.tar.gz

[root@k8s-master01 k8s]# cd etcd-v3.4.10-linux-amd64/   ## contains the two binaries etcd and etcdctl (etcdctl is the etcd command-line client)

2.2. Copy the etcd binaries to the etcd nodes

[root@k8s-master01 ~]# ansible k8s-etcd -m copy -a 'src=/root/k8s/etcd-v3.4.10-linux-amd64/etcd dest=/usr/local/bin/ mode=0755'

[root@k8s-master01 ~]# ansible k8s-etcd -m copy -a 'src=/root/k8s/etcd-v3.4.10-linux-amd64/etcdctl dest=/usr/local/bin/ mode=0755'

Note: if you are not using ansible, copy the two files with scp to /usr/local/bin/ on each of the three etcd nodes.
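For readers not using ansible, the scp route from the note might look like the sketch below. It assumes root SSH access to the three etcd nodes (set up in 1.3) and the directory extracted in 2.1; ETCD_NODES, ETCD_SRC and push_etcd_binaries are hypothetical names.

```shell
#!/usr/bin/env bash
# etcd members, matching the [k8s-etcd] inventory group.
ETCD_NODES=(192.168.74.130 192.168.74.131 192.168.74.132)
# Directory created by extracting etcd-v3.4.10-linux-amd64.tar.gz in 2.1.
ETCD_SRC=/root/k8s/etcd-v3.4.10-linux-amd64

push_etcd_binaries() {
  local node
  for node in "${ETCD_NODES[@]}"; do
    # -p preserves the files' modes (executable bit) and modification times.
    scp -p "$ETCD_SRC/etcd" "$ETCD_SRC/etcdctl" "root@${node}:/usr/local/bin/" || return 1
  done
}
```

Running `push_etcd_binaries` stops at the first node that fails, so a partial copy is easy to spot.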

2.3. Create the etcd certificate signing request template

[root@k8s-master01 ~]# vim /opt/k8s/certs/etcd-csr.json   ## you can reserve a few extra IPs in hosts in case you expand later

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.74.130",

"192.168.74.131",

"192.168.74.132"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

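}

Note: hosts contains the IP of every etcd node plus the local 127.0.0.1 address, because the etcd certificate must include all node IPs. In production, reserve a few extra IPs in this list so that adding nodes, or migrating after a failure, does not require regenerating the certificate.

2.4. Generate the certificate and private key

Pay attention to the certificate paths used in the command.

[root@k8s-master01 etcd-v3.4.10-linux-amd64]# cd /opt/k8s/certs/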

[root@k8s-master01 certs]# cfssl gencert -ca=/opt/k8s/certs/ca.pem -ca-key=/opt/k8s/certs/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2020/07/12 23:57:10 [INFO] generate received request

2020/07/12 23:57:10 [INFO] received CSR

2020/07/12 23:57:10 [INFO] generating key: rsa-2048

2020/07/12 23:57:11 [INFO] encoded CSR

2020/07/12 23:57:11 [INFO] signed certificate with serial number 117864690592567978439940422940262623097240517922

2020/07/12 23:57:11 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").
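The same `cfssl gencert ... | cfssljson -bare NAME` pattern is repeated for etcd, admin, kube-apiserver and kube-controller-manager. A hedged sketch of a wrapper, assuming the CA files, ca-config.json and each `NAME-csr.json` template live in /opt/k8s/certs as in this guide (later steps point -ca at /etc/kubernetes/ssl, which holds copies of the same CA files). sign_cert is a hypothetical name.

```shell
#!/usr/bin/env bash
# Directory holding ca.pem, ca-key.pem, ca-config.json and the *-csr.json templates.
CERT_DIR=/opt/k8s/certs

# sign_cert NAME: sign NAME-csr.json with the cluster CA using the "kubernetes"
# profile, writing NAME.pem, NAME-key.pem and NAME.csr into CERT_DIR.
sign_cert() {
  local name="$1"
  (
    set -o pipefail              # fail if cfssl fails, not only if cfssljson does
    cd "$CERT_DIR" || exit 1
    cfssl gencert \
      -ca="$CERT_DIR/ca.pem" \
      -ca-key="$CERT_DIR/ca-key.pem" \
      -config="$CERT_DIR/ca-config.json" \
      -profile=kubernetes \
      "${name}-csr.json" | cfssljson -bare "$name"
  )
}
```

With this in place, `sign_cert etcd` reproduces the command above, and `sign_cert admin` or `sign_cert kube-apiserver` cover the later certificates.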

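Check the certificates

etcd.csr is an intermediate file used during signing. If you want a third-party CA to sign the certificate instead of signing it yourself, submit only etcd.csr to that CA.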

[root@k8s-master01 certs]# ll etcd*

-rw-r--r--. 1 root root 1066 Apr 22 17:17 etcd.csr

-rw-r--r--. 1 root root 293 Apr 22 17:10 etcd-csr.json

-rw-------. 1 root root 1679 Apr 22 17:17 etcd-key.pem

-rw-r--r--. 1 root root 1444 Apr 22 17:17 etcd.pem

2.5. Distribute the certificates

Copy the generated etcd certificates to the certificate directory on all etcd nodes and master nodes.

Normally only three files are needed: ca.pem (already distributed), etcd-key.pem and etcd.pem.

ansible k8s-etcd -m copy -a 'src=/opt/k8s/certs/etcd.pem dest=/etc/kubernetes/ssl/'

ansible k8s-etcd -m copy -a 'src=/opt/k8s/certs/etcd-key.pem dest=/etc/kubernetes/ssl/'

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/etcd.pem dest=/etc/kubernetes/ssl/'   # the master nodes also need the etcd certificate, because kube-apiserver uses it to connect to etcd

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/etcd-key.pem dest=/etc/kubernetes/ssl/'

2.6. Create the etcd user and data directory

For security, etcd runs under a dedicated user here.

## Create the etcd user and group

[root@k8s-master01 ~]# ansible k8s-etcd -m group -a 'name=etcd'

[root@k8s-master01 ~]# ansible k8s-etcd -m user -a 'name=etcd group=etcd comment="etcd user" shell=/sbin/nologin home=/var/lib/etcd createhome=no'

## Create the etcd data directory and set its ownership

[root@k8s-master01 ~]# ansible k8s-etcd -m file -a 'path=/var/lib/etcd state=directory owner=etcd group=etcd'

2.7. Write the etcd configuration file

etcd.conf references the certificate files, so the etcd user needs read access to them; otherwise etcd reports that it cannot load the certificates. Mode 644 is sufficient.

[root@k8s-master01 certs]# mkdir -pv /opt/k8s/cfg

[root@k8s-master01 certs]# vim /opt/k8s/cfg/etcd.conf

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.74.130:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.74.130:2379,https://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.74.130:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.74.130:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.74.130:2380,etcd02=https://192.168.74.131:2380,etcd03=https://192.168.74.132:2380"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

Parameter notes:

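- ETCD_NAME: member name of this etcd node; it must be unique within the cluster. The hostname or machine-id can be used.

- ETCD_LISTEN_PEER_URLS: address used to communicate with the other members; it differs per node and must be an IP (domain names do not work).

- ETCD_LISTEN_CLIENT_URLS: address on which client requests are served, usually the local node; domain names do not work.

- ETCD_INITIAL_ADVERTISE_PEER_URLS: this member's peer address, advertised to the other members of the cluster.

- ETCD_INITIAL_CLUSTER: all members of the cluster, as comma-separated ETCD_NAME=ETCD_INITIAL_ADVERTISE_PEER_URLS pairs.

- ETCD_ADVERTISE_CLIENT_URLS: this member's client URLs, advertised to the rest of the cluster; domain names are allowed.

The following three parameters are optional and are not set in the file above:

- ETCD_AUTO_COMPACTION_RETENTION: auto-compaction retention for the mvcc key-value store, in hours; 0 disables auto-compaction.

- ETCD_QUOTA_BACKEND_BYTES: size limit of the etcd backend database; the default is 2 GB and 8 GB is recommended.

- ETCD_MAX_REQUEST_BYTES: maximum size of a client request in bytes; the default is 1.5 MiB. 10 MB is often recommended; the author uses 5 MB. Set it according to your workload.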
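ETCD_INITIAL_CLUSTER must be identical on every member and pair each member name with its peer URL. A small sketch that builds the string from the member IPs, assuming the etcd01, etcd02, ... naming and the https peer URLs on port 2380 used above; build_initial_cluster is a hypothetical helper.

```shell
#!/usr/bin/env bash
# build_initial_cluster IP...: print "etcd01=https://IP1:2380,etcd02=https://IP2:2380,..."
build_initial_cluster() {
  local i=1 ip out=""
  for ip in "$@"; do
    # ${out:+,} inserts a comma only when out already has an entry.
    out+="${out:+,}$(printf 'etcd%02d' "$i")=https://${ip}:2380"
    i=$((i + 1))
  done
  echo "$out"
}
```

For this cluster: `echo "ETCD_INITIAL_CLUSTER=\"$(build_initial_cluster 192.168.74.130 192.168.74.131 192.168.74.132)\""`.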

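Because this is a three-member etcd cluster, copy etcd.conf to the other two nodes and change the per-node values (ETCD_NAME and the IPs in the LISTEN/ADVERTISE URLs) to match each host.

Distribute etcd.conf. If you are not using ansible, copy the file to the same location on all three machines with scp, then edit the IPs, ETCD_NAME and other per-node parameters on each machine.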

[root@k8s-master01 certs]# cd /opt/k8s/cfg/

[root@k8s-master01 cfg]# ansible k8s-etcd -m shell -a 'mkdir -p /etc/kubernetes/config'

[root@k8s-master01 cfg]# ansible k8s-etcd -m copy -a 'src=/opt/k8s/cfg/etcd.conf dest=/etc/kubernetes/config/etcd.conf'   ## then log in to each host and change the IPs to that host's own IP

Note: on each node, set the IPs and ETCD_NAME in etcd.conf to that node's own values.
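Instead of editing each copy by hand, the per-node file can be rendered from the etcd01 version before it is copied. This sketch assumes the template was written for etcd01 at 192.168.74.130, as above, and GNU sed (`-E`); it rewrites ETCD_NAME and only the four LISTEN/ADVERTISE URL lines, so the shared ETCD_INITIAL_CLUSTER line keeps all peers. render_etcd_conf is a hypothetical name.

```shell
#!/usr/bin/env bash
# render_etcd_conf TEMPLATE NAME IP: print TEMPLATE (written for etcd01 on
# 192.168.74.130) adapted for another member; the template is not modified.
render_etcd_conf() {
  local template="$1" name="$2" ip="$3"
  sed -E \
    -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
    -e "/^ETCD_(LISTEN_PEER|LISTEN_CLIENT|INITIAL_ADVERTISE_PEER|ADVERTISE_CLIENT)_URLS=/ s/192\.168\.74\.130/${ip}/g" \
    "$template"
}
```

For example, `render_etcd_conf /opt/k8s/cfg/etcd.conf etcd02 192.168.74.131 > /tmp/etcd02.conf`, then copy /tmp/etcd02.conf to /etc/kubernetes/config/etcd.conf on 192.168.74.131.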

Edit the etcd.service unit file

[root@k8s-master01 cfg]# mkdir -pv /opt/k8s/unit

[root@k8s-master01 cfg]# vim /opt/k8s/unit/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/etc/kubernetes/config/etcd.conf

ExecStart=/usr/local/bin/etcd \

--initial-cluster-state=new \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \

--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--enable-v2

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

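Distribute the etcd unit file, then enable and start etcd:

[root@k8s-master01 ~]# ansible k8s-etcd -m copy -a 'src=/opt/k8s/unit/etcd.service dest=/usr/lib/systemd/system/etcd.service'

[root@k8s-master01 ~]# ansible k8s-etcd -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-etcd -m shell -a 'systemctl enable etcd'

[root@k8s-master01 ~]# ansible k8s-etcd -m shell -a 'systemctl start etcd'

Note!

All etcd members must be started at about the same time, so run the start command on the three machines simultaneously: a member that is started alone blocks until every other member of the cluster is up. Because ansible runs the command on all hosts in parallel, this was not a problem here.

2.8. Verify the cluster

With etcd v3 (the API that etcdctl 3.4 uses by default), pass the certificate files when querying the cluster status:

ETCDCTL_API=3 etcdctl --endpoints=https://192.168.74.130:2379,https://192.168.74.131:2379,https://192.168.74.132:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem --write-out=table endpoint status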

+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| http://192.168.74.130:2379 | ab2bb1deacf7fe9 | 3.4.10 | 24 MB | false | false | 6 | 1262481 | 1262481 | |

| http://192.168.74.131:2379 | 9bd94adc2b28d4b7 | 3.4.10 | 24 MB | false | false | 6 | 1262481 | 1262481 | |

| http://192.168.74.132:2379 | 28ce172e5aefd54b | 3.4.10 | 24 MB | true | false | 6 | 1262481 | 1262481 | |

+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

## All three members respond, and IS LEADER=true shows which node is the current leader

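IV: Deploying the kubectl command-line tool

1. Introduction

Summary: as Kubernetes evolves, clusters increasingly rely on TLS plus RBAC for security, so every component in this deployment uses certificates, and RBAC authorization is enabled.

Master node components: kube-apiserver, etcd, kube-controller-manager, kube-scheduler, kubectl

Node components: kubelet, kube-proxy, docker, coredns, calico

Deploy the master components

2. Distribute the Kubernetes component binaries

2.1. Download the Kubernetes server binary package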

[root@k8s-master01 ~]# cd /root/k8s/

[root@k8s-master01 k8s]# wget https://storage.googleapis.com/kubernetes-release/release/v1.22.7/kubernetes-server-linux-amd64.tar.gz   (copying the URL into a browser may download faster)

## Copy the master binaries

[root@k8s-master01 k8s]# tar -xf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master01 k8s]# cp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} /usr/local/bin/

[root@k8s-master01 k8s]# scp kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 192.168.74.130:/usr/local/bin/

[root@k8s-master01 k8s]# scp kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 192.168.74.131:/usr/local/bin/

## Copy the node binaries

[root@k8s-master01 k8s]# scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.74.132:/usr/local/bin/

[root@k8s-master01 k8s]# scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.74.133:/usr/local/bin/

[root@k8s-master01 k8s]# scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.74.134:/usr/local/bin/

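2.2. Create the admin certificate

kubectl is used for day-to-day management of the cluster. To manage the cluster it has to communicate with the Kubernetes components, so it also needs a certificate.

kubectl is deployed on the two master nodes.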

[root@k8s-master01 ~]# vim /opt/k8s/certs/admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:masters",

"OU": "System"

}

]

}

2.3. Generate the admin certificate and private key

(The O field system:masters gives kubectl admin privileges.)

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2019/04/23 14:56:49 [INFO] generate received request

2019/04/23 14:56:49 [INFO] received CSR

2019/04/23 14:56:49 [INFO] generating key: rsa-2048

2019/04/23 14:56:49 [INFO] encoded CSR

2019/04/23 14:56:49 [INFO] signed certificate with serial number 506524128693715675957824591128854950490977162654

2019/04/23 14:56:49 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

- Check the certificates

[root@k8s-master01 certs]# ll admin*

-rw-r--r-- 1 root root 1013 Apr 23 14:56 admin.csr

-rw-r--r-- 1 root root 231 Apr 23 14:54 admin-csr.json

-rw------- 1 root root 1679 Apr 23 14:56 admin-key.pem

-rw-r--r-- 1 root root 1407 Apr 23 14:56 admin.pem

2.4. Distribute the certificates

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin-key.pem dest=/etc/kubernetes/ssl/'

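ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin.pem dest=/etc/kubernetes/ssl/'

- Generate the kubeconfig file

The following steps generate the config file under ~/.kube in the home directory; kubectl then uses this file to talk to the API server. To operate the cluster with kubectl from another node, copy this file to that node as well.

Set the cluster parameters

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.74.100:8888   (without --kubeconfig, the config file is written to ~/.kube/config)

Note: the server address here is the virtual IP (VIP). With a single master, use the kube-apiserver address instead, or the local API at https://127.0.0.1:8888.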

Cluster "kubernetes" set.

# Set the client authentication parameters

[root@k8s-master01 ~]# kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --embed-certs=true --client-key=/etc/kubernetes/ssl/admin-key.pem

User "admin" set.

# Set the context parameters

[root@k8s-master01 ~]# kubectl config set-context admin@kubernetes --cluster=kubernetes --user=admin

Context "admin@kubernetes" created.

# Set the default context

[root@k8s-master01 ~]# kubectl config use-context admin@kubernetes

Switched to context "admin@kubernetes".
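The four `kubectl config` steps above are repeated almost verbatim for kube-controller-manager later, with `--kubeconfig` pointing at a separate file. A hedged sketch of a wrapper; gen_kubeconfig and its argument order are hypothetical, and it uses only the `kubectl config` subcommands and flags already shown in this guide. The cluster name and CA path are the ones used here.

```shell
#!/usr/bin/env bash
# gen_kubeconfig SERVER USER CERT KEY OUTFILE
# Write cluster, credentials and context for USER into OUTFILE and select it.
gen_kubeconfig() {
  local server="$1" user="$2" cert="$3" key="$4" out="$5"
  kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true \
    --server="$server" --kubeconfig="$out" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" --client-key="$key" --embed-certs=true \
    --kubeconfig="$out" &&
  kubectl config set-context "${user}@kubernetes" \
    --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
  kubectl config use-context "${user}@kubernetes" --kubeconfig="$out"
}
```

The admin steps above correspond to `gen_kubeconfig https://192.168.74.100:8888 admin /etc/kubernetes/ssl/admin.pem /etc/kubernetes/ssl/admin-key.pem ~/.kube/config`. Each step runs only if the previous one succeeded.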

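V: Deploying kube-apiserver

1. Introduction:

1) kube-apiserver is the data bus and data hub of the whole k8s cluster; it exposes HTTP REST interfaces to create, read, update, delete and watch cluster resources.

2) kube-apiserver is stateless. Although clients such as kubelet could list several apiservers with the "--api-servers" startup flag, only the first one took effect, so this does not provide high availability. The kube-apiserver HA solution is described later; this chapter only covers installation.

Create the user that runs the k8s components

For security, a dedicated user runs the k8s components (this is optional; if you skip it, simply leave out the user settings later).

[root@k8s-master01 ~]# ansible k8s-master -m group -a 'name=kube'

[root@k8s-master01 ~]# ansible k8s-master -m user -a 'name=kube group=kube comment="Kubernetes user" shell=/sbin/nologin createhome=no'

2. Deploying kube-apiserver

2.1. Create the kube-apiserver certificate signing request file

Certificate required by the apiserver's TLS (authenticated) port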

[root@k8s-master01 ~]# vim /opt/k8s/certs/kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.74.100",

"192.168.74.130",

"192.168.74.131",

"172.252.0.1",

"localhost",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

The hosts list contains the local address, the master node IPs and two addresses that must not be left out: 192.168.74.100 is the VIP, through which clients reach the API in the high-availability setup, and 172.252.0.1 is the cluster IP of the kubernetes Service, normally the first IP of the service network range (--service-cluster-ip-range).
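The "first IP of the service range" can be computed rather than worked out by hand, which helps if you choose a different --service-cluster-ip-range. A sketch in plain bash arithmetic, IPv4 only; first_service_ip is a hypothetical name.

```shell
#!/usr/bin/env bash
# first_service_ip CIDR: print the network address + 1 for an IPv4 CIDR.
first_service_ip() {
  local ip="${1%/*}" bits="${1#*/}" a b c d n mask
  IFS=. read -r a b c d <<< "$ip"
  # Pack the four octets into one integer (bash arithmetic is 64-bit).
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Mask with the top $bits bits set, truncated to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # Network address plus one is the first usable address.
  n=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
    $(( (n >> 8) & 255 )) $(( n & 255 ))
}
```

`first_service_ip 172.252.0.0/16` prints 172.252.0.1, the address listed in the hosts field above.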

2.2. Generate the kubernetes (kube-apiserver) certificate and private key

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

2019/04/23 16:56:52 [INFO] generate received request

2019/04/23 16:56:52 [INFO] received CSR

2019/04/23 16:56:52 [INFO] generating key: rsa-2048

2019/04/23 16:56:52 [INFO] encoded CSR

2019/04/23 16:56:52 [INFO] signed certificate with serial number 22317568679091080825926949538404731378745389881

2019/04/23 16:56:52 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

- Check the generated certificates

[root@k8s-master01 certs]# ll kube-apiserver*

-rw-r--r-- 1 root root 1277 Apr 23 16:56 kube-apiserver.csr

-rw-r--r-- 1 root root 489 Apr 23 16:56 kube-apiserver-csr.json

-rw------- 1 root root 1675 Apr 23 16:56 kube-apiserver-key.pem

-rw-r--r-- 1 root root 1651 Apr 23 16:56 kube-apiserver.pem

2.3. Distribute the certificates

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-apiserver.pem dest=/etc/kubernetes/ssl'

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-apiserver-key.pem dest=/etc/kubernetes/ssl'

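5) Configure the token file used by kube-apiserver clients

When kubelet starts, it registers with kube-apiserver, and in a mutual-TLS environment it must authenticate. Generating a certificate and key for each kubelet by hand is workable when there are few nodes and the set is fixed; the TLS Bootstrapping mechanism lets a large number of nodes register with kube-apiserver automatically.

How it works: on first start, kubelet sends a TLS Bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the one in its token.csv, and if it does, a certificate is issued to the kubelet automatically.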

[root@k8s-master01 ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

fb8f04963e38858eab0867e8d2296d6b

[root@k8s-master01 ~]# vim /opt/k8s/cfg/bootstrap-token.csv

fb8f04963e38858eab0867e8d2296d6b,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
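The token generation and the CSV line can be combined into one step. A minimal sketch, assuming the same user name, UID and group as the line above; gen_bootstrap_csv is a hypothetical name. It uses `od -t x1` and removes both the spaces and the trailing newline, so the token is always 32 lowercase hex characters.

```shell
#!/usr/bin/env bash
# gen_bootstrap_csv: print a bootstrap-token.csv line (token,user,uid,"group")
# with a fresh random token.
gen_bootstrap_csv() {
  local token
  # 16 random bytes -> 32 lowercase hex characters.
  token="$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')"
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}
```

Usage: `gen_bootstrap_csv > /opt/k8s/cfg/bootstrap-token.csv`. Keep the generated token, because the kubelet's bootstrap credentials must use the same value.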

## Distribute the token file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/bootstrap-token.csv dest=/etc/kubernetes/config/'

- Create the apiserver audit policy file

[root@k8s-master01 ~]# vim /opt/k8s/cfg/audit-policy.yaml

apiVersion: audit.k8s.io/v1

kind: Policy

rules:

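- level: Metadata

## Distribute the audit policy file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/audit-policy.yaml dest=/etc/kubernetes/config/'

2.4. Write the kube-apiserver configuration file

This is the apiserver's startup parameter file. Remember to create the log directories referenced by the parameters and give the kube user access to them.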

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kube-apiserver.conf

###

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_API_ADDRESS="--advertise-address=192.168.74.130"

KUBE_API_PORT="--secure-port=8888"

KUBE_ETCD_SERVERS="--etcd-servers=https://192.168.74.130:2379,https://192.168.74.131:2379,https://192.168.74.132:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=172.252.0.0/16"

KUBE_API_ARGS=" --allow-privileged=true \

--anonymous-auth=false \

--runtime-config=api/all=true \

--apiserver-count=2 \

--authorization-mode=Node,RBAC \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--token-auth-file=/etc/kubernetes/config/bootstrap-token.csv \

--enable-bootstrap-token-auth \

--enable-garbage-collector \

--endpoint-reconciler-type=lease \

--event-ttl=168h0m0s \

--kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--kubelet-timeout=3s \

--audit-policy-file=/etc/kubernetes/config/audit-policy.yaml \

--audit-log-path=/var/log/kube-audit/audit.log \

--service-node-port-range=9400-65535 \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/etcd.pem \

--etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \

--etcd-compaction-interval=0s \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--enable-aggregator-routing=true \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--v=2"

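## Distribute the parameter file, then change the IPs in it to each host's own IP

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-apiserver.conf dest=/etc/kubernetes/config/'

## Create the log directory and set its ownership

[root@k8s-master01 ~]# ansible k8s-master -m file -a 'path=/var/log/kube-audit state=directory owner=kube group=kube'

Notes on selected parameters:

- KUBE_API_ADDRESS: the IP address on which the apiserver advertises itself to members of the cluster (--advertise-address). It must be reachable by the rest of the cluster. If it is empty, --bind-address is used; if --bind-address is not set either, the host's default interface address is used.

- KUBE_API_PORT: port that serves HTTPS with authentication and authorization (default 6443; this guide uses 8888). In recent versions it cannot be switched off with 0.

- KUBE_ETCD_SERVERS: list of etcd servers to connect to

- KUBE_ADMISSION_CONTROL: ordered list of admission control plugins that decide what may enter the cluster (not set in this configuration, so the default plugins are used)

- apiserver-count: number of apiservers in the cluster; it is only used by the master-count endpoint reconciler and has no effect with --endpoint-reconciler-type=lease

- KUBE_SERVICE_ADDRESSES: CIDR range from which Service cluster IPs are allocated. It must not overlap with any IP range assigned to pods on the nodes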
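Long parameter lists like this one make it easy to repeat a flag by accident, and for single-value flags the later occurrence typically overrides the earlier one without any warning. A small sketch that lists repeated flag names in a config file; find_duplicate_flags is a hypothetical helper, and its regex simply treats every `--name` token as a flag.

```shell
#!/usr/bin/env bash
# find_duplicate_flags FILE: print each "--flag" name that occurs more than once.
find_duplicate_flags() {
  # -o prints only the matched "--name" part, so values after "=" are ignored.
  grep -oE -- '--[A-Za-z0-9-]+' "$1" | sort | uniq -d
}
```

Usage: `find_duplicate_flags /etc/kubernetes/config/kube-apiserver.conf`; empty output means no flag is repeated. The same check applies to kube-controller-manager.conf.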

kube-apiserver systemd unit file, kube-apiserver.service

[root@k8s-master01 ~]# vim /opt/k8s/unit/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

## Distribute the apiserver unit file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-apiserver.service dest=/usr/lib/systemd/system/'

- Start the kube-apiserver service

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kube-apiserver'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kube-apiserver'

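2.5. Grant the kubernetes certificate access to the kubelet API

With Kubernetes 1.22.7, add an environment variable (on the master nodes):

Add the following line to /etc/profile (vim /etc/profile):

export KUBERNETES_MASTER="127.0.0.1:8888"

Save and exit, then reload the environment with source /etc/profile.

[root@k8s-master01 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

Once the cluster is up, commands such as kubectl exec, run and logs are forwarded by the apiserver to the kubelet. This RBAC rule authorizes the apiserver (user kubernetes, the CN of its certificate) to call the kubelet API; without it you get an error like the following. (In 1.22.7 the permission also has to be granted through a YAML manifest before these commands work; this is used later in the guide.)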

Error from server (Forbidden): Forbidden (user=kubernetes, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-8477bdff5d-2lf7k)

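VI: Deploying kube-controller-manager

1. Introduction:

1) The Kubernetes controller manager is a daemon that watches the shared state of the cluster through the apiserver and makes changes that try to move the current state toward the desired state.

2) kube-controller-manager is a stateful service: it modifies cluster state. If the instances on several masters were active at the same time, there would be synchronization and consistency problems, so on a multi-master cluster the kube-controller-manager instances must run as active/standby. Kubernetes elects the leader with a lease lock; for kube-controller-manager this is enabled with the startup flag "--leader-elect=true".

2. Creating kube-controller-manager

2.1. Create the kube-controller-manager certificate signing request

1. kube-controller-manager uses this certificate to connect to the apiserver, and its own secure port 10257 also serves with it.

2. kube-controller-manager and kube-apiserver authenticate each other with mutual TLS.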

[root@k8s-master01 ~]# vim /opt/k8s/certs/kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"192.168.74.130",

"192.168.74.131",

"localhost"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

1. The hosts list contains the IPs of all kube-controller-manager nodes;

2. CN and O are both system:kube-controller-manager. The ClusterRoleBinding system:kube-controller-manager, predefined by kube-apiserver RBAC, binds the user system:kube-controller-manager to the ClusterRole system:kube-controller-manager.

2.2. Generate the kube-controller-manager certificate and private key

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2019/04/24 13:03:36 [INFO] generate received request

2019/04/24 13:03:36 [INFO] received CSR

2019/04/24 13:03:36 [INFO] generating key: rsa-2048

2019/04/24 13:03:36 [INFO] encoded CSR

2019/04/24 13:03:36 [INFO] signed certificate with serial number 461545639209226313174106252389263020486388400892

2019/04/24 13:03:36 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

- Check the certificates

[root@k8s-master01 certs]# ll kube-controller-manager*

-rw-r--r-- 1 root root 1155 Apr 24 13:03 kube-controller-manager.csr

-rw-r--r-- 1 root root 432 Apr 24 13:00 kube-controller-manager-csr.json

-rw------- 1 root root 1679 Apr 24 13:03 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1529 Apr 24 13:03 kube-controller-manager.pem

2.3. Distribute the certificates

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-controller-manager-key.pem dest=/etc/kubernetes/ssl/'

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-controller-manager.pem dest=/etc/kubernetes/ssl/'

- Generate the kubeconfig file kube-controller-manager.kubeconfig

Configuration that kube-controller-manager needs for the secure port and RBAC authentication

## Set the cluster parameters

### --kubeconfig: path and file name of the kubeconfig file; if omitted, the settings are written to ~/.kube/config.

### This file is needed later, so the settings are written to a dedicated file.

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.74.100:6443 --kubeconfig=kube-controller-manager.kubeconfig

Cluster "kubernetes" set.

## Set the client authentication parameters

### --server: specifies the apiserver; if it is not set, the master can be specified later in the startup script.

### The authenticated user is "system:kube-controller-manager", as signed in the certificate above;

[root@k8s-master01 ~]# kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem --embed-certs=true --client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem --kubeconfig=kube-controller-manager.kubeconfig

User "system:kube-controller-manager" set.

## Set the context parameters

[root@k8s-master01 ~]# kubectl config set-context system:kube-controller-manager@kubernetes --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Context "system:kube-controller-manager@kubernetes" created.

## Set the default context

[root@k8s-master01 ~]# kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=kube-controller-manager.kubeconfig

Switched to context "system:kube-controller-manager@kubernetes".

## Distribute the generated kubeconfig file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/kube-controller-manager.kubeconfig dest=/etc/kubernetes/config/'

2.4. Write the kube-controller-manager configuration file

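The controller manager binds the insecure port 10252 to 127.0.0.1 so that kubectl get cs returns correct results, and exposes the secure port 10257 on 0.0.0.0 so that other components can call it. Because the controller manager connects to the apiserver's authenticated secure port, the --use-service-account-credentials option is needed so that the controller manager creates a separate service account for each controller (the default system:kube-controller-manager user does not have enough permissions).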

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kube-controller-manager.conf

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--bind-address=0.0.0.0 \

--authentication-kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \


--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--controllers=*,bootstrapsigner,tokencleaner \

--deployment-controller-sync-period=10s \

--cluster-signing-duration=87600h0m0s \

--enable-garbage-collector=true \

--kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \

--leader-elect=true \

--node-monitor-grace-period=20s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--terminated-pod-gc-threshold=50 \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--secure-port=10257 \

--service-cluster-ip-range=172.252.0.0/16 \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--use-service-account-credentials=true \

--v=2"

## Distribute the kube-controller-manager configuration file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-controller-manager.conf dest=/etc/kubernetes/config'

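Parameter notes:

- bind-address: default 0.0.0.0; the IP address on which to listen for the --secure-port port. The associated interface must be reachable by the rest of the cluster and by CLI/web clients.

- cluster-name: the cluster name

- cluster-signing-cert-file/cluster-signing-key-file: the CA certificate and key used to sign cluster-scoped certificates (certificate signing requests approved through the cluster)

- controllers: the list of controllers to enable; the default "*" enables all default controllers but not "bootstrapsigner" and "tokencleaner", which is why they are added explicitly

- kubeconfig: path to the kubeconfig file with authorization and master location information

- leader-elect: run a leader election and acquire leadership before executing the main loop

- service-cluster-ip-range: the IP range for cluster Services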
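A long KUBE_*_ARGS string like the one above makes it easy to list a flag twice by accident. As a quick pre-flight check before distributing the file, the sketch below prints every flag name that occurs more than once. dup_flags is a hypothetical helper name; it only looks at tokens of the form --name and skips lines that start with #.

```bash
#!/usr/bin/env bash
# Sketch: print each --flag name that appears more than once in an
# EnvironmentFile such as /opt/k8s/cfg/kube-controller-manager.conf.
# Lines whose first non-blank character is '#' are skipped.
dup_flags() {
  grep -v '^[[:space:]]*#' "$1" \
    | grep -oE -- '--[A-Za-z0-9-]+' \
    | sort \
    | uniq -d
}
```

For example, dup_flags /opt/k8s/cfg/kube-controller-manager.conf should print nothing; any name it prints is a flag to de-duplicate.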

8) systemd unit file

[root@k8s-master01 ~]# vim /opt/k8s/unit/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

## Distribute the unit file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-controller-manager.service dest=/usr/lib/systemd/system/'

9) Start the service

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kube-controller-manager'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kube-controller-manager'

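VII: Deploying kube-scheduler

1. Overview

1) kube-scheduler runs as a component on the master nodes. Its main job is to take the Pods that kube-apiserver reports as not yet scheduled, find the most suitable node for each of them through a series of scheduling algorithms, and complete the scheduling by writing a Binding object (which names the Pod and the chosen node) back to kube-apiserver.

2) Like kube-controller-manager, kube-scheduler achieves high availability through leader election. After startup, the instances compete to elect a leader while the others stay blocked; when the leader becomes unavailable, the remaining instances elect a new one, which keeps the service available.

In short:

kube-scheduler assigns Pods to nodes in the cluster.

It watches kube-apiserver for Pods that have not yet been assigned to a node.

It assigns nodes to those Pods according to the scheduling policy.

2. Creating kube-scheduler

2.1 Create the kube-scheduler certificate signing request

This is the certificate kube-scheduler uses to connect to the apiserver; kube-scheduler's own port 10259 also serves it (you can reserve extra IPs in hosts).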

[root@k8s-master01 ~]# vim /opt/k8s/certs/kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"localhost",

"192.168.74.130",

"192.168.74.131",

"192.168.74.132"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

2.2 Generate the kube-scheduler certificate and private key

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2019/04/24 16:08:38 [INFO] generate received request

2019/04/24 16:08:38 [INFO] received CSR

2019/04/24 16:08:38 [INFO] generating key: rsa-2048

2019/04/24 16:08:38 [INFO] encoded CSR

2019/04/24 16:08:38 [INFO] signed certificate with serial number 288219277582790216633679349308422764913188390208

2019/04/24 16:08:38 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3) Inspect the certificates

[root@k8s-master01 certs]# ll kube-scheduler*

-rw-r--r-- 1 root root 1131 Apr 24 16:11 kube-scheduler.csr

-rw-r--r-- 1 root root 345 Apr 24 16:03 kube-scheduler-csr.json

-rw------- 1 root root 1679 Apr 24 16:11 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1505 Apr 24 16:11 kube-scheduler.pem

2.3 Distribute the certificates

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-scheduler-key.pem dest=/etc/kubernetes/ssl/'

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-scheduler.pem dest=/etc/kubernetes/ssl/'

5) Generate the kube-scheduler.kubeconfig configuration file

1. The configuration kube-scheduler needs for its secure port and RBAC authentication.

2. The kube-scheduler kubeconfig contains the master address together with the certificate and private key created in the previous step.

## Set the cluster parameters

### (Note: the VIP address is used here. Until that load-balancer address has been configured, the component shows as unhealthy when you check its status, so it is best to use the load-balancer address; 127.0.0.1:8888 also works.)

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.74.100:8888 --kubeconfig=kube-scheduler.kubeconfig

Cluster "kubernetes" set.

## Set the client authentication parameters

[root@k8s-master01 ~]# kubectl config set-credentials "system:kube-scheduler" --client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem --client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

User "system:kube-scheduler" set.

## Set the context parameters

[root@k8s-master01 ~]# kubectl config set-context system:kube-scheduler@kubernetes --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Context "system:kube-scheduler@kubernetes" created.

## Set the default context

[root@k8s-master01 ~]# kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=kube-scheduler.kubeconfig

Switched to context "system:kube-scheduler@kubernetes".
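The kube-controller-manager, kube-scheduler and kube-proxy kubeconfigs are all built with the same four kubectl config steps (set-cluster, set-credentials, set-context, use-context). The sketch below is a hypothetical helper, kubeconfig_cmds, that only prints those commands for one component so they can be reviewed before running them. The function name, argument order and the certificate layout it assumes (<dir>/ca.pem, <dir>/<component>.pem, <dir>/<component>-key.pem) are assumptions based on the paths used in this article.

```bash
#!/usr/bin/env bash
# Sketch: print (do not run) the four kubectl config steps used in this
# article to build a component kubeconfig.
# Usage: kubeconfig_cmds <component> <user> <server-url> [cert-dir]
kubeconfig_cmds() {
  local component=$1 user=$2 server=$3 dir=${4:-/etc/kubernetes/ssl}
  local kc="${component}.kubeconfig"
  local ctx="${user}@kubernetes"
  echo "kubectl config set-cluster kubernetes --certificate-authority=${dir}/ca.pem --embed-certs=true --server=${server} --kubeconfig=${kc}"
  echo "kubectl config set-credentials ${user} --client-certificate=${dir}/${component}.pem --client-key=${dir}/${component}-key.pem --embed-certs=true --kubeconfig=${kc}"
  echo "kubectl config set-context ${ctx} --cluster=kubernetes --user=${user} --kubeconfig=${kc}"
  echo "kubectl config use-context ${ctx} --kubeconfig=${kc}"
}
```

For example, kubeconfig_cmds kube-scheduler system:kube-scheduler https://192.168.74.100:8888 prints the four commands above; once they look right, piping the output to bash runs them. Printing first keeps the server address and certificate paths visible before anything is written.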

## Distribute the configuration file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/kube-scheduler.kubeconfig dest=/etc/kubernetes/config/'

2.4 Edit the kube-scheduler main configuration file

Like kube-controller-manager, kube-scheduler binds its insecure port to the local host and exposes its secure port to the outside.

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kube-scheduler.conf

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--address=127.0.0.1 \

--authentication-kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \

--bind-address=0.0.0.0 \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--leader-elect=true \

--tls-cert-file=/etc/kubernetes/ssl/kube-scheduler.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-scheduler-key.pem \

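--v=2"

## Distribute the configuration file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-scheduler.conf dest=/etc/kubernetes/config'

7) systemd unit file

The unit has to point at the configuration file to load (EnvironmentFile).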

[root@k8s-master01 ~]# vim /opt/k8s/unit/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-scheduler.conf

#User=kube

ExecStart=/usr/local/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

## Distribute the unit file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-scheduler.service dest=/usr/lib/systemd/system/'

8) Start the service

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kube-scheduler.service'

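[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kube-scheduler.service'

2.5 Verify the master cluster status

Run the following command on any master node; each should return the cluster status. (This is the expected output when the components point at the local apiserver address; if they point at the load-balancer address, the output only looks like this after the kube-apiserver load balancing and high availability in the next section have been configured.)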

[root@k8s-master02 config]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

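VIII: kube-apiserver high availability

1. Overview

As mentioned earlier, among the master components kube-controller-manager, kube-scheduler and etcd all achieve high availability through leader election; this section focuses on high availability for kube-apiserver.

1) The following steps deploy components on the node hosts; the files are only prepared on the master and then distributed to each node.

2) If the masters should also join the cluster as nodes, docker, kubelet and kube-proxy must be deployed on them as well.

2. Configuring high availability

2.1 Common approaches

keepalived+HAProxy

Public cloud SLB (load balancer)

Nginx reverse proxy (used in this article)

Docker

Before deploying the node components, docker has to be installed on the nodes: kubelet depends on the docker service to start, and the workloads Kubernetes deploys all run in docker. This will become clear later.

2.2 Implementing high availability with an Nginx reverse proxy

## Install the repository prerequisites

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'yum install -y yum-utils device-mapper-persistent-data lvm2'

## Add the yum repository

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo'

## Install a specific docker version

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'yum install docker-ce-18.09.5-3.el7 -y'

## Start docker

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl enable docker'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl start docker'

2.3 Create the nginx.conf configuration file

How it works: to make the apiserver highly available, nginx is deployed on every node (note: on all node hosts) as a TCP reverse proxy in front of all apiservers. kubelet and kube-proxy then reach the apiserver through the local 127.0.0.1:8888, so the nodes are not affected if any single master goes down.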
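The nginx.conf used in this section load-balances across the masters listed in its upstream block, and that list has to be kept in sync on every node when masters are added or removed. As a sketch, assuming every apiserver listens on the same port (8888 in this article), the stream section can be generated from a list of master IPs instead of being edited by hand. render_nginx_stream is a hypothetical helper; its output mirrors the stream block used here (least_conn, 10m proxy timeout, 1s connect timeout).

```bash
#!/usr/bin/env bash
# Sketch: render the nginx "stream" block that load-balances TCP
# connections across all kube-apiserver instances.
# Usage: render_nginx_stream <listen-port> <apiserver-port> <ip>...
render_nginx_stream() {
  local listen_port=$1 backend_port=$2 ip
  shift 2
  echo "stream {"
  echo "    upstream kube_apiserver {"
  echo "        least_conn;"
  for ip in "$@"; do
    echo "        server ${ip}:${backend_port};"
  done
  echo "    }"
  echo "    server {"
  echo "        listen 0.0.0.0:${listen_port};"
  echo "        proxy_pass kube_apiserver;"
  echo "        proxy_timeout 10m;"
  echo "        proxy_connect_timeout 1s;"
  echo "    }"
  echo "}"
}
```

For example, render_nginx_stream 8888 8888 192.168.74.130 192.168.74.131 prints the block for the two masters; the error_log, worker_processes and events lines still go above it.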

## Create the nginx configuration directory (whether to keep the configuration in this directory is up to you)

[root@k8s-master01 ~]# ansible k8s-node -m file -a 'path=/etc/nginx state=directory'

## Edit the nginx configuration file

[root@k8s-master01 ~]# vim /opt/k8s/cfg/nginx.conf

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 1024;

}

stream {

upstream kube_apiserver {

least_conn;

server 192.168.74.130:8888;

server 192.168.74.131:8888;

}

server {

listen 0.0.0.0:8888;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

## Distribute nginx.conf to the node hosts

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/cfg/nginx.conf dest=/etc/nginx/'

2.4 Run Nginx in a docker container and start it with systemd

[root@k8s-master01 k8s]# vim /opt/k8s/unit/nginx-proxy.service

[Unit]

Description=kubernetes apiserver docker wrapper

Wants=docker.socket

After=docker.service

[Service]

User=root

PermissionsStartOnly=true

ExecStart=/usr/bin/docker run \

-v /etc/nginx:/etc/nginx \

--name nginx-proxy \

--net=host \

--restart=on-failure:5 \

--memory=512M \

nginx:1.14.2-alpine

ExecStartPre=-/usr/bin/docker rm -f nginx-proxy

ExecStop=/usr/bin/docker stop nginx-proxy

Restart=always

RestartSec=15s

TimeoutStartSec=30s

[Install]

WantedBy=multi-user.target

## Distribute to the node hosts

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/unit/nginx-proxy.service dest=/usr/lib/systemd/system/'

## Start the service

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl enable nginx-proxy'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl start nginx-proxy'

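2.5 nginx+keepalived

nginx is configured as above; here keepalived is added for high availability (in this setup it is also installed on the node hosts).

Nginx+keepalived high availability

### Primary (MASTER) node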

# yum install keepalived

# vi /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens32

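virtual_router_id 51 # VRRP router ID; must be unique for each instance

priority 100 # priority; set 90 on the backup server

advert_int 1 # interval between VRRP advertisements (heartbeats), 1 second by default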

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.74.100/24

}

track_script {

check_nginx

}

}

# cat /etc/keepalived/check_nginx.sh

#!/bin/bash
count=$(ps -ef | grep nginx | egrep -cv "grep|$$")
if [ "$count" -eq 0 ]; then
    exit 1
else
    exit 0
fi

# chmod +x /etc/keepalived/check_nginx.sh

# systemctl start keepalived

# systemctl enable keepalived
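The ps | grep | egrep pipeline above works only because it excludes both the grep process and the script's own PID (the script's file name itself contains "nginx"). A sketch of an alternative check, usable on both the primary and the backup node, compares exact process names read from /proc instead; check_process is a hypothetical name, and the approach assumes a Linux host, which keepalived requires anyway.

```bash
#!/usr/bin/env bash
# Sketch of an alternative check_nginx.sh body: succeed (status 0) if a
# process whose name is exactly $1 (default: nginx) is running, fail otherwise.
# It compares the kernel's process name in /proc/<pid>/comm, so neither the
# grep process nor this script (whose file name contains "nginx") is counted.
check_process() {
  local name=${1:-nginx} f comm
  for f in /proc/[0-9]*/comm; do
    # A process may exit between the glob and the read; skip it quietly.
    read -r comm 2>/dev/null < "$f" || continue
    [[ $comm == "$name" ]] && return 0
  done
  return 1
}
```

To use it as /etc/keepalived/check_nginx.sh, put the function in the script and make check_process nginx its last line, so the function's status becomes the script's exit status. The nginx processes inside the nginx-proxy container are visible from the host, so they are matched as well.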

### Backup node

#vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51 # VRRP router ID; must be unique for each instance

priority 90 # priority; the backup server uses 90

advert_int 1 # interval between VRRP advertisements (heartbeats), 1 second by default

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.74.100/24

}

track_script {

check_nginx

}

}

# cat /etc/keepalived/check_nginx.sh

#!/bin/bash
count=$(ps -ef | grep nginx | egrep -cv "grep|$$")
if [ "$count" -eq 0 ]; then
    exit 1
else
    exit 0
fi

# chmod +x /etc/keepalived/check_nginx.sh

# systemctl start keepalived

# systemctl enable keepalived

2.6 Test whether the VIP works

curl -k --header "Authorization: Bearer 8762670119726309a80b1fe94eb66e93" https://192.168.74.130:8888/version

{

"major": "1",

"minor": "18",

"gitVersion": "v1.22.7",

"gitCommit": "52c56ce7a8272c798dbc29846288d7cd9fbae032",

"gitTreeState": "clean",

"buildDate": "2022-04-16T11:48:36Z",

"goVersion": "go1.13.9",

"compiler": "gc",

"platform": "linux/amd64"

}

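IX: Deploying kubelet

1. Overview

The kubelet runs on each node. It maintains the running Pods and provides the Kubernetes runtime environment; its main tasks are:

1. Watch the Pods assigned to this node

2. Mount the volumes the Pods need

3. Download the Pods' Secrets

4. Run the Pods' containers through docker/rkt

5. Periodically run the liveness probes defined for the containers

6. Report Pod status to the other components of the system

7. Report the node's status

1) The following steps deploy components on the node hosts; the files are only prepared on the master and then distributed to each node.

2) If the masters should also join the cluster as nodes, docker, kubelet and kube-proxy must be deployed on them as well.

2. Deploying kubelet

2.1 Create the role binding

When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper cluster role; only then does kubelet have permission to create certificate signing requests:

[root@k8s-master01 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

--user=kubelet-bootstrap is the user specified in the bootstrap-token.csv file created when kube-apiserver was deployed; the same user must also be written into the bootstrap.kubeconfig file.

2.2 Create the kubelet bootstrap kubeconfig file and set the cluster parameters

## Set the cluster parameters (load balancing and HA are in place, so the address here is the VIP)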

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.74.100:8888 --kubeconfig=bootstrap.kubeconfig

Cluster "kubernetes" set.

## Set the client authentication parameters

### The token is the token value in the bootstrap-token.csv file mentioned earlier

[root@k8s-master01 ~]# kubectl config set-credentials kubelet-bootstrap --token=fb8f04963e38858eab0867e8d2296d6b --kubeconfig=bootstrap.kubeconfig

User "kubelet-bootstrap" set.

## Set the context parameters

[root@k8s-master01 ~]# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

Context "default" created.

## Set the default context

[root@k8s-master01 ~]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Switched to context "default".

2.3 Distribute the generated kubeconfig to each node

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/root/bootstrap.kubeconfig dest=/etc/kubernetes/config/'

2.4 Create the kubelet core configuration file

Prepare the file on the master first, distribute it to each node with ansible, and then change the hostname and IP on each host.

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kubelet-conf.yaml

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

enabled: true

x509:

clientCAFile: "/etc/kubernetes/ssl/ca.pem"

authorization:

mode: Webhook

clusterDomain: "cluster.local"

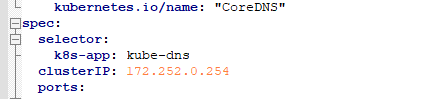

clusterDNS:

- "172.252.0.254"

podCIDR: "171.252.10.0/18"

maxPods: 220

serializeImagePulls: false

hairpinMode: promiscuous-bridge

cgroupDriver: cgroupfs

runtimeRequestTimeout: "15m"

rotateCertificates: true

serverTLSBootstrap: true

readOnlyPort: 0

port: 10250

address: "192.168.74.132"## 分发至node节点(别忘了修改参数中对应的主机名、IP地址)

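[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/cfg/kubelet-conf.yaml dest=/etc/kubernetes/config/'

Parameter notes:

- clusterDNS: the IP address of the DNS server (the IP defined later when the DNS service is created; it must be inside the cluster Service IP range, not the Pod network)

- podCIDR: the Pod network range; it must not overlap the cluster Service range

- maxPods: the maximum number of Pods this node can run

- address: the IP address the kubelet listens on (the node IP)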
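The two constraints above (clusterDNS inside the Service range, podCIDR not overlapping it) can be checked before any file is distributed. The sketch below uses pure bash arithmetic on IPv4 addresses; the helper names are hypothetical, and it assumes well-formed input (no leading zeros in octets, prefix length 0 to 32).

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the IPv4 address plan.
# ip_to_int: dotted quad -> integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# First address of a CIDR, as an integer (host bits cleared).
cidr_start() {
  local ip=${1%/*} len=${1#*/}
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo $(( $(ip_to_int "$ip") & mask ))
}

# Last address of a CIDR, as an integer.
cidr_end() {
  local len=${1#*/}
  echo $(( $(cidr_start "$1") + (1 << (32 - len)) - 1 ))
}

# cidr_contains <cidr> <ip>: status 0 if the IP lies inside the CIDR.
cidr_contains() {
  local ip
  ip=$(ip_to_int "$2")
  (( ip >= $(cidr_start "$1") && ip <= $(cidr_end "$1") ))
}

# cidrs_overlap <cidr-a> <cidr-b>: status 0 if the two ranges share any address.
cidrs_overlap() {
  (( $(cidr_start "$1") <= $(cidr_end "$2") && $(cidr_start "$2") <= $(cidr_end "$1") ))
}
```

With the values in this article, cidr_contains 172.252.0.0/16 172.252.0.254 succeeds (the DNS IP is inside the Service range) and cidrs_overlap 172.252.0.0/16 171.252.10.0/18 fails (the Pod and Service ranges are disjoint), which is what the notes above require.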

4) Create the kubelet systemd unit

[root@k8s-master01 ~]# vim /opt/k8s/unit/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config/kubelet.conf

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/config/bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--network-plugin=cni \

--cni-conf-dir=/etc/cni/net.d \

--root-dir=/var/lib/kubelet \

--kubeconfig=/etc/kubernetes/config/kubelet.kubeconfig \

--config=/etc/kubernetes/config/kubelet-conf.yaml \

--hostname-override=192.168.74.132 \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause \

--image-pull-progress-deadline=15m \

--volume-plugin-dir=/var/lib/kubelet/kubelet-plugins/volume/exec/ \

--logtostderr=true \

--v=2

[Install]

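WantedBy=multi-user.target

Note: --hostname-override sets the node name. I use the node IP to tell nodes apart; if you use names such as k8s-node1 instead, make sure every machine has the matching hosts entries.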
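Because the same files are copied to every node and then edited by hand, a per-node copy can instead be rendered on the master before distribution. The sketch below replaces every occurrence of the template node's IP (192.168.74.132, the address in kubelet-conf.yaml) with another node's IP; the same approach works for the --hostname-override value in kubelet.service. render_for_node is a hypothetical helper, and the loop in the comments is only an illustration of how it might be combined with ansible.

```bash
#!/usr/bin/env bash
# Sketch: render a per-node copy of a config file by replacing every
# occurrence of the template node's IP with another node's IP.
# Usage: render_for_node <template> <template-ip> <node-ip> <output>
render_for_node() {
  local template=$1 template_ip=$2 node_ip=$3 out=$4 pattern
  # Escape the dots so sed matches the template IP literally.
  pattern=$(printf '%s' "$template_ip" | sed 's/\./\\./g')
  sed "s/${pattern}/${node_ip}/g" "$template" > "$out"
}

# Illustrative use for the remaining nodes (not executed here):
#   for ip in 192.168.74.133 192.168.74.134; do
#     render_for_node /opt/k8s/cfg/kubelet-conf.yaml 192.168.74.132 "$ip" "/tmp/kubelet-conf-$ip.yaml"
#     ansible "$ip" -m copy -a "src=/tmp/kubelet-conf-$ip.yaml dest=/etc/kubernetes/config/kubelet-conf.yaml"
#   done
```

Rendering on the master keeps a reviewable copy of each node's file and avoids editing files in place on the nodes.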

## Distribute the unit file, then change the IP address in it on each node

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/unit/kubelet.service dest=/usr/lib/systemd/system/'

## Create the kubelet data directory

[root@k8s-master01 ~]# ansible k8s-node -m file -a 'path=/var/lib/kubelet state=directory'

5) Start the service

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl enable kubelet'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl start kubelet'

6) Check the CSR requests

List the CSRs that have not been approved yet; they are in the "Pending" state:

[root@k8s-master01 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-5m922 100s kubelet-bootstrap Pending

csr-k4v2g 99s kubelet-bootstrap Pending

2.5 Approve the kubelet TLS certificate requests

On its first start, kubelet sends a certificate signing request to kube-apiserver; the node joins the cluster only after the request has been approved.
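When several nodes bootstrap at once, approving CSRs one by one gets tedious. A sketch of a filter that reads kubectl get csr output and prints only the names whose last column (CONDITION) is exactly Pending; matching the column rather than grepping the whole line avoids picking up lines where the word appears elsewhere. pending_csr_names is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get csr` output on stdin and print the names of
# requests whose CONDITION (last column) is exactly "Pending".
# The first line is the header and is skipped.
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Illustrative use (not executed here); xargs -r skips the call when nothing is pending:
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Only approve requests you expect (from kubelet-bootstrap on your own nodes); automatic approval of every pending CSR would also accept requests from unknown machines.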

## Once approved, the node joins the cluster

[root@k8s-master01 ~]# kubectl certificate approve csr-5m922

[root@k8s-master01 ~]# kubectl certificate approve csr-k4v2g

## Check whether the nodes are Ready

### The network plugin has not been installed yet, so the nodes are still NotReady

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node01 NotReady <none> 49m v1.22.7

k8s-node02 NotReady <none> 6m15s v1.22.7

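X: Deploying kube-proxy

1. Overview

kube-proxy implements Services: it provides in-cluster access from Pods to Services and external access to Services through node ports.

In this deployment kube-proxy runs in ipvs mode, so ipvs and its dependencies have to be prepared beforehand (on every node).

2. Deploying kube-proxy

2.1 Enable ipvs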

[root@k8s-master01 ~]# ansible k8s-node -m shell -a "yum install -y ipvsadm ipset conntrack"

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'modprobe -- ip_vs'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'modprobe -- ip_vs_rr'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'modprobe -- ip_vs_wrr'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'modprobe -- ip_vs_sh'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'modprobe -- nf_conntrack_ipv4' #on kernel 4.19 and later, use nf_conntrack instead

2.2 Create the kube-proxy certificate signing request

[root@k8s-master01 ~]# vim /opt/k8s/certs/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:kube-proxy",

"OU": "System"

}

]

}

2.3 Generate the kube-proxy certificate and private key

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2019/04/25 17:39:22 [INFO] generate received request

2019/04/25 17:39:22 [INFO] received CSR

2019/04/25 17:39:22 [INFO] generating key: rsa-2048

2019/04/25 17:39:22 [INFO] encoded CSR

2019/04/25 17:39:22 [INFO] signed certificate with serial number 265052874363255358468035370835573343349230196562

2019/04/25 17:39:22 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3) Check the generated certificates

[root@k8s-master01 certs]# ll kube-proxy*

-rw-r--r-- 1 root root 1029 Apr 25 17:39 kube-proxy.csr

-rw-r--r-- 1 root root 302 Apr 25 17:37 kube-proxy-csr.json

-rw------- 1 root root 1675 Apr 25 17:39 kube-proxy-key.pem

-rw-r--r-- 1 root root 1428 Apr 25 17:39 kube-proxy.pem

2.4 Distribute the certificates

[root@k8s-master01 certs]# ansible k8s-node -m copy -a 'src=/opt/k8s/certs/kube-proxy-key.pem dest=/etc/kubernetes/ssl/'

[root@k8s-master01 certs]# ansible k8s-node -m copy -a 'src=/opt/k8s/certs/kube-proxy.pem dest=/etc/kubernetes/ssl/'

5) Create the kube-proxy kubeconfig file

The configuration file kube-proxy uses to connect to the apiserver.

2.5 Set the cluster parameters

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.74.100:8888 --kubeconfig=kube-proxy.kubeconfig

Cluster "kubernetes" set.

## Set the client authentication parameters

[root@k8s-master01 ~]# kubectl config set-credentials system:kube-proxy --client-certificate=/opt/k8s/certs/kube-proxy.pem --embed-certs=true --client-key=/opt/k8s/certs/kube-proxy-key.pem --kubeconfig=kube-proxy.kubeconfig

User "system:kube-proxy" set.

## Set the context parameters

[root@k8s-master01 ~]# kubectl config set-context system:kube-proxy@kubernetes --cluster=kubernetes --user=system:kube-proxy --kubeconfig=kube-proxy.kubeconfig

Context "system:kube-proxy@kubernetes" created.

## Set the default context

[root@k8s-master01 ~]# kubectl config use-context system:kube-proxy@kubernetes --kubeconfig=kube-proxy.kubeconfig

Switched to context "system:kube-proxy@kubernetes".

## Distribute the kubeconfig to the node hosts

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/root/kube-proxy.kubeconfig dest=/etc/kubernetes/config/'

6) Configure the kube-proxy parameters

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kube-proxy-conf.yaml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/config/kube-proxy.kubeconfig"
  qps: 100
bindAddress: 192.168.74.132
healthzBindAddress: 192.168.74.132:10256
metricsBindAddress: 192.168.74.132:10249
enableProfiling: true

clusterCIDR: 171.252.10.0/18
hostnameOverride: 192.168.74.132
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []

Note: clusterCIDR here is the Pod network range (podCIDR) defined earlier when the kubelet component was installed.
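Four fields in this file are per-node (bindAddress, healthzBindAddress, metricsBindAddress, hostnameOverride), and hostnameOverride should match the node name kubelet registered with. A sketch that writes the whole file for one node with the values used in this article, so that only the node IP and the Pod CIDR vary; render_kube_proxy_conf is a hypothetical helper, and the Pod CIDR is passed in rather than hard-coded.

```bash
#!/usr/bin/env bash
# Sketch: print a per-node KubeProxyConfiguration with the settings used in
# this article. Only the node IP and the Pod CIDR are parameters.
# Usage: render_kube_proxy_conf <node-ip> <pod-cidr> > kube-proxy-conf.yaml
render_kube_proxy_conf() {
  local node_ip=$1 pod_cidr=$2
  cat <<EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/config/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ${node_ip}
healthzBindAddress: ${node_ip}:10256
metricsBindAddress: ${node_ip}:10249
enableProfiling: true
clusterCIDR: ${pod_cidr}
hostnameOverride: ${node_ip}
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF
}
```

Generating the file from a heredoc also guarantees the two-space YAML indentation under clientConnection, iptables and ipvs, which kube-proxy needs to parse those sections.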

## Distribute the parameter file

### Change the hostnameOverride field (and the bind addresses) to each host's own value

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/cfg/kube-proxy-conf.yaml dest=/etc/kubernetes/config/'

7) kube-proxy systemd unit

[root@k8s-master01 ~]# vim /opt/k8s/unit/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/config/kube-proxy-conf.yaml \

--logtostderr=true \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

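2.6 Distribute to the node hosts

[root@k8s-master01 ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/unit/kube-proxy.service dest=/usr/lib/systemd/system/'

## Create the kube-proxy working directory (WorkingDirectory in the unit file)

[root@k8s-master01 ~]# ansible k8s-node -m file -a 'path=/var/lib/kube-proxy state=directory'

## Start the service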

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl enable kube-proxy'

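[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl start kube-proxy'

2.7 Check the ipvs rules

Check the LVS status: an LVS virtual service has been created that forwards requests for 172.252.0.1:443 (the ClusterIP of the kubernetes Service) to port 8888 on the apiserver instances, 8888 being the port kube-apiserver listens on in this deployment.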

[root@k8s-node01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.252.0.1:443 wrr

-> 192.168.74.130:8888 Masq 1 0 0

-> 192.168.74.131:8888 Masq 1 0 0

-> 192.168.74.135:8888 Masq 1 0 0
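To confirm that the kubernetes Service's backends are exactly your apiservers (and, later, to inspect other Services), the real servers behind one virtual service can be pulled out of the ipvsadm listing. A sketch that reads ipvsadm -L -n output on stdin; ipvs_backends is a hypothetical helper name, and it only handles TCP and UDP virtual services (not firewall-mark ones).

```bash
#!/usr/bin/env bash
# Sketch: list the real servers behind one virtual service in
# `ipvsadm -L -n` output read from stdin.
# Usage: ipvsadm -L -n | ipvs_backends 172.252.0.1:443
ipvs_backends() {
  awk -v vs="$1" '
    # A TCP/UDP line starts a new virtual service; remember whether it is ours.
    $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
    # "->" lines under our service are its real servers (skip the header row).
    in_vs && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
  '
}
```

Each address printed should be a master running kube-apiserver; an unexpected entry points at a stale endpoint in the kubernetes Service.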

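XI: Deploying Calico

1. Overview

The master and node components have now been deployed. Kubernetes does not provide Pod networking by itself: once all nodes are up they show NotReady because no network plugin (CNI) is installed, and a third-party network is needed before Pods can be created. The following deploys Calico to provide networking for Kubernetes and enable communication across nodes.

Calico vs. Flannel: why Calico was chosen

Flannel's vxlan mode and Calico's IPIP mode are both tunneling schemes, but the IPIP header Calico uses is smaller, so it performs slightly better than flannel vxlan.

Flannel has to encapsulate and decapsulate traffic, which consumes a fair amount of CPU.

Official documentation: https://docs.projectcalico.org/v3.6/introduction

2. Deploying Calico

2.1 Download the Calico yaml

Download the Calico yaml from GitHub (or use my yaml files; the download link contains the components deployed below).

The archive contains:

calico.yaml: the network component

coredns.yaml: the DNS component

apiserver-to-kubelet.yaml: grants the permissions needed for kubectl logs, exec and similar commands

rancher.yaml: installs the Rancher web management UI (note: this yaml pulls the latest stable Rancher release; change the version as needed)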

[root@k8s-master01 ~]# mkdir /opt/k8s/calico

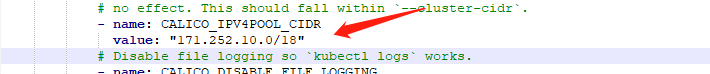

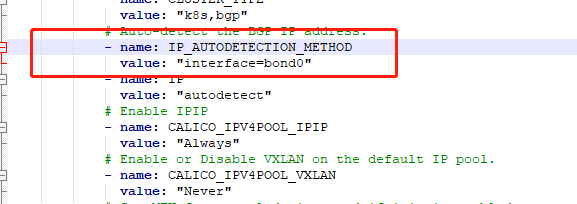

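[root@k8s-master01 ~]# cd /opt/k8s/calico/

2.2 Change two settings in calico.yaml

First change: uncomment the Pod IP pool setting and set it to the Pod network range.

Second change: at the corresponding position, add a setting that pins the network interface used for cluster traffic. Here it is fixed to the bond0 interface; with several interfaces you can use a pattern such as ens.*, adjusted to your own interface names.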
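The two edits can also be scripted, which helps when the manifest is re-downloaded for an upgrade. This sketch assumes calico.yaml contains the commented-out pair "# - name: CALICO_IPV4POOL_CIDR" followed by "#   value: ..." (as upstream Calico 3.x manifests do), and it adds calico-node's IP_AUTODETECTION_METHOD setting in its "interface=<regex>" form right after it. patch_calico is a hypothetical helper; check the result with a diff before applying the manifest.

```bash
#!/usr/bin/env bash
# Sketch: apply the two calico.yaml edits.
# Assumes the commented-out pair
#     # - name: CALICO_IPV4POOL_CIDR
#     #   value: "192.168.0.0/16"
# is present. It is replaced by an active entry with the given Pod CIDR,
# followed by an IP_AUTODETECTION_METHOD entry for the given interface regex.
# Usage: patch_calico <calico.yaml> <pod-cidr> <interface-regex>
patch_calico() {
  local file=$1 cidr=$2 iface=$3
  awk -v cidr="$cidr" -v iface="$iface" '
    /# - name: CALICO_IPV4POOL_CIDR/ {
      indent = substr($0, 1, index($0, "#") - 1)   # keep the list indentation
      getline                                       # drop the commented value line
      print indent "- name: CALICO_IPV4POOL_CIDR"
      print indent "  value: \"" cidr "\""
      print indent "- name: IP_AUTODETECTION_METHOD"
      print indent "  value: \"interface=" iface "\""
      next
    }
    { print }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

For example, patch_calico calico.yaml <your-pod-cidr> 'ens.*' would produce both edits in one pass; keep a copy of the original file if your manifest layout differs from the assumed one, since the line after the match is always dropped.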

[root@k8s-master01 calico]# kubectl apply -f calico.yaml

4) Check

[root@k8s-master01 ~]# kubectl get po -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-75569d87d7-lxmgq 1/1 Running 0 70s 192.168.74.132 k8s-node01 <none> <none>

calico-node-lhmt8 1/1 Running 0 70s 192.168.74.132 k8s-node01 <none> <none>

calico-node-nkmh2 1/1 Running 0 70s 192.168.74.133 k8s-node02 <none> <none>

calico-node-wsm9s 1/1 Running 0 70s 192.168.74.134 k8s-node03 <none> <none>

2.3 Verify the cluster status

## Check whether the nodes are Ready

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node01 Ready <none> 19h v1.22.7

k8s-node02 Ready <none> 18h v1.22.7

k8s-node03 Ready <none> 18h v1.22.7

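XII: Deploying CoreDNS (replacing the older kube-dns)

1. Overview

Once all other cluster components are in place, deploy the cluster DNS so that Service names and the like can be resolved. Since Kubernetes 1.11, CoreDNS has replaced kube-dns as the default cluster DNS.

2. Deploying CoreDNS

2.1 Download the yaml manifest

[root@k8s-master01 ~]# mkdir /opt/k8s/coredns

[root@k8s-master01 ~]# cd /opt/k8s/coredns/

Put the coredns.yaml downloaded earlier into this directory.

2.2 Edit the default manifest

(Only one change is needed: the DNS IP address specified when kubelet was deployed; everything else has already been adjusted.)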

[root@k8s-master01 ~]# vim /opt/k8s/coredns/coredns.yaml

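Parameter notes:

1) errors: logs errors encountered while processing queries to standard output.

2) health: health checking; serves an HTTP endpoint on the given port (8080 by default) that returns "OK" when the instance is healthy.

3) kubernetes cluster.local: the domain CoreDNS serves for Kubernetes. The CIDR that follows it (the Service range, 172.252.0.0/16 in this deployment) tells the kubernetes plugin that it is also responsible for PTR queries in the matching in-addr.arpa reverse zone; in other words, it enables reverse DNS lookups for Services. (A DNS server typically holds two kinds of zones: forward lookup zones, which resolve names to IP addresses, and reverse lookup zones, which resolve an IP address back to a name by querying its PTR record. An A record maps a name to an address and a PTR record maps an address to a name; reverse resolution only succeeds if a PTR record exists for the address.)

4) proxy: configures one or more upstream name servers, and can also defer lookups to the name servers defined in /etc/resolv.conf. (CoreDNS 1.5.0 and later replace proxy with the forward plugin.)

5) cache: caches both positive responses (the query returned a result) and negative responses (the query returned "no such domain"), with separate cache sizes and TTLs.

# The range after kubernetes cluster.local is the Service (svc) IP range

kubernetes cluster.local 172.252.0.0/16

# clusterIP is the IP assigned to the DNS Service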
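A PTR lookup for a Service IP such as 172.252.95.165 is a query for the name 165.95.252.172.in-addr.arpa, which falls inside the reverse zone derived from the Service CIDR. The sketch below shows that mapping for octet-aligned prefixes; reverse_zone is a hypothetical helper, and it deliberately rejects other prefix lengths (such as /18), which do not correspond to a single in-addr.arpa zone.

```bash
#!/usr/bin/env bash
# Sketch: derive the in-addr.arpa reverse zone that covers an IPv4 CIDR.
# Only octet-aligned prefixes (/8, /16, /24) map to a single zone here.
reverse_zone() {
  local ip=${1%/*} len=${1#*/}
  local -a o
  IFS=. read -r -a o <<< "$ip"
  case $len in
    8)  echo "${o[0]}.in-addr.arpa" ;;
    16) echo "${o[1]}.${o[0]}.in-addr.arpa" ;;
    24) echo "${o[2]}.${o[1]}.${o[0]}.in-addr.arpa" ;;
    *)  echo "prefix /$len is not octet-aligned" >&2; return 1 ;;
  esac
}
```

For this deployment, reverse_zone 172.252.0.0/16 gives 252.172.in-addr.arpa, the zone the kubernetes plugin answers PTR queries for when the Service CIDR is listed after cluster.local.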

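3) Create

The resources defined in the manifest are explained later, when Kubernetes applications are covered; for now, just check that each resource is created successfully.

## Create the resources defined in the manifest

[root@k8s-master01 coredns]# kubectl apply -f coredns.yaml

## Check the created resources

### Both the pods and the svc have been created

[root@k8s-master01 coredns]# kubectl get pod,svc -n kube-system -o wide

2.3 Test DNS resolution

At this point, apply apiserver-to-kubelet.yaml so that kubectl has the permissions it needs (logs, exec and so on).

Run on the master node:

kubectl apply -f apiserver-to-kubelet.yaml

## With the permissions granted, kubectl can now be used to create workloads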

[root@k8s-master01 ~]# kubectl create deployment nginx --image=nginx:1.14.2-alpine

## Check the pod status

## The pod was scheduled to node k8s-node01 with IP address 171.252.96.194

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-8477bdff5d-qr5bl 1/1 Running 0 46s 171.252.96.194 k8s-node01 <none> <none>

## Create a service

[root@k8s-master01 ~]# kubectl expose deployment nginx --port=80 --target-port=80

## Check the service

### The nginx service has been created and assigned an address

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.252.0.1 <none> 443/TCP 2d4h

nginx ClusterIP 172.252.95.165 <none> 80/TCP 12s

2.5 Verify DNS resolution

### Do not test with the busybox image; it has known pitfalls

### Create a pod from the alpine image, which includes nslookup

[root@k8s-master01 ~]# kubectl create deployment alpine --image=alpine -- sleep 3600

## Get the pod name

[root@k8s-master01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

alpine-7c78c944f5-gsxbv 1/1 Running 0 113s

nginx-8477bdff5d-qr5bl 1/1 Running 0 9m48s

## Test

[root@k8s-master01 ~]# kubectl exec -it alpine-7c78c944f5-gsxbv -- nslookup nginx

nslookup: can't resolve '(null)': Name does not resolve

Name: nginx

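Address 1: 171.252.95.165 nginx.default.svc.cluster.local

XIII: Adding new nodes to the cluster

When the cluster was deployed from binaries above, the two masters acted purely as management nodes, so docker, kubelet, kube-proxy and similar services were not deployed on them. Later, when deploying metrics-server and istio, I found that they could not run properly; after adding the masters to the cluster as schedulable nodes, these components deployed normally and could retrieve cluster resources correctly. This part therefore describes how to deploy docker, kubelet, kube-proxy and related services on the masters of an already deployed cluster. (To add a brand-new node instead, add its address to the ansible inventory under a new group such as [k8s-node-new], replace ansible k8s-master in the commands below with ansible k8s-node-new, and follow the same steps.)

Note: this part assumes that, as in my deployment, kubelet, kube-proxy and docker were not deployed on the masters. If your cluster was deployed with kubeadm (which deploys these components by default), you can skip it.

1. Deploying docker

## Install the repository prerequisites

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'yum install -y yum-utils device-mapper-persistent-data lvm2'

## Add the yum repository

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo'

## Install a specific docker version (docker can also be installed from binaries)

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'yum install docker-ce-18.09.5-3.el7 -y'

## Start docker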

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable docker'

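[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start docker'

2. Deploying kubelet

When kubelet runs on a master, nginx load balancing is not needed: you can skip the nginx-proxy deployment, install kubelet and kube-proxy directly, and change the apiserver address in kubelet.kubeconfig and kube-proxy.kubeconfig to the current master's IP on port 8888. My configuration files use 192.168.74.100:8888, the load-balancer address, so no change is needed.

## Distribute the configuration files to each master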

### bootstrap.kubeconfig

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/bootstrap.kubeconfig dest=/etc/kubernetes/config/'

### kubelet.conf (remember to change the hostname, IP address and --node-labels option accordingly)

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kubelet-conf.yaml dest=/etc/kubernetes/config/'

### Taint the master nodes

[root@k8s-master01 ~]# kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=:NoSchedule

[root@k8s-master02 ~]# kubectl taint nodes k8s-master02 node-role.kubernetes.io/master=:NoSchedule

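Note: the --node-labels=node-role.kubernetes.io/k8s-node=true option in kubelet.conf only determines what the ROLES column shows in kubectl get node.

For the masters, change it to --node-labels=node-role.kubernetes.io/k8s-master=true. The node-role.kubernetes.io/master label, by contrast, is the one kubeadm uses to mark a node as a master. (On Kubernetes 1.16 and later, kubelet refuses to start if --node-labels contains labels under node-role.kubernetes.io, so on this 1.22 cluster such role labels have to be set afterwards with kubectl label node.)

If you do not want the masters to take part in normal Pod scheduling, taint them as shown above so that no Pods are created on them (a Pod that declares a matching toleration can still be scheduled onto a master).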

### kubelet.service

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kubelet.service dest=/usr/lib/systemd/system/'

## Create the kubelet data directory

[root@k8s-master01 ~]# ansible k8s-master -m file -a 'path=/var/lib/kubelet state=directory'

## Start the service

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kubelet'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kubelet'

## List the CSRs that have not been approved yet

[root@k8s-master01 ~]# kubectl get csr

## Approve the kubelet TLS certificate requests

[root@k8s-master01 ~]# kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve
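`grep 'Pending'` matches the word anywhere in a row. The stricter sketch below selects only rows whose CONDITION is exactly `Pending`. It assumes the condition is the last column of `kubectl get csr` output, as in recent kubectl versions. The function names are hypothetical, and `xargs -r` is a GNU extension.

```shell
#!/usr/bin/env bash
# Reads `kubectl get csr` output on stdin and prints the names of requests whose
# CONDITION (assumed to be the last column) is exactly "Pending".
# The header line is skipped.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve only pending CSRs; `xargs -r` skips the call when nothing is pending.
approve_pending_csrs() {
  kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
}
```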

## Check the readiness of each node

### This can take a while, because the network plugin images have to be downloaded and installed

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready <none> 1m v1.22.7

k8s-master02 Ready <none> 1m v1.22.7

k8s-node01 Ready <none> 20m v1.22.7

k8s-node02 Ready <none> 20m v1.22.7

k8s-node03 Ready <none> 20m v1.22.7

3. Deploy kube-proxy

## Enable IPVS

[root@k8s-master01 ~]# ansible k8s-master -m shell -a "yum install -y ipvsadm ipset conntrack"

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'modprobe -- ip_vs'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'modprobe -- ip_vs_rr'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'modprobe -- ip_vs_wrr'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'modprobe -- ip_vs_sh'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'modprobe -- nf_conntrack_ipv4' # on kernel 4.19 and later, where nf_conntrack_ipv4 was merged into nf_conntrack, load nf_conntrack instead
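Modules loaded with `modprobe` through Ansible are not loaded again after a reboot. The minimal sketch below writes the IPVS module list to a file for `systemd-modules-load`, which reads `/etc/modules-load.d/*.conf` at boot. Rather than comparing kernel version numbers, it asks `modinfo` whether `nf_conntrack_ipv4` exists. The function names and the file name `ipvs.conf` are assumptions.

```shell
#!/usr/bin/env bash
# Prints nf_conntrack_ipv4 if the local kernel still ships that module
# (older kernels such as CentOS 7's 3.10), otherwise nf_conntrack.
conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

# Writes the IPVS-related modules, one per line, to the target file
# (default: the systemd-modules-load directory, file name assumed).
write_ipvs_modules_conf() {
  local target="${1:-/etc/modules-load.d/ipvs.conf}"
  local mod
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module)"; do
    echo "$mod"
  done > "$target"
}
```

`conntrack_module` inspects the local kernel, so generate the file on a host running the same kernel as the masters, for example with `write_ipvs_modules_conf /tmp/ipvs.conf`. Then distribute it with `ansible k8s-master -m copy -a 'src=/tmp/ipvs.conf dest=/etc/modules-load.d/'`.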

## Distribute the kube-proxy certificate files

[root@k8s-master01 certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-proxy-key.pem dest=/etc/kubernetes/ssl/'

[root@k8s-master01 certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-proxy.pem dest=/etc/kubernetes/ssl/'

## kubeconfig

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/kube-proxy.kubeconfig dest=/etc/kubernetes/config/'

## kube-proxy.conf configuration file (set the hostname-override field to each host's own name)

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-proxy-conf.yaml dest=/etc/kubernetes/config/'
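kube-proxy-conf.yaml differs only in the host name, so one way to avoid editing it by hand on each master is to render a per-host copy before distributing it. The minimal sketch below assumes the file is a KubeProxyConfiguration YAML whose field is `hostnameOverride`; adjust the pattern if your file uses the `--hostname-override` flag form instead. `render_kube_proxy_conf` and the /tmp output paths are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a per-host copy of a kube-proxy config template,
# replacing the value of the hostnameOverride field (KubeProxyConfiguration YAML).
render_kube_proxy_conf() {
  local template="$1" host="$2" out="$3"
  sed -E "s/^([[:space:]]*hostnameOverride:).*/\1 ${host}/" "$template" > "$out"
}

# Usage:
#   for h in k8s-master01 k8s-master02; do
#     render_kube_proxy_conf /opt/k8s/cfg/kube-proxy-conf.yaml "$h" "/tmp/kube-proxy-conf.$h.yaml"
#   done
```

Each rendered file is then copied only to its own host, for example `ansible 192.168.74.131 -m copy -a 'src=/tmp/kube-proxy-conf.k8s-master02.yaml dest=/etc/kubernetes/config/kube-proxy-conf.yaml'`. In a playbook, Ansible's `template` module with `{{ ansible_hostname }}` in the file is an alternative that does the substitution on each target. The same approach works for the per-host values in kubelet.conf.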

## kube-proxy.service unit file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-proxy.service dest=/usr/lib/systemd/system/'

## Start

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kube-proxy'

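[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kube-proxy'

Summary: that completes the deployment of kubelet and kube-proxy on the master nodes. The key points, restated:

1. For a newly installed cluster, install these three services (docker, kubelet and kube-proxy) on the masters when the cluster is first deployed, rather than adding them later when problems appear.

2. On master nodes, change --node-labels=node-role.kubernetes.io/k8s-node=true in kubelet.conf to --node-labels=node-role.kubernetes.io/k8s-master=true.

3. On master nodes, kubelet and kube-proxy can talk to the local kube-apiserver directly. No nginx load balancing is needed, so nginx-proxy does not have to be deployed.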

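14: Deploying metrics-server for node monitoring

Once the cluster is up, you may want to see the resource usage of each node and of the pods on it. On the command line, kubectl top node/pod shows this, but the command does not work out of the box: the corresponding API has to be deployed first.

Since Kubernetes 1.8, resource usage metrics (such as container CPU and memory usage) are exposed through the Metrics API, and metrics-server has replaced Heapster. Metrics Server implements the Resource Metrics API and acts as the cluster-wide aggregator of resource usage data. It collects metrics from the Summary API exposed by the kubelet on each node. Heapster has been retired since Kubernetes 1.13, and the officially recommended approach for cluster monitoring is Metrics Server plus Prometheus.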
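`kubectl top` only works once the Metrics API is registered and available. As a minimal sketch, the APIService that metrics-server registers can be checked directly. That APIService is `v1beta1.metrics.k8s.io`, the group/version kubectl top reads from. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Prints the status of the "Available" condition ("True"/"False") of the
# APIService that metrics-server registers.
metrics_api_status() {
  kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}'
}

# Succeeds only when the Metrics API is being served.
metrics_api_ready() {
  [ "$(metrics_api_status)" = "True" ]
}

# The raw data behind `kubectl top node` can be fetched with:
#   kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
```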

1. Download the resource manifest files (6 of them)

Project URL: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server

The manifests can be obtained in two ways:

1. https://github.com/kubernetes-incubator/metrics-server/tree/master/deploy/1.8%2B

2. https://github.com/kubernetes/kubernetes/tree/release-1.14/cluster/addons/metrics-server (recommended)

[root@k8s-master01 ~]# mkdir /opt/metrics && cd /opt/metrics

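### Select the matching branch and download the listed files

### Alternatively, git clone https://github.com/kubernetes/kubernetes.git directly; the metrics-server add-on is in the cluster/addons/metrics-server/ directory of the kubernetes repository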

[root@k8s-master01 metrics]# for file in auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml;do wget https://raw.githubusercontent.com/kubernetes/kubernetes/v1.14.1/cluster/addons/metrics-server/$file;done
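These v1.14.1 manifests predate Kubernetes 1.22, which removed several beta APIs, including `apiregistration.k8s.io/v1beta1` (APIService) and `rbac.authorization.k8s.io/v1beta1`. If any downloaded file still declares one of them, `kubectl apply` will reject it on this 1.22.7 cluster. The minimal sketch below lists such files and bumps them to the `v1` versions, which keep the same fields for APIService and RBAC objects. The function names are hypothetical, the check covers only these two API groups, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Prints every *.yaml file in the directory whose apiVersion is one of the
# v1beta1 versions removed in Kubernetes 1.22 (only these two groups checked).
find_removed_apis() {
  local dir="${1:-.}"
  grep -lE '^apiVersion:[[:space:]]*(apiregistration\.k8s\.io|rbac\.authorization\.k8s\.io)/v1beta1' \
    "$dir"/*.yaml 2>/dev/null
}

# Rewrites those apiVersion lines in place from v1beta1 to v1.
bump_removed_apis() {
  local dir="${1:-.}" f
  find_removed_apis "$dir" | while IFS= read -r f; do
    sed -i -E 's#^(apiVersion:[[:space:]]*(apiregistration\.k8s\.io|rbac\.authorization\.k8s\.io))/v1beta1#\1/v1#' "$f"
  done
}

# Usage, in /opt/metrics:
#   find_removed_apis . && bump_removed_apis .
```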

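2. Modify the configuration files

Some images cannot be pulled from within mainland China, so the image addresses in the manifests must be changed. Also pay attention to the container startup arguments shown below, which need to be changed too.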

## Change the image addresses and startup arguments

[root@k8s-master01 metrics]# vim metrics-server-deployment.yaml

### metrics-server: change the image address and startup arguments

......
      containers:
      - name: metrics-server
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.1
        command:
        - /metrics-server
        - --metric-resolution=30s
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
......

### metrics-server-nanny: change the image address and startup arguments

......
      - name: metrics-server-nanny
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/addon-resizer:1.8.4
        .....
        command:
          - /pod_nanny
          - --config-dir=/etc/config
          - --cpu=100m
          - --extra-cpu=0.5m
          - --memory=100Mi
          - --extra-memory=50Mi
          - --threshold=5
          - --deployment=metrics-server-v0.3.1
          - --container=metrics-server
          - --poll-period=300000
          - --estimator=exponential
......

## In newer versions the ClusterRole has no nodes/stats permission, so it must be added manually

[root@k8s-master01 metrics]# vim resource-reader.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats ## add this entry
  - namespaces

metrics-server startup arguments explained:

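- --kubelet-insecure-tls: do not verify the CA of the serving certificates presented by the kubelets

- --kubelet-preferred-address-types: by default metrics-server connects to nodes by hostname, but CoreDNS cannot resolve the physical hosts' hostnames. This flag makes metrics-server connect to the node IP (InternalIP) first.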

Important:

Without these arguments, you may see an error like the following:

unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:k8s-node02: unable to fetch metrics from Kubelet k8s-node02 (10.10.0.23): request failed - "401 Unauthorized", response: "Unauthorized", unable to fully scrape metrics from source kubelet_summary:k8s-node01: unable to fetch metrics from Kubelet k8s-node01 (10.10.0.17): request failed - "401 Unauthorized", response: "Unauthorized"]
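You can check whether the `nodes/stats` rule actually took effect by asking the API server on behalf of the metrics-server service account. Below is a minimal sketch. The service account `kube-system/metrics-server` is an assumption based on the upstream add-on manifests, and `can_read_node_stats` is a hypothetical name. `kubectl auth can-i` prints `yes` or `no` and exits non-zero on `no`.

```shell
#!/usr/bin/env bash
# Asks the API server whether the (assumed) metrics-server service account may
# read the kubelet stats subresource of nodes. Prints "yes" or "no".
can_read_node_stats() {
  kubectl auth can-i get nodes --subresource=stats \
    --as=system:serviceaccount:kube-system:metrics-server
}

# Usage:
#   can_read_node_stats || echo "nodes/stats is not granted yet"
```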

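3. Modify the kube-apiserver configuration

With a binary installation, the flags that enable the aggregation layer have to be added to kube-apiserver by hand. If you have already added them, skip this step.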

## Edit kube-apiserver.conf and add the following flags. As they show, a new certificate is required, so we also need to generate a certificate for metrics

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem
--requestheader-allowed-names=""
--requestheader-extra-headers-prefix=X-Remote-Extra-
--requestheader-group-headers=X-Remote-Group
--requestheader-username-headers=X-Remote-User
--proxy-client-cert-file=/etc/kubernetes/ssl/metrics-proxy.pem
--proxy-client-key-file=/etc/kubernetes/ssl/metrics-proxy-key.pem

Parameter notes:

- --requestheader-XXX and --proxy-client-XXX are the kube-apiserver flags for the aggregator layer; metrics-server and HPA depend on them;

- --requestheader-client-ca-file: the CA certificate that signs the certificate given by --proxy-client-cert-file and --proxy-client-key-file; used when the metrics aggregator is enabled;

Note: if --requestheader-allowed-names is not empty, the CN of the --proxy-client-cert-file certificate must be one of the allowed names (guides commonly use aggregator as this CN);

If kube-proxy is not running on the kube-apiserver hosts, also add the --enable-aggregator-routing=true flag.

Important:

If the aggregation layer is not enabled on kube-apiserver, an error similar to the following is reported:

I0109 05:55:43.708300 1 serving.go:273] Generated self-signed cert (apiserver.local.config/certificates/apiserver.crt, apiserver.local.config/certificates/apiserver.key)

Error: cluster doesn't provide requestheader-client-ca-file
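Before restarting kube-apiserver, it can help to confirm that all of the aggregation-layer flags listed above made it into the options file. Below is a minimal sketch. `missing_aggregator_flags` is a hypothetical helper, and the options file path in the usage comment is an assumption, so substitute wherever your kube-apiserver.conf lives.

```shell
#!/usr/bin/env bash
# Prints each aggregation-layer flag (as listed in this section) that does not
# appear as "--flag=" in the given kube-apiserver options file.
# No output means every flag is present.
missing_aggregator_flags() {
  local conf="$1" flag
  for flag in --requestheader-client-ca-file --requestheader-allowed-names \
              --requestheader-extra-headers-prefix --requestheader-group-headers \
              --requestheader-username-headers --proxy-client-cert-file \
              --proxy-client-key-file; do
    grep -q -- "${flag}=" "$conf" || echo "$flag"
  done
}

# Usage (assumed path):
#   missing_aggregator_flags /etc/kubernetes/config/kube-apiserver.conf
```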

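4. Generate a certificate for metrics-server

As shown above, enabling the aggregation layer on kube-apiserver also requires a certificate. To keep it easy to tell apart, we generate a separate certificate for metrics here.

For creating the certificate, you can also refer to the steps used earlier for the other components' certificates.

## Create the metrics-proxy certificate signing request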

[root@k8s-master01 ~]# vim /opt/k8s/certs/metrics-proxy-csr.json

{
  "CN": "metrics-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "metrics-proxy",
      "OU": "System"
    }
  ]
}

## Generate the metrics-proxy certificate and private key

[root@k8s-master01 ~]# cd /opt/k8s/certs/

[root@k8s-master01 certs]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes metrics-proxy-csr.json | cfssljson -bare metrics-proxy

## Distribute the certificates

[root@k8s-master01 certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/metrics-proxy-key.pem dest=/etc/kubernetes/ssl/'

[root@k8s-master01 certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/metrics-proxy.pem dest=/etc/kubernetes/ssl/'

## Restart the kube-apiserver service (it runs on the master nodes)

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl restart kube-apiserver'

5. Modify kubelet parameters

Check the following two kubelet parameters; otherwise the resource usage of nodes or pods cannot be retrieved:

- Remove --read-only-port=0

- Add the --authentication-token-webhook=true flag
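Suppose the kubelet options live in an environment file of the form `KUBELET_OPTS="..."`. That is an assumption: this guide's kubelet-conf.yaml may instead be a KubeletConfiguration file, where the equivalent fields are `readOnlyPort` and `authentication.webhook.enabled`. In the env-file case, both changes can be scripted. Below is a minimal, idempotent sketch; `fix_kubelet_flags` is a hypothetical name and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper for an env file containing KUBELET_OPTS="...":
# drops --read-only-port=0 and adds --authentication-token-webhook=true
# once. Running it twice leaves the file unchanged.
fix_kubelet_flags() {
  local f="$1"
  sed -i -E 's/[[:space:]]*--read-only-port=0//' "$f"
  if ! grep -q -- '--authentication-token-webhook=true' "$f"; then
    sed -i -E 's/^(KUBELET_OPTS=")/\1--authentication-token-webhook=true /' "$f"
  fi
}
```

Restart kubelet afterwards, for example with `ansible k8s-all -m shell -a 'systemctl restart kubelet'`.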

6. Create the metrics-server service from the manifests

[root@k8s-master01 metrics]# kubectl apply -f .

## Check that metrics-server is running

[root@k8s-master01 metrics]# kubectl get pods -n kube-system |grep metrics-server

metrics-server-v0.3.1-57654875b4-6nflh 2/2 Running 0 13m

## Verify that resource usage can now be retrieved

[root@k8s-master01 metrics]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 245m 24% 648Mi 74%

k8s-master02 166m 16% 630Mi 72%

k8s-node01 137m 13% 645Mi 73%

k8s-node02 96m 4% 461Mi 15%

k8s-node03 360m 18% 1075Mi 37%

Important:

Because this is a binary deployment, I did not initially deploy kubelet and kube-proxy on the master nodes, which is why the installation kept failing: