强化学习理论-第5课-蒙特卡洛方法

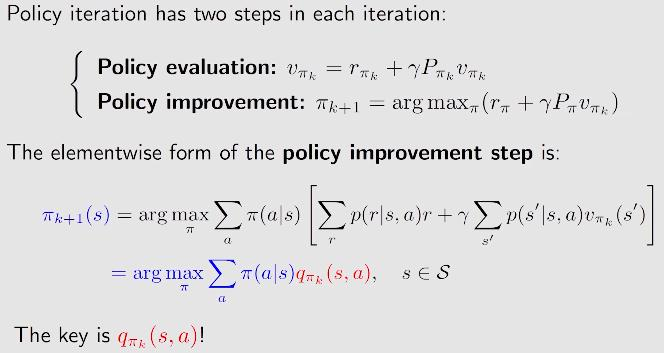

之前的章节都是基于model base,这节是model free的方法。

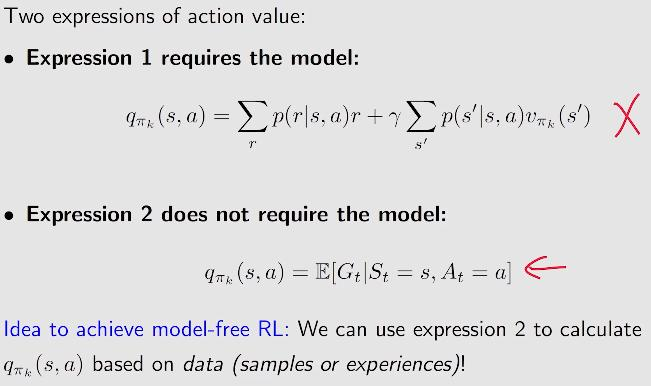

1. model-base to model-free:

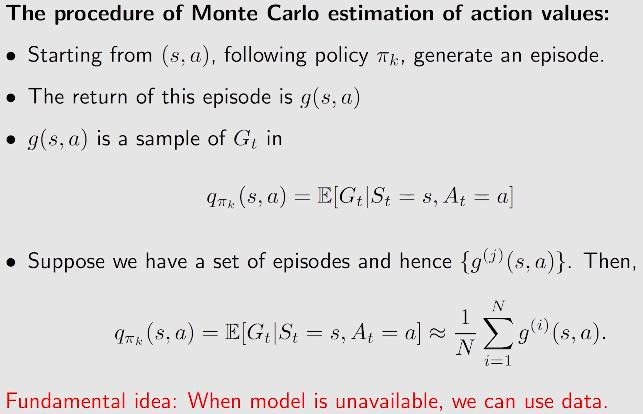

2. 计算\(q_{\pi k}:\)

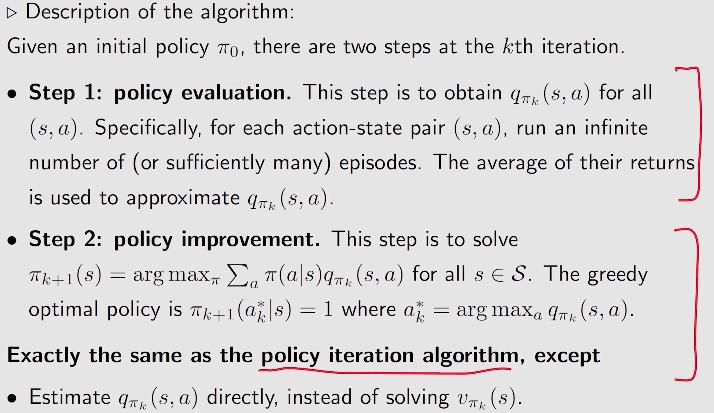

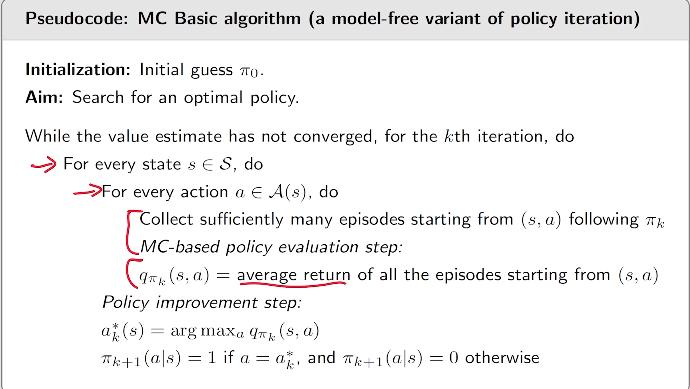

3. MC base algorithm:

step 1和model base是不一样的,后面的步骤是一样的。

4. MC exploring starts算法:

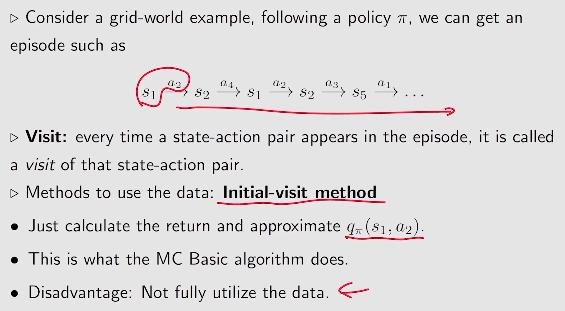

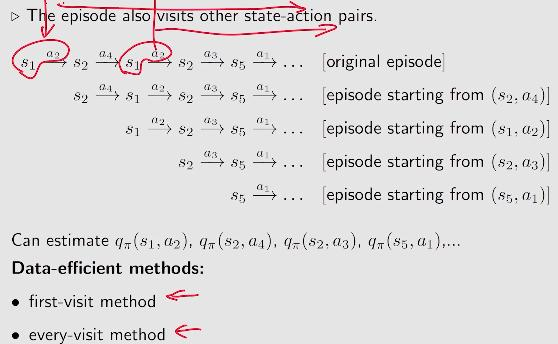

提出visit概念,MC Basic的算法只考虑第一个visit,对数据的使用是浪费的。

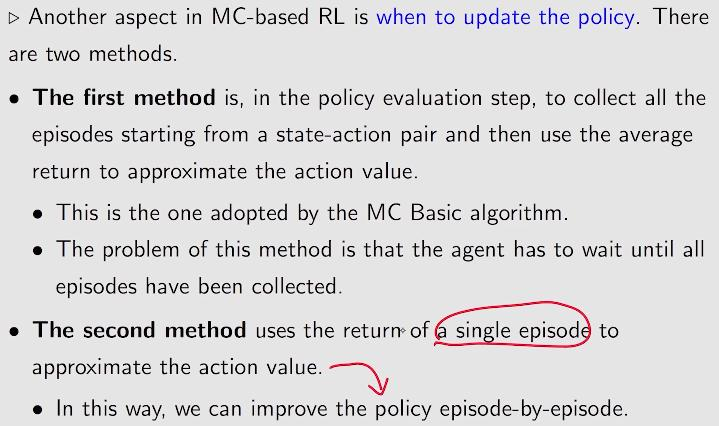

4.1 update the policy:

第一种方案是收集到所有的episodes,然后再计算;另一种是获得一个episode,计算近似值,改进策略

4.2 MC exploring starts:

在实际当中,获取所有的(s,a)是比较困难的,所以有下面的改进策略。

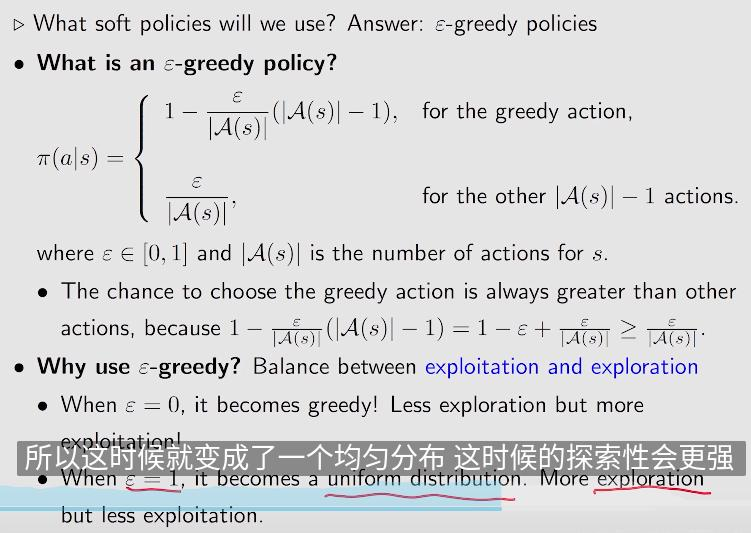

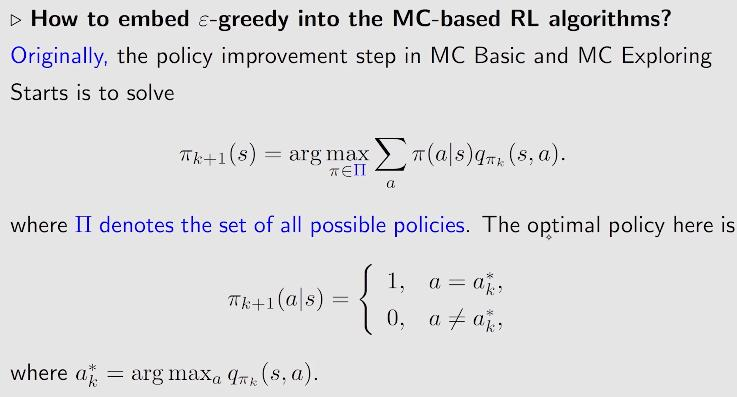

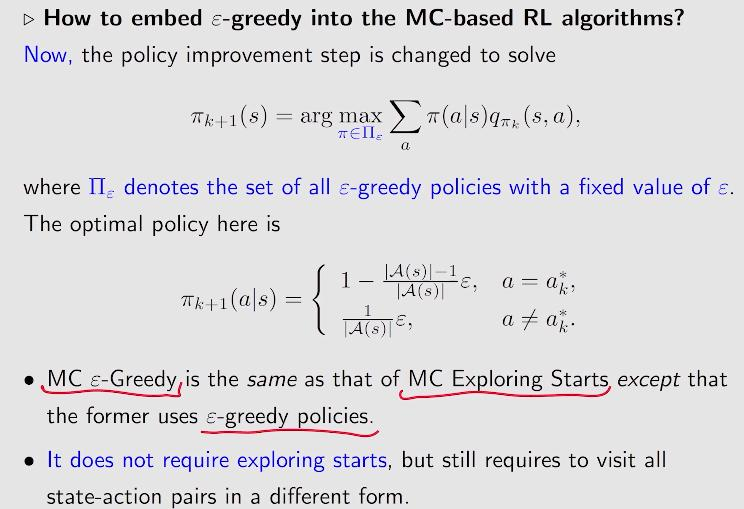

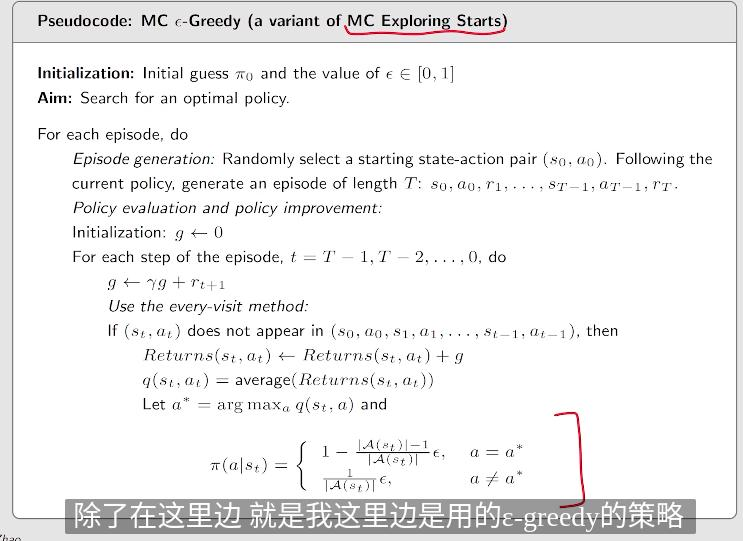

5. MC Epsilon-Greedy:

A policy is called soft if the probability to take any action is positive.

def mc_basic(self, length = 30, epochs = 10):

for epoch in range(epochs):

for state in range(self.state_space_size):

for action in range(self.action_space_size):

episode = self.obtain_episode(self.policy, state, action, length)

g = 0

for step in range(len(episode)-1, -1, -1):

g = episode[step]['reward'] + self.gama * g

self.qvalue[state][action] = g

qvalue_star = self.qvalue[state].max()

action_star = self.qvalue[state].tolist().index(qvalue_star)

self.policy[state] = np.zeros(shape = self.action_space_size)

self.policy[state,action_star] = 1

def mc_exploring_starts(self, length = 10):

policy = self.mean_policy.copy()

qvalue = self.qvalue.copy()

returns = [[[0 for row in range(1)] for col in range(5)] for block in range(25)]

while np.linalg.norm(policy - self.policy, ord = 1) > 0.001:

policy = self.policy.copy()

for state in range(self.state_space_size):

for action in range(self.action_space_size):

visit_list = []

g = 0

episode = self.obtain_episode(policy=self.policy, start_state=state, start_action=action,

length=length)

for step in range(len(episode) - 1, -1, -1):

reward = episode[step]['reward']

state = episode[step]['state']

action = episode[step]['action']

g = self.gama * g + reward

if [state, action] not in visit_list:

visit_list.append([state, action])

returns[state][action].append(g)

qvalue[state, action] = np.array(returns[state][action]).mean()

qvalue_star = qvalue[state].max()

action_star = qvalue[state].tolist().index(qvalue_star)

self.policy[state] = np.zeros(shape = self.action_space_size).copy()

self.policy[state, action_star] = 1

def mc_epsilon_greedy(self, length = 10, epsilon=0):

policy = self.mean_policy.copy()

qvalue = self.qvalue.copy()

returns = [[[0 for row in range(1)] for col in range(5)] for block in range(25)]

while np.linalg.norm(policy - self.policy, ord = 1) > 0.001:

policy = self.policy.copy()

for state in range(self.state_space_size):

for action in range(self.action_space_size):

visit_list = []

g = 0

episode = self.obtain_episode(policy=self.policy, start_state=state, start_action=action,

length=length)

for step in range(len(episode) - 1, -1, -1):

reward = episode[step]['reward']

state = episode[step]['state']

action = episode[step]['action']

g = self.gama * g + reward

if [state, action] not in visit_list:

visit_list.append([state, action])

returns[state][action].append(g)

qvalue[state, action] = np.array(returns[state][action]).mean()

qvalue_star = qvalue[state].max()

action_star = qvalue[state].tolist().index(qvalue_star)

for a in range(self.action_space_size):

if a == action_star:

self.policy[state, a] = 1 - (

self.action_space_size - 1) / self.action_space_size * epsilon

else:

self.policy[state, a] = 1 / self.action_space_size * epsilon

浙公网安备 33010602011771号

浙公网安备 33010602011771号