Redis tutorial

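Note: older Redis releases offered a virtual memory (VM) feature, but using it is not recommended because it degrades performance. It was deprecated in Redis 2.4 and is not available in the 3.x version used here.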

Environment:

centos6.4 linux-node1.example.com

[root@linux-node1 src]# pwd

/usr/local/src

[root@linux-node1 src]# wget http://download.redis.io/releases/redis-3.2.3.tar.gz

[root@linux-node1 src]# tar -zxf redis-3.2.3.tar.gz

[root@linux-node1 src]# cd redis-3.2.3

[root@linux-node1 redis-3.2.3]# make PREFIX=/usr/local/redis-3.2.3 install

[root@linux-node1 utils]# pwd

/usr/local/src/redis-3.2.3/utils

[root@linux-node1 utils]# cp redis_init_script /etc/init.d/redis

[root@linux-node1 utils]# chmod +x /etc/init.d/redis

[root@linux-node1 utils]# ln -s /usr/local/redis-3.2.3/ /usr/local/redis

The init script after modification:

[root@linux-node1 utils]# cat /etc/init.d/redis
#!/bin/sh
#
# Simple Redis init.d script conceived to work on Linux systems
# as it does use of the /proc filesystem.
#
# The two header lines below are required by "chkconfig --add redis":
# chkconfig: 2345 90 10
# description: Redis server

REDISPORT=6379
EXEC=/usr/local/redis/bin/redis-server
CLIEXEC=/usr/local/redis/bin/redis-cli

PIDFILE=/var/run/redis_${REDISPORT}.pid
CONF="/etc/redis/${REDISPORT}.conf"

case "$1" in
    start)
        if [ -f $PIDFILE ]
        then
            echo "$PIDFILE exists, process is already running or crashed"
        else
            echo "Starting Redis server..."
            $EXEC $CONF
        fi
        ;;
    stop)
        if [ ! -f $PIDFILE ]
        then
            echo "$PIDFILE does not exist, process is not running"
        else
            PID=$(cat $PIDFILE)
            echo "Stopping ..."
            $CLIEXEC -p $REDISPORT shutdown
            while [ -x /proc/${PID} ]
            do
                echo "Waiting for Redis to shutdown ..."
                sleep 1
            done
            echo "Redis stopped"
        fi
        ;;
    *)
        echo "Please use start or stop as first argument"
        ;;
esac

[root@linux-node1 ~]# mkdir -p /etc/redis

[root@linux-node1 ~]# cp /usr/local/src/redis-3.2.3/redis.conf /etc/redis/6379.conf

Start the service:

[root@linux-node1 ~]# /etc/init.d/redis start ---> the server stays in the foreground

Edit the Redis config file so that the server runs in the background:

[root@linux-node1 ~]# grep daemonize /etc/redis/6379.conf
# Note that Redis will write a pid file in /var/run/redis.pid when daemonized.
daemonize yes

[root@linux-node1 ~]# /etc/init.d/redis start

[root@linux-node1 ~]# netstat -tunlp |grep redis
tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 5012/redis-server 1

Create a symlink for the redis-cli command:

[root@linux-node1 ~]# ln -s /usr/local/redis/bin/redis-cli /usr/local/bin/redis-cli

Connect:

[root@linux-node1 ~]# redis-cli

127.0.0.1:6379>

Or:

[root@linux-node1 ~]# redis-cli -h 127.0.0.1 -p 6379

127.0.0.1:6379>

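Note: Redis executes commands in a single thread of a single process, so one instance uses essentially one CPU core; to use more cores, you can run several Redis instances on the same machine.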

Some basic operations follow.

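Note: Redis command names are case-insensitive (LRANGE and lrange are the same command), but key names are case-sensitive.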

[root@linux-node1 ~]# grep logfile /etc/redis/6379.conf

logfile "/var/log/redis.log" ---> add a log file

[root@linux-node1 ~]# tree /usr/local/redis/bin/

/usr/local/redis/bin/

├── redis-benchmark

├── redis-check-aof

├── redis-check-rdb

├── redis-cli

├── redis-sentinel -> redis-server

└── redis-server

redis数据类型:

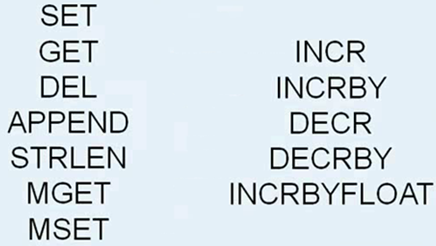

1)字符串类型

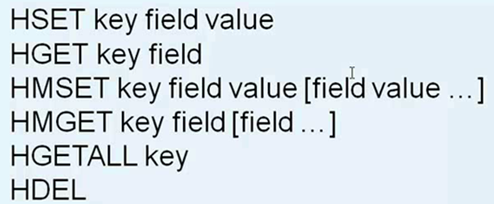

2) Hash

For example:

127.0.0.1:6379> hset telephone name iphone
(integer) 1
127.0.0.1:6379> hset telephone colour red
(integer) 1
127.0.0.1:6379> hset telephone price 4500
(integer) 1
127.0.0.1:6379> hget telephone colour
"red"
127.0.0.1:6379> hget telephone price
"4500"
127.0.0.1:6379> hgetall telephone
1) "name"
2) "iphone"
3) "colour"
4) "red"
5) "price"
6) "4500"

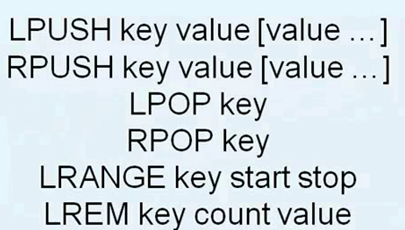

3) List

For example:

[root@linux-node1 ~]# redis-cli
127.0.0.1:6379> lpush num 0
(integer) 1
127.0.0.1:6379> lpush num 1
(integer) 2
127.0.0.1:6379> lpush num 2
(integer) 3
127.0.0.1:6379> rpush num 3 ---> the list is now, left to right: 2 1 0 3
(integer) 4
127.0.0.1:6379> llen num ---> the list length is 4
(integer) 4
127.0.0.1:6379> lpop num ---> pop one element from the left: 2
"2"
127.0.0.1:6379> rpop num ---> pop one element from the right: 3
"3"
127.0.0.1:6379> llen num ---> the length is now 2; the list is 1 0
(integer) 2

127.0.0.1:6379> Lpush num 10
(integer) 3
127.0.0.1:6379> rpush num 20 ---> the list is now, left to right: 10 1 0 20
(integer) 4
127.0.0.1:6379> LRANGE num 0 1
1) "10"
2) "1"
127.0.0.1:6379> LRANGE num 0 -1 ---> -1 is the last element (the first one counting from the right)
1) "10"
2) "1"
3) "0"
4) "20"
127.0.0.1:6379> LRANGE num 0 -2
1) "10"
2) "1"
3) "0"
127.0.0.1:6379> LRANGE num 0 -3
1) "10"
2) "1"

127.0.0.1:6379> LINDEX num -1 ---> LINDEX returns the element at the given index
"20"
127.0.0.1:6379> LINDEX num -2
"0"
127.0.0.1:6379> LINDEX num -3
"1"
127.0.0.1:6379> LINDEX num -4
"10"

127.0.0.1:6379> LRANGE num 0 -1
1) "10"
2) "1"
3) "0"
4) "20"
127.0.0.1:6379> LTRIM num 0 2 ---> LTRIM keeps only elements 0 to 2, so 20 is removed
OK
127.0.0.1:6379> LRANGE num 0 -1
1) "10"
2) "1"
3) "0"

Addendum: LPUSH writes at the left end and RPOP pops from the right end, so the list behaves as a first-in, first-out queue. Logstash also uses a Redis list as a FIFO queue when shipping logs.

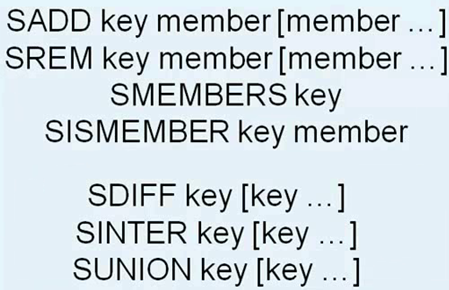

4) Set

For example:

127.0.0.1:6379> sadd jihe1 a b c
(integer) 3
127.0.0.1:6379> sadd jihe2 b c d
(integer) 3
127.0.0.1:6379> SMEMBERS jihe2 ---> list the members of jihe2 (sets are unordered, so the order may differ)
1) "c"
2) "d"
3) "b"
127.0.0.1:6379> sismember jihe1 d ---> SISMEMBER checks whether d is in jihe1; 0 means it is not
(integer) 0
127.0.0.1:6379> sismember jihe1 c ---> 1 means c is in jihe1
(integer) 1

Difference:
127.0.0.1:6379> sdiff jihe1 jihe2 ---> jihe1 minus jihe2
1) "a"
127.0.0.1:6379> sdiff jihe2 jihe1 ---> jihe2 minus jihe1
1) "d"

Intersection:
127.0.0.1:6379> sinter jihe1 jihe2
1) "c"
2) "b"

Union:
127.0.0.1:6379> SUNION jihe1 jihe2
1) "a"
2) "c"
3) "d"
4) "b"

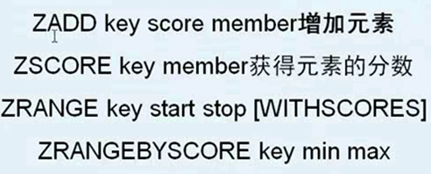

5) Sorted set

For example:

127.0.0.1:6379> ZADD youxu 80 1
(integer) 1
127.0.0.1:6379> ZADD youxu 80 2
(integer) 1
127.0.0.1:6379> ZADD youxu 83 c
(integer) 1
127.0.0.1:6379> ZADD youxu 84 d 85 e
(integer) 2
127.0.0.1:6379> ZSCORE youxu a ---> nil: there is no member a
(nil)
127.0.0.1:6379> ZSCORE youxu c
"83"
127.0.0.1:6379> ZSCORE youxu d
"84"

View (members in ascending score order):
127.0.0.1:6379> ZRANGE youxu 0 3
1) "1"
2) "2"
3) "c"
4) "d"

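Redis transactions:

Publish/subscribe:

Redis persistence:

1) RDB (snapshot persistence). Snapshots are enabled by default through the `save` lines of the config file; set the following two lines to choose the snapshot file name and the directory it is written to: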

dbfilename dump_6379.rdb

dir /usr/local/redis/

[root@linux-node1 ~]# redis-cli

127.0.0.1:6379> set key 1

OK

127.0.0.1:6379> get key

"1"

127.0.0.1:6379> save

OK

127.0.0.1:6379> exit

[root@linux-node1 ~]#

[root@linux-node1 ~]# ll /usr/local/redis/

total 8

drwxr-xr-x 2 root root 4096 Aug 4 05:17 bin

-rw-r--r-- 1 root root 88 Aug 4 08:04 dump_6379.rdb

[root@linux-node1 ~]# redis-cli

127.0.0.1:6379> set key 2

OK

127.0.0.1:6379> bgsave

Background saving started

[root@linux-node1 ~]# ll /usr/local/redis/

total 8

drwxr-xr-x 2 root root 4096 Aug 4 05:17 bin

-rw-r--r-- 1 root root 88 Aug 4 08:06 dump_6379.rdb ---> binary data

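Notes:

SAVE writes the snapshot inside the Redis process itself and blocks all clients until it finishes; BGSAVE forks a child process that writes the snapshot in the background, so the server keeps serving requests.

If Redis is stopped with a plain kill (SIGTERM), it saves a snapshot before exiting (when save points are configured); with kill -9 (SIGKILL) it gets no chance to do so. So after an abnormal exit, everything written since the last snapshot is lost.

Advantages of RDB: it is well suited to disaster recovery, since backing up this one file is enough; it is a compact binary file, so it is fairly small; it has little impact on performance; and restoring from it is fast.

Disadvantages: with a large dataset, forking the process for a snapshot can take noticeable time and briefly stall the server, which hurts performance.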
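Because an RDB file is a single, self-contained snapshot, backing it up is easy to script. The bash sketch below is a hypothetical helper (`backup_rdb` and the `REDIS_CLI` variable are not part of Redis). It assumes `redis-cli` can reach the instance and relies on LASTSAVE, which returns the UNIX time of the last successful save: trigger BGSAVE, wait until LASTSAVE changes, then copy the file.

```shell
# Hypothetical helper, not part of Redis: take a fresh RDB snapshot and copy it.
# REDIS_CLI may be overridden, e.g. REDIS_CLI="redis-cli -p 6380" (expanded unquoted on purpose).
REDIS_CLI=${REDIS_CLI:-redis-cli}

# usage: backup_rdb <rdb file> <destination> [timeout in seconds]
backup_rdb() {
  local rdb=$1 dest=$2 timeout=${3:-60} before now waited=0
  before=$($REDIS_CLI lastsave) || return 1
  $REDIS_CLI bgsave >/dev/null || return 1
  # LASTSAVE has one-second resolution; poll until it moves past the old value.
  while :; do
    now=$($REDIS_CLI lastsave) || return 1
    [ "$now" != "$before" ] && break
    if [ "$waited" -ge "$timeout" ]; then
      echo "BGSAVE did not finish within ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  # Redis writes the snapshot to a temporary file and renames it, so the file is complete now.
  cp -p "$rdb" "$dest"
}
```

For the instance above this would be something like `backup_rdb /usr/local/redis/dump_6379.rdb /backup/dump_6379.rdb`, where the `/backup` directory is only an example.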

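2) AOF: enable it with the following setting in the Redis config file (the AOF file is written to the same `dir` as the RDB file):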

[root@linux-node1 ~]# grep appendonly /etc/redis/6379.conf

appendonly yes

[root@linux-node1 ~]# /etc/init.d/redis stop

[root@linux-node1 ~]# /etc/init.d/redis start

Starting Redis server...

[root@linux-node1 ~]# redis-cli

127.0.0.1:6379> set key 1

OK

127.0.0.1:6379> set key 2

OK

[root@linux-node1 ~]# ll /usr/local/redis/

total 12

-rw-r--r-- 1 root root 81 Aug 4 08:22 appendonly.aof

drwxr-xr-x 2 root root 4096 Aug 4 05:17 bin

-rw-r--r-- 1 root root 88 Aug 4 08:21 dump_6379.rdb

[root@linux-node1 ~]# cat /usr/local/redis/appendonly.aof

*2

$6

SELECT

$1

0

*3

$3

set

$3

key

$1

1

*3

$3

set

$3

key

$1

2
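The AOF file above is simply the write commands stored in the Redis protocol (RESP): `*N` gives the number of arguments, and each argument is written as `$len` (its length in bytes) followed by the argument itself, with every line ending in CRLF. A minimal bash encoder sketch (`resp_encode` is a hypothetical name) reproduces the `SELECT 0` and `set key 1` entries shown above:

```shell
# Hypothetical helper: encode one command as a RESP array of bulk strings,
# the same format used in the AOF file above.
resp_encode() {
  local arg len
  printf '*%d\r\n' "$#"                           # number of arguments
  for arg in "$@"; do
    len=$(( $(printf '%s' "$arg" | wc -c) ))       # length in bytes, not characters
    printf '$%d\r\n%s\r\n' "$len" "$arg"
  done
}
```

Since `redis-cli --pipe` reads raw protocol from standard input, output in this format can also be fed to a running server, for example `resp_encode set key 1 | redis-cli --pipe`.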

Redis master/slave replication

[root@linux-node1 ~]# cd /etc/redis/

[root@linux-node1 redis]# ll

total 48

-rw-r--r-- 1 root root 46735 Aug 4 08:19 6379.conf

[root@linux-node1 redis]# cp 6379.conf 6380.conf

[root@linux-node1 redis]# vim 6380.conf

[root@linux-node1 redis]# diff 6379.conf 6380.conf

82c82

< # Accept connections on the specified port, default is 6379 (IANA #815344).

---

> # Accept connections on the specified port, default is 6380 (IANA #815344).

84c84

< port 6379

---

> port 6380

150c150

< pidfile /var/run/redis_6379.pid

---

> pidfile /var/run/redis_6380.pid

237c237

< dbfilename dump_6379.rdb

---

> dbfilename dump_6380.rdb

729c729

< # cluster-config-file nodes-6379.conf

---

> # cluster-config-file nodes-6380.conf

[root@linux-node1 ~]# /usr/local/redis/bin/redis-server /etc/redis/6380.conf

[root@linux-node1 ~]# netstat -tunlp |grep 6380

tcp 0 0 127.0.0.1:6380 0.0.0.0:* LISTEN 8674/redis-server 1

[root@linux-node1 ~]# ps -ef |grep redis

root 8639 1 0 08:22 ? 00:00:01 /usr/local/redis/bin/redis-server 127.0.0.1:6379

root 8674 1 0 08:34 ? 00:00:00 /usr/local/redis/bin/redis-server 127.0.0.1:6380

root 8688 8582 0 08:38 pts/1 00:00:00 grep redis

[root@linux-node1 ~]# kill -9 8674

[root@linux-node1 ~]# grep appendonly /etc/redis/6380.conf

appendonly yes

# The name of the append only file (default: "appendonly.aof")

appendfilename "appendonly_6380.aof" ---> changed here; otherwise 6379 and 6380 would both write to the same AOF file, since they share the same dir

Start the 6380 instance again:

/usr/local/redis/bin/redis-server /etc/redis/6380.conf

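Make 6380 a slave of 6379:

In Redis 3.x the shipped config binds to 127.0.0.1 only; here the bind address was changed to 192.168.1.200, the address of the master passed to SLAVEOF below. (bind accepts several addresses, e.g. `bind 127.0.0.1 192.168.1.200`, which keeps local redis-cli access working.)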

[root@linux-node1 ~]# redis-cli -p 6380

127.0.0.1:6380> info replication

# Replication

role:master

connected_slaves:0

master_repl_offset:0

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

127.0.0.1:6380> SLAVEOF 192.168.1.200 6379

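Note: you can also disable persistence on the master and enable it only on the slave, which takes the disk I/O off the master. If you do, make sure the master is not restarted automatically: a master that comes back with an empty dataset replicates that empty dataset to its slaves and wipes their copy as well.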
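After SLAVEOF, the `INFO replication` output of 6380 should switch from `role:master` to `role:slave` and include a `master_link_status` field that reads `up` once the initial sync has finished. For scripting such checks, here is a small bash parser sketch (`info_field` is a hypothetical name): it reads INFO output on standard input and prints one field, stripping the CR at the end of each INFO line.

```shell
# Hypothetical helper: print the value of one "name:value" field from INFO output on stdin.
info_field() {
  local name=$1
  # INFO lines end in CRLF: drop the CR, then match "name:" at the start of a line
  # and print everything after the first colon.
  tr -d '\r' | awk -F: -v n="$name" '$1 == n { sub(/^[^:]*:/, ""); print; exit }'
}
```

A wait loop could then look like `until [ "$(redis-cli -p 6380 info replication | info_field master_link_status)" = up ]; do sleep 1; done`.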

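Redis cluster

http://redisdoc.com/topic/cluster-tutorial.html

Four main approaches:

1) Client-side sharding (flexible, but adding nodes is cumbersome)

2) A proxy (Twemproxy)

3) Redis Cluster (drawback: lack of client support)

4) Codis (open-sourced by Wandoujia)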
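To see why adding nodes is awkward with client-side sharding, consider the simplest scheme, hash(key) mod N. The bash sketch below is illustrative only: `shard_index` and `moved_keys` are hypothetical names, and the POSIX `cksum` CRC stands in for whatever hash a real client uses (real clients often use consistent hashing precisely to reduce the effect shown here).

```shell
# Illustrative client-side sharding: node index = hash(key) mod N.
# cksum's CRC is an assumption chosen because it is available everywhere.
shard_index() {
  local key=$1 nodes=$2 sum
  sum=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
  echo $(( sum % nodes ))
}

# Count how many of the given keys change node when the node count goes from $1 to $2.
moved_keys() {
  local from=$1 to=$2 key moved=0
  shift 2
  for key in "$@"; do
    if [ "$(shard_index "$key" "$from")" != "$(shard_index "$key" "$to")" ]; then
      moved=$((moved + 1))
    fi
  done
  echo "$moved"
}
```

Going from 3 to 4 nodes, a key stays put only when hash mod 3 equals hash mod 4, which happens when hash mod 12 is 0, 1 or 2. For well-spread hashes that is about a quarter of the keys, so roughly three quarters of the data would have to move.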

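Redis documentation in Chinese: http://redisdoc.com/

Recommendation: three masters and three slaves.

This environment uses 8 nodes: three masters and three slaves, plus two extra nodes used to simulate adding and removing nodes.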

[root@linux-node1 opt]# mkdir `seq 7001 7008`

[root@linux-node1 opt]# ll

total 36

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7001

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7002

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7003

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7004

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7005

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7006

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7007

drwxr-xr-x 2 root root 4096 Aug 4 09:17 7008

[root@linux-node1 opt]# cp /etc/redis/6379.conf .

Make the port-specific settings in 6379.conf contain "6379", so they can be changed in bulk later:

[root@linux-node1 opt]# grep 6379 6379.conf

# Accept connections on the specified port, default is 6379 (IANA #815344).

port 6379

pidfile /var/run/redis_6379.pid

logfile "/var/log/redis_6379.log"

dbfilename dump_6379.rdb

dir /opt/6379

appendfilename "appendonly_6379.aof"

# cluster-config-file nodes-6379.conf

Here is an example of a cluster config file containing the minimum set of options:

port 7000

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

appendonly yes

[root@linux-node1 opt]# grep -A 4 "port 6379" 6379.conf

port 6379

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

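We add the three cluster-related lines (cluster-enabled, cluster-config-file, cluster-node-timeout) right after `port 6379`.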

[root@linux-node1 opt]# sed 's/6379/7001/g' 6379.conf >> 7001/redis.conf

[root@linux-node1 opt]# sed 's/6379/7002/g' 6379.conf >> 7002/redis.conf

[root@linux-node1 opt]# sed 's/6379/7003/g' 6379.conf >> 7003/redis.conf

[root@linux-node1 opt]# sed 's/6379/7004/g' 6379.conf >> 7004/redis.conf

[root@linux-node1 opt]# sed 's/6379/7005/g' 6379.conf >> 7005/redis.conf

[root@linux-node1 opt]# sed 's/6379/7006/g' 6379.conf >> 7006/redis.conf

[root@linux-node1 opt]# sed 's/6379/7007/g' 6379.conf >> 7007/redis.conf

[root@linux-node1 opt]# sed 's/6379/7008/g' 6379.conf >> 7008/redis.conf
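The eight `sed` commands above can also be written as a loop. This bash sketch (`make_node_configs` is a hypothetical name) writes each file with `>` instead of `>>`, so running it a second time does not append another copy of the configuration to `redis.conf`.

```shell
# Hypothetical helper: create <base>/<port>/redis.conf from a template whose
# port-specific values all contain "6379" (port, pidfile, logfile, dbfilename, dir, AOF name).
make_node_configs() {
  local template=$1 base=$2 port
  shift 2
  for port in "$@"; do
    mkdir -p "$base/$port"
    # ">" overwrites, so re-running does not duplicate the settings
    sed "s/6379/$port/g" "$template" > "$base/$port/redis.conf"
  done
}
```

With the paths used here, the call would be `make_node_configs /opt/6379.conf /opt $(seq 7001 7008)`.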

Start all the instances in a loop:

[root@linux-node1 opt]# for i in `seq 7001 7008`;do cd /opt/$i && /usr/local/redis/bin/redis-server redis.conf;done

[root@linux-node1 7008]# netstat -tunlp |grep :700

tcp 0 0 0.0.0.0:7001 0.0.0.0:* LISTEN 8879/redis-server *

tcp 0 0 0.0.0.0:7002 0.0.0.0:* LISTEN 8881/redis-server *

tcp 0 0 0.0.0.0:7003 0.0.0.0:* LISTEN 8887/redis-server *

tcp 0 0 0.0.0.0:7004 0.0.0.0:* LISTEN 8889/redis-server *

tcp 0 0 0.0.0.0:7005 0.0.0.0:* LISTEN 8893/redis-server *

tcp 0 0 0.0.0.0:7006 0.0.0.0:* LISTEN 8897/redis-server *

tcp 0 0 0.0.0.0:7007 0.0.0.0:* LISTEN 8901/redis-server *

tcp 0 0 0.0.0.0:7008 0.0.0.0:* LISTEN 8905/redis-server *

[root@linux-node1 ~]# yum -y install ruby rubygems

[root@linux-node1 ~]# gem install redis

Successfully installed redis-3.3.1

1 gem installed

Installing ri documentation for redis-3.3.1...

Installing RDoc documentation for redis-3.3.1...

[root@linux-node1 ~]# cp /usr/local/src/redis-3.2.3/src/redis-trib.rb /usr/local/bin/redis-trib

[root@linux-node1 ~]# redis-trib help

Usage: redis-trib <command> <options> <arguments ...>

set-timeout host:port milliseconds

reshard host:port

--pipeline <arg>

--slots <arg>

--timeout <arg>

--yes

--to <arg>

--from <arg>

fix host:port

--timeout <arg>

rebalance host:port

--use-empty-masters

--pipeline <arg>

--timeout <arg>

--auto-weights

--threshold <arg>

--simulate

--weight <arg>

add-node new_host:new_port existing_host:existing_port

--slave

--master-id <arg>

import host:port

--replace

--from <arg>

--copy

del-node host:port node_id

info host:port

help (show this help)

create host1:port1 ... hostN:portN

--replicas <arg>

call host:port command arg arg .. arg

check host:port

For check, fix, reshard, del-node, set-timeout you can specify the host and port of any working node in the cluster.

Create the cluster:

[root@linux-node1 ~]# redis-trib create --replicas 1 127.0.0.1:7001 127.0.0.1:7002 127.0.0.1:7003 127.0.0.1:7004 127.0.0.1:7005 127.0.0.1:7006

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

127.0.0.1:7001

127.0.0.1:7002

127.0.0.1:7003

Adding replica 127.0.0.1:7004 to 127.0.0.1:7001

Adding replica 127.0.0.1:7005 to 127.0.0.1:7002

Adding replica 127.0.0.1:7006 to 127.0.0.1:7003

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:0-5460 (5461 slots) master

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5461-10922 (5462 slots) master

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:10923-16383 (5461 slots) master

S: e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004

replicates ad81ddb7a34af50223e5d7c75840f3526adbb1e1

S: 0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005

replicates dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

S: ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006

replicates 21326806e89966836293feab652c30f85d0fe806

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join....

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:0-5460 (5461 slots) master

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5461-10922 (5462 slots) master

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:10923-16383 (5461 slots) master

M: e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004

slots: (0 slots) master

replicates ad81ddb7a34af50223e5d7c75840f3526adbb1e1

M: 0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005

slots: (0 slots) master

replicates dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

M: ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006

slots: (0 slots) master

replicates 21326806e89966836293feab652c30f85d0fe806

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Check the result:

[root@linux-node1 ~]# redis-cli -c -p 7001

127.0.0.1:7001> info replication

# Replication

role:master

connected_slaves:1

slave0:ip=127.0.0.1,port=7004,state=online,offset=337,lag=1

master_repl_offset:337

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:2

repl_backlog_histlen:336

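Log in to 7001 and write a key; it is stored on 7003 automatically (with -c, redis-cli follows the redirect to the node that owns the key's slot):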

[root@linux-node1 ~]# redis-cli -c -p 7001

127.0.0.1:7001> set key 1

-> Redirected to slot [12539] located at 127.0.0.1:7003

OK

127.0.0.1:7003> quit

127.0.0.1:7001> get key

-> Redirected to slot [12539] located at 127.0.0.1:7003

"1"

[root@linux-node1 ~]# redis-cli -c -p 7001

127.0.0.1:7001> cluster nodes

0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005 slave dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 0 1470277147043 5 connected

ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006 slave 21326806e89966836293feab652c30f85d0fe806 0 1470277147547 6 connected

e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004 slave ad81ddb7a34af50223e5d7c75840f3526adbb1e1 0 1470277149060 4 connected

21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003 master - 0 1470277148558 3 connected 10923-16383

ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001 myself,master - 0 0 1 connected 0-5460

dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002 master - 0 1470277148558 2 connected 5461-10922
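The redirect to 7003 seen above happens because Redis Cluster maps every key to one of 16384 hash slots using CRC16(key) mod 16384 (the CRC16 XMODEM variant described in the cluster specification), and slot 12539 lies in 7003's range 10923-16383. A pure-bash sketch of that calculation follows; `crc16_xmodem` and `key_slot` are hypothetical names, only ASCII keys are handled, and hash tags (`{...}`) are deliberately ignored. On a live cluster, `CLUSTER KEYSLOT <key>` returns the slot directly.

```shell
# CRC16-XMODEM (polynomial 0x1021, initial value 0), the variant Redis Cluster uses.
# Assumes ASCII keys: each character is treated as one byte.
crc16_xmodem() {
  local s=$1 crc=0 i j c
  for (( i = 0; i < ${#s}; i++ )); do
    printf -v c '%d' "'${s:i:1}"        # character code of the i-th character
    crc=$(( crc ^ (c << 8) ))
    for (( j = 0; j < 8; j++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Slot = CRC16(key) mod 16384 (hash tags are not handled in this sketch).
key_slot() {
  echo $(( $(crc16_xmodem "$1") % 16384 ))
}
```

`key_slot key` prints 12539, the same slot as in the redirect message above.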

Add a node:

[root@linux-node1 ~]# redis-trib add-node 127.0.0.1:7007 127.0.0.1:7001

>>> Adding node 127.0.0.1:7007 to cluster 127.0.0.1:7001

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005

slots: (0 slots) slave

replicates dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

S: ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006

slots: (0 slots) slave

replicates 21326806e89966836293feab652c30f85d0fe806

S: e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004

slots: (0 slots) slave

replicates ad81ddb7a34af50223e5d7c75840f3526adbb1e1

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 127.0.0.1:7007 to make it join the cluster.

[OK] New node added correctly.

Check:

127.0.0.1:7001> cluster nodes

0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005 slave dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 0 1470277378616 5 connected

ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006 slave 21326806e89966836293feab652c30f85d0fe806 0 1470277379626 6 connected

e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004 slave ad81ddb7a34af50223e5d7c75840f3526adbb1e1 0 1470277378111 4 connected

21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003 master - 0 1470277378616 3 connected 10923-16383

ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001 myself,master - 0 0 1 connected 0-5460

dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002 master - 0 1470277379121 2 connected 5461-10922

94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007 master - 0 1470277380130 0 connected ---> but no slots have been assigned to it yet.

Assign slots to the newly added 7007:

[root@linux-node1 ~]# redis-trib reshard 127.0.0.1:7007

>>> Performing Cluster Check (using node 127.0.0.1:7007)

M: 94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007

slots: (0 slots) master

0 additional replica(s)

S: 0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005

slots: (0 slots) slave

replicates dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

S: e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004

slots: (0 slots) slave

replicates ad81ddb7a34af50223e5d7c75840f3526adbb1e1

S: ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006

slots: (0 slots) slave

replicates 21326806e89966836293feab652c30f85d0fe806

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 500

What is the receiving node ID? 94a28bc730616c8db9e437e83821ae80c2b4c150 ---> the ID of 7007

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:all

Ready to move 500 slots.

Source nodes:

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:10923-16383 (5461 slots) master

1 additional replica(s)

Destination node:

M: 94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007

slots: (0 slots) master

0 additional replica(s)

Resharding plan:

Moving slot 5461 from dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

Moving slot 5462 from dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

Moving slot 5463 from dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

Moving slot 5464 from dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

................................

Check again:

127.0.0.1:7001> cluster nodes

0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005 slave dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 0 1470277896209 5 connected

ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006 slave 21326806e89966836293feab652c30f85d0fe806 0 1470277895202 6 connected

e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004 slave ad81ddb7a34af50223e5d7c75840f3526adbb1e1 0 1470277896209 4 connected

21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003 master - 0 1470277895201 3 connected 11089-16383

ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001 myself,master - 0 0 1 connected 166-5460

dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002 master - 0 1470277896714 2 connected 5628-10922

94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007 master - 0 1470277897216 7 connected 0-165 5461-5627 10923-11088
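The slot field of `cluster nodes` is a list of single slots and `start-end` ranges, so summing it is a quick sanity check after resharding: 7007's three ranges add up to 499 slots (the figure redis-trib also reports for 7007 in the next step), and together with the remaining ranges of 7001-7003 they cover all 16384 slots. A small bash sketch (`count_slots` is a hypothetical name):

```shell
# Hypothetical helper: count the slots in cluster-nodes slot fields such as "0-165 5461-5627".
# Bracketed entries like "[93->-<node id>]" mark slots being migrated and are skipped.
count_slots() {
  local total=0 r lo hi
  for r in "$@"; do
    [[ $r == \[* ]] && continue
    if [[ $r == *-* ]]; then
      lo=${r%-*}                      # start of the range
      hi=${r#*-}                      # end of the range
      total=$(( total + hi - lo + 1 ))
    else
      total=$(( total + 1 ))          # a single slot
    fi
  done
  echo "$total"
}
```

The slot fields start at the ninth column of `cluster nodes`, so one way to total all masters is `count_slots $(redis-cli -p 7001 cluster nodes | awk '$3 ~ /master/ { for (i = 9; i <= NF; i++) print $i }')`, which should print 16384.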

Add 7008 and make it a slave of 7007:

[root@linux-node1 ~]# redis-trib add-node 127.0.0.1:7008 127.0.0.1:7001

>>> Adding node 127.0.0.1:7008 to cluster 127.0.0.1:7001

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001

slots:166-5460 (5295 slots) master

1 additional replica(s)

S: 0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005

slots: (0 slots) slave

replicates dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c

S: ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006

slots: (0 slots) slave

replicates 21326806e89966836293feab652c30f85d0fe806

S: e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004

slots: (0 slots) slave

replicates ad81ddb7a34af50223e5d7c75840f3526adbb1e1

M: 21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003

slots:11089-16383 (5295 slots) master

1 additional replica(s)

M: dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002

slots:5628-10922 (5295 slots) master

1 additional replica(s)

M: 94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007

slots:0-165,5461-5627,10923-11088 (499 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 127.0.0.1:7008 to make it join the cluster.

[OK] New node added correctly.

Connect to 7008:

[root@linux-node1 ~]# redis-cli -c -p 7008

127.0.0.1:7008> cluster replicate 94a28bc730616c8db9e437e83821ae80c2b4c150 ---> the ID of 7007

OK

127.0.0.1:7008> cluster nodes

57735a00df1d735e000a99a10cc87911b966d578 127.0.0.1:7008 myself,slave 94a28bc730616c8db9e437e83821ae80c2b4c150 0 0 0 connected

dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 127.0.0.1:7002 master - 0 1470278429469 2 connected 5628-10922

0653e748fbe6dca003d5def47fd7851a252a4918 127.0.0.1:7005 slave dbb2938f6ad3b79848c55d18f0ed7cc41c74e50c 0 1470278427954 2 connected

ad81ddb7a34af50223e5d7c75840f3526adbb1e1 127.0.0.1:7001 master - 0 1470278428459 1 connected 166-5460

21326806e89966836293feab652c30f85d0fe806 127.0.0.1:7003 master - 0 1470278429469 3 connected 11089-16383

e7928c03114a492268be131d3ca977cbba40f368 127.0.0.1:7004 slave ad81ddb7a34af50223e5d7c75840f3526adbb1e1 0 1470278428966 1 connected

94a28bc730616c8db9e437e83821ae80c2b4c150 127.0.0.1:7007 master - 0 1470278427451 7 connected 0-165 5461-5627 10923-11088

ec7451eb5641fa0f0fda968dbfa101a94a6ae522 127.0.0.1:7006 slave 21326806e89966836293feab652c30f85d0fe806 0 1470278428966 3 connected

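Note: when a slave is added online, the master runs BGSAVE to produce an RDB file, sends the file to the slave, and the slave loads it. If the master holds a very large dataset, this full sync can cause problems; in a cluster the slots are spread over several masters, so each Redis instance holds only part of the data.

Redis Cluster cannot be used in some situations: a multi-key command only works when all its keys hash to the same slot, otherwise Redis returns a CROSSSLOT error, because the keys may live on different nodes and there is no central node to combine the results. It is similar to a sharded database, where a query that works on a single table no longer finds all the data once the table is split across shards.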
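Redis Cluster's hash tags are the usual workaround: if a key contains `{...}` with at least one character between the braces, only that part is hashed, so `{user1000}.following` and `{user1000}.followers` land in the same slot and can be used together in multi-key commands. The bash sketch below only extracts the part that would be hashed (`hash_tag_part` is a hypothetical name), following the rule in the cluster specification: take the first `{`, then the first `}` after it, and fall back to the whole key if the braces are empty or unmatched.

```shell
# Hypothetical helper: print the part of a key that Redis Cluster hashes.
hash_tag_part() {
  local key=$1 rest tag
  if [[ $key == *'{'* ]]; then
    rest=${key#*\{}                   # text after the first '{'
    if [[ $rest == *'}'* ]]; then
      tag=${rest%%\}*}                # text before the first '}' that follows it
      if [ -n "$tag" ]; then
        printf '%s\n' "$tag"
        return
      fi
    fi
  fi
  printf '%s\n' "$key"                # no usable tag: the whole key is hashed
}
```

For example, `hash_tag_part 'foo{bar}{zap}'` prints `bar`, while `hash_tag_part 'foo{}{bar}'` prints the whole key because the first tag is empty.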

Redis management tools

1)phpredisadmin

下载地址:https://github.com/ErikDubbelboer/phpRedisAdmin/

Install the PHP environment and the admin tool:

[root@linux-node1 ~]# yum -y install httpd php php-redis

[root@linux-node1 ~]# cd /var/www/html/

[root@linux-node1 html]# git clone https://github.com/erikdubbelboer/phpRedisAdmin.git

Initialized empty Git repository in /var/www/html/phpRedisAdmin/.git/

remote: Counting objects: 593, done.

remote: Total 593 (delta 0), reused 0 (delta 0), pack-reused 593

Receiving objects: 100% (593/593), 175.09 KiB | 21 KiB/s, done.

Resolving deltas: 100% (359/359), done.

[root@linux-node1 html]# cd phpRedisAdmin/

[root@linux-node1 phpRedisAdmin]# git clone https://github.com/nrk/predis.git vendor

Initialized empty Git repository in /var/www/html/phpRedisAdmin/vendor/.git/

remote: Counting objects: 22351, done.

remote: Total 22351 (delta 0), reused 0 (delta 0), pack-reused 22351

Receiving objects: 100% (22351/22351), 4.80 MiB | 55 KiB/s, done.

Resolving deltas: 100% (15401/15401), done.

[root@linux-node1 html]# ll

total 4

drwxr-xr-x 7 root root 4096 Aug 4 10:56 phpRedisAdmin

[root@linux-node1 html]# chown -R apache.apache phpRedisAdmin/

Open in a browser: http://192.168.1.200/phpRedisAdmin

2)rdbtools

Installing rdbtools Pre-Requisites :

- python 2.x and pip.

- redis-py is optional and only needed to run test cases.

Official site: https://github.com/sripathikrishnan/redis-rdb-tools

[root@linux-node1 ~]# pip install rdbtools

Traceback (most recent call last):

File "/usr/bin/pip", line 5, in <module>

from pkg_resources import load_entry_point

ImportError: No module named pkg_resources

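Fix used here: yum install python-devel (pkg_resources is part of setuptools, so yum install python-setuptools is the more direct fix for this particular error)

Running it again produced another error: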

[root@linux-node1 ~]# pip install rdbtools

[root@linux-node1 7001]# pwd

/opt/7001

[root@linux-node1 7001]# rdb -c memory dump_7001.rdb > memory.csv
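The memory report is a CSV file with one row per key. Assuming the column layout `database,type,key,size_in_bytes,...` (check the header line of your own memory.csv first, since the columns may differ between rdbtools versions), the largest keys can be listed with standard tools. `top_keys` is a hypothetical bash helper, and keys that themselves contain commas are not handled.

```shell
# Hypothetical helper: print the N largest keys (default 10) from an rdbtools memory CSV on stdin.
# Assumed columns: database,type,key,size_in_bytes,... (verify against your header line).
top_keys() {
  local n=${1:-10}
  # skip the header, print "size type key", sort numerically by size, largest first
  awk -F, 'NR > 1 { print $4, $2, $3 }' | sort -k1,1nr | head -n "$n"
}
```

For the file generated above: `top_keys 20 < memory.csv`.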

3) SaltStack's redis module

Codis from Wandoujia:

URL: https://github.com/CodisLabs/codis

Install Go:

[root@linux-node1 ~]# yum -y install golang

[root@linux-node1 ~]# mkdir /opt/gopath

[root@linux-node1 ~]# tail -2 /etc/profile

export GOPATH=/opt/gopath/

export PATH=$PATH:/opt/gopath/bin

[root@linux-node1 ~]# source /etc/profile

Install the JDK:

[root@linux-node1 src]# pwd

/usr/local/src

[root@linux-node1 src]# ll

total 195868

-rw-r--r-- 1 root root 181310701 Feb 11 17:28 jdk-8u73-linux-x64.gz

[root@linux-node1 src]# tar zxf jdk-8u73-linux-x64.gz && mv jdk1.8.0_73 /usr/local/jdk && chown -R root:root /usr/local/jdk

[root@linux-node1 src]# tail -3 /etc/profile

export JAVA_HOME=/usr/local/jdk

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar

[root@linux-node1 src]# source /etc/profile

Download ZooKeeper:

[root@linux-node1 ~]# cd /usr/local/src/

[root@linux-node1 src]# wget http://mirrors.cnnic.cn/apache/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz

Build a pseudo-distributed cluster: run three ZooKeeper instances on one machine.

[root@linux-node1 ~]# cd /opt/

[root@linux-node1 opt]# mkdir zk1 zk2 zk3

[root@linux-node1 opt]# echo 1 >> zk1/myid

[root@linux-node1 opt]# echo 2 >> zk2/myid

[root@linux-node1 opt]# echo 3 >> zk3/myid

[root@linux-node1 opt]# cd /usr/local/src/

[root@linux-node1 src]# tar -xf zookeeper-3.4.6.tar.gz

[root@linux-node1 src]# mv zookeeper-3.4.6 /usr/local/zookeeper

Edit the config file:

[root@linux-node1 conf]# pwd

/usr/local/zookeeper/conf

[root@linux-node1 conf]# cp zoo_sample.cfg /opt/zoo.cfg

[root@linux-node1 opt]# grep ^[a-z] zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/opt/zk1

clientPort=2181

server.1=192.168.1.200:2887:3887

server.2=192.168.1.200:2888:3888

server.3=192.168.1.200:2889:3889

[root@linux-node1 opt]# cp zoo.cfg zk1/zk1.cfg

[root@linux-node1 opt]# cp zoo.cfg zk2/zk2.cfg

[root@linux-node1 opt]# cp zoo.cfg zk3/zk3.cfg

[root@linux-node1 opt]# grep ^[a-z] zk1/zk1.cfg

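tickTime=2000 ---> heartbeat interval (the basic time unit), in milliseconds

initLimit=10 ---> number of ticks a follower may take to connect to and sync with the leader at startup

syncLimit=5 ---> number of ticks a follower may fall behind the leader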

dataDir=/opt/zk1

clientPort=2181

server.1=192.168.1.200:2887:3887

server.2=192.168.1.200:2888:3888

server.3=192.168.1.200:2889:3889

[root@linux-node1 opt]# grep ^[a-z] zk2/zk2.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/opt/zk2

clientPort=2182

server.1=192.168.1.200:2887:3887

server.2=192.168.1.200:2888:3888

server.3=192.168.1.200:2889:3889

[root@linux-node1 opt]# grep ^[a-z] zk3/zk3.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/opt/zk3

clientPort=2183

server.1=192.168.1.200:2887:3887

server.2=192.168.1.200:2888:3888

server.3=192.168.1.200:2889:3889
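The three zkN.cfg files differ only in `dataDir` and `clientPort`, and each data directory needs a `myid` file matching its `server.N` line. The bash sketch below scripts those steps under the same assumptions as above (IP 192.168.1.200, client ports 2181-2183, ports 2887-2889 and 3887-3889 in the server lines). `make_zk_configs` is a hypothetical name, and the base directory is a parameter so it can be tried in a scratch directory first. It writes `myid` with `>` so re-running does not append a second ID.

```shell
# Hypothetical helper: create <base>/zkN/{myid,zkN.cfg} for a 3-node pseudo-cluster on one host.
make_zk_configs() {
  local base=$1 ip=${2:-192.168.1.200} n
  for n in 1 2 3; do
    mkdir -p "$base/zk$n"
    echo "$n" > "$base/zk$n/myid"       # must match the N in server.N
    cat > "$base/zk$n/zk$n.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$base/zk$n
clientPort=$((2180 + n))
server.1=$ip:2887:3887
server.2=$ip:2888:3888
server.3=$ip:2889:3889
EOF
  done
}
```

`make_zk_configs /opt` reproduces the layout above; each instance is then started with `zkServer.sh start /opt/zkN/zkN.cfg`.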

Start the services:

[root@linux-node1 ~]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk1/zk1.cfg

JMX enabled by default

Using config: /opt/zk1/zk1.cfg

Starting zookeeper ... STARTED

[root@linux-node1 ~]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk2/zk2.cfg

[root@linux-node1 ~]# /usr/local/zookeeper/bin/zkServer.sh start /opt/zk3/zk3.cfg

Check the status:

[root@linux-node1 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk1/zk1.cfg

JMX enabled by default

Using config: /opt/zk1/zk1.cfg

Mode: follower

[root@linux-node1 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk2/zk2.cfg

JMX enabled by default

Using config: /opt/zk2/zk2.cfg

Mode: leader

[root@linux-node1 opt]# /usr/local/zookeeper/bin/zkServer.sh status /opt/zk3/zk3.cfg

JMX enabled by default

Using config: /opt/zk3/zk3.cfg

Mode: follower

Test the connection:

[root@linux-node1 ~]# /usr/local/zookeeper/bin/zkCli.sh -server 192.168.1.200:2181

Connecting to 192.168.1.200:2181

[zk: 192.168.1.200:2181(CONNECTED) 0] ls /

[zookeeper]

[zk: 192.168.1.200:2181(CONNECTED) 1] ls /zookeeper

[quota]

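Download the Codis source:

[root@linux-node1 ~]# go get -u -d github.com/CodisLabs/codis

package github.com/CodisLabs/codis: no buildable Go source files in /opt/gopath/src/github.com/CodisLabs/codis

(With -d this message only says that the repository root is not a buildable Go package; the source itself should already be downloaded to /opt/gopath/src/github.com/CodisLabs/codis.)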

Addendum:

Deploying Redis with SaltStack

cd /srv/salt

mkdir -p redis/files

cd redis/files

cp /usr/local/src/redis-3.2.3.tar.gz .

cd /srv/salt/redis

cat install.sls ---> contents:

redis-install:
  file.managed:
    - name: /usr/local/src/redis-3.2.3.tar.gz
    - source: salt://redis/files/redis-3.2.3.tar.gz
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: cd /usr/local/src && tar zxf redis-3.2.3.tar.gz && cd redis-3.2.3 && make PREFIX=/usr/local/redis install
    - unless: test -d /usr/local/redis
    - require:
      - file: redis-install

redis-config:
  file.managed:
    - name: /etc/redis/6379.conf
    - source: salt://redis/files/6379.conf
    - user: root
    - group: root
    - mode: 644
    - makedirs: True    # create /etc/redis if it does not exist yet

redis-service:
  file.managed:
    - name: /etc/init.d/redis
    - source: salt://redis/files/redis.init
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: chkconfig --add redis && chkconfig redis on
    - unless: chkconfig --list |grep redis
  service.running:
    - name: redis
    - enable: True
    - watch:
      - file: redis-config
    - require:
      - cmd: redis-install
      - cmd: redis-service

Note: the /srv/salt/redis/files directory must contain redis-3.2.3.tar.gz, 6379.conf and redis.init.

The install.sls file lives in /srv/salt/redis.

In the top.sls file under /srv/salt/, specify:

base:
  '*':
    - redis.install

Zhejiang Public Security Bureau record No. 33010602011771
