Airflow Deployment

-

Environment Preparation

-

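Installing Python

Installing Python can fail in many ways, and the fixes found online are inconsistent and mostly ineffective. Here is what I learned from my own installation: follow the steps below without skipping any, and start from a clean environment. If any step reports that something "already exists", delete the existing item and run that step again. (These lessons were learned the hard way.)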

#Python build dependencies

yum -y install zlib zlib-devel

yum -y install bzip2 bzip2-devel

yum -y install ncurses ncurses-devel

yum -y install readline readline-devel

yum -y install openssl openssl-devel

yum -y install openssl-static

yum -y install xz lzma xz-devel

yum -y install sqlite sqlite-devel

yum -y install gdbm gdbm-devel

yum -y install tk tk-devel

yum -y install gcc

#Install the wget command

yum -y install wget

#Use wget to download the Python source archive into /root

wget -P /root https://www.python.org/ftp/python/3.6.5/Python-3.6.5.tgz

#Extract the Python source archive into /root

tar -zxvf /root/Python-3.6.5.tgz -C /root

#Enter the extracted directory

cd /root/Python-3.6.5

#Check and validate the platform

./configure --with-ssl --prefix=/service/python3

#Compile the Python source code

make

#Install Python

make install

#Back up the original Python symlink

mv /usr/bin/python /usr/bin/python2.backup

#Create a new symlink pointing to Python 3

ln -s /service/python3/bin/python3 /usr/bin/python

#Create the pip symlink

ln -s /service/python3/bin/pip3 /usr/bin/pip

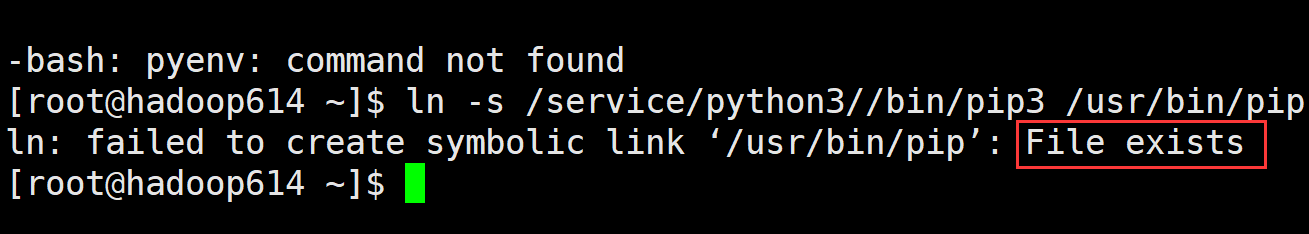

###Note: this step may fail because the link already exists. In that case, delete /usr/bin/pip and run the step again.

Error: ln: failed to create symbolic link ‘/usr/bin/pip’: File exists

Error downloading packages:

python3-rpm-generators-6-2.el7.noarch: [Errno 5] [Errno 2] No such file or directory

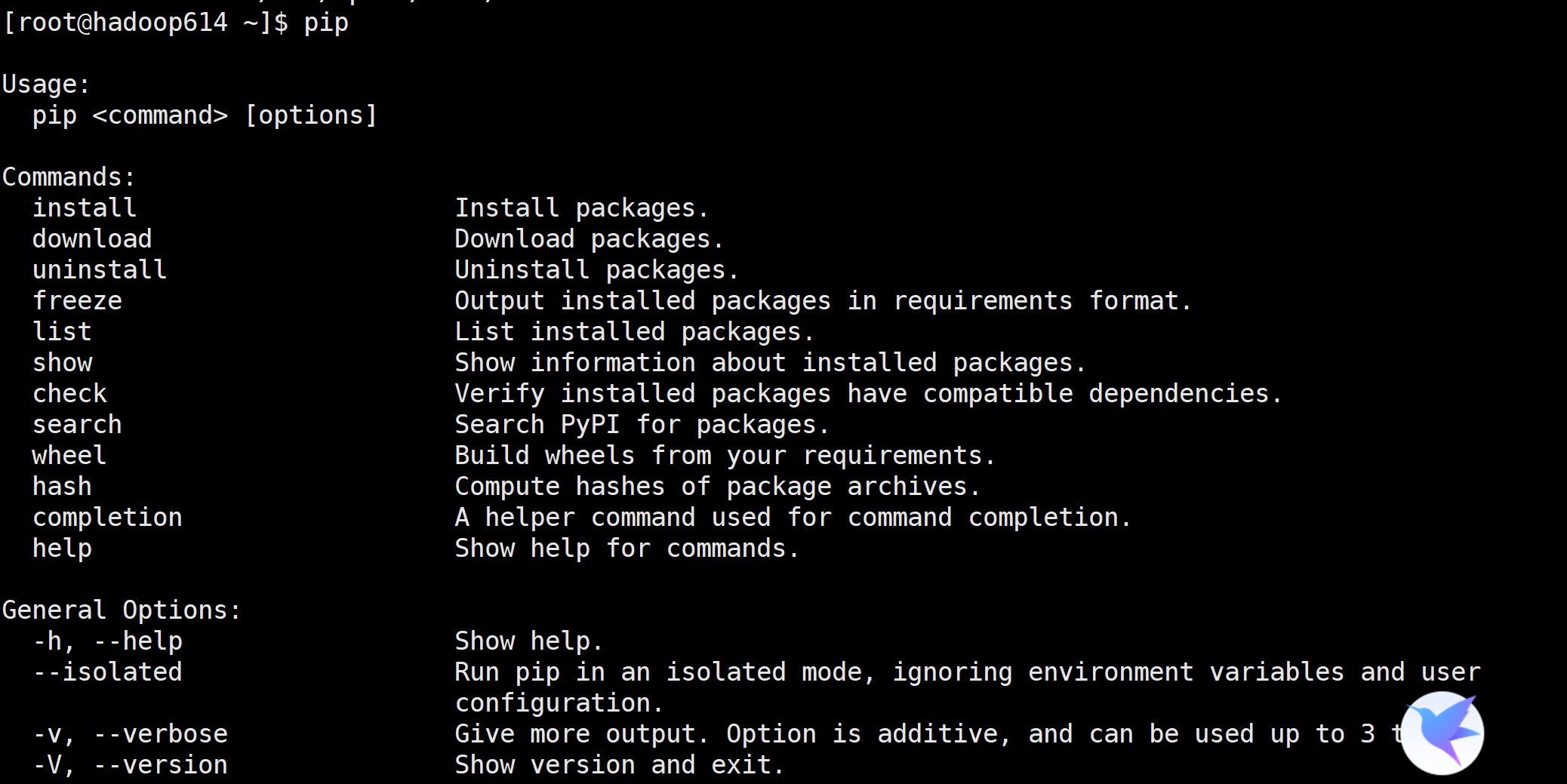

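Also, once all of the steps above are done, run the pip command to check that it works.

If it prints its usage information instead of an error, pip is working.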
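Besides pip, it can help to confirm that the freshly built interpreter actually picked up the -devel packages installed earlier. The sketch below tries to import the standard-library modules that are only built when those headers are present. The module-to-package mapping and the function name missing_modules are my own illustrative assumptions, not something from the original setup.

```python
import importlib

# Standard-library modules that are skipped at build time when the matching
# development package is absent. The mapping is an assumption for illustration.
OPTIONAL_MODULES = {
    "zlib": "zlib-devel",
    "bz2": "bzip2-devel",
    "curses": "ncurses-devel",
    "readline": "readline-devel",
    "ssl": "openssl-devel",
    "lzma": "xz-devel",
    "sqlite3": "sqlite-devel",
    "dbm.gnu": "gdbm-devel",
    "tkinter": "tk-devel",
}


def missing_modules(modules=OPTIONAL_MODULES):
    """Return {module: yum_package} for every module that fails to import."""
    missing = {}
    for name, package in modules.items():
        try:
            # A real import is needed: the pure-Python wrapper may exist
            # even when the compiled extension behind it was never built.
            importlib.import_module(name)
        except ImportError:
            missing[name] = package
    return missing


if __name__ == "__main__":
    problems = missing_modules()
    if not problems:
        print("all optional modules imported successfully")
    for name, package in problems.items():
        print("cannot import %s: install %s, then rebuild Python" % (name, package))
```

Run it with the new interpreter (for example /service/python3/bin/python3). If ssl is reported as missing, pip will not be able to download packages over HTTPS.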

-

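Installing MySQL

MySQL can be installed in two ways: from RPM packages or from a tar archive.

This article covers the simple RPM installation.

If it fails, or if you want the installation to succeed on the first attempt, use the tar archive instead. For the tar-based deployment, see: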

https://www.cnblogs.com/xuziyu/p/10353968.html

#Remove mariadb

rpm -qa | grep mariadb

#Remove the package printed by the query above (the version may differ on your system)

rpm -e --nodeps mariadb-libs-5.5.52-1.el7.x86_64

#sudo rpm -e --nodeps mariadb-libs-5.5.52-1.el7.x86_64

rpm -qa | grep mariadb

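#Download the MySQL repo package

wget -P /root http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

#Install it with rpm

rpm -ivh /root/mysql-community-release-el7-5.noarch.rpm

#Install MySQL

yum install mysql-server

#Set ownership of the data directory

chown -R mysql:mysql /var/lib/mysql

#Start the MySQL service

service mysqld start

#Log in to MySQL as root:

mysql -uroot

#Reset the MySQL root password

use mysql;

update user set password=password('root') where user='root';

flush privileges;

#Create the database and users for Airflow

#Create the database:

create database airflow;

#Create the users:

create user 'airflow'@'%' identified by 'airflow';

create user 'airflow'@'localhost' identified by 'airflow';

#Grant privileges to the users:

grant all on airflow.* to 'airflow'@'%';

#Local connections match 'airflow'@'localhost' first, so that account needs the grant as well

grant all on airflow.* to 'airflow'@'localhost';

grant all on airflow.* to 'root'@'%';

flush privileges;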

exit;

Airflow became an Apache top-level project in January 2019. It is a task scheduling framework written in Python.

-

Next, install Airflow

#Set a temporary environment variable

export SLUGIFY_USES_TEXT_UNIDECODE=yes

#Edit the environment variables

vi /etc/profile

#Append the following at the end: ----》

export PS1="[\u@\h \w]\$ "

#Python environment variables

export PYTHON_HOME=/service/python3

export PATH=$PATH:$PYTHON_HOME/bin

#Airflow environment variables

export AIRFLOW_HOME=/root/airflow

export SITE_AIRFLOW_HOME=/service/python3/lib/python3.6/site-packages/airflow

export PATH=$PATH:$SITE_AIRFLOW_HOME/bin

----》

#Apply the environment variables

source /etc/profile

#Install apache-airflow, pinned to version 1.10.0

pip install apache-airflow==1.10.0

(If this step runs through cleanly, take a moment to celebrate. It is a hard one.)

Airflow is installed into the site-packages directory of python3. The full path is:

${PYTHON_HOME}/lib/python3.6/site-packages/airflow

#Absolute path: /service/python3/lib/python3.6/site-packages/airflow

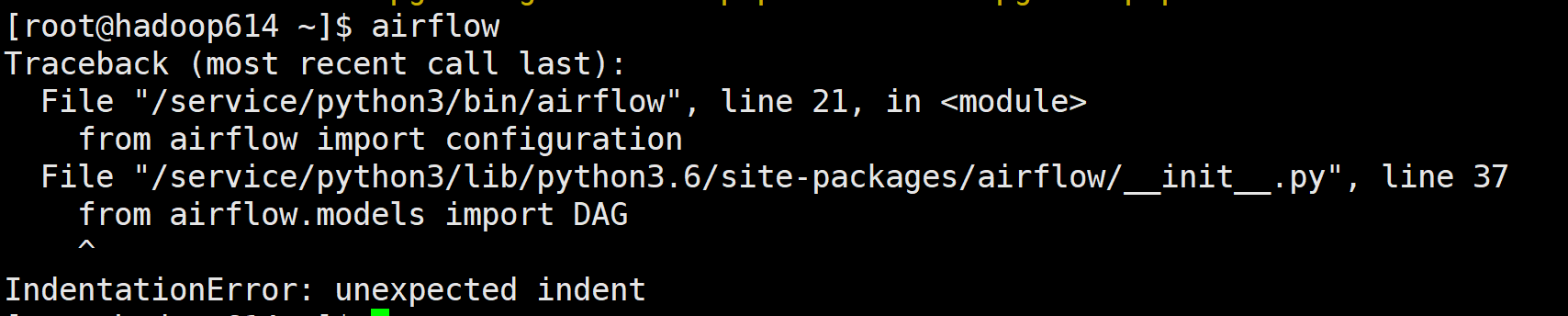

Run the airflow command to initialize

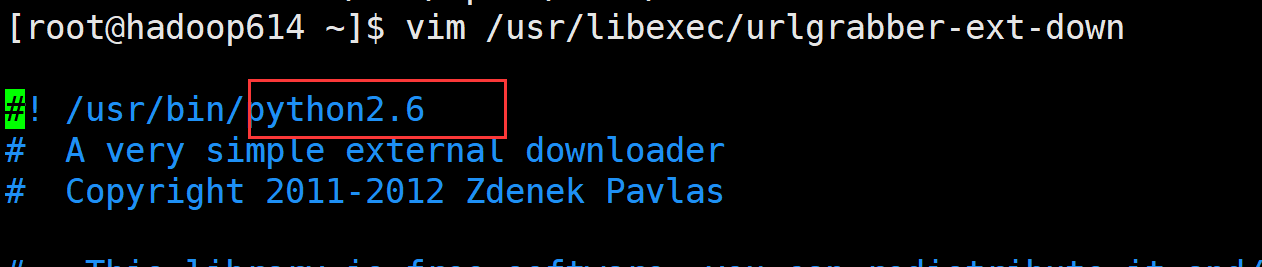

Fix: see these blog posts: https://www.cnblogs.com/wang-li/p/7620483.html

https://blog.csdn.net/yingkongshi99/article/details/52658538

airflow

####

[2019-07-17 04:40:01,565] {__init__.py:51} INFO - Using executor SequentialExecutor

usage: airflow [-h]

{backfill,list_tasks,clear,pause,unpause,trigger_dag,delete_dag,pool,variables,kerberos,render,run,initdb,list_dags,dag_state,task_failed_deps,task_state,serve_logs,test,webserver,resetdb,upgradedb,scheduler,worker,flower,version,connections,create_user}

...

airflow: error: the following arguments are required: subcommand

####

#At this point airflow has generated some files under the AIRFLOW_HOME directory set earlier. The command prints the usage error shown above (airflow: error: the following arguments are required: subcommand) because no subcommand was given; it can be ignored.

#The generated files and the logs directory look like this:

[root@test01 ~]$ cd airflow/

[root@test01 ~/airflow]$ ll

total 28

-rw-r--r--. 1 root root 20572 Jul 17 04:40 airflow.cfg

drwxr-xr-x. 3 root root 23 Jul 17 04:40 logs

-rw-r--r--. 1 root root 2299 Jul 17 04:40 unittests.cfg
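The same check can be scripted. This is a minimal sketch, assuming the two file names from the listing above are the ones worth verifying. The function name missing_airflow_files is made up for illustration. The fallback to ~/airflow mirrors Airflow's default when AIRFLOW_HOME is not set.

```python
import os

# File names taken from the directory listing above.
EXPECTED_FILES = ("airflow.cfg", "unittests.cfg")


def missing_airflow_files(airflow_home):
    """Return the expected files that are absent from airflow_home."""
    return [
        name
        for name in EXPECTED_FILES
        if not os.path.isfile(os.path.join(airflow_home, name))
    ]


if __name__ == "__main__":
    home = os.environ.get("AIRFLOW_HOME", os.path.expanduser("~/airflow"))
    missing = missing_airflow_files(home)
    if missing:
        print("%s is missing: %s" % (home, ", ".join(missing)))
    else:
        print("%s looks initialized" % home)
```

If the script reports missing files, the environment variables from /etc/profile were probably not loaded in the current shell.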

#Install the mysql module for airflow

pip install 'apache-airflow[mysql]'

#This error appears:

ERROR: Complete output from command python setup.py egg_info:

ERROR: /bin/sh: mysql_config: command not found

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/tmp/pip-install-dq81ujxt/mysqlclient/setup.py", line 16, in <module>

metadata, options = get_config()

File "/tmp/pip-install-dq81ujxt/mysqlclient/setup_posix.py", line 51, in get_config

libs = mysql_config("libs")

File "/tmp/pip-install-dq81ujxt/mysqlclient/setup_posix.py", line 29, in mysql_config

raise EnvironmentError("%s not found" % (_mysql_config_path,))

OSError: mysql_config not found

----------------------------------------

ERROR: Command "python setup.py egg_info" failed with error code 1 in /tmp/pip-install-dq81ujxt/mysqlclient/

#Solution: check whether the mysql_config file exists

[root@test01 ~]$ find / -name mysql_config

#If it does not exist

[root@test01 ~]$ yum -y install mysql-devel

#After the installation, verify again that mysql_config exists

find / -name mysql_config
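Note that find searches the whole filesystem, while the mysqlclient build only succeeds if mysql_config can be found through PATH. A small sketch of that narrower check, using only the standard library (the helper name find_on_path is hypothetical):

```python
import shutil


def find_on_path(command):
    """Return the full path of command if it is reachable through PATH, else None."""
    return shutil.which(command)


if __name__ == "__main__":
    path = find_on_path("mysql_config")
    if path is None:
        print("mysql_config is not on PATH; install mysql-devel or extend PATH")
    else:
        print("mysql_config found at", path)
```

If find locates the file but this script does not, adding its directory to PATH should be enough for pip to build mysqlclient.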

#Use mysql as airflow's metadata database

pip install mysqlclient

#Install MySQLdb

pip install MySQLdb

#This fails because no such package exists (mysqlclient, installed above, already provides the MySQLdb module on Python 3)

Collecting MySQLdb

ERROR: Could not find a version that satisfies the requirement MySQLdb (from versions: none)

ERROR: No matching distribution found for MySQLdb

#So install pymysql instead

pip install pymysql

pip install cryptography

#To avoid the following error later:

#airflow.exceptions.AirflowException: Could not create Fernet object: Incorrect padding

#change the fernet_key in airflow.cfg (located in ~/airflow/ by default)

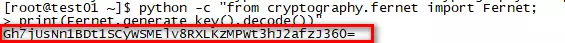

#How to change it

python -c "from cryptography.fernet import Fernet; print(Fernet.generate_key().decode())"

#This command generates a key. Copy the key and use it to replace the value of fernet_key in airflow.cfg.

#Possible error

Traceback (most recent call last):

File "<string>", line 1, in <module>

ModuleNotFoundError: No module named 'cryptography'

#Fix:

pip install cryptography
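The "Incorrect padding" error means the configured fernet_key is not valid base64. A Fernet key is 32 random bytes in URL-safe base64, so a candidate value can be checked before pasting it into airflow.cfg. The sketch below uses only the standard library. The function names are my own, and it checks the format only; it does not replace the cryptography package that Airflow uses at runtime.

```python
import base64
import binascii
import os


def generate_key():
    """Return 32 random bytes encoded as URL-safe base64 (the Fernet key format)."""
    return base64.urlsafe_b64encode(os.urandom(32)).decode()


def is_valid_fernet_key(key):
    """Return True if key decodes as URL-safe base64 to exactly 32 bytes."""
    try:
        raw = base64.urlsafe_b64decode(key.encode())
    except (binascii.Error, ValueError):
        # Raised for malformed input, including "Incorrect padding".
        return False
    return len(raw) == 32


if __name__ == "__main__":
    candidate = generate_key()
    print(candidate, is_valid_fernet_key(candidate))
```

A key that passes this check has the right shape, so the padding error should no longer appear once it is saved in airflow.cfg.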

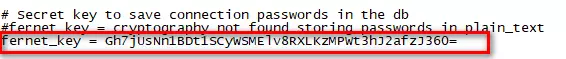

#Edit the fernet_key value in the file

cd ${AIRFLOW_HOME}

vi airflow.cfg

#Search for fernet_key

/fernet_key

#Replace the fernet_key value

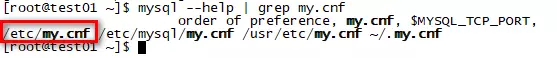

#Change the sql_alchemy_conn setting in airflow.cfg

sql_alchemy_conn = mysql+mysqldb://airflow:airflow@localhost:3306/airflow

#Save the file

#To avoid the following error when initializing the database:

#Global variable explicit_defaults_for_timestamp needs to be on (1) for mysql

#edit the MySQL configuration file my.cnf

#Locate my.cnf

mysql --help | grep my.cnf

#Edit my.cnf

vi /etc/my.cnf

#Under [mysqld] (make sure it goes in this section and nowhere else), add:

explicit_defaults_for_timestamp=true

#Restart the mysql service to apply the setting

service mysqld restart

#Check that the setting took effect

mysql -uroot -proot

mysql> select @@global.explicit_defaults_for_timestamp;

+------------------------------------------+
| @@global.explicit_defaults_for_timestamp |
+------------------------------------------+
|                                        1 |
+------------------------------------------+

1 row in set (0.00 sec)
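A typo in sql_alchemy_conn is easy to miss, so it can be useful to split the URL into its parts before running initdb. This is a rough sketch with the standard library's URL parser. It is not how SQLAlchemy itself parses the string, and describe_conn is a name invented for this example.

```python
from urllib.parse import urlsplit


def describe_conn(url):
    """Split a SQLAlchemy-style URL (dialect+driver://user:pass@host:port/db) into parts."""
    parts = urlsplit(url)
    dialect, _, driver = parts.scheme.partition("+")
    return {
        "dialect": dialect,
        "driver": driver,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }


if __name__ == "__main__":
    conn = "mysql+mysqldb://airflow:airflow@localhost:3306/airflow"
    for key, value in describe_conn(conn).items():
        print("%-8s %s" % (key, value))
```

The user, password and database printed here should match what was created in the MySQL section, and the mysqldb driver is the one provided by the mysqlclient package.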

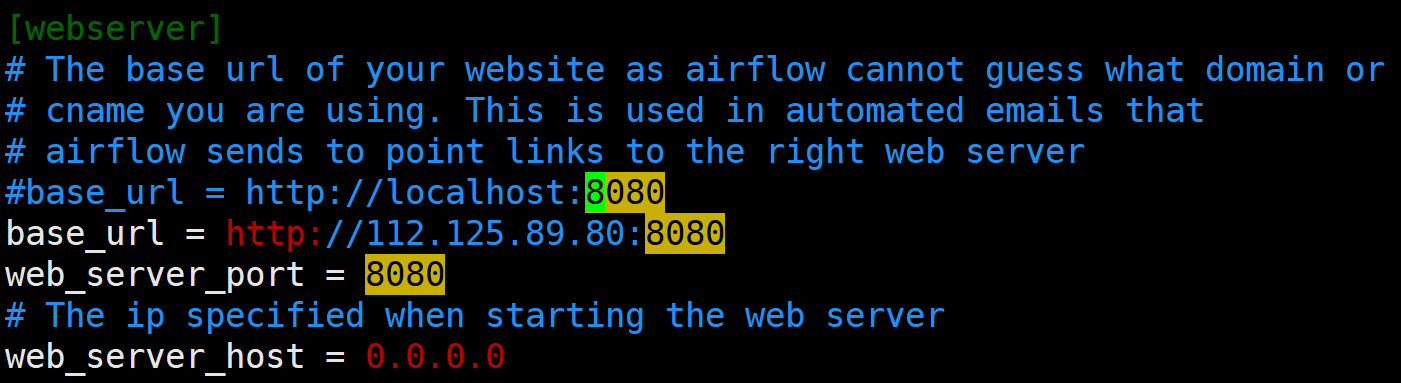

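Adjust the configuration by editing airflow.cfg

1. Change the webserver address

base_url = http://IP:8080

web_server_port = 8080

2. Change the executor

3. Time zone

Other settings

#Three other files also need to be changed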

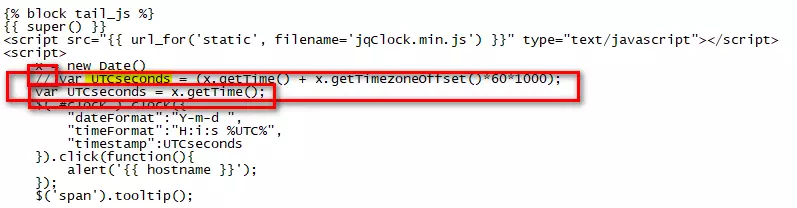

#Change the time shown in the top-right corner of the webserver page:

vi ${PYTHON_HOME}/lib/python3.6/site-packages/airflow/www/templates/admin/master.html

-----------------------------------

{% block tail_js %}

{{ super() }}

<script src="{{ url_for('static', filename='jqClock.min.js') }}" type="text/javascript"></script>

<script>

x = new Date()

// var UTCseconds = (x.getTime() + x.getTimezoneOffset()*60*1000); // original line, now commented out

var UTCseconds = x.getTime(); // modified line

$("#clock").clock({

#Change the webserver lastRun time:

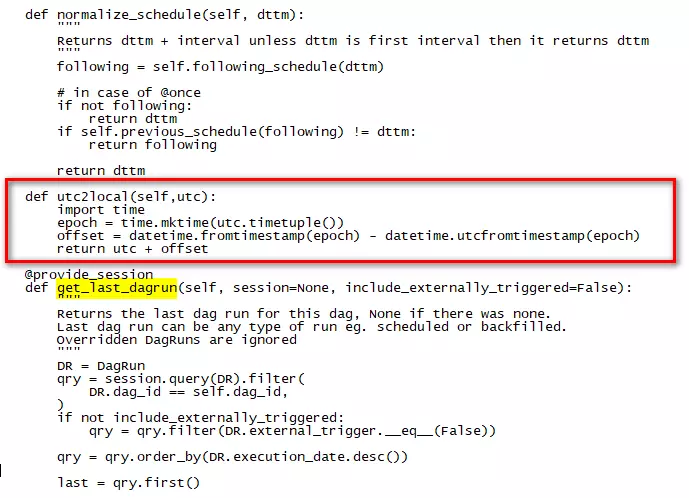

vi ${PYTHON_HOME}/lib/python3.6/site-packages/airflow/models.py

-----------------------------------》

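#Add the following at the indicated position; search for get_last_dagrun to locate it

def utc2local(self,utc):
    import time
    from datetime import datetime
    # Offset between local time and UTC at the given moment
    epoch = time.mktime(utc.timetuple())
    offset = datetime.fromtimestamp(epoch) - datetime.utcfromtimestamp(epoch)
    return utc + offset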

vi ${PYTHON_HOME}/lib/python3.6/site-packages/airflow/www/templates/airflow/dags.html

#Change the position indicated in the image to the following

dag.utc2local(last_run.execution_date).strftime("%Y-%m-%d %H:%M")

dag.utc2local(last_run.start_date).strftime("%Y-%m-%d %H:%M")
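To see what the utc2local patch is meant to do without touching Airflow's source, the conversion can be tried on its own. The sketch below is a standalone variant built on the standard datetime module, not the method patched into models.py. The function name utc_to_local and the fixed UTC+8 zone are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def utc_to_local(utc_dt, tz=None):
    """Convert a UTC datetime (naive or aware) to tz; tz=None uses the system time zone."""
    if utc_dt.tzinfo is None:
        # Naive values are interpreted as UTC, as in the patch above.
        utc_dt = utc_dt.replace(tzinfo=timezone.utc)
    return utc_dt.astimezone(tz)


if __name__ == "__main__":
    utc_plus_8 = timezone(timedelta(hours=8))  # fixed offset, for illustration only
    last_run = datetime(2019, 7, 17, 4, 40)    # timestamp taken from the log output earlier
    print(utc_to_local(last_run, utc_plus_8).strftime("%Y-%m-%d %H:%M"))
    # prints 2019-07-17 12:40
```

Passing an explicit zone makes the result independent of the server's own time zone setting, which the time.mktime approach relies on.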

4. Add user authentication (skipped for now; I do not fully understand it yet)

Here we use the simple password authentication method.

#(1) Install the password component:

sudo pip install 'apache-airflow[password]'

#(2) Edit the airflow.cfg configuration file:

[webserver]

authenticate = True

auth_backend = airflow.contrib.auth.backends.password_auth

#(3) Write a Python script that adds a user account:

#Create the file add_account.py:

import airflow
from airflow import models, settings
from airflow.contrib.auth.backends.password_auth import PasswordUser
user = PasswordUser(models.User())
user.username = 'airflow'
user.email = 'test_airflow@wps.cn'
user.password = 'airflow'
session = settings.Session()
session.add(user)
session.commit()
session.close()
exit()

#Run add_account.py:

python add_account.py

#A new record will then appear in the user table of the mysql metadata database.

5. Change the scheduler thread count to control concurrency

parallelism = 32

6. Change the interval for detecting new DAGs

min_file_process_interval = 5
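After this many manual edits it is worth reading the values back. The sketch below parses a config text with the standard configparser and reports the settings touched in this section. The section names follow the Airflow 1.10 layout as I understand it, LocalExecutor is only a placeholder because the article does not say which executor to use, and read_settings is a hypothetical helper.

```python
import configparser

# Sample text standing in for airflow.cfg. LocalExecutor is a placeholder
# assumption; the other values come from the steps above.
SAMPLE_CFG = """
[core]
executor = LocalExecutor
sql_alchemy_conn = mysql+mysqldb://airflow:airflow@localhost:3306/airflow
parallelism = 32

[webserver]
base_url = http://IP:8080
web_server_port = 8080

[scheduler]
min_file_process_interval = 5
"""

WANTED = [
    ("core", "executor"),
    ("core", "sql_alchemy_conn"),
    ("core", "parallelism"),
    ("webserver", "base_url"),
    ("webserver", "web_server_port"),
    ("scheduler", "min_file_process_interval"),
]


def read_settings(cfg_text, wanted=WANTED):
    """Return {"section.key": value}; value is None when the setting is absent."""
    # interpolation=None because real airflow.cfg values contain % characters.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    return {
        "%s.%s" % (section, key): parser.get(section, key, fallback=None)
        for section, key in wanted
    }


if __name__ == "__main__":
    for name, value in read_settings(SAMPLE_CFG).items():
        print("%-36s %s" % (name, value))
```

To check the real file, read its text from ${AIRFLOW_HOME}/airflow.cfg and pass that to read_settings instead of the sample. The script only reads, so the comments in airflow.cfg are left untouched.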

-

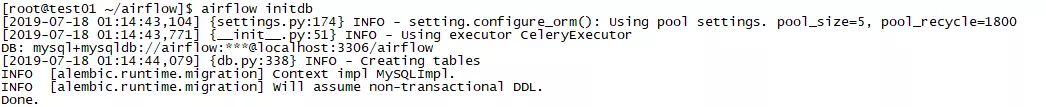

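Initialize the metadata database and start the components

#Initialize the metadata database (this simply creates the tables airflow depends on)

pip install celery

pip install 'apache-airflow[kubernetes]'

airflow initdb

#Or use airflow resetdb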

#Preparation

#Turn off the Linux firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

#The Windows firewall must also be turned off

#Database settings

mysql -uroot -proot

mysql> set password for 'root'@'localhost' =password('');

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on airflow.* to 'airflow'@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on airflow.* to 'root'@'%';

Query OK, 0 rows affected (0.01 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> exit;

#Start the components:

airflow webserver -D

#airflow scheduler -D

#airflow worker -D

#airflow flower -D

-

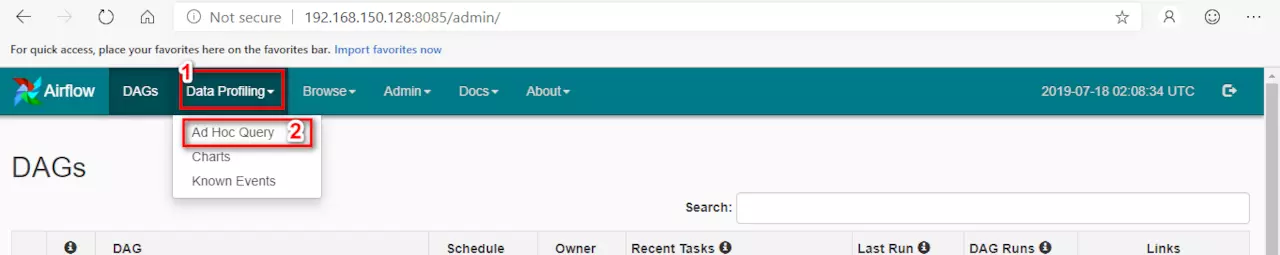

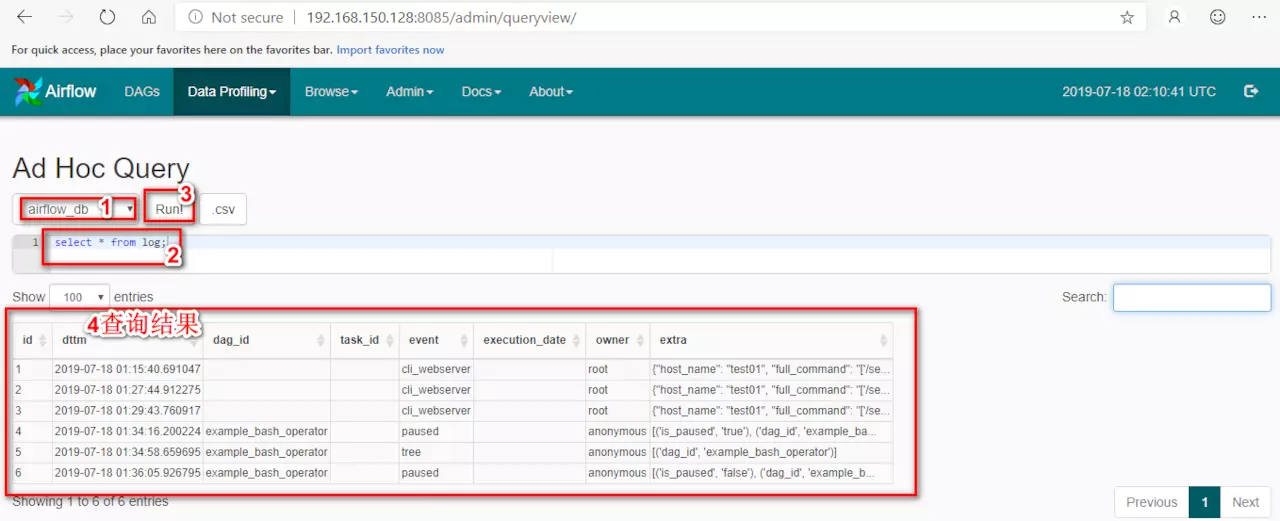

Viewing the web page

#Address

192.168.150.128:8085/admin/

#Test: select the airflow_db database and run a simple query

select * from log;
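If the page does not load, a quick first step is to check whether the webserver port is reachable at all, which separates firewall or port problems from Airflow problems. A minimal sketch with the standard socket module follows; port_is_open is a name chosen for this example, and the host and port are simply the ones from the address above.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    host, port = "192.168.150.128", 8085  # taken from the address above
    state = "reachable" if port_is_open(host, port) else "not reachable"
    print("%s:%d is %s" % (host, port, state))
```

The port checked here has to be the one set as web_server_port in airflow.cfg. If it is not reachable from your workstation but is reachable from the server itself, the firewall is the likely cause.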
