ELASTICSEARCH使用入门和整合springboot

ELASTICSEARCH使用入门

-

安装

-

使用的是docker进行的安装,安装步骤见docker笔记总结

-

安装完成后访问对应ip的9200端口既可查看到docker的各种版本信息

{ "name" : "48f14487625a", "cluster_name" : "elasticsearch", "cluster_uuid" : "TKkifGUjSNSYeOvtE_PZ1Q", "version" : { "number" : "7.6.2",//版本号 "build_flavor" : "default", "build_type" : "docker", "build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f", "build_date" : "2020-03-26T06:34:37.794943Z", "build_snapshot" : false, "lucene_version" : "8.4.0", "minimum_wire_compatibility_version" : "6.8.0", "minimum_index_compatibility_version" : "6.0.0-beta1" }, "tagline" : "You Know, for Search" }通过访问kibana 5601端口可对ELASTICSEARCH进行可视化界面。

-

-

基本概念

-

关系型数据库和es对比(一列的差不多是一个东西)

Relational DB ‐> Databases ‐> Tables ‐> Rows ‐> Columns

Elasticsearch ‐> Indices ‐> Types ‐> Documents ‐> Fields

-

-

基本操作

这些命令都可以在kibana 5601的:

有二个快捷键:

ctrl+home回到头

ctrl+end回到尾部

![]()

-

_cat查看命令

- GET /_cat/nodes:查看所有节点

- GET/cat/health: 查看es健康状况

- GET /cat/master:查看主节点

- GET /_cat/indicies:查看所有索引 ;

-

文档增删改操作

-

PUT customer/external/1创建文档

{

"name":"John Doe"

}put和post请求的可以新增文档,有一点差异

POST新增。如果不指定id,会自动生成id。指定id就会修改这个数据,并新增版本号;

PUT可以新增也可以修改。PUT必须指定id;

PUT请求更多的用来做修改操作

返回数据:

{ "_index": "customer", "_type": "external", "_id": "1", "_version": 1, "result": "created", "_shards": { "total": 2, "successful": 1, "failed": 0 }, "_seq_no": 0, "_primary_term": 1 }下划线字段代表了元数据:

"_index": "customer" 表明该数据在哪个数据库下;

"_type": "external" 表明该数据在哪个类型下;

"_id": "1" 表明被保存数据的id;

"_version": 1, 被保存数据的版本

"result": "created" 这里是创建了一条数据,如果重新put一条数据,则该状态会变为updated,并且版本号也会发生变化。

-

GET /customer/external/1查看文档

返回字段及其作用

{ "_index": "customer",//在哪个索引 "_type": "external",//在哪个类型 "_id": "1",//记录id "_version": 3,//版本号 "_seq_no": 6,//并发控制字段,每次更新都会+1,用来做乐观锁 "_primary_term": 1,//同上,主分片重新分配,如重启,就会变化 "found": true, "_source": { "name": "John Doe" } }通过“if_seq_no=1&if_primary_term=1 ”,当序列号匹配的时候,才进行修改,否则不修改。可以实现乐观锁。保证只能被一个用户抢夺过去

-

带_update的更新,只能发送post请求

POST /customer/external/1/_update

{

"doc":{

"name":"jon

}

}

返回结果

{ "_index" : "customer", "_type" : "external", "_id" : "1", "_version" : 3, "result" : "noop",//如果没有发生变化这个字段为noop,发生了变化为updated "_shards" : { "total" : 0, "successful" : 0, "failed" : 0 }, "_seq_no" : 2, "_primary_term" : 1 }带不带_update进行更新的场景

对于大并发更新,不带 _update;

对于大并发查询偶尔更新,带 _update;对比更新,重新计算分配规则。保证数据的一致性吧。 -

删除文档或者索引

不能后删除类型

DELETE customer/external/1

返回数据

{ "_index" : "customer", "_type" : "external", "_id" : "1", "_version" : 5, "result" : "deleted", "_shards" : { "total" : 2, "successful" : 1, "failed" : 0 }, "_seq_no" : 4, "_primary_term" : 1 }DELETE customer

返回数据

{ "acknowledged" : true } -

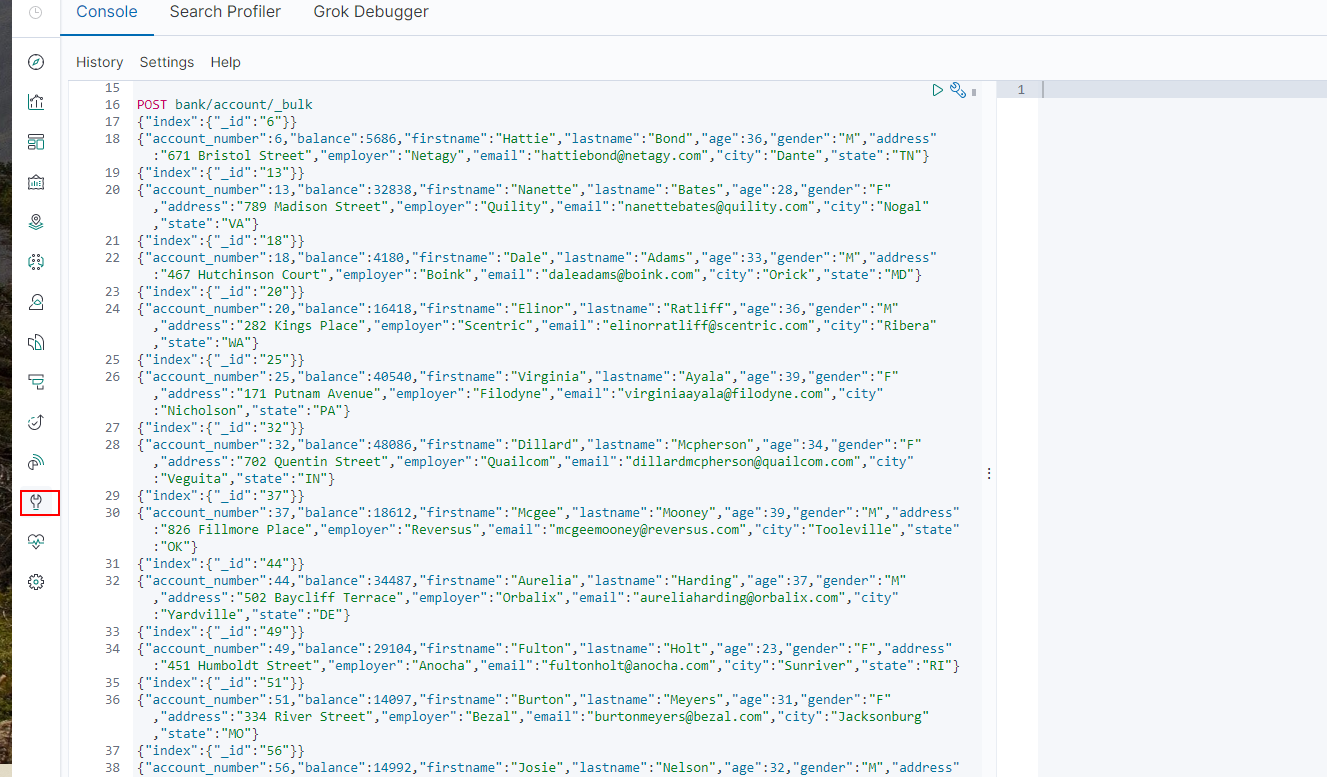

批量操作

语法格式

{action:{metadata}}\n {request body }\n 如果没有请求体可以不写例子:

POST /_bulk {"delete":{"_index":"website","_type":"blog","_id":"123"}}//没有请求体 {"create":{"_index":"website","_type":"blog","_id":"123"}} {"title":"my first blog post"} {"index":{"_index":"website","_type":"blog"}}//插入操作 {"title":"my second blog post"} {"update":{"_index":"website","_type":"blog","_id":"123"}} {"doc":{"title":"my updated blog post"}}

-

-

-

文档查询操作

https://www.elastic.co/guide/en/elasticsearch/reference/7.6/index.html在这个链接中查看所有的操作命令

-

检索的二种方式

- 通过REST request uri 发送搜索参数 (uri +检索参数)

- 通过REST request body 来发送它们(uri+请求体);

- 响应结果解释:

took - Elasticsearch执行搜索的时间(毫秒)

time_ out- 告诉我们搜索是否超时

shards- 告诉我们多少个分片被搜索了,以及统计了成功/失败的搜索分片

hits-搜索结果

hits.total-搜索结果

hits.hits-实际的搜索结果数组(默认为前10的文档)

sort -

结果的排序key (键) (没有则按 score

排序)

score

和max _score -相关性得分和最高得分(全文检索用)

-

uri+请求体进行检索(使用

from和size可以进行分页查询)GET /bank/_search { "query": { "match_all": {} }, "sort": [ { "account_number": "asc" }, {"balance":"desc"} ], "from": 20, "size": 10 }HTTP客户端工具(),get请求不能够携带请求体

-

uri +检索参数

GET bank/_search?q=*&sort=account_number:asc

-

-

Query DSL

Elasticsearch提供了一个可以执行查询的Json风格的DSL。这个被称为Query DSL,该查询语言非常全面。

-

常用结构

-

QUERY_NAME:{

ARGUMENT:VALUE,

ARGUMENT:VALUE,...

}例如:"query": { "match_all": {} },

-

QUERY_NAME:{

FIELD_NAME:{

ARGUMENT:VALUE,

ARGUMENT:VALUE,...

}

}例如:"sort": balance:

-

QUERY_NAME:VALUE

例如:"from": 0

-

-

match进行全文检索

查询字符串是模糊查询(全文检索,最终会按照评分进行排序,会对检索条件进行分词匹配。)

查询数字是精确查询

GET bank/_search

{

"query": {

"match": {

"account_number": "20"

}

}

} -

match_phrase [短句匹配]

- 将需要匹配的当成一整个单词进行检索(不分词)

GET bank/_search

{

"query": {

"match_phrase": {

"address": "mill road"

}

}

}- match的另一种查询方式

GET bank/_search

{

"query": {

"match": {

"address.keyword": "990 Mill Road"

}

}

}使用了keyword后就是对这个字段进行精确查询只有完全等于所要查的字段名时才能进行匹配。

-

multi_math【多字段匹配】

同时对state和address字段进行查询

GET bank/_search

{

"query": {

"multi_match": {

"query": "mill",

"fields": [

"state",

"address"

]

}

}

} -

bool用来做复合查询(同时使用must,must_not,should关键字)

GET bank/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"gender": "M"

}

},

{

"match": {

"address": "mill"

}

}

],

"must_not": [

{

"match": {

"age": "38"

}

}

]

}

}地址必须包含mill,性别必须包含M,年龄必须不是38(数字类型是精确查找),should并不会改变查询结果,只会更改相关性得分,

-

Filter【结果过滤】

不会影响得分,只会过滤结果

GET bank/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"address": "mill"

}

}

],

"filter": {

"range": {

"balance": {

"gte": "10000",

"lte": "20000"

}

}

}

}

}

}查询addrees中含有mill的并且工资大于10000小于20000的

-

term使用

自己在使用es的时候查询没有遇到过得关键字的方法:

-

在查询结果里面找到

这个点进去查看

文本字段不要使用term进行检索,非文本字段使用term进行检索

GET bank/_search

{

"query": {

"term": {

"address": "mill Road"

}

}

}

这样查是检索不到任何信息的,

GET bank/_search

{

"query": {

"term": {

"age": "38"

}

}

}

-

Aggregation(执行聚合)

相当于sql中的groupby和sql中的聚合函数

使用语法:

"aggs":{

"aggs_name这次聚合的名字,方便展示在结果集中":{

"AGG_TYPE聚合的类型(avg,term,terms)":{}

}

},例如:

"aggs": {

"ageAgg": {

"terms": {

"field": "age",

"size": 10

}

},

"ageAvg": {

"avg": {

"field": "age"

}

},

"balanceAvg": {

"avg": {

"field": "balance"

}

}

},terms,avg都是聚合的方式,terms是按多个字段进行聚合,avg是求均值,这些都是平行的聚合方式。聚合也可以进行嵌套,就是在ageAgg的基础上在进行聚合。

"aggs": {

"ageAgg": {

"terms": {

"field": "age",

"size": 100

},

"aggs": {

"genderAgg": {

"terms": {

"field": "gender.keyword"

},

"aggs": {

"balanceAvg": {

"avg": {

"field": "balance"

}

}

}

},

"ageBalanceAvg": {

"avg": {

"field": "balance"

}

}

}

}

},文本字段进行聚合要加上keyword,上面聚合的意思是按照年龄先进行分类,然后在此基础上按照男女进行聚合后,在算出男女的平均工资。ageBalanceAvg是按照年龄进行的平均值

-

Mapping映射

Maping是用来定义一个文档(document),以及它所包含的属性(field)是如何存储和索引的。第一次存档的时候会自动进行确定文档字段的类型

可以包含的类型有:

核心类型

字符串( string )

text, keyword

数字类型( Numenic》

long, integer, short, byte, double, floathalf floatscaled_ float

日期类型(Date )

date

布尔美型( Boolean )

boolean

二进制类型(binary )

binary复合类型

数组美型(Aray )

Array支持不针对特定的美型

对象美型(Object )

object用于单JSON对象

嵌套类型(Nested )

nested用于JSON对象数组地理类型( Geo )

地理坐标( Geo-points )

geo. ,point用于悔述经纬度坐标

地理阳形(Geo-snape )

geo. shape用于强述复杂形状,如多边形..ElasticSearch7-去掉type概念所以我们在查找的时候只使用了索引,没有使用类型

-

GET bank/_mapping

查看索引mapping

-

PUT /my_index

创建索引,并设置filed的类型

PUT /my_index

{

"mappings": {

"properties": {

"age": {

"type": "integer"

},

"email": {

"type": "keyword"//是keyword就会做精确匹配,不会做全文检索

},

"name": {

"type": "text", "index": false

}

}

}

}index:false表明字段不能被检索,

"gender" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}字段下还可以有keyword映射,在查询的时候可以使用字段keyword

添加新的字段映射

PUT /my_index/_mapping

{

"properties": {

"employee-id": {

"type": "keyword",

"index": false

}

}

}更新映射

对于已经存在的字段映射,我们不能更新。更新必须创建新的索引,进行数据迁移。

数据迁移:

POST _reindex

{

"source":{

"index":"bank",

"type":"account"//老的数据才有type

},

"dest":{

"index":"new_bank"

}

}

-

-

分词

-

自带的分词器大部分都是分英文的

POST _analyze

{

"analyzer": "standard",

"text": "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."

} -

中文分词器使用的是ik分词器

https://github.com/medcl/elasticsearch-analysis-ik/releases/download 对应es版本安装

我们已经将elasticsearch容器的“/usr/share/elasticsearch/plugins”目录,映射到宿主机的“ /mydata/elasticsearch/plugins”目录下,所以比较方便的做法就是下载“/elasticsearch-analysis-ik-7.6.2.zip”文件,然后解压到该文件夹下即可。安装完毕后,需要重启elasticsearch容器。

-

测试

GET my_index/_analyze

{

"analyzer": "ik_max_word",

"text":"我是中国人"

}因为有些词汇没有,所以需要自己定义词库

修改/usr/share/elasticsearch/plugins/ik/config中的IKAnalyzer.cfg.xml更改这个文件

<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd"> <properties> <comment>IK Analyzer 扩展配置</comment> <!--用户可以在这里配置自己的扩展字典 --> <entry key="ext_dict"></entry> <!--用户可以在这里配置自己的扩展停止词字典--> <entry key="ext_stopwords"></entry> <!--用户可以在这里配置远程扩展字典 --> <entry key="remote_ext_dict">http://#/es/fenci.txt</entry>//更改这里 <!--用户可以在这里配置远程扩展停止词字典--> <!-- <entry key="remote_ext_stopwords">words_location</entry> --> </properties>修改完成后,需要重启elasticsearch容器,否则修改不生效。

-

-

elasticsearch-Rest-Client(springboot整合elasticsearch)

-

9300: TCP

spring-data-elasticsearch:transport-api.jar;

- springboot版本不同,ransport-api.jar不同,不能适配es版本

- 7.x已经不建议使用,8以后就要废弃

-

9200: HTTP

- jestClient: 非官方,更新慢;

- RestTemplate:模拟HTTP请求,ES很多操作需要自己封装,麻烦;

- HttpClient:同上;

- Elasticsearch-Rest-Client:官方RestClient,封装了ES操作,API层次分明,上手简单;

最终选择Elasticsearch-Rest-Client(elasticsearch-rest-high-level-client);

https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high.html

-

导入依赖

<dependency> <groupId>org.elasticsearch.client</groupId> <artifactId>elasticsearch-rest-high-level-client</artifactId> <version>7.6.2</version> </dependency>在spring-boot-dependencies中所依赖的ELK版本位6.8.7

springboot中导入了版本需要改一下:

<elasticsearch.version>6.8.7</elasticsearch.version>

更改:

<properties> ... <elasticsearch.version>7.6.2</elasticsearch.version> </properties>怎么样通过java调用保存数据:

https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high-document-index.html参考 -

编写配置文件

public static final RequestOptions COMMON_OPTIONS; static { RequestOptions.Builder builder = RequestOptions.DEFAULT.toBuilder(); // builder.addHeader("Authorization", "Bearer " + TOKEN); // builder.setHttpAsyncResponseConsumerFactory( // new HttpAsyncResponseConsumerFactory // .HeapBufferedResponseConsumerFactory(30 * 1024 * 1024 * 1024)); COMMON_OPTIONS = builder.build(); } @Bean public RestHighLevelClient esRestClient(){ RestClientBuilder builder = RestClient.builder(new HttpHost("192.168.0.111", 9200)); RestHighLevelClient client = new RestHighLevelClient(builder); // RestHighLevelClient client = new RestHighLevelClient( // RestClient.builder( // new HttpHost("localhost", 9200, "http"))); return client; } -

写数据

@Test public void indexData() throws IOException { IndexRequest indexRequest = new IndexRequest ("users"); User user = new User(); user.setUserName("张三"); user.setAge(20); user.setGender("男"); String jsonString = JSON.toJSONString(user); //设置要保存的内容 indexRequest.source(jsonString, XContentType.JSON); //执行创建索引和保存数据 IndexResponse index = client.index(indexRequest, GulimallElasticSearchConfig.COMMON_OPTIONS); System.out.println(index); } -

获取数据

public void searchData() throws IOException { GetRequest getRequest = new GetRequest( "users", "_-2vAHIB0nzmLJLkxKWk"); GetResponse getResponse = client.get(getRequest, RequestOptions.DEFAULT); System.out.println(getResponse); String index = getResponse.getIndex(); System.out.println(index); String id = getResponse.getId(); System.out.println(id); if (getResponse.isExists()) { long version = getResponse.getVersion(); System.out.println(version); String sourceAsString = getResponse.getSourceAsString(); System.out.println(sourceAsString); Map<String, Object> sourceAsMap = getResponse.getSourceAsMap(); System.out.println(sourceAsMap); byte[] sourceAsBytes = getResponse.getSourceAsBytes(); } else { } } -

复杂查询

//1. 创建检索请求 SearchRequest searchRequest = new SearchRequest(); //1.1)指定索引 searchRequest.indices("bank"); //1.2)构造检索条件 SearchSourceBuilder sourceBuilder = new SearchSourceBuilder(); //有前面各种各样的查询条件from sort size啊 sourceBuilder.query(QueryBuilders.matchQuery("address","Mill")); //1.2.1)按照年龄分布进行聚合 TermsAggregationBuilder ageAgg=AggregationBuilders.terms("ageAgg").field("age").size(10); sourceBuilder.aggregation(ageAgg); //1.2.2)计算平均年龄 AvgAggregationBuilder ageAvg = AggregationBuilders.avg("ageAvg").field("age"); sourceBuilder.aggregation(ageAvg); //1.2.3)计算平均薪资 AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance"); sourceBuilder.aggregation(balanceAvg); System.out.println("检索条件:"+sourceBuilder); searchRequest.source(sourceBuilder); //2. 执行检索 SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT); System.out.println("检索结果:"+searchResponse); //3. 将检索结果封装为Bean SearchHits hits = searchResponse.getHits(); SearchHit[] searchHits = hits.getHits(); for (SearchHit searchHit : searchHits) { String sourceAsString = searchHit.getSourceAsString(); Account account = JSON.parseObject(sourceAsString, Account.class); System.out.println(account); } //4. 获取聚合信息 Aggregations aggregations = searchResponse.getAggregations(); Terms ageAgg1 = aggregations.get("ageAgg"); for (Terms.Bucket bucket : ageAgg1.getBuckets()) { String keyAsString = bucket.getKeyAsString(); System.out.println("年龄:"+keyAsString+" ==> "+bucket.getDocCount()); } Avg ageAvg1 = aggregations.get("ageAvg"); System.out.println("平均年龄:"+ageAvg1.getValue()); Avg balanceAvg1 = aggregations.get("balanceAvg"); System.out.println("平均薪资:"+balanceAvg1.getValue()); }

-

浙公网安备 33010602011771号

浙公网安备 33010602011771号