Kubernetes - Simple Cluster Configuration

# install docker

[22:33:01 root@master1 scripts]#cat /etc/docker/daemon.json

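{

  "registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"],

  "insecure-registries": ["10.0.0.55:80"],

  "exec-opts": ["native.cgroupdriver=systemd"]

}

# Notes: insecure-registries is the Harbor address. exec-opts sets Docker's cgroup driver to systemd so that it matches the kubelet. daemon.json is strict JSON, so these notes must not be written into the file itself.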
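Docker parses daemon.json as strict JSON, so the file on disk cannot carry `#` comments. The following is a minimal sketch that writes a comment-free file with the same three settings. The helper name `write_daemon_json` and its arguments are hypothetical and only for illustration.

```shell
# Hypothetical helper: write a comment-free daemon.json.
# $1 = target file, $2 = Harbor address (host:port)
write_daemon_json() {
  cat > "$1" <<EOF
{
  "registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"],
  "insecure-registries": ["$2"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Usage on a real host (as root, not run here):
#   write_daemon_json /etc/docker/daemon.json 10.0.0.55:80
#   systemctl restart docker
#   docker info | grep -i 'cgroup driver'
```

After the restart, `docker info` should report the systemd cgroup driver if the file was picked up.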

[22:45:21 root@master1 images]#docker load < k8s-v1.22.1.tar

07363fa84210: Loading layer [==================================================>] 3.062MB/3.062MB

71204d686e50: Loading layer [==================================================>] 1.71MB/1.71MB

09a0fcc34bc8: Loading layer [==================================================>] 124.9MB/124.9MB

Loaded image: 10.0.0.19:80/google_containers/kube-apiserver:v1.22.1

498fc61ea412: Loading layer [==================================================>] 118.5MB/118.5MB

Loaded image: 10.0.0.19:80/google_containers/kube-controller-manager:v1.22.1

03294f7c6532: Loading layer [==================================================>] 49.14MB/49.14MB

Loaded image: 10.0.0.19:80/google_containers/kube-scheduler:v1.22.1

48b90c7688a2: Loading layer [==================================================>] 61.99MB/61.99MB

54e23a97593b: Loading layer [==================================================>] 43.47MB/43.47MB

Loaded image: 10.0.0.19:80/google_containers/kube-proxy:v1.22.1

dee215ffc666: Loading layer [==================================================>] 684.5kB/684.5kB

Loaded image: 10.0.0.19:80/google_containers/pause:3.5

417cb9b79ade: Loading layer [==================================================>] 3.062MB/3.062MB

33158bca9fb5: Loading layer [==================================================>] 2.13MB/2.13MB

13de6ee856e9: Loading layer [==================================================>] 266.9MB/266.9MB

eb364b1a02ca: Loading layer [==================================================>] 2.19MB/2.19MB

ce8b3ebd2ee7: Loading layer [==================================================>] 21.5MB/21.5MB

Loaded image: 10.0.0.19:80/google_containers/etcd:3.5.0-0

225df95e717c: Loading layer [==================================================>] 336.4kB/336.4kB

f72781b18181: Loading layer [==================================================>] 47.34MB/47.34MB

Loaded image: 10.0.0.19:80/google_containers/coredns:v1.8.4

[22:45:47 root@master1 images]#docker load < k8s-dashboard-v2.3.1.tar

8ca79a390046: Loading layer [==================================================>] 223.1MB/223.1MB

c94f86b1c637: Loading layer [==================================================>] 3.072kB/3.072kB

Loaded image: 10.0.0.19:80/google_containers/dashboard:v2.3.1

6de384dd3099: Loading layer [==================================================>] 34.55MB/34.55MB

a652c34ae13a: Loading layer [==================================================>] 3.584kB/3.584kB

Loaded image: 10.0.0.19:80/google_containers/metrics-scraper:v1.0.6

[23:27:44 root@master1 images]#docker tag 10.0.0.19:80/google_containers/kube-apiserver:v1.22.1 10.0.0.55:80/google_containers/kube-apiserver:v1.22.1

[23:28:46 root@master1 images]#docker tag 10.0.0.19:80/google_containers/kube-scheduler:v1.22.1 10.0.0.55:80/google_containers/kube-scheduler:v1.22.1

[23:29:12 root@master1 images]#docker tag 10.0.0.19:80/google_containers/kube-proxy:v1.22.1 10.0.0.55:80/google_containers/kube-proxy:v1.22.1

[23:29:28 root@master1 images]#docker tag 10.0.0.19:80/google_containers/kube-controller-manager:v1.22.1 10.0.0.55:80/google_containers/kube-controller-manager:v1.22.1

[23:29:43 root@master1 images]#docker tag 10.0.0.19:80/google_containers/dashboard:v2.3.1 10.0.0.55:80/google_containers/dashboard:v2.3.1

[23:30:13 root@master1 images]#docker tag 10.0.0.19:80/google_containers/etcd:3.5.0-0 10.0.0.55:80/google_containers/etcd:3.5.0-0

[23:30:40 root@master1 images]#docker tag 10.0.0.19:80/google_containers/coredns:v1.8.4 10.0.0.55:80/google_containers/coredns:v1.8.4

[23:31:02 root@master1 images]#docker tag 10.0.0.19:80/google_containers/pause:3.5 10.0.0.55:80/google_containers/pause:3.5

[23:31:21 root@master1 images]#docker tag 10.0.0.19:80/google_containers/metrics-scraper:v1.0.6 10.0.0.55:80/google_containers/metrics-scraper:v1.0.6

[23:33:11 root@master1 images]#for i in 10.0.0.55:80/google_containers/kube-proxy:v1.22.1 10.0.0.55:80/google_containers/kube-controller-manager:v1.22.1 10.0.0.55:80/google_containers/dashboard:v2.3.1 10.0.0.55:80/google_containers/etcd:3.5.0-0 10.0.0.55:80/google_containers/coredns:v1.8.4 10.0.0.55:80/google_containers/pause:3.5 10.0.0.55:80/google_containers/metrics-scraper:v1.0.6; do docker push "$i"; done

The push refers to repository [10.0.0.55:80/google_containers/kube-proxy]

54e23a97593b: Pushed

48b90c7688a2: Pushed

v1.22.1: digest: sha256:87ad8b02618b73419d77920e0556e7c484501ddfa79f7ad554f5d17a473e84da size: 740

The push refers to repository [10.0.0.55:80/google_containers/kube-controller-manager]

498fc61ea412: Pushed

71204d686e50: Mounted from google_containers/kube-scheduler

07363fa84210: Mounted from google_containers/kube-scheduler

v1.22.1: digest: sha256:42617ed730cf7afdfccea9eb584abe3bb139a6dab56686bea0c6359037b4daec size: 949

The push refers to repository [10.0.0.55:80/google_containers/dashboard]

c94f86b1c637: Pushed

8ca79a390046: Pushed

v2.3.1: digest: sha256:e5848489963be532ec39d454ce509f2300ed8d3470bdfb8419be5d3a982bb09a size: 736

The push refers to repository [10.0.0.55:80/google_containers/etcd]

ce8b3ebd2ee7: Pushed

eb364b1a02ca: Pushed

13de6ee856e9: Pushed

33158bca9fb5: Pushed

417cb9b79ade: Pushed

3.5.0-0: digest: sha256:de6a50021feadfde321d44cf1934a806595e59d9cc77d68f0ce85cef8d1ab2ed size: 1372

The push refers to repository [10.0.0.55:80/google_containers/coredns]

f72781b18181: Pushed

225df95e717c: Pushed

v1.8.4: digest: sha256:10683d82b024a58cc248c468c2632f9d1b260500f7cd9bb8e73f751048d7d6d4 size: 739

The push refers to repository [10.0.0.55:80/google_containers/pause]

dee215ffc666: Pushed

3.5: digest: sha256:2f4b437353f90e646504ec8317dacd6123e931152674628289c990a7a05790b0 size: 526

The push refers to repository [10.0.0.55:80/google_containers/metrics-scraper]

a652c34ae13a: Pushed

6de384dd3099: Pushed

v1.0.6: digest: sha256:c09adb7f46e1a9b5b0bde058713c5cb47e9e7f647d38a37027cd94ef558f0612 size: 736
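The tag and push steps above list every image by hand, which is easy to get wrong. Note also that kube-apiserver and kube-scheduler are not in the push loop, so they were presumably pushed separately. A minimal sketch that derives the new name from the old one instead is shown below. The helper names `retarget_image` and `mirror_images` and the `DOCKER` override variable are hypothetical.

```shell
# Hypothetical helper: swap the registry host of an image reference.
# $1 = source reference, $2 = new registry (host:port)
retarget_image() {
  # ${1#*/} strips everything up to and including the first "/".
  printf '%s/%s\n' "$2" "${1#*/}"
}

# Read image references from stdin, retag each one for the registry in $1
# and push it. Set DOCKER=echo to print the commands instead of running them.
mirror_images() {
  while read -r src; do
    dst=$(retarget_image "$src" "$1")
    ${DOCKER:-docker} tag "$src" "$dst" && ${DOCKER:-docker} push "$dst"
  done
}

# Usage (not run here):
#   docker images --format '{{.Repository}}:{{.Tag}}' \
#     | grep '^10.0.0.19:80/' | mirror_images 10.0.0.55:80
```

Because the target name is computed from the source name, repository names such as `etcd` cannot be mistyped along the way.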

# install docker-compose

curl -L https://github.com/docker/compose/releases/download/1.25.3/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

Or

[root@ubuntu1804 ~]#apt -y install python-pip

[root@ubuntu1804 ~]#pip install docker-compose

[root@ubuntu1804 ~]#docker-compose --version

# install harbor

[22:14:51 root@ha1 harbor]#tar -zxvf harbor-offline-installer-v2.3.2.tgz -C /usr/local/

[22:14:51 root@ha1 harbor]#cd /usr/local/harbor/

[22:14:51 root@ha1 harbor]#docker load < harbor.v2.3.2.tar.gz

[22:20:07 root@ha1 harbor]#grep -Ev '^#|^ #|^$|^ #' harbor.yml

hostname: 10.0.0.55

http:

  port: 80

harbor_admin_password: 123456

database:

  password: root123

  max_idle_conns: 100

  max_open_conns: 900

data_volume: /data/harbor

trivy:

  ignore_unfixed: false

  skip_update: false

  insecure: false

jobservice:

  max_job_workers: 10

notification:

  webhook_job_max_retry: 10

chart:

  absolute_url: disabled

log:

  level: info

  local:

    rotate_count: 50

    rotate_size: 200M

    location: /var/log/harbor

_version: 2.3.0

proxy:

  http_proxy:

  https_proxy:

  no_proxy:

  components:

    - core

    - jobservice

    - trivy

./prepare

./install.sh
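Harbor only accepts pushes into projects that already exist, so the `google_containers` and `myapps` projects used in this walkthrough have to be created first, either in the web UI or through the API. A minimal sketch using the Harbor v2 REST API follows. The helper names and the `HARBOR_PASSWORD` variable are hypothetical, and making the projects public is an assumption so that nodes can pull without logging in.

```shell
# Hypothetical helper: JSON body for creating a public Harbor project.
# $1 = project name
harbor_project_payload() {
  printf '{"project_name": "%s", "metadata": {"public": "true"}}\n' "$1"
}

# Create a project through the Harbor v2 API.
# $1 = Harbor address, $2 = project name.
# The admin password is read from HARBOR_PASSWORD.
create_harbor_project() {
  curl -s -u "admin:${HARBOR_PASSWORD}" \
    -H 'Content-Type: application/json' \
    -X POST "http://$1/api/v2.0/projects" \
    -d "$(harbor_project_payload "$2")"
}

# Usage (not run here):
#   HARBOR_PASSWORD=123456 create_harbor_project 10.0.0.55:80 google_containers
#   HARBOR_PASSWORD=123456 create_harbor_project 10.0.0.55:80 myapps
#   docker login 10.0.0.55:80 -u admin
```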

# Single-master deployment

[06:02:31 root@master1 ~]#bash install_k8s_base_env.sh

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1
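The contents of install_k8s_base_env.sh are not shown. Judging only from the four kernel settings it prints, it probably applies a sysctl file. The sketch below is a guess at that part, not the author's script; the helper name `write_k8s_sysctl` and the file path in the usage notes are assumptions.

```shell
# Hypothetical reconstruction: write the kernel settings printed above to $1.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage on a real node (as root, not run here):
#   modprobe br_netfilter          # the net.bridge.* keys need this module
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl -p /etc/sysctl.d/k8s.conf
```

`sysctl -p` echoes each setting as it applies it, which matches the output shown above.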

[22:31:19 root@master1 scripts]#apt update && apt install -y apt-transport-https

[22:34:58 root@master1 scripts]#curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

[22:35:48 root@master1 scripts]#cat /etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

[22:35:43 root@master1 scripts]#apt update

[22:37:04 root@master1 scripts]#apt install -y kubelet=1.22.1-00 kubeadm=1.22.1-00 kubectl=1.22.1-00

[23:35:12 root@master1 images]#kubeadm init --kubernetes-version=1.22.1 \

> --apiserver-advertise-address=10.0.0.50 \

> --image-repository 10.0.0.55:80/google_containers \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16 \

> --ignore-preflight-errors=Swap

============================

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.50:6443 --token 0jsmms.m4e3uaoa3mu0pc6j \

--discovery-token-ca-cert-hash sha256:5183e53d6c411e7166c641a19737a9a2f68a9b6d48b17e18177a03047dae517f

==========================

[06:10:55 root@master1 ~]#vim /etc/kubernetes/manifests/kube-scheduler.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    component: kube-scheduler

    tier: control-plane

  name: kube-scheduler

  namespace: kube-system

spec:

  containers:

  - command:

    - kube-scheduler

    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

    - --bind-address=127.0.0.1

    - --kubeconfig=/etc/kubernetes/scheduler.conf

    - --leader-elect=true

    #- --port=0    # comment out this line

[06:11:52 root@master1 ~]#systemctl restart kubelet.service

[06:12:09 root@master1 ~]#kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

controller-manager Healthy ok
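The manual edit of the scheduler manifest can also be scripted. Below is a minimal sketch that comments out the `- --port=0` argument while keeping its indentation. The helper name `disable_port_zero` is hypothetical.

```shell
# Hypothetical helper: comment out the "- --port=0" argument in a static
# Pod manifest. $1 = manifest path. Running it twice changes nothing more.
disable_port_zero() {
  tmp=$(mktemp) || return 1
  # \( *\) captures the leading spaces so the YAML indentation is preserved.
  sed 's/^\( *\)- --port=0/\1#- --port=0/' "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
}

# Usage (as root, not run here):
#   disable_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
#   systemctl restart kubelet
#   kubectl get cs
```

Writing the result back with `cat` rather than `mv` keeps the owner and mode of the original manifest.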

[06:02:31 root@master1 ~]#mkdir flannel && cd flannel

[06:18:44 root@master1 flannel]#wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[07:04:33 root@master1 flannel]#kubectl apply -f kube-flannel.yml

[07:04:53 root@master1 flannel]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.noisedu.cn Ready control-plane,master 61m v1.22.1

[07:13:15 root@node2 ~]#kubeadm join 10.0.0.50:6443 --token 0jsmms.m4e3uaoa3mu0pc6j \

> --discovery-token-ca-cert-hash sha256:5183e53d6c411e7166c641a19737a9a2f68a9b6d48b17e18177a03047dae517f

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[07:05:11 root@master1 flannel]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.noisedu.cn Ready control-plane,master 70m v1.22.1

node1.noisedu.cn Ready <none> 32s v1.22.1

node2.noisedu.cn Ready <none> 24s v1.22.1

# Configure command completion

[07:14:33 root@master1 ~]#echo "source <(kubectl completion bash)" >> ~/.bashrc

[07:17:31 root@master1 ~]#source .bashrc

# Configure dashboard

[07:21:14 root@master1 data]#grep 'image' recommended.yaml

image: 10.0.0.55:80/google_containers/dashboard:v2.3.1

imagePullPolicy: IfNotPresent

image: 10.0.0.55:80/google_containers/metrics-scraper:v1.0.6

imagePullPolicy: IfNotPresent

[07:21:23 root@master1 data]#vim recommended.yaml

[07:21:56 root@master1 data]#kubectl apply -f recommended.yaml

[07:23:41 root@master1 ~]#kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[07:25:59 root@master1 ~]#kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[07:27:11 root@master1 ~]#kubectl -n kube-system get secret| grep dashboard-admin

dashboard-admin-token-crqqw kubernetes.io/service-account-token 3 77s

[07:27:16 root@master1 ~]#kubectl describe secrets -n kube-system dashboard-admin-token-crqqw

Name: dashboard-admin-token-crqqw

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 10ab0291-68da-47ae-9428-e37132e1b7e9

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Ik8yRVRkdVNMZGlMcHVaVC1mYWlwbkNMajhicldNWE50SDdubXc0ckhyY0kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tY3JxcXciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMTBhYjAyOTEtNjhkYS00N2FlLTk0MjgtZTM3MTMyZTFiN2U5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.Gnrfd733obT7txJLfody2kCLDnKVucPcS4Tx1gM-b2MmgrHqebHRsgy6jJWEjGOd5xR8HxSy1cfJPSSEHEFrXWi9HWoeUlFDVI23Du9GFnJbTNJuIIcjh9Mh0QgoHkDNY2Bo6vQ7BbAK4-iE39VHZYwmDe15WpIMwCv5gvzqgjbc5KbjKIbPK91DwnS61GBL95-Tbq18eF-nMRrHQEndfPAfoh6qdos2mxA1S2LS7fouXZPKUgTmwkHWGZsnZDmuZ-J4JOpv5S1aU8HMFcV-5L6yIrThTly8PiCnW6mIQ-YQqy06keaXhRvD3kMmQPqFWScSKtoW8sg1LCK1x80-ew

=======
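The two lookup steps above (find the Secret name, then describe it) can be combined. A minimal sketch, assuming the Secret name still starts with `dashboard-admin-token-` as in the listing above; the helper name `dashboard_secret_name` is hypothetical.

```shell
# Hypothetical helper: pick the dashboard-admin token Secret name out of
# `kubectl -n kube-system get secret` output read from stdin.
dashboard_secret_name() {
  awk '$1 ~ /^dashboard-admin-token-/ { print $1; exit }'
}

# Usage (not run here):
#   name=$(kubectl -n kube-system get secret | dashboard_secret_name)
#   kubectl -n kube-system get secret "$name" \
#     -o jsonpath='{.data.token}' | base64 -d
```

The `jsonpath` form prints only the token, which is convenient for pasting into the dashboard login page.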

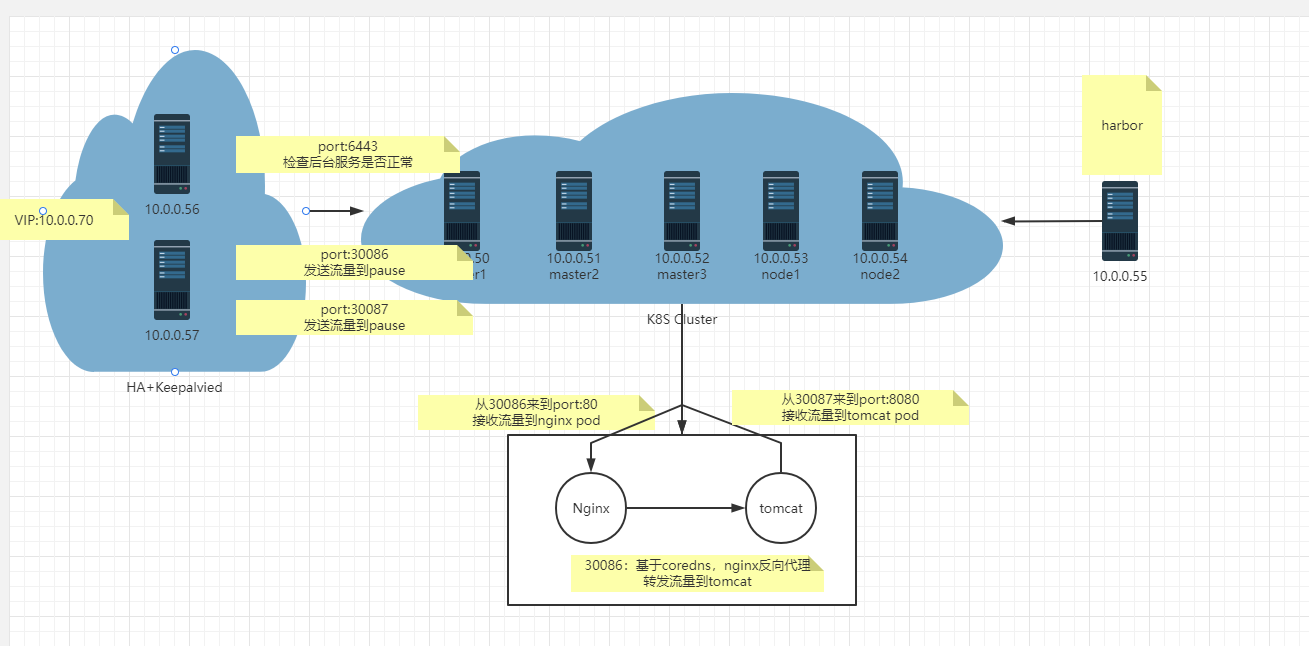

# haproxy + keepalived configuration

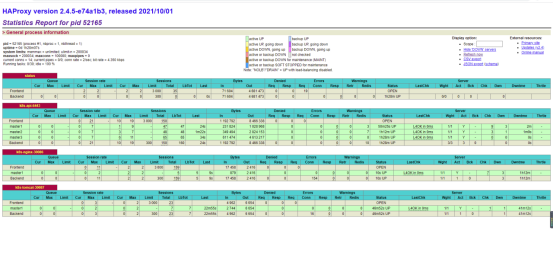

[21:11:28 root@noise ~]#vim /etc/haproxy/haproxy.cfg

listen status

    bind 10.0.0.70:9999

    mode http

    log global

    stats enable

    stats uri /haproxy-stats

    stats auth haadmin:123456

listen k8s-api-6443

    bind 10.0.0.70:6443

    mode tcp

    server master1 10.0.0.50:6443 check inter 3s fall 3 rise 5

    server master2 10.0.0.51:6443 check inter 3s fall 3 rise 5

    server master3 10.0.0.52:6443 check inter 3s fall 3 rise 5

listen k8s-nginx-30086

    bind 10.0.0.70:30086

    mode tcp

    server master1 10.0.0.50:30086 check inter 3s fall 3 rise 5

listen k8s-tomcat-30087

    bind 10.0.0.70:30087

    mode tcp

    server master1 10.0.0.50:30087 check inter 3s fall 3 rise 5

[21:12:11 root@noise ~]#vim /etc/keepalived/keepalived.conf

vrrp_script check_haproxy {

    script "/data/check_haproxy.sh"

    interval 1

    weight -30

    fall 3

    rise 5

    timeout 2

}

vrrp_instance haproxy_ins {

    state MASTER

    interface eth0

    virtual_router_id 99

    #nopreempt

    priority 100

    advert_int 1

    authentication {

        auth_type PASS

        auth_pass 123456

    }

    virtual_ipaddress {

        10.0.0.70/24 dev eth0 label eth0:2

        10.0.0.80/24 dev eth0 label eth0:3

    }

    track_interface {

        eth0

    }

    track_script {

        check_haproxy

    }

}

[21:18:52 root@noise ~]#vim /data/check_haproxy.sh

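#!/bin/bash

# If haproxy is down, try to start it; if it is still down, stop keepalived so the VIP can move away.

HAPROXY_STATUS=$(ps -C haproxy --no-headers | wc -l)

if [ "$HAPROXY_STATUS" -eq 0 ]; then

    systemctl start haproxy

    sleep 3

    if [ "$(ps -C haproxy --no-headers | wc -l)" -eq 0 ]; then

        killall keepalived

    fi

fi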

systemctl restart keepalived

[21:22:39 root@noise ~]#chmod +x /data/check_haproxy.sh

[21:25:39 root@noise ~]#systemctl restart keepalived.service && systemctl status keepalived.service

[21:28:06 root@noise ~]#systemctl restart haproxy.service && systemctl status haproxy.service

================================== Begin cluster configuration

# Before configuring master1, clean up the previous environment

kubeadm reset

rm -rf ~/.kube/config

ifconfig cni0 down

rm -rf /etc/cni/net.d/10-flannel.conflist

rm -rf /etc/cni/*

# Start the configuration: --control-plane-endpoint specifies the VIP, --apiserver-bind-port specifies the port the apiserver listens on

[21:56:20 root@master1 ~]#kubeadm init --kubernetes-version=1.22.1 --apiserver-advertise-address=10.0.0.50 \

--control-plane-endpoint=10.0.0.70 --apiserver-bind-port=6443 --image-repository 10.0.0.55:80/google_containers \

--service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=Swap

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

--discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

--discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e
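The flags passed to `kubeadm init` above can also be kept in a config file, which is easier to review and reuse. Below is a minimal sketch that writes such a file with the kubeadm `v1beta3` API, using the same addresses as the command above. The helper name `write_kubeadm_config` and the output filename are hypothetical.

```shell
# Hypothetical helper: write a kubeadm config that mirrors the flags above.
# $1 = output file
write_kubeadm_config() {
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.50
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.22.1
controlPlaneEndpoint: "10.0.0.70:6443"
imageRepository: 10.0.0.55:80/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}

# Usage (as root, not run here):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap
```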

# Configure the flannel network

[22:02:35 root@master1 ~]#cd flannel/

[22:02:53 root@master1 flannel]#ll

total 67324

drwxr-xr-x 2 root root 4096 Nov 27 07:03 ./

drwx------ 9 root root 4096 Nov 27 21:55 ../

-rw-r--r-- 1 root root 68921344 Nov 27 07:01 flannel-v0.14.0.tar

-rw-r--r-- 1 root root 4847 Nov 27 06:46 kube-flannel.yml

[22:02:54 root@master1 flannel]#kubectl apply -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[22:03:37 root@master1 flannel]#kubectl get node

NAME STATUS ROLES AGE VERSION

master1.noisedu.cn Ready control-plane,master 3m28s v1.22.1

# On master1, upload the control-plane certificates and obtain the certificate key

[22:03:51 root@master1 flannel]#kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

d54d04e7699d3304f62fa314c70db2fddcf8e8c0cf3193f47e5d2fc820c17292

# Join the other masters

[22:08:20 root@master2 ~]# kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

> --discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e \

> --control-plane --certificate-key d54d04e7699d3304f62fa314c70db2fddcf8e8c0cf3193f47e5d2fc820c17292

[22:10:40 root@master2 ~]#mkdir -p $HOME/.kube

[22:10:47 root@master2 ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[22:10:47 root@master2 ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config

[22:08:47 root@master3 ~]# kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

> --discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e \

> --control-plane --certificate-key d54d04e7699d3304f62fa314c70db2fddcf8e8c0cf3193f47e5d2fc820c17292

[22:11:18 root@master3 ~]#mkdir -p $HOME/.kube

[22:11:19 root@master3 ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[22:11:20 root@master3 ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config
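The control-plane join commands above are the worker join command plus `--control-plane --certificate-key <key>`. A minimal sketch that assembles either form from its parts is shown below; the helper name `build_join_cmd` is hypothetical.

```shell
# Hypothetical helper: assemble a kubeadm join command line.
# $1 = endpoint, $2 = token, $3 = CA cert hash,
# $4 = certificate key (optional; when given, the node joins as a
#      control-plane member instead of a worker).
build_join_cmd() {
  cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
  if [ -n "${4:-}" ]; then
    cmd="$cmd --control-plane --certificate-key $4"
  fi
  printf '%s\n' "$cmd"
}

# Usage (not run here):
#   build_join_cmd 10.0.0.70:6443 "$TOKEN" "sha256:$HASH"             # worker
#   build_join_cmd 10.0.0.70:6443 "$TOKEN" "sha256:$HASH" "$CERT_KEY" # master
```

If the bootstrap token has expired, `kubeadm token create --print-join-command` on an existing master prints a fresh worker join command.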

# Join node1

[21:56:15 root@node1 ~]#kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

> --discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e

[preflight] Running pre-flight checks

# Join node2

[21:56:17 root@node2 ~]#kubeadm join 10.0.0.70:6443 --token k6j1vm.evcq53ekhxi3g67c \

> --discovery-token-ca-cert-hash sha256:d7591b3185e42651dcc7d64b399c633c59f6a8b2766b15a6c4173597ab96975e

[preflight] Running pre-flight checks

[22:05:23 root@master1 flannel]#kubectl get node

NAME STATUS ROLES AGE VERSION

master1.noisedu.cn Ready control-plane,master 15m v1.22.1

master2.noisedu.cn Ready control-plane,master 5m43s v1.22.1

master3.noisedu.cn Ready control-plane,master 4m43s v1.22.1

node1.noisedu.cn Ready <none> 31s v1.22.1

node2.noisedu.cn Ready <none> 27s v1.22.1

=======================

# Pull the nginx and tomcat base images

[22:42:15 root@master1 flannel]#docker pull nginx

[22:43:15 root@master1 flannel]#docker pull tomcat

[22:47:08 root@master1 flannel]#docker tag tomcat:latest 10.0.0.55:80/myapps/tomcat:v1.0

[22:47:13 root@master1 flannel]#docker tag nginx:latest 10.0.0.55:80/myapps/nginx:v1.0

[22:47:18 root@master1 flannel]#docker push 10.0.0.55:80/myapps/nginx:v1.0

[22:47:33 root@master1 flannel]#docker push 10.0.0.55:80/myapps/tomcat:v1.0

# The YAML files below mainly contain the Deployment configuration and the Service network configuration

# Apply the prepared tomcat YAML directly

[00:40:48 root@master1 apps]#cat 01-cluster-tomcat-web.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: tomcat-deployment

  labels:

    app: tomcat

spec:

  replicas: 1

  selector:

    matchLabels:

      app: tomcat

  template:

    metadata:

      labels:

        app: tomcat

    spec:

      containers:

      - name: tomcat

        image: 10.0.0.55:80/myapps/tomcat:v1.0

        ports:

        - containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

  name: tomcat-web-service

  labels:

    app: tomcat-web-service

spec:

  type: NodePort

  selector:

    app: tomcat

  ports:

  - protocol: TCP

    name: http

    port: 80

    targetPort: 8080

    nodePort: 30087

[23:49:56 root@master1 apps]#kubectl apply -f 01-cluster-tomcat-web.yaml

deployment.apps/tomcat-deployment created

service/tomcat-web-service created

# Apply the prepared nginx YAML directly

[00:42:17 root@master1 apps]#cat 02-cluster-nginx-web.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deployment

  labels:

    app: nginx

spec:

  replicas: 1

  selector:

    matchLabels:

      app: nginx

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: 10.0.0.55:80/myapps/nginx:v1.0

        ports:

        - containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

  name: nginx-web-service

  labels:

    app: nginx-web-service

spec:

  type: NodePort

  selector:

    app: nginx

  ports:

  - protocol: TCP

    name: http

    port: 80

    targetPort: 80

    nodePort: 30086

[23:50:04 root@master1 apps]#kubectl apply -f 02-cluster-nginx-web.yaml

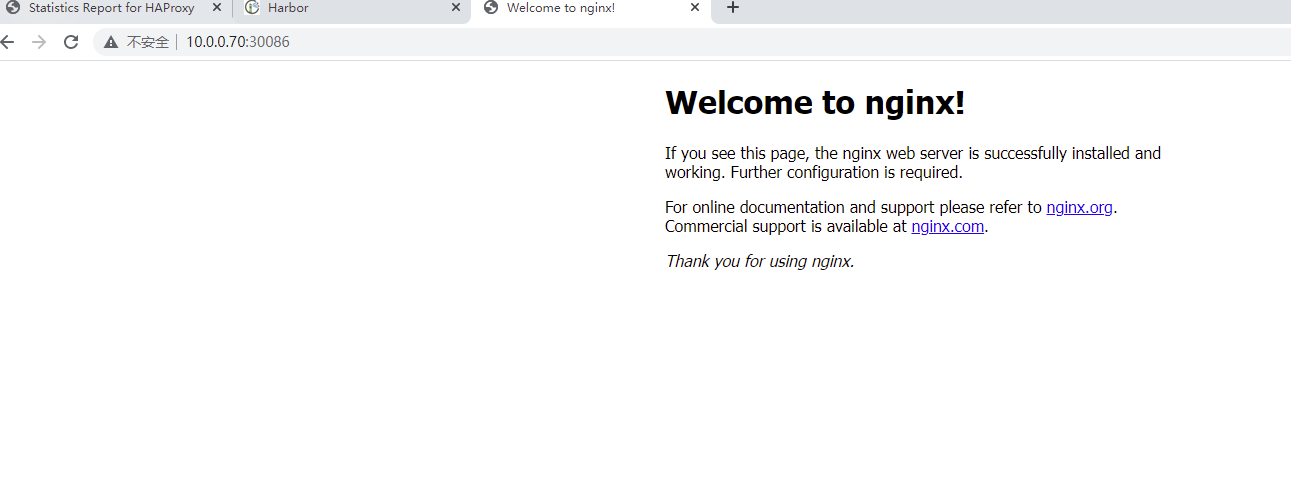

deployment.apps/nginx-deployment created

service/nginx-web-service created

[23:50:31 root@master1 apps]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deployment-854f9849c5-cn6qs 1/1 Running 0 30s 10.244.4.37 node2.noisedu.cn <none> <none>

pod/tomcat-deployment-5c7f64459f-qmq5c 1/1 Running 0 33s 10.244.3.3 node1.noisedu.cn <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 110m <none>

service/nginx-web-service NodePort 10.111.94.221 <none> 80:30086/TCP 30s app=nginx

service/tomcat-web-service NodePort 10.111.91.145 <none> 80:30087/TCP 33s app=tomcat
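The tomcat and nginx manifests above differ only in the app name, image, container port and node port. A minimal sketch that generates both from one template is shown below; the helper name `gen_web_manifest` and its argument order are hypothetical.

```shell
# Hypothetical helper: print a Deployment plus a NodePort Service.
# $1 = app name, $2 = image, $3 = container port, $4 = node port
gen_web_manifest() {
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $1-deployment
  labels:
    app: $1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: $1
  template:
    metadata:
      labels:
        app: $1
    spec:
      containers:
      - name: $1
        image: $2
        ports:
        - containerPort: $3
---
apiVersion: v1
kind: Service
metadata:
  name: $1-web-service
  labels:
    app: $1-web-service
spec:
  type: NodePort
  selector:
    app: $1
  ports:
  - protocol: TCP
    name: http
    port: 80
    targetPort: $3
    nodePort: $4
EOF
}

# Usage (not run here):
#   gen_web_manifest tomcat 10.0.0.55:80/myapps/tomcat:v1.0 8080 30087 | kubectl apply -f -
#   gen_web_manifest nginx  10.0.0.55:80/myapps/nginx:v1.0  80   30086 | kubectl apply -f -
```

The Service selector (`app: <name>`) must match the Pod template labels, which is why both are filled from the same argument.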

# nginx reverse-proxy configuration file, used by the Dockerfile

[00:37:19 root@master1 apps]#cat default.conf

server {

    listen 80;

    listen [::]:80;

    server_name localhost;

    location / {

        root /usr/share/nginx/html;

        index index.html index.htm;

    }

    location /tomcat {

        proxy_pass http://tomcat-web-service/;

    }

    error_page 500 502 503 504 /50x.html;

    location = /50x.html {

        root /usr/share/nginx/html;

    }

}

# Dockerfile, custom configuration

[00:38:21 root@master1 apps]#cat Dockerfile

# Build a customized nginx reverse-proxy image based on nginx

# Base image

FROM 10.0.0.55:80/myapps/nginx:v1.0

# Image author

MAINTAINER Noise 578110218@qq.com

# Add the required files

ADD default.conf /etc/nginx/conf.d/default.conf

# Build the modified image and push it to Harbor

[00:35:12 root@master1 apps]#docker build -t 10.0.0.55:80/myapps/nginx:v6.0 .

Sending build context to Docker daemon 7.68kB

Step 1/3 : FROM 10.0.0.55:80/myapps/nginx:v1.0

---> ea335eea17ab

Step 2/3 : MAINTAINER Noise 578110218@qq.com

---> Running in 26121650a0b9

Removing intermediate container 26121650a0b9

---> 50ce75305d10

Step 3/3 : ADD default.conf /etc/nginx/conf.d/default.conf

---> 5d838bfbfd17

Successfully built 5d838bfbfd17

Successfully tagged 10.0.0.55:80/myapps/nginx:v6.0

[00:35:38 root@master1 apps]#docker push 10.0.0.55:80/myapps/nginx:v6.0

The push refers to repository [10.0.0.55:80/myapps/nginx]

6101daccf355: Pushed

8525cde30b22: Layer already exists

1e8ad06c81b6: Layer already exists

49eeddd2150f: Layer already exists

ff4c72779430: Layer already exists

37380c5830fe: Layer already exists

e1bbcf243d0e: Layer already exists

v6.0: digest: sha256:1f55c00ade32eab2e5dc2bbfeae1f298a9b62e3783b9c2516d8da5a3eef1f9eb size: 1777

# YAML file for the nginx reverse proxy

[00:38:30 root@master1 apps]#cat 03-cluster-nginx-proxy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deployment

  labels:

    app: nginx

spec:

  replicas: 1

  selector:

    matchLabels:

      app: nginx

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: 10.0.0.55:80/myapps/nginx:v6.0

        ports:

        - containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

  name: nginx-web-service

  labels:

    app: nginx-web-service

spec:

  type: NodePort

  selector:

    app: nginx

  ports:

  - protocol: TCP

    name: http

    port: 80

    targetPort: 80

    nodePort: 30086

# Apply the reverse-proxy manifest

[00:37:02 root@master1 apps]#kubectl delete -f 03-cluster-nginx-proxy.yaml

deployment.apps "nginx-deployment" deleted

service "nginx-web-service" deleted

[00:37:09 root@master1 apps]#kubectl apply -f 03-cluster-nginx-proxy.yaml

deployment.apps/nginx-deployment created

service/nginx-web-service created

[00:38:40 root@master1 apps]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deployment-859c68ccc8-xnlwj 1/1 Running 0 3m29s 10.244.4.43 node2.noisedu.cn <none> <none>

pod/tomcat-deployment-5c7f64459f-qmq5c 1/1 Running 0 50m 10.244.3.3 node1.noisedu.cn <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 160m <none>

service/nginx-web-service NodePort 10.106.96.3 <none> 80:30086/TCP 3m29s app=nginx

service/tomcat-web-service NodePort 10.111.91.145 <none> 80:30087/TCP 50m app=tomcat

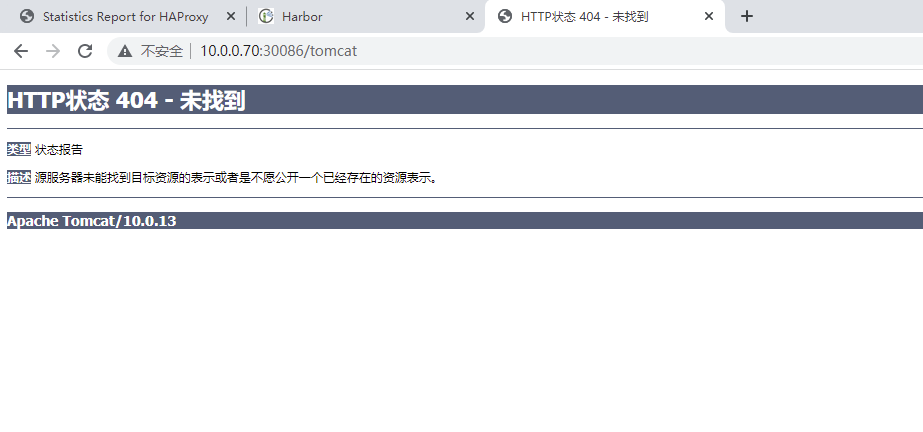

# Tomcat returns 404, but the 404 page is generated by tomcat itself, which proves that the request reaches tomcat through the nginx proxy.
