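Deploying a Kubernetes cluster with kubeadm

Two CentOS 7 hosts are used: docker31 (192.168.1.31) as the control-plane node and docker32 (192.168.1.32) as the worker. On both nodes, SELinux is disabled, swap is turned off, and passwordless SSH is set up between them.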

[root@docker31 ~]# getenforce

Disabled

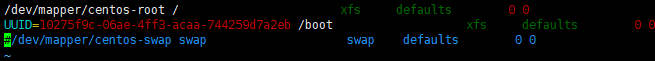

[root@docker31 ~]# swapoff -a

[root@docker31 ~]# vim /etc/fstab # comment out the swap line

[root@docker31 ~]# mount -a
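Editing /etc/fstab in vim works. As a scriptable alternative, here is a minimal sketch that comments out active swap entries with awk. The function name disable_swap_in_fstab is hypothetical. It assumes the standard fstab layout, where the third field is the filesystem type.

```bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Defaults to /etc/fstab; a backup is written to <file>.bak first.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak"
  # Lines that are not already comments and whose 3rd field (the filesystem
  # type) is "swap" get a leading '#'; every other line is copied unchanged.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

For example, source the function and run disable_swap_in_fstab && swapoff -a on each node. free -h should then report 0B of swap.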

[root@docker31 ~]# ssh-keygen

[root@docker31 ~]# ssh-copy-id 192.168.1.31

[root@docker31 ~]# ssh-copy-id 192.168.1.32

[root@docker32 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@docker32 ~]# getenforce

Disabled

[root@docker32 ~]# swapoff -a

[root@docker32 ~]# vim /etc/fstab # comment out the swap line

[root@docker32 ~]# mount -a

[root@docker32 ~]# ssh-keygen

[root@docker32 ~]# ssh-copy-id 192.168.1.32

[root@docker32 ~]# ssh-copy-id 192.168.1.31

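Load the br_netfilter module and enable bridged-traffic filtering and IP forwarding (on both nodes)

[root@docker31 ~]# modprobe br_netfilter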

[root@docker31 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@docker31 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@docker31 ~]# sysctl -p /etc/sysctl.d/k8s.conf

[root@docker32 ~]# modprobe br_netfilter

[root@docker32 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@docker32 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@docker32 ~]# sysctl -p /etc/sysctl.d/k8s.conf
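Appending modprobe to /etc/profile only loads br_netfilter when someone logs in. Below is a sketch of a boot-time alternative, assuming a systemd-based host such as CentOS 7, where systemd-modules-load reads module names from /etc/modules-load.d/*.conf. The function write_k8s_kernel_config and its optional root-prefix argument are hypothetical. The prefix exists only so the function can be tried in a scratch directory first.

```bash
# Hypothetical helper: persist br_netfilter and the Kubernetes sysctls.
# The optional first argument is a root prefix (empty = the real /).
write_k8s_kernel_config() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load loads the modules listed here (one per line) at boot.
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  # The same three settings as the k8s.conf written above.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

After running write_k8s_kernel_config, modprobe br_netfilter && sysctl --system applies the settings immediately without a reboot.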

Add the Aliyun Kubernetes yum repository

[root@docker31 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Copy the repo file to docker32

[root@docker31 ~]# scp 192.168.1.31:/etc/yum.repos.d/kubernetes.repo 192.168.1.32:/etc/yum.repos.d/

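Install kubelet, kubeadm and kubectl, pinned to version 1.19.2. Until kubeadm init or kubeadm join gives it a configuration, kubelet keeps restarting; this is expected.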

[root@docker31 ~]# yum install -y kubelet-1.19.2 kubeadm-1.19.2 kubectl-1.19.2

[root@docker31 ~]# systemctl start kubelet && systemctl enable kubelet

[root@docker32 ~]# yum install -y kubelet-1.19.2 kubeadm-1.19.2 kubectl-1.19.2

[root@docker32 ~]# systemctl start kubelet && systemctl enable kubelet
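Because the packages are pinned to 1.19.2, a later yum update could upgrade them unintentionally. The upstream kubeadm install instructions for yum repositories guard against this with an exclude= line in the repo file. The helper below is a sketch of that idea; the name pin_k8s_packages is hypothetical, and it assumes the repo file contains only the [kubernetes] section, as the one created above does.

```bash
# Hypothetical helper: add an exclude= line to the Kubernetes repo file so a
# plain "yum update" skips kubelet, kubeadm and kubectl.
pin_k8s_packages() {
  local repo="${1:-/etc/yum.repos.d/kubernetes.repo}"
  # Idempotent: only append when no exclude= line exists yet.
  if ! grep -q '^exclude=' "$repo"; then
    echo 'exclude=kubelet kubeadm kubectl' >> "$repo"
  fi
}
```

Once the line is present, installing or upgrading these packages requires opting in explicitly, e.g. yum install -y kubeadm-1.19.2 --disableexcludes=kubernetes.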

Install docker-ce

Configure the Aliyun docker-ce yum repository

Reference: https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.79c11b112Vehg5

[root@docker31 ~]# sudo yum install -y yum-utils device-mapper-persistent-data lvm2

[root@docker31 ~]# sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@docker31 ~]# yum install -y docker-ce

[root@docker31 ~]# systemctl start docker && systemctl enable docker

Configure a registry mirror and switch Docker to the systemd cgroup driver

[root@docker31 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://r9ex4y8s.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

Reload the configuration and restart Docker

[root@docker31 ~]# systemctl daemon-reload

[root@docker31 ~]# systemctl restart docker
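The exec-opts entry is what switches Docker to the systemd cgroup driver. kubeadm's preflight checks warn when Docker is still using cgroupfs. Here is a small sketch for checking the file on each node. The name uses_systemd_cgroup_driver is hypothetical, and the check is a plain text match, not a JSON parse.

```bash
# Hypothetical helper: succeed if the Docker daemon config selects the
# systemd cgroup driver via exec-opts.
uses_systemd_cgroup_driver() {
  local cfg="${1:-/etc/docker/daemon.json}"
  # Substring match on the quoted option, so surrounding spacing is irrelevant.
  grep -q '"native\.cgroupdriver=systemd"' "$cfg"
}
```

After the restart, docker info --format '{{.CgroupDriver}}' should print systemd.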

Repeat the Docker setup on docker32

[root@docker32 ~]# sudo yum install -y yum-utils device-mapper-persistent-data lvm2

[root@docker32 ~]# sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@docker32 ~]# yum install -y docker-ce

[root@docker32 ~]# systemctl start docker && systemctl enable docker

[root@docker32 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://r9ex4y8s.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@docker32 ~]# systemctl daemon-reload

[root@docker32 ~]# systemctl restart docker

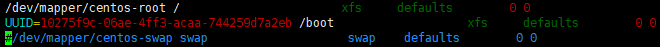

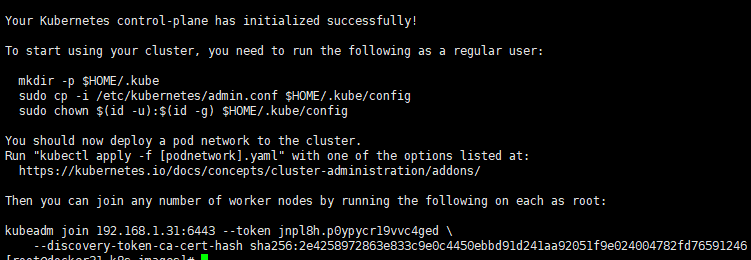

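Initialize the Kubernetes cluster with kubeadm on docker31. --image-repository pulls the control-plane images from the Aliyun mirror instead of the default k8s.gcr.io. Afterwards, copy the admin kubeconfig so that kubectl works.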

[root@docker31 k8s-images]# kubeadm init --kubernetes-version=1.19.2 --apiserver-advertise-address=192.168.1.31 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16

[root@docker31 k8s-images]# mkdir -p $HOME/.kube

[root@docker31 k8s-images]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@docker31 k8s-images]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
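The --service-cidr (10.10.0.0/16) and --pod-network-cidr (10.122.0.0/16) ranges passed to kubeadm init must not overlap with each other or with the node network. Two CIDR blocks overlap exactly when they agree on the shorter of their two prefixes. The sketch below checks this with integer masking. The function names are hypothetical, and treating 192.168.1.0/24 as the node subnet is an assumption based on the node addresses.

```bash
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  # Word-split the dotted quad into $1..$4.
  set -- $1
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: return 0 (true) if two CIDR blocks share any address.
cidrs_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  local len mask n1 n2
  # Compare on the shorter prefix: the blocks overlap iff those bits match.
  len=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  n1=$(ip_to_int "$net1")
  n2=$(ip_to_int "$net2")
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}
```

For example, cidrs_overlap 10.10.0.0/16 10.122.0.0/16 || echo "no overlap" prints "no overlap" for the values used here.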

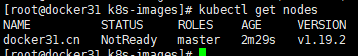

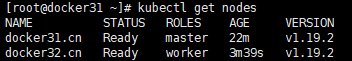

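Check the cluster. Until a Pod network add-on is installed, the node reports NotReady, so install Calico next.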

[root@docker31 k8s-images]# kubectl get nodes

[root@docker31 ~]# wget https://docs.projectcalico.org/manifests/calico.yaml -O calico.yaml --no-check-certificate

[root@docker31 ~]# kubectl apply -f calico.yaml

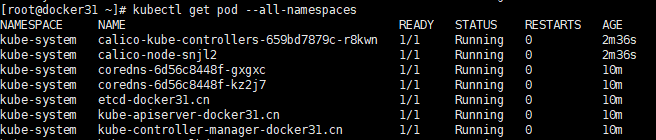

Pulling the images takes a while. The installation has succeeded once all pods are Running.

[root@docker31 ~]# kubectl get pod --all-namespaces

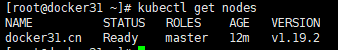
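Instead of scanning the list by eye, the output can be filtered. Here is a sketch, assuming the default column layout of kubectl get pod --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, ...). pods_not_ready is a hypothetical helper that prints every pod that is neither Completed nor Running with all containers ready.

```bash
# Hypothetical helper: read "kubectl get pod --all-namespaces --no-headers"
# output on stdin and print every pod that is not fully up.
pods_not_ready() {
  awk '{
    split($3, r, "/")   # READY column, e.g. "1/1" -> r[1]=1, r[2]=1
    # Completed pods (finished jobs) are fine; anything else must be
    # Running with all containers ready.
    if ($4 != "Completed" && ($4 != "Running" || r[1] != r[2]))
      print $1 "/" $2 ": " $4 " (" $3 ")"
  }'
}
```

kubectl get pod --all-namespaces --no-headers | pods_not_ready prints nothing once Calico and CoreDNS are up. As a built-in alternative, kubectl wait --for=condition=Ready pod --all -n kube-system --timeout=300s blocks until the kube-system pods are ready.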

Check the node status again

[root@docker31 ~]# kubectl get nodes

The node is now Ready

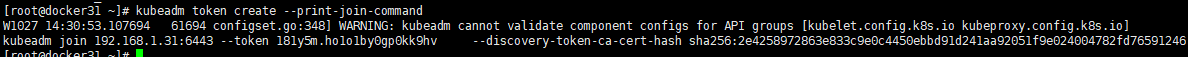
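A similar scriptable check works for the nodes. This sketch assumes STATUS is the second column of kubectl get nodes --no-headers. all_nodes_ready is hypothetical. It exits non-zero if there are no nodes or if any node's status is not exactly Ready.

```bash
# Hypothetical helper: succeed only if every node line on stdin has STATUS
# (2nd column) equal to "Ready", and there is at least one node.
all_nodes_ready() {
  awk 'BEGIN { n = 0; bad = 0 }
       { n++; if ($2 != "Ready") bad = 1 }
       END { exit (n == 0 || bad) }'
}
```

For example: kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready".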

Join docker32 to the cluster

Print the join command on docker31, then run it on docker32

[root@docker31 ~]# kubeadm token create --print-join-command

[root@docker32 ~]# kubeadm join 192.168.1.31:6443 --token 181y5m.ho1o1by0gp0kk9hv --discovery-token-ca-cert-hash sha256:2e4258972863e833c9e0c4450ebbd91d241aa92051f9e024004782fd76591246

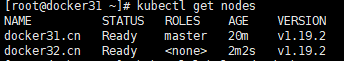

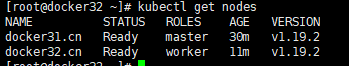
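A join command remains valid only as long as its bootstrap token, which expires after 24 hours by default. This is why it is regenerated with kubeadm token create --print-join-command. The sha256 value can also be recomputed from the cluster CA on docker31. The pipeline below is the one given in the kubeadm join documentation, wrapped in a hypothetical ca_cert_hash function. It assumes the CA uses an RSA key, which is the kubeadm default.

```bash
# Hypothetical helper: print the SHA-256 of the CA public key, i.e. the value
# that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  # Public key (PEM) -> DER -> SHA-256 hex; sed keeps only the digest.
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On docker31, echo "sha256:$(ca_cert_hash)" should print the same value that appears after --discovery-token-ca-cert-hash in the join command.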

[root@docker31 ~]# kubectl get nodes

Give docker32 a worker role label (it appears in the ROLES column)

[root@docker31 ~]# kubectl label node docker32.cn node-role.kubernetes.io/worker=worker

[root@docker31 ~]# kubectl get nodes

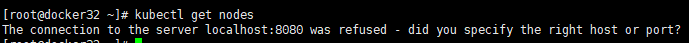

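Let docker32 query the cluster. Running kubectl on docker32 fails at first because that node has no kubeconfig. Copy /root/.kube/config over from docker31 and run the command again.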

[root@docker32 ~]# kubectl get nodes

[root@docker31 ~]# scp /root/.kube/config 192.168.1.32:/root/.kube/

[root@docker32 ~]# kubectl get nodes

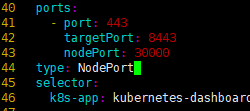

Install the Kubernetes Dashboard: download the v2.2.0 manifest

[root@docker31 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml -O dashboard.yaml

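Edit the YAML to expose the kubernetes-dashboard Service as a NodePort. Under the Service's spec, add type: NodePort, and add nodePort: 30000 to its port entry.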

[root@docker31 ~]# vim dashboard.yaml

nodePort: 30000

type: NodePort

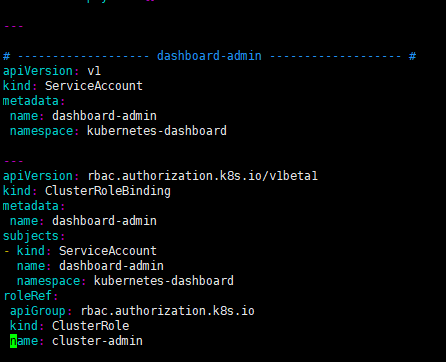

Append a dashboard-admin ServiceAccount bound to the cluster-admin ClusterRole to the end of dashboard.yaml:

---
# ------------------- dashboard-admin ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin

[root@docker31 ~]# kubectl apply -f dashboard.yaml

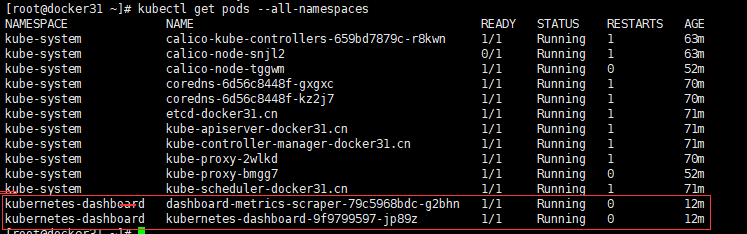
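The nodePort set in dashboard.yaml must fall within the API server's --service-node-port-range, which defaults to 30000-32767. This makes 30000 the lowest usable port here. Here is a tiny sketch of that bounds check. valid_node_port is hypothetical and accepts the range as optional arguments for clusters that change the default.

```bash
# Hypothetical helper: succeed if $1 is an integer within [min, max]
# (defaults: the standard 30000-32767 NodePort range).
valid_node_port() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  # Reject empty or non-numeric input before the numeric comparison.
  case "$port" in ''|*[!0-9]*) return 1 ;; esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```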

Check that the dashboard pods are running

[root@docker31 ~]# kubectl get pods --all-namespaces

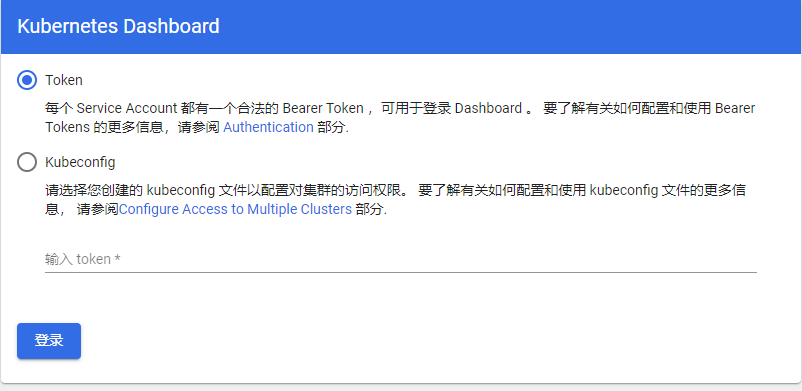

Retrieve the login token for dashboard-admin

[root@docker31 ~]# kubectl describe secrets -n kubernetes-dashboard dashboard-admin

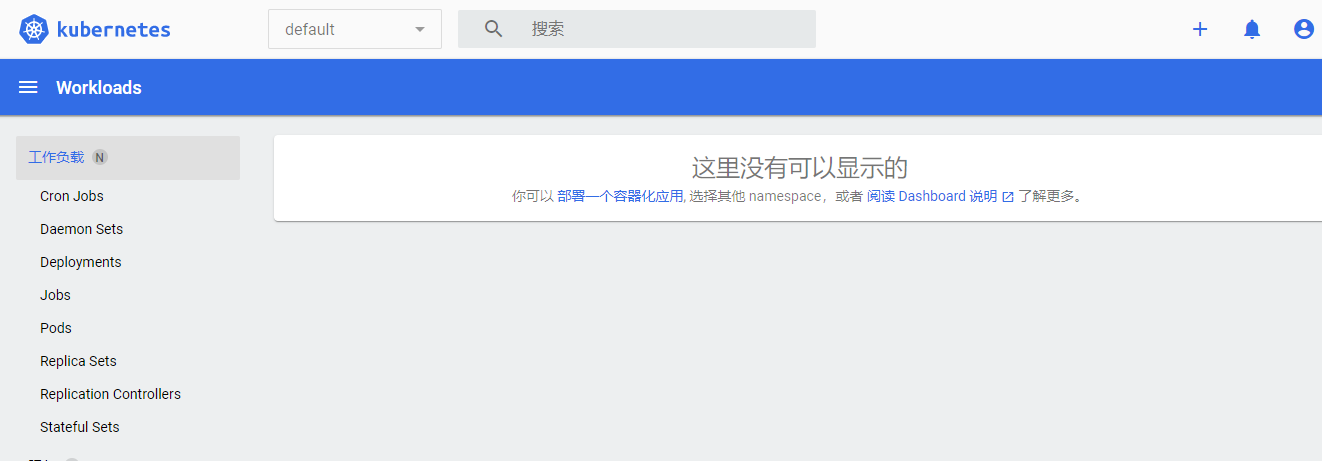
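The login token is the value on the token: line of that output. On this 1.19 cluster, the ServiceAccount's secret is auto-created as dashboard-admin-token-<suffix>, and kubectl describe matches it by name prefix. Here is a sketch for extracting only the token value, for example to save it to a file. extract_dashboard_token is hypothetical and assumes the describe output format kubectl uses for service-account token secrets.

```bash
# Hypothetical helper: print the value of the "token:" line from
# "kubectl describe secrets ..." output read on stdin.
extract_dashboard_token() {
  awk '$1 == "token:" { print $2 }'
}
```

For example: kubectl describe secrets -n kubernetes-dashboard dashboard-admin | extract_dashboard_token.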

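Open https://192.168.1.31:30000 in a browser. The Dashboard serves a self-signed certificate, so accept the browser's certificate warning.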

Select Token and paste the token to sign in

