Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Solving problems: aim at the actual cause.

1. Spark error: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

In other words: the workers must be registered with the master and have enough free cores and memory for the job's executors.

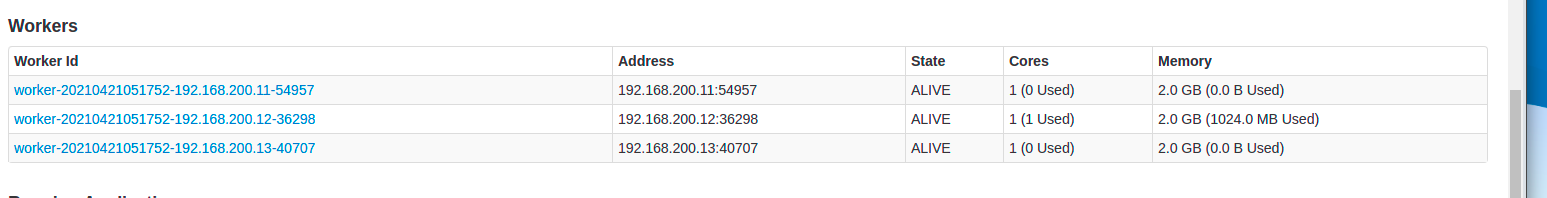

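Precondition: the workers were already running and registered with the master.

Next, check the resources by opening the master web UI at http://192.168.200.11:8080/.

The Running Applications list showed too many applications, which had most likely taken up the cluster's cores and memory. After stopping those applications and running the job again, it was accepted.

Solved.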
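The same check can be scripted instead of done in the browser. This is a minimal Python sketch, assuming the standalone master serves its state as JSON under /json/ on the UI port (8080 here) with per-worker fields host, state, cores, coresused, memory and memoryused (memory in MB); verify those names against your Spark version. workers_that_fit, fetch_master_state and check_cluster are hypothetical helpers: the first lists the ALIVE workers that still have room for one executor of the requested size, and an empty result roughly matches the "Initial job has not accepted any resources" situation.

```python
import json
import urllib.request

# Assumption: the standalone master serves its state as JSON under /json/ on the
# web UI port; the field names used below should be checked against your Spark version.
MASTER_JSON_URL = "http://192.168.200.11:8080/json/"


def workers_that_fit(state, executor_memory_mb, executor_cores=1):
    """Return hosts of ALIVE workers with enough free cores and memory (MB) for one executor."""
    fits = []
    for worker in state.get("workers", []):
        if worker.get("state") != "ALIVE":
            continue  # dead or unknown workers cannot launch executors
        free_cores = worker["cores"] - worker["coresused"]
        free_memory_mb = worker["memory"] - worker["memoryused"]
        if free_cores >= executor_cores and free_memory_mb >= executor_memory_mb:
            fits.append(worker["host"])
    return fits


def fetch_master_state(url=MASTER_JSON_URL, timeout=5):
    """Download and parse the master's JSON status page."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def check_cluster(executor_memory_mb=1024, executor_cores=1, url=MASTER_JSON_URL):
    """Print the number of running applications and whether a new executor could start."""
    state = fetch_master_state(url)
    print("running applications:", len(state.get("activeapps", [])))
    hosts = workers_that_fit(state, executor_memory_mb, executor_cores)
    if hosts:
        print("an executor can start on:", ", ".join(hosts))
    else:
        print("no ALIVE worker has enough free cores/memory; "
              "stop idle applications or request fewer resources")
```

check_cluster() has to run on a machine that can reach the master; its defaults of 1024 MB and 1 core mirror --executor-memory 1G and --total-executor-cores 1 from section 3.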

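2. Operation category READ is not supported in state standby

Meaning: the request reached a NameNode that is in the standby state, and a standby NameNode does not serve reads; only the active NameNode does. The fix was to make nn1 active and nn2 standby manually: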

/usr/app/hadoop-2.7.3/bin/hdfs haadmin -transitionToActive --forcemanual nn1   # transition nn1 to the active state

/usr/app/hadoop-2.7.3/bin/hdfs haadmin -transitionToStandby --forcemanual nn2   # transition nn2 to the standby state
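Before forcing a transition it can help to ask each NameNode for its current state with hdfs haadmin -getServiceState. The Python sketch below takes the hdfs path and the service IDs nn1/nn2 from the commands above; get_state, plan_transition and print_plan are hypothetical helpers. It demotes any other active NameNode before promoting the target, so two NameNodes are never active at once. Because --forcemanual bypasses the automatic-failover safeguards and asks for confirmation, the commands are printed for review rather than executed.

```python
import subprocess

# Path and service IDs taken from the commands above; adjust to your cluster.
HDFS = "/usr/app/hadoop-2.7.3/bin/hdfs"
SERVICE_IDS = ("nn1", "nn2")


def get_state(service_id):
    """Ask one NameNode for its HA state, e.g. "active" or "standby".

    Raises subprocess.CalledProcessError if haadmin exits with an error
    (for example when the NameNode cannot be reached).
    """
    result = subprocess.run(
        [HDFS, "haadmin", "-getServiceState", service_id],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def plan_transition(states, target):
    """Return haadmin commands that leave `target` as the only active NameNode.

    Other active NameNodes are demoted first, so two NameNodes are never
    active at the same time. An empty list means nothing needs to change.
    """
    commands = []
    for service_id, state in states.items():
        if service_id != target and state == "active":
            commands.append([HDFS, "haadmin", "-transitionToStandby", "--forcemanual", service_id])
    if states.get(target) != "active":
        commands.append([HDFS, "haadmin", "-transitionToActive", "--forcemanual", target])
    return commands


def print_plan(target="nn1"):
    """Query the current states and print (not run) the commands to execute."""
    states = {sid: get_state(sid) for sid in SERVICE_IDS}
    print("current states:", states)
    for command in plan_transition(states, target):
        print(" ".join(command))
```

If nn1 is already active and nn2 is standby, print_plan() prints no commands, which is a quick way to confirm that the error comes from a client pointing at the standby NameNode rather than from the HA state itself.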

3. spark-shell: java.io.FileNotFoundException: File file:/movie/movies.dat does not exist

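Exit spark-shell and start it again with a single executor core:

/usr/app/spark-2.0.0-bin-hadoop2.7/bin/spark-shell --master spark://hadoop11:7077 --executor-memory 1G --total-executor-cores 1

Then open http://192.168.200.11:8080/#running-app to see which machine the core was allocated to; that machine needs /movie/movies.dat on its local disk.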
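A path with the file: scheme points at the local disk of whichever machine opens it, so movies.dat has to exist at /movie/movies.dat on the driver machine and on every worker that runs an executor (putting it into HDFS avoids this). The executor hosts can also be read from Spark's monitoring REST API on the driver UI. This Python sketch assumes the spark-shell driver UI is reachable at http://hadoop11:4040 (the host is a guess; 4040 is the default port) and uses the id and hostPort fields of each executor entry; get_json, executor_hosts and show_executor_hosts are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: spark-shell's driver UI runs on the machine where spark-shell was
# started (hadoop11 is only a guess) at the default port 4040.
DRIVER_UI = "http://hadoop11:4040"


def get_json(url, timeout=5):
    """Fetch a URL and parse the response body as JSON."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def executor_hosts(executors):
    """Return the sorted hostnames of executors, skipping the driver entry."""
    hosts = set()
    for executor in executors:
        if executor["id"] == "driver":
            continue
        host, _, _port = executor["hostPort"].rpartition(":")
        hosts.add(host)
    return sorted(hosts)


def show_executor_hosts(driver_ui=DRIVER_UI):
    """Print the hosts that must hold a local copy of the input file."""
    app_id = get_json(driver_ui + "/api/v1/applications")[0]["id"]
    executors = get_json(f"{driver_ui}/api/v1/applications/{app_id}/executors")
    for host in executor_hosts(executors):
        print(host, "needs /movie/movies.dat on its local disk")
```

With --total-executor-cores 1 there is at most one executor, so show_executor_hosts() should print a single host while the shell is open.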

浙公网安备 33010602011771号