Building a filebeat+kafka+ELK log analysis system

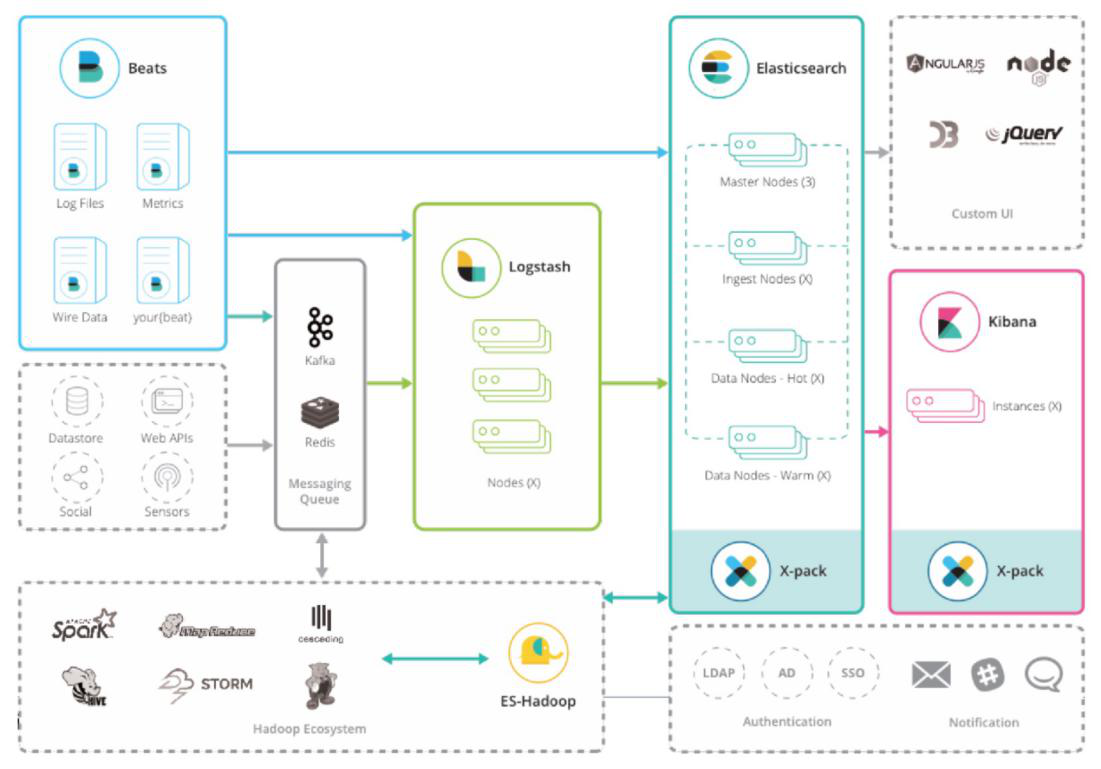

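Elastic Stack overall architecture

The typical overall architecture today: Beats collect data from the various data sources and buffer it in a message queue; Logstash reads the data from the queue, processes and filters it, and sends it to Elasticsearch; Kibana then displays it.

Based on this architecture, this post builds a filebeat+kafka+ELK log analysis system. The pipeline, in brief: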

filebeat -> kafka -> logstash -> elasticsearch -> kibana

0 Preparation before building:

(1) Machines and role assignment

10.X.X.143 zookeeper

10.X.X.144 zookeeper kafka

10.X.X.145 zookeeper kafka

10.X.X.229 elasticsearch kibana filebeat

10.X.X.230 elasticsearch logstash filebeat

(2) Software versions

elasticsearch-6.4.2

logstash-6.4.2

kibana-6.4.2

filebeat-6.4.2

kafka_2.11-1.1.0

zookeeper-3.4.5-cdh5.12.0

1 kafka+zookeeper

(1) Set up the kafka and zookeeper cluster: zookeeper is the one shipped with CDH, and kafka is installed on 10.X.X.144 and 10.X.X.145.

(2) Log in to 10.X.X.144 or 10.X.X.145:

# cd /opt/kafka_2.11-1.1.0/bin

# In server.properties, set broker.id and log.dirs=/var/log/kafka-logs

# vim ../config/server.properties

# Start kafka

# nohup ./kafka-server-start.sh ../config/server.properties >/dev/null 2>&1 &

# Create a test topic

# ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test

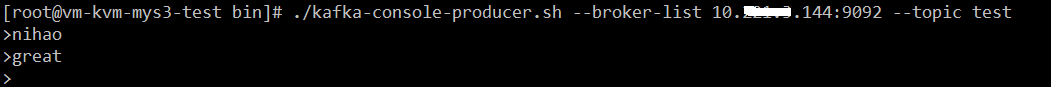
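The post does not show the actual server.properties changes. As a rough sketch only, the snippet below writes the relevant settings to a scratch file for review before they are copied into ../config/server.properties. Only `log.dirs=/var/log/kafka-logs` comes from the text; the `broker.id` value, the `listeners` line, ZooKeeper port 2181 on all three hosts, and the scratch path are assumptions.

```shell
#!/bin/sh
# Sketch only: write candidate broker settings to a scratch file for review.
# Assumed values: broker.id, listeners, and ZooKeeper port 2181 on each host.
OUT=/tmp/server.properties.sketch

cat > "$OUT" <<'EOF'
# Must be unique per broker (for example 1 on 10.X.X.144, 2 on 10.X.X.145)
broker.id=1
# Address this broker listens on (assumed plaintext on the default port)
listeners=PLAINTEXT://10.X.X.144:9092
# Directory for kafka data segments (value taken from the text)
log.dirs=/var/log/kafka-logs
# ZooKeeper ensemble from the role table (port 2181 is an assumption)
zookeeper.connect=10.X.X.143:2181,10.X.X.144:2181,10.X.X.145:2181
EOF

echo "wrote $OUT"
```

Each broker needs its own `broker.id`, so the file would differ between 10.X.X.144 and 10.X.X.145 in at least that line and the `listeners` address.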

# ./kafka-console-producer.sh --broker-list 10.X.X.144:9092 --topic test

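# In another terminal

# ./kafka-console-consumer.sh --bootstrap-server 10.X.X.144:9092 --from-beginning --topic test

If the consumer prints the messages typed into the producer, producing to and consuming from the kafka topic works.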
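The manual two-terminal check can also be scripted. The following is a minimal sketch, not part of the original setup: `kafka_smoke_test` is a hypothetical helper name, and `KAFKA_BIN` and `BROKER` simply default to the path and broker address used in this post. It publishes one marker message and then looks for it in the consumer output.

```shell
#!/bin/sh
# Sketch only: scripted version of the manual producer/consumer check.
# KAFKA_BIN and BROKER default to the values used in this post;
# kafka_smoke_test is a hypothetical helper name.
KAFKA_BIN="${KAFKA_BIN:-/opt/kafka_2.11-1.1.0/bin}"
BROKER="${BROKER:-10.X.X.144:9092}"

kafka_smoke_test() {
    topic="${1:-test}"
    msg="smoke-$(date +%s)"

    # Show partition and replica assignment for the topic
    "$KAFKA_BIN/kafka-topics.sh" --describe --zookeeper localhost:2181 --topic "$topic"

    # Publish one marker message read from stdin
    echo "$msg" | "$KAFKA_BIN/kafka-console-producer.sh" \
        --broker-list "$BROKER" --topic "$topic"

    # Read the topic from the beginning and look for the marker;
    # the consumer exits once the topic has been idle for 10 seconds
    "$KAFKA_BIN/kafka-console-consumer.sh" --bootstrap-server "$BROKER" \
        --topic "$topic" --from-beginning --timeout-ms 10000 2>/dev/null \
        | grep -q "$msg"
}
```

After sourcing the snippet on a broker host, `kafka_smoke_test test && echo "round trip OK"` would run the check against the `test` topic.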

2 ELK

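(1) Set up the ELK cluster. See "Setting Up an ELK Log Analysis Platform" (《搭建ELK日志分析平台》); the detailed steps are not repeated here.

Roles are assigned as follows:

10.X.X.229 elasticsearch kibana filebeat

10.X.X.230 elasticsearch logstash filebeat

(2) Test to make sure the cluster nodes can communicate with each other.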

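3 Cluster configuration: building the filebeat+kafka+ELK log analysis system

(1) Goal:

Use filebeat to collect the messages log from 10.X.X.229 and 10.X.X.230 and ship it to the kafka cluster at 10.X.X.144:9092 under a topic named "logstash". Logstash acts as the consumer: it reads the messages from the queue and sends the log data to elasticsearch, which stores it and indexes it as kafka-syslog-%{+YYYY.MM.dd}. Finally, kibana displays the data.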

filebeat -> kafka -> logstash -> elasticsearch -> kibana

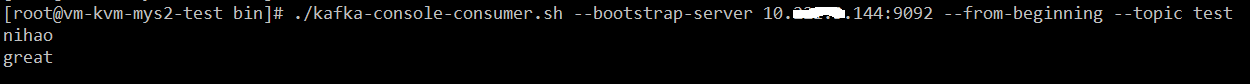

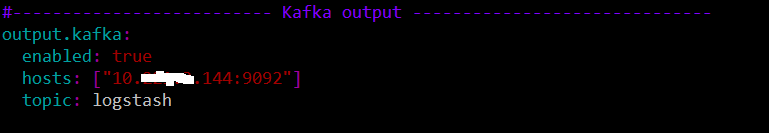

(2) Filebeat configuration. Apply the following on both 10.X.X.229 and 10.X.X.230:

# vim /etc/filebeat/filebeat.yml

# systemctl start filebeat

# systemctl enable filebeat
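The contents of filebeat.yml are not shown in the post. As a hedged sketch for filebeat 6.4, the snippet below writes a candidate configuration to a scratch file. The topic name and the 10.X.X.144:9092 broker come from the goal stated earlier; the log path /var/log/messages, the second broker, `required_acks`, and `compression` are assumptions.

```shell
#!/bin/sh
# Sketch only: a candidate filebeat.yml for filebeat 6.4, written to a
# scratch file. The log path, second broker, and tuning values are assumptions.
OUT=/tmp/filebeat.yml.sketch

cat > "$OUT" <<'EOF'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/messages      # assumed location of the messages log

output.kafka:
  # 10.X.X.144 is the broker named in the goal; 10.X.X.145 is an assumption
  hosts: ["10.X.X.144:9092", "10.X.X.145:9092"]
  topic: "logstash"          # topic name from the goal
  required_acks: 1           # wait for the partition leader only
  compression: gzip
EOF

echo "wrote $OUT"
```

Filebeat accepts only one enabled output, so any default `output.elasticsearch` section in the real /etc/filebeat/filebeat.yml would need to be commented out. A file like this can be checked with `filebeat test config -c <file>` before the service is restarted.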

(3) Logstash configuration

# vim /etc/logstash/conf.d/kafka-syslog.conf

input {
  kafka {
    codec => "plain"
    bootstrap_servers => "10.X.X.144:9092,10.X.X.145:9092"
    topics => ["logstash"]
    group_id => "syslog_group"
    consumer_threads => 2
    auto_offset_reset => "latest"
    decorate_events => true
    type => "test"
  }
}

output {
  stdout {
    codec => rubydebug
  }
  elasticsearch {
    hosts => ["10.X.X.229:9200"]
    index => "kafka-syslog-%{+YYYY.MM.dd}"
  }
}

# cd /usr/share/logstash/bin/

# Validate the configuration

# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/kafka-syslog.conf --config.test_and_exit

# systemctl start logstash

# systemctl enable logstash
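One detail worth knowing about the input section: filebeat's kafka output encodes each event as JSON by default, so with `codec => "plain"` the whole JSON document ends up as a string in the `message` field. If parsed fields are wanted instead, one possible variation is to switch the codec to `json`. This is an assumption about what may be desired, not the configuration used in this post; the sketch below writes the variant to a scratch file for comparison.

```shell
#!/bin/sh
# Sketch only: variant pipeline using the json codec, saved to a scratch
# file so it can be compared with kafka-syslog.conf before any change.
OUT=/tmp/kafka-syslog-json.conf.sketch

cat > "$OUT" <<'EOF'
input {
  kafka {
    codec => "json"    # assumption: decode the JSON events filebeat writes
    bootstrap_servers => "10.X.X.144:9092,10.X.X.145:9092"
    topics => ["logstash"]
    group_id => "syslog_group"
    consumer_threads => 2
    auto_offset_reset => "latest"
  }
}

output {
  # Print decoded events so the field layout can be inspected
  stdout {
    codec => rubydebug
  }
}
EOF

echo "wrote $OUT"
```

The scratch file can be validated with the same `--config.test_and_exit` command by pointing `-f` at it. It should stay outside /etc/logstash/conf.d/, since Logstash merges every file in that directory into one pipeline.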

(4) Configure elasticsearch and kibana. See "Setting Up an ELK Log Analysis Platform" (《搭建ELK日志分析平台》); the details are not repeated here.

# systemctl start elasticsearch

# systemctl enable elasticsearch

# systemctl start kibana

# systemctl enable kibana

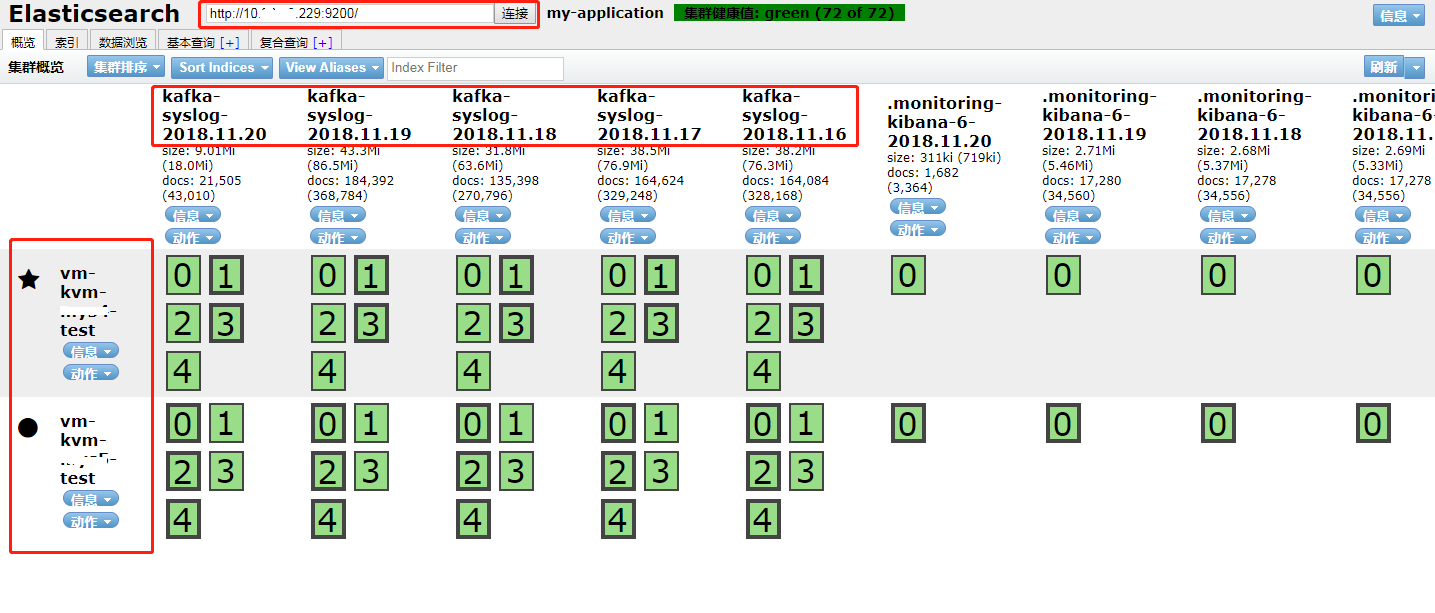

(5) View the indices in Elasticsearch head

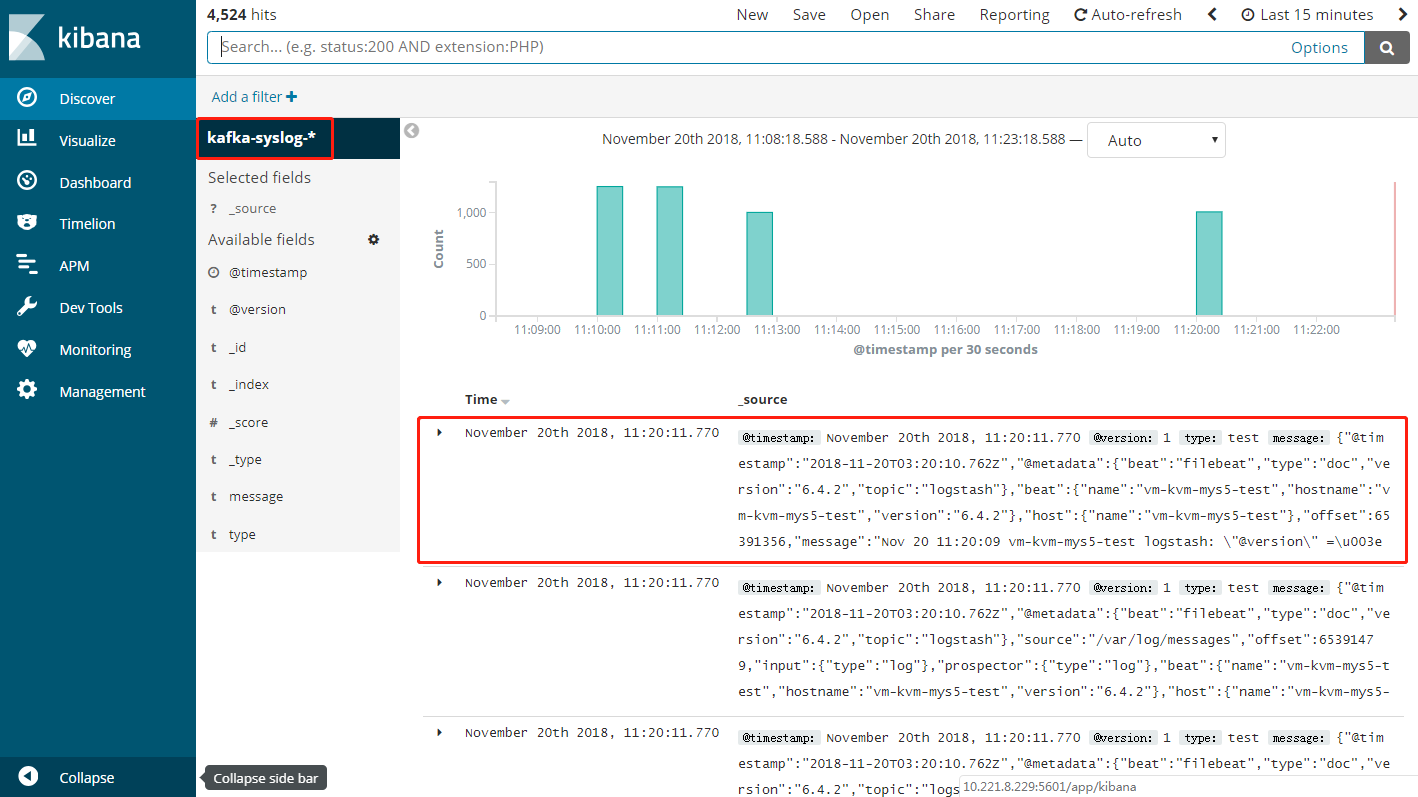
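The same information that head displays can be checked from the command line. A minimal sketch, assuming elasticsearch is reachable over plain HTTP without authentication: `es_check_indices` is a hypothetical helper name, and `ES_URL` defaults to the node used in the logstash output section.

```shell
#!/bin/sh
# Sketch only: command-line check of the indices that head displays.
# ES_URL defaults to the node used in the logstash output section;
# es_check_indices is a hypothetical helper name.
ES_URL="${ES_URL:-http://10.X.X.229:9200}"

es_check_indices() {
    # Cluster status (green / yellow / red)
    curl -s "$ES_URL/_cluster/health?pretty"
    # One line per daily index, with document counts and sizes
    curl -s "$ES_URL/_cat/indices/kafka-syslog-*?v"
}
```

After sourcing the snippet, running `es_check_indices` should list one kafka-syslog-YYYY.MM.dd index per day once logstash has delivered events.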

(6) View the data in Kibana

Zhejiang Public Network Security Filing No. 33010602011771
