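1. Installing Kubernetes from binaries

This article skips the introduction to k8s, since tutorials and documentation are easy to find online. The steps below prepare the environment and then install the cluster.

Reference for this article: http://www.kubeasy.com/

1. System environment configuration

1.1. System version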

[root@k8s-master1 ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

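1.2. Virtual machine environment (deployed here on VMs running on my own computer)

Either bridged or NAT networking works. I use bridged mode so that another computer of mine can connect directly. Whichever mode you choose, every node must have a static IP.

1.3. Configure the hosts file on all nodes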

[root@k8s-master1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.21 k8s-master1

192.168.2.22 k8s-master2

192.168.2.23 k8s-master3

192.168.2.24 k8s-node1

192.168.2.25 k8s-node2

192.168.2.200 k8s-master-lb

1.4. Configure the yum repositories on all nodes

[root@k8s-master1 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master1 ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

# All five hosts need this configuration; Ansible can apply it in bulk.

1.5. Install the required tools on all nodes

[root@k8s-master1 ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

# The equivalent ansible command is shown below; the configuration in section 1.4 can be applied the same way.

[root@k8s-master1 ~]# ansible -i ~/all.cfg all -m shell -a "yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y"

[root@k8s-master1 ~]# cat all.cfg

[all]

[master]

192.168.2.21

192.168.2.22

192.168.2.23

[node]

192.168.2.24

192.168.2.25

[all:vars]

ansible_ssh_user=root

ansible_ssh_pass=*******

1.6. Disable firewalld, dnsmasq, and SELinux on all nodes (NetworkManager must also be disabled on CentOS 7, but not on CentOS 8)

[root@k8s-master1 ~]# systemctl disable --now firewalld

[root@k8s-master1 ~]# systemctl disable --now dnsmasq

[root@k8s-master1 ~]# systemctl disable --now NetworkManager

[root@k8s-master1 ~]# setenforce 0

[root@k8s-master1 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

[root@k8s-master1 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

1.7. Disable swap on all nodes and comment out the swap entry in fstab

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
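Before editing /etc/fstab in place, it can help to try the same sed expression on a scratch copy. The sketch below does that; `comment_swap` is an illustrative helper name and the sample fstab content is made up.

```shell
#!/usr/bin/env bash
# comment_swap FILE: comment out every uncommented line that mentions swap.
# Same sed expression as above, applied to whichever file is passed in.
comment_swap() {
    sed -ri '/^[^#]*swap/s@^@#@' "$1"
}

# Demo on a scratch file (hypothetical content, for illustration only).
sample=$(mktemp)
cat > "$sample" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
comment_swap "$sample"
cat "$sample"
```

The expression only matches lines that contain no `#` before the word swap, so running it a second time leaves the file unchanged.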

1.8. Configure time synchronization on all nodes

1. Install ntpdate

[root@k8s-master1 ~]# rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

[root@k8s-master1 ~]# yum install ntpdate -y

2. Synchronize the time on all nodes, configured as follows:

[root@k8s-master1 ~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

[root@k8s-master1 ~]# echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# Add to crontab

[root@k8s-master1 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com
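When the cron entry is installed by a script on five nodes, it is easy to end up with the same line several times. A minimal sketch of an idempotent approach; `add_cron_line` is a hypothetical helper that filters a crontab on stdin rather than touching the real crontab itself.

```shell
#!/usr/bin/env bash
# add_cron_line LINE: read a crontab on stdin and print it back, appending
# LINE only if that exact line is not already present.
add_cron_line() {
    local line=$1 current
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}

# Typical use on a node (not executed here):
#   crontab -l 2>/dev/null \
#     | add_cron_line '*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com' \
#     | crontab -
```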

1.9. Configure limits on all nodes

[root@k8s-master1 ~]# ulimit -SHn 65535

[root@k8s-master1 ~]# vim /etc/security/limits.conf

# Append the following at the end of the file

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

1.10. Passwordless SSH login from master1 to the other nodes

[root@k8s-master1 ~]# ssh-keygen -t rsa

[root@k8s-master1 ~]# for i in k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

2. Kernel upgrade (optional; you can skip the upgrade and only configure ipvs)

2.1. Download the kernel packages

[root@k8s-master1 ~]# cd /root

[root@k8s-master1 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

[root@k8s-master1 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

2.2. Copy to the other nodes

[root@k8s-master1 ~]# for i in k8s-master2 k8s-master3 k8s-node1 k8s-node2;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

2.3. Install on all nodes (shown on master1 only)

[root@k8s-master1 ~]# cd /root && yum localinstall -y kernel-ml*

2.4. Change the default boot kernel on all nodes

[root@k8s-master1 ~]# grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

[root@k8s-master1 ~]# grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

2.5. Check the kernel version and reboot

# Before rebooting

[root@k8s-master1 ~]# grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

# After rebooting all nodes, check again

[root@k8s-master1 ~]# uname -a

Linux k8s-master1 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

2.6. Install ipvsadm and load the ipvs modules

[root@k8s-master1 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

[root@k8s-master1 ~]# vim /etc/modules-load.d/ipvs.conf

# Add the following

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

[root@k8s-master1 ~]# systemctl enable --now systemd-modules-load.service

# Check that the modules are loaded

[root@k8s-master1 ~]# lsmod | grep -e ip_vs -e nf_conntrack

nf_conntrack_netlink 40960 0

nfnetlink 16384 3 nf_conntrack_netlink,ip_set

ip_vs_ftp 16384 0

nf_nat 32768 2 nf_nat_ipv4,ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 151552 24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 143360 6 xt_conntrack,nf_nat,ipt_MASQUERADE,nf_nat_ipv4,nf_conntrack_netlink,ip_vs

nf_defrag_ipv6 20480 1 nf_conntrack

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
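Reading through the lsmod output by eye gets tedious across five nodes. A small sketch that reports which expected modules are absent; `missing_modules` is a hypothetical helper, and the module list passed to it is whatever subset you care about.

```shell
#!/usr/bin/env bash
# missing_modules LSMOD_OUTPUT MODULE...: print each MODULE that does not
# appear in the first column of the given lsmod output.
missing_modules() {
    local loaded=$1; shift
    local m
    for m in "$@"; do
        if ! printf '%s\n' "$loaded" | awk '{print $1}' | grep -qx -- "$m"; then
            printf '%s\n' "$m"
        fi
    done
}

# On a real node (not executed here):
#   missing_modules "$(lsmod)" ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

Empty output means every listed module is loaded.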

2.7. Set the kernel parameters required by a k8s cluster, on all nodes

[root@k8s-master1 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

[root@k8s-master1 ~]# sysctl --system
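Long sysctl files easily pick up the same key twice, and the later value silently wins. A minimal sketch for spotting that; `duplicate_sysctl_keys` is an illustrative helper name, not a standard tool.

```shell
#!/usr/bin/env bash
# duplicate_sysctl_keys FILE: print every key that is assigned more than once.
# Blank lines and comment lines (starting with # or ;) are ignored.
duplicate_sysctl_keys() {
    awk -F= '
        /^[ \t]*($|#|;)/ { next }
        {
            key = $1
            gsub(/[ \t]/, "", key)       # strip spaces around the key
            if (++seen[key] == 2) print key
        }
    ' "$1"
}

# On a node (not executed here):
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```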

2.8. After the kernel configuration is done on all nodes, reboot the servers and confirm the modules are still loaded afterwards

[root@k8s-master1 ~]# reboot

[root@k8s-master1 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack

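II. Installing the basic components

This section installs the components used by the cluster, such as Docker CE and the Kubernetes components.

1. Installing Docker

1.1. Install Docker 19 on all nodes (shown on master1 only)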

[root@k8s-master1 ~]# yum install docker-ce-19.03.* -y

Newer kubelet versions recommend systemd, so Docker's cgroup driver can be changed to systemd. Configure this on all nodes.

[root@k8s-master1 ~]# mkdir -p /etc/docker

[root@k8s-master1 ~]# cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# Enable Docker at boot on all nodes

systemctl daemon-reload && systemctl enable --now docker

2. Installing k8s and etcd

2.1. Download the etcd and Kubernetes packages on master1

[root@k8s-master1 ~]# wget https://dl.k8s.io/v1.20.0/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master1 ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

2.2. Install k8s and etcd

Run all of the following on master1

# Extract the Kubernetes binaries

[root@k8s-master1 ~]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

# Extract the etcd binaries

[root@k8s-master1 ~]# tar -zxvf etcd-v3.4.13-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.4.13-linux-amd64/etcd{,ctl}

2.3. Check the versions

[root@k8s-master1 ~]# kubelet --version

Kubernetes v1.20.0

[root@k8s-master1 ~]# etcdctl version

etcdctl version: 3.4.13

API version: 3.4

2.4. Push to the other nodes

[root@k8s-master1 ~]# MasterNodes='k8s-master2 k8s-master3'

[root@k8s-master1 ~]# WorkNodes='k8s-node1 k8s-node2'

[root@k8s-master1 ~]# for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

[root@k8s-master1 ~]# for NODE in $WorkNodes; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

2.5. Create the /opt/cni/bin directory on all nodes

mkdir -p /opt/cni/bin

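III. Generating certificates

This is the most critical step of a binary installation. A single mistake here breaks everything that follows, so make sure every step is done correctly.

Download the certificate tools on master1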

[root@k8s-master1 ~]# wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl

[root@k8s-master1 ~]# wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

[root@k8s-master1 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

1. etcd certificates (everything below is run on master1)

1.1. Create the directories

# Create the etcd certificate directory on all master nodes

[root@k8s-master1 ~]# mkdir /etc/etcd/ssl -p

# Create the Kubernetes directories on all nodes

[root@k8s-master1 ~]# mkdir -p /etc/kubernetes/pki

1.2. Generate the etcd certificates on master1

1.2.1. Create the directory for the certificate request files

[root@k8s-master1 pki]# mkdir -p /root/k8s-ha-install/pki

1.2.2. Create the CA files

[root@k8s-master1 pki]# cd /root/k8s-ha-install/pki

[root@k8s-master1 pki]# cat >etcd-ca-csr.json <<eof

> {

> "CN": "etcd",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "etcd",

> "OU": "Etcd Security"

> }

> ],

> "ca": {

> "expiry": "876000h"

> }

> }

> eof

[root@k8s-master1 pki]# cat > etcd-csr.json << eof

> {

> "CN": "etcd",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "etcd",

> "OU": "Etcd Security"

> }

> ]

> }

> eof

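1.2.3. Generate the certificates

# Note: the second command reads ca-config.json, which is only created in step 2.2.1 below. Create that file before running it.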

[root@k8s-master1 pki]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

[root@k8s-master1 pki]# cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-master1,k8s-master2,k8s-master3,192.168.2.21,192.168.2.22,192.168.2.23 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd
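Once the etcd certificate exists, it is worth confirming its lifetime and SAN list before distributing it. A minimal sketch using standard `openssl x509` options; the helper names `cert_valid_for` and `cert_sans` are made up for this example.

```shell
#!/usr/bin/env bash
# cert_valid_for CERT DAYS: succeed if CERT is still valid DAYS days from now.
cert_valid_for() {
    openssl x509 -in "$1" -noout -checkend $(( $2 * 86400 )) >/dev/null
}

# cert_sans CERT: print the Subject Alternative Name section of CERT.
cert_sans() {
    openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name'
}

# On master1 (not executed here):
#   cert_valid_for /etc/etcd/ssl/etcd.pem 365 && echo "valid for at least a year"
#   cert_sans /etc/etcd/ssl/etcd.pem
```

The SAN list should contain every hostname and IP passed to -hostname above; a missing entry typically shows up later as a TLS error between etcd members.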

1.2.4. Distribute the certificates to the other nodes

[root@k8s-master1 pki]# MasterNodes='k8s-master2 k8s-master3'

[root@k8s-master1 pki]# WorkNodes='k8s-node1 k8s-node2'

[root@k8s-master1 pki]# for NODE in $MasterNodes; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

2. Generate the k8s component certificates (everything below is run on master1)

2.1. Change to the certificate request directory

[root@k8s-master1 pki]# cd /root/k8s-ha-install/pki

2.2. Create the certificates

2.2.1. Create the CA files

# The certificates are set to expire after 876000h

[root@k8s-master1 pki]# cat >ca-csr.json << eof

> {

> "CN": "kubernetes",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "Kubernetes",

> "OU": "Kubernetes-manual"

> }

> ],

> "ca": {

> "expiry": "876000h"

> }

> }

> eof

[root@k8s-master1 pki]# cat > ca-config.json << eof

> {

> "signing": {

> "default": {

> "expiry": "876000h"

> },

> "profiles": {

> "kubernetes": {

> "usages": [

> "signing",

> "key encipherment",

> "server auth",

> "client auth"

> ],

> "expiry": "876000h"

> }

> }

> }

> }

> eof

# Needed below when generating the aggregation-layer certificates

[root@k8s-master1 pki]# cat > front-proxy-ca-csr.json << eof

> {

> "CN": "kubernetes",

> "key": {

> "algo": "rsa",

> "size": 2048

> }

> }

> eof

[root@k8s-master1 pki]# cat > front-proxy-client-csr.json << eof

> {

> "CN": "front-proxy-client",

> "key": {

> "algo": "rsa",

> "size": 2048

> }

> }

> eof

# Needed for the controller-manager certificate

[root@k8s-master1 pki]# cat > manager-csr.json << eof

> {

> "CN": "system:kube-controller-manager",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "system:kube-controller-manager",

> "OU": "Kubernetes-manual"

> }

> ]

> }

> eof

# Needed for the scheduler certificate

[root@k8s-master1 pki]# cat > scheduler-csr.json << eof

> {

> "CN": "system:kube-scheduler",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "system:kube-scheduler",

> "OU": "Kubernetes-manual"

> }

> ]

> }

> eof

# Needed for the admin certificate

[root@k8s-master1 pki]# cat > admin-csr.json << eof

> {

> "CN": "admin",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "ST": "Beijing",

> "L": "Beijing",

> "O": "system:masters",

> "OU": "Kubernetes-manual"

> }

> ]

> }

> eof
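The apiserver certificate command in 2.2.2 reads apiserver-csr.json, but this article never shows that file. A plausible version is sketched below, assuming it follows the same pattern as the other CSR files; the CN and O values are assumptions, not taken from the source.

```shell
# Assumption: apiserver-csr.json is not shown in this article; the fields
# below simply mirror the structure of the other CSR files above.
# Run this in /root/k8s-ha-install/pki so cfssl finds the file.
cat > apiserver-csr.json <<'EOF'
{
  "CN": "kube-apiserver",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "Kubernetes",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF
```

The hostnames and IPs the apiserver certificate must cover are supplied separately through the -hostname option of cfssl gencert, so they do not appear in this file.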

2.2.2. Generate the apiserver certificate

[root@k8s-master1 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

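# 10.96.0.0/12 is the k8s Service network. If you change the Service network, change 10.96.0.1 (the first address in that range) accordingly.

# For a non-HA cluster, replace 192.168.2.200 with the IP of master1.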

[root@k8s-master1 pki]# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,192.168.2.200,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.2.21,192.168.2.22,192.168.2.23 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

2.2.2. Generate the apiserver aggregation-layer certificates. These back the requestheader-client-xxx and requestheader-allowed-xxx flags (allowed name: aggregator)

[root@k8s-master1 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

[root@k8s-master1 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

# Output (the warnings can be ignored)

2.2.3. Generate the controller-manager certificate

[root@k8s-master1 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

# Note: for a non-HA cluster, replace 192.168.2.200:8443 with master1's address and the apiserver port (6443 by default).

# set-cluster: define a cluster entry

[root@k8s-master1 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.2.200:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-context: define a context entry

[root@k8s-master1 pki]# kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-credentials: define a user entry

[root@k8s-master1 pki]# kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# use-context: make this context the default

[root@k8s-master1 pki]# kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
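The same four `kubectl config` steps are repeated for controller-manager, scheduler, and admin, differing only in the user name, certificate name, and output file. One way to avoid copy-paste mistakes is a small wrapper; `write_kubeconfig` and the `KUBECTL` override are hypothetical names introduced here, and the default server address is the VIP used in this article.

```shell
#!/usr/bin/env bash
# write_kubeconfig USER CERT_BASENAME KUBECONFIG [SERVER]
# Runs the four `kubectl config` steps for one component.
# Set KUBECTL="echo kubectl" to print the commands instead of running them.
KUBECTL=${KUBECTL:-kubectl}
PKI=/etc/kubernetes/pki

write_kubeconfig() {
    local user=$1 cert=$2 kubeconfig=$3 server=${4:-https://192.168.2.200:8443}
    # $KUBECTL is left unquoted on purpose so "echo kubectl" splits into words.
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority="$PKI/ca.pem" --embed-certs=true \
        --server="$server" --kubeconfig="$kubeconfig" &&
    $KUBECTL config set-credentials "$user" \
        --client-certificate="$PKI/${cert}.pem" \
        --client-key="$PKI/${cert}-key.pem" \
        --embed-certs=true --kubeconfig="$kubeconfig" &&
    $KUBECTL config set-context "${user}@kubernetes" \
        --cluster=kubernetes --user="$user" --kubeconfig="$kubeconfig" &&
    $KUBECTL config use-context "${user}@kubernetes" --kubeconfig="$kubeconfig"
}

# On master1 (not executed here):
#   write_kubeconfig system:kube-controller-manager controller-manager /etc/kubernetes/controller-manager.kubeconfig
#   write_kubeconfig system:kube-scheduler scheduler /etc/kubernetes/scheduler.kubeconfig
#   write_kubeconfig kubernetes-admin admin /etc/kubernetes/admin.kubeconfig
```

For a non-HA cluster, pass the master1 address as the fourth argument, for example https://192.168.2.21:6443.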

2.2.4. Generate the scheduler certificate

# The commands have the same meaning as those for controller-manager

[root@k8s-master1 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

# Note: for a non-HA cluster, replace 192.168.2.200:8443 with master1's address and the apiserver port (6443 by default).

[root@k8s-master1 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.2.200:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

[root@k8s-master1 pki]# kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

[root@k8s-master1 pki]# kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

[root@k8s-master1 pki]# kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

2.2.5. Generate the admin certificate

[root@k8s-master1 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

# Note: for a non-HA cluster, replace 192.168.2.200:8443 with master1's address and the apiserver port (6443 by default).

[root@k8s-master1 pki]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.2.200:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

[root@k8s-master1 pki]# kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

[root@k8s-master1 pki]# kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

[root@k8s-master1 pki]# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

2.2.6. Create the ServiceAccount key pair (secret)

[root@k8s-master1 pki]# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

[root@k8s-master1 pki]# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

2.2.7. Distribute the certificates to the other nodes

[root@k8s-master1 pki]# for NODE in k8s-master2 k8s-master3 k8s-node1 k8s-node2; do

ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

done

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done

2.2.8. List the certificate files

[root@k8s-master1 pki]# ls /etc/kubernetes/pki/

admin.csr apiserver-key.pem ca.pem front-proxy-client.csr sa.pub

admin-key.pem apiserver.pem controller-manager.csr front-proxy-ca.csr front-proxy-client-key.pem scheduler.csr

admin.pem ca.csr controller-manager-key.pem front-proxy-ca-key.pem front-proxy-client.pem scheduler-key.pem

apiserver.csr ca-key.pem controller-manager.pem front-proxy-ca.pem sa.key scheduler.pem

[root@k8s-master1 pki]# ls /etc/kubernetes/pki/ | wc -l

23

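IV. Kubernetes system component configuration

1. etcd configuration

The etcd configuration is nearly identical on every master node; remember to change the node name and IP addresses in each node's file.

1.1. master1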

[root@k8s-master1 pki]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master1'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.2.21:2380'

listen-client-urls: 'https://192.168.2.21:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.2.21:2380'

advertise-client-urls: 'https://192.168.2.21:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master1=https://192.168.2.21:2380,k8s-master2=https://192.168.2.22:2380,k8s-master3=https://192.168.2.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

1.2. master2

[root@k8s-master2 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master2'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.2.22:2380'

listen-client-urls: 'https://192.168.2.22:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.2.22:2380'

advertise-client-urls: 'https://192.168.2.22:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master1=https://192.168.2.21:2380,k8s-master2=https://192.168.2.22:2380,k8s-master3=https://192.168.2.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

1.3. master3

[root@k8s-master3 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master3'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.2.23:2380'

listen-client-urls: 'https://192.168.2.23:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.2.23:2380'

advertise-client-urls: 'https://192.168.2.23:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master1=https://192.168.2.21:2380,k8s-master2=https://192.168.2.22:2380,k8s-master3=https://192.168.2.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false
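Comparing the three files above, only five lines differ between masters: the node name and the four URLs that carry the node's own IP. The sketch below prints just those lines for a given node, which makes it easier to check each file; `etcd_node_lines` is an illustrative helper, not part of etcd.

```shell
#!/usr/bin/env bash
# etcd_node_lines NAME IP: print the etcd.config.yml lines that differ
# between masters; every other line is identical on all three nodes.
etcd_node_lines() {
    local name=$1 ip=$2
    cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
EOF
}

# Example: the node-specific lines for master2.
etcd_node_lines k8s-master2 192.168.2.22
```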

1.4. Create the service and start it

Create the etcd service on all master nodes and start it (shown on master1 only)

[root@k8s-master1 pki]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

# Create the etcd certificate directory on all master nodes

[root@k8s-master1 pki]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master1 pki]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master1 pki]# systemctl daemon-reload

[root@k8s-master1 pki]# systemctl enable --now etcd

# Check the etcd status. My etcd was deployed earlier, so your DB SIZE may differ.

[root@k8s-master1 pki]# export ETCDCTL_API=3

[root@k8s-master1 pki]# etcdctl --endpoints="192.168.2.23:2379,192.168.2.22:2379,192.168.2.21:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 192.168.2.23:2379 | f8f64b73ad0515b1 | 3.4.13 | 8.6 MB | false | false | 111 | 29291 | 29291 | |

| 192.168.2.22:2379 | f31142d0262d7ccb | 3.4.13 | 8.6 MB | true | false | 111 | 29291 | 29291 | |

| 192.168.2.21:2379 | c3c3a0afb8f7b9ce | 3.4.13 | 8.5 MB | false | false | 111 | 29291 | 29291 | |

+-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

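2. High-availability configuration

Note: if the cluster is not highly available, haproxy and keepalived do not need to be installed.

When installing in the cloud, this section can also be skipped in favour of the provider's load balancer, such as Alibaba Cloud SLB or Tencent Cloud CLB.

On a public cloud, use the provider's own load balancer in place of haproxy and keepalived, because most public clouds do not support keepalived. On Alibaba Cloud, the kubectl client also cannot run on a master node: SLB has a loopback limitation, meaning a server behind the SLB cannot connect back to that same SLB. The author recommends Tencent Cloud, which has fixed this problem.

SLB -> haproxy -> apiserver

2.1. Install keepalived and haproxy on all master nodes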

yum install keepalived haproxy -y

2.2. Configure HAProxy on all master nodes; the configuration is identical (shown on master1 only)

# Clear the original file and paste in the following

[root@k8s-master1 ~]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master1 192.168.2.21:6443 check

server k8s-master2 192.168.2.22:6443 check

server k8s-master3 192.168.2.23:6443 check

# Copy to the other master hosts (not described in detail here)

[root@k8s-master1 ~]# ansible -i all.cfg master -m copy -a "src=/etc/haproxy/haproxy.cfg dest=/etc/haproxy/haproxy.cfg"

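2.3. The keepalived configuration differs slightly per node: state, mcast_src_ip, and priority change from node to node.

Pay attention to each node's IP and network interface (the interface parameter)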

[root@k8s-master1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.2.21

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.2.200

}

track_script {

chk_apiserver

} }

[root@k8s-master2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 192.168.2.22

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.2.200

}

track_script {

chk_apiserver

} }

[root@k8s-master3 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 192.168.2.23

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.2.200

}

track_script {

chk_apiserver

} }

2.4. Health check configuration

On all master nodes

[root@k8s-master1 keepalived]# vim /etc/keepalived/check_apiserver.sh

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

[root@k8s-master1 keepalived]# chmod +x /etc/keepalived/check_apiserver.sh

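# Copy to the other nodes (mode=0755 keeps the script executable on the targets)

[root@k8s-master1 keepalived]# ansible -i ~/all.cfg master -m copy -a "src=/etc/keepalived/check_apiserver.sh dest=/etc/keepalived/check_apiserver.sh mode=0755"

# Start the services on all master nodes (shown on master1 only)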

[root@k8s-master1 keepalived]# systemctl daemon-reload

[root@k8s-master1 keepalived]# systemctl enable --now haproxy

[root@k8s-master1 keepalived]# systemctl enable --now keepalived

# Test the VIP

[root@k8s-master1 pki]# ping 192.168.2.200

PING 192.168.2.200 (192.168.2.200) 56(84) bytes of data.

64 bytes from 192.168.2.200: icmp_seq=1 ttl=64 time=1.39 ms

64 bytes from 192.168.2.200: icmp_seq=2 ttl=64 time=2.46 ms

64 bytes from 192.168.2.200: icmp_seq=3 ttl=64 time=1.68 ms

64 bytes from 192.168.2.200: icmp_seq=4 ttl=64 time=1.08 ms

# Important: if keepalived and haproxy are installed, verify that keepalived is working

[root@k8s-master1 pki]# telnet 192.168.2.200 8443

Trying 192.168.2.200...

Connected to 192.168.2.200.

Escape character is '^]'.

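If ping fails, or telnet does not show the "Escape character is '^]'" line, the VIP is not working. Do not continue; troubleshoot keepalived first: check the firewall and SELinux, the status of haproxy and keepalived, the listening ports, and so on.

On all nodes, the firewall must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled: getenforce

On master nodes, check the status of haproxy and keepalived: systemctl status keepalived haproxy

On master nodes, check the listening ports: netstat -lntp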
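The telnet check can also be scripted, which is handy when repeating it from several nodes. A minimal sketch that relies on bash's /dev/tcp pseudo-device and the coreutils `timeout` command; `port_open` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection opens within 2 seconds.
# Uses bash's /dev/tcp pseudo-device, so neither telnet nor nc is required.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# On a master node (not executed here):
#   port_open 192.168.2.200 8443 && echo "VIP reachable" || echo "VIP unreachable"
#   for ip in 192.168.2.21 192.168.2.22 192.168.2.23; do
#       port_open "$ip" 8443 || echo "haproxy not listening on $ip"
#   done
```

A successful connection only shows that haproxy accepts TCP on the port; it says nothing about whether an apiserver is healthy behind it.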

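V. k8s component configuration

Create the directories on all nodes (shown on master1 only). Where configuration files differ between nodes, the differences are noted below.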

[root@k8s-master1 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

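1. apiserver

Create the kube-apiserver service on all master nodes. Note: for a non-HA cluster, replace 192.168.2.200 with master1's address.

This document uses 10.96.0.0/12 as the k8s Service network. It must not overlap with the host network or the Pod network; change it as needed.

1.1. master1 configuration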

[root@k8s-master1 pki]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.2.21 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.2.21:2379,https://192.168.2.22:2379,https://192.168.2.23:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

1.2. master2 configuration

[root@k8s-master2 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.2.22 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.2.21:2379,https://192.168.2.22:2379,https://192.168.2.23:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

1.3、master3 configuration

[root@k8s-master3 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.2.23 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.2.21:2379,https://192.168.2.22:2379,https://192.168.2.23:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target
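
The three unit files above differ only in the value of --advertise-address. As a minimal sketch (not the procedure used in this article), one copy can be treated as a template and the other two derived from it with sed. The helper name render_apiserver_unit and the output path in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive a per-node kube-apiserver unit from one template by
# rewriting only the --advertise-address flag.
# render_apiserver_unit is a hypothetical helper, not part of any tool.
render_apiserver_unit() {
  local template=$1 node_ip=$2
  # Replace whatever IPv4 address follows --advertise-address= with node_ip;
  # the trailing " \" line continuation is left untouched.
  sed -E "s#(--advertise-address=)[0-9.]+#\1${node_ip}#" "$template"
}

# Example usage (the output path is an assumption):
#   render_apiserver_unit /usr/lib/systemd/system/kube-apiserver.service 192.168.2.22 \
#     > /tmp/kube-apiserver.master2.service
```

The result prints to stdout, so it can be reviewed before it is copied to the target node.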

1.4、Start the apiserver

Start kube-apiserver on all Master nodes

[root@k8s-master1 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

# Check the status of kube-apiserver

[root@k8s-master1 ~]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2021-08-18 09:10:23 CST; 2h 10min ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 8675 (kube-apiserver)

Tasks: 11

Memory: 324.4M

CGroup: /system.slice/kube-apiserver.service

└─8675 /usr/local/bin/kube-apiserver --v=2 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 --secure-port=6443 --...

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.184075 8675 clientconn.go:948] ClientConn switching balancer to "..._first"

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.184643 8675 balancer_conn_wrappers.go:78] pickfirstBalancer: Hand... <nil>}

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.194880 8675 balancer_conn_wrappers.go:78] pickfirstBalancer: Hand... <nil>}

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.198196 8675 controlbuf.go:508] transport: loopyWriter.run returni...losing"

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.947570 8675 client.go:360] parsed scheme: "passthrough"

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.947623 8675 passthrough.go:48] ccResolverWrapper: sending update ... <nil>}

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.947632 8675 clientconn.go:948] ClientConn switching balancer to "..._first"

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.947871 8675 balancer_conn_wrappers.go:78] pickfirstBalancer: Hand... <nil>}

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.959037 8675 balancer_conn_wrappers.go:78] pickfirstBalancer: Hand... <nil>}

8月 18 11:20:59 k8s-master1 kube-apiserver[8675]: I0818 11:20:59.960546 8675 controlbuf.go:508] transport: loopyWriter.run returni...losing"

Hint: Some lines were ellipsized, use -l to show in full.
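
Besides systemctl status, each apiserver can be probed over HTTPS. The sketch below queries /healthz on port 6443 of every master. It assumes anonymous requests to /healthz are allowed (the Kubernetes default when anonymous auth has not been disabled) and skips certificate verification with -k for brevity. The function name check_apiservers is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: probe /healthz on every master's secure port (6443, as set by
# --secure-port in the unit files). Assumes anonymous access to /healthz.
MASTERS="192.168.2.21 192.168.2.22 192.168.2.23"

check_apiservers() {
  local ip body rc=0
  for ip in $MASTERS; do
    # -k: skip TLS verification, -s: silent, 3 second timeout per node.
    body=$(curl -ks --max-time 3 "https://${ip}:6443/healthz") || body=""
    if [ "$body" = "ok" ]; then
      echo "${ip}: ok"
    else
      echo "${ip}: NOT healthy"
      rc=1
    fi
  done
  return $rc
}

# Example usage: check_apiservers
```

The function returns non-zero if any master fails the probe, so it can be used as a gate before starting the other components.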

2、ControllerManager

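Configure the kube-controller-manager service on all Master nodes. The unit file is identical on every node, so write it once on master1 and copy it to the others.

2.1、Configuration file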

[root@k8s-master1 pki]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/12 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

# Copy the unit file to all Master nodes

[root@k8s-master1 pki]# ansible -i ~/all.cfg master -m copy -a "src=/usr/lib/systemd/system/kube-controller-manager.service dest=/usr/lib/systemd/system/kube-controller-manager.service"
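
The --cluster-cidr=172.16.0.0/12 and --node-cidr-mask-size=24 flags in the unit file above together decide how many per-node Pod subnets the controller manager can hand out. A small arithmetic sketch (the function names are hypothetical):

```shell
#!/usr/bin/env bash
# Sketch: how many per-node Pod subnets fit in the cluster CIDR, and how many
# addresses each one holds.
node_subnet_count() {    # args: cluster CIDR prefix length, node mask size
  echo $(( 1 << ($2 - $1) ))
}

addresses_per_node() {   # arg: node mask size
  echo $(( 1 << (32 - $1) ))
}

node_subnet_count 12 24   # prints 4096
addresses_per_node 24     # prints 256
```

With these values a /12 is split into 4096 /24 subnets of 256 addresses each, which is more than the maxPods: 110 limit set in kubelet-conf.yml.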

2.2、Start

Start it on all Master nodes

[root@k8s-master1 pki]# systemctl daemon-reload

[root@k8s-master1 pki]# systemctl enable --now kube-controller-manager

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-master1 ~]# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2021-08-18 09:09:12 CST; 2h 16min ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 7740 (kube-controller)

CGroup: /system.slice/kube-controller-manager.service

└─7740 /usr/local/bin/kube-controller-manager --v=2 --logtostderr=true --address=127.0.0.1 --root-ca-file=/etc/kubernetes/pki/ca.p...

8月 18 09:09:21 k8s-master1 kube-controller-manager[7740]: I0818 09:09:21.215107 7740 secure_serving.go:197] Serving securely on [::]:10257

8月 18 09:09:21 k8s-master1 kube-controller-manager[7740]: I0818 09:09:21.219859 7740 dynamic_cafile_content.go:167] Starting reque...ca.pem

8月 18 09:09:21 k8s-master1 kube-controller-manager[7740]: I0818 09:09:21.220092 7740 tlsconfig.go:240] Starting DynamicServingCert...roller

8月 18 09:09:21 k8s-master1 kube-controller-manager[7740]: I0818 09:09:21.220513 7740 deprecated_insecure_serving.go:53] Serving in...:10252

8月 18 09:09:21 k8s-master1 kube-controller-manager[7740]: I0818 09:09:21.220568 7740 leaderelection.go:243] attempting to acquire ...ger...

8月 18 09:09:38 k8s-master1 kube-controller-manager[7740]: E0818 09:09:38.155156 7740 leaderelection.go:325] error retrieving resource lo...

8月 18 09:09:50 k8s-master1 kube-controller-manager[7740]: E0818 09:09:50.827115 7740 leaderelection.go:325] error retrieving resource lo...

8月 18 09:10:04 k8s-master1 kube-controller-manager[7740]: E0818 09:10:04.014911 7740 leaderelection.go:325] error retrieving resource lo...

8月 18 09:10:18 k8s-master1 kube-controller-manager[7740]: E0818 09:10:18.221159 7740 leaderelection.go:325] error retrieving resou...ceeded

8月 18 09:10:31 k8s-master1 kube-controller-manager[7740]: E0818 09:10:31.738073 7740 leaderelection.go:325] error retrieving resource lo...

Hint: Some lines were ellipsized, use -l to show in full.

3、Scheduler

Configure on all Master nodes

3.1、Configuration file

[root@k8s-master1 pki]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

# Copy the unit file to all Master nodes

[root@k8s-master1 pki]# ansible -i ~/all.cfg master -m copy -a "src=/usr/lib/systemd/system/kube-scheduler.service dest=/usr/lib/systemd/system/kube-scheduler.service"

3.2、Start

[root@k8s-master1 pki]# systemctl daemon-reload

[root@k8s-master1 pki]# systemctl enable --now kube-scheduler

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-master1 ~]# systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2021-08-18 09:05:35 CST; 2h 22min ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 6579 (kube-scheduler)

CGroup: /system.slice/kube-scheduler.service

└─6579 /usr/local/bin/kube-scheduler --v=2 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig=/etc/kubernetes...

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943260 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943284 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943344 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943366 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943384 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943419 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943438 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: W0818 09:10:14.943459 6579 reflector.go:436] k8s.io/client-go/informers/factory....ceeding

8月 18 09:10:14 k8s-master1 kube-scheduler[6579]: E0818 09:10:14.943529 6579 leaderelection.go:325] error retrieving resource lock...on lost

8月 18 09:10:18 k8s-master1 kube-scheduler[6579]: I0818 09:10:18.566097 6579 leaderelection.go:253] successfully acquired lease ku...heduler

Hint: Some lines were ellipsized, use -l to show in full.
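
Both kube-controller-manager and kube-scheduler run with --leader-elect=true, so only one instance of each is active at a time. The sketch below prints the current holder of each lock. It assumes the lock is visible as a Lease object in kube-system named after the component, and that /etc/kubernetes/admin.kubeconfig (used later in this article) already exists. The function name show_leaders is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print which instance holds the leader-election lock of each
# control-plane component.
# Assumption: a Lease with the component's name exists in kube-system.
ADMIN_KUBECONFIG=${ADMIN_KUBECONFIG:-/etc/kubernetes/admin.kubeconfig}

show_leaders() {
  local component holder
  for component in kube-controller-manager kube-scheduler; do
    holder=$(kubectl --kubeconfig "$ADMIN_KUBECONFIG" -n kube-system \
      get lease "$component" -o jsonpath='{.spec.holderIdentity}') || holder=""
    echo "${component}: ${holder:-<no leader found>}"
  done
}

# Example usage: show_leaders
```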

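VI、TLS Bootstrapping configuration

TLS bootstrapping lets kubelets obtain their certificates automatically.

Create the bootstrap kubeconfig on master1

Note: if this is not a high-availability cluster, replace 192.168.2.200:8443 with the address of master1 and the apiserver port (6443 by default).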

[root@k8s-master1 ~]# cd /root/k8s-ha-install/bootstrap

[root@k8s-master1 bootstrap]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.2.200:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

[root@k8s-master1 bootstrap]# kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

[root@k8s-master1 bootstrap]# kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

[root@k8s-master1 bootstrap]# kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

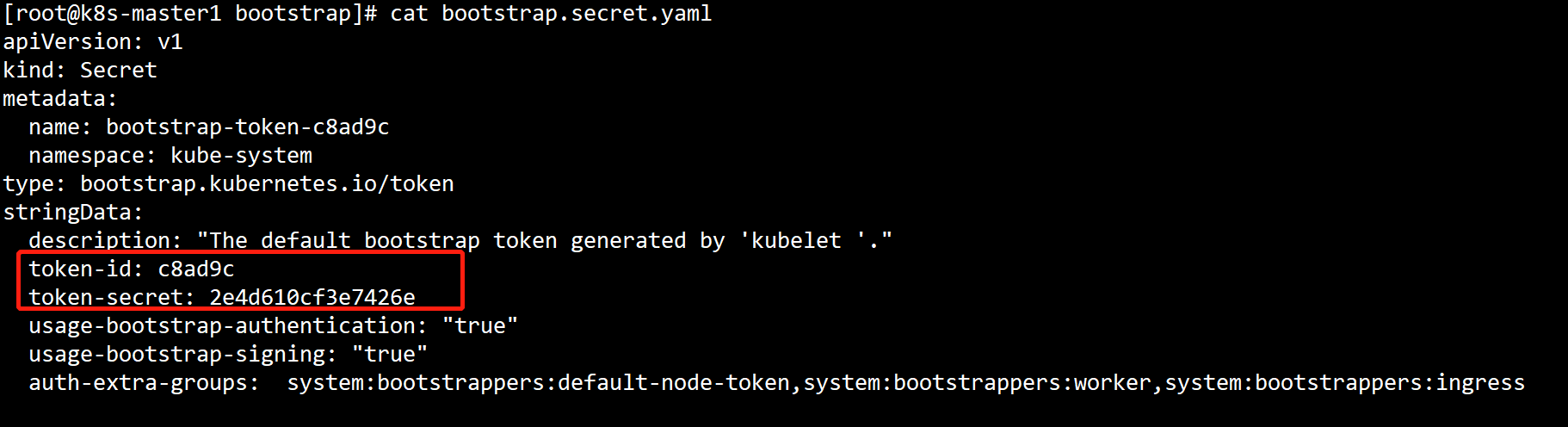

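Note: if you change the token-id and token-secret in bootstrap.secret.yaml, the strings circled in red in the figure must stay consistent with each other and keep the same length. The token passed with --token in the set-credentials command above (c8ad9c.2e4d610cf3e7426e) must also match the values you set.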

[root@k8s-master1 bootstrap]# mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

[root@k8s-master1 bootstrap]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created
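
The note about token-id and token-secret follows from the bootstrap token format: Kubernetes expects <token-id>.<token-secret>, where the id is 6 and the secret is 16 lowercase alphanumeric characters, and the Secret is named bootstrap-token-<token-id>. The sketch below checks a token and splits it into those fields. The helper name split_bootstrap_token is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: validate a bootstrap token and split it into the two fields that
# bootstrap.secret.yaml needs. Required form: [a-z0-9]{6}.[a-z0-9]{16}
# split_bootstrap_token is a hypothetical helper name.
split_bootstrap_token() {
  local token=$1
  if ! [[ $token =~ ^([a-z0-9]{6})\.([a-z0-9]{16})$ ]]; then
    echo "invalid bootstrap token: $token" >&2
    return 1
  fi
  echo "token-id: ${BASH_REMATCH[1]}"
  echo "token-secret: ${BASH_REMATCH[2]}"
  echo "secret-name: bootstrap-token-${BASH_REMATCH[1]}"
}

# The token used in this article:
split_bootstrap_token c8ad9c.2e4d610cf3e7426e
```

For the token above, the secret name it prints matches the secret/bootstrap-token-c8ad9c line in the kubectl create output.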

VII、Node configuration

1、Copy the certificates

[root@k8s-master1 bootstrap]# cd /etc/kubernetes/

[root@k8s-master1 kubernetes]# for NODE in k8s-master2 k8s-master3 k8s-node1 k8s-node2; do

    ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

    for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

        scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

    done

    for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

        scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

    done

done

2、kubelet configuration (all nodes, including the worker nodes)

2.1、Create the required directories on all nodes

[root@k8s-master1 bootstrap]# mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

2.2、Configure the kubelet service on all nodes

[root@k8s-master1 bootstrap]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

2.3、Configure the kubelet service drop-in file on all nodes

[root@k8s-master1 bootstrap]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

2.4、Create the kubelet configuration file

# Note: if you changed the Kubernetes Service CIDR, update clusterDNS in kubelet-conf.yml to the tenth address of that CIDR, for example 10.96.0.10

[root@k8s-master1 bootstrap]# vim /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 2m0s

    enabled: true

  x509:

    clientCAFile: /etc/kubernetes/pki/ca.pem

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 5m0s

    cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:

  imagefs.available: 15%

  memory.available: 100Mi

  nodefs.available: 10%

  nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s
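
The note on clusterDNS asks for the tenth address of the Service CIDR. For anyone using a different Service range, the sketch below computes the Nth address of a CIDR block with plain shell arithmetic. The function name nth_ip is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compute the Nth address inside an IPv4 CIDR block.
nth_ip() {
  local cidr=$1 n=$2
  local base=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$base"
  # Pack the four octets into one 32-bit integer.
  local ip=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Network mask for the given prefix length.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Network address plus the requested offset.
  local addr=$(( (ip & mask) + n ))
  echo "$(( (addr >> 24) & 255 )).$(( (addr >> 16) & 255 )).$(( (addr >> 8) & 255 )).$(( addr & 255 ))"
}

nth_ip 10.96.0.0/12 10   # prints 10.96.0.10
```

The result for 10.96.0.0/12 matches the clusterDNS value in the file above.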

2.5、Start kubelet on all nodes

[root@k8s-master1 bootstrap]# systemctl daemon-reload

[root@k8s-master1 bootstrap]# systemctl enable --now kubelet

# Check the cluster status

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 14h v1.20.0

k8s-master2 Ready <none> 14h v1.20.0

k8s-master3 Ready <none> 14h v1.20.0

k8s-node1 Ready <none> 14h v1.20.0

k8s-node2 Ready <none> 14h v1.20.0
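
When there are more than a handful of nodes, it is easier to list only the ones that are not Ready. A minimal sketch, assuming the default column layout of kubectl get node (NAME first, STATUS second); the function name not_ready_nodes is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get node --no-headers` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example usage: kubectl get node --no-headers | not_ready_nodes
```

A node shown as Ready,SchedulingDisabled is also reported, because the comparison is an exact match on the STATUS column.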

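3、kube-proxy configuration

Note: if this is not a high-availability cluster, replace 192.168.2.200:8443 with the address of master1 and the apiserver port (6443 by default).

3.1、Create the configuration file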

[root@k8s-master1 ~]# cd /root/k8s-ha-install

[root@k8s-master1 k8s-ha-install]# kubectl -n kube-system create serviceaccount kube-proxy

[root@k8s-master1 k8s-ha-install]# kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

[root@k8s-master1 k8s-ha-install]# SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

[root@k8s-master1 k8s-ha-install]# PKI_DIR=/etc/kubernetes/pki

[root@k8s-master1 k8s-ha-install]# K8S_DIR=/etc/kubernetes

[root@k8s-master1 k8s-ha-install]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.2.200:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

[root@k8s-master1 k8s-ha-install]# kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

[root@k8s-master1 k8s-ha-install]# kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

[root@k8s-master1 k8s-ha-install]# kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
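
The commands above depend on the kube-proxy ServiceAccount having an automatically generated token Secret, as it does on the v1.20 cluster shown here. If the lookup returns nothing, JWT_TOKEN is empty and the kubeconfig is written without a usable credential. The sketch below is a cheap sanity check that the value at least has the shape of a JWT. The function name looks_like_jwt is hypothetical, and the check does not verify the signature.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the argument looks like a JWT, i.e. three
# non-empty base64url segments separated by dots. No signature check.
looks_like_jwt() {
  [[ $1 =~ ^[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+$ ]]
}

# Example usage, with JWT_TOKEN set as in the commands above:
#   looks_like_jwt "$JWT_TOKEN" || echo "kube-proxy token is missing or malformed" >&2
```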

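3.2、On master1, send the kube-proxy files (kubeconfig, configuration file and systemd service file) to the other nodes

If you changed the cluster's Pod CIDR, set the clusterCIDR: 172.16.0.0/12 parameter in kube-proxy/kube-proxy.conf to your Pod CIDR.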
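
If the Pod CIDR does need changing, the edit can be scripted before the files are copied. A minimal sketch, assuming kube-proxy.conf contains a top-level line of the form "clusterCIDR: <cidr>"; the function name set_cluster_cidr is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the clusterCIDR value in a kube-proxy configuration file.
# Assumes a top-level line of the form "clusterCIDR: <cidr>".
# A backup of the original file is kept next to it with a .bak suffix.
set_cluster_cidr() {
  local file=$1 cidr=$2
  sed -i.bak -E "s#^(clusterCIDR:[[:space:]]*).*#\1${cidr}#" "$file"
}

# Example usage:
#   set_cluster_cidr kube-proxy/kube-proxy.conf 172.16.0.0/12
```

The value should stay equal to the --cluster-cidr flag given to kube-controller-manager.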

[root@k8s-master1 k8s-ha-install]# for NODE in k8s-master1 k8s-master2 k8s-master3; do

    scp ${K8S_DIR}/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

    scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

    scp kube-proxy/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

done

[root@k8s-master1 k8s-ha-install]# for NODE in k8s-node1 k8s-node2; do

    scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

    scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

    scp kube-proxy/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

done

3.3、Start kube-proxy on all nodes

[root@k8s-master1 k8s-ha-install]# systemctl daemon-reload

[root@k8s-master1 k8s-ha-install]# systemctl enable --now kube-proxy

Created symlink /etc/systemd/system/multi-user.target.wants/kube-proxy.service → /usr/lib/systemd/system/kube-proxy.service.

VIII、Install Calico

1、Installation and configuration

1.1、Create the configuration file

# Create the calico directory

[root@k8s-master1 k8s-ha-install]# mkdir -p /root/k8s-ha-install/calico/

# The manifest below was downloaded from the official site and needs a few modifications

[root@k8s-master1 calico]# vim /root/k8s-ha-install/calico/calico-etcd.yaml

---

# Source: calico/templates/calico-etcd-secrets.yaml

# The following contains k8s Secrets for use with a TLS enabled etcd cluster.

# For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/

apiVersion: v1

kind: Secret

type: Opaque

metadata:

name: calico-etcd-secrets

namespace: kube-system

data:

# Populate the following with etcd TLS configuration if desired, but leave blank if

# not using TLS for etcd.

# The keys below should be uncommented and the values populated with the base64

# encoded contents of each file that would be associated with the TLS data.

# Example command for encoding a file contents: cat <file> | base64 -w 0

# etcd-key: null

# etcd-cert: null

# etcd-ca: null

---

# Source: calico/templates/calico-config.yaml

# This ConfigMap is used to configure a self-hosted Calico installation.

kind: ConfigMap

apiVersion: v1

metadata:

name: calico-config

namespace: kube-system

data:

# Configure this with the location of your etcd cluster.

etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"

# If you're using TLS enabled etcd uncomment the following.

# You must also populate the Secret below with these files.

etcd_ca: "" # "/calico-secrets/etcd-ca"

etcd_cert: "" # "/calico-secrets/etcd-cert"

etcd_key: "" # "/calico-secrets/etcd-key"

# Typha is disabled.

typha_service_name: "none"

# Configure the backend to use.

calico_backend: "bird"

# Configure the MTU to use for workload interfaces and tunnels.

# - If Wireguard is enabled, set to your network MTU - 60

# - Otherwise, if VXLAN or BPF mode is enabled, set to your network MTU - 50

# - Otherwise, if IPIP is enabled, set to your network MTU - 20

# - Otherwise, if not using any encapsulation, set to your network MTU.

veth_mtu: "1440"

# The CNI network configuration to install on each node. The special

# values in this config will be automatically populated.

cni_network_config: |-

{

"name": "k8s-pod-network",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "calico",

"log_level": "info",

"etcd_endpoints": "__ETCD_ENDPOINTS__",

"etcd_key_file": "__ETCD_KEY_FILE__",

"etcd_cert_file": "__ETCD_CERT_FILE__",

"etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",

"mtu": __CNI_MTU__,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "__KUBECONFIG_FILEPATH__"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

},

{

"type": "bandwidth",

"capabilities": {"bandwidth": true}

}

]

}

---

# Source: calico/templates/calico-kube-controllers-rbac.yaml

# Include a clusterrole for the kube-controllers component,

# and bind it to the calico-kube-controllers serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

rules:

# Pods are monitored for changing labels.

# The node controller monitors Kubernetes nodes.

# Namespace and serviceaccount labels are used for policy.

- apiGroups: [""]

resources:

- pods

- nodes

- namespaces

- serviceaccounts

verbs:

- watch

- list

- get

# Watch for changes to Kubernetes NetworkPolicies.

- apiGroups: ["networking.k8s.io"]

resources:

- networkpolicies

verbs:

- watch

- list

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-kube-controllers

subjects:

- kind: ServiceAccount

name: calico-kube-controllers

namespace: kube-system

---

---

# Source: calico/templates/calico-node-rbac.yaml

# Include a clusterrole for the calico-node DaemonSet,

# and bind it to the calico-node serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-node

rules:

# The CNI plugin needs to get pods, nodes, and namespaces.

- apiGroups: [""]

resources:

- pods

- nodes

- namespaces

verbs:

- get

- apiGroups: [""]

resources:

- endpoints

- services

verbs:

# Used to discover service IPs for advertisement.

- watch

- list

# Pod CIDR auto-detection on kubeadm needs access to config maps.

- apiGroups: [""]

resources:

- configmaps

verbs:

- get

- apiGroups: [""]

resources:

- nodes/status

verbs:

# Needed for clearing NodeNetworkUnavailable flag.

- patch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: calico-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-node

subjects:

- kind: ServiceAccount

name: calico-node

namespace: kube-system

---

# Source: calico/templates/calico-node.yaml

# This manifest installs the calico-node container, as well

# as the CNI plugins and network config on

# each master and worker node in a Kubernetes cluster.

kind: DaemonSet

apiVersion: apps/v1

metadata:

name: calico-node

namespace: kube-system

labels:

k8s-app: calico-node

spec:

selector:

matchLabels:

k8s-app: calico-node

updateStrategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

template:

metadata:

labels:

k8s-app: calico-node

spec:

nodeSelector:

kubernetes.io/os: linux

hostNetwork: true

tolerations:

# Make sure calico-node gets scheduled on all nodes.

- effect: NoSchedule

operator: Exists

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- effect: NoExecute

operator: Exists

serviceAccountName: calico-node

# Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force

# deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.

terminationGracePeriodSeconds: 0

priorityClassName: system-node-critical

initContainers:

# This container installs the CNI binaries

# and CNI network config file on each node.

- name: install-cni

image: registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.15.3

command: ["/install-cni.sh"]

env:

# Name of the CNI config file to create.

- name: CNI_CONF_NAME

value: "10-calico.conflist"

# The CNI network config to install on each node.

- name: CNI_NETWORK_CONFIG

valueFrom:

configMapKeyRef:

name: calico-config

key: cni_network_config

# The location of the etcd cluster.

- name: ETCD_ENDPOINTS

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_endpoints

# CNI MTU Config variable

- name: CNI_MTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# Prevents the container from sleeping forever.

- name: SLEEP

value: "false"

volumeMounts:

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

- mountPath: /host/etc/cni/net.d

name: cni-net-dir

- mountPath: /calico-secrets

name: etcd-certs

securityContext:

privileged: true

# Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes

# to communicate with Felix over the Policy Sync API.

- name: flexvol-driver

image: registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.15.3

volumeMounts:

- name: flexvol-driver-host

mountPath: /host/driver

securityContext:

privileged: true

containers:

# Runs calico-node container on each Kubernetes node. This

# container programs network policy and routes on each

# host.

- name: calico-node

image: registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.15.3

env:

# The location of the etcd cluster.

- name: ETCD_ENDPOINTS

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_endpoints

# Location of the CA certificate for etcd.

- name: ETCD_CA_CERT_FILE

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_ca

# Location of the client key for etcd.

- name: ETCD_KEY_FILE

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_key

# Location of the client certificate for etcd.

- name: ETCD_CERT_FILE

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_cert

# Set noderef for node controller.

- name: CALICO_K8S_NODE_REF

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# Choose the backend to use.

- name: CALICO_NETWORKING_BACKEND

valueFrom:

configMapKeyRef:

name: calico-config

key: calico_backend

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# Auto-detect the BGP IP address.

- name: IP

value: "autodetect"

# Enable IPIP

- name: CALICO_IPV4POOL_IPIP

value: "Always"

# Enable or Disable VXLAN on the default IP pool.

- name: CALICO_IPV4POOL_VXLAN

value: "Never"

# Set MTU for tunnel device used if ipip is enabled

- name: FELIX_IPINIPMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# Set MTU for the VXLAN tunnel device.

- name: FELIX_VXLANMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# Set MTU for the Wireguard tunnel device.

- name: FELIX_WIREGUARDMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

# Set Felix endpoint to host default action to ACCEPT.

- name: FELIX_DEFAULTENDPOINTTOHOSTACTION

value: "ACCEPT"

# Disable IPv6 on Kubernetes.

- name: FELIX_IPV6SUPPORT

value: "false"

# Set Felix logging to "info"

- name: FELIX_LOGSEVERITYSCREEN

value: "info"

- name: FELIX_HEALTHENABLED

value: "true"

securityContext:

privileged: true

resources:

requests:

cpu: 250m

livenessProbe:

exec:

command:

- /bin/calico-node

- -felix-live

- -bird-live

periodSeconds: 10

initialDelaySeconds: 10

failureThreshold: 6

readinessProbe:

exec:

command:

- /bin/calico-node

- -felix-ready

- -bird-ready

periodSeconds: 10

volumeMounts:

- mountPath: /lib/modules

name: lib-modules

readOnly: true

- mountPath: /run/xtables.lock

name: xtables-lock

readOnly: false

- mountPath: /var/run/calico

name: var-run-calico

readOnly: false

- mountPath: /var/lib/calico

name: var-lib-calico

readOnly: false

- mountPath: /calico-secrets

name: etcd-certs

- name: policysync

mountPath: /var/run/nodeagent

volumes:

# Used by calico-node.

- name: lib-modules

hostPath:

path: /lib/modules

- name: var-run-calico

hostPath:

path: /var/run/calico

- name: var-lib-calico

hostPath:

path: /var/lib/calico

- name: xtables-lock

hostPath:

path: /run/xtables.lock

type: FileOrCreate

# Used to install CNI.

- name: cni-bin-dir

hostPath:

path: /opt/cni/bin

- name: cni-net-dir

hostPath:

path: /etc/cni/net.d

# Mount in the etcd TLS secrets with mode 400.

# See https://kubernetes.io/docs/concepts/configuration/secret/

- name: etcd-certs

secret:

secretName: calico-etcd-secrets

defaultMode: 0400

# Used to create per-pod Unix Domain Sockets

- name: policysync

hostPath:

type: DirectoryOrCreate

path: /var/run/nodeagent

# Used to install Flex Volume Driver

- name: flexvol-driver-host

hostPath:

type: DirectoryOrCreate

path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system

---

# Source: calico/templates/calico-kube-controllers.yaml

# See https://github.com/projectcalico/kube-controllers

apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
spec:
  # The controllers can only have a single active instance.
  replicas: 1
  selector:
    matchLabels:
      k8s-app: calico-kube-controllers
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      tolerations:
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      priorityClassName: system-cluster-critical
      # The controllers must run in the host network namespace so that
      # it isn't governed by policy that would prevent it from working.
      hostNetwork: true
      containers:
        - name: calico-kube-controllers
          image: registry.cn-beijing.aliyuncs.com/dotbalo/kube-controllers:v3.15.3
          env:
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Choose which controllers to run.
            - name: ENABLED_CONTROLLERS
              value: policy,namespace,serviceaccount,workloadendpoint,node
          volumeMounts:
            # Mount in the etcd TLS secrets.
            - mountPath: /calico-secrets
              name: etcd-certs
          readinessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -r
      volumes:
        # Mount in the etcd TLS secrets with mode 400.
        # See https://kubernetes.io/docs/concepts/configuration/secret/
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
            defaultMode: 0400

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-kube-controllers
  namespace: kube-system

---

# Source: calico/templates/calico-typha.yaml

---

# Source: calico/templates/configure-canal.yaml

---

# Source: calico/templates/kdd-crds.yaml

1.2. Modify the configuration file

[root@k8s-master1 calico]# cd /root/k8s-ha-install/calico/

[root@k8s-master1 calico]# sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.2.21:2379,https://192.168.2.22:2379,https://192.168.2.23:2379"#g' calico-etcd.yaml
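
The endpoint list in the command above is one https://IP:2379 entry per master, joined by commas. A minimal sketch of building that string from the three master IPs in /etc/hosts follows. The helper name build_etcd_endpoints is hypothetical and is not part of the original steps; it only prints the value so it can be checked before being pasted into the sed command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join master IPs into the etcd_endpoints value.
# $1 is the etcd client port, the remaining arguments are the master IPs.
build_etcd_endpoints() {
  port="$1"
  shift
  endpoints=""
  for ip in "$@"; do
    # Add a comma only when something is already in the list.
    endpoints="${endpoints}${endpoints:+,}https://${ip}:${port}"
  done
  printf '%s\n' "$endpoints"
}

# The three master IPs used throughout this article.
ETCD_ENDPOINTS=$(build_etcd_endpoints 2379 192.168.2.21 192.168.2.22 192.168.2.23)
echo "$ETCD_ENDPOINTS"
```

If the cluster has a different number of masters, only the argument list changes.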

[root@k8s-master1 calico]# ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

[root@k8s-master1 calico]# ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

[root@k8s-master1 calico]# ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`

[root@k8s-master1 calico]# sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

[root@k8s-master1 calico]# sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml
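
The ETCD_CA, ETCD_CERT and ETCD_KEY variables hold each PEM file as a single line of base64, because values in a Secret's data section must be base64-encoded. The sketch below checks that this encoding survives a round trip. It uses a throwaway stand-in file rather than the real certificates, and the function name encode_one_line is an assumption made for illustration.

```shell
#!/usr/bin/env bash
# Encode a file as single-line base64, the same way the ETCD_* variables are built.
encode_one_line() {
  base64 < "$1" | tr -d '\n'
}

# Stand-in for a PEM file (assumption: this is NOT a real certificate).
sample=$(mktemp)
printf '%s\n' '-----BEGIN CERTIFICATE-----' 'placeholder-body' '-----END CERTIFICATE-----' > "$sample"

encoded=$(encode_one_line "$sample")

# Decoding must return the original bytes; otherwise the Secret would be corrupt.
if printf '%s' "$encoded" | base64 -d | cmp -s - "$sample"; then
  echo "round trip OK"
else
  echo "round trip FAILED" >&2
fi

rm -f "$sample"
```

Running the same comparison against /etc/kubernetes/pki/etcd/etcd-ca.pem and the other two files is a quick check before applying the manifest.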

# Change this to your own Pod subnet

[root@k8s-master1 calico]# POD_SUBNET="172.16.0.0/12"

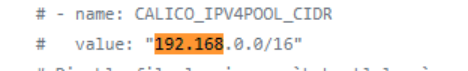

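Note: the next step changes the subnet under CALICO_IPV4POOL_CIDR in calico-etcd.yaml to your own Pod subnet, that is, it replaces 192.168.x.x/16 with your cluster's Pod subnet and uncomments the two lines.

The sed pattern only matches the original default value, so make sure this subnet was not already changed by a global find-and-replace in the file. If it was, change it back before running the command: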

[root@k8s-master1 calico]# sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
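
Because the substitution silently does nothing when the pattern does not match, it can help to preview the result first. The following is a sketch only: preview_pool_cidr is a hypothetical helper that runs the same two substitutions without -i and prints the result, and the sample file contains the two commented lines as they are printed in this article. The real manifest is indented, so the spacing inside the patterns must match your copy of calico-etcd.yaml.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper: $1 = Pod subnet, $2 = manifest path.
# Prints the rewritten file to stdout and leaves the file untouched.
preview_pool_cidr() {
  sed 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"$1"'@g' "$2"
}

POD_SUBNET="172.16.0.0/12"

# Throwaway sample (assumption): the commented-out default pool lines.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# - name: CALICO_IPV4POOL_CIDR
# value: "192.168.0.0/16"
EOF

preview_pool_cidr "$POD_SUBNET" "$sample"

# If the default range is still in the output, the pattern did not match.
if preview_pool_cidr "$POD_SUBNET" "$sample" | grep -q '192.168.0.0/16'; then
  echo "default pool still present, check the pattern spacing" >&2
fi

rm -f "$sample"
```

Pointing the helper at the real calico-etcd.yaml and piping the output through grep -A1 CALICO_IPV4POOL_CIDR shows whether both lines were uncommented.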

1.3. Create the containers

[root@k8s-master1 calico]# kubectl apply -f calico-etcd.yaml

# Check the pod status

[root@k8s-master1 calico]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5f6d4b864b-fqsvh 1/1 Running 1 14h

calico-node-5gdbw 1/1 Running 3 14h

calico-node-9vrmx 1/1 Running 2 14h

calico-node-hc8zr 1/1 Running 1 14h

calico-node-lp6lj 1/1 Running 3 14h

calico-node-xc5c2 1/1 Running 2 14h

coredns-867d46bfc6-2kxqv 1/1 Running 1 14h

metrics-server-595f65d8d5-brww7 1/1 Running 2 14h
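
With many pods in the listing it is easy to miss one that is not ready. As a rough sketch, the function below reads output in the `kubectl get po` column layout on stdin and prints the names of pods whose READY count is incomplete or whose STATUS is not Running. The name not_ready_pods is hypothetical, and the sample rows are made up for the offline example.

```shell
#!/usr/bin/env bash
# Print the names of pods that are not fully ready.
# Input: `kubectl get po` output on stdin (header line first).
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
         split($2, ready, "/")
         if (ready[1] != ready[2] || $3 != "Running") print $1
       }'
}

# Usage against the live cluster (requires kubectl access):
#   kubectl get po -n kube-system | not_ready_pods

# Offline example with made-up rows in the same column layout.
not_ready_pods <<'EOF'
NAME                READY   STATUS             RESTARTS   AGE
calico-node-aaaaa   1/1     Running            0          1m
calico-node-bbbbb   0/1     CrashLoopBackOff   3          1m
EOF
```

Note that this simple check also reports pods in the Completed state, which is normal for finished Jobs; no output means every listed pod is Running and ready.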

9. Install CoreDNS

1. Prepare the configuration file

[root@k8s-master1 ~]# mkdir -p /root/k8s-ha-install/CoreDNS/

[root@k8s-master1 ~]# cd /root/k8s-ha-install/CoreDNS/

[root@k8s-master1 CoreDNS]# vim coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

roleRef: