Week 6: Generative Adversarial Networks

Video lectures

Code practice

08_GAN_double_moon

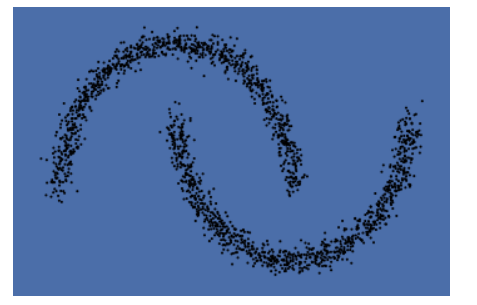

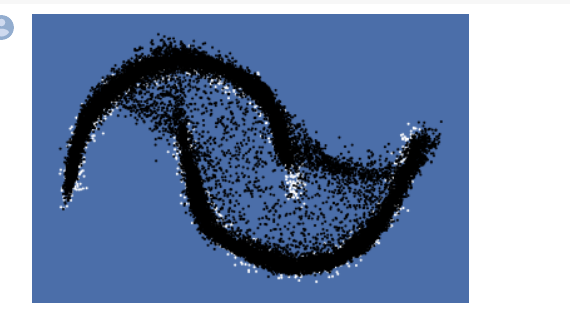

Use sklearn.datasets.make_moons to generate the two-moons dataset and plot the data points.

As the plot shows, the points form two half-moon shapes.
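The data-generation code appears only in the screenshots above, so here is a minimal sketch of that step. The sample count, noise level and seed are assumed values, and the helper name `make_moons_data` is hypothetical; plotting is just `plt.scatter(X[:, 0], X[:, 1])` on the result.

```python
import numpy as np
from sklearn.datasets import make_moons


def make_moons_data(n_samples=1000, noise=0.05, seed=0):
    """Return an (n_samples, 2) float32 array of two-moons points.

    n_samples, noise and seed are assumed values, not the notebook's settings.
    """
    # make_moons returns (X, y); the class labels y are not needed for an unconditional GAN
    X, _ = make_moons(n_samples=n_samples, noise=noise, random_state=seed)
    # float32 matches the default dtype of PyTorch layers
    return X.astype(np.float32)


X = make_moons_data()
print(X.shape)  # (1000, 2)
```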

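Defining the models

As in the video lectures, both the generator and the discriminator are neural networks.

Both networks have a very simple structure:

Generator: 32 ==> 128 ==> 2

Discriminator: 2 ==> 128 ==> 1

The generator outputs a sample, i.e. a coordinate pair (x, y). We want it to map any 32-dimensional noise vector to a point (x, y) that lies on the two half-moons.

The discriminator takes a coordinate pair (x, y) as input. Its last layer is a sigmoid, so the output is a number in (0, 1): the confidence that the sample is real. For a real sample the output should be as close to 1 as possible; for a fake sample, as close to 0 as possible.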
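These two targets are usually turned into losses with binary cross-entropy. The sketch below assumes that choice (the post's loss code is not shown) plus the common non-saturating generator loss; it also shows the values to expect when the discriminator is completely unsure and outputs 0.5 for everything.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one predicted probability p in (0, 1)."""
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def d_loss(d_real, d_fake):
    # The discriminator wants D(real) -> 1 and D(fake) -> 0
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def g_loss(d_fake):
    # Non-saturating generator loss (an assumption here): the generator wants D(fake) -> 1
    return bce(d_fake, 1.0)


# A discriminator that cannot tell real from fake outputs 0.5 for both
print(round(d_loss(0.5, 0.5), 4))  # 1.3863, i.e. 2 ln 2
print(round(g_loss(0.5), 4))       # 0.6931, i.e. ln 2
```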

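```python
import torch
import torch.nn as nn

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

z_dim = 32
hidden_dim = 128

# Generator: 32-dim noise -> 128 hidden units -> (x, y) point
net_G = nn.Sequential(
    nn.Linear(z_dim, hidden_dim),
    nn.ReLU(),
    nn.Linear(hidden_dim, 2),
)

# Discriminator: (x, y) point -> 128 hidden units -> probability that the point is real
net_D = nn.Sequential(
    nn.Linear(2, hidden_dim),
    nn.ReLU(),
    nn.Linear(hidden_dim, 1),
    nn.Sigmoid(),
)

# Move both networks to the GPU (or CPU)
net_G = net_G.to(device)
net_D = net_D.to(device)

# One Adam optimizer per network
optimizer_G = torch.optim.Adam(net_G.parameters(), lr=0.0001)
optimizer_D = torch.optim.Adam(net_D.parameters(), lr=0.0001)
```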

Training

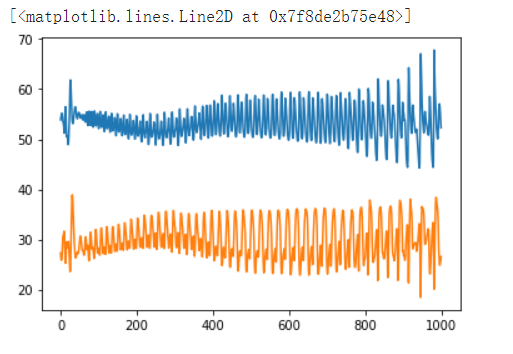
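The training loop is not reproduced in the text, so here is a minimal sketch of one update step, assuming `nn.BCELoss` and the usual alternating scheme: first update D on a real batch and a detached fake batch, then update G so that D labels its fakes as real. The function name `train_step` is hypothetical, and the random batch is only a stand-in for the two-moons points.

```python
import torch
import torch.nn as nn


def train_step(net_G, net_D, optimizer_G, optimizer_D, real, z_dim, device="cpu"):
    """One GAN update on a batch of real points; returns (d_loss, g_loss) as floats."""
    criterion = nn.BCELoss()
    batch_size = real.size(0)
    ones = torch.ones(batch_size, 1, device=device)
    zeros = torch.zeros(batch_size, 1, device=device)

    # 1) Update D: real -> 1, fake -> 0. detach() keeps this loss from reaching G.
    z = torch.randn(batch_size, z_dim, device=device)
    fake = net_G(z)
    loss_D = criterion(net_D(real), ones) + criterion(net_D(fake.detach()), zeros)
    optimizer_D.zero_grad()
    loss_D.backward()
    optimizer_D.step()

    # 2) Update G: push the updated D to label the fakes as real
    loss_G = criterion(net_D(fake), ones)
    optimizer_G.zero_grad()
    loss_G.backward()
    optimizer_G.step()
    return loss_D.item(), loss_G.item()


torch.manual_seed(0)
z_dim, hidden_dim = 32, 128
net_G = nn.Sequential(nn.Linear(z_dim, hidden_dim), nn.ReLU(), nn.Linear(hidden_dim, 2))
net_D = nn.Sequential(nn.Linear(2, hidden_dim), nn.ReLU(), nn.Linear(hidden_dim, 1), nn.Sigmoid())
optimizer_G = torch.optim.Adam(net_G.parameters(), lr=0.0001)
optimizer_D = torch.optim.Adam(net_D.parameters(), lr=0.0001)

real = torch.randn(64, 2)  # stand-in batch; use make_moons points in practice
d, g = train_step(net_G, net_D, optimizer_G, optimizer_D, real, z_dim)
print(d, g)
```

Calling `train_step` once per batch inside an epoch loop gives the full training procedure.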

```
Epoch 50 , D loss: 55.03215408325195, G loss: 29.4384765625
Epoch 100 , D loss: 52.10868835449219, G loss: 28.256412506103516
Epoch 150 , D loss: 54.99608612060547, G loss: 29.113525390625
Epoch 200 , D loss: 54.22781753540039, G loss: 30.472549438476562
Epoch 250 , D loss: 48.84559631347656, G loss: 31.050628662109375
Epoch 300 , D loss: 51.449180603027344, G loss: 32.68932342529297
Epoch 350 , D loss: 50.3018684387207, G loss: 29.780363082885742
Epoch 400 , D loss: 51.828277587890625, G loss: 31.6911563873291
Epoch 450 , D loss: 51.598793029785156, G loss: 28.16646957397461
Epoch 500 , D loss: 54.86663055419922, G loss: 34.15589904785156
Epoch 550 , D loss: 50.41839599609375, G loss: 35.44386291503906
Epoch 600 , D loss: 50.13985061645508, G loss: 33.62955856323242
Epoch 650 , D loss: 55.76271057128906, G loss: 27.325441360473633
Epoch 700 , D loss: 58.96855163574219, G loss: 23.549272537231445
Epoch 750 , D loss: 56.18208312988281, G loss: 24.563640594482422
Epoch 800 , D loss: 52.67356872558594, G loss: 26.480838775634766
Epoch 850 , D loss: 53.760040283203125, G loss: 26.145021438598633
Epoch 900 , D loss: 55.616817474365234, G loss: 27.282987594604492
Epoch 950 , D loss: 55.274330139160156, G loss: 36.21284484863281
```

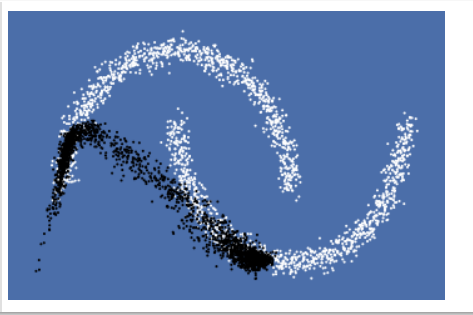

Generating fake samples with the generator

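Check whether the generated samples follow the two-moons distribution: the white points are the original real samples and the black points are the samples produced by the generator.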
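Sampling from a trained generator only needs fresh noise and a forward pass without gradient tracking. A minimal sketch, assuming the `net_G` architecture above; the helper name `sample_points` is hypothetical, and the points can then be drawn with `plt.scatter(pts[:, 0], pts[:, 1], c="black")`.

```python
import torch
import torch.nn as nn


@torch.no_grad()  # no gradients are needed just to draw samples
def sample_points(net_G, n, z_dim, device="cpu"):
    """Draw n points from the generator as an (n, 2) tensor on the CPU."""
    was_training = net_G.training
    net_G.eval()  # this net has no dropout/BN, but eval() is a safe habit for sampling
    z = torch.randn(n, z_dim, device=device)
    points = net_G(z).cpu()
    net_G.train(was_training)  # restore the previous mode
    return points


z_dim, hidden_dim = 32, 128
net_G = nn.Sequential(nn.Linear(z_dim, hidden_dim), nn.ReLU(), nn.Linear(hidden_dim, 2))
pts = sample_points(net_G, 500, z_dim)
print(pts.shape)  # torch.Size([500, 2])
```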

The result is still far off, so I raised the learning rate to 0.001 and increased batch_size to 250.
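Both changes are one-argument edits: `lr` in the optimizers and `batch_size` in the data loader. The sketch below assumes the data is fed through a `DataLoader` over a `TensorDataset` with 1000 points; the post does not show how batching is done, and the random tensor is only a stand-in for the two-moons data.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

z_dim, hidden_dim = 32, 128
X = torch.randn(1000, 2)  # stand-in for the two-moons points; 1000 samples is an assumed size

# batch_size raised to 250: with 1000 points this gives 4 batches per epoch
loader = DataLoader(TensorDataset(X), batch_size=250, shuffle=True)

net_G = nn.Sequential(nn.Linear(z_dim, hidden_dim), nn.ReLU(), nn.Linear(hidden_dim, 2))
net_D = nn.Sequential(nn.Linear(2, hidden_dim), nn.ReLU(), nn.Linear(hidden_dim, 1), nn.Sigmoid())

# learning rate raised from 0.0001 to 0.001 for both networks
optimizer_G = torch.optim.Adam(net_G.parameters(), lr=0.001)
optimizer_D = torch.optim.Adam(net_D.parameters(), lr=0.001)

print(len(loader))  # 4
```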

```
Epoch 50 , D loss: 10.053263664245605, G loss: 6.621474742889404
Epoch 100 , D loss: 10.277099609375, G loss: 7.5574212074279785
Epoch 150 , D loss: 8.722528457641602, G loss: 6.901734352111816
Epoch 200 , D loss: 11.037171363830566, G loss: 5.7993669509887695
Epoch 250 , D loss: 8.147501945495605, G loss: 7.391366004943848
Epoch 300 , D loss: 9.909518241882324, G loss: 8.469836235046387
Epoch 350 , D loss: 10.291149139404297, G loss: 5.629768371582031
Epoch 400 , D loss: 10.663004875183105, G loss: 6.259310722351074
Epoch 450 , D loss: 11.687268257141113, G loss: 5.398467063903809
Epoch 500 , D loss: 11.070916175842285, G loss: 5.739245891571045
Epoch 550 , D loss: 10.916838645935059, G loss: 5.562057018280029
Epoch 600 , D loss: 10.878161430358887, G loss: 5.665527820587158
Epoch 650 , D loss: 10.889522552490234, G loss: 5.656742095947266
Epoch 700 , D loss: 10.898103713989258, G loss: 5.695590972900391
Epoch 750 , D loss: 10.959508895874023, G loss: 5.653082847595215
Epoch 800 , D loss: 10.988847732543945, G loss: 5.484369277954102
Epoch 850 , D loss: 10.999717712402344, G loss: 5.727382659912109
Epoch 900 , D loss: 10.99517822265625, G loss: 5.487709999084473
Epoch 950 , D loss: 11.027498245239258, G loss: 5.640206336975098
```

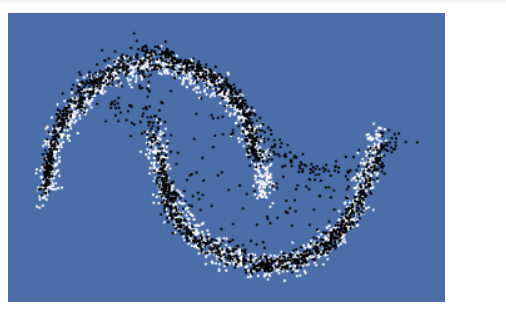

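The losses are much lower and the generator has improved considerably. (One caveat: if the printed loss is summed over all batches of an epoch, a larger batch size lowers it simply because each epoch has fewer batches, so the scatter plot is the more reliable comparison.)

Generating more samples, the results look quite good!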

09_CGAN_DCGAN_mnist

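First, implement CGAN. The discriminator and generator architectures are very simple:

Discriminator: (784 + 10) ==> 512 ==> 256 ==> 1

Generator: (100 + 10) ==> 128 ==> 256 ==> 512 ==> 784

Apart from the extra 10-dimensional label input to each network, this is exactly the same as a plain GAN. The implementation: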
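The "10-dimensional label information" is typically a one-hot vector of the digit class, appended to the flattened image for the discriminator and to the noise for the generator. A minimal sketch of building those inputs, assuming one-hot labels (the encoding code is not shown in the post) and random stand-in tensors:

```python
import torch
import torch.nn.functional as F

batch_size, z_dim = 4, 100
labels = torch.tensor([3, 0, 7, 9])              # digit classes of the batch
c = F.one_hot(labels, num_classes=10).float()    # (4, 10) one-hot condition

images = torch.randn(batch_size, 1, 28, 28)      # stand-in MNIST batch
d_input = torch.cat([images.view(batch_size, -1), c], dim=1)  # (4, 784 + 10)

z = torch.randn(batch_size, z_dim)
g_input = torch.cat([z, c], dim=1)               # (4, 100 + 10)
print(d_input.shape, g_input.shape)  # torch.Size([4, 794]) torch.Size([4, 110])
```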

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    '''Fully connected discriminator for 1x28x28 MNIST; the inputs are the image and its class label'''

    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(28*28+10, 512),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(512, 256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(256, 1),
            nn.Sigmoid()
        )

    def forward(self, x, c):
        # Flatten the image to 784 values and append the 10-dim label
        x = x.view(x.size(0), -1)
        validity = self.model(torch.cat([x, c], -1))
        return validity


class Generator(nn.Module):
    '''Fully connected generator for 1x28x28 MNIST; the inputs are noise and a class label'''

    def __init__(self, z_dim):
        super(Generator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(z_dim+10, 128),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(128, 256),
            # The 2nd positional argument of BatchNorm1d is eps, so 0.8 sets eps, not momentum
            nn.BatchNorm1d(256, 0.8),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(256, 512),
            nn.BatchNorm1d(512, 0.8),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(in_features=512, out_features=28*28),
            nn.Tanh()  # pixel values in [-1, 1]
        )

    def forward(self, z, c):
        # Concatenate noise and label, then reshape the 784 outputs into an image
        x = self.model(torch.cat([z, c], dim=1))
        x = x.view(-1, 1, 28, 28)
        return x
```
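The only change to the training step compared with a plain GAN is that the label goes into both networks. Below is a minimal sketch of one conditional update, assuming BCE losses and that the fakes are generated for the same labels as the real batch; `cgan_step`, `TinyG` and `TinyD` are hypothetical names, and the two tiny stand-in networks only mimic the `G(z, c)` / `D(x, c)` interface of the classes above so the block runs on its own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def cgan_step(G, D, opt_G, opt_D, images, labels, z_dim):
    """One conditional GAN update; G(z, c) and D(x, c) both receive the one-hot label c."""
    criterion = nn.BCELoss()
    n = images.size(0)
    c = F.one_hot(labels, num_classes=10).float()
    real_t, fake_t = torch.ones(n, 1), torch.zeros(n, 1)

    # Discriminator: (real image, its label) -> 1, (fake image, same label) -> 0
    fake = G(torch.randn(n, z_dim), c)
    loss_D = criterion(D(images, c), real_t) + criterion(D(fake.detach(), c), fake_t)
    opt_D.zero_grad()
    loss_D.backward()
    opt_D.step()

    # Generator: make D accept the fakes as real images of the requested digits
    loss_G = criterion(D(fake, c), real_t)
    opt_G.zero_grad()
    loss_G.backward()
    opt_G.step()
    return loss_D.item(), loss_G.item()


class TinyG(nn.Module):  # hypothetical stand-in with the same interface as Generator
    def __init__(self, z_dim):
        super().__init__()
        self.fc = nn.Linear(z_dim + 10, 28 * 28)

    def forward(self, z, c):
        return torch.tanh(self.fc(torch.cat([z, c], dim=1))).view(-1, 1, 28, 28)


class TinyD(nn.Module):  # hypothetical stand-in with the same interface as Discriminator
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(28 * 28 + 10, 1)

    def forward(self, x, c):
        return torch.sigmoid(self.fc(torch.cat([x.view(x.size(0), -1), c], dim=-1)))


torch.manual_seed(0)
z_dim = 100
G, D = TinyG(z_dim), TinyD()
opt_G = torch.optim.Adam(G.parameters(), lr=0.0002)
opt_D = torch.optim.Adam(D.parameters(), lr=0.0002)
images = torch.rand(8, 1, 28, 28) * 2 - 1  # stand-in batch scaled to [-1, 1]
labels = torch.randint(0, 10, (8,))
d, g = cgan_step(G, D, opt_G, opt_D, images, labels, z_dim)
print(d, g)
```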


DCGAN

The discriminator and generator follow the same design as before, except that they use convolutional layers.
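To see why the discriminator below ends in a `Linear` layer with `in_features=128`, this sketch applies the `nn.Conv2d` output-size formula, floor((size + 2*padding - kernel) / stride) + 1 with dilation 1, to its four conv layers. The helper names `conv2d_out` and `trace` are hypothetical. The feature map collapses to 1x1 for a 28x28 input, and a 32x32 input also ends at 1x1.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output height/width of nn.Conv2d with dilation=1."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the four conv layers in D_dcgan
layers = [(3, 2, 1), (3, 2, 1), (3, 2, 1), (4, 1, 0)]


def trace(size):
    """Spatial size after each conv layer, starting from the input size."""
    sizes = [size]
    for k, s, p in layers:
        sizes.append(conv2d_out(sizes[-1], k, s, p))
    return sizes


print(trace(28))  # [28, 14, 7, 4, 1]
print(trace(32))  # [32, 16, 8, 4, 1]
```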

```python
import torch
import torch.nn as nn


class D_dcgan(nn.Module):
    '''Strided-convolution discriminator'''

    def __init__(self):
        super(D_dcgan, self).__init__()
        self.conv = nn.Sequential(
            # 1st strided conv layer: no BN, LeakyReLU activation
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3, stride=2, padding=1),
            nn.LeakyReLU(0.2, inplace=True),
            # 2nd strided conv layer: BN + LeakyReLU
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(32),
            nn.LeakyReLU(0.2, inplace=True),
            # 3rd strided conv layer: BN + LeakyReLU
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.LeakyReLU(0.2, inplace=True),
            # 4th conv layer: BN + LeakyReLU; reduces the 4x4 feature map to 128x1x1
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=4, stride=1),
            nn.BatchNorm2d(128),
            nn.LeakyReLU(0.2, inplace=True)
        )
        # Fully connected layer + Sigmoid
        self.linear = nn.Sequential(nn.Linear(in_features=128, out_features=1), nn.Sigmoid())

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)  # (N, 128, 1, 1) -> (N, 128)
        validity = self.linear(x)
        return validity


class G_dcgan(nn.Module):
    '''Transposed-convolution generator'''

    def __init__(self, z_dim):
        super(G_dcgan, self).__init__()
        self.z_dim = z_dim
        # Layer 1: linearly map the input to a 256x4x4 tensor for the transposed convolutions
        self.linear = nn.Linear(self.z_dim, 4*4*256)
        self.model = nn.Sequential(
            # Layer 2: transposed conv + BN + ReLU (4x4 -> 9x9)
            nn.ConvTranspose2d(in_channels=256, out_channels=128, kernel_size=3, stride=2, padding=0),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            # Layer 3: transposed conv + BN + ReLU (9x9 -> 17x17)
            nn.ConvTranspose2d(in_channels=128, out_channels=64, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            # Layer 4: no BN, Tanh activation (17x17 -> 32x32)
            nn.ConvTranspose2d(in_channels=64, out_channels=1, kernel_size=4, stride=2, padding=2),
            nn.Tanh()
        )

    def forward(self, z):
        # Linearly transform the noise and reshape it to 256x4x4
        x = self.linear(z)
        x = x.view([x.size(0), 256, 4, 4])
        # Generate the image
        x = self.model(x)
        return x
```
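The generator's output size follows from the `nn.ConvTranspose2d` formula (size - 1) * stride - 2 * padding + kernel + output_padding (dilation 1). Tracing the three layers of `G_dcgan` shows that, with these settings, it produces 1x32x32 images rather than 28x28; presumably the MNIST images are resized to 32x32 when loaded for DCGAN, although that step is not shown in this post. The helper name `conv_transpose2d_out` is hypothetical.

```python
def conv_transpose2d_out(size, kernel, stride=1, padding=0, output_padding=0):
    """Output height/width of nn.ConvTranspose2d with dilation=1."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# (kernel, stride, padding) of the three transposed-conv layers in G_dcgan
layers = [(3, 2, 0), (3, 2, 1), (4, 2, 2)]

size = 4  # spatial size after self.linear and view(..., 256, 4, 4)
sizes = [size]
for k, s, p in layers:
    size = conv_transpose2d_out(size, k, s, p)
    sizes.append(size)
print(sizes)  # [4, 9, 17, 32]
```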

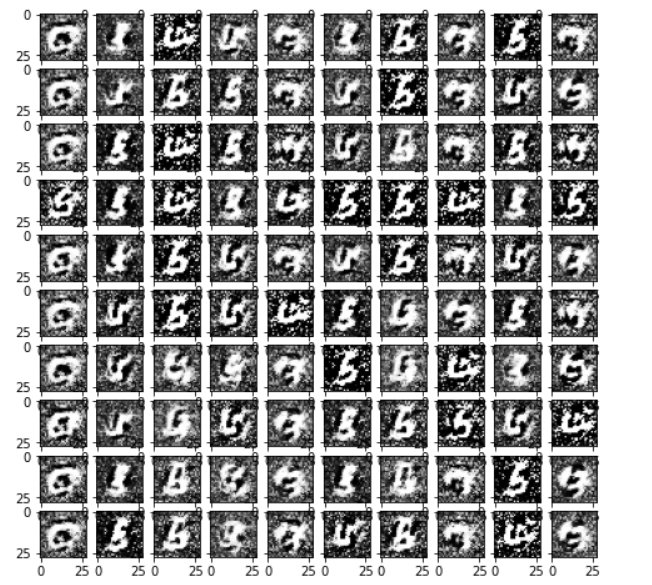

Training results:

```
[Epoch 1/30] [D loss: 0.982243] [G loss: 1.162981]
[Epoch 2/30] [D loss: 0.859965] [G loss: 1.234519]
[Epoch 3/30] [D loss: 0.710922] [G loss: 1.406738]
[Epoch 4/30] [D loss: 0.622805] [G loss: 1.822650]
[Epoch 5/30] [D loss: 0.625538] [G loss: 1.830428]
[Epoch 6/30] [D loss: 0.734130] [G loss: 1.342962]
[Epoch 7/30] [D loss: 0.581314] [G loss: 1.962614]
[Epoch 8/30] [D loss: 0.303252] [G loss: 2.160202]
[Epoch 9/30] [D loss: 0.258141] [G loss: 2.724105]
[Epoch 10/30] [D loss: 0.302812] [G loss: 2.840896]
[Epoch 11/30] [D loss: 0.202665] [G loss: 3.437228]
[Epoch 12/30] [D loss: 0.183814] [G loss: 2.709256]
[Epoch 13/30] [D loss: 0.199151] [G loss: 2.663381]
[Epoch 14/30] [D loss: 0.124814] [G loss: 4.076852]
[Epoch 15/30] [D loss: 0.063291] [G loss: 5.015116]
[Epoch 16/30] [D loss: 0.038880] [G loss: 4.663543]
[Epoch 17/30] [D loss: 0.050136] [G loss: 4.281735]
[Epoch 18/30] [D loss: 0.094745] [G loss: 5.899310]
[Epoch 19/30] [D loss: 0.236553] [G loss: 4.483749]
[Epoch 20/30] [D loss: 0.420699] [G loss: 2.239360]
[Epoch 21/30] [D loss: 0.022436] [G loss: 5.435596]
[Epoch 22/30] [D loss: 0.064717] [G loss: 5.401882]
[Epoch 23/30] [D loss: 0.124545] [G loss: 4.375309]
[Epoch 24/30] [D loss: 0.034703] [G loss: 5.206477]
[Epoch 25/30] [D loss: 0.025128] [G loss: 5.225276]
[Epoch 26/30] [D loss: 0.247204] [G loss: 3.576170]
[Epoch 27/30] [D loss: 0.011992] [G loss: 6.023228]
[Epoch 28/30] [D loss: 0.077899] [G loss: 4.900823]
[Epoch 29/30] [D loss: 0.078687] [G loss: 4.758388]
```

Generated results:
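Because the generators end in `Tanh`, their outputs lie in [-1, 1] and must be mapped back to [0, 1] before being displayed. A minimal pure-PyTorch sketch that does this and tiles a batch into a single grayscale image for `plt.imshow(grid, cmap="gray")`; the helper name `to_grid` is hypothetical, and `torchvision.utils.make_grid` / `save_image` are the library alternatives if torchvision is installed.

```python
import torch


def to_grid(images, nrow=8):
    """Tile an (N, 1, H, W) batch of Tanh outputs in [-1, 1] into one 2-D image in [0, 1]."""
    n, _, h, w = images.shape
    rows = (n + nrow - 1) // nrow            # number of grid rows needed
    imgs = (images.detach().cpu() + 1) / 2   # undo the Tanh range: [-1, 1] -> [0, 1]
    grid = torch.zeros(rows * h, nrow * w)   # unused cells stay black
    for i in range(n):
        r, col = divmod(i, nrow)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = imgs[i, 0]
    return grid


fake = torch.tanh(torch.randn(20, 1, 28, 28))  # stand-in for generator output
grid = to_grid(fake, nrow=10)
print(grid.shape)  # torch.Size([56, 280])
```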

浙公网安备 33010602011771号
