Kubernetes: Service & Ingress

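In Kubernetes, Pods carry the application, and we can reach the application through a Pod. However, Pods come and go as the workload scales out and in, and their IP addresses are not fixed, which means we cannot rely on Pod IPs for access.

To solve this problem, Kubernetes provides the Service resource. A Service fronts the set of Pods that belong to the same service and presents a single entry point. Requests sent to that entry point are load-balanced and forwarded to the containers in the backend Pods.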

kube-proxy

In many ways a Service is only a concept. The component that actually does the work is the kube-proxy process, which runs on every Node. When a Service is created, its definition is written to etcd through the api-server. kube-proxy watches for such Service changes and converts the latest Service information into the corresponding access rules.

kube-proxy currently supports three working modes (userspace, iptables and ipvs), and we can switch between them:

    # Enable ipvs: edit the ConfigMap and set mode: "ipvs"
    [root@master /]# kubectl edit cm kube-proxy -n kube-system
    configmap/kube-proxy edited

    # List the existing kube-proxy Pods
    [root@master /]# kubectl get pod -l k8s-app=kube-proxy -n kube-system
    NAME               READY   STATUS    RESTARTS   AGE
    kube-proxy-4pc5n   1/1     Running   0          2d2h
    kube-proxy-gw67r   1/1     Running   0          2d3h
    kube-proxy-rqbgm   1/1     Running   0          2d2h

    # Delete the existing kube-proxy Pods so they pick up the new configuration
    [root@master /]# kubectl delete pod -l k8s-app=kube-proxy -n kube-system
    pod "kube-proxy-4pc5n" deleted
    pod "kube-proxy-gw67r" deleted
    pod "kube-proxy-rqbgm" deleted

    # List the Pods that were recreated automatically after the deletion
    [root@master /]# kubectl get pod -l k8s-app=kube-proxy -n kube-system
    NAME               READY   STATUS    RESTARTS   AGE
    kube-proxy-4rl6z   1/1     Running   0          5s
    kube-proxy-65bt7   1/1     Running   0          5s
    kube-proxy-w94rl   1/1     Running   0          4s
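For reference, the part of the kube-proxy ConfigMap that the kubectl edit step changes typically looks like the fragment below. This is only a sketch: it assumes a kubeadm-style cluster where the configuration lives under the config.conf key, and it omits every other field. The layout in your cluster may differ.

```yaml
# Fragment of the kube-proxy ConfigMap in kube-system (assumed kubeadm layout).
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-proxy
  namespace: kube-system
data:
  config.conf: |
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    # An empty value ("") lets kube-proxy pick its default mode;
    # "ipvs" switches it to ipvs mode.
    mode: "ipvs"
```

The ipvs kernel modules also need to be available on the nodes for this mode to take effect.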

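userspace mode

In this mode kube-proxy opens a listening port, and requests sent to the Cluster IP are redirected by iptables rules to that port.

kube-proxy then picks a backend Pod according to its LB algorithm, establishes a connection to it, and forwards the request to that Pod.

In this mode kube-proxy acts as a layer-4 load balancer. Because kube-proxy runs in userspace, forwarding adds data copies between kernel space and user space. The mode is fairly stable, but its efficiency is low.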

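iptables mode

In this mode kube-proxy creates iptables rules for every Pod behind a Service, so requests sent to the Cluster IP are redirected straight to a Pod IP. kube-proxy no longer acts as a layer-4 load balancer here; it only creates the iptables rules. The advantage over userspace mode is higher efficiency. The drawbacks are that it cannot offer flexible LB strategies and cannot retry when a backend Pod is unavailable.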

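ipvs mode

ipvs mode is similar to iptables mode: kube-proxy watches for Pod changes and creates the corresponding ipvs rules. Compared with iptables, ipvs forwards more efficiently, and it also supports more LB algorithms.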
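As an illustration of the extra LB algorithms, the ipvs scheduler can be chosen in the kube-proxy configuration. The fragment below is a minimal sketch of the relevant KubeProxyConfiguration fields, with everything else omitted. The value shown is just one example, not a recommendation.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  # "rr" is round robin; "lc" (least connection) is another
  # commonly listed option.
  scheduler: "rr"
```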

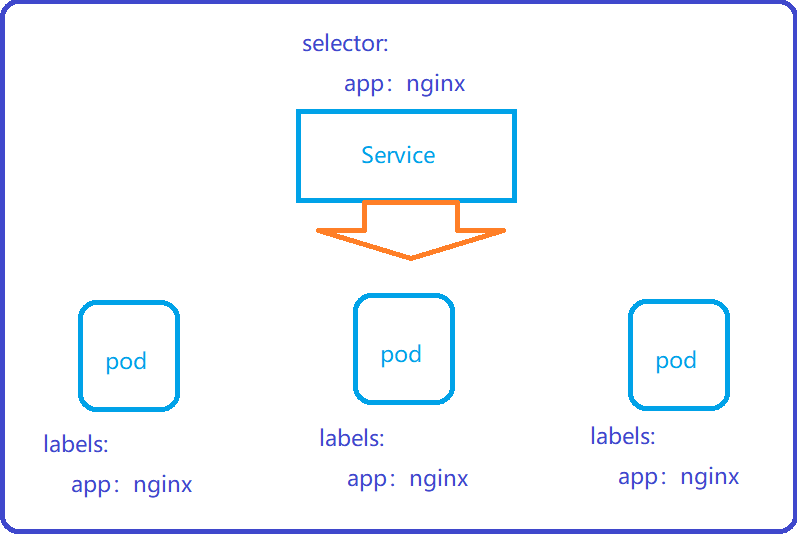

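The relationship between Pod and Service

Earlier we saw how a Controller is associated with its Pods. A Service finds its Pods in exactly the same way: through labels and a label selector.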

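Common Service types

- Run kubectl expose --help to see the help text for exposing a service; near the bottom you will find the following keywords
- ClusterIP
  - Generally used inside the cluster and not reachable from outside; this is the default type
- NodePort
  - Used when an application must be reachable from outside the cluster, for example a front-end Pod that has to be exposed externally
- LoadBalancer
  - Uses an external load balancer to distribute traffic to the Service; note that this type needs support from an external cloud environment
- ExternalName
  - Brings a service from outside the cluster into the cluster so that it can be used directly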

Test environment: before the demonstrations, set up the following test environment.

Manifest file: nginx-deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx:1.17.1
              ports:
                - containerPort: 80

    [root@master k8s]# kubectl apply -f nginx-deploy.yaml
    deployment.apps/nginx-deploy created

    # Apart from the built-in kubernetes ClusterIP Service, no other Service has been created
    [root@master k8s]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d4h

    # The three replicas are up; note the IP column
    [root@master k8s]# kubectl get pods -o wide
    NAME                            READY   STATUS    RESTARTS   AGE   IP           NODE    NOMINATED NODE   READINESS GATES
    nginx-deploy-7d7dd5499b-7nfkz   1/1     Running   0          19m   10.244.1.5   node1   <none>           <none>
    nginx-deploy-7d7dd5499b-9c5lf   1/1     Running   0          19m   10.244.1.6   node1   <none>           <none>
    nginx-deploy-7d7dd5499b-lwcq6   1/1     Running   0          19m   10.244.2.6   node2   <none>           <none>

    # Enter each of the three containers in turn (the Pods live in the default namespace)
    [root@master k8s]# kubectl exec -it nginx-deploy-7d7dd5499b-7nfkz -- /bin/sh

    # Give each container its own nginx index.html, one line per container
    echo "master hello !" > /usr/share/nginx/html/index.html
    echo "master master hello !" > /usr/share/nginx/html/index.html
    echo "master master master hello !" > /usr/share/nginx/html/index.html

    # Using the IPs from kubectl get pods -o wide, we can reach each nginx in turn
    [root@master k8s]# curl 10.244.1.5
    master hello !
    [root@master k8s]# curl 10.244.1.6
    master master hello !
    [root@master k8s]# curl 10.244.2.6
    master master master hello !

ClusterIP

Create a Service resource: service_cluster_ip.yaml

- Note: the selector matches resources whose label has key app and value nginx-pod, that is, the three nginx Pods we deployed above

    apiVersion: v1
    kind: Service
    metadata:
      name: service-clusterip
    spec:
      selector:
        app: nginx-pod
      type: ClusterIP
      ports:
        - port: 80 # Service port
          targetPort: 80 # port of the proxied Pods

    # Deploy the Service resource
    [root@master k8s]# kubectl apply -f service_cluster_ip.yaml
    service/service-clusterip created

    # List the Services: the ClusterIP of service-clusterip is 10.109.230.1
    [root@master k8s]# kubectl get service
    NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    kubernetes          ClusterIP   10.96.0.1      <none>        443/TCP   5d19h
    service-clusterip   ClusterIP   10.109.230.1   <none>        80/TCP    23s

    # Show the details of this Service
    [root@master k8s]# kubectl describe service service-clusterip
    Name:              service-clusterip
    Namespace:         default
    Labels:            <none>
    Annotations:
    Selector:          app=nginx-pod
    Type:              ClusterIP
    IP:                10.109.230.1
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.1.5:80,10.244.1.6:80,10.244.2.6:80
    Session Affinity:  None
    Events:            <none>

    # Access the ClusterIP exposed by the Service. The requests are load-balanced across
    # the three nginx Pods, but this address only works from nodes inside the cluster
    [root@master k8s]# curl 10.109.230.1
    master master master hello !
    [root@master k8s]# curl 10.109.230.1
    master master hello !
    [root@master k8s]# curl 10.109.230.1
    master hello !
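The Session Affinity: None line in the describe output means that consecutive requests may land on different Pods, as the curl calls show. If requests from the same client IP should stick to one Pod, the Service spec has a sessionAffinity field. The following is a minimal sketch based on the service-clusterip manifest; it is not part of the original walkthrough.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-clusterip
spec:
  selector:
    app: nginx-pod
  type: ClusterIP
  # ClientIP pins requests from one client IP to the same Pod;
  # the default value is None.
  sessionAffinity: ClientIP
  ports:
    - port: 80
      targetPort: 80
```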

Endpoints

- Notice the Endpoints entry in the Service's detailed description: Endpoints: 10.244.1.5:80,10.244.1.6:80,10.244.2.6:80
- Endpoints is a Kubernetes resource object stored in etcd. It records the access addresses of all Pods behind a Service, and it is generated from the selector in the Service manifest.

    # Show the detailed description
    [root@master k8s]# kubectl describe service service-clusterip
    Name:              service-clusterip
    Namespace:         default
    Labels:            <none>
    Annotations:
    Selector:          app=nginx-pod
    Type:              ClusterIP
    IP:                10.109.230.1
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.1.5:80,10.244.1.6:80,10.244.2.6:80
    Session Affinity:  None
    Events:            <none>

    # Show the Pod information: the Pod IPs match the Endpoints above
    [root@master k8s]# kubectl get pods -o wide
    NAME                            READY   STATUS    RESTARTS   AGE     IP           NODE    NOMINATED NODE   READINESS GATES
    nginx-deploy-7d7dd5499b-7nfkz   1/1     Running   0          3d15h   10.244.1.5   node1   <none>           <none>
    nginx-deploy-7d7dd5499b-9c5lf   1/1     Running   0          3d15h   10.244.1.6   node1   <none>           <none>
    nginx-deploy-7d7dd5499b-lwcq6   1/1     Running   0          3d15h   10.244.2.6   node2   <none>           <none>
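To make the Endpoints resource more concrete, the object that Kubernetes maintains for service-clusterip would look roughly like the sketch below. It is simplified: the real object carries additional fields (for example references back to each Pod), and the addresses are simply the three Pod IPs from the output above.

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: service-clusterip   # same name as the Service it belongs to
  namespace: default
subsets:
  - addresses:
      - ip: 10.244.1.5
      - ip: 10.244.1.6
      - ip: 10.244.2.6
    ports:
      - port: 80
        protocol: TCP
```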

NodePort

Create a Service resource: service_node_port.yaml

- Note: the selector matches resources whose label has key app and value nginx-pod, that is, the three nginx Pods we deployed above

    apiVersion: v1
    kind: Service
    metadata:
      name: service-nodeport
    spec:
      selector:
        app: nginx-pod
      type: NodePort # Service type
      ports:
        - port: 80
          nodePort: 30003 # node port to bind (default range: 30000-32767); allocated automatically if omitted
          targetPort: 80

    [root@master k8s]# kubectl apply -f service_node_port.yaml
    service/service-nodeport created

    # The newly deployed service-nodeport is exposed externally on port 30003
    [root@master k8s]# kubectl get service
    NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    kubernetes          ClusterIP   10.96.0.1      <none>        443/TCP        5d20h
    service-clusterip   ClusterIP   10.109.230.1   <none>        80/TCP         80m
    service-nodeport    NodePort    10.103.77.9    <none>        80:30003/TCP   23s

    # Show the detailed description
    [root@master k8s]# kubectl describe service service-nodeport
    Name:                     service-nodeport
    Namespace:                default
    Labels:                   <none>
    Annotations:
    Selector:                 app=nginx-pod
    Type:                     NodePort
    IP:                       10.103.77.9
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  30003/TCP
    Endpoints:                10.244.1.5:80,10.244.1.6:80,10.244.2.6:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
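As the comment in the manifest notes, nodePort is optional. A minimal sketch of the same Service without a fixed port is shown below; the name service-nodeport-auto is hypothetical and only used for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-nodeport-auto   # hypothetical name
spec:
  selector:
    app: nginx-pod
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      # nodePort is omitted, so a free port from the default
      # range 30000-32767 is allocated automatically.
```

The allocated port then appears in the PORT(S) column of kubectl get service.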

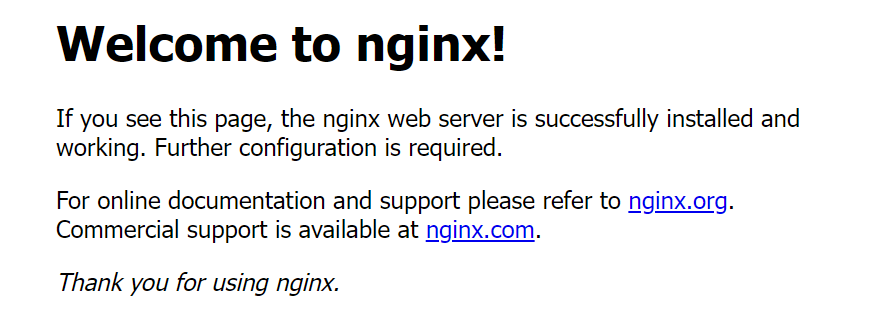

We can now open a browser and access the application using a node's IP address plus the port exposed through nodePort.

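LoadBalancer

Nodes are usually deployed on a private network and their IP addresses are not exposed publicly. To reach them from outside, there are two approaches:

- Pick a machine in the cluster that is reachable from the public network, install Nginx on it as a reverse proxy, and manually add the reachable nodes to the Nginx configuration
- Use a LoadBalancer: on top of a Service that already has a ClusterIP and is already opened through NodePort, request a load balancer from the cloud provider, which forwards traffic to the Service exposed as NodePort
  - Outside a cloud environment this is relatively cumbersome to set up, so it is generally not used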
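For completeness, a LoadBalancer Service differs from the NodePort manifest only in its type. The sketch below assumes a cluster that runs on a cloud provider able to provision load balancers; the name service-loadbalancer is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-loadbalancer   # hypothetical name
spec:
  selector:
    app: nginx-pod
  # The cloud provider is asked for an external load balancer;
  # a ClusterIP and a node port are still allocated underneath.
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 80
```

On a cluster without such cloud support, the EXTERNAL-IP column of this Service stays at pending.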

ExternalName

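A Service of type ExternalName is used to bring in a service from outside the cluster. Its externalName attribute specifies the address of the external service, and accessing this Service from inside the cluster then reaches the external service.

    apiVersion: v1
    kind: Service
    metadata:
      name: service-externalname
    spec:
      type: ExternalName # Service type
      externalName: www.baidu.com # should be a DNS name; an IP address written here is treated as a DNS name made of digits, not as an IP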

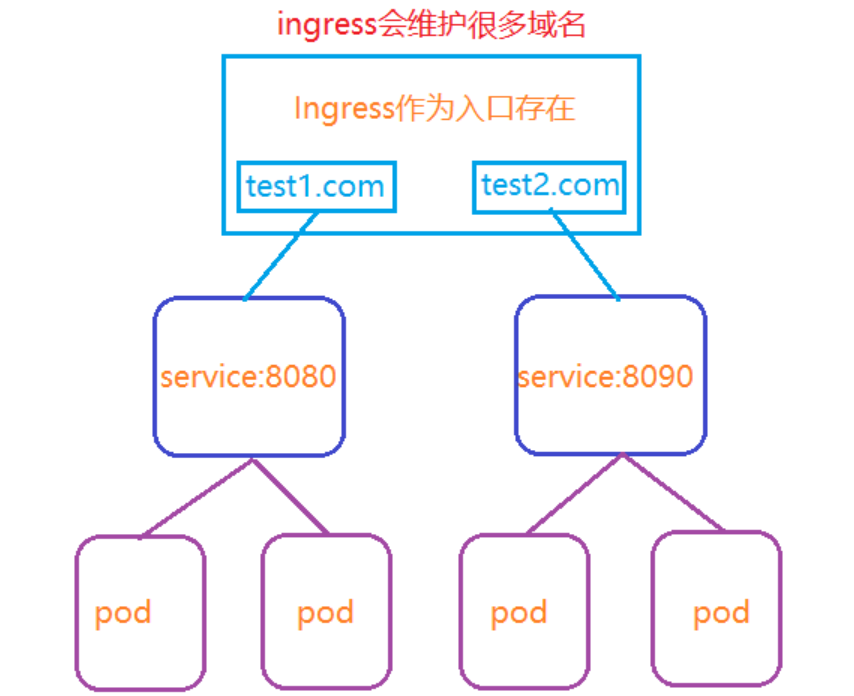

Ingress

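As described above, a Service inside the cluster can only be exposed externally through NodePort or LoadBalancer, and each of them has drawbacks:

- NodePort: every Service occupies a port on the cluster machines, which becomes hard to manage as the number of Services grows
- LoadBalancer: every Service needs its own LB, which is very cumbersome and requires support from devices outside Kubernetes

This is where Ingress comes in: with Ingress, a single NodePort or a single LB is enough to expose multiple Services.

Ingress works much like Nginx. You can think of it as defining a set of mapping rules in Ingress resources. The Ingress Controller watches these rules, converts them into Nginx reverse-proxy configuration, and then serves external traffic.

- ingress: a Kubernetes object that defines the rules for how requests are forwarded to Services
- ingress controller: the program that actually implements the reverse proxy and load balancing. It parses the rules defined by Ingress objects and forwards requests accordingly. There are many implementations, such as Nginx, Contour and Haproxy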
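To illustrate how one entry point can serve several Services, a single Ingress can carry one rule per host name. The sketch below uses the same networking.k8s.io/v1beta1 API version as the rest of this walkthrough. The host names and the Service names shop-service and blog-service are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: multi-service-ingress   # hypothetical name
spec:
  rules:
    # Requests for shop.example.com go to shop-service ...
    - host: shop.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: shop-service   # hypothetical Service
              servicePort: 80
    # ... while requests for blog.example.com go to blog-service.
    - host: blog.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: blog-service   # hypothetical Service
              servicePort: 80
```

Both Services can stay of type ClusterIP, because only the Ingress Controller needs to be reachable from outside.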

ingress-nginx

You must have an Ingress controller for an Ingress to take effect.

Create a namespace; the Ingress resources will be deployed into it shortly.

    [root@master /]# kubectl create namespace ingress-nginx

We reuse the nginx Deployment file from before:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx:1.17.1
              ports:
                - containerPort: 80

    # Deploy the resource above into the ingress-nginx namespace
    [root@master /]# kubectl apply -f nginx-deploy.yaml -n ingress-nginx

We also reuse the NodePort Service from before, saved here as nginx-service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: service-nodeport
    spec:
      selector:
        app: nginx-pod
      type: NodePort
      ports:
        - port: 80
          nodePort: 30003 # node port to bind (default range: 30000-32767); allocated automatically if omitted
          targetPort: 80

    # Deploy the resource above into the ingress-nginx namespace
    [root@master /]# kubectl apply -f nginx-service.yaml -n ingress-nginx

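Next, deploy the Ingress Controller.

- Here we deploy the officially maintained nginx controller. The only thing you are likely to need to change below is the namespace: if yours is not ingress-nginx, adjust the manifest accordingly
- The manifest below is built around the nginx-ingress-controller Deployment and adds the supporting account, permissions and configuration. The Deployment is the only part we need to focus on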

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses/status
        verbs:
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          hostNetwork: true
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          nodeSelector:
            kubernetes.io/os: linux
          containers:
            - name: nginx-ingress-controller
              image: lizhenliang/nginx-ingress-controller:0.30.0
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 101
                runAsUser: 101
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                  protocol: TCP
                - name: https
                  containerPort: 443
                  protocol: TCP
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /wait-shutdown
    ---
    apiVersion: v1
    kind: LimitRange
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      limits:
        - min:
            memory: 90Mi
            cpu: 100m
          type: Container

Configuring rules

    # The three nginx Pods are ready, and the Ingress Controller is also running as a Pod
    [root@master ninja_download]# kubectl get pods -n ingress-nginx -o wide
    NAME                                       READY   STATUS    RESTARTS   AGE   IP                NODE    NOMINATED NODE   READINESS GATES
    nginx-deploy-7d7dd5499b-bfsrs              1/1     Running   0          30m   10.244.1.7        node1   <none>           <none>
    nginx-deploy-7d7dd5499b-dpn99              1/1     Running   0          30m   10.244.1.8        node1   <none>           <none>
    nginx-deploy-7d7dd5499b-tvh5s              1/1     Running   0          30m   10.244.2.7        node2   <none>           <none>
    nginx-ingress-controller-766fb9f77-nw7gb   1/1     Running   0          41m   192.168.239.140   node2   <none>           <none>

    # The Service that fronts the three nginx Pods is ready as well
    [root@master /]# kubectl get service -n ingress-nginx
    NAME               TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    service-nodeport   NodePort   10.102.164.188   <none>        80:30003/TCP   28m

At this point the Pods have been created through the Deployment, the corresponding Service exists, and the Ingress Controller is ready.

Only one step remains: configure the Ingress rules that tie them together.

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: ninja-ingress
    spec:
      rules:
        - host: ninja.ingress.com # domain name that is served externally
          http:
            paths:
              - path: /
                backend:
                  serviceName: service-nodeport # name of the associated Service
                  servicePort: 80 # Service port that traffic is forwarded to

    # Deploy this resource into the same namespace
    [root@master /]# kubectl apply -f ingress-rule.yaml -n ingress-nginx
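The rule above uses the networking.k8s.io/v1beta1 API, which is no longer served from Kubernetes 1.22 onwards. On newer clusters the same rule would be written against networking.k8s.io/v1, roughly as in the sketch below. The ingressClassName value is an assumption about how the controller is registered in such a cluster, and the controller release would likely need to be newer than the 0.30.0 image used in this walkthrough.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ninja-ingress
spec:
  # Assumption: an IngressClass named "nginx" exists in the cluster.
  ingressClassName: nginx
  rules:
    - host: ninja.ingress.com
      http:
        paths:
          - path: /
            # pathType is required in the v1 API.
            pathType: Prefix
            backend:
              service:
                name: service-nodeport
                port:
                  number: 80
```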

Because the rule listens on the domain ninja.ingress.com, the only way to access it is to edit the local hosts file on Windows:

- C:\Windows\System32\drivers\etc\hosts
- 192.168.239.140 ninja.ingress.com : the first part is the IP of the node where the Ingress Controller Pod is deployed, and the second part is the domain the Ingress listens on

Now let's look at the Ingress resource:

    [root@master ninja_download]# kubectl get ingress -n ingress-nginx
    NAME            CLASS    HOSTS               ADDRESS   PORTS   AGE
    ninja-ingress   <none>   ninja.ingress.com             80      50m

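How it works

- The user writes Ingress routing rules that state which domain name maps to which Service in Kubernetes
- The Ingress Controller dynamically detects changes to the Ingress routing rules and generates the corresponding Nginx reverse-proxy configuration
- The Ingress Controller dynamically writes this configuration into a running Nginx and makes it take effect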

