Producing Kafka messages from .NET on AWS MSK fails with "Producer terminating with 1 message (32 bytes) still in queue or transit: use flush() to wait for outstanding message delivery"

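When using MSK, the managed Kafka service on AWS (Amazon Web Services), the messages my program produced never made it to Kafka, and I kept getting the following error: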

Producer terminating with 1 message (32 bytes) still in queue or transit: use flush() to wait for outstanding message delivery

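Sometimes the topic was not created either. Yet in my local environment the same Kafka test program worked fine, both creating the topic and sending messages. The test program is as follows: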

KafkaProducer (the producer)

    using System;
    using System.Linq;
    using Confluent.Kafka;

    public class KafkaMessageProducer
    {
        private readonly String _bootstrapServers;
        private readonly String _topic;
        private const int _flushTime = 20; // seconds to wait in Flush()
        private IProducer<String, String> _producer;

        public KafkaMessageProducer()
        {
            _bootstrapServers = "b-2.test01.XXXXXXX.kafka.XXXXXXX.amazonaws.com:9092,b-1.test01.XXXXXXX.kafka.XXXXXXX.amazonaws.com:9092";
            // _bootstrapServers = "127.0.0.1:9092";
            // _topic = "test-topic2";
            _topic = "default";
        }

        public void Produce()
        {
            var config = new ProducerConfig
            {
                BootstrapServers = _bootstrapServers,
                // Debug = "protocol",
                // Acks = Acks.None
            };
            try
            {
                // using (var adminClient =
                //     new AdminClientBuilder(new AdminClientConfig { BootstrapServers = _bootstrapServers }).Build())
                // {
                //     Console.WriteLine("CreateTopicsAsync");
                //     Task.Run(async ()=> await adminClient.CreateTopicsAsync(new TopicSpecification[] {
                //         new TopicSpecification { Name = _topic}
                //     }));
                //     Console.WriteLine("Created");
                // }

                // Queue one message, then wait up to _flushTime seconds for delivery.
                _producer = new ProducerBuilder<String, String>(config).Build();
                _producer.Produce(_topic, new Message<string, string>
                {
                    Key = Guid.NewGuid().ToString(),
                    Value = "New Message: " + DateTime.Now.ToString()
                }, deliveryReportHandler);
                Console.WriteLine("prepare to flush");
                _producer.Flush(TimeSpan.FromSeconds(_flushTime));

                // List the topics the cluster knows about, and the partitions of ours.
                using (var adminClient = new AdminClientBuilder(new AdminClientConfig { BootstrapServers = _bootstrapServers }).Build())
                {
                    Console.WriteLine("prepare get metadata");
                    var meta = adminClient.GetMetadata(TimeSpan.FromSeconds(20));
                    Console.WriteLine("topic list:");
                    foreach (var topicMetadata in meta.Topics)
                    {
                        Console.WriteLine(topicMetadata.Topic);
                    }
                    var topic = meta.Topics.SingleOrDefault(t => t.Topic == _topic);
                    if (topic != null)
                    {
                        Console.WriteLine("prepare get Partitions");
                        var topicPartitions = topic.Partitions;
                        Console.WriteLine("Partitions id list:");
                        foreach (var partitionMetadata in topicPartitions)
                        {
                            Console.WriteLine(partitionMetadata.PartitionId);
                        }
                    }
                    else
                    {
                        Console.WriteLine("topic is not exist:" + _topic);
                    }
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine("Application Crashed: " + ex.Message);
            }
            finally
            {
                Console.WriteLine("finally dispose");
                if (_producer != null)
                {
                    ((IDisposable)_producer).Dispose();
                }
            }
        }

        // Called once per message when delivery succeeds or permanently fails.
        private void deliveryReportHandler(DeliveryReport<string, string> deliveryReport)
        {
            Console.WriteLine(deliveryReport.Value);
        }
    }
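
The `deliveryReportHandler` above prints only `deliveryReport.Value`, so a failed delivery would look the same as a successful one. The following is a minimal sketch of a handler helper that also surfaces the error; the class and method names (`DeliveryReports`, `Describe`) are hypothetical and not part of the original program. Whether a report arrives before `Flush` returns depends on the client's retry and timeout settings, so this may not show anything within the 20-second flush window.

```csharp
using System;
using Confluent.Kafka;

// Hypothetical helper (not from the original program): formats a delivery
// report so that failures are visible instead of silently printing the value.
public static class DeliveryReports
{
    public static string Describe(DeliveryReport<string, string> report)
    {
        if (report.Error.IsError)
        {
            // Error.Code is the Kafka/librdkafka error code, Error.Reason the text.
            return $"delivery failed: {report.Error.Code} ({report.Error.Reason})";
        }
        return $"delivered to {report.TopicPartitionOffset}";
    }
}
```

It could be wired in with `_producer.Produce(_topic, message, r => Console.WriteLine(DeliveryReports.Describe(r)));`.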

KafkaConsumer (the consumer)

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading;
    using Confluent.Kafka;

    public class KafkaMessageConsumer
    {
        private readonly string _bootstrapServers;
        private readonly string _groupId;
        private readonly AutoOffsetReset _autoOffsetReset;
        private readonly List<String> _topics;
        private bool _isConsuming;
        private readonly CancellationTokenSource _cts;
        private IConsumer<String, String> _consumer;

        public KafkaMessageConsumer()
        {
            _bootstrapServers = "b-2.test01.XXXXXXXX.kafka.XXXXXXXXXX.amazonaws.com:9092,b-1.test01.XXXXXXXX.kafka.XXXXXXXXXX.amazonaws.com:9092";
            // _bootstrapServers = "127.0.0.1:9092";
            _groupId = "dotnet-kafka-client2";
            _autoOffsetReset = AutoOffsetReset.Earliest;
            // _topics = new List<String>() { "test-topic2" };
            _topics = new List<String>() { "default" };
            _cts = new CancellationTokenSource();
            Console.CancelKeyPress += new ConsoleCancelEventHandler(CancelKeyPressHandler);
        }

        // Ctrl+C stops the consume loop instead of killing the process.
        protected void CancelKeyPressHandler(object sender, ConsoleCancelEventArgs args)
        {
            args.Cancel = true;
            _isConsuming = false;
            _cts.Cancel();
        }

        public void Consume()
        {
            var config = new ConsumerConfig
            {
                BootstrapServers = _bootstrapServers,
                GroupId = _groupId,
                AutoOffsetReset = _autoOffsetReset,
                AllowAutoCreateTopics = true
            };
            try
            {
                // List the topics the cluster knows about, and the partitions of ours.
                using (var adminClient = new AdminClientBuilder(new AdminClientConfig { BootstrapServers = _bootstrapServers }).Build())
                {
                    Console.WriteLine("prepare get metadata");
                    var meta = adminClient.GetMetadata(TimeSpan.FromSeconds(20));
                    Console.WriteLine("topic list:");
                    foreach (var topicMetadata in meta.Topics)
                    {
                        Console.WriteLine(topicMetadata.Topic);
                    }
                    var topic = meta.Topics.SingleOrDefault(t => t.Topic == _topics[0]);
                    if (topic != null)
                    {
                        Console.WriteLine("prepare get Partitions");
                        var topicPartitions = topic.Partitions;
                        Console.WriteLine("Partitions id list:");
                        foreach (var partitionMetadata in topicPartitions)
                        {
                            Console.WriteLine(partitionMetadata.PartitionId);
                        }
                    }
                    else
                    {
                        Console.WriteLine("topic is not exist:" + _topics[0]);
                    }
                }

                Console.WriteLine("brokers: " + config.BootstrapServers);
                _consumer = new ConsumerBuilder<String, String>(config).Build();
                Console.WriteLine("topic: " + _topics[0]);
                _consumer.Subscribe(_topics);
                _isConsuming = true;
                int i = 0;
                while (_isConsuming)
                {
                    i++;
                    Console.WriteLine(i + ": ");
                    // Blocks until a message arrives or the token is cancelled.
                    ConsumeResult<String, String> consumeResult = _consumer.Consume(_cts.Token);
                    Console.WriteLine(consumeResult.Message.Value);
                }
            }
            catch (OperationCanceledException ex)
            {
                Console.WriteLine("Application was ended: " + ex.Message.ToString());
            }
            catch (Exception ex)
            {
                Console.WriteLine("Application Crashed: " + ex.Message);
            }
            finally
            {
                if (_consumer != null)
                {
                    _consumer.Close();
                    ((IDisposable)_consumer).Dispose();
                }
            }
        }
    }

After a lot of digging (it took a tremendous amount of effort), I found that people elsewhere had run into the same problem:

https://github.com/confluentinc/confluent-kafka-dotnet/issues/1391

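The root cause was that I was not familiar with how Kafka is configured in MSK, the AWS Kafka service. Here is its documentation on the default configuration: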

https://docs.aws.amazon.com/msk/latest/developerguide/msk-default-configuration.html

Two settings in particular affected my Kafka message delivery: auto.create.topics.enable and min.insync.replicas.

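auto.create.topics.enable is easy to understand: it controls whether the broker is allowed to create topics automatically. This setting is normally true, but AWS MSK sets it to false by default. Changing it to true fixes the topic creation problem.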
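
If you would rather leave auto.create.topics.enable at false, the topic can be created explicitly before producing. The following is a minimal sketch using the Confluent.Kafka admin client; the names `TopicSetup`, `BuildSpec`, and `EnsureTopicAsync` are hypothetical, and the partition count and replication factor are assumptions you would adjust for your own cluster.

```csharp
using System;
using System.Threading.Tasks;
using Confluent.Kafka;
using Confluent.Kafka.Admin;

// Hypothetical helper (not from the original program).
public static class TopicSetup
{
    // Builds a single-partition topic specification with an explicit replication factor.
    public static TopicSpecification BuildSpec(string name, short replicationFactor)
    {
        return new TopicSpecification
        {
            Name = name,
            NumPartitions = 1,
            ReplicationFactor = replicationFactor
        };
    }

    // Creates the topic and waits for the broker's answer before returning.
    public static async Task EnsureTopicAsync(string bootstrapServers, TopicSpecification spec)
    {
        using (var adminClient = new AdminClientBuilder(
            new AdminClientConfig { BootstrapServers = bootstrapServers }).Build())
        {
            try
            {
                await adminClient.CreateTopicsAsync(new[] { spec });
                Console.WriteLine("created topic " + spec.Name);
            }
            catch (CreateTopicsException ex)
            {
                // One report per requested topic, e.g. "topic already exists".
                foreach (var result in ex.Results)
                {
                    Console.WriteLine(result.Topic + ": " + result.Error.Reason);
                }
            }
        }
    }
}
```

From synchronous code such as `Produce()`, this could be called as `TopicSetup.EnsureTopicAsync(_bootstrapServers, TopicSetup.BuildSpec("default", 2)).GetAwaiter().GetResult();` so that the program actually waits for the result, unlike a `Task.Run(...)` that is started and never awaited.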

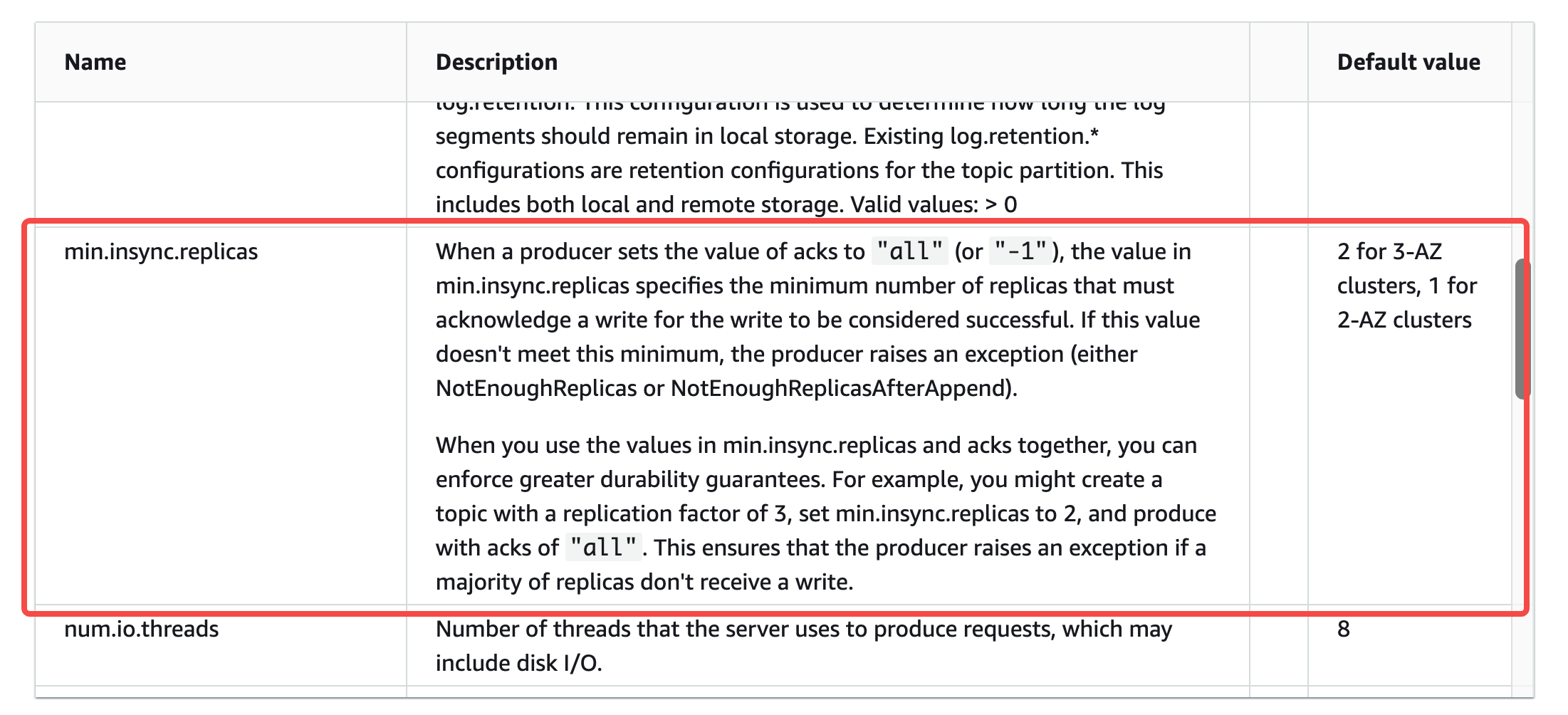

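min.insync.replicas is a little more involved. In short, it sets a condition that a write must meet before it is accepted. For the precise meaning, see the description from the documentation below 👇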

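When a producer sets acks to "all" (or "-1"), min.insync.replicas specifies the minimum number of replicas that must acknowledge a write for the write to be considered successful. If this minimum cannot be met, the producer raises an exception (NotEnoughReplicas or NotEnoughReplicasAfterAppend).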

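Used together, min.insync.replicas and acks let you enforce stronger durability guarantees. For example, you can create a topic with a replication factor of 3, set min.insync.replicas to 2, and produce with acks set to "all". This ensures that the producer raises an exception if a majority of replicas do not receive the write.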

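When we write a Kafka test program, the topic's replica count is usually left at 1, which is lower than the min.insync.replicas of 2 that AWS MSK uses by default. The test producer above also leaves Acks unset, and the client's default is all, so the min.insync.replicas check applies. As a result, no write can ever succeed unless min.insync.replicas is lowered to 1.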
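
To see whether a topic can satisfy this condition, one option is to compare each partition's in-sync replica count with min.insync.replicas, using the same metadata call as the test program. This is only a sketch: `IsrCheck`, `MeetsMinIsr`, and `Report` are hypothetical names, and the min.insync.replicas value is passed in by the caller (2 is the MSK default mentioned above) rather than read from the broker.

```csharp
using System;
using System.Linq;
using Confluent.Kafka;

// Hypothetical helper (not from the original program).
public static class IsrCheck
{
    // True when a partition has enough in-sync replicas for an acks=all write.
    public static bool MeetsMinIsr(int inSyncReplicaCount, int minInsyncReplicas)
    {
        return inSyncReplicaCount >= minInsyncReplicas;
    }

    // Prints, for every partition of the topic, whether acks=all writes can succeed.
    public static void Report(string bootstrapServers, string topicName, int minInsyncReplicas)
    {
        using (var adminClient = new AdminClientBuilder(
            new AdminClientConfig { BootstrapServers = bootstrapServers }).Build())
        {
            var meta = adminClient.GetMetadata(TimeSpan.FromSeconds(20));
            var topic = meta.Topics.SingleOrDefault(t => t.Topic == topicName);
            if (topic == null)
            {
                Console.WriteLine("topic not found: " + topicName);
                return;
            }
            foreach (var partition in topic.Partitions)
            {
                int isr = partition.InSyncReplicas.Length;
                Console.WriteLine(
                    "partition " + partition.PartitionId +
                    ": replicas=" + partition.Replicas.Length +
                    ", in-sync=" + isr +
                    ", acks=all writable=" + MeetsMinIsr(isr, minInsyncReplicas));
            }
        }
    }
}
```

For example, `IsrCheck.Report(_bootstrapServers, "default", 2);` would flag a topic with a single replica as not writable with acks set to all.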

And with that, the mystery is solved: the AWS MSK default configuration was the culprit.

This article is the original work of the author, 月井石. Please credit the source when reposting.

