ImgProcessing.py

```python
import os

import cv2
import numpy as np

from MainBaidu import get_table


# Rotate 90 degrees clockwise
def RotateClockWise90(img):
    trans_img = cv2.transpose(img)
    new_img = cv2.flip(trans_img, 1)
    return new_img


# Rotate 90 degrees counterclockwise
def RotateAntiClockWise90(img):
    trans_img = cv2.transpose(img)
    new_img = cv2.flip(trans_img, 0)
    return new_img


# Searches from left to right for a continuous line segment of black (0) pixels.
# `step` is the largest blank gap between two points that still counts as one line.
class straight_search():
    def __init__(self, matrix, x_search=0, y_search=0, step=2):
        self.x, self.y = x_search, y_search
        self.s_y, self.s_x = matrix.shape
        self.matrix = matrix
        self.step = step
        self.final = False

    def __iter__(self):
        return self

    def __next__(self):
        result = []
        while True:
            if self.final:
                raise StopIteration()
            # Window of up to 2*step+1 rows and `step` columns just right of the current point
            top = max(0, self.y - self.step)
            self.matrix_ = self.matrix[top:min(self.s_y, self.y + self.step + 1),
                                       self.x + 1:min(self.s_x, self.x + self.step + 1)]
            if self.matrix_.size == 0:
                self.final = True
            r = np.where(self.matrix_ == 0)
            r_ = list(zip(*r))
            # Row of the current point inside the window (fixed: was 0 instead of y near the top edge)
            offset = self.y - top
            if r_:
                # Follow the black pixel closest to the current row
                index = np.argmin(np.abs(r[0] - offset))
                y_, x_ = r_[index]
                self.y, self.x = self.y + y_ - offset, self.x + x_ + 1
                result.append((self.y, self.x))
            elif result:
                # Gap wider than `step`: the current segment ends here
                self.x += self.step
                return result
            else:
                self.x += self.step

    def interval(self, math=max, times=100):
        # Among the first `times` segments, return the longest one (for math=max)
        tem = 0
        tem_c = [(0, 0)]
        for i, c in enumerate(self):
            if i >= times:
                return tem_c
            if c:
                int_ = c[-1][1] - c[0][1]
                tem = math(tem, int_)
                if tem == int_:
                    tem_c = c
        return tem_c


def get_img(img_, baidu_table=False):
    original_image = cv2.imread(img_)
    height, width = original_image.shape[:2]
    # Make the table landscape
    if width < height:
        img = RotateAntiClockWise90(original_image)
    else:
        img = original_image
    blur = cv2.GaussianBlur(img, (3, 3), 0)
    x = cv2.Sobel(blur, cv2.CV_16S, 1, 0)
    y = cv2.Sobel(blur, cv2.CV_16S, 0, 1)
    # cv2.convertScaleAbs(src[, dst[, alpha[, beta]]])
    # Optional alpha is a scale factor, beta is added to the result; returns a uint8 image
    Scale_absX = cv2.convertScaleAbs(x)
    Scale_absY = cv2.convertScaleAbs(y)
    result = cv2.addWeighted(Scale_absX, 1, Scale_absY, 0.5, 0)

    img_gray = cv2.cvtColor(result, cv2.COLOR_BGR2GRAY)  # convert to grayscale

    # Inverted binarization with threshold 30: edges become black (0), flat areas white (255)
    _, img_thre = cv2.threshold(img_gray, 30, 255, cv2.THRESH_BINARY_INV)

    cv2.imwrite('output/ts.jpg', img_thre)  # debug: save the binarized image

    # Search the table range: length of the longest horizontal line found from each row
    height, width = img_thre.shape[:2]
    y_black_sum = []
    for i in range(height - 1):
        if i % 200 == 0:
            print(i)  # progress
        s = straight_search(img_thre, x_search=100, y_search=i, step=5)
        int_ = s.interval()
        y_black_sum.append(int_[-1][1] - int_[0][1])
    # Rows holding a line longer than 1000 px
    # (fixed: list.index() mapped rows with equal lengths to the same row)
    line_rows = [i for i, length in enumerate(y_black_sum) if length > 1000]
    if len(line_rows) < 2:
        raise ValueError("no table found in %s" % img_)
    # The table lies between the two consecutive long lines that are farthest apart
    gaps = [b - a for a, b in zip(line_rows, line_rows[1:])]
    index = int(np.argmax(gaps))
    y1, y2 = line_rows[index], line_rows[index + 1]
    s = straight_search(img_thre, x_search=100, y_search=y2, step=5)
    int_ = s.interval()
    x1, x2 = int_[0][1], int_[-1][1]
    img_thre = img_thre[y1:y2, max(0, x1 - 10):x2 + 20]
    img = img[y1:y2, max(0, x1 - 10):x2 + 20]

    # 4. Split into cells
    height, width = img_thre.shape[:2]
    # White / black pixel count of each column
    y_white = (img_thre == 255).sum(axis=0)
    y_black = (img_thre == 0).sum(axis=0)
    y_white_max, y_black_max = y_white.max(), y_black.max()
    # White / black pixel count of each row
    x_white = (img_thre == 255).sum(axis=1)
    x_black = (img_thre == 0).sum(axis=1)
    x_white_max, x_black_max = x_white.max(), x_black.max()

    arg = False  # False: dark text on a light background; True: light text on a dark background
    if y_black_max > y_white_max:
        arg = True

    block = 20

    # Split the image into columns
    def find_end_y(start_):
        # A column ends at the first m whose preceding `block` columns are almost pure background
        end_ = start_ + block
        a = 0.999
        for m in range(start_, width):
            if m + block * 1.5 >= width:
                return width
            window = (y_black if arg else y_white)[m - min(m, block):m]
            limit = (y_black_max if arg else y_white_max) * a - block
            if window.min() > limit:
                end_ = m
                break
        return end_

    start = 1
    split_matrix_y = []
    while start < width - 1:
        start += 1
        # A column starts where the next `block` columns hold more than `block` foreground pixels
        # (fixed: the slice was start:start + start + block)
        if (y_white if arg else y_black)[start:start + block].max() > block:
            end = find_end_y(start)
            if (end - start) < max(20, block * 1.5):
                continue
            split_matrix_y.append((max(0, start - block), min(end, width)))
            start = end

    block = 20

    # Split the image into rows (same logic on the row counts)
    def find_end_x(start_):
        end_ = start_ + block
        a = 0.9
        for m in range(start_ + 1, height):
            if m + block * 1.5 >= height:
                return height
            window = (x_black if arg else x_white)[m - min(m, block):m]
            limit = (x_black_max if arg else x_white_max) * a - block
            if window.min() > limit:
                end_ = m
                break
        return end_

    start = 1
    split_matrix_x = []
    while start < height - 1:
        start += 1
        # fixed: x_white[start:start] was empty, so max() raised ValueError when arg was True
        if (x_white if arg else x_black)[start:start + block].max() > block:
            end = find_end_x(start)
            if (end - start) < max(15, block * 1.5):
                continue
            split_matrix_x.append((max(0, start - block), min(end, height)))
            start = end

    # Cells are copied: slices are views, and the rectangles drawn on img would otherwise leak into them
    img_matrixs = []
    if baidu_table:
        # Draw adjacent cell borders and send the whole table to Baidu's form OCR
        x_next, y_next = 0, 0
        for x in split_matrix_x:
            img_matrix = []
            for y in split_matrix_y:
                cj = img[x_next:x[1], y_next:y[1]].copy()
                cv2.imwrite("img_sub/tem.jpg", cj)  # debug: last cell
                img_matrix.append(cj)
                cv2.rectangle(img, (y_next, x_next), (y[1], x[1]), (255, 0, 0), 2)
                y_next = y[1]
            y_next = 0
            x_next = x[1]
            img_matrixs.append(img_matrix)

        cv2.imwrite("output/%s" % os.path.basename(img_), img)
        get_table("output/%s" % os.path.basename(img_))
    else:
        # Crop every cell so it can be recognized separately
        for x in split_matrix_x:
            img_matrix = []
            for y in split_matrix_y:
                cj = img[x[0]:x[1], y[0]:y[1]].copy()
                cv2.imwrite("img_sub/tem.jpg", cj)  # debug: last cell
                img_matrix.append(cj)
                cv2.rectangle(img, (y[0], x[0]), (y[1], x[1]), (255, 0, 0), 2)
            img_matrixs.append(img_matrix)

        cv2.imwrite("output/%s" % os.path.basename(img_), img)

    return img_matrixs
```
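The cell splitting in `get_img` is a projection-profile method: count foreground pixels per column (and per row), then treat a run of columns with enough foreground as one column of cells. The sketch below shows only that core idea in plain Python; `foreground_profile` and `split_runs` are hypothetical helpers, and unlike `get_img` they use a fixed count threshold instead of the `block`-wide windows and the 0.999 / 0.9 background ratios.

```python
def foreground_profile(binary, foreground=0, axis=0):
    """Count foreground pixels per column (axis=0) or per row (axis=1).

    `binary` is a list of equal-length rows; after THRESH_BINARY_INV, ink is 0.
    """
    if axis == 0:
        return [sum(1 for row in binary if row[c] == foreground)
                for c in range(len(binary[0]))]
    return [sum(1 for v in row if v == foreground) for row in binary]


def split_runs(profile, min_count=1, min_len=1):
    """Return half-open (start, end) spans where profile[i] >= min_count.

    Runs shorter than min_len are dropped, similar to the length checks in get_img.
    """
    spans = []
    start = None
    for i, count in enumerate(profile):
        if count >= min_count:
            if start is None:
                start = i  # a run begins
        elif start is not None:
            if i - start >= min_len:
                spans.append((start, i))
            start = None  # the run ended
    if start is not None and len(profile) - start >= min_len:
        spans.append((start, len(profile)))  # run reaching the last position
    return spans


# 0 = ink, 255 = background; ink sits in columns 1-2 and 5
img = [
    [255, 0, 0, 255, 255, 0],
    [255, 0, 255, 255, 255, 0],
    [255, 255, 0, 255, 255, 255],
]
cols = foreground_profile(img, axis=0)
print(cols)              # [0, 2, 2, 0, 0, 2]
print(split_runs(cols))  # [(1, 3), (5, 6)]
```

Applying `split_runs` to the row profile (`axis=1`) gives the row spans; crossing both span lists yields the cell rectangles, which is what the two nested loops at the end of `get_img` do.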

MainBaidu.py

```python
import base64
import functools
import os
import time

import cv2
import numpy as np
import requests


def img_bytes(img):
    # Encode the image as PNG in memory and return the raw bytes
    ok, buf = cv2.imencode(".png", img)
    if not ok:
        raise ValueError("PNG encoding failed")
    return buf.tobytes()


@functools.lru_cache(maxsize=None)
def get_token():
    # client_id is the API Key (AK) and client_secret the Secret Key (SK) from the Baidu AI Cloud console.
    # Cached so the token is fetched once per run instead of once per cell.
    host = 'https://aip.baidubce.com/oauth/2.0/token?grant_type=client_credentials&client_id=8RlacbCvB7z0yzhEKg41yMh1&client_secret=5bA2LvojST6YwTUztmGlhezoWoIrdG0D'
    response = requests.get(host)
    response.raise_for_status()
    return response.json()["access_token"]


def get_text(img):
    # OCR one cell image with the general_basic API; returns {} on any failure
    try:
        request_url = "https://aip.baidubce.com/rest/2.0/ocr/v1/general_basic"
        params = {"image": base64.b64encode(img_bytes(img))}
        headers = {'content-type': 'application/x-www-form-urlencoded'}
        response = requests.post(request_url, params={"access_token": get_token()},
                                 data=params, headers=headers)
        if response:
            return response.json()
    except Exception:
        pass
    return {}


def test_api(img_):
    # Calls the iOCR custom-template test endpoint of the ai.baidu.com web console, using request
    # headers and cookies captured from a logged-in browser session (they will eventually expire).
    headers = {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate, br",
        "Accept-Language": "zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2",
        "Connection": "keep-alive",
        # The captured Content-Length (766833) only fit the original image; requests computes it itself
        "Content-Type": "application/x-www-form-urlencoded",
        "Cookie": "BIDUPSID=27CF8D52EDE9863F90EA0F9ECA1CB2E9; BAIDUID=4F735B4C223E84107C92E06E548FD6B7:FG=1; PSTM=1604535270; BDORZ=FFFB88E999055A3F8A630C64834BD6D0; __yjs_duid=1_4ab86138aaa3de37bd8daf8e836664a01608877427152; CAMPAIGN_TRACK=cp%3Aainsem%7Cpf%3Apc%7Cpp%3Achanpin-wenzishibie%7Cpu%3Awenzishibie-baiduocr%7Cci%3A%7Ckw%3A10002846; Hm_lvt_8b973192450250dd85b9011320b455ba=1608877429,1608880419,1609290457; BDRCVFR[Hp1ap0hMjsC]=mk3SLVN4HKm; delPer=0; PSINO=2; H_PS_PSSID=1420_33359_33306_32970_33350_33313_33312_33311_33310_33309_33308_33307_33388_33370; BCLID=11182069166008530591; BDSFRCVID=cXKOJeC626uKBt5rgclH2X9JWzdei_3TH6aoUwQFZT2nBAE-3IwZEG0PeM8g0Ku-S2EqogKKXgOTHw0F_2uxOjjg8UtVJeC6EG0Ptf8g0M5; H_BDCLCKID_SF=tRAOoC8atDvHjjrP-trf5DCShUFs5hviB2Q-5KL-Jt5vE4-GK4JjhMvXWarZLbTb5C5doMbdJJjoSt3hQl6xD4CYLt_LJMcfyeTxoUJHBCnJhhvGqq-KQJ_ebPRiXPb9QgbfopQ7tt5W8ncFbT7l5hKpbt-q0x-jLTnhVn0MBCK0HPonHjDMDj3X3f; Hm_lpvt_8b973192450250dd85b9011320b455ba=1609300079; __yjsv5_shitong=1.0_7_197b58c54993f19402e7254078abfb81fa1b_300_1609300079531_101.206.170.92_22c52dc4; BDUSS=XVXSkJBTFJmd0RTMnhucnprVFFpNTA1TGR4RVROM0RjRHh2MmlVMGRZZGFmQk5nSUFBQUFBJCQAAAAAAAAAAAEAAADJU-TMYcTjysfO0rXE1u0AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFrv619a7-tfW; iocr_token=E034D111095FC15CA3C1C5B16FCE4E7685950628C8AA4A703F99872B230A16240D3B16052487BE08EC04B8AF8933380F93BC9CD0067358929F8F279718BBD248; __cas__st__469=NLI; __cas__id__469=0; __cas__rn__=0; ab_sr=1.0.0_ODEyODQ0ODhlYzk4ODAyYzc0NmUwZTk4NmRmZjc3MGIzY2RhYWNmMDgwMDA4ZDk1OGM5MzA0ODU5MmVjYWEzM2Q3NmQyYjRlMTY5MzkwNjMwODQxZGNlY2FhYTRlZDFl; BA_HECTOR=2100ah000lal200hg01funu3l0r",
        "Host": "ai.baidu.com",
        "Origin": "https://ai.baidu.com",
        "Referer": "https://ai.baidu.com/iocr",
        "TE": "Trailers",
        "User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:85.0) Gecko/20100101 Firefox/85.0",
        "x-csrf-iocr": "E034D111095FC15CA3C1C5B16FCE4E7685950628C8AA4A703F99872B230A16240D3B16052487BE08EC04B8AF8933380F93BC9CD0067358929F8F279718BBD248",
    }
    cookies = {
        "__cas__id__469": "0",
        "__cas__rn__": "0",
        "__cas__st__469": "NLI",
        "__yjs_duid": "1_4ab86138aaa3de37bd8daf8e836664a01608877427152",
        "__yjsv5_shitong": "1.0_7_197b58c54993f19402e7254078abfb81fa1b_300_1609300079531_101.206.170.92_22c52dc4",
        "ab_sr": "1.0.0_ODEyODQ0ODhlYzk4ODAyYzc0NmUwZTk4NmRmZjc3MGIzY2RhYWNmMDgwMDA4ZDk1OGM5MzA0ODU5MmVjYWEzM2Q3NmQyYjRlMTY5MzkwNjMwODQxZGNlY2FhYTRlZDFl",
        "BA_HECTOR": "2100ah000lal200hg01funu3l0r",
        "BAIDUID": "4F735B4C223E84107C92E06E548FD6B7:FG=1",
        "BCLID": "11182069166008530591",
        "BDORZ": "FFFB88E999055A3F8A630C64834BD6D0",
        "BDRCVFR[Hp1ap0hMjsC]": "mk3SLVN4HKm",
        "BDSFRCVID": "cXKOJeC626uKBt5rgclH2X9JWzdei_3TH6aoUwQFZT2nBAE-3IwZEG0PeM8g0Ku-S2EqogKKXgOTHw0F_2uxOjjg8UtVJeC6EG0Ptf8g0M5",
        "BDUSS": "XVXSkJBTFJmd0RTMnhucnprVFFpNTA1TGR4RVROM0RjRHh2MmlVMGRZZGFmQk5nSUFBQUFBJCQAAAAAAAAAAAEAAADJU-TMYcTjysfO0rXE1u0AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFrv619a7-tfW",
        "BIDUPSID": "27CF8D52EDE9863F90EA0F9ECA1CB2E9",
        "CAMPAIGN_TRACK": "cp:ainsem|pf:pc|pp:chanpin-wenzishibie|pu:wenzishibie-baiduocr|ci:|kw:10002846",
        "delPer": "0",
        "H_BDCLCKID_SF": "tRAOoC8atDvHjjrP-trf5DCShUFs5hviB2Q-5KL-Jt5vE4-GK4JjhMvXWarZLbTb5C5doMbdJJjoSt3hQl6xD4CYLt_LJMcfyeTxoUJHBCnJhhvGqq-KQJ_ebPRiXPb9QgbfopQ7tt5W8ncFbT7l5hKpbt-q0x-jLTnhVn0MBCK0HPonHjDMDj3X3f",
        "H_PS_PSSID": "1420_33359_33306_32970_33350_33313_33312_33311_33310_33309_33308_33307_33388_33370",
        "Hm_lpvt_8b973192450250dd85b9011320b455ba": "1609300079",
        "Hm_lvt_8b973192450250dd85b9011320b455ba": "1608877429,1608880419,1609290457",
        "iocr_token": "E034D111095FC15CA3C1C5B16FCE4E7685950628C8AA4A703F99872B230A16240D3B16052487BE08EC04B8AF8933380F93BC9CD0067358929F8F279718BBD248",
        "PSINO": "2",
        "PSTM": "1604535270",
    }
    request_url = "https://ai.baidu.com/iocr/template/test"
    with open(img_, 'rb') as f:
        img = base64.b64encode(f.read())
    params = {
        "image": img,
        "version": "2",
        "templateSign": "9178156358ab59459addfd6a3ccd6b32",
        "industry": "0",
        "subType": "1",
    }
    response = requests.post(request_url, data=params, headers=headers, cookies=cookies)
    return response.json()


# img_ = "output/58-85.26.png"
def get_table(img_):
    # Submit the whole table image to the asynchronous form OCR, then download the result as .xls
    # request_url = "https://aip.baidubce.com/rest/2.0/ocr/v1/form"
    request_url = "https://aip.baidubce.com/rest/2.0/solution/v1/form_ocr/request"
    with open(img_, 'rb') as f:
        img = base64.b64encode(f.read())
    headers = {'content-type': 'application/x-www-form-urlencoded'}
    access_token = get_token()
    response = requests.post(request_url, params={"access_token": access_token},
                             data={"image": img}, headers=headers)
    if not response:
        return
    print(response.json())
    request_id = response.json()['result'][0]['request_id']

    request_url = "https://aip.baidubce.com/rest/2.0/solution/v1/form_ocr/get_request_result"
    params = {"request_id": request_id, "result_type": "excel"}
    while True:
        time.sleep(1)
        response = requests.post(request_url, params={"access_token": access_token},
                                 data=params, headers=headers)
        if not response:
            continue
        result = response.json()['result']
        if result['ret_msg'] == '进行中':  # "in progress"
            continue
        print(response.json())
        # result_data is the download URL of the generated spreadsheet
        xls = requests.get(result['result_data'])
        with open("output/{}.xls".format(os.path.basename(img_)), "wb") as out:
            out.write(xls.content)
        break
```
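`get_table` polls `get_request_result` in a `while True` loop, so it never returns if the request keeps failing or stays in progress. A bounded poll is a small change. The sketch below assumes a hypothetical `fetch` callable that returns the parsed JSON of one `get_request_result` call, and reuses only the `result`, `ret_msg` and `result_data` fields and the `'进行中'` ("in progress") status that the code above already checks; `poll_result` is a hypothetical name.

```python
import time


def poll_result(fetch, interval=1.0, max_tries=60, sleep=time.sleep):
    """Call fetch() until the job is no longer in progress, at most max_tries times.

    Returns result_data of the first finished response, or raises TimeoutError.
    """
    for _ in range(max_tries):
        sleep(interval)
        try:
            result = fetch()["result"]
        except (KeyError, TypeError):
            continue  # error response or empty body: try again
        if result.get("ret_msg") != "进行中":  # "in progress"
            return result.get("result_data")
    raise TimeoutError(f"form OCR still running after {max_tries} tries")


# Fake responses for illustration; "finished" stands in for any non-"in progress" status
responses = iter([
    {"result": {"ret_msg": "进行中"}},
    {"result": {"ret_msg": "finished", "result_data": "https://example.com/table.xls"}},
])
print(poll_result(lambda: next(responses), sleep=lambda s: None))  # https://example.com/table.xls
```

Passing `sleep` as a parameter keeps the loop testable without real delays; in `get_table`, `fetch` would wrap the `requests.post(...)` call and `.json()`.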

MainSelf.py

```python
import os
import time

import pandas as pd

from ImgProcessing import get_img
from MainBaidu import get_text


def predict(img):
    # OCR one cell and return its first recognized line ("" if nothing was recognized)
    try:
        pred = get_text(img)
        print(pred)
        return pred['words_result'][0]['words']
    except (KeyError, IndexError, TypeError):
        return ""


if __name__ == '__main__':
    input_dir = 'input'
    output_dir = 'output'
    # True: send the whole table to Baidu's form OCR (result saved as .xls);
    # False: OCR each cell separately (result saved as .csv)
    baidu_table = False
    imgs = filter(lambda x: x.endswith("png"), os.listdir(input_dir))
    for imgname in imgs:
        stem = os.path.splitext(imgname)[0]
        done = imgname + ".xls" if baidu_table else stem + ".csv"
        # Skip images that already have a result file
        if done in os.listdir(output_dir):
            continue
        start = time.time()
        img_matrixs = get_img(os.path.join(input_dir, imgname), baidu_table)
        if not baidu_table:
            text_matrixs = [[predict(img) for img in img_matrix] for img_matrix in img_matrixs]
            pd.DataFrame(text_matrixs).to_csv(os.path.join(output_dir, stem + ".csv"),
                                              header=False, index=False)
        print(f"finish {imgname}: {time.time() - start:.2f}s")
        print("-" * 56)
```
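`predict` keeps only `words_result[0]`, so a cell whose text wraps onto a second line loses the rest. If that matters, a variant can join every recognized line. This is a sketch that assumes the same `words_result` / `words` response shape `predict` already reads; `cell_text` is a hypothetical name.

```python
def cell_text(pred, sep=""):
    """Join all recognized lines of one general_basic OCR response.

    Returns "" for error responses (no 'words_result') or a missing response.
    """
    if not isinstance(pred, dict):
        return ""
    return sep.join(item.get("words", "") for item in pred.get("words_result", []))


pred = {"words_result": [{"words": "合计"}, {"words": "1,024"}]}
print(cell_text(pred, sep=" "))  # 合计 1,024
print(repr(cell_text({"error_code": 17})))  # ''
```

An empty `sep` suits Chinese text; a space is usually better for English or numbers.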

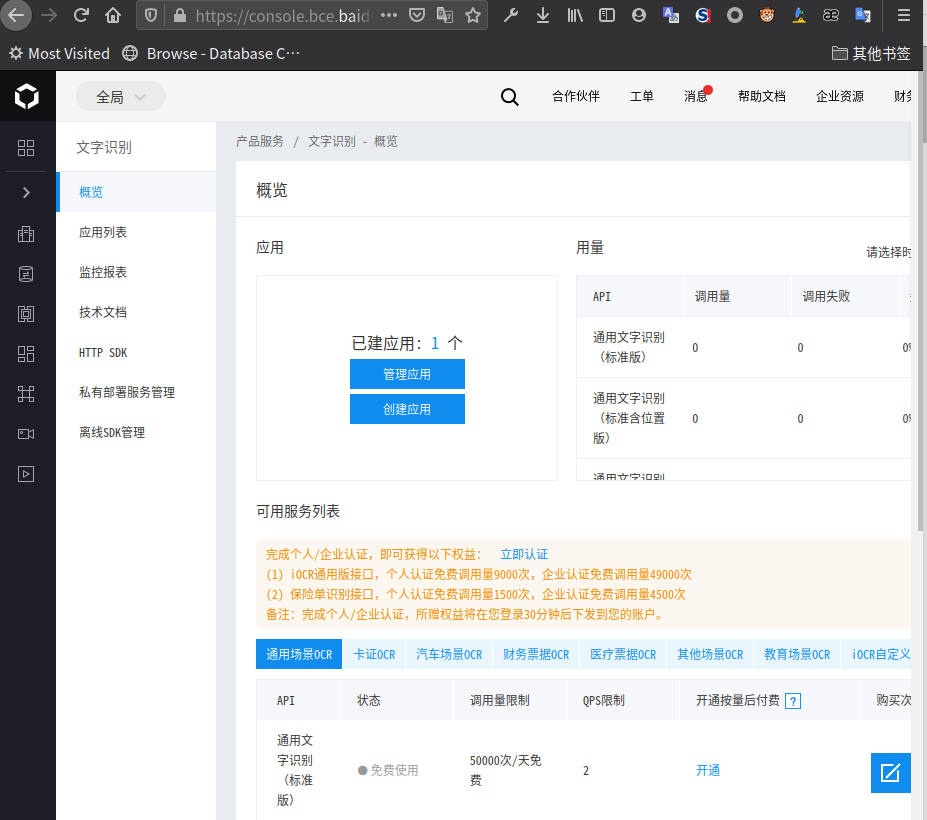

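The Baidu AI Cloud (百度智能云) credentials in this code are my own and have a limited quota; please create your own application on Baidu AI Cloud and use its keys instead.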
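Rather than editing your key into `get_token`, one option is to read your own API Key (AK) and Secret Key (SK) from environment variables and let `urllib.parse` build the query string. This is a sketch: the variable names `BAIDU_OCR_AK` / `BAIDU_OCR_SK` and the function name `token_url` are hypothetical, and only the URL construction is shown; the endpoint and its `grant_type`, `client_id` and `client_secret` parameters are the ones `get_token` already uses.

```python
import os
from urllib.parse import urlencode

TOKEN_ENDPOINT = "https://aip.baidubce.com/oauth/2.0/token"


def token_url(environ=os.environ):
    """Build the OAuth token URL from the (hypothetical) BAIDU_OCR_AK / BAIDU_OCR_SK variables."""
    try:
        ak, sk = environ["BAIDU_OCR_AK"], environ["BAIDU_OCR_SK"]
    except KeyError as exc:
        raise RuntimeError(f"set {exc.args[0]} to your own Baidu AI Cloud key") from exc
    query = urlencode({
        "grant_type": "client_credentials",
        "client_id": ak,       # API Key
        "client_secret": sk,   # Secret Key (percent-encoded by urlencode)
    })
    return f"{TOKEN_ENDPOINT}?{query}"
```

`get_token` could then call `requests.get(token_url())` and keep the rest of its body unchanged.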

Zhejiang public security internet filing No. 33010602011771
