Kubernetes之Ingress

参考:https://www.jianshu.com/p/c726ed03562a

上一节介绍使用Service暴露应用

NodePort在node启动端口供用户访问,负载均衡默认使用Iptables进行

Service有些弊端,例如只能四层负载均衡,无法实现七层负载均衡,通过IP访问端口可能冲突

Ingress为弥补Service不足而生

Pod与Ingress关系

- 通过Service关联Pod

- 基于域名访问

- 通过Ingresss Controller实现Pod负载均衡

- 支持TCP/UDP 4层和HTTP七层

使用Ingress控制器调用Service,会在每个node什么部署一个Ingress控制器,用户请求控制器,控制器对外提供80,443端口,必须使用域名访问,默认使用nginx(upstream)进行负载均衡

部署Ingress Controller然后创建Ingress规则

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

重命名

mv mandatory.yaml ingress-controller.yaml

修改一下把副本数改成3

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: nginx-configuration

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: tcp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: udp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: nginx-ingress-clusterrole

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses/status

verbs:

- update

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: nginx-ingress-role

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

resourceNames:

# Defaults to "<election-id>-<ingress-class>"

# Here: "<ingress-controller-leader>-<nginx>"

# This has to be adapted if you change either parameter

# when launching the nginx-ingress-controller.

- "ingress-controller-leader-nginx"

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: nginx-ingress-role-nisa-binding

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: nginx-ingress-role

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: nginx-ingress-clusterrole-nisa-binding

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-ingress-controller

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

replicas: 3

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

spec:

# wait up to five minutes for the drain of connections

terminationGracePeriodSeconds: 300

serviceAccountName: nginx-ingress-serviceaccount

nodeSelector:

kubernetes.io/os: linux

containers:

- name: nginx-ingress-controller

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:master

args:

- /nginx-ingress-controller

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

securityContext:

allowPrivilegeEscalation: true

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

# www-data -> 101

runAsUser: 101

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

---

apiVersion: v1

kind: LimitRange

metadata:

name: ingress-nginx

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

limits:

- default:

min:

memory: 90Mi

cpu: 100m

type: Container

需要注释或者修改以下两行,否则无法启动pod

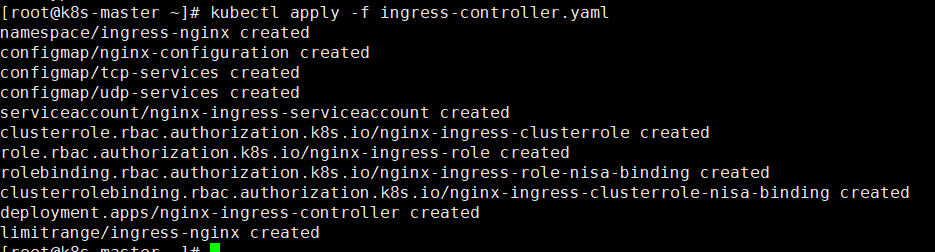

应用

kubectl apply -f ingress-controller.yaml

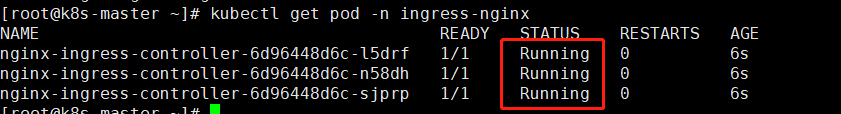

查看

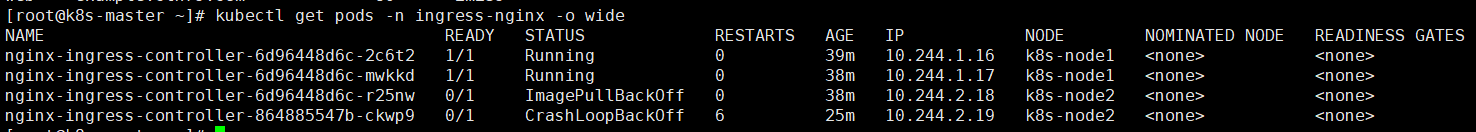

kubectl get pods -n ingress-nginx

下载部署文件service-nodeport.yaml

service-nodeport.yaml为ingress通过nodeport对外提供服务,默认nodeport暴露为随机端口,可以编辑文件自定义端口

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/service-nodeport.yaml --no-check-certificate

应用该文件

kubectl apply -f service-nodeport.yaml

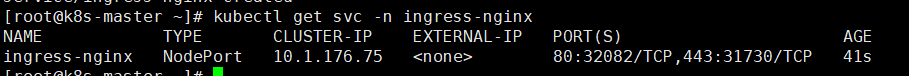

查看创建的service

kubectl get svc -n ingress-nginx

通过创建的svc可以看到已经把ingress-nginx service在主机映射端口为32082(http)31730(https)

发布应用需要创建ingress规则

编辑ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: web

spec:

rules:

- host: example.ctnrs.com

http:

paths:

- backend:

serviceName: web

servicePort: 80

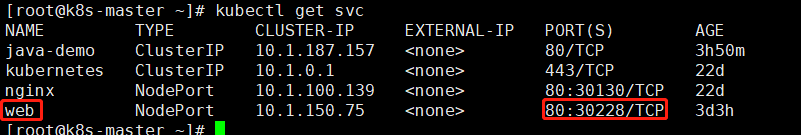

确保有web Service并且是80端口

应用

kubectl apply -f ingress.yaml

查看

kubectl get ingress

ingress必须通过域名访问 设置hosts即可,因为ingress部署在任意一个node上,所以可以解析node任意ip

设置主机的hosts以后需要通过域名加端口访问,随机端口为应用service-nodeport.yaml创建的随机端口,本次为32082

http://example.ctnrs.com:32082/

必须使用域名,不能使用ip加端口进行访问,访问正常即代表本次配置成功

这里对应nginx创建的svc服务,其实在实际调度过程中,流量是直接通过ingress然后调度到后端的pod,而没有经过svc服务,svc只是提供一个收集pod服务的作用。

Ingress高可用

上面我们只是解决了集群对外提供服务的功能,并没有对ingress进行高可用的部署,Ingress高可用,我们可以通过修改deploment的副本数来实现高可用,但是对于ingress承载着整个集群流量的接入,所以在生产环境中,建议把ingres通过DaemonSet的方式部署集群,而且该节点打上污点不允许业务pod进行调度,以避免业务应用于Ingress服务发生资源争抢。然后通过SLB把ingress节点主机添加为后端服务器,进行流量转发。

修改ingress-controller.yaml

修改后的文件如下

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: nginx-configuration

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: tcp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: udp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: nginx-ingress-clusterrole

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses/status

verbs:

- update

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: nginx-ingress-role

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

resourceNames:

# Defaults to "<election-id>-<ingress-class>"

# Here: "<ingress-controller-leader>-<nginx>"

# This has to be adapted if you change either parameter

# when launching the nginx-ingress-controller.

- "ingress-controller-leader-nginx"

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: nginx-ingress-role-nisa-binding

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: nginx-ingress-role

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: nginx-ingress-clusterrole-nisa-binding

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: apps/v1

#kind: Deployment

kind: DaemonSet

metadata:

name: nginx-ingress-controller

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

#replicas: 3

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

spec:

# wait up to five minutes for the drain of connections

terminationGracePeriodSeconds: 300

serviceAccountName: nginx-ingress-serviceaccount

hostNetwork: true

dnsPolicy: ClusterFirstWithHostNet

nodeSelector:

#kubernetes.io/os: linux

vanje/ingress-controller-ready: "true"

tolerations:

- key: "node-role.kubernetes.io/master"

operator: "Equal"

value: ""

effect: "NoSchedule"

containers:

- name: nginx-ingress-controller

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:master

args:

- /nginx-ingress-controller

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

securityContext:

allowPrivilegeEscalation: true

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

# www-data -> 101

runAsUser: 101

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

---

apiVersion: v1

kind: LimitRange

metadata:

name: ingress-nginx

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

limits:

- default:

min:

memory: 90Mi

cpu: 100m

type: Container

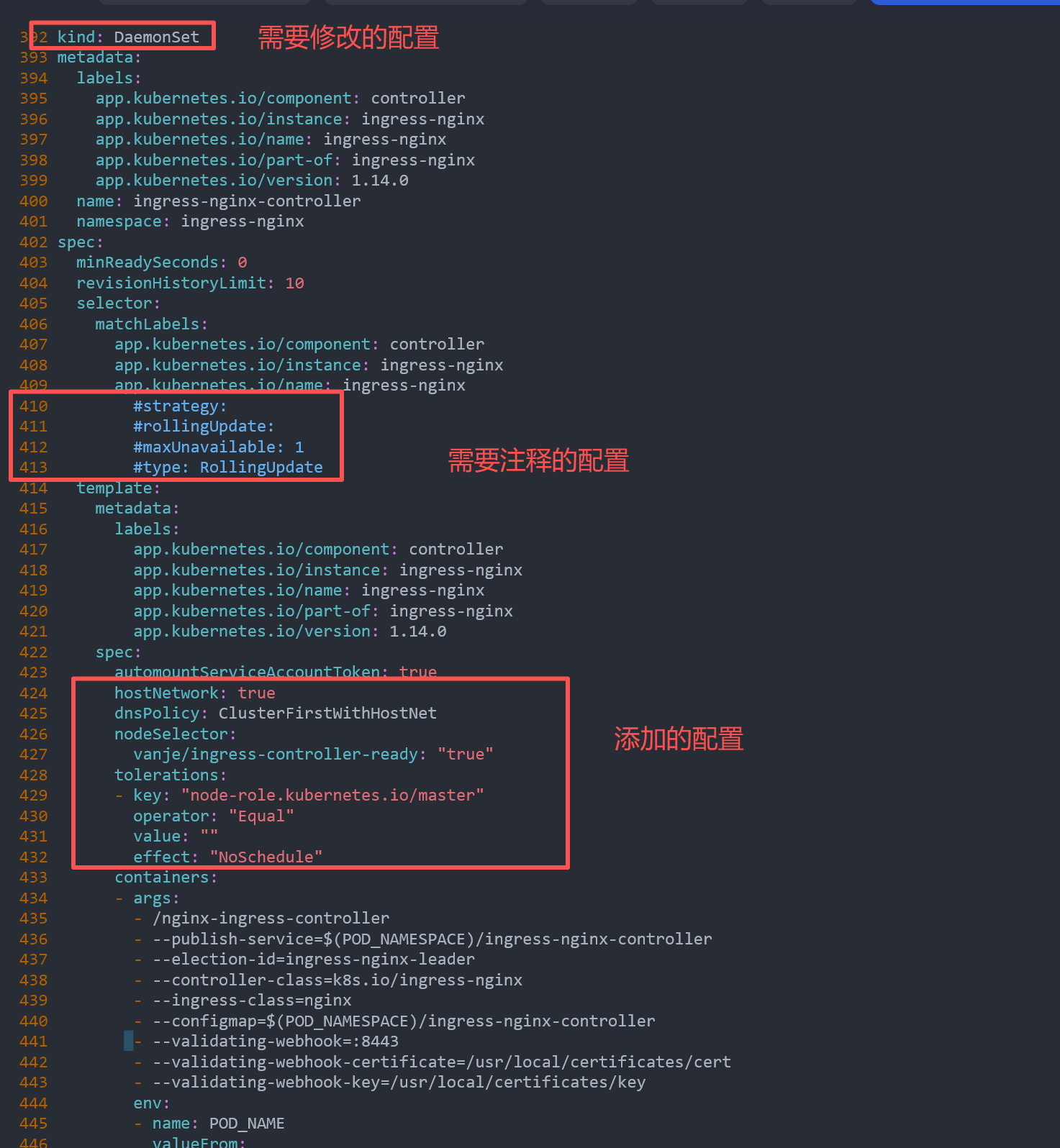

修改参数如下

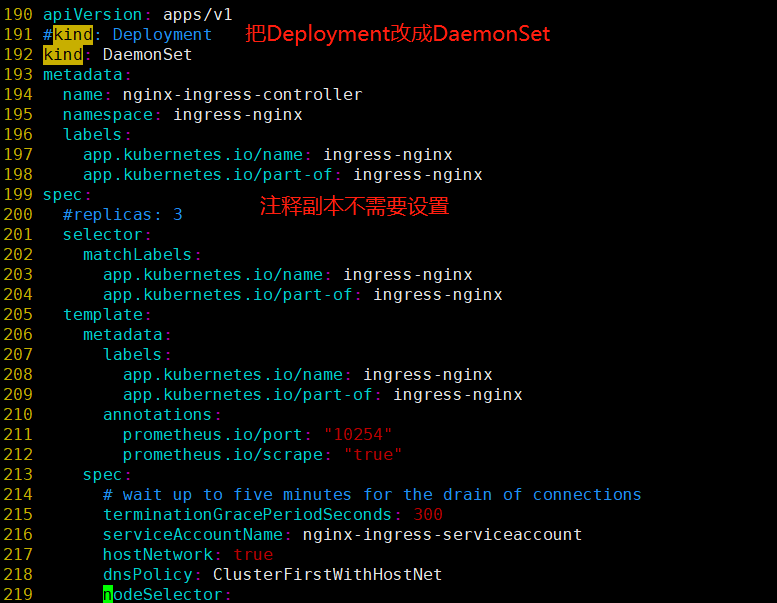

- kind: Deployment #修改为DaemonSet

- replicas: 1 #注销此行,DaemonSet不需要此参数

- hostNetwork: true #添加该字段让docker使用物理机网络,在物理机暴露服务端口(80),注意物理机80端口提前不能被占用

- dnsPolicy: ClusterFirstWithHostNet #使用hostNetwork后容器会使用物理机网络包括DNS,会无法解析内部service,使用此参数让容器使用K8S的DNS

- nodeSelector:vanje/ingress-controller-ready: "true" #添加节点标签

- tolerations: 添加对指定节点容忍度

2025-11-26补充开始

K8S1.33版本部署ingress

部署参考https://kubernetes.github.io/ingress-nginx/deploy/#quick-start

下载后修改配置(注意空格)

hostNetwork: true

dnsPolicy: ClusterFirstWithHostNet

nodeSelector:

vanje/ingress-controller-ready: "true"

tolerations:

- key: "node-role.kubernetes.io/master"

operator: "Equal"

value: ""

effect: "NoSchedule"

需要注释以下配置

2025-11-26补充结束

给节点打标签,这里在两台node上部署(最好不要使用master节点部署,应该部署在独立的节点上),我们采用DaemonSet的方式,我们需要对两个node节点打标签及容忍度

#给两个node节点打标签 kubectl label nodes k8s-node1 vanje/ingress-controller-ready=true kubectl label nodes k8s-node2 vanje/ingress-controller-ready=true #节点打污点 #此污点名称需要与yaml文件中pod的容忍污点对应 kubectl taint nodes k8s-node1 node-role.kubernetes.io/master=:NoSchedule kubectl taint nodes k8s-node2 node-role.kubernetes.io/master=:NoSchedule

PS:给node打标签是保证Pod分配到该node节点,给node打污点是防止其他Pod被分配到该node节点只有在Pod里配置了tolerations参数的才会被分配到该node节点。

此例中配置保证了Deployment上Pod之被分配到对应的node节点并且其他Pod因为污点配置而无法分配到该node节点,此类配置适合做外网的接入的node

如果标签错误需要修改或者删除标签需要重新配置并且加参数--overwrite,例如需要删除k8s-node1的标签,把参数改成false

kubectl label nodes k8s-node2 vanje/ingress-controller-ready=false --overwrite

取消污点设置使用以下命令,如不取消可能导致启动pod一直处于Pending状态

kubectl taint nodes --all node-role.kubernetes.io/master-

应用,如果是修改则需要删除之前的在应用,否则无法生效

kubectl delete -f ingress-controller.yaml kubectl apply -f ingress-controller.yaml

查看

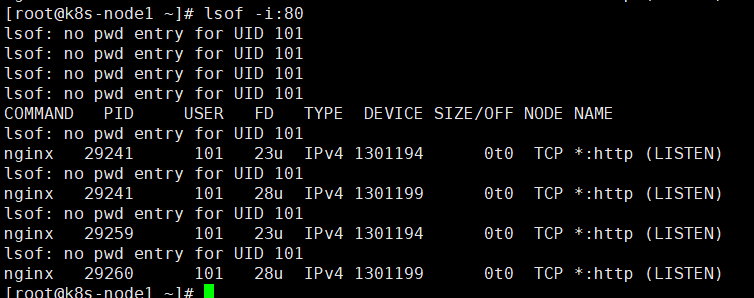

kubectl get pod -n ingress-nginx kubectl get svc -n ingress-nginx kubectl get ingress lsof -i:80

查看启用标签的是那些主机

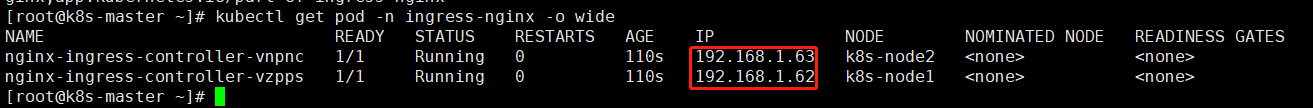

kubectl get pod -n ingress-nginx -o wide

可以看到是启用标签的两台node

在两台node查看80端口是否启动

配置主机host指向为两台node其中之一即可

使用配置文件ingress.yaml里配置的域名访问,不需要加端口

example.ctnrs.com

PS:因为我们创建ingress-controller采用的是hostnetwork模式,所以无需创建ingress-svc服务来把端口映射到节点主机上,即无需应用配置文件

service-nodeport.yaml

浙公网安备 33010602011771号

浙公网安备 33010602011771号