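[K8s] StorageClass: Network Persistent Storage

While building a TDengine database cluster on K8s, I ran into a problem where I could not get into the containers. After a lot of digging, it turned out that no PV resources had been provisioned for TDengine. This post records how to create them.

Note: the deployment steps are reposted from https://z.itpub.net/article/detail/EF99DFB338D0F3A5B4DF948E4C1F1346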

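I. Install NFS

1. Install the NFS server packages:

yum install -y nfs-utils rpcbind

Create the NFS shared directory:

mkdir -p /data/k8snfs

Edit the NFS configuration file:

vim /etc/exports

Add the following line (the path must match the shared directory created above):

/data/k8snfs *(rw,sync,insecure,no_subtree_check,no_root_squash)

Restart the services:

service rpcbind restart
service nfs restart

Check that the server has loaded the /etc/exports configuration:

showmount -e localhost

Note: on a cloud server you need to open the relevant ports, otherwise clients cannot connect. Run rpcinfo -p to see which ports need to be opened; note that both TCP and UDP entries are listed.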

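2. Install the NFS client

Note: every Node that needs to use NFS must have the NFS client installed.

Install the client:

yum install -y nfs-utils

Check that the remote NFS server is reachable:

sudo showmount -e {NFS server IP address}

If you see clnt_create: RPC: Port mapper failure - Timed out on a cloud server, the most likely cause is that the ports have not been opened.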
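Once the cluster is available, the same connectivity check can also be done from inside Kubernetes. The manifest below is a minimal sketch, not part of the original steps: the Pod name `nfs-mount-check`, the `busybox` image, and the mount path are illustrative assumptions, and the server value is a placeholder to replace with your own NFS server address.

```yaml
# Hypothetical one-off Pod that mounts the NFS export directly.
# If the mount fails, the Pod stays in ContainerCreating and
# `kubectl describe pod nfs-mount-check` shows the mount error.
apiVersion: v1
kind: Pod
metadata:
  name: nfs-mount-check          # assumed name, for illustration only
spec:
  restartPolicy: Never
  containers:
    - name: check
      image: busybox             # assumption: any small image with a shell works
      command: ["sh", "-c", "touch /mnt/nfs/probe && ls -l /mnt/nfs"]
      volumeMounts:
        - name: nfs
          mountPath: /mnt/nfs
  volumes:
    - name: nfs
      nfs:
        server: "<NFS-server-IP>"  # placeholder: replace with the NFS server address
        path: /data/k8snfs         # the shared directory created on the server
```

If the Pod completes, the node it ran on can reach the export; delete the Pod afterwards.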

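II. Deploy the StorageClass

A StorageClass allocates PVs dynamically through a provisioner. Kubernetes does not ship with a built-in provisioner for NFS, so an NFS provisioner has to be installed separately.

1. Set up the RBAC permissions for the NFS provisioner

On a node, create the file nfs-client-provisioner-authority.yaml with the content below, then run kubectl apply -f nfs-client-provisioner-authority.yaml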

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

2. Install the NFS provisioner: create nfs-client-provisioner.yaml with the content below and run kubectl apply -f nfs-client-provisioner.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # provisioner name (the default value)
              value: fuseim.pri/ifs
            - name: NFS_SERVER # NFS server address
              value: xx.xx.236.113
            - name: NFS_PATH # NFS shared directory
              value: /data/k8snfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: xx.xx.236.113 # NFS server address
            path: /data/k8snfs # NFS shared directory

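3. Create the StorageClass: create nfs-storage-class.yml with the content below and run kubectl apply -f nfs-storage-class.yml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage # StorageClass name
# Provisioner name; must match env.PROVISIONER_NAME.value in nfs-client-provisioner.yaml
provisioner: fuseim.pri/ifs
# Allow a PVC to be expanded after it has been created
allowVolumeExpansion: true
parameters:
  # What happens to the backing NFS directory when the PVC is deleted:
  # "true" keeps the data by renaming the directory with an "archived-" prefix,
  # "false" deletes the directory.
  archiveOnDelete: "false"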

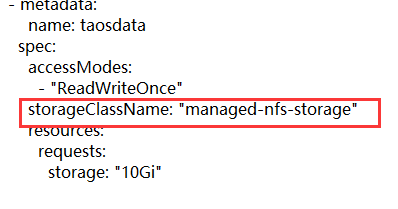
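Before moving on to TDengine, it can help to confirm that the StorageClass really provisions volumes. The claim below is a minimal sketch for that purpose only: the name `test-claim`, the access mode, and the 1Mi size are illustrative assumptions, while `managed-nfs-storage` is the StorageClass name defined above.

```yaml
# Hypothetical throwaway PVC used only to verify dynamic provisioning.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim                       # assumed name, for illustration only
spec:
  storageClassName: managed-nfs-storage  # the StorageClass created in step 3
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi                       # arbitrary small size for the test
```

After applying it, kubectl get pvc test-claim should report the claim as Bound if the provisioner is working, and a new subdirectory should appear under the NFS shared directory. If the claim stays Pending, the logs of the nfs-client-provisioner Pod are the first place to look. Delete the claim once the check is done.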

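III. Edit the tdengine.yaml file for TDengine and change storageClassName to the name of the StorageClass created in the previous step.

And then it is done...

Of course not. Rather embarrassingly, access from inside the network worked fine at this point, but access from the external network to the in-cluster service still had to be configured.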
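For orientation, the field in question usually sits in the StatefulSet's volumeClaimTemplates. The fragment below is a sketch, not a copy of the actual tdengine.yaml: the template name `taosdata`, the access mode, and the 5Gi size are assumptions, and only the storageClassName value comes from this post.

```yaml
# Fragment of a StatefulSet spec (assumed layout; check your own tdengine.yaml).
volumeClaimTemplates:
  - metadata:
      name: taosdata                           # assumed volume name
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: managed-nfs-storage    # must match the StorageClass name
      resources:
        requests:
          storage: 5Gi                         # assumed size
```

With this in place, each TDengine Pod gets its own PVC, and the NFS provisioner creates the matching PV on demand.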

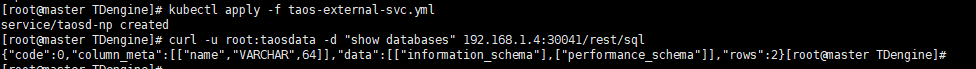

Create the file taos-external-svc.yml with the content below and apply it with kubectl apply -f taos-external-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: taosd-np
  labels:
    app: tdengine
  annotations:
    service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
spec:
  type: NodePort
  selector:
    app: tdengine
  ports:
    - name: tcp6030
      protocol: "TCP"
      port: 6030
      targetPort: 6030
      nodePort: 30030
    - name: tcp6035
      protocol: "TCP"
      port: 6035
      targetPort: 6035
      nodePort: 30035
    - name: tcp6041
      protocol: "TCP"
      port: 6041
      targetPort: 6041
      nodePort: 30041

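At first I followed the blog and used 36041 as the external port. That did not work at all and produced an error, because the default NodePort range is 30000-32767. Changing it to 30041 fixed the problem.

This time it really did succeed.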
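If a port such as 36041 is genuinely required, the allowed range can be widened through the kube-apiserver flag --service-node-port-range. The fragment below is a sketch under the assumption that the cluster was set up with kubeadm and uses the v1beta3 configuration format; the range value is only an example, and other installers configure this flag differently.

```yaml
# Assumed kubeadm ClusterConfiguration fragment (v1beta3 format).
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
apiServer:
  extraArgs:
    service-node-port-range: "30000-36999"   # example range that includes 36041
```

Staying inside the default range, as done here with 30041, avoids touching the control plane at all and is the simpler choice.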

