Ceph installation

cat << EOF >> /etc/hosts

10.0.0.201 ceph-node1

10.0.0.202 ceph-node2

10.0.0.203 ceph-node3

EOF
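Appending with `cat << EOF >>` adds the same lines again every time it is re-run. The following is a minimal sketch of an idempotent alternative. `add_host` is a hypothetical helper name, and the target file defaults to `/etc/hosts`.

```shell
#!/usr/bin/env bash
# add_host is a hypothetical helper: append "IP NAME" to a hosts file
# only if NAME is not already mapped there, so re-running is harmless.
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Match NAME as a whole field (preceded by whitespace, followed by
  # whitespace or end of line) so ceph-node1 does not match ceph-node10
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    echo "${ip} ${name}" >> "$file"
  fi
}

# Usage on each node (addresses taken from the hosts block above):
# add_host 10.0.0.201 ceph-node1
# add_host 10.0.0.202 ceph-node2
# add_host 10.0.0.203 ceph-node3
```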

Run on ceph-node1:

ssh-keygen

ssh-copy-id -i /root/.ssh/id_rsa.pub ceph-node2

ssh-copy-id -i /root/.ssh/id_rsa.pub ceph-node3
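The three commands above can be bundled so that they are safe to re-run. Below is a sketch. `distribute_keys` is a hypothetical helper, and it assumes root's key lives at `/root/.ssh/id_rsa` as in the commands above.

```shell
#!/usr/bin/env bash
# distribute_keys (hypothetical): create a passphrase-less RSA key only if
# none exists, push it to each node given as an argument, then confirm
# that key-based login works.
distribute_keys() {
  local key=/root/.ssh/id_rsa node
  [ -f "$key" ] || ssh-keygen -t rsa -N '' -f "$key"
  for node in "$@"; do
    ssh-copy-id -i "${key}.pub" "$node"
    # BatchMode=yes makes ssh fail instead of prompting for a password
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" hostname
  done
}

# Usage (as root on ceph-node1):
# distribute_keys ceph-node2 ceph-node3
```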

yum install -y ntp

vi /etc/ntp.conf

Adjust the `server` lines if needed, start ntpd, and check that it has selected a peer:

[root@ceph-node1 ~]# ntpq -pn

remote refid st t when poll reach delay offset jitter

+119.28.206.193 100.122.36.196 2 u 12 64 1 17.939 0.073 0.085

*178.16.128.13 193.67.79.202 2 u 8 64 1 256.770 -6.190 0.483

-78.46.102.180 85.10.240.253 3 u 10 64 1 292.383 27.391 0.090

+94.130.49.186 9.193.143.114 3 u 8 64 1 262.992 25.591 0.182
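In `ntpq -pn` output, the first character of each peer line is a tally code. `*` marks the peer ntpd is currently synchronizing to, `+` marks candidates, and `-` marks discarded outliers. Ceph monitors raise a clock-skew health warning when their clocks drift apart, so it is worth checking for a `*` on every node. The following sketch does that. `has_sys_peer` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# has_sys_peer (hypothetical): read `ntpq -pn` output on stdin and succeed
# only if some line starts with '*', i.e. ntpd has selected a system peer.
has_sys_peer() {
  grep -q '^\*'
}

# Usage on every node, after editing /etc/ntp.conf:
# systemctl start ntpd && systemctl enable ntpd
# ntpq -pn | has_sys_peer && echo "clock synchronized" || echo "not synced yet"
```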

Configure the yum repositories (on all machines)

https://developer.aliyun.com/mirror/centos?spm=a2c6h.13651102.0.0.3e221b11cT0i4R

[root@ceph-node1 ~]# cd /etc/yum.repos.d/

[root@ceph-node1 /etc/yum.repos.d]# rm -rf *

EPEL repo

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

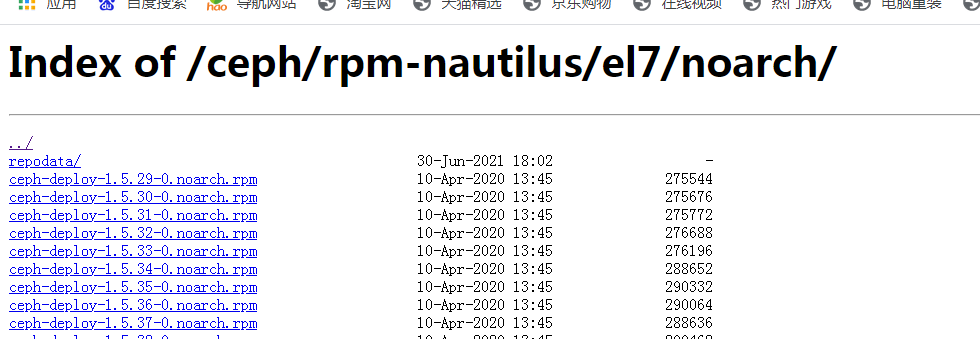
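The `rm -rf *` above deletes every repo file, including the stock CentOS base repo. The later `yum list` output still shows packages from `base`, so it was presumably re-added from the Aliyun page linked above. Below is a less destructive sketch that moves the old files aside instead. `backup_repos` and `fetch_aliyun_repos` are hypothetical helpers. The `Centos-7.repo` URL is an assumption based on the Aliyun mirror's CentOS 7 instructions.

```shell
#!/usr/bin/env bash
# backup_repos (hypothetical): move existing repo files into a bak/
# subdirectory instead of deleting them, so they can be restored later.
backup_repos() {
  local dir="${1:-/etc/yum.repos.d}"
  mkdir -p "$dir/bak"
  # Only top-level *.repo files are moved; bak/ itself is left alone
  find "$dir" -maxdepth 1 -type f -name '*.repo' -exec mv -t "$dir/bak" {} +
}

# fetch_aliyun_repos (hypothetical): re-add CentOS base and EPEL from Aliyun.
# The Centos-7.repo URL is assumed from the Aliyun mirror page.
fetch_aliyun_repos() {
  curl -fsSo /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
  curl -fsSo /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
}

# Usage: backup_repos && fetch_aliyun_repos
```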

Ceph repo (Nautilus release, Aliyun mirror)

https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/

Configure the Ceph repo

vi ceph.repo

[ceph]

name=ceph

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/

enabled=1

gpgcheck=0

[ceph-noarch]

name=cephnoarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/

enabled=1

gpgcheck=0
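The same repo file can be written without opening an editor, which is convenient when repeating this on every node. The sketch below uses a hypothetical helper, `write_ceph_repo`. It maps the `[ceph]` section to `x86_64/` (binary packages such as `ceph-mon.x86_64`) and `[ceph-noarch]` to `noarch/` (arch-independent packages such as `ceph-deploy.noarch`).

```shell
#!/usr/bin/env bash
# write_ceph_repo (hypothetical): write the Nautilus repo definition for
# the Aliyun mirror; the target file defaults to /etc/yum.repos.d/ceph.repo.
write_ceph_repo() {
  local file="${1:-/etc/yum.repos.d/ceph.repo}"
  # Quoted 'EOF' prevents any shell expansion inside the here-document
  cat > "$file" << 'EOF'
[ceph]
name=ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
enabled=1
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
enabled=1
gpgcheck=0
EOF
}

# Usage: write_ceph_repo && yum makecache
```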

Rebuild the yum metadata cache

yum makecache

Install ceph-deploy on node1

yum install -y python-setuptools ceph-deploy

[root@ceph-node1 ~]# ceph-deploy -v

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]

[--overwrite-conf] [--ceph-conf CEPH_CONF]

COMMAND ...

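ceph-deploy: error: too few arguments

(`-v` means verbose, not version, so without a subcommand it only prints this usage error. `ceph-deploy --version` prints the version number.)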

[root@ceph-node1 ~]# ceph-deploy

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]

[--overwrite-conf] [--ceph-conf CEPH_CONF]

COMMAND ...

Easy Ceph deployment

    -^-

   /   \

   |O o|  ceph-deploy v2.0.1

   ).-.(

  '/|||\`

  | '|` |

    '|`

Installation complete:

[root@ceph-node1 ~]# yum list | grep deploy

ceph-deploy.noarch 2.0.1-0 @ceph

fusioninventory-agent-task-deploy.noarch 2.3.21-3.el7 epel

jetty-deploy.noarch 9.0.3-8.el7 base

maven-deploy-plugin.noarch 2.7-11.el7 base

maven-deploy-plugin-javadoc.noarch 2.7-11.el7 base

python-paste-deploy.noarch 1.5.0-10.el7 epel

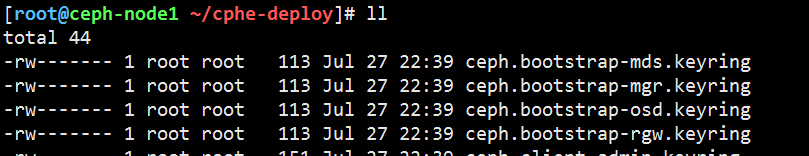

[root@ceph-node1 ~]# mkdir cphe-deploy

[root@ceph-node1 ~]# cd cphe-deploy/

Create the cluster

ceph-deploy new --public-network 10.0.0.0/24 --cluster-network 10.0.0.0/24 ceph-node1  # ceph-node1 becomes the initial monitor

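--public-network: the front-side network that clients use to reach the cluster

--cluster-network: the back-side network for internal traffic between OSDs (replication, recovery, heartbeats)

Here both are the same 10.0.0.0/24 subnet, so client traffic and internal traffic share one network.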
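`ceph-deploy new` writes a `ceph.conf` into the current deployment directory. Before continuing, you can confirm that both network settings made it into that file. The sketch below is one way to do it. `show_networks` is a hypothetical helper. It matches both `public_network` and `public network`, because Ceph treats spaces and underscores in option names alike.

```shell
#!/usr/bin/env bash
# show_networks (hypothetical): print the public/cluster network lines
# from a ceph.conf (defaults to ./ceph.conf in the deployment directory).
show_networks() {
  grep -E '^[[:space:]]*(public|cluster)[ _]network[[:space:]]*=' "${1:-ceph.conf}"
}

# Usage, inside the deployment directory: show_networks ceph.conf
```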

Install the packages manually

yum list | grep eph

calceph.x86_64

ceph-mgr.x86_64

ceph-mds.x86_64

ceph-mon.x86_64

ceph-osd.x86_64

ceph-radosgw.x86_64

On all nodes:

yum install -y ceph ceph-mon ceph-mgr ceph-mds ceph-radosgw
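ceph-deploy also has an `install` subcommand, but these notes install the packages by hand. With the passwordless SSH set up earlier, the install can be driven from ceph-node1 in one loop. Below is a sketch. `run_on_nodes` is a hypothetical helper, and `SSH_BIN` is a hypothetical override hook (default `ssh`) so the loop can be dry-run with `SSH_BIN=echo`.

```shell
#!/usr/bin/env bash
# run_on_nodes (hypothetical): run one command on several hosts over SSH.
# Returns non-zero if the command failed on any host, but still visits all.
run_on_nodes() {
  local cmd="$1" node rc=0
  shift
  for node in "$@"; do
    echo "== ${node}"
    # SSH_BIN is a hypothetical hook; set SSH_BIN=echo for a dry run
    "${SSH_BIN:-ssh}" -o BatchMode=yes "$node" "$cmd" || rc=1
  done
  return "$rc"
}

# Usage from ceph-node1:
# run_on_nodes 'yum install -y ceph ceph-mon ceph-mgr ceph-mds ceph-radosgw' \
#   ceph-node1 ceph-node2 ceph-node3
```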

On node1 (inside the deployment directory), create the initial monitor and gather the keys:

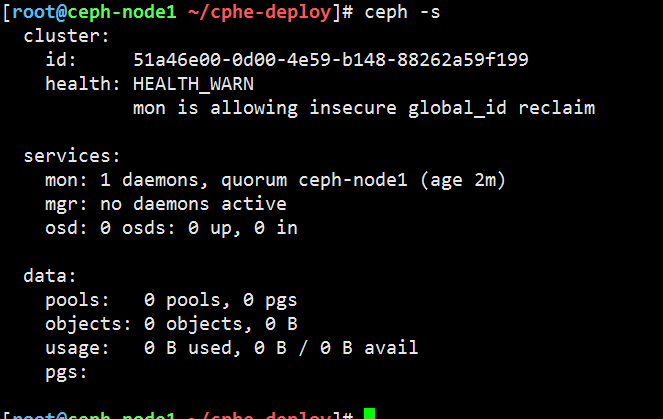

ceph-deploy mon create-initial

Push ceph.conf and the admin keyring to all nodes

ceph-deploy admin ceph-node1 ceph-node2 ceph-node3

Deploy a manager (mgr) daemon on node1

ceph-deploy mgr create ceph-node1

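Check the disks. The output below was captured after the OSD had been created, which is why sdb already holds a ceph LVM volume. Before `osd create`, sdb should be an empty disk.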

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 40G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 39G 0 part

├─centos-root 253:0 0 35.2G 0 lvm /

└─centos-swap 253:1 0 3.8G 0 lvm [SWAP]

sdb 8:16 0 20G 0 disk

└─ceph--01ae2e28--023d--422d--8357--725e2d0da5ba-osd--block--010b60e0--97e1--40f7--95aa--4fb97c4f274e 253:2 0 20G 0 lvm

sr0 11:0 1 4.3G 0 rom
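`ceph-deploy osd create --data` expects an unused device. The following sketch checks that `/dev/sdb` has no partitions or LVM volumes under it before creating the OSD. `is_bare_disk` is a hypothetical helper that reads `lsblk` output on stdin. If leftovers are found, `ceph-deploy disk zap ceph-node1 /dev/sdb` (ceph-deploy 2.x syntax) should wipe the device, destroying all data on it.

```shell
#!/usr/bin/env bash
# is_bare_disk (hypothetical): read `lsblk -nr -o NAME,TYPE DEVICE` output
# on stdin and succeed only if it lists the device itself and nothing
# beneath it (no partitions, no LVM volumes).
is_bare_disk() {
  [ "$(grep -c .)" -eq 1 ]
}

# Usage on each OSD node:
# lsblk -nr -o NAME,TYPE /dev/sdb | is_bare_disk && echo "/dev/sdb is unused"
```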

Create the OSDs (one per node, on /dev/sdb)

$ ceph-deploy osd create ceph-node1 --data /dev/sdb

$ ceph-deploy osd create ceph-node2 --data /dev/sdb

$ ceph-deploy osd create ceph-node3 --data /dev/sdb

[root@ceph-node1 ~/cphe-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.05846 root default

-3 0.01949 host ceph-node1

0 hdd 0.01949 osd.0 up 1.00000 1.00000

-5 0.01949 host ceph-node2

1 hdd 0.01949 osd.1 up 1.00000 1.00000

-7 0.01949 host ceph-node3

2 hdd 0.01949 osd.2 up 1.00000 1.00000
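In the tree above, host and root buckets have negative IDs and each OSD row has a numeric ID and a STATUS column. The sketch below counts how many OSDs report `up`. `count_up_osds` is a hypothetical helper. It assumes every OSD has a device class (`hdd` here), because an empty CLASS column would shift the fields.

```shell
#!/usr/bin/env bash
# count_up_osds (hypothetical): read plain `ceph osd tree` output on stdin
# and print the number of OSD rows (non-negative numeric ID in column 1)
# whose STATUS (column 5, after ID CLASS WEIGHT NAME) is "up".
count_up_osds() {
  awk '$1 ~ /^[0-9]+$/ && $5 == "up" { n++ } END { print n + 0 }'
}

# Usage: ceph osd tree | count_up_osds   (this cluster should report 3)
```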

Add a monitor node

ceph-deploy mon add ceph-node2 --address 10.0.0.202

Verify that ceph-node2 joined the quorum:

ceph quorum_status --format json-pretty

ceph mon stat

ceph mon dump
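A monitor quorum requires a strict majority of monitors. With the two monitors configured here, losing either one stops the quorum. An odd count such as three tolerates one failure. That would mean, for example, also running `ceph-deploy mon add ceph-node3 --address 10.0.0.203`, which is a suggestion and not part of the original steps. The sketch below counts the monitors currently in quorum without requiring `jq`. `quorum_size` is a hypothetical helper. It reads the `quorum_names` array from `ceph quorum_status` JSON, in either compact or pretty form.

```shell
#!/usr/bin/env bash
# quorum_size (hypothetical): read `ceph quorum_status --format json` (or
# json-pretty) on stdin and print how many names are in "quorum_names".
quorum_size() {
  tr -d '\n' \
    | grep -o '"quorum_names": *\[[^]]*\]' \
    | sed 's/^[^[]*\[//' \
    | grep -o '"[^"]*"' \
    | wc -l
}

# Usage: ceph quorum_status --format json | quorum_size
```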

Add more mgr daemons (one mgr is active, the others stand by)

ceph-deploy mgr create ceph-node2 ceph-node3

浙公网安备 33010602011771号
