Quickly Setting Up a Kafka Cluster with docker-compose

I. Install the docker-compose tool

docker-compose requires Docker, which you need to install yourself beforehand (not covered here).

# Upgrade pip

pip3 install --upgrade pip

# Install a specific docker-compose version

pip3 install docker-compose==1.22

# Verify the installation: if a version string is printed, docker-compose was installed successfully

docker-compose -v
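If you want to script this check, here is a minimal Python sketch. Python is already needed for the pip install above. It assumes the 1.x output format `docker-compose version X.Y.Z, build <hash>`, and it also accepts the v2 form `Docker Compose version vX.Y.Z`. The function names are hypothetical helpers and are not part of docker-compose.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Assumed output shapes: "docker-compose version 1.22.0, build <hash>" (1.x)
# or "Docker Compose version v2.20.2" (v2 plugin).
_VERSION_RE = re.compile(r"version\s+v?(\d+)\.(\d+)(?:\.(\d+))?")


def parse_compose_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from `docker-compose -v` output, or None."""
    match = _VERSION_RE.search(output)
    if match is None:
        return None
    patch = match.group(3) or "0"  # a missing patch number counts as 0
    return int(match.group(1)), int(match.group(2)), int(patch)


def installed_compose_version() -> Optional[Tuple[int, int, int]]:
    """Run `docker-compose -v` if it is on PATH; return None when it is missing."""
    if shutil.which("docker-compose") is None:
        return None
    result = subprocess.run(
        ["docker-compose", "-v"], capture_output=True, text=True, check=False
    )
    return parse_compose_version(result.stdout + result.stderr)


if __name__ == "__main__":
    version = installed_compose_version()
    print("docker-compose not found" if version is None else f"found {version}")
```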

II. Set up the ZooKeeper cluster

1. Pull the ZooKeeper image

docker pull zookeeper

For convenience, retag the pulled image as zookeeper. If it is already named zookeeper, skip this step.

docker tag <name-of-pulled-image> zookeeper

2. Create the required directories

mkdir -p /data/docker-compose/zookeeper

mkdir -p /data/docker-data/zookeeper

3. Enter the directory and create docker-compose.yml

cd /data/docker-compose/zookeeper

vi docker-compose.yml

Contents of docker-compose.yml:

version: '3.6'
services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    container_name: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - /data/docker-data/zookeeper/zoo1/data:/data
      - /data/docker-data/zookeeper/zoo1/datalog:/datalog
  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    container_name: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - /data/docker-data/zookeeper/zoo2/data:/data
      - /data/docker-data/zookeeper/zoo2/datalog:/datalog
  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    container_name: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - /data/docker-data/zookeeper/zoo3/data:/data
      - /data/docker-data/zookeeper/zoo3/datalog:/datalog
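Each ZOO_SERVERS entry has the form server.<id>=<host>:<peer port>:<leader-election port>;<client port>. ZOO_MY_ID tells each container which entry is its own.

The Python sketch below uses hypothetical helper names. It builds the same string for any list of hosts. It also shows the majority rule behind using three nodes: a ZooKeeper ensemble stays available only while a strict majority of its servers is up.

```python
from typing import Sequence


def zoo_servers(hosts: Sequence[str], peer_port: int = 2888,
                election_port: int = 3888, client_port: int = 2181) -> str:
    """Build a ZOO_SERVERS value, numbering servers from 1 in list order.

    Each container's ZOO_MY_ID must equal its position here (zoo1 -> 1, ...).
    """
    return " ".join(
        f"server.{server_id}={host}:{peer_port}:{election_port};{client_port}"
        for server_id, host in enumerate(hosts, start=1)
    )


def tolerated_failures(ensemble_size: int) -> int:
    """Number of servers that may fail while a strict majority (quorum) remains."""
    return (ensemble_size - 1) // 2


if __name__ == "__main__":
    print(zoo_servers(["zoo1", "zoo2", "zoo3"]))
    for size in (1, 2, 3, 4, 5):
        print(size, "nodes tolerate", tolerated_failures(size), "failure(s)")
```

Note that four nodes tolerate no more failures than three. This is why odd ensemble sizes are the usual choice.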

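Note: all my containers run on the same host machine, so I also added entries to the host's /etc/hosts (vi /etc/hosts). These entries map zoo1, zoo2 and zoo3 to the host's IP address.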

4. Start the services defined in docker-compose.yml

cd /data/docker-compose/zookeeper

docker-compose up -d

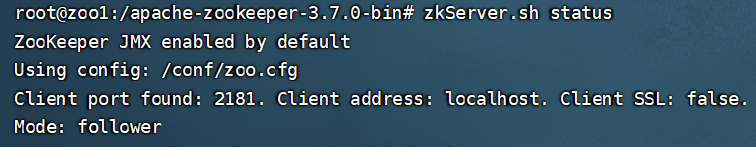

5. Verify

docker exec -it zoo1 bash

zkServer.sh status

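If the command returns status information that includes a Mode line, the deployment succeeded.

Mode is either follower or leader. In a healthy three-node ensemble, exactly one node is the leader.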
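zkServer.sh status has to be run inside each container. As an alternative that runs from the host, the sketch below sends ZooKeeper's `srvr` four-letter command to the three published ports (2181 to 2183) and checks that there is exactly one leader.

This assumes `srvr` is allowed by 4lw.commands.whitelist. As far as I know, the official zookeeper image allows it by default through ZOO_4LW_COMMANDS_WHITELIST. The helper names are hypothetical.

```python
import socket
from typing import Dict, Optional


def parse_mode(srvr_output: str) -> Optional[str]:
    """Return the value of the "Mode:" line (leader, follower, standalone, ...)."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def query_srvr(host: str, port: int, timeout: float = 3.0) -> str:
    """Send the `srvr` command and read the reply until the server closes the socket."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def ensemble_is_healthy(modes: Dict[str, Optional[str]]) -> bool:
    """Exactly one leader, and every other node reports follower."""
    values = list(modes.values())
    return values.count("leader") == 1 and values.count("follower") == len(values) - 1


if __name__ == "__main__":
    # Host ports published in the ZooKeeper docker-compose.yml above.
    ports = {"zoo1": 2181, "zoo2": 2182, "zoo3": 2183}
    modes: Dict[str, Optional[str]] = {}
    for name, port in ports.items():
        try:
            modes[name] = parse_mode(query_srvr("127.0.0.1", port))
        except OSError as exc:  # refused, timed out, etc.
            modes[name] = None
            print(f"{name}: {exc}")
    print(modes, "healthy" if ensemble_is_healthy(modes) else "not healthy")
```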

III. Set up the Kafka cluster

1. Pull the Kafka image

docker pull wurstmeister/kafka

docker tag docker.io/wurstmeister/kafka kafka

docker rmi docker.io/wurstmeister/kafka

2. Pull the image for kafka-manager, a web UI for managing Kafka

docker pull sheepkiller/kafka-manager

docker tag docker.io/sheepkiller/kafka-manager kafka-manager

docker rmi docker.io/sheepkiller/kafka-manager

3. Create the required directories

mkdir -p /data/docker-compose/kafka

mkdir -p /data/docker-data/kafka

4. Create a bridge network so that Kafka and ZooKeeper share the same network segment

docker network create --driver bridge zookeeper_kafka_net

5. Enter the directory and create docker-compose.yml

cd /data/docker-compose/kafka

vi docker-compose.yml

6. Contents of docker-compose.yml

version: '3'
services:
  kafka1:
    image: kafka
    restart: always
    container_name: kafka1
    hostname: kafka1
    ports:
      - 9091:9092
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ADVERTISED_HOST_NAME: kafka1
      KAFKA_ADVERTISED_PORT: 9091
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://<host-IP>:9091
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka1/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka1/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka2:
    image: kafka
    restart: always
    container_name: kafka2
    hostname: kafka2
    ports:
      - 9092:9092
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ADVERTISED_HOST_NAME: kafka2
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://<host-IP>:9092
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka2/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka2/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka3:
    image: kafka
    restart: always
    container_name: kafka3
    hostname: kafka3
    ports:
      - 9093:9092
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ADVERTISED_HOST_NAME: kafka3
      KAFKA_ADVERTISED_PORT: 9093
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://<host-IP>:9093
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - /data/docker-data/kafka3/docker.sock:/var/run/docker.sock
      - /data/docker-data/kafka3/data:/kafka
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka-manager:
    image: kafka-manager
    restart: always
    container_name: kafka-manager
    hostname: kafka-manager
    ports:
      - 9010:9000
    links:
      - kafka1
      - kafka2
      - kafka3
    external_links:
      - zoo1
      - zoo2
      - zoo3
    environment:
      ZK_HOSTS: zoo1:2181,zoo2:2181,zoo3:2181
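The three broker definitions differ only in broker ID and published host port. The rule that matters is that the port in KAFKA_ADVERTISED_LISTENERS must be the host port published for that broker (9091, 9092, 9093), not the container's internal 9092. External clients connect to whatever address the broker advertises.

The Python sketch below reproduces that mapping. The helper name, the base port 9090, and the example IP 192.168.1.10 are assumptions for illustration.

```python
from typing import Dict, Iterable


def broker_settings(broker_ids: Iterable[int], host_ip: str,
                    base_port: int = 9090,
                    container_port: int = 9092) -> Dict[str, Dict[str, str]]:
    """Per-broker port mapping and advertised address, mirroring the file above.

    Broker N is published on host port base_port + N and advertises that same
    host port, because external clients reach it via <host_ip>:<host port>.
    """
    settings: Dict[str, Dict[str, str]] = {}
    for broker_id in broker_ids:
        host_port = base_port + broker_id
        settings[f"kafka{broker_id}"] = {
            "ports": f"{host_port}:{container_port}",
            "KAFKA_BROKER_ID": str(broker_id),
            "KAFKA_ADVERTISED_PORT": str(host_port),
            "KAFKA_ADVERTISED_LISTENERS": f"PLAINTEXT://{host_ip}:{host_port}",
        }
    return settings


if __name__ == "__main__":
    # 192.168.1.10 is a placeholder; substitute your own host IP.
    for name, env in broker_settings([1, 2, 3], "192.168.1.10").items():
        print(name, env)
```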

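Note: links references services defined in the same docker-compose.yml, while external_links references containers created outside this docker-compose project (here, the ZooKeeper containers).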

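In KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://<host-IP>:9091, the // is not a comment marker. It is part of the listener address and must be kept, otherwise the container will not start.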
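The // matters because a listener value is a URL-like string of the form PROTOCOL://host:port, and Kafka accepts a comma-separated list of them.

The small Python sketch below parses such values and rejects any that lack ://. The function names are hypothetical, and the sketch only checks the shape of the string, not everything Kafka itself validates.

```python
from typing import List, NamedTuple


class Listener(NamedTuple):
    protocol: str
    host: str
    port: int


def parse_listener(value: str) -> Listener:
    """Parse one listener such as "PLAINTEXT://192.168.1.10:9091"."""
    value = value.strip()
    protocol, sep, address = value.partition("://")
    if not sep or not protocol:
        # Without "//" the value is not a listener address at all.
        raise ValueError(f"missing '://' in listener {value!r}")
    host, colon, port_text = address.rpartition(":")
    if not colon or not host or not port_text.isdecimal():
        raise ValueError(f"listener {value!r} needs host:port after '://'")
    port = int(port_text)
    if not 0 < port < 65536:
        raise ValueError(f"port out of range in listener {value!r}")
    return Listener(protocol, host, port)


def parse_listeners(value: str) -> List[Listener]:
    """Split a comma-separated listener list and parse each entry."""
    return [parse_listener(item) for item in value.split(",") if item.strip()]
```

A rightmost-colon split is used instead of a URL parser because listener names such as SASL_SSL contain underscores, which are not valid URL scheme characters.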

7. Start the services defined in docker-compose.yml

cd /data/docker-compose/kafka

docker-compose up -d

8. Connect all Kafka and ZooKeeper containers to the same network

docker network connect zookeeper_kafka_net zoo1

docker network connect zookeeper_kafka_net zoo2

docker network connect zookeeper_kafka_net zoo3

docker network connect zookeeper_kafka_net kafka1

docker network connect zookeeper_kafka_net kafka2

docker network connect zookeeper_kafka_net kafka3

docker network connect zookeeper_kafka_net kafka-manager
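The seven connect commands can also be generated in a loop. The minimal Python sketch below assumes the docker CLI is on PATH. By default it only prints the commands (dry_run=True) and executes them only when dry_run=False.

docker network connect fails for a container that is already attached to the network, and check=True stops at the first failure.

```python
import subprocess
from typing import Iterable, List

NETWORK = "zookeeper_kafka_net"
CONTAINERS = ["zoo1", "zoo2", "zoo3", "kafka1", "kafka2", "kafka3", "kafka-manager"]


def connect_commands(network: str, containers: Iterable[str]) -> List[List[str]]:
    """One `docker network connect <network> <container>` argv per container."""
    return [["docker", "network", "connect", network, name] for name in containers]


def run_commands(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command, or execute it when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError on the first failing command.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_commands(connect_commands(NETWORK, CONTAINERS), dry_run=True)
```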

9. Restart the Kafka services

docker-compose restart

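The restart is needed because the Kafka and kafka-manager containers were started before they joined zookeeper_kafka_net. At startup they could not reach (or even ping) the three ZooKeeper containers. Restarting them after the shared network is attached lets them connect to ZooKeeper. Run in /data/docker-compose/kafka, docker-compose restart restarts only the services in the Kafka docker-compose.yml.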
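ping only tests ICMP. What Kafka actually needs is a TCP connection to port 2181 on each ZooKeeper node. The small Python sketch below performs that check; the helper name is hypothetical.

From the host you can probe the published ports 127.0.0.1:2181 to 2183. From a machine attached to zookeeper_kafka_net, you can probe the names zoo1 to zoo3 on port 2181.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Name-resolution errors (socket.gaierror), refusals and timeouts are all
    subclasses of OSError and are reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, can_connect("127.0.0.1", 2182) should return True once zoo2 is up.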

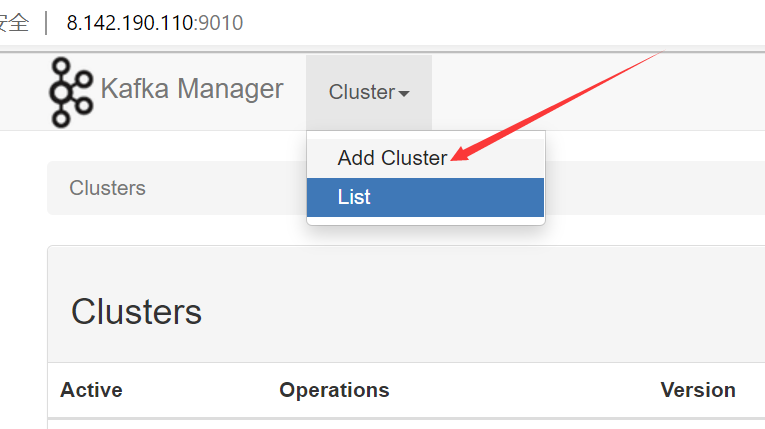

10. Verify the result

Open http://<host-IP>:9010/ in a browser.

Click Add Cluster.

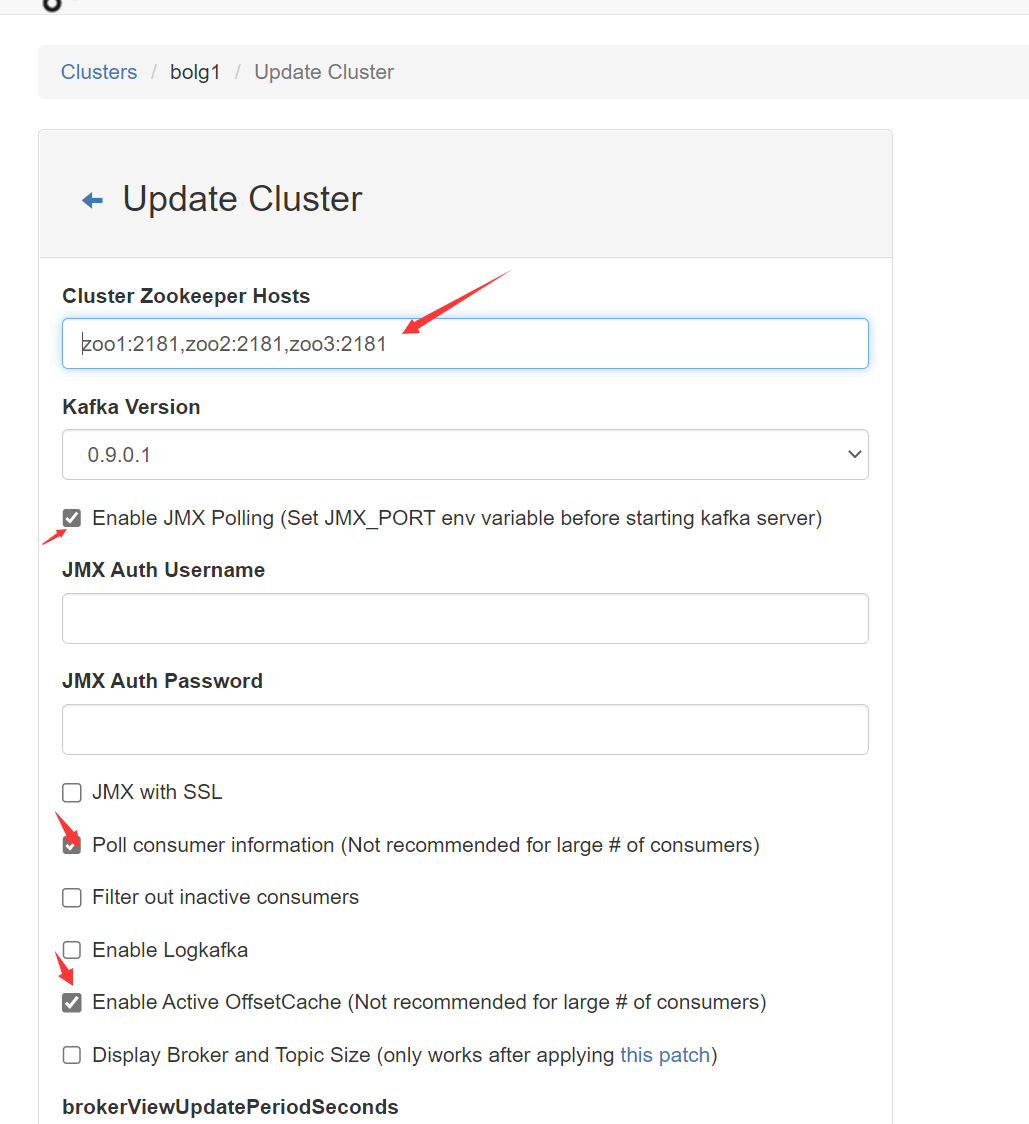

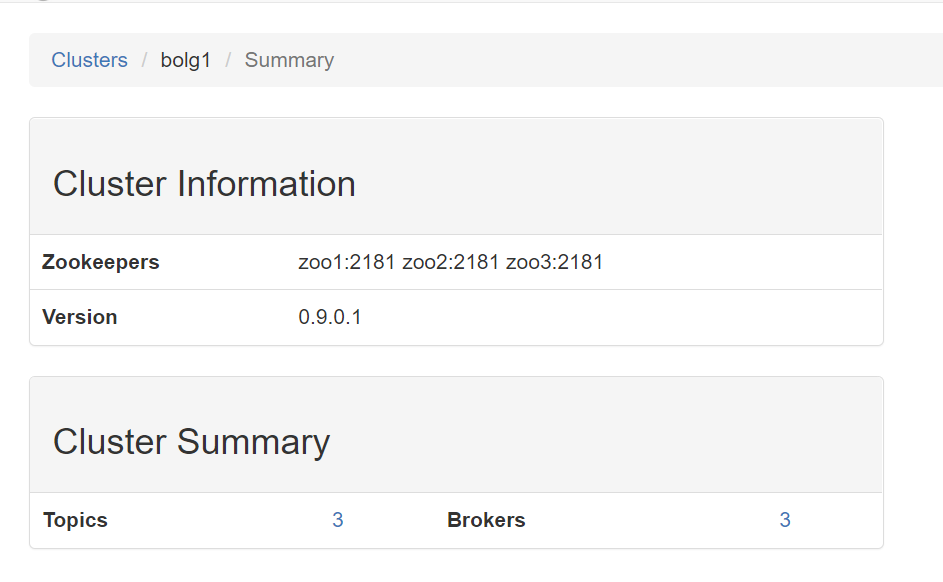

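The screenshot shows a cluster I had already configured. To add a new one, give it any cluster name and enter the ZooKeeper hosts configured in the compose file (ZK_HOSTS). If you used the file above, the hosts are: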

zoo1:2181,zoo2:2181,zoo3:2181

Finally, click into the cluster. If its overview page opens, the setup succeeded.

This article is from cnblogs (博客园). Author: 迷糊桃. When reposting, please credit the original link: https://www.cnblogs.com/mihutao/p/16664588.html
