ES manual installation

ES version: 8.11.4

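Java version: the default JDK bundled with Elasticsearch works, so Java 11 is not required. (If you point ES_JAVA_HOME at your own JDK instead, ES 8.x needs Java 17 or later.)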

[root@es1 bin]# pwd

/opt/elasticsearch-8.11.4/bin

Cluster configuration files (without passwords, i.e. security disabled):

ES1

[root@es8 config]# vi elasticsearch.yml
#-----------------------Cluster-----------------------
cluster.name: myes
#-----------------------Node-----------------------
node.name: es81-node
#-----------------------Paths-----------------------
path.data: /opt/elasticsearch-8.11.4/data
#-----------------------Memory-----------------------
bootstrap.memory_lock: false
#-----------------------Network-----------------------
network.host: es8
#-----------------------Discovery-----------------------
discovery.seed_hosts: ["es8","es8-2"]
cluster.initial_master_nodes: ["es81-node"]
xpack.security.enabled: false
#xpack.security.transport.ssl.enabled: false
#xpack.security.http.ssl.enabled: false
xpack.security.transport.ssl:
  enabled: false
xpack.security.http.ssl:
  enabled: false

ES2

#-----------------------Cluster-----------------------
cluster.name: myes
#-----------------------Node-----------------------
# node.name must be unique within the cluster (es82-node is an assumed name)
node.name: es82-node
#-----------------------Paths-----------------------
path.data: /opt/elasticsearch-8.11.4/data
#-----------------------Memory-----------------------
bootstrap.memory_lock: false
#-----------------------Network-----------------------
# bind to this machine's own hostname (es8-2, taken from discovery.seed_hosts)
network.host: es8-2
#-----------------------Discovery-----------------------
discovery.seed_hosts: ["es8","es8-2"]
#cluster.initial_master_nodes: ["es81-node"]
xpack.security.enabled: false
xpack.security.transport.ssl:
  enabled: false
xpack.security.http.ssl:
  enabled: false
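A copy-pasted config can easily end up with the same node.name or network.host on two nodes (for example, if ES2 is copied from ES1 unchanged). Below is a minimal sketch that cross-checks the per-node settings before starting anything. It assumes one node per machine and treats cluster.initial_master_nodes entries as node names only (ES also accepts IP addresses, which this sketch ignores). The function name is hypothetical, and each node's settings are passed in as a plain dict.

```python
from collections import Counter


def check_cluster(nodes: list) -> list:
    """Cross-check per-node elasticsearch.yml settings (one dict per node).

    Assumes one node per machine and treats cluster.initial_master_nodes
    entries as node names only (IP addresses are not handled here).
    """
    problems = []
    # cluster.name must be identical everywhere, or the nodes will not join.
    cluster_names = sorted({str(n.get("cluster.name")) for n in nodes})
    if len(cluster_names) > 1:
        problems.append(f"cluster.name differs: {cluster_names}")
    # node.name must be unique within the cluster.
    names = Counter(n.get("node.name") for n in nodes)
    problems += [f"duplicate node.name: {k}" for k, c in names.items() if c > 1]
    # With one node per machine, every node binds a different host.
    hosts = Counter(n.get("network.host") for n in nodes)
    problems += [f"duplicate network.host: {k}" for k, c in hosts.items() if c > 1]
    # Bootstrap entries should name nodes that actually exist.
    for n in nodes:
        for master in n.get("cluster.initial_master_nodes", []):
            if master not in names:
                problems.append(f"{n.get('node.name')}: unknown initial master {master!r}")
    return problems


if __name__ == "__main__":
    es1 = {"cluster.name": "myes", "node.name": "es81-node", "network.host": "es8",
           "cluster.initial_master_nodes": ["es81-node"]}
    # "es82-node" is a hypothetical name for the second node.
    es2 = {"cluster.name": "myes", "node.name": "es82-node", "network.host": "es8-2"}
    print(check_cluster([es1, es2]))  # [] when names and hosts are distinct
```

If ES2 still carries `node.name: es81-node` and `network.host: es8`, the same call reports both duplicates.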

Settings in the config file (config/elasticsearch.yml):

#-----------------------Cluster-----------------------
cluster.name: myes
#-----------------------Node-----------------------
node.name: node102
#-----------------------Paths-----------------------
path.data: /opt/module/elasticsearch/data
#-----------------------Memory-----------------------
bootstrap.memory_lock: false
#-----------------------Network-----------------------
network.host: es1
#-----------------------Discovery-----------------------
discovery.seed_hosts: ["es1", "es1","es1"]
# entries must match a node.name (or an IP address), not a hostname
cluster.initial_master_nodes: ["node102"]

# Preferably also configure the following parameter:

xpack.security.enabled: false


Note: the following is a single-node configuration.

cluster.name: myes
#-----------------------Node-----------------------
node.name: node1
#-----------------------Paths-----------------------
path.data: /opt/elasticsearch-8.11.4/data
#-----------------------Memory-----------------------
bootstrap.memory_lock: false
#-----------------------Network-----------------------
network.host: es1
#-----------------------Discovery-----------------------
## If this is not a cluster, comment out the setting below
discovery.seed_hosts: ["es1", "es1","es1"]
#cluster.initial_master_nodes: ["node1"]

Single-node mode settings:

discovery.type: single-node
xpack.security.enabled: false

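This is also a single-node configuration (earlier attempts kept failing with errors; this is the config file I copied over):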

#-----------------------Cluster-----------------------
cluster.name: myes
#-----------------------Node-----------------------
node.name: node1
#-----------------------Paths-----------------------
path.data: /opt/elasticsearch-8.11.4/data
#-----------------------Memory-----------------------
bootstrap.memory_lock: false
#-----------------------Network-----------------------
network.host: hdp01
#-----------------------Discovery-----------------------
discovery.seed_hosts: ["hdp01"]
#cluster.initial_master_nodes: ["es75"]
discovery.type: single-node
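One common cause of startup failures in single-node mode is leaving cluster.initial_master_nodes active: Elasticsearch rejects that setting when discovery.type is single-node, which is why it is commented out in the config above. The following is a minimal sketch of a pre-flight check, assuming the config is flat `key: value` lines. The tiny parser is a hypothetical helper, not a real YAML parser: nested blocks such as `xpack.security.http.ssl:` followed by indented keys are not interpreted.

```python
def parse_flat_yaml(text: str) -> dict:
    """Read flat `key: value` lines into a dict.

    Not a real YAML parser: comments and blank lines are skipped, and
    nested blocks or multi-line values are not supported.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            # Drop surrounding quotes from scalar values such as "single-node".
            settings[key.strip()] = value.strip().strip("\"'")
    return settings


def check_single_node(settings: dict) -> list:
    """Flag settings that conflict with discovery.type: single-node."""
    problems = []
    if settings.get("discovery.type") == "single-node":
        if "cluster.initial_master_nodes" in settings:
            problems.append("remove cluster.initial_master_nodes: "
                            "it is rejected when discovery.type is single-node")
    return problems


if __name__ == "__main__":
    config = """
node.name: node1
network.host: hdp01
#cluster.initial_master_nodes: ["es75"]
discovery.type: single-node
"""
    print(check_single_node(parse_flat_yaml(config)))  # []
```

Uncommenting the `cluster.initial_master_nodes` line makes the check report one problem.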

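2.2.2 Adjust Linux kernel parameters

1) As root, edit limits.conf and add content like the following:
[root@hadoop102 elasticsearch]# vi /etc/security/limits.conf
Add:
* soft nofile 65536
* hard nofile 131072
* soft nproc 2048
* hard nproc 4096
Note: do not omit the "*". The nofile lines raise the number of file handles that can be open at the same time; the nproc lines raise the number of processes/threads the user may create. (ES's bootstrap check requires at least 4096 threads, so raise the soft nproc value to 4096 if startup reports that the max number of threads is too low.)

2) As root, edit sysctl.conf:
[root@hadoop102 elasticsearch]# vi /etc/sysctl.conf
Add:
vm.max_map_count=655360

Distribute these Linux changes to the other nodes, then reboot Linux. A reboot is required!!!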
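In production mode (for example, when network.host is a non-loopback address, as in the cluster configs above), Elasticsearch's bootstrap checks require at least 65535 file descriptors, at least 4096 threads for the ES user, and vm.max_map_count of at least 262144. Below is a minimal sketch that compares the file contents against those minimums. It only reads the text it is given. Overrides in /etc/security/limits.d/ and the values actually in effect (`ulimit -n`, `ulimit -u`, `sysctl vm.max_map_count`) are not considered. The function names are hypothetical.

```python
# Minimums enforced by Elasticsearch's bootstrap checks in production mode.
MIN_NOFILE = 65535
MIN_NPROC = 4096
MIN_MAX_MAP_COUNT = 262144


def soft_limits(limits_conf: str, domain: str = "*") -> dict:
    """Collect the soft limits for one domain from limits.conf-style text."""
    limits = {}
    for raw in limits_conf.splitlines():
        parts = raw.split("#", 1)[0].split()
        if len(parts) != 4:
            continue
        dom, kind, item, value = parts
        # "-" sets both the soft and the hard limit.
        if dom == domain and kind in ("soft", "-"):
            limits[item] = float("inf") if value in ("unlimited", "infinity") else int(value)
    return limits


def check_kernel_settings(limits_conf: str, sysctl_conf: str) -> list:
    """Compare configured values with the bootstrap-check minimums."""
    problems = []
    soft = soft_limits(limits_conf)
    for item, minimum in (("nofile", MIN_NOFILE), ("nproc", MIN_NPROC)):
        value = soft.get(item)
        if value is None or value < minimum:
            problems.append(f"soft {item} = {value}, need >= {minimum}")
    map_count = None
    for raw in sysctl_conf.splitlines():
        key, sep, value = raw.split("#", 1)[0].partition("=")
        if sep and key.strip() == "vm.max_map_count":
            map_count = int(value)
    if map_count is None or map_count < MIN_MAX_MAP_COUNT:
        problems.append(f"vm.max_map_count = {map_count}, need >= {MIN_MAX_MAP_COUNT}")
    return problems
```

With the limits.conf lines and the sysctl value shown above, the only finding is `soft nproc = 2048, need >= 4096`.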


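Create a dedicated user for Elasticsearch and grant it the required permissions (recommended):
# create the group
groupadd esgroup
# create the user
useradd -g esgroup esuser
# set ownership
chown -R esuser:esgroup /opt/elasticsearch-8.11.4
# set the password (here: esuser123)
passwd esuser
# switch user
su esuser
# start Elasticsearch again (from /opt/elasticsearch-8.11.4)
./bin/elasticsearch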
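The separate user exists because Elasticsearch refuses to run as root. If you start ES from a script, you can fail early before launching the JVM. Below is a minimal sketch with a hypothetical helper name. It only builds the daemon command (`bin/elasticsearch -d`, as used later in these notes) and leaves running it to the caller.

```python
import os
from pathlib import Path
from typing import Optional


def es_start_command(es_home: str, euid: Optional[int] = None) -> list:
    """Return the argv that starts Elasticsearch as a daemon (-d).

    Elasticsearch refuses to run as root, so refuse here first instead of
    letting the JVM start and exit.
    """
    if euid is None:
        euid = os.geteuid()  # Unix only
    if euid == 0:
        raise PermissionError("do not start Elasticsearch as root; run `su esuser` first")
    return [str(Path(es_home) / "bin" / "elasticsearch"), "-d"]
```

As esuser, `subprocess.run(es_start_command("/opt/elasticsearch-8.11.4"), check=True)` would start the node in the background. As root, the call raises `PermissionError` before any process is spawned.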

Kibana configuration file (kibana.yml):

server.host: "es75"
elasticsearch.hosts: ["http://es75:9200"]
i18n.locale: "zh-CN"

For reference, the default elasticsearch.yml template:

# NOTE: Elasticsearch comes with reasonable defaults for most settings.
#       Before you set out to tweak and tune the configuration, make sure you
#       understand what are you trying to accomplish and the consequences.
#
# The primary way of configuring a node is via this file. This template lists
# the most important settings you may want to configure for a production cluster.
#
# Please consult the documentation for further information on configuration options:
# https://www.elastic.co/guide/en/elasticsearch/reference/index.html
#
# ---------------------------------- Cluster -----------------------------------
#
# Use a descriptive name for your cluster:
#
#cluster.name: my-application
#
# ------------------------------------ Node ------------------------------------
#
# Use a descriptive name for the node:
#
#node.name: node-1
#
# Add custom attributes to the node:
#
#node.attr.rack: r1
#
# ----------------------------------- Paths ------------------------------------
#
# Path to directory where to store the data (separate multiple locations by comma):
#
#path.data: /path/to/data
#
# Path to log files:
#
#path.logs: /path/to/logs
#
# ----------------------------------- Memory -----------------------------------
#
# Lock the memory on startup:
#
#bootstrap.memory_lock: true
#
# Make sure that the heap size is set to about half the memory available
# on the system and that the owner of the process is allowed to use this
# limit.
#
# Elasticsearch performs poorly when the system is swapping the memory.
#
# ---------------------------------- Network -----------------------------------
#
# By default Elasticsearch is only accessible on localhost. Set a different
# address here to expose this node on the network:
#
#network.host: 192.168.0.1
#
# By default Elasticsearch listens for HTTP traffic on the first free port it
# finds starting at 9200. Set a specific HTTP port here:
#
#http.port: 9200
#
# For more information, consult the network module documentation.
#
# --------------------------------- Discovery ----------------------------------
#
# Pass an initial list of hosts to perform discovery when this node is started:
# The default list of hosts is ["127.0.0.1", "[::1]"]
#
#discovery.seed_hosts: ["host1", "host2"]
#
# Bootstrap the cluster using an initial set of master-eligible nodes:
#
#cluster.initial_master_nodes: ["node-1", "node-2"]
#
# For more information, consult the discovery and cluster formation module documentation.
#
# ---------------------------------- Various -----------------------------------
#
# Allow wildcard deletion of indices:
#
#action.destructive_requires_name: false

Create a Hive external table backed by ES.

-- upsert needs a document id (es.mapping.id); mapping it to the id column is an assumption
CREATE EXTERNAL TABLE my_table (
  id INT,
  name STRING,
  age INT
)
ROW FORMAT SERDE 'org.elasticsearch.hadoop.hive.EsSerDe'
STORED BY 'org.elasticsearch.hadoop.hive.EsStorageHandler'
TBLPROPERTIES (
  'es.index.auto.create' = 'true',
  'es.port' = '9200',
  'es.net.http.auth.user' = 'elastic',
  'es.net.http.auth.pass' = 'elastic',
  'es.resource' = 'mytable',
  'es.nodes' = '192.168.9.237',
  'es.mapping.id' = 'id',
  'es.write.operation' = 'upsert'
);

Creating the table fails with the following error:

Error: Error while compiling statement: FAILED: SemanticException Cannot find class 'org.elasticsearch.hadoop.hive.EsStorageHandler' (state=42000,code=40000)

The solution found online:

"I solved the problem after adding elasticsearch-hadoop-2.3.0.jar and elasticsearch-hadoop-hive-2.3.0.jar to the $HIVE_HOME/lib folder."

My solution (my ES version is 8.11.4): put the following two jars into Hive's lib directory:

elasticsearch-hadoop-8.11.4.jar

elasticsearch-hadoop-hive-8.11.4.jar

These two are reportedly enough.
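The jar versions should match the ES version. Below is a minimal sketch, with a hypothetical function name, that reports which of the two jars are still missing from Hive's lib directory. It assumes `HIVE_HOME` is exported in the environment. HiveServer2 typically has to be restarted before it picks up newly added jars.

```python
import os
from pathlib import Path


def missing_es_hadoop_jars(hive_lib: str, es_version: str) -> list:
    """List the ES-Hadoop jars (matching es_version) that are absent from hive_lib."""
    required = [
        f"elasticsearch-hadoop-{es_version}.jar",
        f"elasticsearch-hadoop-hive-{es_version}.jar",
    ]
    lib = Path(hive_lib)
    return [name for name in required if not (lib / name).is_file()]


if __name__ == "__main__":
    # Assumes HIVE_HOME is exported; falls back to the current directory otherwise.
    lib_dir = os.path.join(os.environ.get("HIVE_HOME", "."), "lib")
    print(missing_es_hadoop_jars(lib_dir, "8.11.4") or "all jars present")
```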

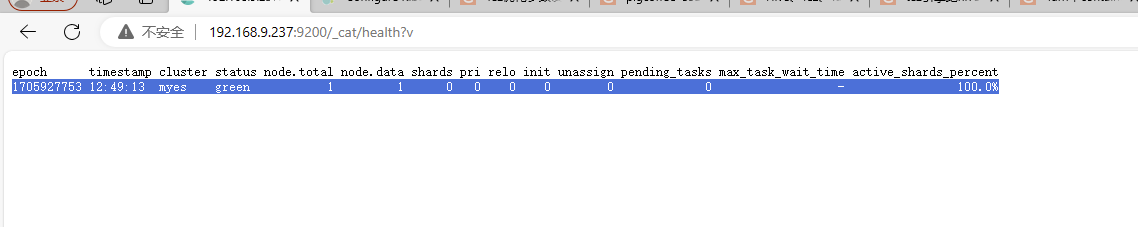

curl -XGET "http://192.168.9.201:9200/_cat/indices?v"    # Linux
GET /_cat/indices?v                                       # Kibana Dev Tools
http://192.168.9.201:9200/_cat/indices?v                  # Web UI (browser)
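The `?v` output is meant for humans. For a script, the cat API also accepts `format=json`, which returns one object per index keyed by column name (`index`, `health`, `docs.count`, ...). Below is a minimal sketch using only the standard library. It assumes security is disabled, as in the configs above, so no credentials are sent. The function names are hypothetical.

```python
import json
import urllib.request


def fetch_indices(base_url: str, timeout: float = 10.0) -> list:
    """GET /_cat/indices as JSON (no credentials: security is disabled)."""
    url = base_url.rstrip("/") + "/_cat/indices?format=json"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize(indices: list) -> list:
    """One tab-separated line per index: name, health, docs.count (sorted by name)."""
    return [
        f"{row['index']}\t{row['health']}\t{row['docs.count']}"
        for row in sorted(indices, key=lambda row: row["index"])
    ]
```

For example, `for line in summarize(fetch_indices("http://192.168.9.201:9200")): print(line)` lists every index with its health and document count.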

Check whether NFS is installed:
rpm -qa nfs-utils
rpm -qa rpcbind

-- Configure on the new node (the NFS server):
vi /etc/idmapd.conf
Domain = 192.168.9.241

-- Edit /etc/exports:
/cloud/data02/nfs_data 192.168.9.0/24(rw,sync,insecure,no_subtree_check,no_root_squash)

-- Enable at boot (and start now):
systemctl enable --now rpcbind nfs-server

-- Restart the service (this restart is required), then check its status:
sudo systemctl restart nfs-server.service
sudo systemctl status nfs-server.service

-- Apply the exports:
sudo exportfs -r

Make sure NFS is installed on the old cluster:
rpm -qa nfs-utils
rpm -qa rpcbind

-- Mount on the old cluster:
mount -t nfs 192.168.9.242:/cloud/data02/nfs_data /data/backups/my_backup

-- Check the mount on the client:
df -h

-- Check on the server:
showmount -e

-- Mount it once on every node.
-- Check NFS: create a folder in the exported directory on the server and verify that the client can see it.

-- On each cluster, edit elasticsearch.yml to set the shared snapshot directory (path.repo):
-- Old cluster: /data/backups/my_backup
path.repo: ["/data/backups/my_backup"]

-- Change ownership so that ES can write to it:
chown -R es:es /data/backups/my_backup
chmod 777 /data/backups/my_backup

-- New cluster: /cloud/data01/backup
path.repo: ["/cloud/data01/backup"]
mkdir -p /cloud/data01/backup
chown -R es:es /cloud/data01/backup
chmod 777 /cloud/data01/backup

-- After editing elasticsearch.yml, restart the cluster (every ES node):
Restart both ES clusters by first killing the ES process (tip: restarting the nodes one at a time lets the cluster recover a bit faster):
jps                # find the ES process id
kill -9 <es-pid>
su es
bin/elasticsearch -d

First disable shard allocation on both clusters (running this on any one node of each cluster is enough):
Disable:
curl -XPUT "http://127.0.0.1:9200/_cluster/settings" -H 'Content-Type: application/json' -d '{ "transient": {"cluster.routing.allocation.enable": "none"} }'

3.4.2. Create the snapshot repository on the old ES cluster
Log in to Kibana, open Dev Tools, and enter the following:
# First create the index snapshot repository on the old cluster:
chown esuser:esuser -R my_backup/
-- Then run the following directly:
PUT /_snapshot/my_backup
{
  "type": "fs",
  "settings": {
    "compress": true,
    "location": "/data/backups/my_backup"
  }
}

-- The same request with curl:
curl -XPUT "http://localhost:9200/_snapshot/my_backup" -H 'Content-Type: application/json' -d'{ "type": "fs", "settings": { "compress": true, "location": "/data/backups/my_backup" }}'

3.4.4. Create the snapshot repository on the new ES cluster
chown es:es backup/
PUT /_snapshot/my_backup
{
  "type": "fs",
  "settings": {
    "compress": true,
    "location": "/cloud/data01/backup",
    "readonly": true
  }
}

### Error encountered (the ES process had no write access to the repository directory):
{
  "error": {
    "root_cause": [
      {
        "type": "exception",
        "reason": "failed to create blob container"
      }
    ],
    "type": "exception",
    "reason": "failed to create blob container",
    "caused_by": {
      "type": "access_denied_exception",
      "reason": "/data/backups/my_backup/tests-FV56JqXyTxaTa5qbXUms6w"
    }
  },
  "status": 500
}
Fix it by running:
chown -R es:es /data/backups/my_backup
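Elasticsearch only accepts an `fs` repository location that lies inside one of the `path.repo` directories (configured on every master and data node). Below is a minimal sketch that checks this locally and then builds the same `PUT /_snapshot/<name>` request with the `Content-Type: application/json` header. It assumes absolute locations and security disabled. The helper names are hypothetical.

```python
import json
import urllib.request
from pathlib import PurePosixPath


def location_allowed(location: str, path_repo: list) -> bool:
    """True if an absolute fs-repository location lies inside a path.repo entry."""
    loc = PurePosixPath(location)
    for root in map(PurePosixPath, path_repo):
        if loc == root or root in loc.parents:
            return True
    return False


def repo_request(base_url: str, name: str, location: str,
                 path_repo: list, readonly: bool = False) -> urllib.request.Request:
    """Build (but do not send) PUT /_snapshot/<name> for an fs repository."""
    if not location_allowed(location, path_repo):
        raise ValueError(f"{location} is not under path.repo {path_repo}")
    settings = {"compress": True, "location": location}
    if readonly:
        # The new cluster only restores from the shared directory.
        settings["readonly"] = True
    body = json.dumps({"type": "fs", "settings": settings}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/_snapshot/{name}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

Sending it with `urllib.request.urlopen(repo_request("http://localhost:9200", "my_backup", "/data/backups/my_backup", ["/data/backups/my_backup"]))` registers the old cluster's repository. A permission problem like the one above surfaces as `urllib.error.HTTPError` with status 500.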

浙公网安备 33010602011771号
