数据采集与融合技术实践作业二

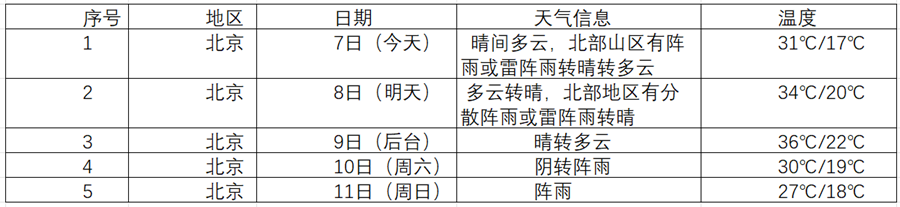

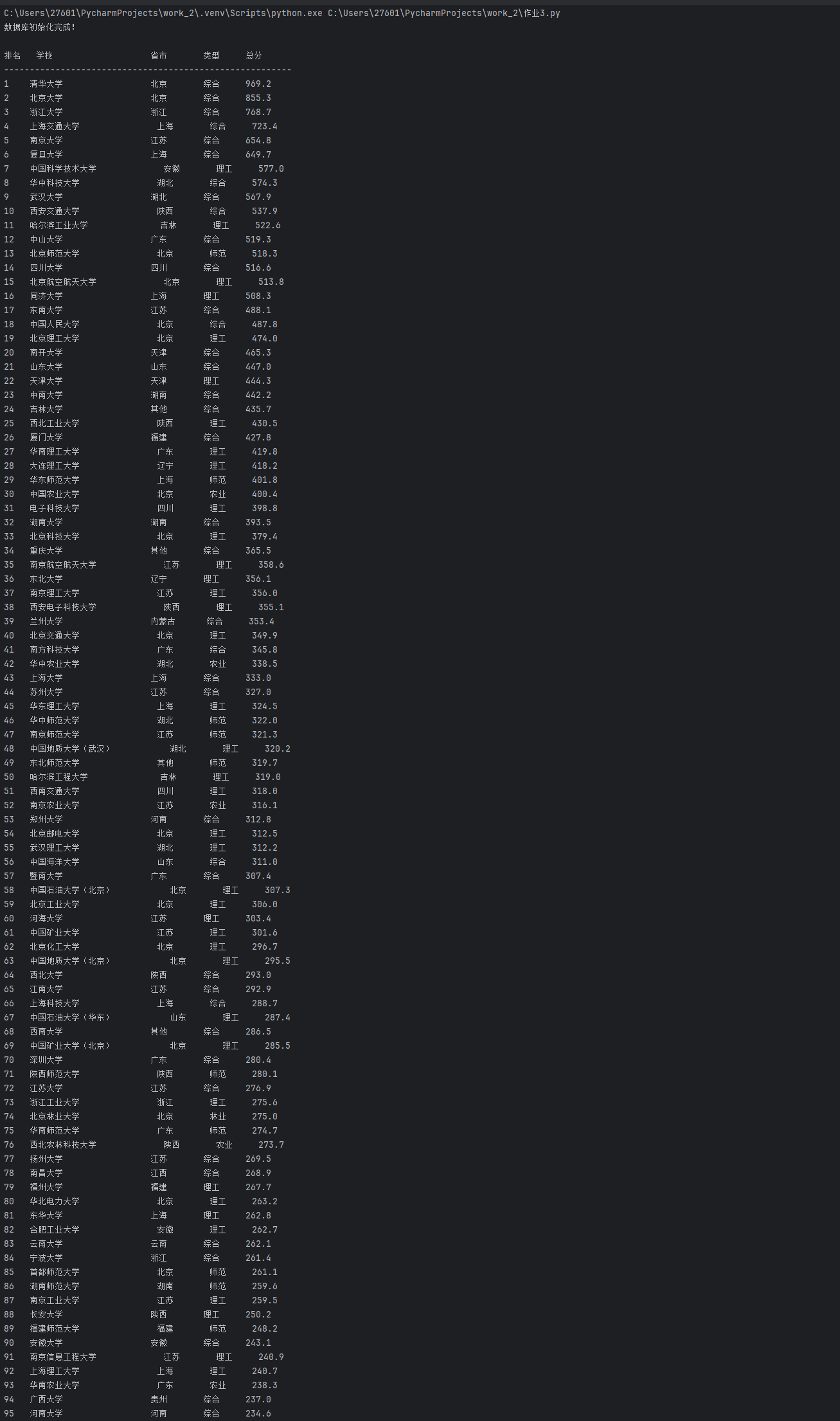

作业1

– 要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

– 输出信息:

Gitee文件夹链接

代码:

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import sqlite3

class WeatherDB:

def openDB(self):

self.con=sqlite3.connect("weathers.db")

self.cursor=self.con.cursor()

try:

self.cursor.execute("create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")

except:

self.cursor.execute("delete from weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

try:

self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)",

(city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

self.cursor.execute("select * from weathers")

rows = self.cursor.fetchall()

print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))

for row in rows:

print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

def forecastCity(self, city):

if city not in self.cityCode.keys():

print(city + " code cannot be found")

return

url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text

print(city, date, weather, temp)

self.db.insert(city, date, weather, temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.closeDB()

ws = WeatherForecast()

ws.process(["北京", "上海", "广州", "深圳"])

print("completed")

心得体会:这份作业做的主要是复现,难度适中

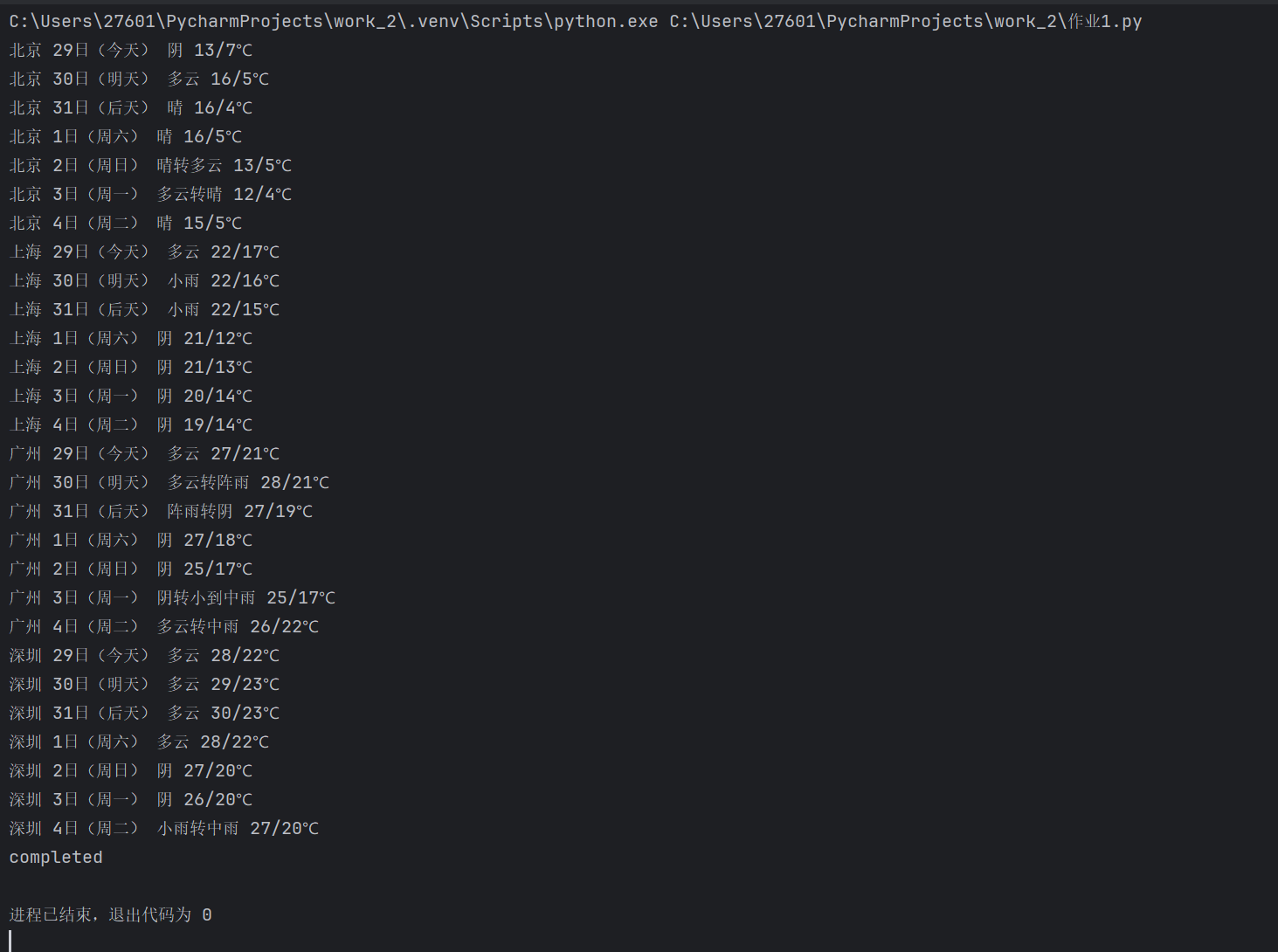

作业2

– 要求:用requests和BeautifulSoup库方法定向爬取股票相关信息,并存储在数据库中。

– 候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

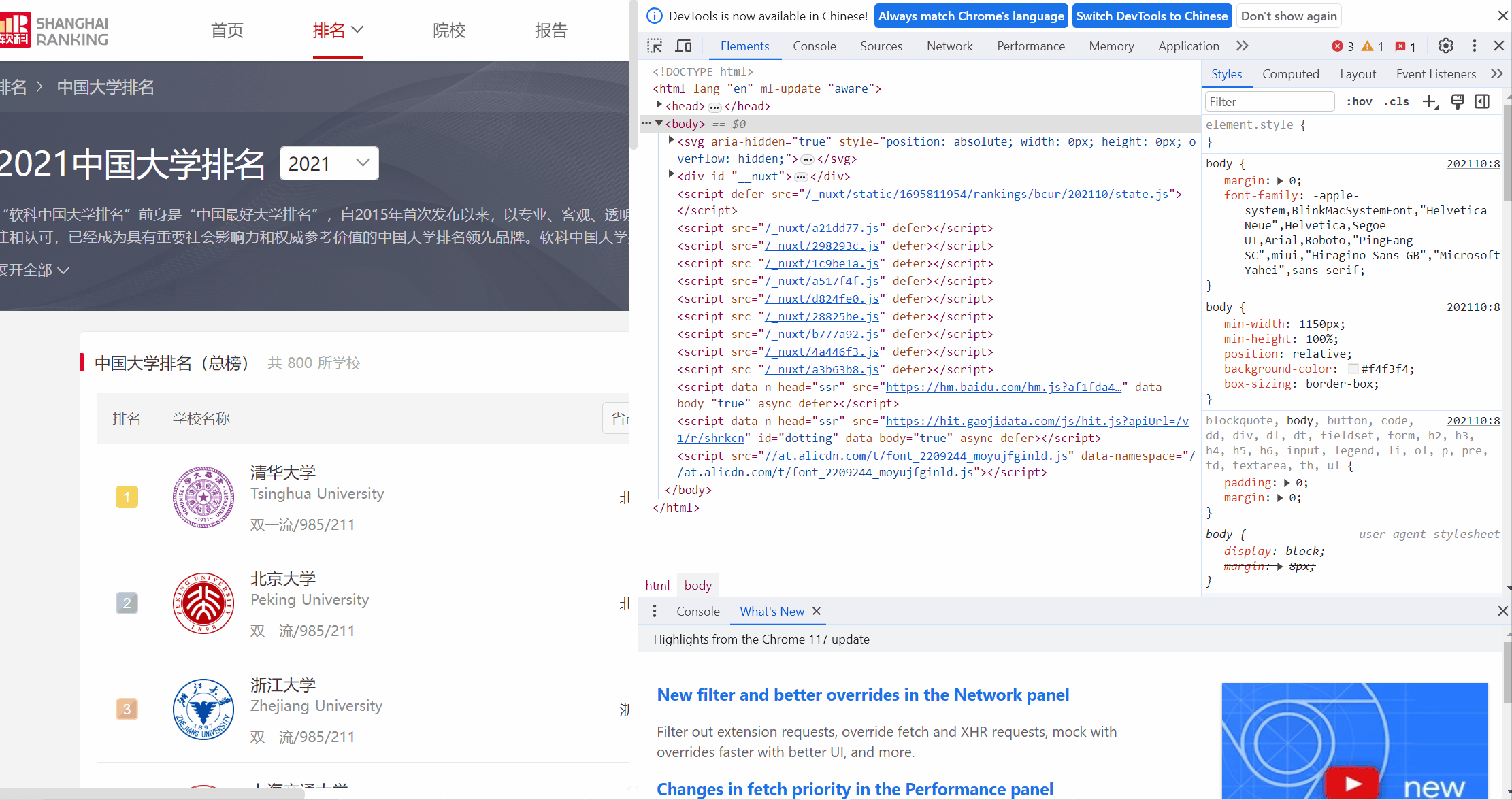

– 技巧:在谷歌浏览器中进入F12调试模式进行抓包,查找股票列表加载使用的url,并分析api返回的值,并根据所要求的参数可适当更改api的请求参数。根据URL可观察请求的参数f1、f2可获取不同的数值,根据情况可删减请求的参数。

参考链接:https://zhuanlan.zhihu.com/p/50099084

– 输出信息:

Gitee文件夹链接

代码

import requests

import pandas as pd

import json

import re

import time

class SimpleStockSpider:

def __init__(self):

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36 Edg/141.0.0.0'

}

def get_stock_data(self):

"""获取股票数据"""

stocks_data = []

# 主要股票代码

stock_codes = [

('1.000001', '上证指数'),

('0.399001', '深证成指'),

('0.399006', '创业板指'),

('1.600036', '招商银行'),

('0.000858', '五粮液')

]

for code, name in stock_codes:

try:

url = f'http://push2.eastmoney.com/api/qt/stock/get'

params = {

'ut': 'fa5fd1943c7b386f172d6893dbfba10b',

'invt': '2',

'fltt': '2',

'fields': 'f43,f44,f45,f46,f60,f84,f85,f86,f169,f170',

'secid': code,

'cb': f'jQuery1124_{int(time.time() * 1000)}',

'_': str(int(time.time() * 1000))

}

response = requests.get(url, params=params, headers=self.headers, timeout=10)

if response.status_code == 200:

content = response.text

json_str = re.search(r'\{.*\}', content)

if json_str:

data = json.loads(json_str.group())

if data.get('data'):

stock = data['data']

stock_info = {

'股票代码': code.split('.')[-1],

'股票名称': name,

'最新报价': stock.get('f43', 0) / 100 if stock.get('f43') else 0,

'涨跌幅': round(stock.get('f170', 0) / 100, 2),

'涨跌额': round(stock.get('f169', 0) / 100, 2),

'成交量': f"{stock.get('f84', 0) / 10000:.2f}万",

'成交额': f"{stock.get('f86', 0) / 100000000:.2f}亿",

'振幅': round(stock.get('f171', 0) / 100, 2),

'最高': stock.get('f44', 0) / 100 if stock.get('f44') else 0,

'最低': stock.get('f45', 0) / 100 if stock.get('f45') else 0,

'今开': stock.get('f46', 0) / 100 if stock.get('f46') else 0,

'昨收': stock.get('f60', 0) / 100 if stock.get('f60') else 0

}

stocks_data.append(stock_info)

time.sleep(0.5)

except Exception as e:

print(f"获取股票 {name} 数据失败: {e}")

continue

return stocks_data

def save_to_csv(self, stocks_data):

"""保存到CSV文件"""

if stocks_data:

df = pd.DataFrame(stocks_data)

df.to_csv('stock_data.csv', index=False, encoding='utf-8-sig')

print(f"数据已保存到 stock_data.csv,共 {len(stocks_data)} 条记录")

# 显示数据

print("\n爬取的股票数据:")

print(df.to_string(index=False))

else:

print("没有获取到数据")

def run(self):

"""运行爬虫"""

print("开始爬取股票数据...")

stocks_data = self.get_stock_data()

self.save_to_csv(stocks_data)

if __name__ == "__main__":

spider = SimpleStockSpider()

spider.run()

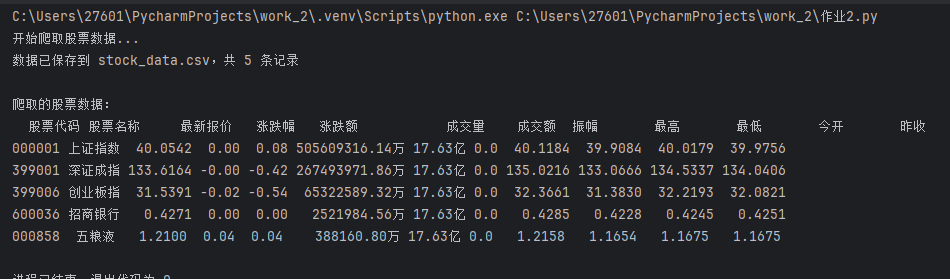

作业3

要求: 爬取中国大学 2021 主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器 F12 调试分析的过程录制 Gif 加入至博客中。

输出信息: gitee文件夹链接

代码

import re

import requests

import sqlite3

from datetime import datetime

# ===== Consts =====

DB_FILE = "university_rankings_2021.db"

URL = "https://www.shanghairanking.cn/_nuxt/static/1762223212/rankings/bcur/2021/payload.js"

HEADERS = {

"User-Agent": (

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) "

"AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"

)

}

PROVINCE_MAP = {

'k': '江苏', 'n': '山东', 'o': '河南', 'p': '河北', 'q': '北京', 'r': '辽宁', 's': '陕西', 't': '四川', 'u': '广东',

'v': '湖北', 'w': '湖南', 'x': '浙江', 'y': '安徽', 'z': '江西', 'A': '黑龙江', 'B': '吉林', 'D': '上海', 'F': '福建', 'E': '山西',

'H': '云南', 'G': '广西', 'I': '贵州', 'J': '甘肃', 'K': '内蒙古', 'L': '重庆', 'N': '天津', 'O': '新疆', 'az': '宁夏', 'aA': '青海', 'aB': '西藏'

}

CATEGORY_MAP = {

'f': '综合', 'e': '理工', 'h': '师范', 'm': '农业', 'S': '林业',

}

# 预编译正则

PATTERN = re.compile(

r'univNameCn:"(?P<univNameCn>[^"]+)",'

r'.*?univCategory:(?P<univCategory>[^,]+),'

r'.*?province:(?P<province>[^,]+),'

r'.*?score:(?P<score>[^,]+),',

re.S

)

def _clean(s: str) -> str:

"""去掉包裹的引号与首尾空白"""

return s.strip().strip('"')

# ===== DB =====

def init_db():

with sqlite3.connect(DB_FILE) as conn:

conn.execute(

"""

CREATE TABLE IF NOT EXISTS bcur_2021_main (

id INTEGER PRIMARY KEY AUTOINCREMENT,

ranking INTEGER NOT NULL,

university_name TEXT NOT NULL,

province TEXT NOT NULL,

category TEXT NOT NULL,

total_score FLOAT NOT NULL,

crawl_time DATETIME NOT NULL

)

"""

)

print("数据库初始化完成!")

def bulk_insert(rows):

"""rows: [(school, province, category, score), ...]"""

crawl_time = datetime.now().strftime('%Y-%m-%d %H:%M:%S')

payload = [(i, s, p, c, sc, crawl_time) for i, (s, p, c, sc) in enumerate(rows, start=1)]

with sqlite3.connect(DB_FILE) as conn:

conn.executemany(

"""

INSERT INTO bcur_2021_main

(ranking, university_name, province, category, total_score, crawl_time)

VALUES (?, ?, ?, ?, ?, ?)

""",

payload

)

# ===== Crawler =====

def fetch_rankings():

with requests.Session() as sess:

r = sess.get(URL, headers=HEADERS)

r.raise_for_status()

r.encoding = r.apparent_encoding

text = r.text

results = []

for m in PATTERN.finditer(text):

name = _clean(m.group('univNameCn'))

cat_raw = _clean(m.group('univCategory'))

prov_code = _clean(m.group('province'))

score_raw = _clean(m.group('score'))

province = PROVINCE_MAP.get(prov_code, '其他')

category = CATEGORY_MAP.get(cat_raw, '其他')

try:

score = float(score_raw)

except ValueError:

continue

if name:

results.append((name, province, category, score))

# 与原逻辑一致:按总分降序

results.sort(key=lambda x: x[3], reverse=True)

return results

def main():

init_db()

rows = fetch_rankings()

# 打印格式保持不变

print("\n{:<4} {:<20} {:<8} {:<6} {:<8}".format('排名', '学校', '省市', '类型', '总分'))

print("-" * 56)

for idx, (school, province, category, score) in enumerate(rows, start=1):

print("{:<4} {:<20} {:<8} {:<6} {:<8.1f}".format(idx, school, province, category, score))

bulk_insert(rows)

if __name__ == "__main__":

main()

浙公网安备 33010602011771号

浙公网安备 33010602011771号