Word segmentation of 聊斋 (Liaozhai)

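    import jieba

    # Read the full text of 聊斋志异 and segment it into words.
    with open('E:/聊斋志异/聊斋志异.txt', "r", encoding='utf-8') as f:
        text = f.read()
    words = jieba.lcut(text)

    # Count every token, skipping single-character ones.
    counts = {}
    for word in words:
        if len(word) == 1:
            continue
        counts[word] = counts.get(word, 0) + 1

    # Sort by frequency, highest first, and print the top 20 (or fewer).
    items = list(counts.items())
    items.sort(key=lambda x: x[1], reverse=True)
    for word, count in items[:20]:
        print("{0:<10}{1:>5}".format(word, count))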
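The counting and sorting step can also be written with `collections.Counter` from the standard library. The following is only a sketch: `top_words`, its `min_len` parameter, and `sample_tokens` are hypothetical names introduced for illustration, and the short token list stands in for the output of `jieba.lcut(text)` so the example runs without the text file.

```python
from collections import Counter


def top_words(tokens, n=20, min_len=2):
    """Return the n most frequent tokens with at least min_len characters."""
    # Drop single-character tokens, then count the rest.
    counts = Counter(t for t in tokens if len(t) >= min_len)
    # most_common returns fewer than n pairs if fewer distinct tokens exist.
    return counts.most_common(n)


# Hypothetical pre-segmented tokens, standing in for jieba.lcut(text).
sample_tokens = ["公子", "曰", "公子", "妾", "不知", "公子", "不知", "之"]

for word, count in top_words(sample_tokens, n=3):
    print("{0:<10}{1:>5}".format(word, count))
```

With real data, the list returned by `jieba.lcut(text)` would be passed in place of `sample_tokens`.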

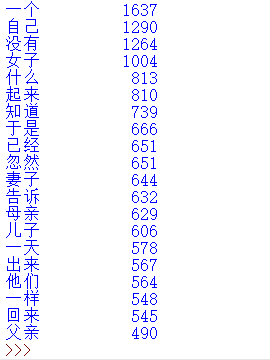

Result:

Zhejiang Public Network Security Filing No. 33010602011771
