Kafka:客户端连接服务器一直响应超时与advertised.listeners

一、Kafka连接超时异常

Kafka版本是2.7.1。Kafka客户端报错部分信息:

1 Exception in thread "main" java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Call(callName=listNodes, deadlineMs=1636366763102, tries=1, nextAllowedTryMs=1636366763208) timed out at 1636366763108 after 1 attempt(s) 2 at org.apache.kafka.common.internals.KafkaFutureImpl.wrapAndThrow(KafkaFutureImpl.java:45) 3 at org.apache.kafka.common.internals.KafkaFutureImpl.access$000(KafkaFutureImpl.java:32) 4 at org.apache.kafka.common.internals.KafkaFutureImpl$SingleWaiter.await(KafkaFutureImpl.java:89) 5 at org.apache.kafka.common.internals.KafkaFutureImpl.get(KafkaFutureImpl.java:260) 6 at com.xxx.demo.kafka.KafkaApi.main(KafkaApi.java:21) 7 Caused by: org.apache.kafka.common.errors.TimeoutException: Call(callName=listNodes, deadlineMs=1636366763102, tries=1, nextAllowedTryMs=1636366763208) timed out at 1636366763108 after 1 attempt(s) 8 Caused by: org.apache.kafka.common.errors.TimeoutException: Timed out waiting to send the call. Call: listNodes

报错信息表示的很明白,是超时的异常。

二、排除常见的问题

首先,我的Kafka的服务是跑在IaaS上的,出现连接超时,一般先想到的是防火墙问题,没有开放端口,接下来是在本地使用Telnet命令来检测服务器的ip和port,telnet能通,又去查看了端口开放情况,排除了防火墙问题。而且服务器上部署都没有问题,也能生产和消费信息。

三、从客户端源码进行探究

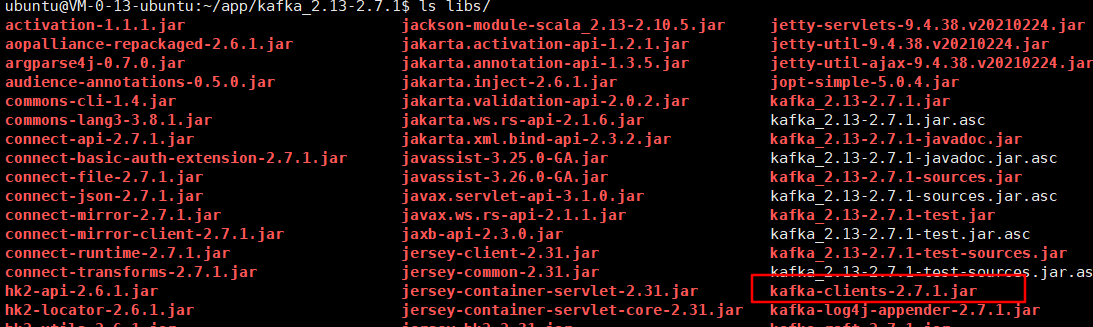

确认服务端的Kafka版本和客户端的版本是否一致,版本号都是2.7.1。

1 <dependency> 2 <groupId>org.apache.kafka</groupId> 3 <artifactId>kafka-clients</artifactId> 4 <version>2.7.1</version> 5 </dependency>

创建Kafka客户端时,实例化一个Selector实例,这个实例内部打开了一个Java NIO的Selector。

static KafkaAdminClient createInternal(AdminClientConfig config, TimeoutProcessorFactory timeoutProcessorFactory) {

...

selector = new Selector(config.getLong(AdminClientConfig.CONNECTIONS_MAX_IDLE_MS_CONFIG),

metrics, time, metricGrpPrefix, channelBuilder, logContext);

...

}

public Selector(int maxReceiveSize, long connectionMaxIdleMs, int failedAuthenticationDelayMs, Metrics metrics, Time time, String metricGrpPrefix, Map<String, String> metricTags, boolean metricsPerConnection, boolean recordTimePerConnection, ChannelBuilder channelBuilder, MemoryPool memoryPool, LogContext logContext) { try { this.nioSelector = java.nio.channels.Selector.open(); } catch (IOException e) { throw new KafkaException(e); } ... }

Kafka客户端实例好之后,客户端线程就启动了,while不断循环去处理请求。

@Override public void run() { log.trace("Thread starting"); try { processRequests(); } finally { ... } }

接着,主程序调用客户端的 describeCluster 方法来获取一些Kafka集群的服务器配置,发起了一个新的Call,去选择一个请求最少的节点Node并返回,

@Override public DescribeClusterResult describeCluster(DescribeClusterOptions options) { final KafkaFutureImpl<Collection<Node>> describeClusterFuture = new KafkaFutureImpl<>(); final KafkaFutureImpl<Node> controllerFuture = new KafkaFutureImpl<>(); final KafkaFutureImpl<String> clusterIdFuture = new KafkaFutureImpl<>(); final KafkaFutureImpl<Set<AclOperation>> authorizedOperationsFuture = new KafkaFutureImpl<>(); final long now = time.milliseconds(); runnable.call(new Call("listNodes", calcDeadlineMs(now, options.timeoutMs()), new LeastLoadedNodeProvider()) { @Override MetadataRequest.Builder createRequest(int timeoutMs) { // Since this only requests node information, it's safe to pass true for allowAutoTopicCreation (and it // simplifies communication with older brokers) return new MetadataRequest.Builder(new MetadataRequestData() .setTopics(Collections.emptyList()) .setAllowAutoTopicCreation(true) .setIncludeClusterAuthorizedOperations(options.includeAuthorizedOperations())); } ... }

这个新的Call就会被客户端线程复制到pendingCall进行处理,为这个Call选择一个节点进行处理。

1 // Choose nodes for our pending calls. 2 pollTimeout = Math.min(pollTimeout, maybeDrainPendingCalls(now)); 3 long metadataFetchDelayMs = metadataManager.metadataFetchDelayMs(now); 4 if (metadataFetchDelayMs == 0) { 5 metadataManager.transitionToUpdatePending(now); 6 Call metadataCall = makeMetadataCall(now); 7 // Create a new metadata fetch call and add it to the end of pendingCalls. 8 // Assign a node for just the new call (we handled the other pending nodes above). 9 10 if (!maybeDrainPendingCall(metadataCall, now)) 11 pendingCalls.add(metadataCall); 12 }

选择具有最少未完成请求且至少符合连接条件的节点,首先会去测试能不能连接。

1 @Override 2 public Node leastLoadedNode(long now) { 3 List<Node> nodes = this.metadataUpdater.fetchNodes(); 4 if (nodes.isEmpty()) 5 throw new IllegalStateException("There are no nodes in the Kafka cluster"); 6 int inflight = Integer.MAX_VALUE; 7 8 Node foundConnecting = null; 9 Node foundCanConnect = null; 10 Node foundReady = null; 11 12 int offset = this.randOffset.nextInt(nodes.size()); 13 for (int i = 0; i < nodes.size(); i++) { 14 int idx = (offset + i) % nodes.size(); 15 Node node = nodes.get(idx); 16 if (canSendRequest(node.idString(), now)) { 17 int currInflight = this.inFlightRequests.count(node.idString()); 18 if (currInflight == 0) { 19 // if we find an established connection with no in-flight requests we can stop right away 20 log.trace("Found least loaded node {} connected with no in-flight requests", node); 21 return node; 22 } else if (currInflight < inflight) { 23 // otherwise if this is the best we have found so far, record that 24 inflight = currInflight; 25 foundReady = node; 26 } 27 } else if (connectionStates.isPreparingConnection(node.idString())) { 28 foundConnecting = node; 29 } else if (canConnect(node, now)) { 30 if (foundCanConnect == null || 31 this.connectionStates.lastConnectAttemptMs(foundCanConnect.idString()) > 32 this.connectionStates.lastConnectAttemptMs(node.idString())) { 33 foundCanConnect = node; 34 } 35 } else { 36 log.trace("Removing node {} from least loaded node selection since it is neither ready " + 37 "for sending or connecting", node); 38 } 39 }

Kafka客户端的线处理主程序发起的请求。顺着代码走下去,发现 leastLoadedNode 方法返回的节点正是服务器的ip和port。所以当前这个结点来处理Call请求,紧接着是去给这个结点“打电话”。

1 pollTimeout = Math.min(pollTimeout, sendEligibleCalls(now));

现在是打电话的流程,根据要打电话的名单列表中一个一个的打电话。

1 private long sendEligibleCalls(long now) { 2 long pollTimeout = Long.MAX_VALUE; 3 for (Iterator<Map.Entry<Node, List<Call>>> iter = callsToSend.entrySet().iterator(); iter.hasNext(); ) { 4 Map.Entry<Node, List<Call>> entry = iter.next(); 5 List<Call> calls = entry.getValue(); 6 if (calls.isEmpty()) { 7 iter.remove(); 8 continue; 9 } 10 Node node = entry.getKey(); 11 if (!client.ready(node, now)) { 12 long nodeTimeout = client.pollDelayMs(node, now); 13 pollTimeout = Math.min(pollTimeout, nodeTimeout); 14 log.trace("Client is not ready to send to {}. Must delay {} ms", node, nodeTimeout); 15 continue; 16 } 17 Call call = calls.remove(0); 18 int requestTimeoutMs = Math.min(KafkaAdminClient.this.requestTimeoutMs, 19 calcTimeoutMsRemainingAsInt(now, call.deadlineMs)); 20 AbstractRequest.Builder<?> requestBuilder; 21 try { 22 requestBuilder = call.createRequest(requestTimeoutMs); 23 } catch (Throwable throwable) { 24 call.fail(now, new KafkaException(String.format( 25 "Internal error sending %s to %s.", call.callName, node))); 26 continue; 27 } 28 ClientRequest clientRequest = client.newClientRequest(node.idString(), requestBuilder, now, 29 true, requestTimeoutMs, null); 30 log.debug("Sending {} to {}. correlationId={}", requestBuilder, node, clientRequest.correlationId()); 31 client.send(clientRequest, now); 32 getOrCreateListValue(callsInFlight, node.idString()).add(call); 33 correlationIdToCalls.put(clientRequest.correlationId(), call); 34 } 35 return pollTimeout; 36 }

client.ready 检查节点的状态,节点没有状态就会开始初始化连接,有状态则会检查通过Selector打开的KafkaChannel是否就绪,最后检查能否向这个节点发送更多请求,具备这些请求就可以返回true,继续对这个节点打电话。

1 public boolean ready(Node node, long now) { 2 if (node.isEmpty()) 3 throw new IllegalArgumentException("Cannot connect to empty node " + node); 4 5 if (isReady(node, now)) 6 return true; 7 8 if (connectionStates.canConnect(node.idString(), now)) 9 // if we are interested in sending to a node and we don't have a connection to it, initiate one 10 initiateConnect(node, now); 11 12 return false; 13 }

isReady(node, now) 中检查给定 id 的节点是否准备好发送更多请求 canSendRequest 。

1 private boolean canSendRequest(String node, long now) { 2 return connectionStates.isReady(node, now) && selector.isChannelReady(node) && 3 inFlightRequests.canSendMore(node); 4 }

节点没准备好就会初始化连接, connectionStates.canConnect 是没有连接并且至少在最小重新连接退避周期内没有连接的话就能初始化连接。

1 private void initiateConnect(Node node, long now) { 2 String nodeConnectionId = node.idString(); 3 try { 4 connectionStates.connecting(nodeConnectionId, now, node.host(), clientDnsLookup); 5 InetAddress address = connectionStates.currentAddress(nodeConnectionId); 6 log.debug("Initiating connection to node {} using address {}", node, address); 7 selector.connect(nodeConnectionId, 8 new InetSocketAddress(address, node.port()), 9 this.socketSendBuffer, 10 this.socketReceiveBuffer); 11 } catch (IOException e) { 12 log.warn("Error connecting to node {}", node, e); 13 // Attempt failed, we'll try again after the backoff 14 connectionStates.disconnected(nodeConnectionId, now); 15 // Notify metadata updater of the connection failure 16 metadataUpdater.handleServerDisconnect(now, nodeConnectionId, Optional.empty()); 17 } 18 }

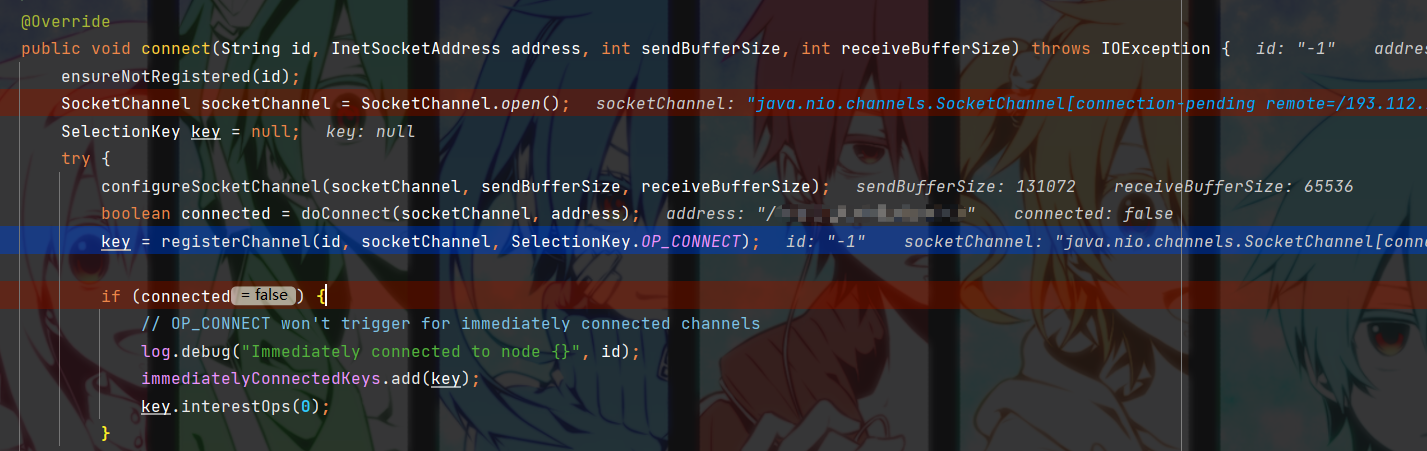

通过Kafka的Selector(底层用的还是NIO的Selector)进行连接节点,将这个连接添加到与给定 id 号关联的这个 nioSelector。

1 @Override 2 public void connect(String id, InetSocketAddress address, int sendBufferSize, int receiveBufferSize) throws IOException { 3 ensureNotRegistered(id); 4 SocketChannel socketChannel = SocketChannel.open(); 5 SelectionKey key = null; 6 try { 7 configureSocketChannel(socketChannel, sendBufferSize, receiveBufferSize); 8 boolean connected = doConnect(socketChannel, address); 9 key = registerChannel(id, socketChannel, SelectionKey.OP_CONNECT); 10 11 if (connected) { 12 // OP_CONNECT won't trigger for immediately connected channels 13 log.debug("Immediately connected to node {}", id); 14 immediatelyConnectedKeys.add(key); 15 key.interestOps(0); 16 } 17 } catch (IOException | RuntimeException e) { 18 if (key != null) 19 immediatelyConnectedKeys.remove(key); 20 channels.remove(id); 21 socketChannel.close(); 22 throw e; 23 } 24 }

但是这里进行连接时返回的是false,初始化连接失败。回到打电话的主流程中,打电话这个节点就被跳过了,通话列表里只有这个节点。

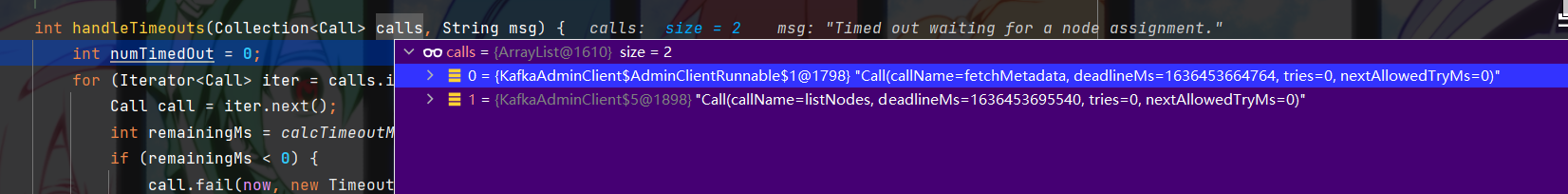

最后,Kafka客户端主线程每次while循环都会检查每个Call的请求超时的Call请求,并将其移除请求Call列表。

最后因为连接不上Kafka服务器,连接超时,客户端抛出超时异常。

最后才在控制台的输出发现,Kafka客户端一直尝试连接的节点ip地址是127.0.0.1,这不是本地回环地址吗,本地也没有启动Kafka,那应该是服务器配置问题了。因为在服务器上部署Kafka的时候并没有什么异常,服务器上也能正常使用生产者和消费者的控制台命令,进行生产/消费消息,那应该是缺少什么才会导致寻找的结点IP错误。

1 19519 [kafka-admin-client-thread | adminclient-1] WARN org.apache.kafka.clients.NetworkClient - [AdminClient clientId=adminclient-1] Connection to node 0 (localhost.localdomain/127.0.0.1:9092) could not be established. Broker may not be available.

三、从Kafka服务器配置排查问题

然后转向排查服务器配置,观察命令行启动Kafka服务器的输出日志:

[2021-11-08 18:51:46,987] INFO KafkaConfig values: advertised.host.name = null advertised.listeners = null advertised.port = null ... host.name=null ... listeners = null ... port = 9092 ... (kafka.server.KafkaConfig)

看到其配置参数的值有很多null,在config目录下的server.properties启动配置文件中,也只提到过listeners这个参数,还是注释里的。此时把问题转向Kafka的配置问题上,查查这些值为空的参数的作用。

listeners 由于 Kafka Connect 旨在作为服务运行,因此它还提供了用于管理连接器的 REST API。 可以使用 listeners 侦听器配置选项配置 REST API 服务器。

advertised.host.name 已弃用,仅在用在未使用 listeners 或者使用了 advertised.listeners 的器情况下。有 advertised.listeners 完全可以替代此属性。在 IaaS 环境中,这可能需要与代理绑定的接口不同。如果未设置,它将使用 host.name 的值(如果已配置)。否则它将使用从 java.net.InetAddress.getCanonicalHostName() 返回的值。

advertised.port 已弃用,使用情况同上。在 IaaS 环境中,这可能需要与代理绑定的端口不同。如果未设置,它将发布代理绑定到的相同端口。

advertised.listeners 如果与 listeners 配置属性不同,则发布到 ZooKeeper 供客户端使用的侦听器。在 IaaS 环境中,这可能需要与代理绑定的接口不同。如果未设置,则将使用侦听器的值。与侦听器不同,通告 0.0.0.0 元地址是无效的。 同样与侦听器不同的是,此属性中可以有重复的端口,因此可以将一个侦听器配置为通告另一个侦听器的地址。这在使用外部负载平衡器的某些情况下很有用。

四、得出结论

可以看出, advertised.listeners 就是关键,这个配置就是用来给定一个外部地址(外网ip),以便客户端能正确的连接Kafka,这个没有设定配置的话,就会默认使用listeners的值,这样外网的客户端就尝试着去使用内网的ip去连接代理服务器,在IaaS外部使用其内网的ip去访问根本无法连的上的,所以一直连不上最后会出现超时异常。

1 19519 [kafka-admin-client-thread | adminclient-1] WARN org.apache.kafka.clients.NetworkClient - [AdminClient clientId=adminclient-1] Connection to node 0 (localhost.localdomain/127.0.0.1:9092) could not be established. Broker may not be available.

五、解决办法

Kafka服务器启动的配置文件 server.properties 中正确配置 advertised.listeners ,把其配置成外网的ip,这样,外网的客户端就能连接的上Kafka了。

############################# Socket Server Settings ############################# # The address the socket server listens on. It will get the value returned from # java.net.InetAddress.getCanonicalHostName() if not configured. # FORMAT: # listeners = listener_name://host_name:port # EXAMPLE: # listeners = PLAINTEXT://your.host.name:9092 listeners=PLAINTEXT://:9092 advertised.listeners=PLAINTEXT://外网ip:9092

浙公网安备 33010602011771号

浙公网安备 33010602011771号