Scraping book content with Python

import requests

from bs4 import BeautifulSoup

import time

import random

class DoubanBookReviewsCrawler:

def init(self):

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,/;q=0.8',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',

'Connection': 'keep-alive',

}

self.book_mapping = {

'平凡的世界': '1084336',

'都挺好': '30328107',

}

self.sort_mapping = {

'热门': 'hottest',

'最新': 'new_score',

}

def get_reviews(self, book_name, sort_type, pages=3):

"""爬取指定图书的短评信息"""

if book_name not in self.book_mapping:

print(f"抱歉,暂不支持爬取《{book_name}》的评论信息。")

return []

book_id = self.book_mapping[book_name]

sort_code = self.sort_mapping[sort_type]

reviews = []

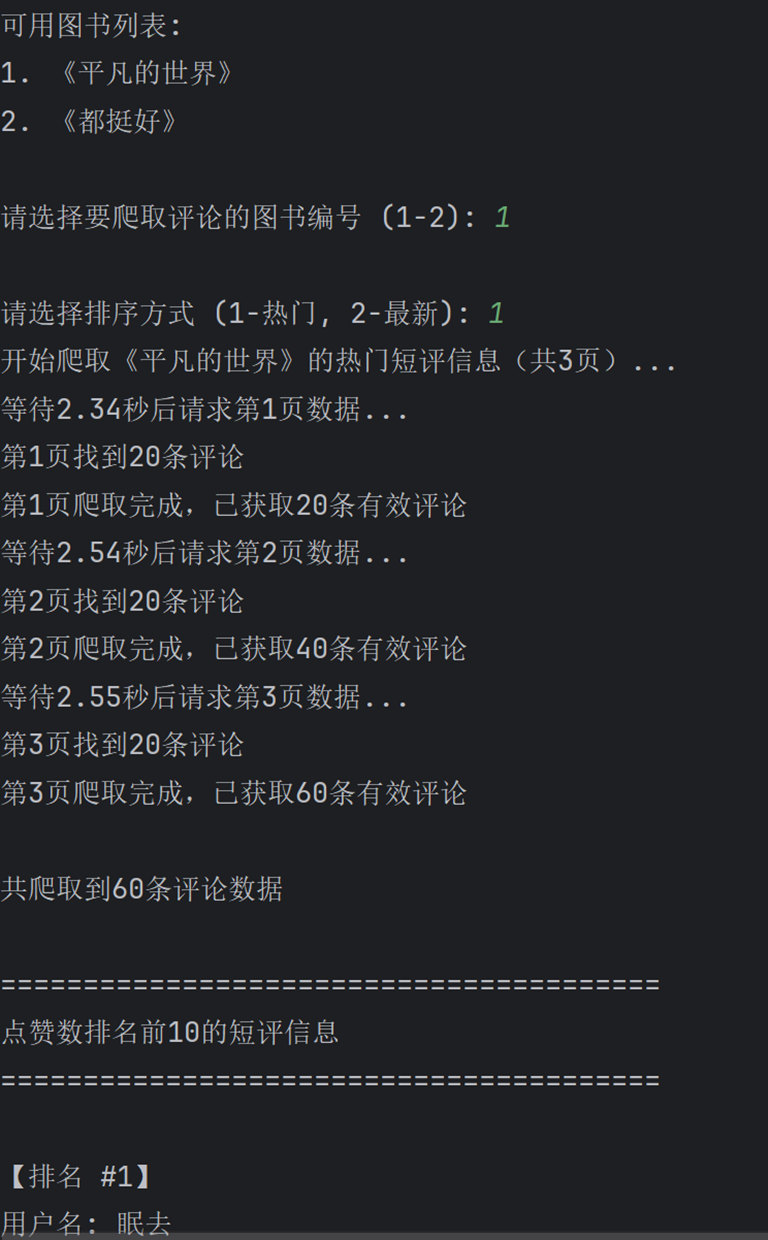

print(f"开始爬取《{book_name}》的{sort_type}短评信息(共{pages}页)...")

for page in range(pages):

start = page * 20 # 每页20条评论

url = f"https://book.douban.com/subject/{book_id}/comments/?start={start}&limit=20&status=P&sort={sort_code}"

try:

# 添加随机延迟,避免请求过于频繁

wait_time = random.uniform(2, 5)

print(f"等待{wait_time:.2f}秒后请求第{page + 1}页数据...")

time.sleep(wait_time)

response = requests.get(url, headers=self.headers, timeout=15)

response.raise_for_status()

# 检查是否被反爬

if '检测到有异常请求' in response.text:

print(f"第{page + 1}页请求被拦截,可能触发了反爬机制")

continue

soup = BeautifulSoup(response.text, 'html.parser')

# 尝试多种选择器定位评论区域

comment_items = soup.select('div.comment-item')

if not comment_items:

# 尝试备用选择器

comment_items = soup.select('li.comment-item')

if not comment_items:

print(f"第{page + 1}页未找到评论内容,可能页面结构已更新")

continue

print(f"第{page + 1}页找到{len(comment_items)}条评论")

for item in comment_items:

review = self._parse_review(item)

if review:

reviews.append(review)

print(f"第{page + 1}页爬取完成,已获取{len(reviews)}条有效评论")

except Exception as e:

print(f"爬取第{page + 1}页时出错: {e}")

continue

return reviews

def _parse_review(self, item):

"""解析单条评论信息"""

try:

# 用户名

user_elem = item.select_one('span.comment-info a')

if not user_elem:

return None

user_name = user_elem.text.strip()

# 评分

rating = '未评分'

rating_elem = item.select_one('span.comment-info span[title]')

if rating_elem:

rating_title = rating_elem.get('title', '')

if rating_title:

rating = rating_title

# 评论时间

time_elem = item.select_one('span.comment-info span:last-child')

comment_time = '未知时间'

if time_elem and time_elem.get('title'):

comment_time = time_elem.get('title').strip()

elif time_elem and not time_elem.get('class'):

comment_time = time_elem.text.strip()

# 短评内容

content_elem = item.select_one('span.short')

content = content_elem.text.strip() if content_elem else ''

# 点赞数

vote_elem = item.select_one('span.vote-count')

vote_count = 0

if vote_elem and vote_elem.text.strip().isdigit():

vote_count = int(vote_elem.text.strip())

return {

'user_name': user_name,

'rating': rating,

'comment_time': comment_time,

'content': content,

'vote_count': vote_count

}

except Exception as e:

print(f"解析评论时出错: {e}")

return None

def print_top_reviews(self, reviews, count=10, sort_by='vote_count'):

"""输出前N条评论"""

if not reviews:

print("没有可显示的评论信息。")

return

# 按指定字段排序

sorted_reviews = sorted(reviews, key=lambda x: x[sort_by], reverse=True)[:count]

print(f"\n{'=' * 40}")

if sort_by == 'vote_count':

print(f"点赞数排名前{count}的短评信息")

else:

print(f"{sort_by}排序的前{count}条短评信息")

print(f"{'=' * 40}")

for i, review in enumerate(sorted_reviews, 1):

print(f"\n【排名 #{i}】")

print(f"用户名: {review['user_name']}")

print(f"评分: {review['rating']}")

print(f"评论时间: {review['comment_time']}")

print(f"点赞数: {review['vote_count']}")

print(f"短评内容: {review['content'][:100]}...") # 限制显示长度

print(f"{'-' * 40}")

def main():

crawler = DoubanBookReviewsCrawler()

print("=" * 50)

print(" 豆瓣图书评论数据分析工具 ")

print("=" * 50)

选择图书

print("\n可用图书列表:")

for i, book in enumerate(crawler.book_mapping.keys(), 1):

print(f"{i}. 《{book}》")

book_choice = input("\n请选择要爬取评论的图书编号 (1-2): ")

try:

book_choice = int(book_choice)

if book_choice not in [1, 2]:

raise ValueError

book_name = list(crawler.book_mapping.keys())[book_choice - 1]

except:

print("无效的选择,使用默认图书《平凡的世界》")

book_name = "平凡的世界"

选择排序方式

sort_type = input("\n请选择排序方式 (1-热门, 2-最新): ")

try:

sort_type = int(sort_type)

if sort_type == 1:

sort_name = "热门"

elif sort_type == 2:

sort_name = "最新"

else:

raise ValueError

except:

print("无效的选择,使用默认排序方式【热门】")

sort_name = "热门"

爬取评论

reviews = crawler.get_reviews(book_name, sort_name)

if reviews:

print(f"\n共爬取到{len(reviews)}条评论数据")

# 按排序方式输出前10条

crawler.print_top_reviews(reviews, 10, sort_by='vote_count')

# 按点赞数输出前10条

crawler.print_top_reviews(reviews, 10, sort_by='vote_count')

else:

print("未能获取到任何评论信息,可能是由于反爬机制或页面结构变化导致的。")

print("请尝试以下操作:")

print("1. 稍后再试")

print("2. 更新代码中的请求头信息")

print("3. 检查并更新评论选择器")

if name == "main":

main()
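
The crawler pages through Douban's comment list by advancing `start` in steps of 20. The sketch below builds the same page URLs with the standard library's `urllib.parse.urlencode`, which escapes parameter values instead of pasting them into an f-string. `build_comment_urls` is a hypothetical helper, and the query parameters are copied from the script above; whether Douban still accepts them is an assumption, not something verified here.

```python
from urllib.parse import urlencode


def build_comment_urls(book_id, sort_code, pages=3, per_page=20):
    """Hypothetical helper: one comments URL per page, same query as the script."""
    base = f"https://book.douban.com/subject/{book_id}/comments/"
    urls = []
    for page in range(pages):
        # `start` is the offset of the first review on this page: 0, 20, 40, ...
        params = {
            'start': page * per_page,
            'limit': per_page,
            'status': 'P',
            'sort': sort_code,
        }
        urls.append(f"{base}?{urlencode(params)}")
    return urls


for url in build_comment_urls('1084336', 'hottest', pages=2):
    print(url)
```

A dict keeps insertion order, so the generated query string has the same parameter order as the original f-string URL.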

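`print_top_reviews` sorts every review and then slices off the first `count`. The standard library's `heapq.nlargest` is documented as equivalent to `sorted(iterable, key=key, reverse=True)[:n]` and avoids a full sort when `n` is small. The sketch assumes review dicts shaped like those returned by `_parse_review`; `top_reviews` and the sample data are hypothetical.

```python
import heapq


def top_reviews(reviews, count=10, sort_by='vote_count'):
    """Hypothetical helper: the `count` reviews with the largest `sort_by` value."""
    return heapq.nlargest(count, reviews, key=lambda r: r[sort_by])


# Made-up sample data using the same keys the crawler produces
sample = [
    {'user_name': 'a', 'vote_count': 3},
    {'user_name': 'b', 'vote_count': 12},
    {'user_name': 'c', 'vote_count': 7},
]
print([r['user_name'] for r in top_reviews(sample, count=2)])  # ['b', 'c']
```

With only about 60 reviews per run (3 pages of 20), the difference from `sorted` is negligible. The helper mainly shows the intent ("top N by key") more directly.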
浙公网安备 33010602011771号