Process Pools and Thread Pools: concurrent.futures and multiprocessing

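1. Why use process pools and thread pools

Starting a process or a thread consumes resources; a thread simply costs less than a process.

You cannot start processes or threads without limit, because the machine's hardware cannot keep up.

The goal is to get as much as possible out of the hardware while keeping it working normally.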

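IO-bound vs CPU-bound:

- IO-bound: the program spends most of its time reading files or network sockets.

- CPU-bound: the program spends most of its time on math and logic that keeps the CPU busy; this is the kind of work that parallel computing targets.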
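To make the IO-bound case concrete, here is a minimal sketch that compares running a few waiting tasks one after another with running them in a thread pool. The names `fake_io`, `run_serial`, and `run_pooled` are made up for this illustration, and `time.sleep` only stands in for a real file or socket read.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(i, delay=0.2):
    """Stand-in for an IO-bound call: it only waits, like a slow read."""
    time.sleep(delay)
    return i


def run_serial(n):
    """Run n waiting tasks one after another; return (results, seconds)."""
    start = time.perf_counter()
    results = [fake_io(i) for i in range(n)]
    return results, time.perf_counter() - start


def run_pooled(n, workers):
    """Run n waiting tasks in a thread pool; return (results, seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_io, range(n)))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    _, serial_time = run_serial(4)
    _, pooled_time = run_pooled(4, workers=4)
    print(f"serial: {serial_time:.2f}s, pooled: {pooled_time:.2f}s")
```

Because the tasks only wait, the threads overlap their waiting time. For CPU-bound work in CPython, threads usually do not give this speedup and a process pool is the more likely fit.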

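The pool concept

- A pool gets as much as possible out of the hardware while keeping it working normally.

- Compared with starting one worker per task, it caps how many tasks run at once. That can make the program slower, but it keeps the hardware from being overloaded.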
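The cap that a pool puts on concurrency can be observed directly. The sketch below counts how many tasks are running at the same moment; `peak_concurrency` and its internal counter are hypothetical helpers written for this illustration, not part of any library.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def peak_concurrency(n_tasks, workers):
    """Run n_tasks in a pool and return the largest number seen running at once."""
    lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def task(_):
        with lock:
            state["active"] += 1
            state["peak"] = max(state["peak"], state["active"])
        time.sleep(0.05)  # pretend to work
        with lock:
            state["active"] -= 1

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(task, range(n_tasks)))  # wait for every task
    return state["peak"]


if __name__ == "__main__":
    # Ten tasks are submitted, but the peak never exceeds the pool size.
    print(peak_concurrency(n_tasks=10, workers=2))
```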

Python 3.2 introduced concurrent.futures.

Python 3.4 added asyncio to the standard library, and Python 3.5 and later use the async/await syntax (coroutines).

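2. concurrent.futures

The standard-library module concurrent.futures ships with Python 3.2+; on Python 2 it has to be installed separately. It provides two classes, ThreadPoolExecutor (thread pool) and ProcessPoolExecutor (process pool), which are higher-level abstractions over threading and multiprocessing for writing thread pools and process pools. You submit tasks directly to the pool; there is no need to maintain a Queue yourself and worry about deadlocks, because the pool schedules the tasks automatically.

pool.submit(fn, *args)  # submit a task for execution; returns a Future immediately

pool.submit(fn, *args).result()  # get the value the function returned; calling it right after submit blocks, so the program becomes serial; read the result inside a callback instead

pool.submit(fn, *args).add_done_callback(callback)  # attach a callback to each submitted task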

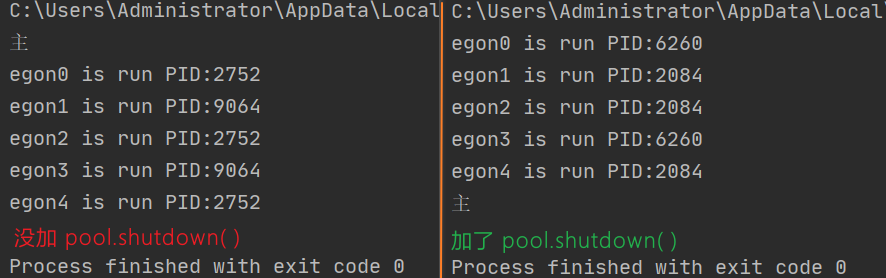

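2-1 concurrent.futures process pool

Basic usage

from concurrent.futures import ProcessPoolExecutor  # process pool
import os
import time
import random


def walk(name):
    print(f'{name} is running, PID: {os.getpid()}')
    time.sleep(random.randint(1, 3))


if __name__ == '__main__':
    # 1. Create a pool of 2 worker processes
    #    (the default is the number of CPU cores on this machine).
    #    The pool keeps reusing these workers; they are not created
    #    and destroyed again for every task.
    pool = ProcessPoolExecutor(2)

    for i in range(5):  # submit 5 tasks, but the pool only has 2 slots
        # 2. Submit a task: pool.submit(fn, *args)
        #    Synchronous submission: wait in place for the task's result
        #    and do nothing else in the meantime.
        #    Asynchronous submission: do not wait for the result;
        #    execution continues. submit() is asynchronous.
        pool.submit(walk, f'egon{i}')

    pool.shutdown()  # close the pool and wait for all tasks to finish (blocks the main process)
    print('main')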

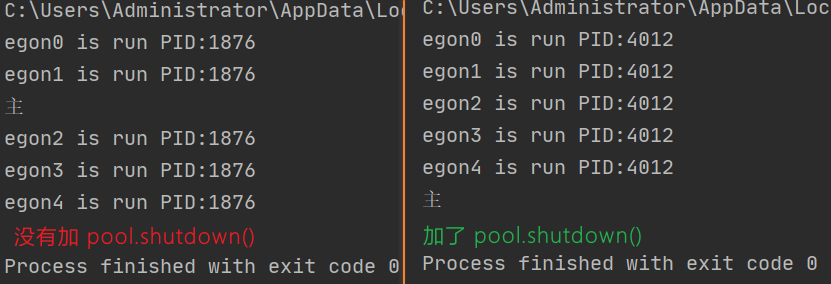

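2-2 concurrent.futures thread pool

Basic usage

All threads in a thread pool report the same PID, because they run inside one process.

from concurrent.futures import ThreadPoolExecutor  # thread pool
import os
import time
import random


def walk(name):
    print(f'{name} is running, PID: {os.getpid()}')
    time.sleep(random.randint(1, 3))


if __name__ == '__main__':
    # Create a pool of 2 worker threads. The default is 5 x the CPU count
    # on Python 3.5 to 3.7 and min(32, os.cpu_count() + 4) from Python 3.8.
    # The pool keeps reusing these threads; they are not created and
    # destroyed again for every task.
    pool = ThreadPoolExecutor(2)

    for i in range(5):
        # submit() submits the task asynchronously: pool.submit(fn, *args)
        #   Synchronous submission: wait in place for the task's result
        #   and do nothing else in the meantime.
        #   Asynchronous submission: do not wait for the result;
        #   execution continues.
        pool.submit(walk, f'egon{i}')

    pool.shutdown()  # close the pool and wait for all tasks to finish (blocks the main thread)
    print('main')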
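The claim that pool threads share one PID can be checked with a small sketch. The helper names `where_am_i` and `collect` are invented for this example; it records the PID and the thread name seen by each task.

```python
import os
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def where_am_i(_):
    time.sleep(0.05)  # give the pool a reason to use more than one thread
    return os.getpid(), threading.current_thread().name


def collect(n_tasks=6, workers=3):
    """Return one (pid, thread name) pair per task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(where_am_i, range(n_tasks)))


if __name__ == "__main__":
    for pid, thread_name in collect():
        print(pid, thread_name)
```

Every row should carry the PID of the main process, while the thread names vary among at most `workers` distinct values.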

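Getting return values: asynchronous vs synchronous calls

Ways to submit tasks

Getting return values: synchronous call

from concurrent.futures import ThreadPoolExecutor  # thread pool
import time
import random


def walk(i):
    print(f'{i} is running')
    time.sleep(random.randint(1, 3))
    return i * i


if __name__ == '__main__':
    pool = ThreadPoolExecutor(2)  # 2 worker threads
    for i in range(5):
        res = pool.submit(walk, i)  # res is a Future object
        # res.result() returns the task's return value. Calling it here
        # turns the submission into a synchronous one: like join, it blocks
        # until the task is done, so the next task is not submitted yet.
        print(res.result())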
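If the results are needed in the main thread, one way to keep the tasks concurrent is to submit everything first and call `result()` only afterwards. This is a sketch under that assumption; `square` and `submit_then_collect` are illustrative names.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def square(i):
    time.sleep(0.1)
    return i * i


def submit_then_collect(n, workers=2):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Submit everything first; submit() returns immediately.
        futures = [pool.submit(square, i) for i in range(n)]
        # Only now block on each Future, in submission order.
        return [f.result() for f in futures]


if __name__ == "__main__":
    print(submit_then_collect(5))
```

The list comes back in submission order because the futures are read in that order, even though the tasks overlap while running.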

Getting return values: asynchronous call ★★★★★

from concurrent.futures import ThreadPoolExecutor
import time
import random


def walk(i):
    print(f'{i} is running')
    time.sleep(random.randint(1, 3))
    return i * i


def ppp(res):  # 2. res is the Future object
    value = res.result()  # 3. res.result() returns the task's return value
    print(value)


if __name__ == '__main__':
    pool = ThreadPoolExecutor(2)
    for i in range(5):
        # 1. add_done_callback(ppp) registers a callback; the Future is
        #    passed to it as the only argument. add_done_callback itself
        #    returns None, so there is nothing useful to assign.
        pool.submit(walk, i).add_done_callback(ppp)
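Callbacks are one way to consume results without blocking on each task in turn. As an alternative sketch, `concurrent.futures.as_completed` (from the same module, though not used in the text above) yields each Future as soon as it finishes. `square` and `results_as_completed` are names chosen for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def square(i):
    time.sleep(0.05 * (3 - i % 3))  # uneven durations
    return i * i


def results_as_completed(n, workers=3):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(square, i) for i in range(n)]
        # as_completed yields each Future when it finishes,
        # which is not necessarily submission order.
        return [f.result() for f in as_completed(futures)]


if __name__ == "__main__":
    print(results_as_completed(5))
```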

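3. multiprocessing

3-1 multiprocessing process pool

3-1-1 Running multiple processes

3-1-1-1 apply_async

apply_async() is asynchronous and non-blocking: it does not wait for the submitted task to finish, and the operating system schedules the worker processes, so several processes run in parallel and the program finishes sooner.

- func: the function to run

- args=(): positional arguments

- kwds={}: keyword arguments

- callback: callback function, called with the result

- error_callback: callback function, called with the exception when the task raises

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    print(f'{name} is done')
    return name


def func2(res):
    # callback: receives the value returned by task
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


if __name__ == '__main__':
    pool = Pool(5)
    for i in range(1, 5):
        pool.apply_async(func=task, args=(i,), callback=func2)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
2 is done
4 is done
3 is done
1: current process MainProcess, pid 12080
2: current process MainProcess, pid 12080
4: current process MainProcess, pid 12080
3: current process MainProcess, pid 12080
main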
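The `args` and `kwds` parameters are easiest to see side by side. The sketch below uses `multiprocessing.pool.ThreadPool`, which exposes the same `apply_async` signature as `Pool`, so that it runs without a `__main__` guard; `greet` and `run` are hypothetical names.

```python
from multiprocessing.pool import ThreadPool


def greet(name, greeting="hello", punctuation="!"):
    return f"{greeting}, {name}{punctuation}"


def run():
    with ThreadPool(2) as pool:
        # Positional arguments go in args, keyword arguments in kwds.
        a = pool.apply_async(greet, args=("egon",))
        b = pool.apply_async(
            greet, args=("egon",), kwds={"greeting": "bye", "punctuation": "."}
        )
        # get() blocks until the task is done and returns its value.
        return a.get(), b.get()


if __name__ == "__main__":
    print(run())
```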

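3-1-1-2 map_async

map_async() is also asynchronous and non-blocking.

The function passed to map_async receives exactly one argument per item.

- func: the function to run

- iterable: the iterable of items

- chunksize: the number of items in each chunk; for large inputs, a larger chunksize can improve performance. When it is not given, the default is computed as follows (and rounded up by one when extra is non-zero):

chunksize, extra = divmod(len(iterable), len(pool) * 4)

- callback: callback function, called once with the list of all results

- error_callback: callback function, called with the exception when a task raises

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    print(f'{name} is done')
    return name


def func2(res):
    # callback: receives the list of all return values
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


if __name__ == '__main__':
    pool = Pool(5)
    pool.map_async(func=task, iterable=list(range(1, 5)), callback=func2)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
2 is done
3 is done
4 is done
[1, 2, 3, 4]: current process MainProcess, pid 12548
main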
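The default chunk size used by map_async can be worked through by hand. The function below mirrors the divmod expression given in the parameter list, plus the round-up step that CPython's pool implementation applies when there is a remainder; `default_chunksize` is a name invented here and is not a public multiprocessing API.

```python
def default_chunksize(n_items, n_workers):
    """Sketch of the default chunk size for map_async (not a public API)."""
    chunksize, extra = divmod(n_items, n_workers * 4)
    if extra:
        chunksize += 1  # round up so that no items are left over
    return chunksize


if __name__ == "__main__":
    for n in (4, 100, 101, 1000):
        print(n, default_chunksize(n, n_workers=5))
```

With 5 workers, 4 items give a chunk size of 1, so each item travels to a worker on its own. Large inputs are split into roughly four chunks per worker.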

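3-1-1-3 starmap_async

starmap_async differs from map_async in that it can pass multiple arguments: each item of the iterable is unpacked into the function's arguments.

- func: the function to run

- iterable: the iterable of argument tuples

- chunksize: the number of items in each chunk; for large inputs, a larger chunksize can improve performance. When it is not given, the default is computed as follows (and rounded up by one when extra is non-zero):

chunksize, extra = divmod(len(iterable), len(pool) * 4)

- callback: callback function, called once with the list of all results

- error_callback: callback function, called with the exception when a task raises

from multiprocessing import Pool


def task2(name, age):
    print(name, age)


if __name__ == '__main__':
    pool = Pool(5)
    pool.starmap_async(func=task2, iterable=[('张珊', 5), ('李四', 4), ('王五', 9)])

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')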
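The example above only prints inside the workers. To read the return values from starmap_async, keep the object it returns and call `get()` on it. This sketch again uses `ThreadPool` (same method, no `__main__` guard needed); `describe` and `run` are illustrative names.

```python
from multiprocessing.pool import ThreadPool


def describe(name, age):
    return f"{name} is {age}"


def run(pairs):
    with ThreadPool(3) as pool:
        async_result = pool.starmap_async(describe, pairs)
        # One result object covers the whole batch;
        # get() returns a list in input order.
        return async_result.get()


if __name__ == "__main__":
    print(run([("a", 5), ("b", 4), ("c", 9)]))
```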

3-1-1-4 imap [ordered]

Results come back in the same order as the iterable.

res: <multiprocessing.pool.IMapIterator object>

- func: the function to run

- iterable: the iterable of items

- chunksize: the number of items in each chunk; for large inputs, a larger chunksize can improve performance. Unlike map_async, imap defaults to chunksize=1.

from multiprocessing import Pool


def task2(name):
    print(name)
    return f"{name} OK"


if __name__ == '__main__':
    pool = Pool(5)
    res = pool.imap(func=task2, iterable=list(range(1, 5)))
    for i in res:  # yields results in input order
        print(i)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1
3
4
2
1 OK
2 OK
3 OK
4 OK
main

3-1-1-5 imap_unordered [unordered]

Results come back in no particular order.

res: <multiprocessing.pool.IMapUnorderedIterator object>

- func: the function to run

- iterable: the iterable of items

- chunksize: the number of items in each chunk; for large inputs, a larger chunksize can improve performance. Unlike map_async, imap_unordered defaults to chunksize=1.

from multiprocessing import Pool


def task2(name):
    print(name)
    return f"{name} OK"


if __name__ == '__main__':
    pool = Pool(5)
    res = pool.imap_unordered(func=task2, iterable=list(range(1, 5)))
    for i in res:  # yields results as they complete
        print(i)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1
2
3
4
1 OK
3 OK
2 OK
4 OK
main

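3-1-1-6 Differences

3-1-1-6-1 apply_async vs map_async

apply_async(): each task is submitted by hand, so every task has its own independent result object. If some tasks raise an exception, the others are not affected, and fetching the result of a failed task re-raises that task's exception.

map_async(): the items are not independent, because they all share one result object. If any item raises an exception, the whole result fails and the return values of the other items can no longer be retrieved, even though those items still run (see the output in 3-1-3-3).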
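The difference in failure behaviour can be sketched as follows. The example uses `ThreadPool`, which has the same `apply_async` and `map_async` methods as `Pool`, so it runs without a `__main__` guard; `risky`, `with_apply_async`, and `with_map_async` are hypothetical names.

```python
from multiprocessing.pool import ThreadPool


def risky(n):
    if n == 2:
        raise ValueError("bad input: 2")
    return n * 10


def with_apply_async(items):
    outcomes = []
    with ThreadPool(4) as pool:
        handles = [pool.apply_async(risky, args=(n,)) for n in items]
        for h in handles:
            try:
                outcomes.append(h.get())
            except ValueError as exc:  # only this task's result is lost
                outcomes.append(f"failed: {exc}")
    return outcomes


def with_map_async(items):
    with ThreadPool(4) as pool:
        handle = pool.map_async(risky, items)
        try:
            return handle.get()
        except ValueError as exc:  # the result of the whole batch is lost
            return f"failed: {exc}"


if __name__ == "__main__":
    print(with_apply_async([1, 2, 3, 4]))
    print(with_map_async([1, 2, 3, 4]))
```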

3-1-1-6-2 map_async vs imap and imap_unordered

map_async has to wait for all tasks to finish before it returns a list, whereas imap and imap_unordered return an iterable that yields results as early as possible.

imap vs imap_unordered: imap, like map_async, waits for task results in input order, while imap_unordered does not. The iterable returned by imap_unordered yields whichever task finished first.
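A short sketch makes the ordering difference visible. It uses `ThreadPool` (same `imap` and `imap_unordered` methods as `Pool`) with tasks of different durations; `DELAYS`, `slow_echo`, `ordered`, and `unordered` are names made up for this example.

```python
import time
from multiprocessing.pool import ThreadPool

DELAYS = [0.3, 0.1, 0.2]  # the first item is the slowest


def slow_echo(delay):
    time.sleep(delay)
    return delay


def ordered():
    with ThreadPool(3) as pool:
        return list(pool.imap(slow_echo, DELAYS))


def unordered():
    with ThreadPool(3) as pool:
        return list(pool.imap_unordered(slow_echo, DELAYS))


if __name__ == "__main__":
    print(ordered())    # always input order
    print(unordered())  # completion order, so usually the fastest first
```

With imap the first result only becomes available after the slowest task, because it is first in the input. With imap_unordered the loop can start handling finished work earlier.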

3-1-1-6-3 starmap_async vs map_async

starmap_async can pass multiple arguments to the function.

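3-1-2 Using callback

callback is a function that is called as soon as a result is ready. It must take exactly one argument, and it is the first to receive the result. A callback must not do anything slow, because it blocks the thread in the main process that handles results.

3-1-2-1 apply_async example

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    print(f'{name} is done')
    return name


def func2(res):
    # callback: receives the value returned by task
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


if __name__ == '__main__':
    pool = Pool(5)
    for i in range(1, 5):
        pool.apply_async(func=task, args=(i,), callback=func2)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')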

3-1-3 Handling errors with error_callback

3-1-3-1 An exception inside apply_async

The exception is passed to func3.

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    if name == 2:
        a = 1 / 0  # deliberate ZeroDivisionError
    print(f'{name} is done')
    return name


def func2(res):
    # callback: called for tasks that returned normally
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


def func3(error):
    # error_callback: called with the exception raised by the task
    print(error)


if __name__ == '__main__':
    pool = Pool(5)
    for i in range(1, 5):
        pool.apply_async(func=task, args=(i,), callback=func2, error_callback=func3)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
3 is done
4 is done
1: current process MainProcess, pid 12660
3: current process MainProcess, pid 12660
division by zero
4: current process MainProcess, pid 12660
main

3-1-3-2 Catching the exception inside apply_async

If the task catches the exception and returns a value instead, that value goes to func2 and never reaches func3.

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    if name == 2:
        try:
            a = 1 / 0
        except ZeroDivisionError:
            return 'error 1/0'  # a normal return value, so callback is used
    print(f'{name} is done')
    return name


def func2(res):
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


def func3(error):
    print(error)


if __name__ == '__main__':
    pool = Pool(5)
    for i in range(1, 5):
        pool.apply_async(func=task, args=(i,), callback=func2, error_callback=func3)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
3 is done
4 is done
1: current process MainProcess, pid 6776
error 1/0: current process MainProcess, pid 6776
3: current process MainProcess, pid 6776
4: current process MainProcess, pid 6776
main

3-1-3-3 An exception inside map_async

Once any task raises, callback is not called at all; only error_callback receives the exception.

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    if name == 2:
        1 / 0  # deliberate ZeroDivisionError
    print(f'{name} is done')
    return name


def func2(res):
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


def func3(err):
    print(err)


if __name__ == '__main__':
    pool = Pool(5)
    pool.map_async(func=task, iterable=list(range(1, 5)), callback=func2, error_callback=func3)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
3 is done
4 is done
division by zero
main

3-1-3-4 Catching the exception inside map_async

from multiprocessing import Pool, current_process
import time


def task(name):
    print(f'{name} is running')
    time.sleep(1)
    if name == 2:
        try:
            1 / 0
        except ZeroDivisionError:
            return 'error'  # a normal return value, so callback is still used
    print(f'{name} is done')
    return name


def func2(res):
    time.sleep(1)
    print('%s: current process %s, pid %d' % (res, current_process().name, current_process().pid))


def func3(err):
    print(err)


if __name__ == '__main__':
    pool = Pool(5)
    pool.map_async(func=task, iterable=list(range(1, 5)), callback=func2, error_callback=func3)

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print('main')

Output:

1 is running
2 is running
3 is running
4 is running
1 is done
3 is done
4 is done
[1, 'error', 3, 4]: current process MainProcess, pid 11224
main

3-1-4 Getting the results

3-1-4-1 No exception inside the task

from multiprocessing import Pool, current_process
import os


def task2(name):
    print(name, os.getpid(), current_process().name)
    return f"{name} OK"


if __name__ == '__main__':
    pool = Pool(5)
    result = []
    for i in range(1, 5):
        result.append(pool.apply_async(func=task2, args=(i,)))

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print(" ok ".center(60, "="))

    for res in result:
        # ready() tells whether the task has finished
        print(res.ready())
        # successful(), once the task is ready, tells whether it finished without raising
        print(res.successful())
        # get() returns the result; with a timeout set, it raises
        # multiprocessing.TimeoutError when the timeout expires
        print(res.get())
    print('main')

Output:

1 10544 SpawnPoolWorker-3
2 8880 SpawnPoolWorker-1
3 10544 SpawnPoolWorker-3
4 8880 SpawnPoolWorker-1
============================ ok ============================
True
True
1 OK
True
True
2 OK
True
True
3 OK
True
True
4 OK
main
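The timeout behaviour of `get()` mentioned in the comments can be tried out with a short sketch. It uses `ThreadPool`, whose result objects have the same `get(timeout=...)` method as those of `Pool`; `slow` and `try_get` are illustrative names.

```python
import multiprocessing
import time
from multiprocessing.pool import ThreadPool


def slow(seconds):
    time.sleep(seconds)
    return "finished"


def try_get(task_seconds, timeout):
    pool = ThreadPool(1)
    res = pool.apply_async(slow, args=(task_seconds,))
    try:
        return res.get(timeout=timeout)
    except multiprocessing.TimeoutError:
        # get() gave up waiting; the timeout did not cancel the task.
        return "timed out"
    finally:
        pool.close()
        pool.join()  # wait for the task to finish before returning


if __name__ == "__main__":
    print(try_get(task_seconds=0.3, timeout=0.05))
    print(try_get(task_seconds=0.05, timeout=1.0))
```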

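3-1-4-2 An exception inside the task

When a task raised, res.get() cannot return a value; it re-raises the exception instead. One fix is to catch the possible exception with try inside the task.

from multiprocessing import Pool, current_process
import os


def task2(name):
    print(name, os.getpid(), current_process().name)
    if name == 2:
        1 / 0  # deliberate ZeroDivisionError
    return f"{name} OK"


if __name__ == '__main__':
    pool = Pool(5)
    result = []
    for i in range(1, 5):
        result.append(pool.apply_async(func=task2, args=(i,)))

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print(" ok ".center(60, "="))

    for res in result:
        # ready() tells whether the task has finished
        print('ready', res.ready())
        # successful(), once the task is ready, tells whether it finished without raising
        print('successful', res.successful())
        # get() returns the result; with a timeout set, it raises
        # multiprocessing.TimeoutError when the timeout expires
        print('get', res.get())
    print('main')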

1 2744 SpawnPoolWorker-2

2 2744 SpawnPoolWorker-2

3 2744 SpawnPoolWorker-2

4 2744 SpawnPoolWorker-2

============================ ok ============================

ready True

successful True

get 1 OK

ready True

## The second task failed; get() re-raises its exception, which ends the loop before the results of the other tasks are read

successful False

multiprocessing.pool.RemoteTraceback:

"""

Traceback (most recent call last):

File "C:\Users\Administrator\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "E:\oldboy-python\plotly_test\r1.py", line 8, in task2

1 / 0

ZeroDivisionError: division by zero

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "E:\r1.py", line 28, in <module>

print(res.get())

File "E:\Python\Python39\lib\multiprocessing\pool.py", line 771, in get

raise self._value

ZeroDivisionError: division by zero

Process finished with exit code 1
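Besides catching the exception inside the task, the caller can check `successful()` before calling `get()`, so that one failed task does not stop the loop. The sketch below assumes this approach and uses `ThreadPool` (same result-object methods as `Pool`); `collect_all` is a hypothetical helper.

```python
from multiprocessing.pool import ThreadPool


def task2(name):
    if name == 2:
        1 / 0  # the same deliberate failure as in the example above
    return f"{name} OK"


def collect_all(names):
    report = []
    with ThreadPool(5) as pool:
        handles = [pool.apply_async(task2, args=(n,)) for n in names]
        for h in handles:
            h.wait()  # block until this task has finished
            if h.successful():
                report.append(h.get())
            else:
                report.append("failed")  # skip get(), which would re-raise
    return report


if __name__ == "__main__":
    print(collect_all(range(1, 5)))
```

The results of tasks 3 and 4 are still there; they were simply never read in the traceback above, because the uncaught exception ended the program.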

3-1-4-3 callback does not change the result

from multiprocessing import Pool, current_process
import os


def task2(name):
    print(name, os.getpid(), current_process().name)
    return f"{name} OK"


def func2(res):
    c = res + "<<<"
    print(c)
    return c  # the callback's return value is ignored; get() still returns task2's value


if __name__ == '__main__':
    pool = Pool(5)
    result = []
    for i in range(1, 5):
        result.append(pool.apply_async(func=task2, args=(i,), callback=func2))

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    pool.close()
    pool.join()  # wait for all processes in the pool to finish
    print(" ok ".center(60, "="))

    for res in result:
        # ready() tells whether the task has finished
        print('ready', res.ready())
        # successful(), once the task is ready, tells whether it finished without raising
        print('successful', res.successful())
        # get() returns the result; with a timeout set, it raises
        # multiprocessing.TimeoutError when the timeout expires
        print('get', res.get())
    print('main')

Output:

1 12940 SpawnPoolWorker-2
2 12940 SpawnPoolWorker-2
3 12940 SpawnPoolWorker-2
4 12940 SpawnPoolWorker-2
1 OK<<<
2 OK<<<
3 OK<<<
4 OK<<<
============================ ok ============================
ready True
successful True
get 1 OK
ready True
successful True
get 2 OK
ready True
successful True
get 3 OK
ready True
successful True
get 4 OK
main

3-2 multiprocessing thread pool

It is used the same way as multiprocessing.Pool; essentially, just replace Pool with ThreadPool.

from multiprocessing.pool import ThreadPool
from multiprocessing import current_process
import os
import time


def task2(name):
    time.sleep(1)
    print(name, os.getpid(), current_process().name)
    return f"{name} OK"


def func2(res):
    c = res + "<<<"
    print(c)
    return c


if __name__ == '__main__':
    thread_pool = ThreadPool(5)
    result = []
    for i in range(1, 5):
        result.append(thread_pool.apply_async(func=task2, args=(i,), callback=func2))

    # close() stops the pool from accepting new tasks.
    # It must be called before join(); otherwise join() raises
    # ValueError: Pool is still running
    thread_pool.close()
    thread_pool.join()  # wait for all threads in the pool to finish
    print(" ok ".center(60, "="))

    for res in result:
        # ready() tells whether the task has finished
        print('ready', res.ready())
        # successful(), once the task is ready, tells whether it finished without raising
        print('successful', res.successful())
        # get() returns the result; with a timeout set, it raises
        # multiprocessing.TimeoutError when the timeout expires
        print('get', res.get())
    print('main')

Output (the threads print at the same time, so the first lines are interleaved):

4321 11980 1198011980 11980MainProcess MainProcessMainProcess
3 OK<<<
MainProcess
1 OK<<<
2 OK<<<
4 OK<<<
============================ ok ============================
ready True
successful True
get 1 OK
ready True
successful True
get 2 OK
ready True
successful True
get 3 OK
ready True
successful True
get 4 OK
main

4. Differences between concurrent.futures and multiprocessing

concurrent.futures is easier to use.

concurrent.futures > [threading, multiprocessing]

asyncio > threading
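For a side-by-side feel of the two APIs, here is the same small job written with both. This is only a sketch with thread pools on each side so that it runs without a `__main__` guard; `square`, `with_concurrent_futures`, and `with_multiprocessing` are names chosen for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from multiprocessing.pool import ThreadPool


def square(i):
    return i * i


def with_concurrent_futures(items):
    # Executor.map returns a lazy iterator, so wrap it in list().
    with ThreadPoolExecutor(max_workers=2) as pool:
        return list(pool.map(square, items))


def with_multiprocessing(items):
    # ThreadPool.map blocks and returns a list directly.
    with ThreadPool(2) as pool:
        return pool.map(square, items)


if __name__ == "__main__":
    print(with_concurrent_futures(range(5)))
    print(with_multiprocessing(range(5)))
```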
