RL MC ε-greedy (5.3)

如书中所说算法很快收敛,但是实验发现结果不稳定。有待进一步探究。

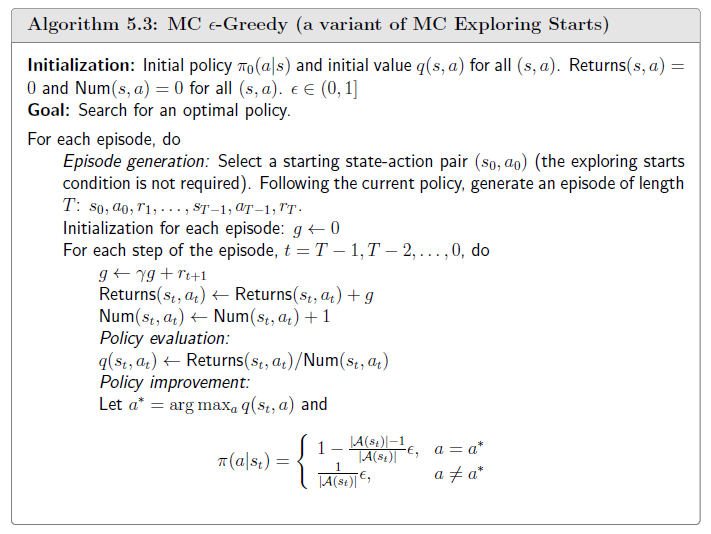

ε-贪婪蒙特卡洛控制:5×5 网格世界实战

场景设定与实验目标

- 环境:\(5\times 5\) 网格,左上角为 \((1,1)\)。

- 动作集合:上、下、左、右、不动,共 5 个确定性动作。

- 奖励:

- 出格:\(-1\)

- 禁区(橙色格子):\(-1\)

- 目标 \((4,3)\):\(+1\)

- 其它格子:\(0\)

- 折扣因子:\(\gamma = 0.9\)

- 探索系数:\(\varepsilon = 0.5\)

目标是依据教科书中的蒙特卡洛控制流程,利用 \(\varepsilon\)-贪婪策略学习最优策略 \(\pi_*\)。

算法流程(教科书版本)

1. 初始化

- 策略:\(\pi_0(a\mid s) = \frac{1}{|\mathcal{A}|}\)(均匀随机)。

- 动作价值与统计量:\[q(s,a) = 0, \quad Returns(s,a) = 0, \quad Num(s,a) = 0 \]

- 设定 \(\varepsilon \in (0,1]\)。

2. 对每个 Episode 重复:

- 生成 Episode:按照当前 \(\pi\) 与 \(\varepsilon\)-贪婪选择动作,得到序列\[s_0,a_0,r_1,s_1,a_1,\ldots,s_{T-1},a_{T-1},r_T. \]

- 倒序策略评估:令 \(g=0\),对 \(t=T-1,\ldots,0\):\[\begin{aligned} g &\leftarrow \gamma g + r_{t+1}, \\ Returns(s_t,a_t) &\leftarrow Returns(s_t,a_t) + g, \\ Num(s_t,a_t) &\leftarrow Num(s_t,a_t) + 1, \\ q(s_t,a_t) &\leftarrow \frac{Returns(s_t,a_t)}{Num(s_t,a_t)}. \end{aligned}\]

- 策略改进(\(\varepsilon\)-贪婪):记 \(a^* = \arg\max_a q(s_t,a)\),\(|\mathcal{A}|=5\):\[\pi(a\mid s_t) = \begin{cases} 1 - \varepsilon + \dfrac{\varepsilon}{|\mathcal{A}|}, & a=a^*, \\ \dfrac{\varepsilon}{|\mathcal{A}|}, & a\neq a^*. \end{cases} \]

3. 终止条件

当策略在若干 Episode 后不再发生变化(或达到预设轮数)即停止。

代码结构概览

文件:epsilon_greedy_mc_v2.py

policy0 = initialize_policy(n, len(actions))

Returns = np.zeros((n, n, num_actions))

Counts = np.zeros((n, n, num_actions))

Q = np.zeros((n, n, num_actions))

while True:

policy_new, Q = mc_policy_evaluation_and_improvement(policy_curr, r, epsilon, gamma)

if np.array_equal(policy_new, policy_curr):

break

policy_curr = policy_new

关键函数:

- 倒序遍历 Episode,累积 \(g\) 并更新

Returns/Counts/Q; - 按 \(\varepsilon\)-贪婪公式修正当前状态的策略分布。

逐步解析代码实现

Step 1:初始化策略与统计量

policy0 = initialize_policy(n, len(actions))

Returns = np.zeros((n, n, num_actions))

Counts = np.zeros((n, n, num_actions))

Q = np.zeros((n, n, num_actions))

initialize_policy将每个状态的 5 个动作概率置为 \(1/5\)。Returns、Counts、Q分别对应教科书里的 \(Returns(s,a)\)、\(Num(s,a)\)、\(q(s,a)\),为后续回报均值估计做准备。

Step 2:生成 Episode

def generate_episode(policy, r, epsilon, max_steps=1000):

state = (np.random.randint(n), np.random.randint(n))

for _ in range(max_steps):

action_probs = policy[i, j]

action_idx = np.random.choice(len(actions), p=action_probs)

# 出格奖励 -1,否则查表 r

episode.append((state, action_idx, reward))

state = (ni, nj)

- 起点随机,无需“探索开始”约束。

- 每一步依据当前策略抽样动作;若越界则停留并收到 \(-1\),否则使用 reward 表。

- Episode 记录 \((s_t, a_t, r_{t+1})\) 可直接用于倒序计算。

Step 3:蒙特卡洛策略评估 + \(\varepsilon\)-贪婪改进

def mc_policy_evaluation_and_improvement(...):

G = 0.0

for t in reversed(range(len(episode))):

G = gamma * G + reward

Returns[i, j, action_idx] += G

Counts[i, j, action_idx] += 1

Q[i, j, action_idx] = Returns[i, j, action_idx] / Counts[i, j, action_idx]

base_prob = epsilon / num_actions

new_policy[i, j] = base_prob

new_policy[i, j, best_action] = 1 - epsilon + base_prob

G累积折扣回报,完全对应文本中的 \(g \leftarrow \gamma g + r_{t+1}\)。- 每见一次 \((s_t,a_t)\) 就更新 Returns/Counts,并用均值给出最新 \(q(s_t,a_t)\)。

- 最优动作 \(a^*\) 获得 \(1-\varepsilon + \varepsilon/|\mathcal{A}|\),其余仅保留探索概率。

Step 4:主循环与收敛判断

while True:

policy_new, Q = mc_policy_evaluation_and_improvement(...)

if np.array_equal(policy_new, policy_curr):

break

policy_curr = policy_new

- 每次迭代运行完整 Episode → 评估 → 改进。

- 策略前后完全一致即判定收敛;设定 100 次上限防止异常。

Step 5:价值估计与可视化

V[i, j] += policy_curr[i, j, a] * Q[i, j, a]

render_policy_probabilities(policy_curr)

- 根据 \(V(s)=\sum_a \pi(a\mid s) q(s,a)\) 得到状态价值矩阵。

render_policy_probabilities把每个格子的“左右上下不动”概率打印在同一张表中,便于人工检查策略分布。

运行步骤

- 进入脚本目录。

- 在已配置好的虚拟环境中执行:

python.exe epsilon_greedy_mc_v2.py - 控制台将打印:

- 收敛所需 Episode 数;

- 完整的 \(Q(s,a)\) 表;

- 由 \(\pi\) 加权得到的状态价值 \(V(s)\);

- 提示

policy_prob_grid.png已生成。

结果展示

策略概率热力图:

- 每个格子列出“左/右、上/下、不动”五个动作的概率。

- 禁区与目标的策略会逐步被学到:

- 接近目标的格子会倾向于“向目标移动”;

- 禁区附近的概率分布体现了远离惩罚区域的行为。

对应的状态价值矩阵示例:

\[V = \begin{bmatrix}

-1.73 & -2.39 & -2.50 & -1.77 & -2.25 \\

-1.44 & -1.60 & -1.74 & -1.00 & -1.39 \\

-1.24 & -0.99 & 1.19 & -0.54 & -1.50 \\

-1.94 & 0.74 & 1.64 & 1.22 & -0.45 \\

-3.45 & -1.64 & 1.28 & -0.43 & -1.49

\end{bmatrix}

\]

从表中可以看到:

- 目标附近(第四行第三列)价值为正,策略鼓励朝目标移动;

- 禁区与边界的价值远低于其它区域,反映惩罚累积。

总结与扩展

- 该实现严格遵循“教科书”版蒙特卡洛控制:Episode 生成→倒序回报→\(\varepsilon\)-贪婪策略改进。

- 可以通过调整 \(\varepsilon\) 观察探索与利用的平衡,也可改变奖励矩阵或网格大小扩展实验。

- 若结合可视化(如轨迹动画、价值等高线)将更直观地呈现策略的演化过程。

附件:脚本输出的

policy_prob_grid.png可直接嵌入文档/幻灯片,用于教学或复现记录。

import numpy as np

# n * n 网格世界中的策略评估示例, n = 5,第一个格子表示为(1,1)最左上方。

# 设定奖励规则:

## 出格奖励-1,进入禁区奖励-10,进入目标奖励+1,其余格子奖励0

## 禁区橙色位置为(2,2),(2,3),(3,3),(4,2),(4,4),(5,2)

## 目标位置(4,3)

# 初始化参数

n = 5 # 网格大小 n*n

epsilon = 0.5 # ε-贪婪策略中的ε值

gamma = 0.9

# 初始化策略为全部不移动

# 动作定义:上、下、左、右、不动

actions = [(-1, 0), (1, 0), (0, -1), (0, 1), (0, 0)]

# 根据设定生成奖励矩阵 r

r = np.zeros((n, n))

forbidden_positions = [(2, 2), (2, 3), (3, 3), (4, 2), (4, 4), (5, 2)]

goal_position = (4, 3)

# 将坐标从 1-based 转换为 0-based 并赋值

for (ri, ci) in forbidden_positions:

r[ri - 1, ci - 1] = -1

r[goal_position[0] - 1, goal_position[1] - 1] = 1

# 初始化policy,每个状态每个动作的policy均等分布

def initialize_policy(n, num_actions):

policy = np.ones((n, n, num_actions)) / num_actions

return policy

policy0 = initialize_policy(n, len(actions))

num_actions = len(actions)

Returns = np.zeros((n, n, num_actions))

Counts = np.zeros((n, n, num_actions))

Q = np.zeros((n, n, num_actions))

# episode 生成函数

def generate_episode(policy, r, epsilon, max_steps=1000):

n = r.shape[0]

episode = []

state = (np.random.randint(n), np.random.randint(n))

for _ in range(max_steps):

i, j = state

action_probs = policy[i, j]

action_idx = np.random.choice(len(actions), p=action_probs)

di, dj = actions[action_idx]

ni, nj = i + di, j + dj

if ni < 0 or ni >= n or nj < 0 or nj >= n:

ni, nj = i, j

reward = -1

else:

reward = r[ni, nj]

episode.append((state, action_idx, reward))

state = (ni, nj)

return episode

num_actions = len(actions)

# 给定一个episode, 用蒙特卡洛方法评估当前策略, 用ε-贪婪策略改进策略

def mc_policy_evaluation_and_improvement(policy, r, epsilon, gamma=0.9):

global Q, Returns, Counts

new_policy = policy.copy()

episode = generate_episode(policy, r, epsilon)

G = 0.0

for t in reversed(range(len(episode))):

state, action_idx, reward = episode[t]

i, j = state

G = gamma * G + reward

Returns[i, j, action_idx] += G

Counts[i, j, action_idx] += 1

Q[i, j, action_idx] = Returns[i, j, action_idx] / Counts[i, j, action_idx]

best_action = np.argmax(Q[i, j])

base_prob = epsilon / num_actions

new_policy[i, j] = base_prob

new_policy[i, j, best_action] = 1 - epsilon + base_prob

return new_policy, Q

# 将每个状态的策略概率在网格中可视化(左右上下不变,保留两位小数)

def render_policy_probabilities(policy, forbidden=None, goal=None, filename='policy_prob_grid.png'):

import matplotlib.pyplot as plt

from matplotlib.table import Table

n = policy.shape[0]

fig, ax = plt.subplots(figsize=(8, 8))

ax.set_axis_off()

tb = Table(ax, bbox=[0, 0, 1, 1])

forbidden_set = set()

if forbidden is not None:

forbidden_set = {(r - 1, c - 1) for (r, c) in forbidden}

goal_cell = None

if goal is not None:

goal_cell = (goal[0] - 1, goal[1] - 1)

# actions 索引: 0=↑, 1=↓, 2=←, 3=→, 4=不动

for i in range(n):

for j in range(n):

probs = policy[i, j]

max_val = probs.max()

tol = 1e-9

face = 'white'

if (i, j) in forbidden_set:

face = '#FFA500'

elif goal_cell is not None and (i, j) == goal_cell:

face = '#1E90FF'

cell = tb.add_cell(i, j, 1/n, 1/n, text='', loc='center', facecolor=face)

cx = (j + 0.5) / n

cy = 1 - (i + 0.5) / n

cell_w = 1 / n

cell_h = 1 / n

def draw(label, value, dx, dy):

color = 'red' if abs(value - max_val) < tol else 'black'

ax.text(

cx + dx,

cy + dy,

f"{label}{value:.2f}",

ha='center',

va='center',

fontsize=9,

transform=ax.transAxes,

color=color

)

draw('←', probs[2], -0.35 * cell_w, 0.15 * cell_h)

draw('→', probs[3], 0.35 * cell_w, 0.15 * cell_h)

draw('↑', probs[0], 0.0, 0.35 * cell_h)

draw('↓', probs[1], 0.0, -0.05 * cell_h)

draw('o', probs[4], 0.0, -0.35 * cell_h)

ax.add_table(tb)

plt.title('Policy Probabilities per State')

plt.savefig(filename)

plt.close()

# 迭代直到策略收敛

policy_curr = policy0

iter_n = 0

while True:

iter_n += 1

policy_new, _ = mc_policy_evaluation_and_improvement(policy_curr, r, epsilon, gamma)

# 检查策略是否收敛

if np.array_equal(policy_new, policy_curr):

break

policy_curr = policy_new

if iter_n >= 100: # 防止无限循环

print("达到最大迭代次数,停止迭代。")

break

# 计算此时的状态价值, 状态 s 的价值,等于 “在策略 \(\pi\) 下,所有可能动作 a 的动作价值,按动作选择概率加权求和”。

V = np.zeros((n, n))

for i in range(n):

for j in range(n):

for a in range(num_actions):

V[i, j] += policy_curr[i, j, a] * Q[i, j, a]

print(f"策略迭代收敛于 {iter_n} 次迭代。")

print("对应的Q值:")

print(Q)

print("对应的状态价值V:")

print(V)

# 可视化最终策略概率分布(禁区橙色、目标蓝色,最大动作概率红色显示)

render_policy_probabilities(

policy_curr,

forbidden=forbidden_positions,

goal=goal_position,

filename='policy_prob_grid.png'

)

print("已生成策略概率网格图:policy_prob_grid.png")

浙公网安备 33010602011771号

浙公网安备 33010602011771号