Running multiple spiders at the same time from the spiders directory of a Python Scrapy project

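After creating a Scrapy project, you will often end up writing several spiders. How do you make them run at the same time rather than one after another?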

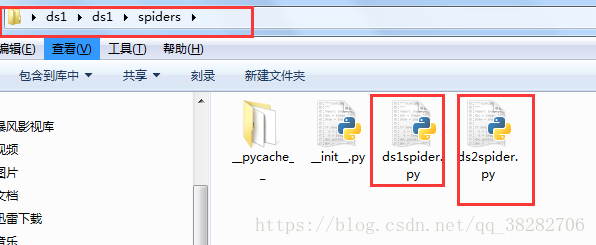

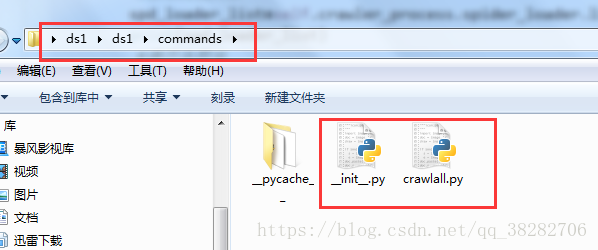

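a. In the same directory as the spiders directory, create a commands directory, and inside it create a file named crawlall.py. Copy the source of crawl.py from the commands folder of the Scrapy source code into it; only the run() method needs to be changed.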

    import os

    from scrapy.commands import ScrapyCommand
    from scrapy.exceptions import UsageError
    from scrapy.utils.conf import arglist_to_dict
    from scrapy.utils.python import without_none_values


    class Command(ScrapyCommand):
        requires_project = True

        def syntax(self):
            return "[options] <spider>"

        def short_desc(self):
            return "Run all spiders"

        def add_options(self, parser):
            # add_option is the optparse API used by the crawl.py this was copied from;
            # Scrapy releases whose commands use argparse need add_argument here instead.
            ScrapyCommand.add_options(self, parser)
            parser.add_option("-a", dest="spargs", action="append", default=[], metavar="NAME=VALUE",
                              help="set spider argument (may be repeated)")
            parser.add_option("-o", "--output", metavar="FILE",
                              help="dump scraped items into FILE (use - for stdout)")
            parser.add_option("-t", "--output-format", metavar="FORMAT",
                              help="format to use for dumping items with -o")

        def process_options(self, args, opts):
            ScrapyCommand.process_options(self, args, opts)
            try:
                opts.spargs = arglist_to_dict(opts.spargs)
            except ValueError:
                raise UsageError("Invalid -a value, use -a NAME=VALUE", print_help=False)
            if opts.output:
                if opts.output == '-':
                    self.settings.set('FEED_URI', 'stdout:', priority='cmdline')
                else:
                    self.settings.set('FEED_URI', opts.output, priority='cmdline')
                feed_exporters = without_none_values(
                    self.settings.getwithbase('FEED_EXPORTERS'))
                valid_output_formats = feed_exporters.keys()
                if not opts.output_format:
                    opts.output_format = os.path.splitext(opts.output)[1].replace(".", "")
                if opts.output_format not in valid_output_formats:
                    raise UsageError("Unrecognized output format '%s', set one"
                                     " using the '-t' switch or as a file extension"
                                     " from the supported list %s" % (opts.output_format,
                                                                      tuple(valid_output_formats)))
                self.settings.set('FEED_FORMAT', opts.output_format, priority='cmdline')

        def run(self, args, opts):
            # Get the names of all spiders in the project.
            spd_loader_list = self.crawler_process.spider_loader.list()
            print(spd_loader_list)
            # Schedule every spider (args is only used if the loader finds none).
            for spname in spd_loader_list or args:
                self.crawler_process.crawl(spname, **opts.spargs)
                print('Starting spider: ' + spname)
            # Start the process once, after all spiders have been scheduled.
            self.crawler_process.start()

b. The commands directory also needs an `__init__.py` file.
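Steps a and b can also be done with a few lines of Python instead of by hand. This is only a minimal sketch: the helper name `create_commands_package` is made up for illustration, and it assumes you pass it the project package directory (the one that contains spiders). It does not write crawlall.py for you.

```python
from pathlib import Path


def create_commands_package(project_package: Path) -> Path:
    """Create <project_package>/commands/ with an empty __init__.py.

    Hypothetical helper, not part of Scrapy. Returns the commands directory.
    """
    commands_dir = project_package / "commands"
    # Sits next to spiders/, i.e. in the same parent directory.
    commands_dir.mkdir(parents=True, exist_ok=True)
    # The empty __init__.py makes the directory an importable package.
    (commands_dir / "__init__.py").touch(exist_ok=True)
    return commands_dir
```

For a project package named ds1, calling `create_commands_package(Path("ds1"))` from the directory above it would leave ds1/commands/ ready for crawlall.py.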

c. That is not all: the settings.py configuration file also needs one more line.

COMMANDS_MODULE = 'project_name.directory_name'

COMMANDS_MODULE = 'ds1.commands'

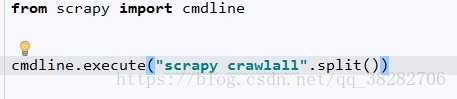

d. Finally, launch crawlall!

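Of course, to be safe, you can first change into the project directory on the command line and run scrapy -h to check whether the crawlall command is listed. If it is, the setup worked and you can launch it.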
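The same check can be scripted. The sketch below assumes the scrapy executable is on your PATH, that you run it from the project directory, and that `scrapy -h` prints one command per line with the command name as the first word; the function names are made up for illustration.

```python
import subprocess


def lists_command(help_text: str, name: str) -> bool:
    """Return True if some line of the help text starts with the given command name."""
    for line in help_text.splitlines():
        tokens = line.split()
        if tokens and tokens[0] == name:
            return True
    return False


def crawlall_is_registered() -> bool:
    """Run `scrapy -h` in the current directory and look for crawlall."""
    result = subprocess.run(
        ["scrapy", "-h"], capture_output=True, text=True, check=False
    )
    return lists_command(result.stdout, "crawlall")
```

If `crawlall_is_registered()` returns False, the usual suspects are a missing `__init__.py` or a COMMANDS_MODULE value that does not match the package path.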

I wrote a launcher file for this; placing it at the top level of the project is enough.

Alternatively, just type scrapy crawlall at the command prompt (cmd).
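The launcher file itself is not shown in the original post, so the following is only a guess at what such a file might look like. It assumes the file sits next to scrapy.cfg and is run from that directory, and it relies on `scrapy.cmdline.execute`, which takes an argv-style list just like the command line. The `build_argv` helper is made up for illustration.

```python
from pathlib import Path


def build_argv(extra_args=()):
    """Build the argv list equivalent to typing `scrapy crawlall ...`."""
    return ["scrapy", "crawlall", *extra_args]


def main():
    # Imported here so that the file can be loaded even where Scrapy is absent.
    from scrapy.cmdline import execute

    # Equivalent to running `scrapy crawlall` in the current directory.
    execute(build_argv())


if __name__ == "__main__":
    # Assumption: the launcher is started from the directory holding scrapy.cfg.
    if Path("scrapy.cfg").is_file():
        main()
    else:
        print("Run this file from the directory that contains scrapy.cfg")
```

Running the file from an IDE is then the same as typing the command by hand, which is convenient for debugging.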

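Note: the two spiders do seem to run at the same time, and their output is interleaved. That is expected, because every spider scheduled with crawl() runs inside the same process once start() is called.

Do the values in settings.py apply to only one of the spiders? As far as I can tell they are project-wide and apply to every spider; anything that should differ for one spider can go in that spider's custom_settings attribute.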

Source: https://blog.csdn.net/qq_38282706/article/details/80977576
