17. Docker - ELK - Logstash - Pipeline configuration

References:

Pushing specified logs to Elasticsearch with Spring Boot + Logstash; ELK Logstash configuration syntax (24th); Extracting strings in Logstash; Grok Debugger

In the swarm cluster, logspout is deployed on every Docker host. It forwards the logs of all containers on that host to Logstash, and Logstash stores them in Elasticsearch (or anywhere else).
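To check the pipeline without going through logspout, a test event can be pushed straight into the udp input. This is a minimal sketch under assumptions: Logstash is taken to be reachable on 127.0.0.1:5000, only the two fields that logstash.conf reads (`message` and `docker.image`) are modelled (the real logspout payload carries more), and `build_event` / `send_event` are hypothetical helper names.

```python
import json
import socket

# Assumed address of the Logstash udp input (port 5000 comes from logstash.conf).
LOGSTASH_ADDR = ("127.0.0.1", 5000)


def build_event(message: str, image: str) -> bytes:
    """Encode one event as JSON, the format the udp input's json codec expects.

    Only the fields logstash.conf reads are included; a real logspout
    payload has more (an assumption of this sketch).
    """
    event = {"message": message, "docker": {"image": image}}
    return json.dumps(event).encode("utf-8")


def send_event(payload: bytes, addr: tuple = LOGSTASH_ADDR) -> None:
    """Send one datagram. UDP gives no delivery confirmation."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, addr)
```

For example, `send_event(build_event("AppName/order-service/ - 2024-01-01 12:00:00 [INFO] - hello", "order-service:latest"))` should then appear in `docker logs -f` of the Logstash container through the rubydebug stdout output. Because UDP is fire-and-forget, a lost or rejected event produces no error on the sending side.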

logstash.conf

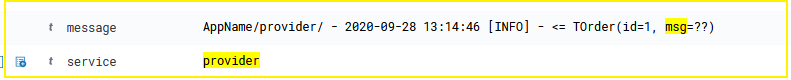

    input {
      udp {
        port => 5000
        codec => json
      }
    }

    filter {
      # Drop the logs of the ELK containers themselves
      if [docker][image] =~ /logstash|elasticsearch|kibana/ {
        drop { }
      }
      # Every log line I print is prefixed with AppName, so each line can be traced to the service that produced it
      if [message] !~ /AppName/ {
        drop { }
      }
      # I use Nacos and want to filter out "get changedGroupKeys:[]"
      if [message] =~ /get changedGroupKeys:\[\]/ {
        drop { }
      }
      # Extract the name of the owning service from the AppName prefix.
      # [^/]+ stops at the first "/", so a later "/ " in the message cannot widen the capture
      grok {
        match => ["message", "^AppName/(?<service>[^/]+)/ "]
      }
      # @timestamp + 8 hours
      ruby {
        code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
      }
      ruby {
        code => "event.set('@timestamp', event.get('timestamp'))"
      }
      mutate {
        remove_field => ["timestamp"]
      }
    }

    output {
      # Handy for debugging: docker logs -f container_id shows the events sent by logspout
      stdout { codec => rubydebug }
      elasticsearch {
        hosts => "elasticsearch:9200"
        user => "elastic"
        password => "changeme"
        index => "spring-%{service}-%{+YYYY.MM}"
      }
    }
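The decisions made by the filter block can be mirrored in a few lines of Python to reason about which events survive and which index they land in. This is a hypothetical sketch, not Logstash itself: `should_drop`, `shift_8h` and `index_name` are illustrative names, Ruby's `=~` is approximated with `re.search` (both match anywhere in the string), and `%{+YYYY.MM}` is assumed to format the already shifted `@timestamp` in UTC.

```python
import re
from datetime import datetime, timedelta, timezone

# The same patterns the filter block tests with =~.
INFRA_IMAGE = re.compile(r"logstash|elasticsearch|kibana")
NACOS_NOISE = re.compile(r"get changedGroupKeys:\[\]")


def should_drop(message: str, image: str) -> bool:
    """True if one of the three conditionals in the filter block would call drop {}."""
    if INFRA_IMAGE.search(image):    # logs of the ELK containers themselves
        return True
    if "AppName" not in message:     # [message] !~ /AppName/
        return True
    if NACOS_NOISE.search(message):  # empty Nacos changedGroupKeys polling
        return True
    return False


def shift_8h(ts: datetime) -> datetime:
    """Same arithmetic as the two ruby filters: @timestamp + 8 hours."""
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # treat naive values as UTC, like @timestamp
    return ts + timedelta(hours=8)


def index_name(service: str, ts: datetime) -> str:
    """Equivalent of index => "spring-%{service}-%{+YYYY.MM}", formatted in UTC."""
    return f"spring-{service}-{ts.astimezone(timezone.utc):%Y.%m}"
```

One consequence worth knowing: because the month in the index name is taken from the shifted `@timestamp`, an event logged at 2024-01-31 20:00 UTC ends up in `spring-order-service-2024.02`. Also note that Elasticsearch index names must be lowercase, so the service names extracted here should be lowercase as well.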

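Because the logging configuration writes the current service name into every log line, the grok filter in logstash.conf can extract it with a regular expression and store it in a new service field. That field makes searching in Elasticsearch easier and is also used in the index name.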
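How the capture group is written matters. The sketch below compares two ways of writing it: a greedy `.*` and a `[^/]+` that cannot cross a slash. It uses Python's `re`, which spells named groups `(?P<name>...)` where grok's Oniguruma engine uses `(?<name>...)`; `extract_service`, `GREEDY` and `STRICT` are hypothetical names, and the sample log line is made up.

```python
import re
from typing import Optional

# Python re syntax; in grok these would be written (?<service>...).
GREEDY = re.compile(r"^AppName/(?P<service>.*)/ ")     # .* runs to the LAST "/ " in the line
STRICT = re.compile(r"^AppName/(?P<service>[^/]+)/ ")  # stops at the first "/"


def extract_service(pattern: re.Pattern, message: str) -> Optional[str]:
    """Return the captured service name, or None if the line does not match."""
    m = pattern.match(message)
    return m.group("service") if m else None
```

With a line such as `AppName/order-service/ - 2024-01-01 12:00:00 [INFO] - GET /api/ ok`, the strict pattern yields `order-service`, while the greedy one captures everything up to the `/ ` after `api`, which would end up in the service field and in the index name. A tool such as Grok Debugger is handy for checking patterns like these against real log lines.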

logback-spring.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>
      <!-- Expose spring.application.name to the pattern as ${application.name} -->
      <springProperty scope="context" name="application.name" source="spring.application.name"/>
      <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>AppName/${application.name}/ - %d{yyyy-MM-dd HH:mm:ss} [%level] - %m%n</pattern>
          <!--<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>-->
        </encoder>
        <!-- LevelFilter matches the level exactly: only INFO events pass this appender -->
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
          <level>info</level>
          <onMatch>ACCEPT</onMatch>
          <onMismatch>DENY</onMismatch>
        </filter>
      </appender>
      <root level="debug">
        <appender-ref ref="STDOUT" />
      </root>
    </configuration>
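For reference, the sketch below reproduces the line shape produced by the pattern above and contrasts the configured LevelFilter with logback's ThresholdFilter. It is a Python illustration under assumptions: `%n` is taken to be `\n` (in logback it is the platform line separator), only the five standard levels are modelled, and `format_line` and the `*_accepts` helpers are hypothetical names.

```python
from datetime import datetime

# Standard logback levels, lowest to highest.
LEVELS = ["TRACE", "DEBUG", "INFO", "WARN", "ERROR"]


def format_line(app_name: str, ts: datetime, level: str, msg: str) -> str:
    # Mirrors: AppName/${application.name}/ - %d{yyyy-MM-dd HH:mm:ss} [%level] - %m%n
    return f"AppName/{app_name}/ - {ts:%Y-%m-%d %H:%M:%S} [{level}] - {msg}\n"


def level_filter_accepts(level: str, configured: str = "INFO") -> bool:
    # LevelFilter with onMatch=ACCEPT, onMismatch=DENY: exact match only.
    return level == configured


def threshold_filter_accepts(level: str, threshold: str = "INFO") -> bool:
    # ThresholdFilter: lets the threshold level and everything above it through.
    return LEVELS.index(level) >= LEVELS.index(threshold)
```

With LevelFilter set to info, WARN and ERROR lines never reach stdout, so they never reach logspout or Elasticsearch either. If those should be indexed too, `ch.qos.logback.classic.filter.ThresholdFilter` with `<level>INFO</level>` keeps INFO and everything above it.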

Zhejiang Public Network Security Registration No. 33010602011771