Spring Boot + Logstash: pushing selected logs to Elasticsearch

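Preface

In day-to-day work we need to analyze application logs. As the platform keeps running, the logs grow larger and harder to analyze, so we write them to Elasticsearch (es). In this pipeline logstash acts as the intermediary that forwards the logs, much like an ETL tool.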

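1. Set up the EL environment (Kibana is not used here)

(1) Install es (5.6.16)

Download: https://elasticsearch.cn/download/

Installation steps: https://www.cnblogs.com/cq-yangzhou/p/9310431.html

(2) Install logstash

Download: https://elasticsearch.cn/download/

Installation steps: just unpack the archive.

(3) Install the IK analyzer

Download: https://github.com/medcl/elasticsearch-analysis-ik/releases

Installation steps: unpack the archive, copy its contents into the plugins/ik directory of es (create the ik directory yourself), then restart es.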

2. Set up the Spring Boot + logstash environment

(1) Add the logstash Maven dependency

    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>5.1</version>
    </dependency>

(2) Create logback-spring.xml in the resources directory

    <?xml version="1.0" encoding="UTF-8" ?>
    <configuration>
        <!-- Write logs to logstash -->
        <appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
            <destination>127.0.0.1:4567</destination>
            <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" />
        </appender>

        <!-- Only capture logs emitted by the specified logging class -->
        <logger name="com.example.demo.logutil.LogStashUtil" level="INFO">
            <appender-ref ref="logstash"/>
        </logger>
    </configuration>

(3) Write the logging class LogStashUtil

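    package com.example.demo.logutil;

    import java.util.Date;

    // Assumption: the original does not say which JSON library provides putOpt;
    // hutool's JSONObject is used here for illustration.
    import cn.hutool.json.JSONObject;
    import lombok.extern.slf4j.Slf4j;

    @Slf4j
    public class LogStashUtil {

        // Packs the fields into one JSON string and logs it at INFO,
        // so the logstash appender bound to this class forwards it.
        public static void sendMessage(String username, String type, String content,
                                       Date createTime, String parameters) {
            JSONObject jsonObject = new JSONObject();
            jsonObject.putOpt("username", username);
            jsonObject.putOpt("type", type);
            jsonObject.putOpt("content", content);
            jsonObject.putOpt("parameters", parameters);
            jsonObject.putOpt("createTime", createTime);
            log.info(jsonObject.toString());
        }
    }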
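To make the shape of the logged message concrete, the following JDK-only sketch builds an equivalent JSON string by hand. It assumes the JSON library serializes `createTime` as epoch milliseconds, which is what the `UNIX_MS` pattern in the logstash date filter expects. `LogPayloadSketch` and its method names are hypothetical and not part of the original project. Null handling and full JSON escaping are left out.

```java
import java.util.Date;

// Hypothetical, JDK-only illustration of the JSON string that sendMessage logs.
final class LogPayloadSketch {

    // Minimal escaping: only backslashes and double quotes are handled.
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds the payload; createTime is written as epoch milliseconds (assumption).
    static String toJson(String username, String type, String content,
                         Date createTime, String parameters) {
        return "{"
                + "\"username\":\"" + escape(username) + "\","
                + "\"type\":\"" + escape(type) + "\","
                + "\"content\":\"" + escape(content) + "\","
                + "\"parameters\":\"" + escape(parameters) + "\","
                + "\"createTime\":" + createTime.getTime()
                + "}";
    }

    public static void main(String[] args) {
        // Fixed timestamp (2019-01-01T00:00:00Z) so the output is reproducible.
        System.out.println(toJson("admin", "login", "test", new Date(1546300800000L), "none"));
    }
}
```

This string ends up in the `message` field of the event that the encoder sends, which is why the logstash pipeline parses `message` as JSON a second time.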

(4) Write the logstash configuration file for log collection

    input {
      tcp {
        mode => "server"
        host => "0.0.0.0"
        port => 4567
        codec => json {
          charset => "UTF-8"
        }
      }
    }

    filter {
      json {
        source => "message"
        # Fields removed here are not stored in es
        remove_field => ["message","port","thread_name","logger_name","@version","level_value","tags"]
      }
      date {
        match => [ "createTime", "UNIX_MS" ]
        target => "@timestamp"
      }
      ruby {
        code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
      }
      ruby {
        code => "event.set('@timestamp',event.get('timestamp'))"
      }
      mutate {
        remove_field => ["timestamp"]
      }
      date {
        match => [ "createTime", "UNIX_MS" ]
        target => "createTime"
      }
      ruby {
        code => "event.set('createTime', event.get('createTime').time.localtime + 8*60*60)" # add 8 hours to the time
      }
    }

    output {
      elasticsearch {
        hosts => "localhost:9200"
        index => "springboot-logstash-%{+YYYY.MM}"
        document_type => access
        # Disable logstash's template management and use the template created in es through the API
        manage_template => false
        # Name of the template in es
        template_name => "message"
      }
    }

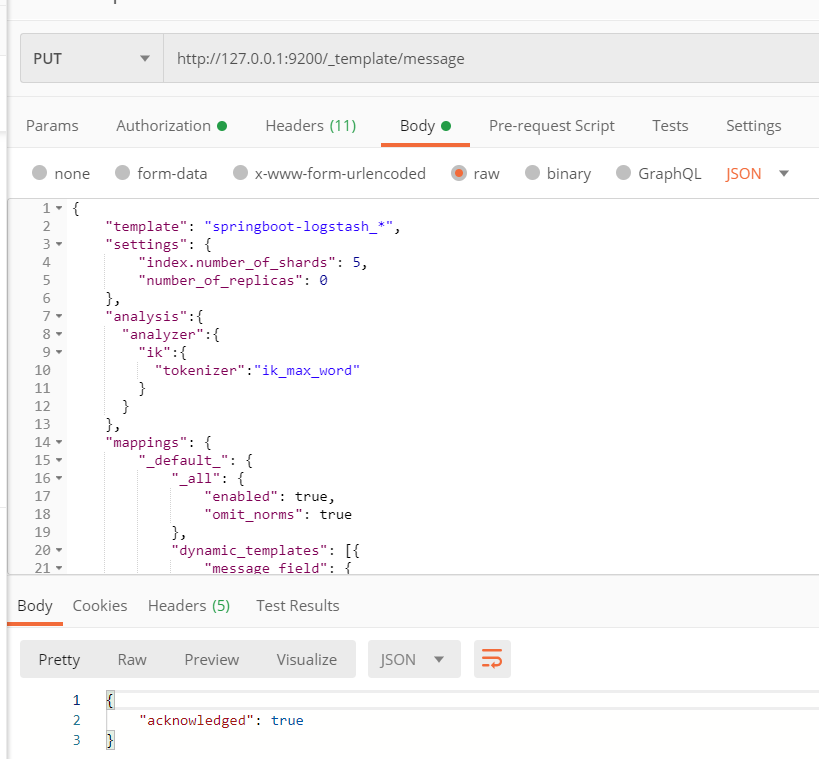
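The date and ruby filters in this configuration amount to: parse `createTime` as epoch milliseconds, then shift the value forward by 8 hours so that the stored time reads as UTC+8 local time. A rough Java equivalent of that arithmetic, together with the monthly index name that `%{+YYYY.MM}` would derive from the shifted `@timestamp`, might look like the sketch below. `TimestampShiftSketch` is a hypothetical helper, and the index-name logic assumes logstash formats `@timestamp` in UTC.

```java
import java.time.Duration;
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical helper mirroring the date/ruby filter arithmetic.
final class TimestampShiftSketch {

    // Assumption: the index date pattern is evaluated against @timestamp in UTC.
    private static final DateTimeFormatter MONTH =
            DateTimeFormatter.ofPattern("yyyy.MM").withZone(ZoneOffset.UTC);

    // UNIX_MS parsing followed by the "+ 8*60*60" shift from the ruby filter.
    static Instant shifted(long createTimeMillis) {
        return Instant.ofEpochMilli(createTimeMillis).plus(Duration.ofHours(8));
    }

    // Index name for index => "springboot-logstash-%{+YYYY.MM}".
    static String indexName(Instant timestamp) {
        return "springboot-logstash-" + MONTH.format(timestamp);
    }

    public static void main(String[] args) {
        long createTime = 1546286400000L; // 2018-12-31T20:00:00Z
        Instant timestamp = shifted(createTime);
        System.out.println(timestamp);            // shifted value written to @timestamp
        System.out.println(indexName(timestamp)); // monthly index the event lands in
    }
}
```

One consequence of the shift is visible near a month boundary: an event created at 20:00 UTC on the last day of a month lands in the next month's index, matching the local calendar in UTC+8.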

Supplement: writing the template

    {
      "template": "springboot-logstash-*",
      "settings": {
        "index.number_of_shards": 5,
        "number_of_replicas": 0,
        # use the ik tokenizer
        "analysis": {
          "analyzer": {
            "ik": {
              "tokenizer": "ik_max_word"
            }
          }
        }
      },
      "mappings": {
        "_default_": {
          "_all": {
            "enabled": true,
            "omit_norms": true
          },
          # The field named type is analyzed with ik.
          # ik_max_word: finest-grained segmentation; ik_smart: coarse-grained segmentation
          "dynamic_templates": [
            {
              "message_field": {
                "match": "type",
                "match_mapping_type": "string",
                "mapping": {
                  "type": "string",
                  "index": "analyzed",
                  "analyzer": "ik_max_word"
                }
              }
            },
            {
              "string_fields": {
                "match": "*",
                "match_mapping_type": "string",
                "mapping": {
                  "type": "string",
                  "index": "not_analyzed",
                  "doc_values": true
                }
              }
            }
          ],
          "properties": {
            "@timestamp": { "type": "date" },
            "@version": { "type": "string", "index": "not_analyzed" }
            /*,
            "geoip": {
              "dynamic": true,
              "properties": {
                "ip": { "type": "ip" },
                "location": { "type": "geo_point" },
                "latitude": { "type": "float" },
                "longitude": { "type": "float" }
              }
            }*/
          }
        }
      }
    }

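Once the template is written, call the es template API and pass the template as the request body.

    # Create the template (overwrites an existing one with the same name)
    PUT _template/template_name

    # View the template
    GET _template/template_name

    # Delete the template
    DELETE _template/template_name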

Example:
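As a sketch of how the create call could be made from Java, the JDK 11+ `java.net.http` client can send the template JSON. The template name `message` (from `template_name` in the logstash output) and the host `localhost:9200` come from the configuration in this article. The class name, the command-line argument, and the idea of reading the body from a file are assumptions.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical client for PUT _template/<name>.
final class TemplateClientSketch {

    // Builds the PUT request without sending it.
    static HttpRequest putTemplate(String esBaseUrl, String templateName, String templateJson) {
        return HttpRequest.newBuilder(URI.create(esBaseUrl + "/_template/" + templateName))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(templateJson))
                .build();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("usage: TemplateClientSketch <path-to-template-json>");
            return;
        }
        // The file should contain plain JSON, with the # annotations removed.
        String json = Files.readString(Path.of(args[0]));
        HttpRequest request = putTemplate("http://localhost:9200", "message", json);
        HttpResponse<String> response =
                HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Keeping the request construction in its own method makes it possible to inspect the method and URL without a running es instance.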

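(5) Start es and logstash

1. Start es

Go to the es bin directory and run:

    elasticsearch -d -p pid

(-d: run in the background; -p: record the process id in a file)

2. Start logstash

    logstash -f message.conf -d

(-f: path of the log collection configuration file; -d: run in the background)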

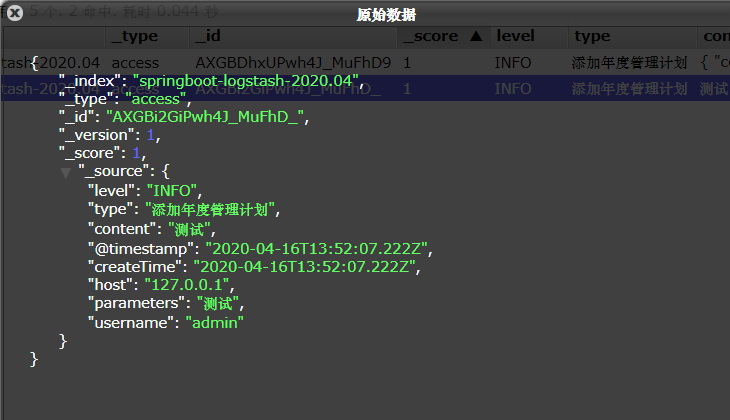
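Before running the test in the next step, it can help to confirm that logstash is actually listening on the TCP port the appender targets (`127.0.0.1:4567` in logback-spring.xml) and that es answers on 9200. A small JDK-only probe, with a hypothetical class name and an arbitrary 1-second timeout, could look like this:

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether something accepts TCP connections on host:port.
final class PortProbeSketch {

    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is accepting connections there.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("logstash tcp input (4567): " + isListening("127.0.0.1", 4567, 1000));
        System.out.println("elasticsearch http (9200): " + isListening("127.0.0.1", 9200, 1000));
    }
}
```

A successful connection only shows that the port is open, not that the pipeline is configured correctly.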

(6) Unit test: call the log collection class LogStashUtil

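    @Test
    public void contextLoads() {
        // Arguments: username, type, content, createTime, parameters.
        // The Chinese sample values ("add annual management plan", "test")
        // give the ik analyzer on the type field something to segment.
        LogStashUtil.sendMessage(
                "admin", "添加年度管理计划", "测试", new Date(), "测试");
    }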

(7) Check the result in es
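One way to look at the indexed documents without extra tooling is a URI search against the monthly indices. The sketch below assumes es on `localhost:9200` and filters on the `username` value used in the unit test. The class name and the `size=5` limit are hypothetical choices.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper that searches the springboot-logstash-* indices by username.
final class SearchSketch {

    // Builds a GET request for the _search endpoint with a q=username:<value> query.
    static HttpRequest searchByUsername(String esBaseUrl, String username) {
        String query = URLEncoder.encode("username:" + username, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(
                        URI.create(esBaseUrl + "/springboot-logstash-*/_search?size=5&q=" + query))
                .GET()
                .build();
    }

    public static void main(String[] args) throws InterruptedException {
        HttpRequest request = searchByUsername("http://localhost:9200", "admin");
        try {
            HttpResponse<String> response =
                    HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.body());
        } catch (IOException e) {
            System.out.println("Elasticsearch not reachable: " + e.getMessage());
        }
    }
}
```

If the pipeline works, the response should contain a hit whose fields match the arguments passed to `LogStashUtil.sendMessage`.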
