14. Docker log collection (based on docker-elk) && the ELK timezone problem

Elasticsearch getting started · Elasticsearch 7.x documentation

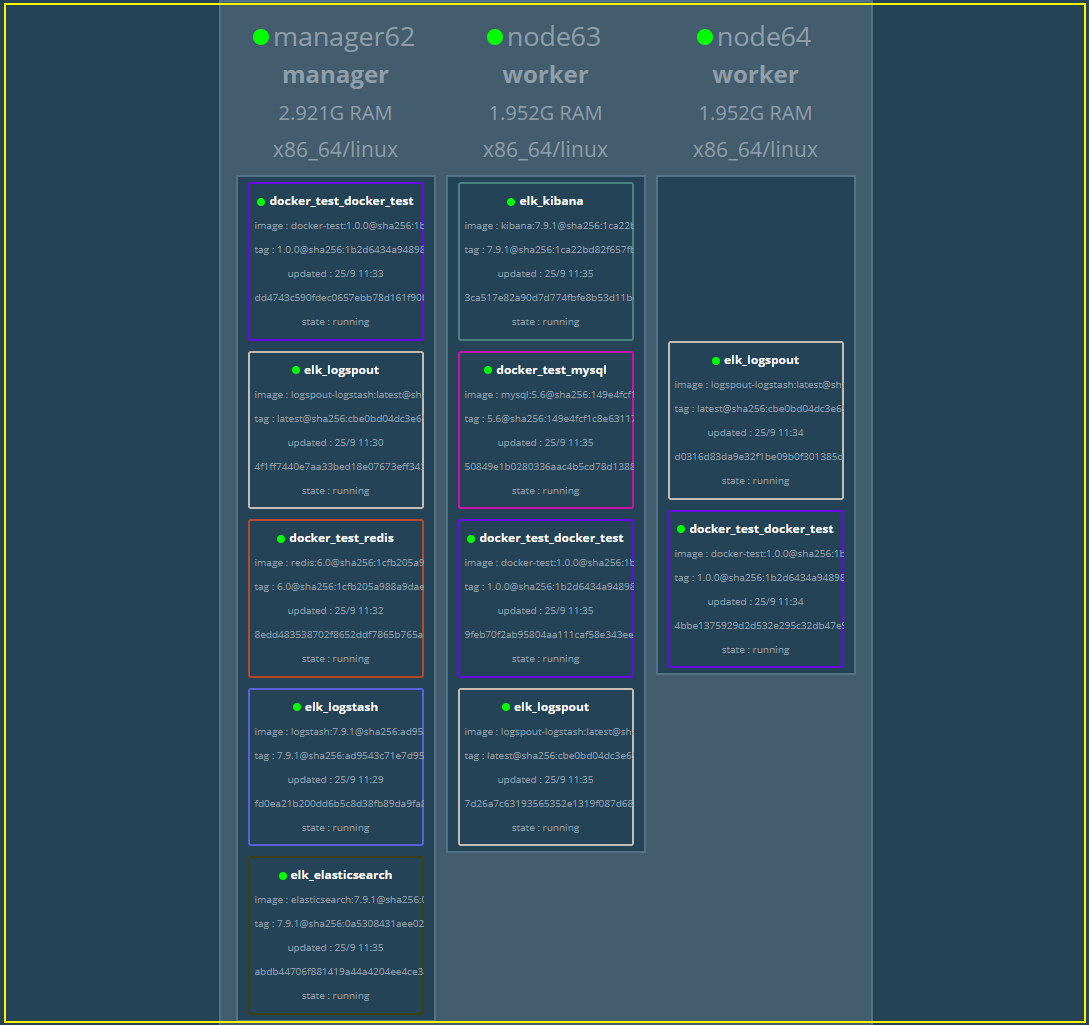

The setup runs in a swarm. Every Docker host runs one logspout container that forwards the logs of that host's containers to Logstash. Logstash sends the logs to Elasticsearch, and Kibana displays them.
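To exercise the Logstash leg of this flow without logspout, the following minimal Python sketch sends one JSON datagram to a Logstash `udp` input with `codec => json` on port 5000, which is how the pipeline further down receives logspout's output. The event layout (`message` plus a nested `docker.image`/`docker.name`) is an assumption modeled on the fields that pipeline filters on, not logspout-logstash's documented schema. `build_event` and `send_event` are hypothetical helpers, and the host name `logstash` only resolves from inside the `elk` overlay network.

```python
import json
import socket


def build_event(message: str, image: str, name: str) -> bytes:
    """Build one JSON log event with the fields the Logstash filter inspects.

    The field layout is an assumption modeled on logspout-logstash output.
    """
    event = {
        "message": message,
        "docker": {"image": image, "name": name},
    }
    return json.dumps(event).encode("utf-8")


def send_event(payload: bytes, host: str = "logstash", port: int = 5000) -> None:
    """Send a single UDP datagram; UDP gives no delivery confirmation."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

For example, `send_event(build_event("AppName/order/ created", "order:1.0", "order"))` run from a container attached to the `elk` network should surface in `docker logs -f` of the Logstash container via its `stdout` output. UDP keeps the sender fire-and-forget, which matches logspout's behavior of not blocking containers on log shipping.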

For ELK to run properly, run the following command on every node. It takes effect after a reboot (to apply it without rebooting, run `sudo sysctl -w vm.max_map_count=262144`).

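```shell
# Persist the setting; plain ">>" fails for a non-root user, so write through sudo tee
echo "vm.max_map_count=262144" | sudo tee -a /etc/sysctl.conf
# then reboot
```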
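Before deploying, it can help to confirm on each node that the setting is actually active. A minimal Python sketch, assuming a Linux host, reads the procfs file `/proc/sys/vm/max_map_count` (the same value `sysctl vm.max_map_count` reports) and compares it with 262144. The helper names are hypothetical.

```python
from pathlib import Path

REQUIRED_MAX_MAP_COUNT = 262144  # value configured above for Elasticsearch


def parse_max_map_count(text: str) -> int:
    """Parse the integer stored in /proc/sys/vm/max_map_count."""
    return int(text.strip())


def max_map_count_ok(current: int, required: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    """True when the kernel limit is at least the required value."""
    return current >= required


def check_host(path: str = "/proc/sys/vm/max_map_count") -> bool:
    """Read the live kernel setting on a Linux host and compare it."""
    return max_map_count_ok(parse_max_map_count(Path(path).read_text()))
```

Calling `check_host()` on a node that still has the common default of 65530 returns `False`.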

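The ELK configuration is taken from docker-elk. After cloning it with git, I modified docker-stack.yml to add logspout. I also pushed the images to my private registry, which makes pulling much faster. The modified file: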

```yaml
version: '3.3'

services:

  logspout:
    image: bekt/logspout-logstash
    environment:
      ROUTE_URIS: 'logstash://logstash:5000'
      RETRY_SEND: 10 # without this variable, logspout crashes when it cannot reach logstash
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on: # note: docker stack deploy ignores depends_on
      - logstash
    networks:
      - elk
    deploy:
      mode: global
      restart_policy:
        condition: on-failure

  logstash:
    image: manager62:7000/logstash:7.9.1
    #image: docker.elastic.co/logstash/logstash:7.9.1
    depends_on:
      - elasticsearch
    ports:
      - "5000:5000"
      - "9600:9600"
    configs:
      - source: logstash_config
        target: /usr/share/logstash/config/logstash.yml
      - source: logstash_pipeline
        target: /usr/share/logstash/pipeline/logstash.conf
    environment:
      LS_JAVA_OPTS: "-Xmx256m -Xms256m"
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

  elasticsearch:
    #image: docker.elastic.co/elasticsearch/elasticsearch:7.9.1
    image: manager62:7000/elasticsearch:7.9.1
    ports:
      - "9200:9200"
      - "9300:9300"
    configs:
      - source: elastic_config
        target: /usr/share/elasticsearch/config/elasticsearch.yml
    environment:
      ES_JAVA_OPTS: "-Xmx500m -Xms500m"
      ELASTIC_PASSWORD: changeme
      discovery.type: single-node
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

  kibana:
    #image: docker.elastic.co/kibana/kibana:7.9.1
    image: manager62:7000/kibanan:7.9.1 # the image name is misspelled in my registry
    ports:
      - "5601:5601"
    configs:
      - source: kibana_config
        target: /usr/share/kibana/config/kibana.yml
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

configs:
  elastic_config:
    file: ./elasticsearch/config/elasticsearch.yml
  logstash_config:
    file: ./logstash/config/logstash.yml
  logstash_pipeline:
    file: ./logstash/pipeline/logstash.conf
  kibana_config:
    file: ./kibana/config/kibana.yml

networks:
  elk:
    driver: overlay
```

Then modify logstash/pipeline/logstash.conf. The modified file:

```
input {
  udp {
    port => 5000
    codec => json
  }
}

filter {
  # Drop the logs of the ELK containers themselves
  if [docker][image] =~ /logstash|elasticsearch|kibana/ {
    drop { }
  }
  # I prefix every log line I print with AppName, so each line can be traced to the service that produced it
  if [message] !~ /AppName/ {
    drop { }
  }
  # I use nacos and want to filter out "get changedGroupKeys:[]"
  if [message] =~ /get changedGroupKeys:\[\]/ {
    drop { }
  }
  # Extract the owning service name from the AppName prefix
  grok {
    match => ["message", "^AppName/(?<service>(.*))/ "]
  }
  # @timestamp + 8 hours
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  }
  ruby {
    code => "event.set('@timestamp', event.get('timestamp'))"
  }
  mutate {
    remove_field => ["timestamp"]
  }
}

output {
  # Handy for debugging: docker logs -f <container_id> shows the logs logspout sent
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => "elasticsearch:9200"
    user => "elastic"
    password => "changeme"
    index => "spring-%{service}-%{+YYYY.MM}"
  }
}
```
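The filter section decides per event whether it is dropped and, if not, which index it lands in. The following Python sketch mirrors that routing logic so the regexes can be tried against sample lines; `route` is a hypothetical helper, not part of Logstash, and Python's `re` stands in for Logstash's regex engine (the named group is written `(?P<service>...)` in Python syntax). It assumes `ts` is the event's `@timestamp` after the 8-hour shift.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Patterns copied from the logstash.conf filter section above.
ELK_IMAGES = re.compile(r"logstash|elasticsearch|kibana")
APP_NAME = re.compile(r"AppName")
NACOS_NOISE = re.compile(r"get changedGroupKeys:\[\]")
SERVICE = re.compile(r"^AppName/(?P<service>(.*))/ ")


def route(message: str, image: str, ts: datetime) -> Optional[str]:
    """Return the index an event would be written to, or None if it is dropped."""
    if ELK_IMAGES.search(image):
        return None  # logs from the ELK containers themselves
    if not APP_NAME.search(message):
        return None  # lines without the AppName prefix
    if NACOS_NOISE.search(message):
        return None  # nacos polling noise
    match = SERVICE.match(message)
    # If grok fails, the service field is never set and Logstash leaves the
    # %{service} reference unresolved in the index name.
    service = match.group("service") if match else "%{service}"
    # %{+YYYY.MM} formats the event's @timestamp in UTC.
    return f"spring-{service}-{ts.astimezone(timezone.utc):%Y.%m}"
```

Note that `(.*)` is greedy, so a message containing a second `/ ` yields a longer service name than intended; a stricter `[^/]+` would stop at the first slash, if that matches the AppName convention.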

Screenshot of the swarm nodes:

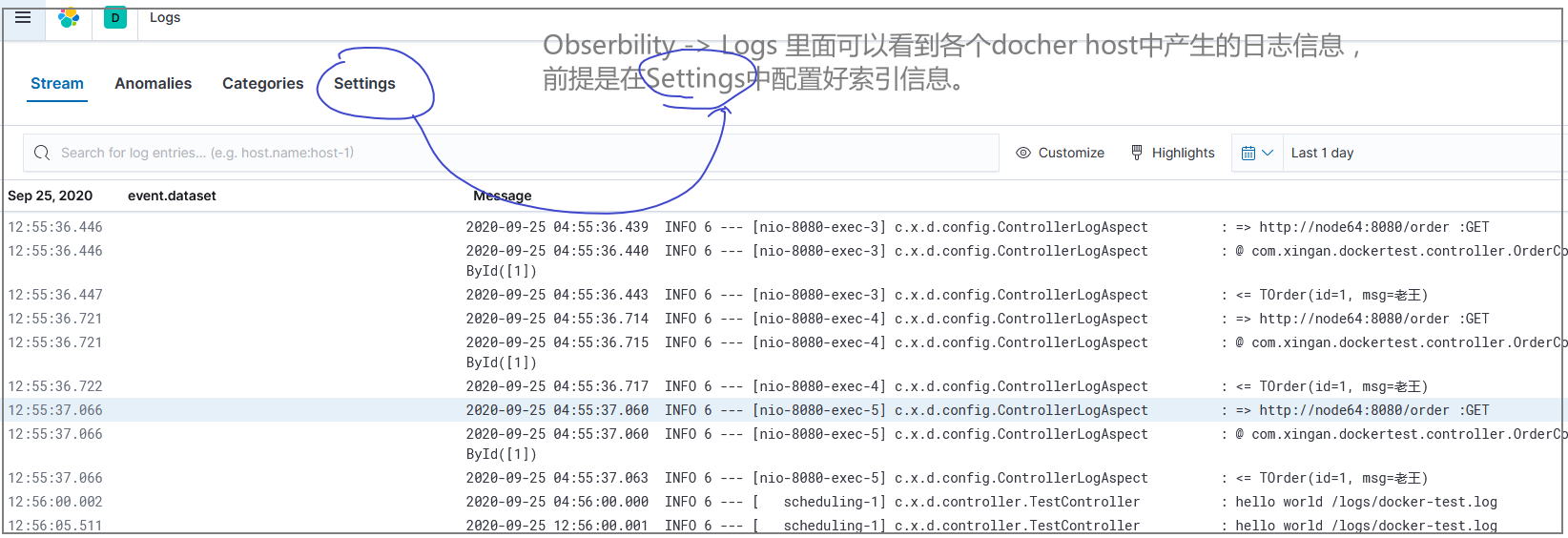

Kibana screenshots:

---

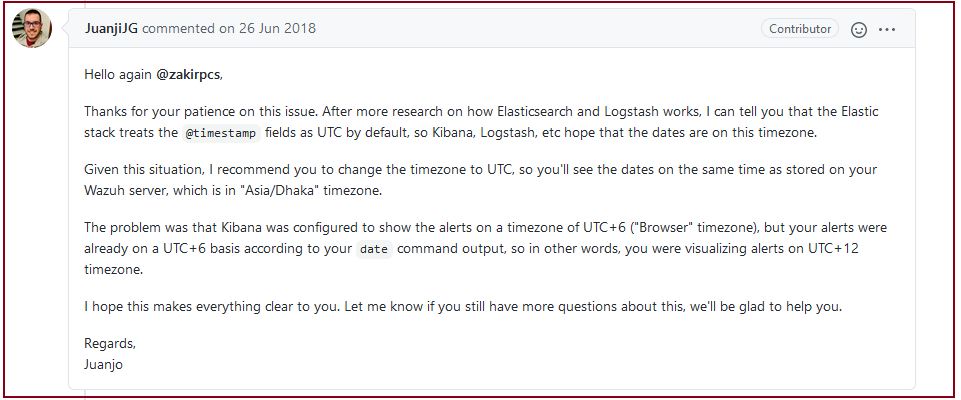

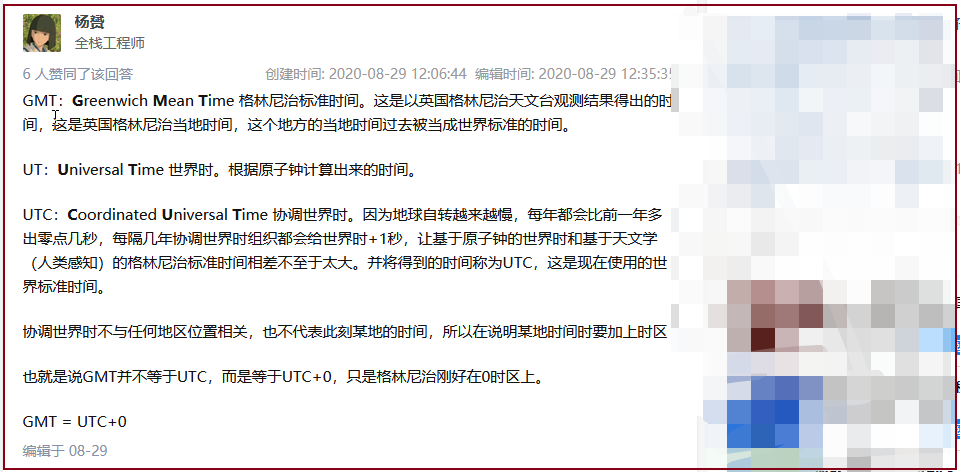

Addendum on the 8-hour time difference:


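In other words, the ELK stack treats @timestamp as UTC. If, like me, you forcibly shift the time to UTC+8 in Logstash, Kibana still assumes the shifted value is UTC and by default renders it in the browser's timezone. That is why, in my screenshots above, the time shown in front of each entry is 8 hours ahead of the time inside the log line.

The fix is a Kibana setting: set the timezone (path in 7.9.1: ) to GMT+0.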
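The arithmetic behind the 8-hour offset can be reproduced with Python's `datetime`. This sketch models an event logged at 10:00 local time in UTC+8 (02:00 UTC), the ruby filter adding 8 hours while the value stays labeled UTC, and Kibana rendering the stored value in a chosen display timezone. The function names are hypothetical stand-ins for Logstash and Kibana behavior.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc
UTC_PLUS_8 = timezone(timedelta(hours=8))  # browser timezone in this example


def logstash_shift(ts_utc: datetime) -> datetime:
    """Mimic the ruby filter: add 8 hours but keep the value labeled as UTC."""
    return ts_utc + timedelta(hours=8)


def kibana_display(stored_utc: datetime, display_tz: timezone) -> str:
    """Kibana treats @timestamp as UTC and renders it in the display timezone."""
    return stored_utc.astimezone(display_tz).strftime("%Y-%m-%d %H:%M")


original = datetime(2020, 9, 20, 2, 0, tzinfo=UTC)  # 10:00 in UTC+8
stored = logstash_shift(original)                  # stored as 10:00 "UTC"
```

Here `kibana_display(stored, UTC_PLUS_8)` gives `2020-09-20 18:00`, eight hours ahead of the log line, while `kibana_display(stored, UTC)` gives `2020-09-20 10:00`, which is why switching Kibana to GMT+0 lines the two up. For comparison, `kibana_display(original, UTC_PLUS_8)` also gives `2020-09-20 10:00`.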

浙公网安备 33010602011771号
